Emerging Information Technologies for the Energy Management of Onboard Microgrids in Transportation Applications

Abstract

1. Introduction

- Provide an overview of recent EMS research, focusing on the applications of two emerging information technologies—AI and DT;

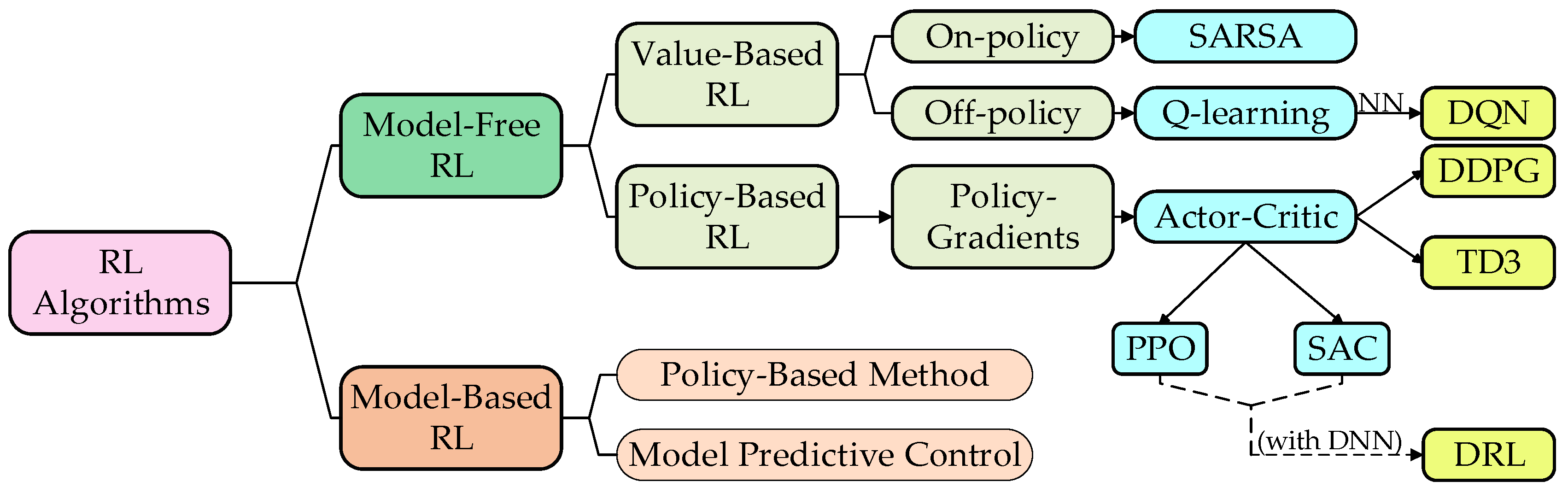

- In the AI domain, classify reinforcement learning-based EMS into model-based and model-free approaches, according to whether the agent uses a model of the system;

- Assess the current state of DT-based EMS research and elucidate the application scenarios of DT in the intelligent transportation environment;

- Outline future trends of AI and DT technology in OBMG energy management, covering current challenges and future directions.
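To make the model-based versus model-free distinction concrete, the following minimal sketch contrasts the two on a toy Markov decision process with three battery state-of-charge levels. The plant function `step`, its rewards, and all hyperparameters are hypothetical values chosen for illustration; they are not taken from any of the reviewed studies.

```python
import random

N_STATES, N_ACTIONS, GAMMA = 3, 2, 0.9  # toy SoC levels: 0=low, 1=mid, 2=high

def step(soc, action):
    """Hypothetical deterministic plant: returns (next_soc, reward)."""
    if action == 0:                            # discharge the battery
        return (0, -1.0) if soc == 0 else (soc - 1, 1.0)
    return min(soc + 1, N_STATES - 1), -0.5    # run the engine and recharge

def model_based_q(sweeps=500):
    """Value iteration: plans by querying the known model for every (s, a)."""
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(sweeps):
        for s in range(N_STATES):
            for a in range(N_ACTIONS):
                s_next, r = step(s, a)
                q[s][a] = r + GAMMA * max(q[s_next])
    return q

def model_free_q(steps=20000, alpha=0.1, seed=0):
    """Tabular Q-learning: learns only from transitions it experiences."""
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    s = 1
    for _ in range(steps):
        a = rng.randrange(N_ACTIONS)           # purely exploratory behaviour
        s_next, r = step(s, a)                 # the agent sees only this sample
        q[s][a] += alpha * (r + GAMMA * max(q[s_next]) - q[s][a])
        s = s_next
    return q

def greedy(q):
    """Greedy action for each state under the action-value table q."""
    return [max(range(N_ACTIONS), key=lambda a: q[s][a]) for s in range(N_STATES)]

q_mb, q_mf = model_based_q(), model_free_q()
print(greedy(q_mb), greedy(q_mf))
```

On this small deterministic problem both routes should arrive at the same greedy policy. The difference lies in what each needs: the model-based planner evaluates every state-action pair through the model, whereas the model-free learner only ever observes the transitions it samples.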

2. Emerging Information Technologies

2.1. AI Technology

2.1.1. Three Groups in ML

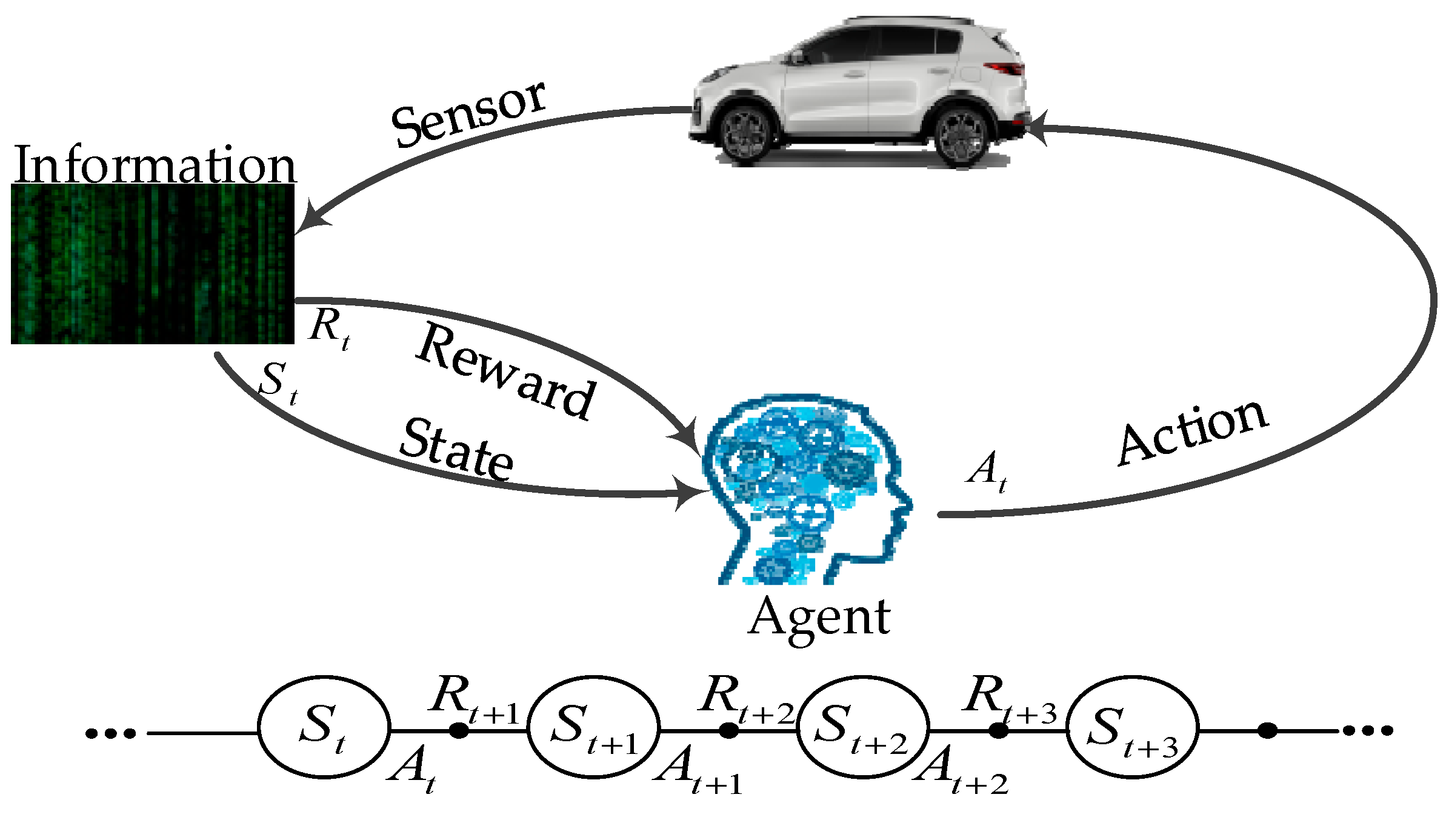

2.1.2. RL

2.2. DT Technology

2.2.1. Development and Evolution of DT

2.2.2. Composition of DT

2.3. Other Information Technologies

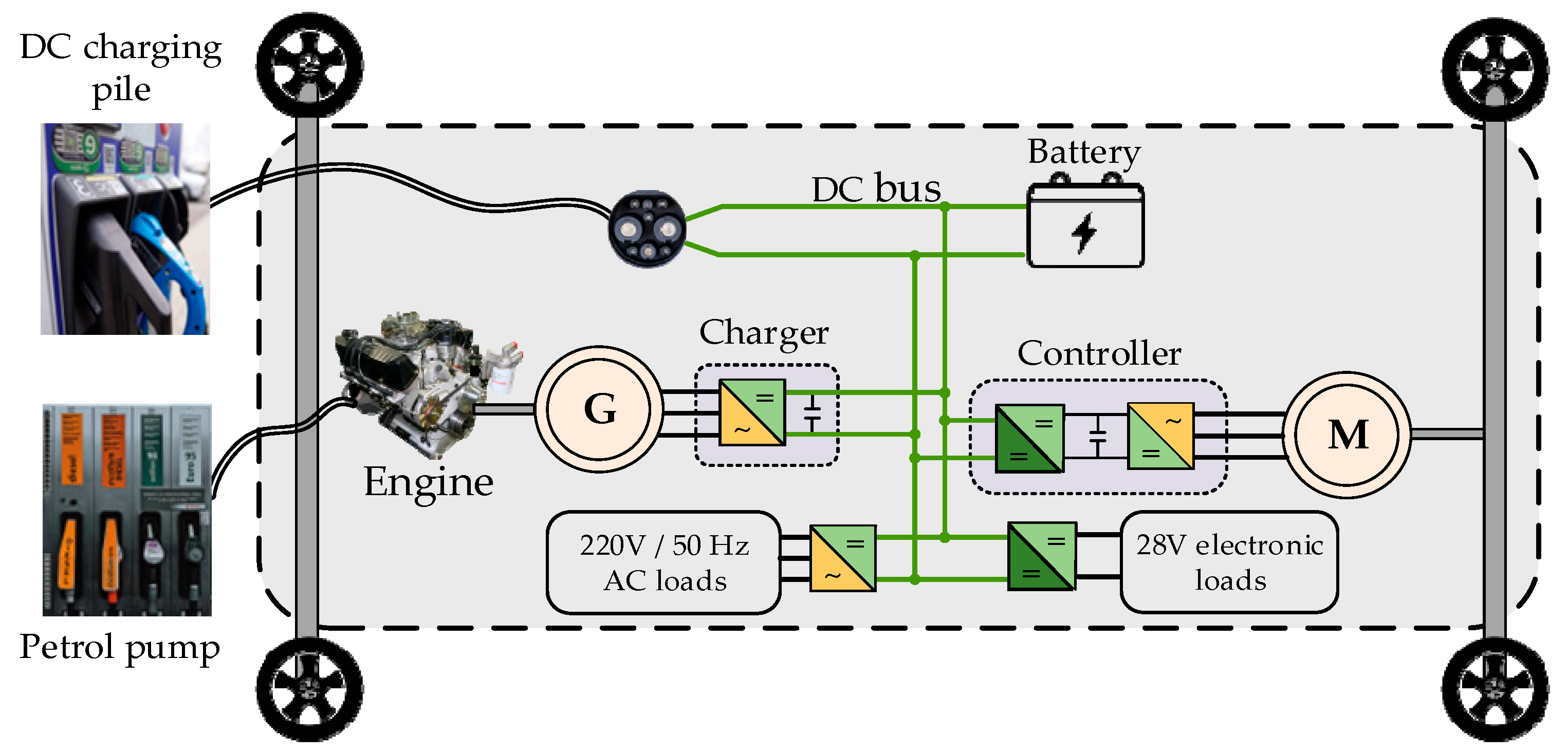

3. Onboard Microgrid Energy Management System

3.1. The Energy Management System of OBMG Overview

3.2. Traditional Energy Management Strategies

3.2.1. Rule-Based Control Strategies

3.2.2. Optimization-Based Control Strategies

4. AI Technology for Energy Management

4.1. Overview of AI in Energy Management

4.2. RL for Energy Management

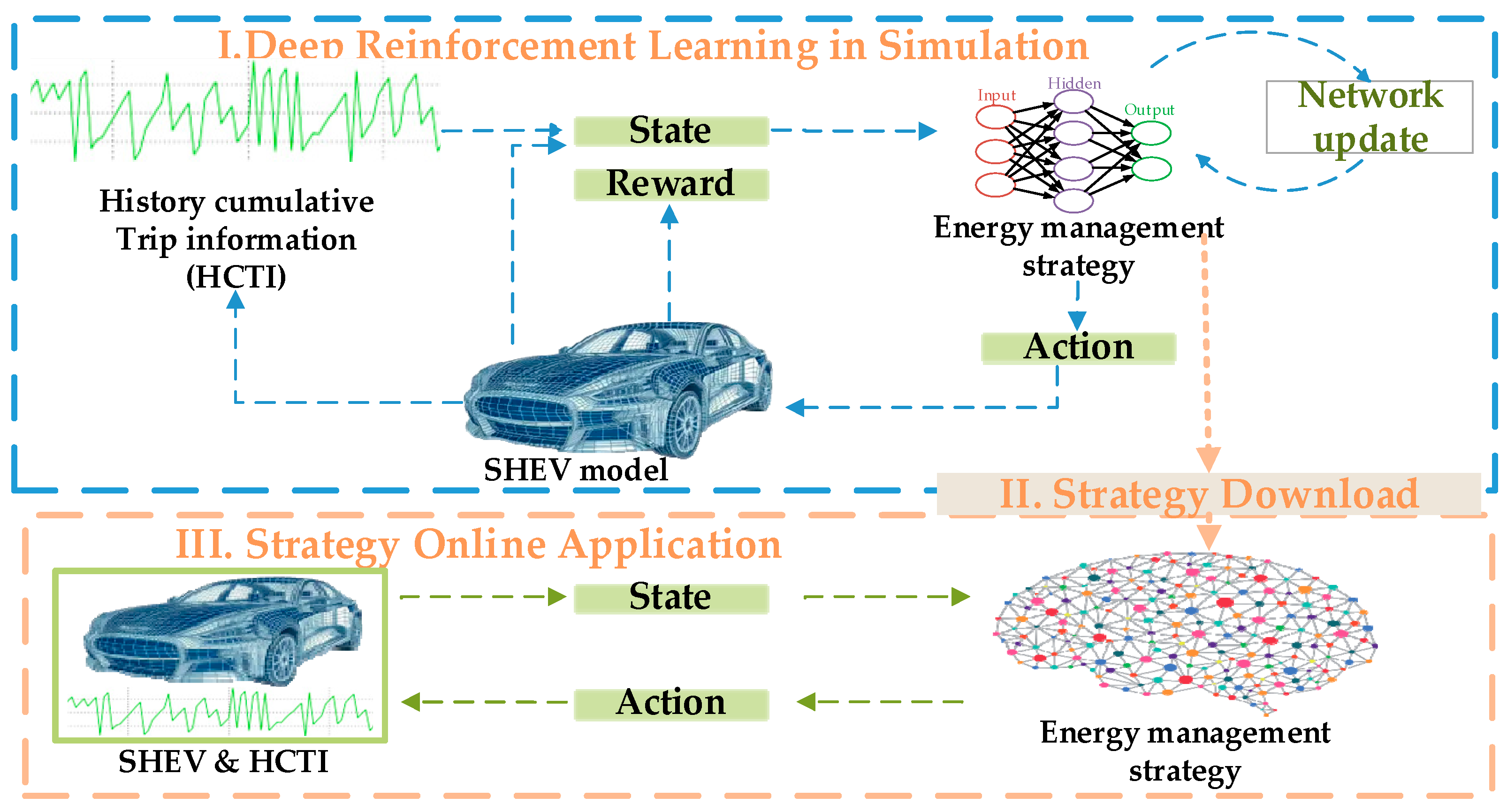

4.2.1. Model-Free RL

4.2.2. Model-Based RL

4.3. Other AI Methods for Energy Management

5. DT Technology for Energy Management

5.1. DT Applied in OBMG

5.2. DT Applied in the Transportation Grid

6. Future Trends

- Data quality and reliability are crucial for the effective use of DT and AI. In the electrical industry, however, both are often degraded by factors such as sensor noise and data collection errors. How to improve data quality and reliability, and thereby mitigate the impact of data uncertainty, remains an unresolved issue.

- Model accuracy and precision are central to the development of DT models and AI algorithms. The inherent complexity of electrical systems makes accurate models and precise algorithms difficult to build, so further research is needed to improve both in this domain.

- Privacy and security protection are of paramount importance when using DT and AI. These applications collect and process substantial amounts of data, so sensitive business and personal information within the electrical industry must be protected. Ensuring the security and privacy of such information remains a significant open concern.

- Standards and interoperability are vital for the widespread adoption of DT and AI. This requires common standards and specifications, as well as better interoperability among diverse systems, which would enable seamless cross-platform and cross-system integration of DT and AI technologies.
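As one illustration of the data-quality challenge listed above, the sketch below applies a Hampel-style median filter to a short sensor record containing a single spike. This is a common generic technique, not a method proposed in the reviewed literature; the function name `despike`, the window length, the threshold, and the sample values are all assumptions made for the example.

```python
from statistics import median

def despike(samples, window=5, threshold=3.0):
    """Hampel-style filter: replace outliers with the local median."""
    half = window // 2
    cleaned = []
    for i, x in enumerate(samples):
        # The neighbourhood is truncated at both ends of the record.
        neighbourhood = samples[max(0, i - half): i + half + 1]
        med = median(neighbourhood)
        # Median absolute deviation, scaled by 1.4826 so that it
        # approximates a standard deviation under Gaussian noise.
        mad = 1.4826 * median(abs(v - med) for v in neighbourhood)
        cleaned.append(med if abs(x - med) > threshold * mad else x)
    return cleaned

# Hypothetical current-sensor readings with one collection error (50.0).
samples = [10.0, 10.1, 9.9, 50.0, 10.0, 10.2, 9.8]
cleaned = despike(samples)
print(cleaned)
```

A median-based filter is used here because a single large spike barely shifts the median, whereas it would distort a moving average. In practice the window and threshold would need tuning to the sensor and sampling rate.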

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| BMS | Battery management system |
| DDPG | Deep deterministic policy gradient |
| DNN | Deep neural network |
| DP | Dynamic programming |
| DQN | Deep Q-network |
| DRL | Deep reinforcement learning |
| DT | Digital twin |
| ECMS | Equivalent consumption minimization strategy |
| EMS | Energy management strategies |
| ERS | Electric railway system |
| FC | Fuel cell |
| GA | Genetic algorithms |
| GUI | Graphical user interface |
| HEV | Hybrid electric vehicle |
| IoT | Internet of Things |
| IoV | Internet of Vehicles |
| MDP | Markov decision process |
| ML | Machine learning |
| MPC | Model predictive control |
| NN | Neural network |
| OBMG | Onboard microgrid |
| PID | Proportional-integral-derivative |
| PPO | Proximal policy optimization |
| PSO | Particle swarm optimization |
| RL | Reinforcement learning |
| SAC | Soft actor–critic |
| SARSA | State-action-reward-state-action |
| SL | Supervised learning |
| SoC | State of charge |
| TD3 | Twin delayed DDPG |
| UL | Unsupervised learning |

References

- Singh, N. India’s Strategy for Achieving Net Zero. Energies 2022, 15, 5852. [Google Scholar] [CrossRef]

- Deng, R.; Liu, Y.; Chen, W.; Liang, H. A Survey on Electric Buses—Energy Storage, Power Management, and Charging Scheduling. IEEE Trans. Intell. Transp. Syst. 2021, 22, 9–22. [Google Scholar] [CrossRef]

- Hu, X.; Han, J.; Tang, X.; Lin, X. Powertrain Design and Control in Electrified Vehicles: A Critical Review. IEEE Trans. Transp. Electrif. 2021, 7, 1990–2009. [Google Scholar] [CrossRef]

- Tarafdar-Hagh, M.; Taghizad-Tavana, K.; Ghanbari-Ghalehjoughi, M.; Nojavan, S.; Jafari, P.; Mohammadpour Shotorbani, A. Optimizing Electric Vehicle Operations for a Smart Environment: A Comprehensive Review. Energies 2023, 16, 4302. [Google Scholar] [CrossRef]

- Chu, S.; Majumdar, A. Opportunities and Challenges for a Sustainable Energy Future. Nature 2012, 488, 294–303. [Google Scholar] [CrossRef] [PubMed]

- Mavlutova, I.; Atstaja, D.; Grasis, J.; Kuzmina, J.; Uvarova, I.; Roga, D. Urban Transportation Concept and Sustainable Urban Mobility in Smart Cities: A Review. Energies 2023, 16, 3585. [Google Scholar] [CrossRef]

- Rimpas, D.; Kaminaris, S.D.; Piromalis, D.D.; Vokas, G.; Arvanitis, K.G.; Karavas, C.-S. Comparative Review of Motor Technologies for Electric Vehicles Powered by a Hybrid Energy Storage System Based on Multi-Criteria Analysis. Energies 2023, 16, 2555. [Google Scholar] [CrossRef]

- Patnaik, B.; Kumar, S.; Gawre, S. Recent Advances in Converters and Storage Technologies for More Electric Aircrafts: A Review. IEEE J. Miniaturization Air Space Syst. 2022, 3, 78–87. [Google Scholar] [CrossRef]

- Wang, X.; Bazmohammadi, N.; Atkin, J.; Bozhko, S.; Guerrero, J.M. Chance-Constrained Model Predictive Control-Based Operation Management of More-Electric Aircraft Using Energy Storage Systems under Uncertainty. J. Energy Storage 2022, 55, 105629. [Google Scholar] [CrossRef]

- Buticchi, G.; Bozhko, S.; Liserre, M.; Wheeler, P.; Al-Haddad, K. On-Board Microgrids for the More Electric Aircraft—Technology Review. IEEE Trans. Ind. Electron. 2019, 66, 5588–5599. [Google Scholar] [CrossRef]

- Ajanovic, A.; Haas, R.; Schrödl, M. On the Historical Development and Future Prospects of Various Types of Electric Mobility. Energies 2021, 14, 1070. [Google Scholar] [CrossRef]

- Cao, Y.; Yao, M.; Sun, X. An Overview of Modelling and Energy Management Strategies for Hybrid Electric Vehicles. Appl. Sci. 2023, 13, 5947. [Google Scholar] [CrossRef]

- Zhang, H.; Peng, J.; Tan, H.; Dong, H.; Ding, F. A Deep Reinforcement Learning-Based Energy Management Framework With Lagrangian Relaxation for Plug-In Hybrid Electric Vehicle. IEEE Trans. Transp. Electrif. 2021, 7, 1146–1160. [Google Scholar] [CrossRef]

- Leite, J.P.S.P.; Voskuijl, M. Optimal Energy Management for Hybrid-Electric Aircraft. Aircr. Eng. Aerosp. Technol. 2020, 92, 851–861. [Google Scholar] [CrossRef]

- Motapon, S.N.; Dessaint, L.-A.; Al-Haddad, K. A Comparative Study of Energy Management Schemes for a Fuel-Cell Hybrid Emergency Power System of More-Electric Aircraft. IEEE Trans. Ind. Electron. 2014, 61, 1320–1334. [Google Scholar] [CrossRef]

- Xue, Q.; Zhang, X.; Teng, T.; Zhang, J.; Feng, Z.; Lv, Q. A Comprehensive Review on Classification, Energy Management Strategy, and Control Algorithm for Hybrid Electric Vehicles. Energies 2020, 13, 5355. [Google Scholar] [CrossRef]

- Salmasi, F.R. Control Strategies for Hybrid Electric Vehicles: Evolution, Classification, Comparison, and Future Trends. IEEE Trans. Veh. Technol. 2007, 56, 2393–2404. [Google Scholar] [CrossRef]

- Worku, M.Y.; Hassan, M.A.; Abido, M.A. Real Time-Based under Frequency Control and Energy Management of Microgrids. Electronics 2020, 9, 1487. [Google Scholar] [CrossRef]

- Rasool, M.; Khan, M.A.; Zou, R. A Comprehensive Analysis of Online and Offline Energy Management Approaches for Optimal Performance of Fuel Cell Hybrid Electric Vehicles. Energies 2023, 16, 3325. [Google Scholar] [CrossRef]

- Wang, X.; Atkin, J.; Bazmohammadi, N.; Bozhko, S.; Guerrero, J.M. Optimal Load and Energy Management of Aircraft Microgrids Using Multi-Objective Model Predictive Control. Sustainability 2021, 13, 13907. [Google Scholar] [CrossRef]

- Teng, F.; Ding, Z.; Hu, Z.; Sarikprueck, P. Technical Review on Advanced Approaches for Electric Vehicle Charging Demand Management, Part I: Applications in Electric Power Market and Renewable Energy Integration. IEEE Trans. Ind. Appl. 2020, 56, 5684–5694. [Google Scholar] [CrossRef]

- Xu, L.; Guerrero, J.M.; Lashab, A.; Wei, B.; Bazmohammadi, N.; Vasquez, J.C.; Abusorrah, A. A Review of DC Shipboard Microgrids—Part II: Control Architectures, Stability Analysis, and Protection Schemes. IEEE Trans. Power Electron. 2022, 37, 4105–4120. [Google Scholar] [CrossRef]

- Boglou, V.; Karavas, C.-S.; Arvanitis, K.; Karlis, A. A Fuzzy Energy Management Strategy for the Coordination of Electric Vehicle Charging in Low Voltage Distribution Grids. Energies 2020, 13, 3709. [Google Scholar] [CrossRef]

- Boglou, V.; Karavas, C.; Karlis, A.; Arvanitis, K.G.; Palaiologou, I. An Optimal Distributed RES Sizing Strategy in Hybrid Low Voltage Networks Focused on EVs’ Integration. IEEE Access 2023, 11, 16250–16270. [Google Scholar] [CrossRef]

- Boglou, V.; Karavas, C.-S.; Karlis, A.; Arvanitis, K. An Intelligent Decentralized Energy Management Strategy for the Optimal Electric Vehicles’ Charging in Low-Voltage Islanded Microgrids. Int. J. Energy Res. 2022, 46, 2988–3016. [Google Scholar] [CrossRef]

- Bhatti, G.; Mohan, H.; Raja Singh, R. Towards the Future of Smart Electric Vehicles: Digital Twin Technology. Renew. Sustain. Energy Rev. 2021, 141, 110801. [Google Scholar] [CrossRef]

- Joshi, A.; Capezza, S.; Alhaji, A.; Chow, M.-Y. Survey on AI and Machine Learning Techniques for Microgrid Energy Management Systems. IEEE/CAA J. Autom. Sin. 2023, 10, 1513–1529. [Google Scholar] [CrossRef]

- Zhang, Y.; Chen, Z.; Li, G.; Liu, Y.; Chen, H.; Cunningham, G.; Early, J. Machine Learning-Based Vehicle Model Construction and Validation—Toward Optimal Control Strategy Development for Plug-In Hybrid Electric Vehicles. IEEE Trans. Transp. Electrif. 2022, 8, 1590–1603. [Google Scholar] [CrossRef]

- Gan, J.; Li, S.; Wei, C.; Deng, L.; Tang, X. Intelligent Learning Algorithm and Intelligent Transportation-Based Energy Management Strategies for Hybrid Electric Vehicles: A Review. IEEE Trans. Intell. Transp. Syst. 2023, 1–17. [Google Scholar] [CrossRef]

- Feiyan, Q.; Weimin, L. A Review of Machine Learning on Energy Management Strategy for Hybrid Electric Vehicles. In Proceedings of the 2021 6th Asia Conference on Power and Electrical Engineering (ACPEE), Chongqing, China, 8–11 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 315–319. [Google Scholar]

- Zhao, S.; Blaabjerg, F.; Wang, H. An Overview of Artificial Intelligence Applications for Power Electronics. IEEE Trans. Power Electron. 2021, 36, 4633–4658. [Google Scholar] [CrossRef]

- Park, H.-A.; Byeon, G.; Son, W.; Jo, H.-C.; Kim, J.; Kim, S. Digital Twin for Operation of Microgrid: Optimal Scheduling in Virtual Space of Digital Twin. Energies 2020, 13, 5504. [Google Scholar] [CrossRef]

- Shamsuzzoha, A.; Nieminen, J.; Piya, S.; Rutledge, K. Smart City for Sustainable Environment: A Comparison of Participatory Strategies from Helsinki, Singapore and London. Cities 2021, 114, 103194. [Google Scholar] [CrossRef]

- Major, P.; Li, G.; Hildre, H.P.; Zhang, H. The Use of a Data-Driven Digital Twin of a Smart City: A Case Study of Ålesund, Norway. IEEE Instrum. Meas. Mag. 2021, 24, 39–49. [Google Scholar] [CrossRef]

- Biswas, A.; Emadi, A. Energy Management Systems for Electrified Powertrains: State-of-the-Art Review and Future Trends. IEEE Trans. Veh. Technol. 2019, 68, 6453–6467. [Google Scholar] [CrossRef]

- Ali, A.M.; Moulik, B. On the Role of Intelligent Power Management Strategies for Electrified Vehicles: A Review of Predictive and Cognitive Methods. IEEE Trans. Transp. Electrif. 2022, 8, 368–383. [Google Scholar] [CrossRef]

- Celsi, L.R.; Valli, A. Applied Control and Artificial Intelligence for Energy Management: An Overview of Trends in EV Charging, Cyber-Physical Security and Predictive Maintenance. Energies 2023, 16, 4678. [Google Scholar] [CrossRef]

- Ozay, M.; Esnaola, I.; Yarman Vural, F.T.; Kulkarni, S.R.; Poor, H.V. Machine Learning Methods for Attack Detection in the Smart Grid. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 1773–1786. [Google Scholar] [CrossRef] [PubMed]

- Singh, S.; Ramkumar, K.R.; Kukkar, A. Machine Learning Techniques and Implementation of Different ML Algorithms. In Proceedings of the 2021 2nd Global Conference for Advancement in Technology (GCAT), Bangalore, India, 1–3 October 2021; pp. 1–6. [Google Scholar]

- Ravi Kumar, R.; Babu Reddy, M.; Praveen, P. A Review of Feature Subset Selection on Unsupervised Learning. In Proceedings of the 2017 Third International Conference on Advances in Electrical, Electronics, Information, Communication and Bio-Informatics (AEEICB), Chennai, India, 27–28 February 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 163–167. [Google Scholar]

- Kiumarsi, B.; Vamvoudakis, K.G.; Modares, H.; Lewis, F.L. Optimal and Autonomous Control Using Reinforcement Learning: A Survey. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2042–2062. [Google Scholar] [CrossRef]

- Raković, S.V. The Minkowski-Bellman Equation. arXiv 2020, arXiv:2008.13010. [Google Scholar]

- Nguyen, S.; Abdelhakim, M.; Kerestes, R. Survey Paper of Digital Twins and Their Integration into Electric Power Systems. In Proceedings of the 2021 IEEE Power & Energy Society General Meeting (PESGM), Washington, DC, USA, 26 July 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–5. [Google Scholar]

- Dimogiannis, K.; Sankowski, A.; Holc, C.; Newton, G.; Walsh, D.A.; O’Shea, J.; Khlobystov, A.; Sankowski, A. Understanding the Mg Cycling Mechanism on a MgTFSI-Glyme Electrolyte. ECS Meet. Abstr. 2022, MA2022-01, 574. [Google Scholar] [CrossRef]

- Shafto, M.; Conroy, M.; Doyle, R.; Glaessgen, E.; Kemp, C.; LeMoigne, J.; Wang, L. Modeling, Simulation, Information Technology and Processing Roadmap; NASA: Washington, DC, USA, 2010. [Google Scholar]

- Ríos, J.; Morate, F.M.; Oliva, M.; Hernández, J.C. Framework to Support the Aircraft Digital Counterpart Concept with an Industrial Design View. Int. J. Agil. Syst. Manag. 2016, 9, 212. [Google Scholar] [CrossRef]

- Grieves, M. Digital Twin: Manufacturing Excellence through Virtual Factory Replication. White Pap. 2015, 1, 1–7. [Google Scholar]

- Zhang, G.; Huo, C.; Zheng, L.; Li, X. An Architecture Based on Digital Twins for Smart Power Distribution System. In Proceedings of the 2020 3rd International Conference on Artificial Intelligence and Big Data (ICAIBD), Chengdu, China, 28–31 May 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 29–33. [Google Scholar]

- Niloy, F.A.; Nayeem, M.A.; Rahman, M.M.; Dowla, M.N.U. Blockchain-Based Peer-to-Peer Sustainable Energy Trading in Microgrid Using Smart Contracts. In Proceedings of the 2021 2nd International Conference on Robotics, Electrical and Signal Processing Techniques (ICREST), Dhaka, Bangladesh, 5–7 January 2021; pp. 61–66. [Google Scholar]

- Dinesha, D.L.; Balachandra, P. Establishing Interoperability in Blockchain Enabled Interconnected Smart Microgrids Using Ignite CLI. In Proceedings of the 2023 IEEE Green Technologies Conference (GreenTech), Denver, CO, USA, 19–21 April 2023; pp. 46–50. [Google Scholar]

- Laayati, O.; El Hadraoui, H.; Bouzi, M.; El-Alaoui, A.; Kousta, A.; Chebak, A. Smart Energy Management System: Blockchain-Based Smart Meters in Microgrids. In Proceedings of the 2022 4th Global Power, Energy and Communication Conference (GPECOM), Cappadocia, Turkey, 14–17 June 2022; pp. 580–585. [Google Scholar]

- Raju, L.; Surabhi, S.; Vimalan, K.M. Blockchain Based Energy Transaction in Microgrid. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24–26 November 2022; pp. 1–6. [Google Scholar]

- Gomozov, O.; Trovao, J.P.F.; Kestelyn, X.; Dubois, M.R. Adaptive Energy Management System Based on a Real-Time Model Predictive Control With Nonuniform Sampling Time for Multiple Energy Storage Electric Vehicle. IEEE Trans. Veh. Technol. 2016, 66, 5520–5530. [Google Scholar] [CrossRef]

- Li, J.; Zhou, Q.; Williams, H.; Xu, H.; Du, C. Cyber-Physical Data Fusion in Surrogate-Assisted Strength Pareto Evolutionary Algorithm for PHEV Energy Management Optimization. IEEE Trans. Ind. Inform. 2022, 18, 4107–4117. [Google Scholar] [CrossRef]

- Boutasseta, N.; Bouakkaz, M.S.; Fergani, N.; Attoui, I.; Bouraiou, A.; Neçaibia, A. Solar Energy Conversion Systems Optimization Using Novel Jellyfish Based Maximum Power Tracking Strategy. Procedia Comput. Sci. 2021, 194, 80–88. [Google Scholar] [CrossRef]

- Garcia-Triviño, P.; Fernández-Ramírez, L.; García-Vázquez, C.; Jurado, F. Energy Management System of Fuel-Cell-Battery Hybrid Tramway. IEEE Trans. Ind. Electron. 2010, 57, 4013–4023. [Google Scholar] [CrossRef]

- Li, C.-Y.; Liu, G.-P. Optimal Fuzzy Power Control and Management of Fuel Cell/Battery Hybrid Vehicles. J. Power Sources 2009, 192, 525–533. [Google Scholar] [CrossRef]

- Pisu, P.; Koprubasi, K.; Rizzoni, G. Energy Management and Drivability Control Problems for Hybrid Electric Vehicles. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 12–15 December 2005; IEEE: Piscataway, NJ, USA, 2005; pp. 1824–1830. [Google Scholar]

- García, P.; Torreglosa, J.; Fernández, L.; Jurado, F. Viability Study of a FC-Battery-SC Tramway Controlled by Equivalent Consumption Minimization Strategy. Int. J. Hydrogen Energy 2012, 37, 9368–9382. [Google Scholar] [CrossRef]

- Vural, B.; Boynuegri, A.; Nakir, I.; Erdinc, O.; Balikci, A.; Uzunoglu, M.; Gorgun, H.; Dusmez, S. Fuel Cell and Ultra-Capacitor Hybridization: A Prototype Test Bench Based Analysis of Different Energy Management Strategies for Vehicular Applications. Int. J. Hydrogen Energy 2010, 35, 11161–11171. [Google Scholar] [CrossRef]

- Roncancio, J.S.; Vuelvas, J.; Patino, D.; Correa-Flórez, C.A. Flower Greenhouse Energy Management to Offer Local Flexibility Markets. Energies 2022, 15, 4572. [Google Scholar] [CrossRef]

- Aldbaiat, B.; Nour, M.; Radwan, E.; Awada, E. Grid-Connected PV System with Reactive Power Management and an Optimized SRF-PLL Using Genetic Algorithm. Energies 2022, 15, 2177. [Google Scholar] [CrossRef]

- Pinheiro, G.G.; Da Silva, C.H.; Guimarães, B.P.B.; Gonzatti, R.B.; Pereira, R.R.; Sant’Ana, W.C.; Lambert-Torres, G.; Santana-Filho, J. Power Flow Control Using Series Voltage Source Converters in Distribution Grids. Energies 2022, 15, 3337. [Google Scholar] [CrossRef]

- Laayati, O.; Hadraoui, H.E.; Bouzi, M.; Elmaghraoui, A.; Ledmaoui, Y.; Chebak, A. Tabu Search Optimization for Energy Management in Microgrids: A Solution to Grid-Connected and Standalone Operation Modes. In Proceedings of the 2023 5th Global Power, Energy and Communication Conference (GPECOM), Nevsehir, Turkiye, 14 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 401–406. [Google Scholar]

- Hare, J.; Shi, X.; Gupta, S.; Bazzi, A. Fault Diagnostics in Smart Micro-Grids: A Survey. Renew. Sustain. Energy Rev. 2016, 60, 1114–1124. [Google Scholar] [CrossRef]

- Rahman Fahim, S.; Sarker, S.K.; Muyeen, S.M.; Sheikh, M.; Islam, R.; Das, S.K. Microgrid Fault Detection and Classification: Machine Learning Based Approach, Comparison, and Reviews. Energies 2020, 13, 3460. [Google Scholar] [CrossRef]

- Laayati, O.; El Hadraoui, H.; El Magharaoui, A.; El-Bazi, N.; Bouzi, M.; Chebak, A.; Guerrero, J.M. An AI-Layered with Multi-Agent Systems Architecture for Prognostics Health Management of Smart Transformers: A Novel Approach for Smart Grid-Ready Energy Management Systems. Energies 2022, 15, 7217. [Google Scholar] [CrossRef]

- Tahir, M.; Tenbohlen, S. Transformer Winding Fault Classification and Condition Assessment Based on Random Forest Using FRA. Energies 2023, 16, 3714. [Google Scholar] [CrossRef]

- Atalar, F.; Ersoy, A.; Rozga, P. Investigation of Effects of Different High Voltage Types on Dielectric Strength of Insulating Liquids. Energies 2022, 15, 8116. [Google Scholar] [CrossRef]

- Li, G.; Görges, D. Fuel-Efficient Gear Shift and Power Split Strategy for Parallel HEVs Based on Heuristic Dynamic Programming and Neural Networks. IEEE Trans. Veh. Technol. 2019, 68, 9519–9528. [Google Scholar] [CrossRef]

- Xi, Z.; Wu, D.; Ni, W.; Ma, X. Energy-Optimized Trajectory Planning for Solar-Powered Aircraft in a Wind Field Using Reinforcement Learning. IEEE Access 2022, 10, 87715–87732. [Google Scholar] [CrossRef]

- Kong, H.; Fang, Y.; Fan, L.; Wang, H.; Zhang, X.; Hu, J. A Novel Torque Distribution Strategy Based on Deep Recurrent Neural Network for Parallel Hybrid Electric Vehicle. IEEE Access 2019, 7, 65174–65185. [Google Scholar] [CrossRef]

- Mosayebi, M.; Gheisarnejad, M.; Farsizadeh, H.; Andresen, B.; Khooban, M.H. Smart Extreme Fast Portable Charger for Electric Vehicles-Based Artificial Intelligence. IEEE Trans. Circuits Syst. II Express Briefs 2023, 70, 586–590. [Google Scholar] [CrossRef]

- Anselma, P.G.; Huo, Y.; Roeleveld, J.; Belingardi, G.; Emadi, A. Integration of On-Line Control in Optimal Design of Multimode Power-Split Hybrid Electric Vehicle Powertrains. IEEE Trans. Veh. Technol. 2019, 68, 3436–3445. [Google Scholar] [CrossRef]

- Alfakih, T.; Hassan, M.M.; Gumaei, A.; Savaglio, C.; Fortino, G. Task Offloading and Resource Allocation for Mobile Edge Computing by Deep Reinforcement Learning Based on SARSA. IEEE Access 2020, 8, 54074–54084. [Google Scholar] [CrossRef]

- Zhu, J.; Song, Y.; Jiang, D.; Song, H. A New Deep-Q-Learning-Based Transmission Scheduling Mechanism for the Cognitive Internet of Things. IEEE Internet Things J. 2018, 5, 2375–2385. [Google Scholar] [CrossRef]

- Wang, Y.; Liu, H.; Zheng, W.; Xia, Y.; Li, Y.; Chen, P.; Guo, K.; Xie, H. Multi-Objective Workflow Scheduling with Deep-Q-Network-Based Multi-Agent Reinforcement Learning. IEEE Access 2019, 7, 39974–39982. [Google Scholar] [CrossRef]

- Bøhn, E.; Coates, E.M.; Moe, S.; Johansen, T.A. Deep Reinforcement Learning Attitude Control of Fixed-Wing UAVs Using Proximal Policy Optimization. In Proceedings of the 2019 International Conference on Unmanned Aircraft Systems (ICUAS), Atlanta, GA, USA, 11–14 June 2019; pp. 523–533. [Google Scholar]

- Wu, J.; Wei, Z.; Li, W.; Wang, Y.; Li, Y.; Sauer, D.U. Battery Thermal- and Health-Constrained Energy Management for Hybrid Electric Bus Based on Soft Actor-Critic DRL Algorithm. IEEE Trans. Ind. Inform. 2021, 17, 3751–3761. [Google Scholar] [CrossRef]

- Qiu, C.; Hu, Y.; Chen, Y.; Zeng, B. Deep Deterministic Policy Gradient (DDPG)-Based Energy Harvesting Wireless Communications. IEEE Internet Things J. 2019, 6, 8577–8588. [Google Scholar] [CrossRef]

- Khalid, J.; Ramli, M.A.M.; Khan, M.S.; Hidayat, T. Efficient Load Frequency Control of Renewable Integrated Power System: A Twin Delayed DDPG-Based Deep Reinforcement Learning Approach. IEEE Access 2022, 10, 51561–51574. [Google Scholar] [CrossRef]

- Qiu, D.; Wang, Y.; Hua, W.; Strbac, G. Reinforcement Learning for Electric Vehicle Applications in Power Systems: A Critical Review. Renew. Sustain. Energy Rev. 2023, 173, 113052. [Google Scholar] [CrossRef]

- Xu, B.; Hu, X.; Tang, X.; Lin, X.; Li, H.; Rathod, D.; Filipi, Z. Ensemble Reinforcement Learning-Based Supervisory Control of Hybrid Electric Vehicle for Fuel Economy Improvement. IEEE Trans. Transp. Electrif. 2020, 6, 717–727. [Google Scholar] [CrossRef]

- Guo, X.; Liu, T.; Tang, B.; Tang, X.; Zhang, J.; Tan, W.; Jin, S. Transfer Deep Reinforcement Learning-Enabled Energy Management Strategy for Hybrid Tracked Vehicle. IEEE Access 2020, 8, 165837–165848. [Google Scholar] [CrossRef]

- Du, G.; Zou, Y.; Zhang, X.; Guo, L.; Guo, N. Heuristic Energy Management Strategy of Hybrid Electric Vehicle Based on Deep Reinforcement Learning with Accelerated Gradient Optimization. IEEE Trans. Transp. Electrif. 2021, 7, 2194–2208. [Google Scholar] [CrossRef]

- Tang, X.; Chen, J.; Pu, H.; Liu, T.; Khajepour, A. Double Deep Reinforcement Learning-Based Energy Management for a Parallel Hybrid Electric Vehicle With Engine Start–Stop Strategy. IEEE Trans. Transp. Electrif. 2022, 8, 1376–1388. [Google Scholar] [CrossRef]

- He, H.; Wang, Y.; Li, J.; Dou, J.; Lian, R.; Li, Y. An Improved Energy Management Strategy for Hybrid Electric Vehicles Integrating Multistates of Vehicle-Traffic Information. IEEE Trans. Transp. Electrif. 2021, 7, 1161–1172. [Google Scholar] [CrossRef]

- Tang, X.; Zhang, J.; Pi, D.; Lin, X.; Grzesiak, L.M.; Hu, X. Battery Health-Aware and Deep Reinforcement Learning-Based Energy Management for Naturalistic Data-Driven Driving Scenarios. IEEE Trans. Transp. Electrif. 2022, 8, 948–964. [Google Scholar] [CrossRef]

- Zhang, B.; Zou, Y.; Zhang, X.; Du, G.; Jiao, F.; Guo, N. Online Updating Energy Management Strategy Based on Deep Reinforcement Learning With Accelerated Training for Hybrid Electric Tracked Vehicles. IEEE Trans. Transp. Electrif. 2022, 8, 3289–3306. [Google Scholar] [CrossRef]

- Tang, X.; Chen, J.; Yang, K.; Toyoda, M.; Liu, T.; Hu, X. Visual Detection and Deep Reinforcement Learning-Based Car Following and Energy Management for Hybrid Electric Vehicles. IEEE Trans. Transp. Electrif. 2022, 8, 2501–2515. [Google Scholar] [CrossRef]

- Peng, J.; Fan, Y.; Yin, G.; Jiang, R. Collaborative Optimization of Energy Management Strategy and Adaptive Cruise Control Based on Deep Reinforcement Learning. IEEE Trans. Transp. Electrif. 2023, 9, 34–44. [Google Scholar] [CrossRef]

- Lee, H.; Song, C.; Kim, N.; Cha, S.W. Comparative Analysis of Energy Management Strategies for HEV: Dynamic Programming and Reinforcement Learning. IEEE Access 2020, 8, 67112–67123. [Google Scholar] [CrossRef]

- Li, Y.; He, H.; Peng, J.; Wang, H. Deep Reinforcement Learning-Based Energy Management for a Series Hybrid Electric Vehicle Enabled by History Cumulative Trip Information. IEEE Trans. Veh. Technol. 2019, 68, 7416–7430. [Google Scholar] [CrossRef]

- Liu, J.; Chen, Y.; Zhan, J.; Shang, F. Heuristic Dynamic Programming Based Online Energy Management Strategy for Plug-In Hybrid Electric Vehicles. IEEE Trans. Veh. Technol. 2019, 68, 4479–4493. [Google Scholar] [CrossRef]

- Lee, H.; Cha, S.W. Energy Management Strategy of Fuel Cell Electric Vehicles Using Model-Based Reinforcement Learning with Data-Driven Model Update. IEEE Access 2021, 9, 59244–59254. [Google Scholar] [CrossRef]

- Wu, J.; Zou, Y.; Zhang, X.; Liu, T.; Kong, Z.; He, D. An Online Correction Predictive EMS for a Hybrid Electric Tracked Vehicle Based on Dynamic Programming and Reinforcement Learning. IEEE Access 2019, 7, 98252–98266. [Google Scholar] [CrossRef]

- Lee, H.; Cha, S.W. Reinforcement Learning Based on Equivalent Consumption Minimization Strategy for Optimal Control of Hybrid Electric Vehicles. IEEE Access 2021, 9, 860–871. [Google Scholar] [CrossRef]

- Lee, W.; Jeoung, H.; Park, D.; Kim, T.; Lee, H.; Kim, N. A Real-Time Intelligent Energy Management Strategy for Hybrid Electric Vehicles Using Reinforcement Learning. IEEE Access 2021, 9, 72759–72768. [Google Scholar] [CrossRef]

- Biswas, A.; Anselma, P.G.; Emadi, A. Real-Time Optimal Energy Management of Multimode Hybrid Electric Powertrain with Online Trainable Asynchronous Advantage Actor–Critic Algorithm. IEEE Trans. Transp. Electrif. 2022, 8, 2676–2694. [Google Scholar] [CrossRef]

- Hu, B.; Li, J. An Adaptive Hierarchical Energy Management Strategy for Hybrid Electric Vehicles Combining Heuristic Domain Knowledge and Data-Driven Deep Reinforcement Learning. IEEE Trans. Transp. Electrif. 2022, 8, 3275–3288. [Google Scholar] [CrossRef]

- Chen, L.; Liao, Z.; Ma, X. Nonlinear Model Predictive Control for Heavy-Duty Hybrid Electric Vehicles Using Random Power Prediction Method. IEEE Access 2020, 8, 202819–202835. [Google Scholar] [CrossRef]

- Feng, X.; Weng, C.; He, X.; Han, X.; Lu, L.; Ren, D.; Ouyang, M. Online State-of-Health Estimation for Li-Ion Battery Using Partial Charging Segment Based on Support Vector Machine. IEEE Trans. Veh. Technol. 2019, 68, 8583–8592. [Google Scholar] [CrossRef]

- Zhang, Y.; Huang, Y.; Chen, Z.; Li, G.; Liu, Y. A Novel Learning-Based Model Predictive Control Strategy for Plug-In Hybrid Electric Vehicle. IEEE Trans. Transp. Electrif. 2022, 8, 23–35. [Google Scholar] [CrossRef]

- Cheng, X.; Li, C.; Liu, X. A Review of Federated Learning in Energy Systems. In Proceedings of the 2022 IEEE/IAS Industrial and Commercial Power System Asia (I&CPS Asia), Shanghai, China, 7–9 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2089–2095. [Google Scholar]

- Tao, Y.; Qiu, J.; Lai, S.; Sun, X.; Wang, Y.; Zhao, J. Data-Driven Matching Protocol for Vehicle-to-Vehicle Energy Management Considering Privacy Preservation. IEEE Trans. Transp. Electrif. 2023, 9, 968–980. [Google Scholar] [CrossRef]

- Liu, T.; Tang, X.; Wang, H.; Yu, H.; Hu, X. Adaptive Hierarchical Energy Management Design for a Plug-In Hybrid Electric Vehicle. IEEE Trans. Veh. Technol. 2019, 68, 11513–11522. [Google Scholar] [CrossRef]

- Fathy, A.; Al-Dhaifallah, M.; Rezk, H. Recent Coyote Algorithm-Based Energy Management Strategy for Enhancing Fuel Economy of Hybrid FC/Battery/SC System. IEEE Access 2019, 7, 179409–179419. [Google Scholar] [CrossRef]

- Alahmadi, A.A.A.; Belkhier, Y.; Ullah, N.; Abeida, H.; Soliman, M.S.; Khraisat, Y.S.H.; Alharbi, Y.M. Hybrid Wind/PV/Battery Energy Management-Based Intelligent Non-Integer Control for Smart DC-Microgrid of Smart University. IEEE Access 2021, 9, 98948–98961. [Google Scholar] [CrossRef]

- Wang, X.V.; Chen, M. Artificial Intelligence in the Digital Twins: State of the Art, Challenges, and Future Research Topics [Version 2; Peer Review: 2 Approved]. Digital Twin. Available online: https://digitaltwin1.org/articles/1-12/v2 (accessed on 20 June 2023).

- Liu, J.; Li, C.; Bai, J.; Luo, Y.; Lv, H.; Lv, Z. Security in IoT-Enabled Digital Twins of Maritime Transportation Systems. IEEE Trans. Intell. Transp. Syst. 2023, 24, 2359–2367. [Google Scholar] [CrossRef]

- Yang, J.; Lin, F.; Chakraborty, C.; Yu, K.; Guo, Z.; Nguyen, A.-T.; Rodrigues, J.J.P.C. A Parallel Intelligence-Driven Resource Scheduling Scheme for Digital Twins-Based Intelligent Vehicular Systems. IEEE Trans. Intell. Veh. 2023, 8, 2770–2785. [Google Scholar] [CrossRef]

- Jafari, S.; Byun, Y.-C. Prediction of the Battery State Using the Digital Twin Framework Based on the Battery Management System. IEEE Access 2022, 10, 124685–124696. [Google Scholar] [CrossRef]

- An Overview of Digital Twins Methods Applied to Lithium-Ion Batteries. IEEE Conference Publication, IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/10006169 (accessed on 23 April 2023).

- Bugueño, V.; Barbosa, K.A.; Rajendran, S.; Díaz, M. An Overview of Digital Twins Methods Applied to Lithium-Ion Batteries. In Proceedings of the 2022 IEEE International Conference on Automation/XXV Congress of the Chilean Association of Automatic Control (ICA-ACCA), Curicó, Chile, 24–28 October 2022; pp. 1–7.

- Li, Y.; Wang, S.; Duan, X.; Liu, S.; Liu, J.; Hu, S. Multi-Objective Energy Management for Atkinson Cycle Engine and Series Hybrid Electric Vehicle Based on Evolutionary NSGA-II Algorithm Using Digital Twins. Energy Convers. Manag. 2021, 230, 113788.

- Guo, J.; Wu, X.; Liang, H.; Hu, J.; Liu, B. Digital-Twin Based Power Supply System Modeling and Analysis for Urban Rail Transportation. In Proceedings of the 2020 IEEE International Conference on Energy Internet (ICEI), Sydney, Australia, 24–28 August 2020; pp. 74–79.

- Mombiela, D.C.; Zadeh, M. Integrated Design and Control Approach for Marine Power Systems Based On Operational Data; “Digital Twin to Design”. In Proceedings of the 2021 IEEE Transportation Electrification Conference & Expo (ITEC), Chicago, IL, USA, 21–25 June 2021; pp. 520–527.

- Zhang, C.; Zhou, Q.; Shuai, B.; Williams, H.; Li, Y.; Hua, L.; Xu, H. Dedicated Adaptive Particle Swarm Optimization Algorithm for Digital Twin Based Control Optimization of the Plug-in Hybrid Vehicle. IEEE Trans. Transp. Electrif. 2022, 9, 3137–3148.

- Wu, J.; Wang, X.; Dang, Y.; Lv, Z. Digital Twins and Artificial Intelligence in Transportation Infrastructure: Classification, Application, and Future Research Directions. Comput. Electr. Eng. 2022, 101, 107983.

- Song, J.; He, G.; Wang, J.; Zhang, P. Shaping Future Low-Carbon Energy and Transportation Systems: Digital Technologies and Applications. iEnergy 2022, 1, 285–305.

- Sun, W.; Wang, P.; Xu, N.; Wang, G.; Zhang, Y. Dynamic Digital Twin and Distributed Incentives for Resource Allocation in Aerial-Assisted Internet of Vehicles. IEEE Internet Things J. 2022, 9, 5839–5852.

- Kaleybar, H.J.; Brenna, M.; Castelli-Dezza, F.; Zaninelli, D. Sustainable MVDC Railway System Integrated with Renewable Energy Sources and EV Charging Station. In Proceedings of the 2022 IEEE Vehicle Power and Propulsion Conference (VPPC), Merced, CA, USA, 1–4 November 2022; pp. 1–6.

| Ref. | Traditional Strategy: Rule-Based | Traditional Strategy: Optimization-Based | Artificial Intelligence: Unsupervised Learning | Artificial Intelligence: Supervised Learning | Artificial Intelligence: Reinforcement Learning | Digital Twin |
|---|---|---|---|---|---|---|
| [26] | ✓ | ✓ | ✓ | | | |
| [27] | ✓ | ✓ | ✓ | ✓ | ✓ | |
| [29] | ✓ | ✓ | ✓ | | | |
| [35] | ✓ | ✓ | ✓ | | | |
| [36] | ✓ | ✓ | | | | |
| [37] | ✓ | ✓ | | | | |
| this review | ✓ | ✓ | ✓ | ✓ | | |

| EMS Category | Method | Advantages | Disadvantages |
|---|---|---|---|
| Model-free RL | Traditional reinforcement learning [13,31,83] | Less memory usage; continuous learning of the decision maker; robustness against unprecedented situations | Lack of explainability in the decision-making process; prone to non-convergence during training |
| Model-free RL | Deep reinforcement learning [30,84,85,86,87,88,89,90,91] | Handles complex, high-dimensional energy management systems; near-globally-optimal controller | Higher demand for data; more difficult to design and train |
| Model-based RL | Policy-based method [92,93,94,95,96,97,98,99,100] | Effectively uses trained models to achieve optimal control; decisions are interpretable | Performance depends on the trained model and its prediction accuracy; accurate models are difficult to build |
| Model-based RL | Model predictive control [101,102,103] | Inherent ability to handle constraints on inputs, outputs, and states; real-time optimization | Depends on prediction accuracy; seldom achieves the globally optimal solution |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Huang, Z.; Xiao, X.; Gao, Y.; Xia, Y.; Dragičević, T.; Wheeler, P. Emerging Information Technologies for the Energy Management of Onboard Microgrids in Transportation Applications. Energies 2023, 16, 6269. https://doi.org/10.3390/en16176269
