Energy-Efficient and Timeliness-Aware Continual Learning Management System

Abstract

1. Introduction

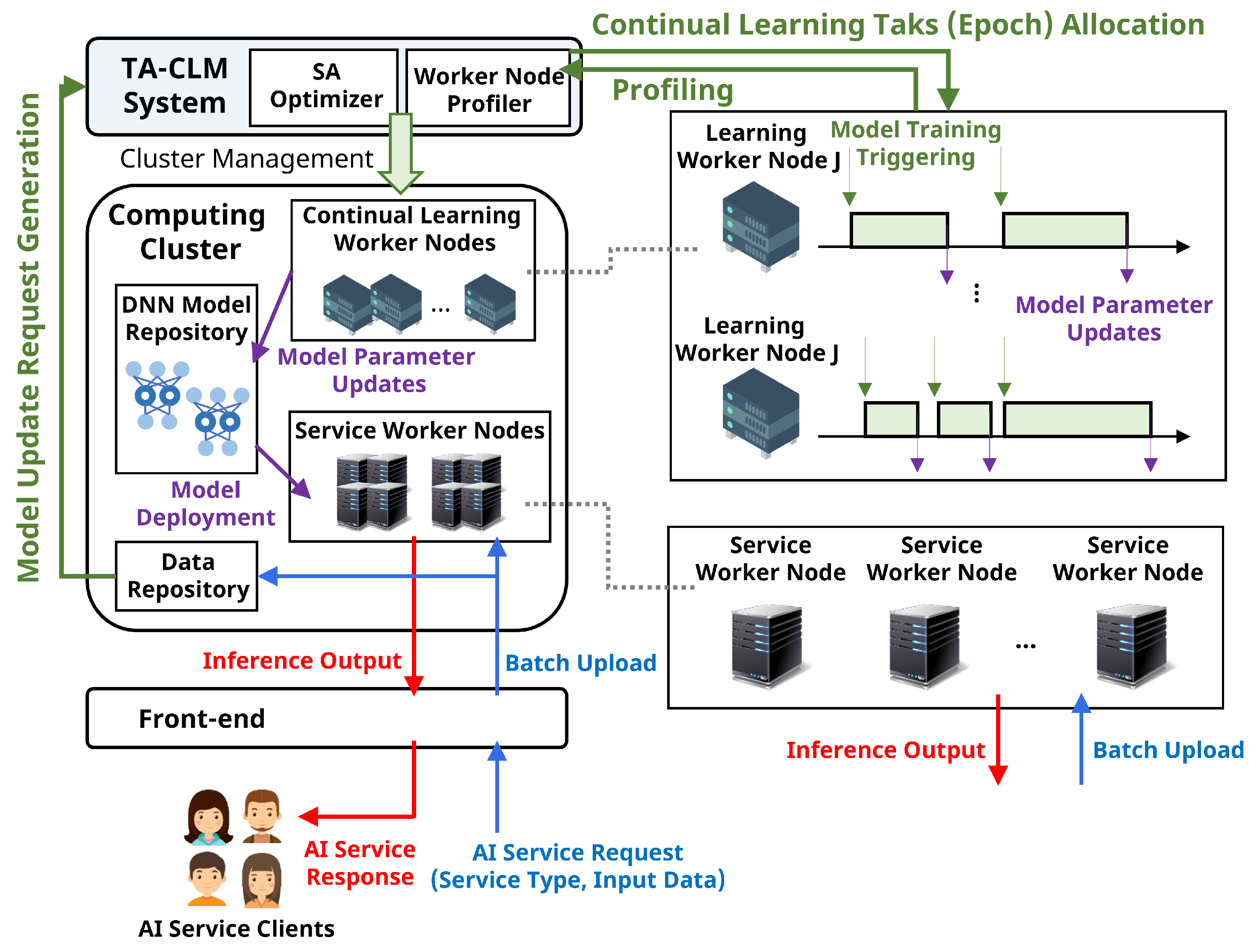

2. Timeliness-Aware Continual Learning Management System

3. Proposed System Model

3.1. Model Parameter Update Latency

3.2. Energy Consumption of Continual Learning Worker Nodes

3.3. Task Allocation Constraints

3.4. Optimization Problem

3.5. Simulated Annealing Procedures

Algorithm 1: Simulated Annealing-Based Model Update Task Allocation
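The body of Algorithm 1 is not reproduced in this text, so the following is only a generic simulated annealing loop applied to a toy allocation problem, not the paper's procedure. The function names, the geometric cooling schedule, the latency bound, and the penalty weight are all hypothetical; the time and power figures reuse the VGG16 measurements from the article's per-node measurement table.

```python
import math
import random

# VGG16 update time (s) and power (W) per node, taken from the article's table.
TIME_S = [74.5, 39.5, 36.5, 20.09]
POWER_W = [43, 143, 131, 338]
BOUND_S = 80.0   # hypothetical latency bound per request
PENALTY = 1e4    # hypothetical cost added per bound violation


def toy_cost(assignment):
    """Energy (J) of all updates plus a penalty for each late request.

    Assumption: requests placed on the same node run back to back, so a
    request finishes at the accumulated busy time of its node.
    """
    busy = [0.0] * len(TIME_S)
    energy = 0.0
    violations = 0
    for node in assignment:
        busy[node] += TIME_S[node]
        energy += POWER_W[node] * TIME_S[node]
        if busy[node] > BOUND_S:
            violations += 1
    return energy + PENALTY * violations


def simulated_annealing(cost, n_tasks, n_nodes, t_start=5000.0, t_end=1.0,
                        alpha=0.9, steps_per_temp=20, seed=0):
    """Generic simulated annealing over task-to-node assignments."""
    rng = random.Random(seed)
    current = [rng.randrange(n_nodes) for _ in range(n_tasks)]
    current_cost = cost(current)
    best, best_cost = list(current), current_cost
    temp = t_start
    while temp > t_end:
        for _ in range(steps_per_temp):
            # Neighbor move: reassign one randomly chosen task.
            candidate = list(current)
            candidate[rng.randrange(n_tasks)] = rng.randrange(n_nodes)
            cand_cost = cost(candidate)
            delta = cand_cost - current_cost
            # Always accept improvements; accept worse moves with
            # probability exp(-delta / temp) (Metropolis criterion).
            if delta <= 0 or rng.random() < math.exp(-delta / temp):
                current, current_cost = candidate, cand_cost
                if current_cost < best_cost:
                    best, best_cost = list(current), current_cost
        temp *= alpha  # geometric cooling
    return best, best_cost


if __name__ == "__main__":
    allocation, total = simulated_annealing(toy_cost, n_tasks=4, n_nodes=4)
    print("allocation:", allocation, "cost:", round(total, 2))
```

The penalty term stands in for a latency-violation cost; a real deployment would replace `toy_cost` with whatever objective and constraints the system actually uses.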

4. Experiments

4.1. Experimental Setup

4.1.1. Cluster and Task Setup

4.1.2. Compared Approaches

4.1.3. Evaluation Metric

4.2. Experimental Result

5. Conclusions

Funding

Data Availability Statement

Conflicts of Interest


| Notation | Description |
|---|---|
| | Decision variable for task allocation of i-th request onto j-th worker node at time slot g. |
| | Auxiliary decision variable. |
| , | Model coefficients to predict . |
| | Data sample size of i-th request. |
| | Arrival time of i-th request. |
| | DNN model update time for i-th request on j-th worker node. |
| | Power consumption for training DNN model on j-th worker node. |
| | DNN model update latency of i-th request on j-th worker node at triggering time slot g. |
| , , | (Indicator, linear, exponential) cost metrics for violation of model update latency bound. |
| | Model update latency bound for i-th request. |
| | Energy consumption for i-th request on j-th worker node. |
| | Energy consumption cost for . |
| | Violation cost of h-th constraint set. |
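The notation table names three cost metrics for violating the model update latency bound (indicator, linear, exponential) but their formulas are not given in this text. The sketch below shows one common way such metrics are defined; the function names and the exact functional forms are assumptions, not the paper's definitions.

```python
import math


def indicator_cost(latency, bound):
    """Assumed form: 1 if the latency bound is violated, otherwise 0."""
    return 1.0 if latency > bound else 0.0


def linear_cost(latency, bound):
    """Assumed form: cost grows in proportion to the excess latency."""
    return max(0.0, latency - bound)


def exponential_cost(latency, bound):
    """Assumed form: cost grows exponentially with the excess latency,
    shifted so that it is 0 when the bound is met."""
    return math.exp(max(0.0, latency - bound)) - 1.0


if __name__ == "__main__":
    for latency in (8.0, 10.0, 12.0):
        print(latency,
              indicator_cost(latency, 10.0),
              linear_cost(latency, 10.0),
              round(exponential_cost(latency, 10.0), 3))
```

All three are zero while the bound holds; they differ only in how sharply they punish a late update, which is what lets an operator choose between counting violations and weighting them by severity.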

| | Node1 (Laptop) | Node2 | Node3 | Node4 |
|---|---|---|---|---|
| CPU | i7-9750H | i7-4790 | i5-11400F | i9-10900K |
| Board | MS-16W1 | B85M Pro4 | B560M-A | Z490 Ext4 |
| MEM | DDR4 16 GB | DDR3 32 GB | DDR4 32 GB | DDR4 64 GB |
| GPU | RTX2060m (6 GB) | RTX2060 (6 GB) | RTX3060 (12 GB) | RTX3090 (24 GB) |
| Disk | KINGSTON 512 GB | SSD850 256 GB | WD SN350 1 TB | SSD970 1 TB |

| Model | Metric | Node1 (Laptop) | Node2 | Node3 | Node4 |
|---|---|---|---|---|---|
| VGG16 | Power (W) | 43 | 143 | 131 | 338 |
| | Time (s) | 74.5 | 39.5 | 36.5 | 20.09 |
| MobileNet | Power (W) | 41 | 140 | 133 | 343 |
| | Time (s) | 62.1 | 31.1 | 21.6 | 16.06 |
| ShuffleNet | Power (W) | 39 | 125 | 132 | 301 |
| | Time (s) | 139.6 | 86.2 | 50.8 | 43.5 |
| Attention56 | Power (W) | 47 | 156 | 146 | 262 |
| | Time (s) | 232.7 | 129.5 | 100.3 | 83.9 |
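As a worked example of how the power and time figures above combine, the sketch below multiplies them to estimate the energy of one model update on each node. It assumes the listed power is the average draw over the listed time, so that energy (J) = power (W) × time (s); the table itself does not state this, and the helper names are illustrative.

```python
# Values copied from the power/time table above.
NODES = ["Node1", "Node2", "Node3", "Node4"]
POWER_W = {
    "VGG16": [43, 143, 131, 338],
    "MobileNet": [41, 140, 133, 343],
    "ShuffleNet": [39, 125, 132, 301],
    "Attention56": [47, 156, 146, 262],
}
TIME_S = {
    "VGG16": [74.5, 39.5, 36.5, 20.09],
    "MobileNet": [62.1, 31.1, 21.6, 16.06],
    "ShuffleNet": [139.6, 86.2, 50.8, 43.5],
    "Attention56": [232.7, 129.5, 100.3, 83.9],
}


def energy_joules(model):
    """Estimated energy per node, assuming energy = average power x time."""
    return {
        node: power * time
        for node, power, time in zip(NODES, POWER_W[model], TIME_S[model])
    }


def fastest_node(model):
    """Node with the shortest listed time for the given model."""
    times = TIME_S[model]
    return NODES[times.index(min(times))]


def most_efficient_node(model):
    """Node with the lowest estimated energy for the given model."""
    energy = energy_joules(model)
    return min(energy, key=energy.get)


if __name__ == "__main__":
    for model in POWER_W:
        print(model,
              "fastest:", fastest_node(model),
              "lowest energy:", most_efficient_node(model))
```

Under this assumption, Node1 has the lowest estimated energy for all four models while Node4 has the shortest time for all four, which illustrates the tension between energy efficiency and timeliness named in the title.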


© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kang, D.-K. Energy-Efficient and Timeliness-Aware Continual Learning Management System. Energies 2023, 16, 8018. https://doi.org/10.3390/en16248018
