A Novel Voltage-Abnormal Cell Detection Method for Lithium-Ion Battery Mass Production Based on Data-Driven Model with Multi-Source Time Series Data

Abstract

1. Introduction

- We propose a data-driven method that uses a cell's multi-source time series data to detect voltage-abnormal cells quickly and accurately, without a long waiting period before detection.

- The abnormal-cell detection model accounts for the order of the different data sources and adopts a recurrent data embedding that exploits this order information to improve detection performance.

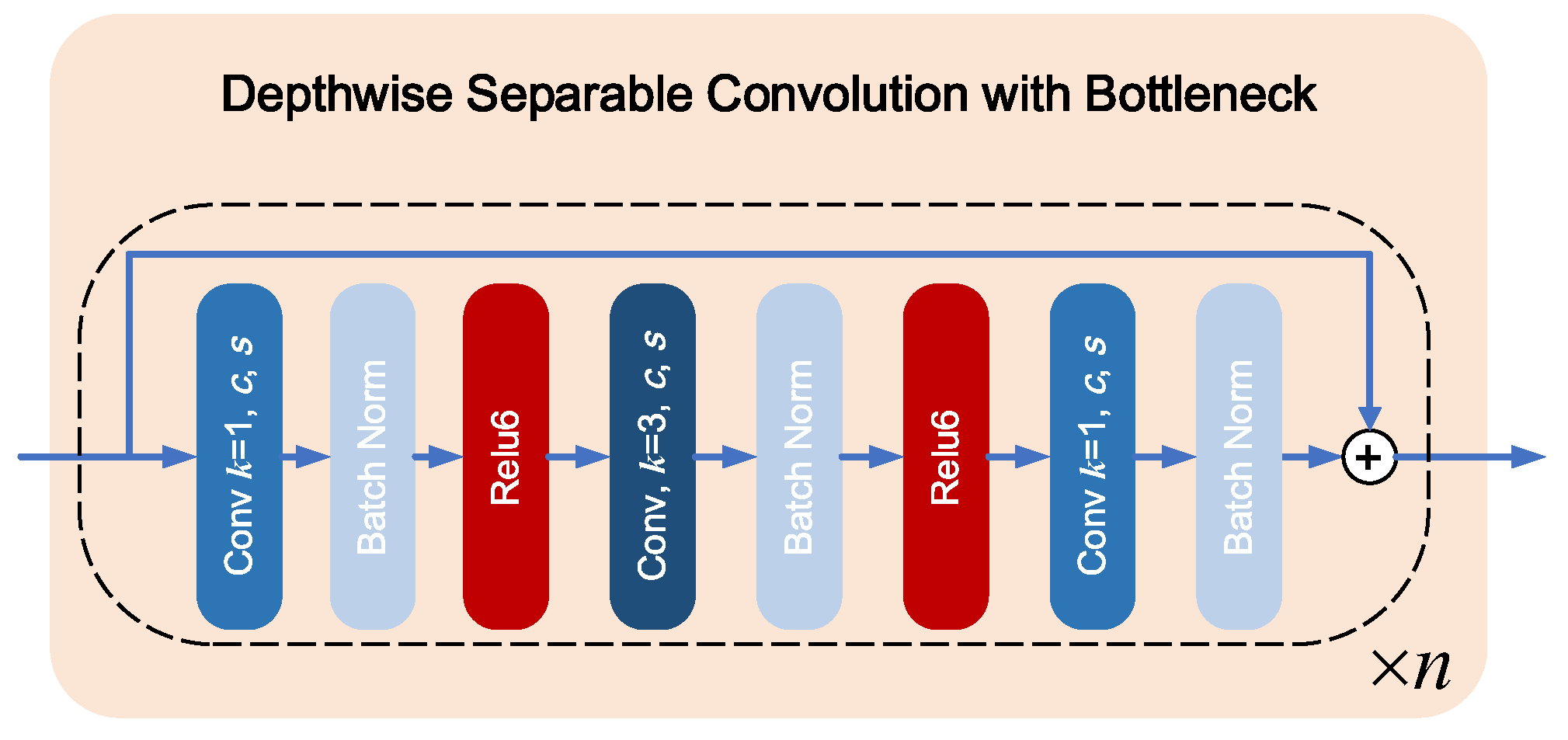

- We modify and simplify the MobileNet structure to improve computational efficiency and reduce model redundancy, adapting it to the mass production of lithium-ion batteries (LIBs).
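
One way to read the recurrent data embedding named in the second contribution: at each time step, the readings from the different sources are fed one at a time, in a fixed order, through a small recurrent cell, and the final hidden state serves as that step's embedding. The sketch below is a minimal pure-Python illustration of that reading, not the authors' implementation. The Elman-style cell, the hidden size, the random weights, and the sample readings are all assumptions.

```python
import math
import random


def make_params(hidden_size, seed=0):
    """Randomly initialised weights for a scalar-input Elman cell (illustrative only)."""
    rng = random.Random(seed)
    w_in = [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
    w_rec = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]
             for _ in range(hidden_size)]
    bias = [0.0] * hidden_size
    return w_in, w_rec, bias


def embed_time_step(values, params):
    """Fold the source readings of one time step, in order, into a hidden vector."""
    w_in, w_rec, bias = params
    hidden = [0.0] * len(bias)
    for x in values:  # one recurrent update per source, in the given order
        hidden = [
            math.tanh(
                w_in[i] * x
                + sum(w_rec[i][j] * hidden[j] for j in range(len(hidden)))
                + bias[i]
            )
            for i in range(len(hidden))
        ]
    return hidden


def embed_series(series, params):
    """series: list of time steps, each a list of per-source readings."""
    return [embed_time_step(step, params) for step in series]


params = make_params(hidden_size=4)
# Hypothetical, already-normalised readings for three sources over two time steps.
series = [[0.82, 0.10, 0.33], [0.84, 0.12, 0.31]]
embedded = embed_series(series, params)
# Same readings, fed in reversed source order.
reordered = embed_series([list(reversed(step)) for step in series], params)
```

Because each update depends on the previous hidden state, feeding the same readings in a different source order generally yields a different embedding, which is the sense in which such an embedding can carry order information.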

2. Proposed Method

2.1. Data Representation

2.2. Model Architecture

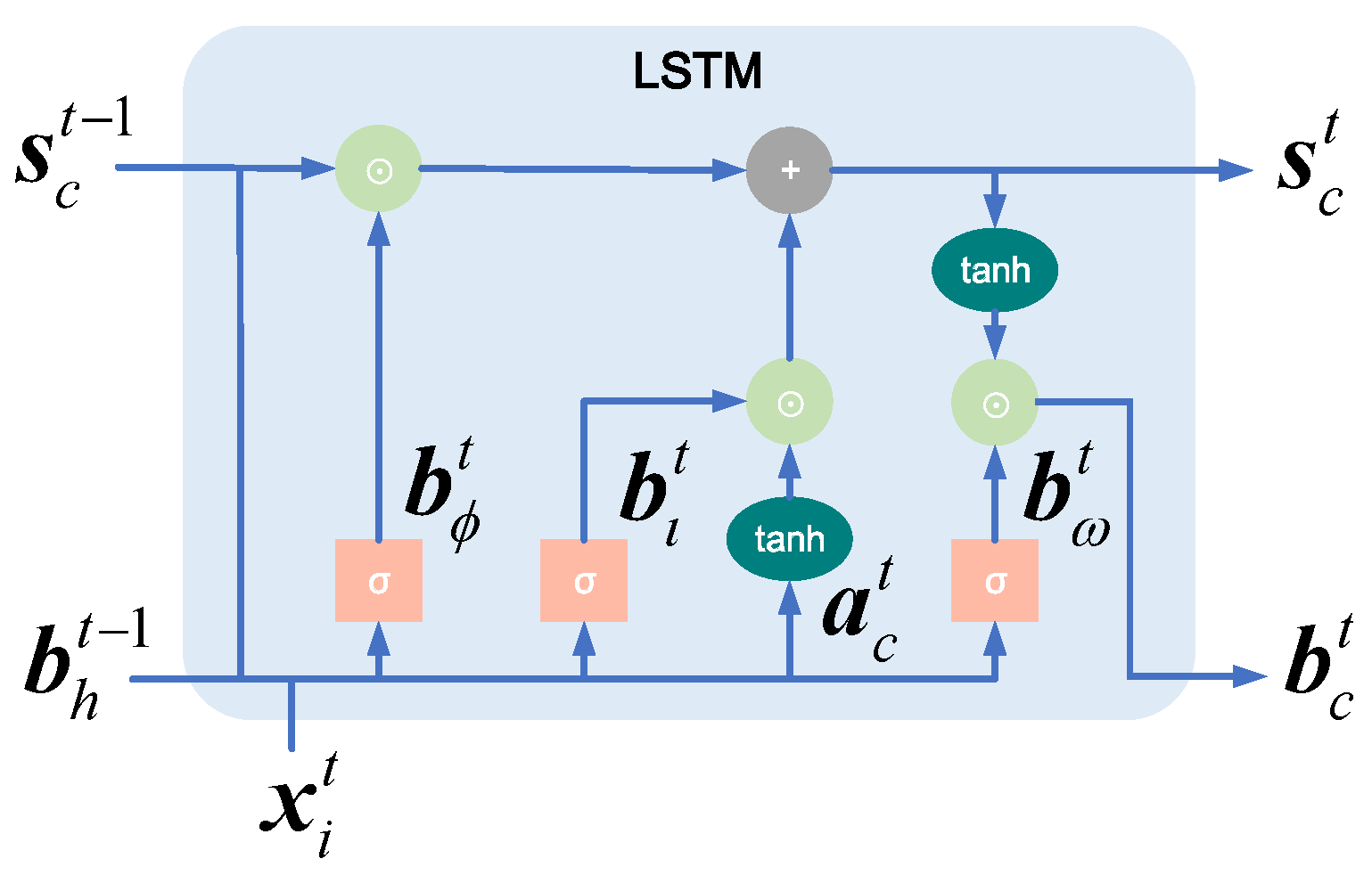

2.2.1. Recurrent Data Embedding

2.2.2. Simplified MobileNet

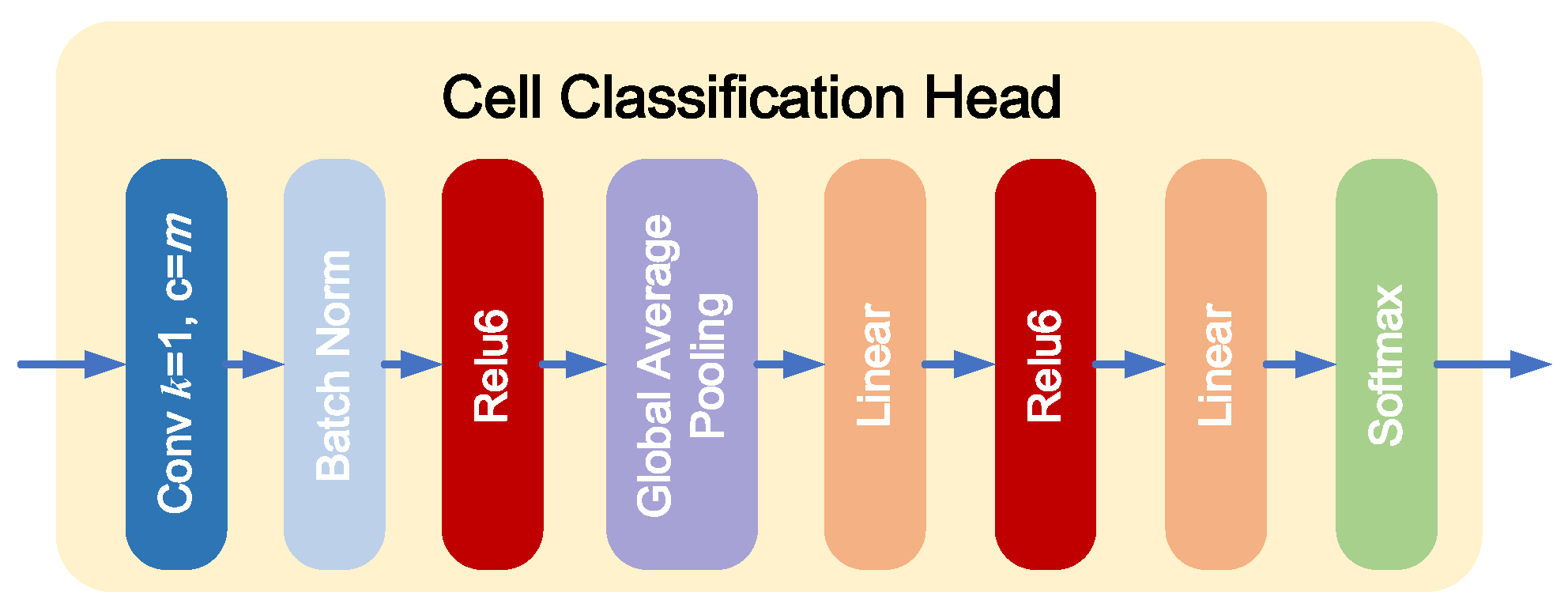

2.2.3. Cell Classification Head

2.3. Voltage-Abnormal Cell Detection

3. Experiments

3.1. Data Preparation

3.2. Experimental Details

3.3. Results and Discussion

3.3.1. Comparison of Different Models for Voltage-Abnormal Cell Detection

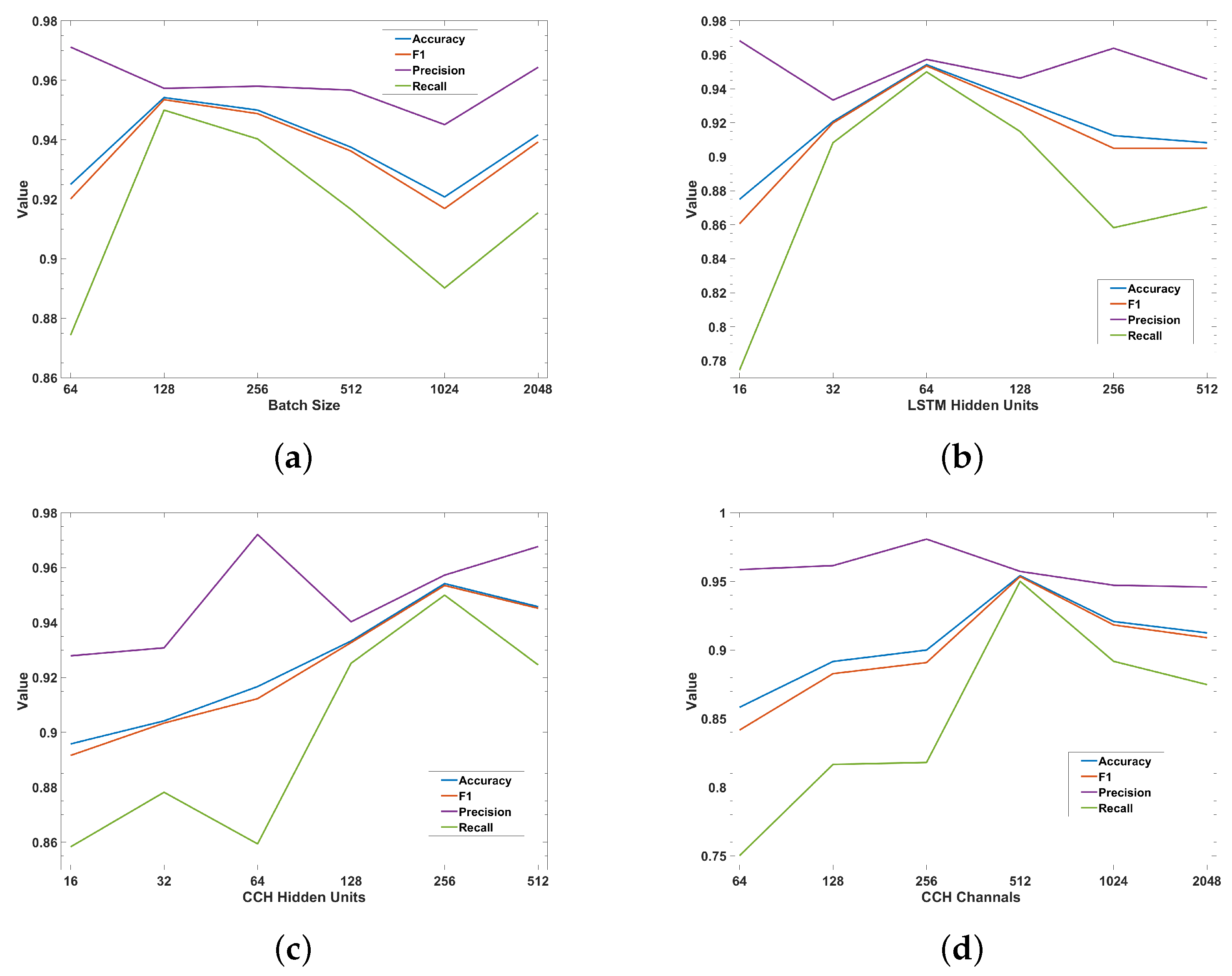

3.3.2. Influence of the Different Parameters on Voltage-Abnormal Cell Detection

3.4. Ablation

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Block Index | q | c | n | s |
|---|---|---|---|---|
| 1 | 1 | 16 | 1 | 1 |
| 2 | 6 | 64 | 2 | 2 |
| 3 | 6 | 256 | 1 | 1 |
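
The block table above can be expanded into a per-layer specification. The sketch below assumes the columns follow the MobileNetV2 convention (q as the expansion factor, c as the output channels, n as the number of repeats, s as the stride of the first repeat); the excerpt does not define them. The stem width of 16 channels, the kernel size of 3, and the use of 1D convolutions are also assumptions made for illustration.

```python
from dataclasses import dataclass

# (q, c, n, s) rows copied from the table; the column meanings are assumed.
BLOCK_CONFIG = [(1, 16, 1, 1), (6, 64, 2, 2), (6, 256, 1, 1)]


@dataclass
class LayerSpec:
    in_channels: int
    hidden_channels: int
    out_channels: int
    stride: int
    residual: bool


def expand_config(config, in_channels):
    """Unroll each table row into n inverted-residual layers."""
    layers = []
    for q, c, n, s in config:
        for repeat in range(n):
            stride = s if repeat == 0 else 1  # only the first repeat downsamples
            layers.append(LayerSpec(
                in_channels=in_channels,
                hidden_channels=in_channels * q,
                out_channels=c,
                stride=stride,
                # A skip connection is only possible when shapes match.
                residual=(stride == 1 and in_channels == c),
            ))
            in_channels = c
    return layers


def conv_weights(layer, kernel_size=3):
    """Convolution weights of one block (no bias, no batch norm), 1D kernels."""
    if layer.hidden_channels == layer.in_channels:
        expand = 0  # q = 1: the pointwise expansion is skipped
    else:
        expand = layer.in_channels * layer.hidden_channels
    depthwise = layer.hidden_channels * kernel_size
    project = layer.hidden_channels * layer.out_channels
    return expand + depthwise + project


layers = expand_config(BLOCK_CONFIG, in_channels=16)  # hypothetical stem width
total_weights = sum(conv_weights(layer) for layer in layers)
```

Under these assumptions the three table rows unroll into four layers, and most of the weights sit in the last block's projection to 256 channels.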

| Dataset | Ratio of Normal Cells | Ratio of Abnormal Cells | Number of Samples |
|---|---|---|---|
| Train | 50% | 50% | 2400 |
| Validation | 50% | 50% | 240 |
| Test | 50% | 50% | 240 |
| Total | 50% | 50% | 2880 |
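
A split with the sizes and class balance listed in the table can be produced by a stratified shuffle. The sketch below is one way to do it, not the authors' procedure, which this excerpt does not describe. The label encoding (0 for normal, 1 for voltage-abnormal) and the seed are assumptions.

```python
import random


def stratified_split(labels, sizes, seed=0):
    """Split sample indices into subsets that each hold half of each class."""
    rng = random.Random(seed)
    by_class = {0: [], 1: []}
    for index, label in enumerate(labels):
        by_class[label].append(index)
    for indices in by_class.values():
        rng.shuffle(indices)

    splits, offset = {}, 0
    for name, size in sizes.items():
        half = size // 2
        subset = (by_class[0][offset:offset + half]
                  + by_class[1][offset:offset + half])
        rng.shuffle(subset)  # mix the two classes within the subset
        splits[name] = subset
        offset += half
    return splits


# 2880 samples in total, half normal (0) and half abnormal (1), as in the table.
labels = [0] * 1440 + [1] * 1440
sizes = {"train": 2400, "validation": 240, "test": 240}
splits = stratified_split(labels, sizes)
```

Each subset draws from a disjoint slice of the shuffled per-class index lists, so no sample appears in more than one subset.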

| Evaluation Index | Formulation | Range | Best Value |
|---|---|---|---|
| Accuracy | | | |
| Precision | | | |
| Recall | | | |
| F1 | | | |
| G-mean | | | |
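
The five indices can be computed from a binary confusion matrix using their standard textbook definitions. The sketch below treats voltage-abnormal cells as the positive class. It takes G-mean as the geometric mean of precision and recall; the geometric mean of recall and specificity is another common definition, and this excerpt does not show which one the authors use. The confusion-matrix counts are hypothetical and are not taken from the paper.

```python
import math


def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics; each lies in [0, 1], higher is better."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    # Assumed definition: geometric mean of precision and recall.
    g_mean = math.sqrt(precision * recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "g_mean": g_mean,
    }


# Hypothetical counts for a balanced 240-sample test set.
metrics = binary_metrics(tp=114, fp=5, tn=115, fn=6)
```

Since F1 is the harmonic mean and this G-mean the geometric mean of the same two quantities, G-mean is never smaller than F1, and the two coincide only when precision equals recall.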

| Model | Accuracy | F1 | G-Mean | Precision | Recall | Time (ms) |
|---|---|---|---|---|---|---|
| MLP | 0.8458 | 0.8275 | 0.8342 | 0.9465 | 0.7355 | 0.0034 |
| RNN | 0.8792 | 0.8682 | 0.8711 | 0.9413 | 0.8067 | 0.0036 |
| LSTM | 0.9042 | 0.8971 | 0.8982 | 0.9424 | 0.8560 | 0.0056 |
| GRU | 0.9250 | 0.9227 | 0.9232 | 0.9557 | 0.8902 | 0.0038 |
| FCN | 0.8875 | 0.8820 | 0.8844 | 0.9287 | 0.8450 | 0.6171 |
| ResNet | 0.9125 | 0.9023 | 0.9035 | 0.9722 | 0.8418 | 1.1777 |
| Transformer | 0.9417 | 0.9403 | 0.9403 | 0.9572 | 0.9246 | 0.0207 |
| Ours | 0.9542 | 0.9535 | 0.9536 | 0.9573 | 0.9500 | 0.0509 |

| Category | Item | w/o RDE | w/o MobileNet | Ours |
|---|---|---|---|---|
| Component | Recurrent Data Embedding | × | ✓ | ✓ |
| Component | Simplified MobileNet | ✓ | × | ✓ |
| Evaluation Index | Accuracy | 0.9125 | 0.8958 | 0.9542 |
| Evaluation Index | F1 | 0.9095 | 0.8879 | 0.9535 |
| Evaluation Index | G-mean | 0.9102 | 0.8907 | 0.9536 |
| Evaluation Index | Precision | 0.9466 | 0.9617 | 0.9573 |
| Evaluation Index | Recall | 0.8751 | 0.8254 | 0.9500 |
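
The ablation numbers are easier to compare as differences from the full model. The short sketch below computes, for each metric, how much each ablated variant falls below "Ours"; the values are copied from the table and the helper name is illustrative.

```python
# Scores copied from the ablation table.
ABLATION = {
    "w/o RDE": {"accuracy": 0.9125, "f1": 0.9095, "g_mean": 0.9102,
                "precision": 0.9466, "recall": 0.8751},
    "w/o MobileNet": {"accuracy": 0.8958, "f1": 0.8879, "g_mean": 0.8907,
                      "precision": 0.9617, "recall": 0.8254},
    "Ours": {"accuracy": 0.9542, "f1": 0.9535, "g_mean": 0.9536,
             "precision": 0.9573, "recall": 0.9500},
}


def drop_vs_full(metric):
    """Full-model score minus each ablated variant's score (positive = worse)."""
    full = ABLATION["Ours"][metric]
    return {
        name: round(full - scores[metric], 4)
        for name, scores in ABLATION.items()
        if name != "Ours"
    }


drops = {metric: drop_vs_full(metric) for metric in ABLATION["Ours"]}
```

Read this way, removing either component costs mostly recall, while precision changes little and is slightly higher without the simplified MobileNet.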

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, X.; He, J.; Huang, F.; Liu, Z.; Deng, A.; Long, R. A Novel Voltage-Abnormal Cell Detection Method for Lithium-Ion Battery Mass Production Based on Data-Driven Model with Multi-Source Time Series Data. Energies 2024, 17, 3472. https://doi.org/10.3390/en17143472