A Lifecycle Approach for Artificial Intelligence Ethics in Energy Systems

Abstract

1. Introduction

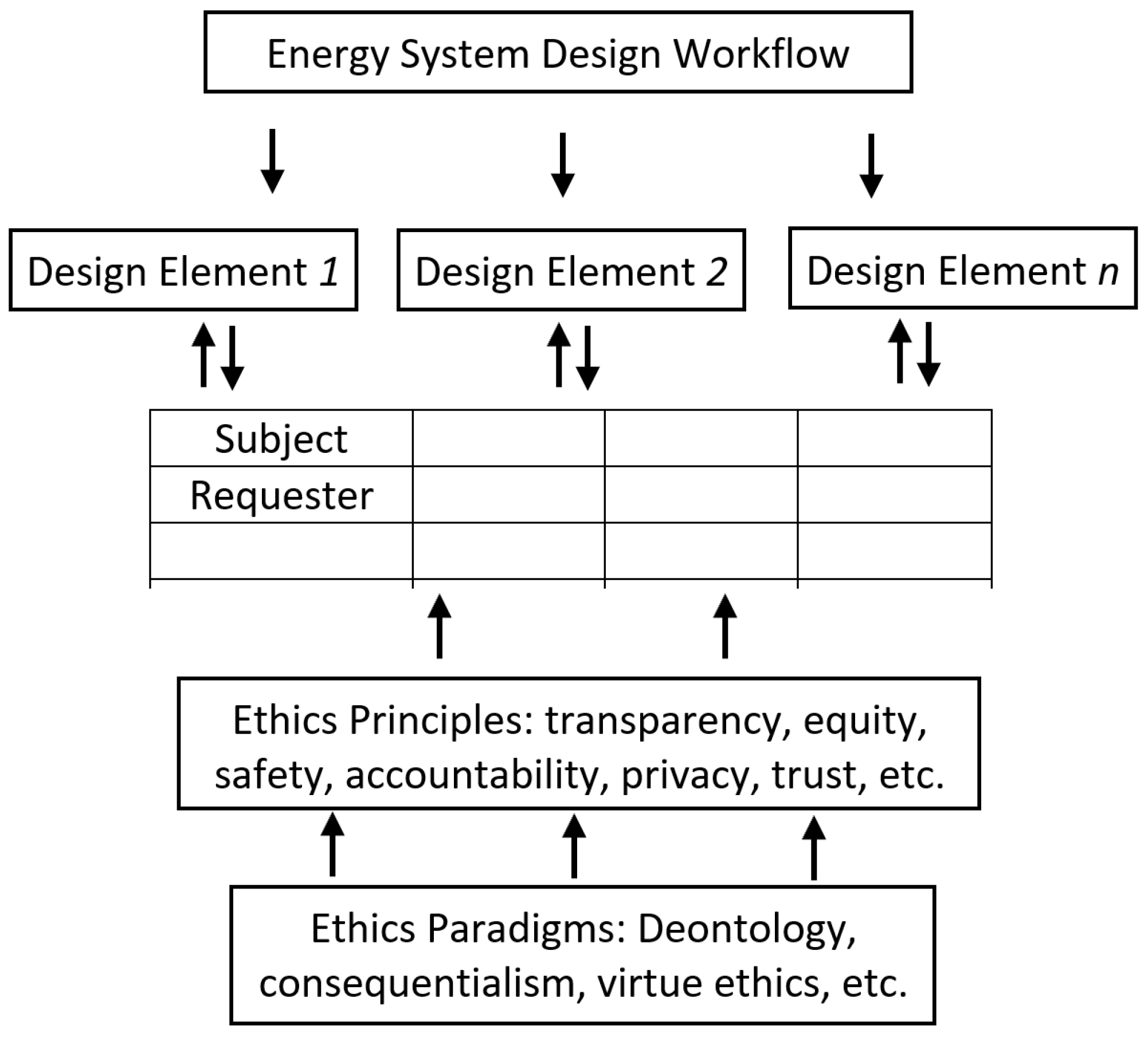

2. The Proposed AI Ethics Lifecycle Approach for Energy Systems

2.1. Design Phase

2.2. Development Phase

2.3. Operation Phase

2.4. Evaluation Phase

3. Evaluation of the Proposed Approach

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Design Element | Subject | Requester | Temporality |
|---|---|---|---|
| Data Representation | Energy System, Sub-Modules, Downstream Applications | Owners, Designers, Developers, Engineers | Design to Deployment |
| Data Processing | Energy System, Sub-Modules, Downstream Applications | Designers, Developers, Engineers, Operators | Design to Operation |
| Data Quality | Energy System, Sub-Modules, Decision Processes | Designers, Developers, Engineers, Operators, Managers, Policymakers | Design to Operation |
| System Accuracy | Energy System, Sub-Modules, Downstream Applications, Consumer Experience | Engineers, Operators, Managers, Policymakers, Users | Operation to Termination |
| System Responsiveness | Downstream Applications, Consumer Experience | Operators, Technicians, Policymakers, Owners | Operation to Termination |
| System Usability | Energy System, Downstream Applications, Consumer Experience | Operators, Technicians, Policymakers, Owners | Design to Operation |
| System Integration | Energy System, Integration Platforms | Operators, Managers, Engineers, Owners | Operation to Expansion |
| Insight Quality | Energy System, Decision Processes, Policy Implements | Operators, Managers, Engineers, Owners, Consumers | Operation to Termination |
| Decision Quality | Energy System, Decision Processes, Policy Implements | Owners, Managers, Policymakers, Advocacy, Community | Operation to Termination |
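Read column-wise, the Requester entries of this table can also be used in reverse, to see which design elements a given stakeholder group is expected to ask about. The Python sketch below transcribes the Requester column into a dictionary and inverts it. The names `REQUESTERS` and `elements_by_requester` are hypothetical, and using such an index (for example, to route ethics reviews to the right stakeholders) is an illustrative assumption rather than part of the proposed approach.

```python
from collections import defaultdict

# Requester column of the design-element table, transcribed as-is.
# The dictionary layout is an illustrative assumption.
REQUESTERS = {
    "Data Representation": ["Owners", "Designers", "Developers", "Engineers"],
    "Data Processing": ["Designers", "Developers", "Engineers", "Operators"],
    "Data Quality": ["Designers", "Developers", "Engineers", "Operators",
                     "Managers", "Policymakers"],
    "System Accuracy": ["Engineers", "Operators", "Managers", "Policymakers", "Users"],
    "System Responsiveness": ["Operators", "Technicians", "Policymakers", "Owners"],
    "System Usability": ["Operators", "Technicians", "Policymakers", "Owners"],
    "System Integration": ["Operators", "Managers", "Engineers", "Owners"],
    "Insight Quality": ["Operators", "Managers", "Engineers", "Owners", "Consumers"],
    "Decision Quality": ["Owners", "Managers", "Policymakers", "Advocacy", "Community"],
}


def elements_by_requester(table):
    """Invert an element -> requesters mapping into requester -> sorted elements."""
    index = defaultdict(list)
    for element, requesters in table.items():
        for requester in requesters:
            index[requester].append(element)
    # Sort each list so the output does not depend on dictionary order.
    return {requester: sorted(elements) for requester, elements in index.items()}


if __name__ == "__main__":
    index = elements_by_requester(REQUESTERS)
    for element in index["Policymakers"]:
        print(element)
```

For the "Policymakers" group this lists Data Quality, Decision Quality, System Accuracy, System Responsiveness, and System Usability, matching the rows in which that group appears.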

| Design Element | Ethics Practice | AI Capability |
|---|---|---|
| Data Representation | Effective, Explainable, Inclusive, Robust, Trustworthy | Prediction of required data representations, Prediction of required data volumes, Classification of missing or erroneous data |
| Data Processing | Effective, Robust, Resilient, Privacy, Safe, Secure, Trustworthy | Prediction of process workloads, Outlier detection in processes, Optimization of processing based on patterns of recurrences |
| Data Quality | Accountable, Effective, Explainable, Inclusive | Classification of quality factors and variability, Prediction of loss of quality, Optimization of quality thresholds for system outcomes |
| System Accuracy | Effective, Explainable, Resilient, Robust, Safe, Secure, Trustworthy | Prediction of drop/loss in system accuracy, Metric optimization for performance gains, Association of metrics with system operation goals |
| System Responsiveness | Reliable, Resilient, Robust, Safe, Secure, Trustworthy, Unbiased | Operational load prediction, Classification of response times based on operator profiles, Profiling operator capabilities |
| System Usability | Accountable, Effective, Explainable, Inclusive | Prediction of evolving user needs, Prediction of evolving data volumes, Profiling usability factors by user groups |
| System Integration | Reliable, Resilient, Robust, Safe, Secure | Prediction of downstream system dependencies, Classification of integration points and system checks, Association of integration logs, timeouts, and dropouts |
| Insight Quality | Effective, Explainable, Inclusive, Safe, Secure, Trustworthy | Classification of insight quality and acceptable accuracy thresholds, Prediction of insight quality based on data quality factors |
| Decision Quality | Accountable, Effective, Explainable, Inclusive, Safe, Secure, Trustworthy | Classification of decisions by downstream impact, Profiling socio-technical implications of decisions, Optimization of decisions for socio-economic gains |
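The AI capabilities in this table are stated at a high level. As one hedged illustration, the "Outlier detection in processes" capability of the Data Processing element could be approximated by flagging unusually long processing runs with a modified z-score based on the median absolute deviation (MAD). This is a minimal sketch, assuming each process emits a numeric per-run metric such as runtime in seconds; the function name `flag_process_outliers`, the 0.6745 scaling constant, and the conventional 3.5 threshold (after Iglewicz and Hoaglin) are choices made here, not specifications of the proposed approach.

```python
from statistics import median


def flag_process_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds `threshold`.

    Modified z-score: 0.6745 * |x - median| / MAD, where MAD is the median
    absolute deviation from the median. Returns [] for empty input or when
    MAD is zero.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # Most values sit exactly at the median, so the score is undefined.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - med) / mad > threshold
    ]


if __name__ == "__main__":
    # Hypothetical per-run processing times in seconds.
    runtimes = [12.1, 11.8, 12.4, 12.0, 11.9, 12.2, 30.5, 12.3, 11.7, 12.0]
    print(flag_process_outliers(runtimes))  # [6]
```

A median-based score is used instead of a plain mean and standard-deviation z-score because a single extreme run inflates the standard deviation and can mask itself in small samples; with the ten runtimes above, a classic z-score cannot exceed 3 for any point.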

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


El-Haber, N.; Burnett, D.; Halford, A.; Stamp, K.; De Silva, D.; Manic, M.; Jennings, A. A Lifecycle Approach for Artificial Intelligence Ethics in Energy Systems. Energies 2024, 17, 3572. https://doi.org/10.3390/en17143572
