Research into the Fast Calculation Method of Single-Phase Transformer Magnetic Field Based on CNN-LSTM

Abstract

1. Introduction

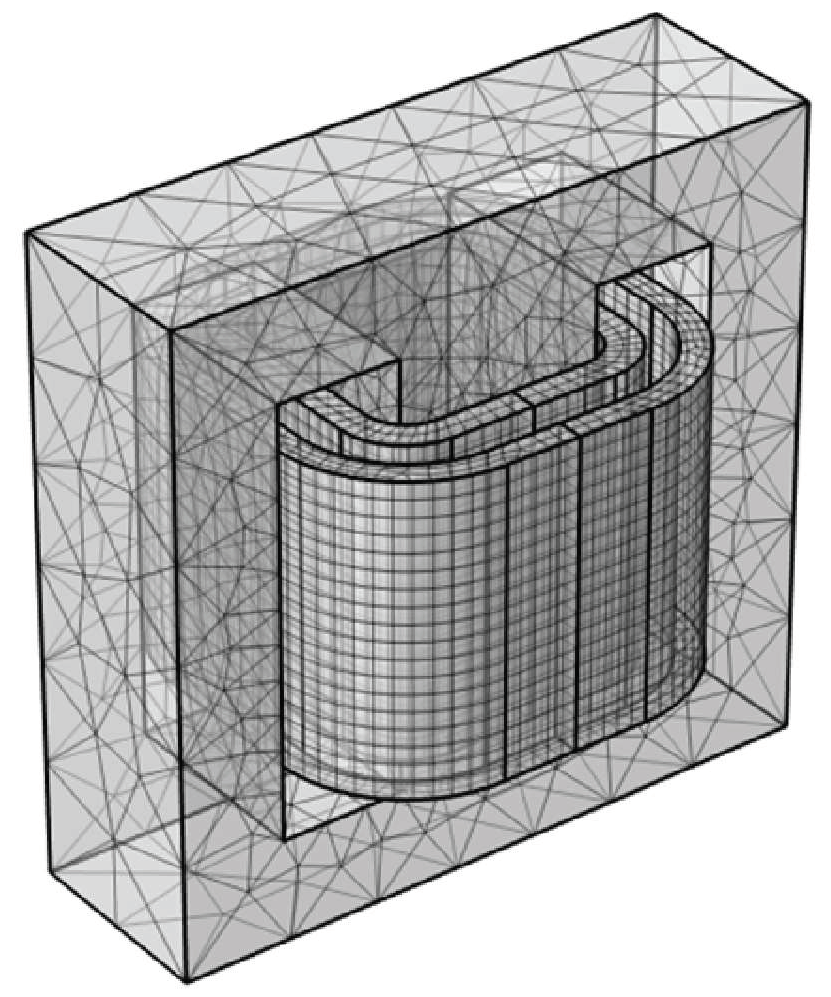

2. Construction and Verification of Finite Element Model for Single-Phase Transformer

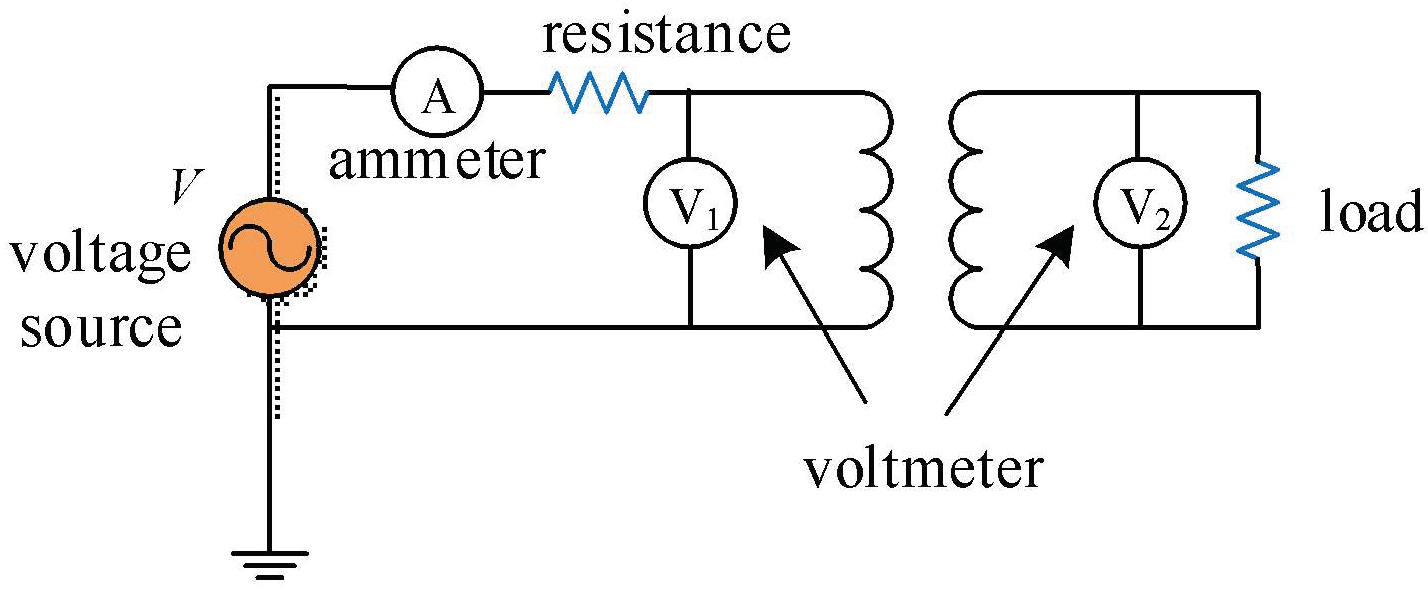

2.1. Single-Phase Transformer Test Platform and J-A Model Parameter Extraction

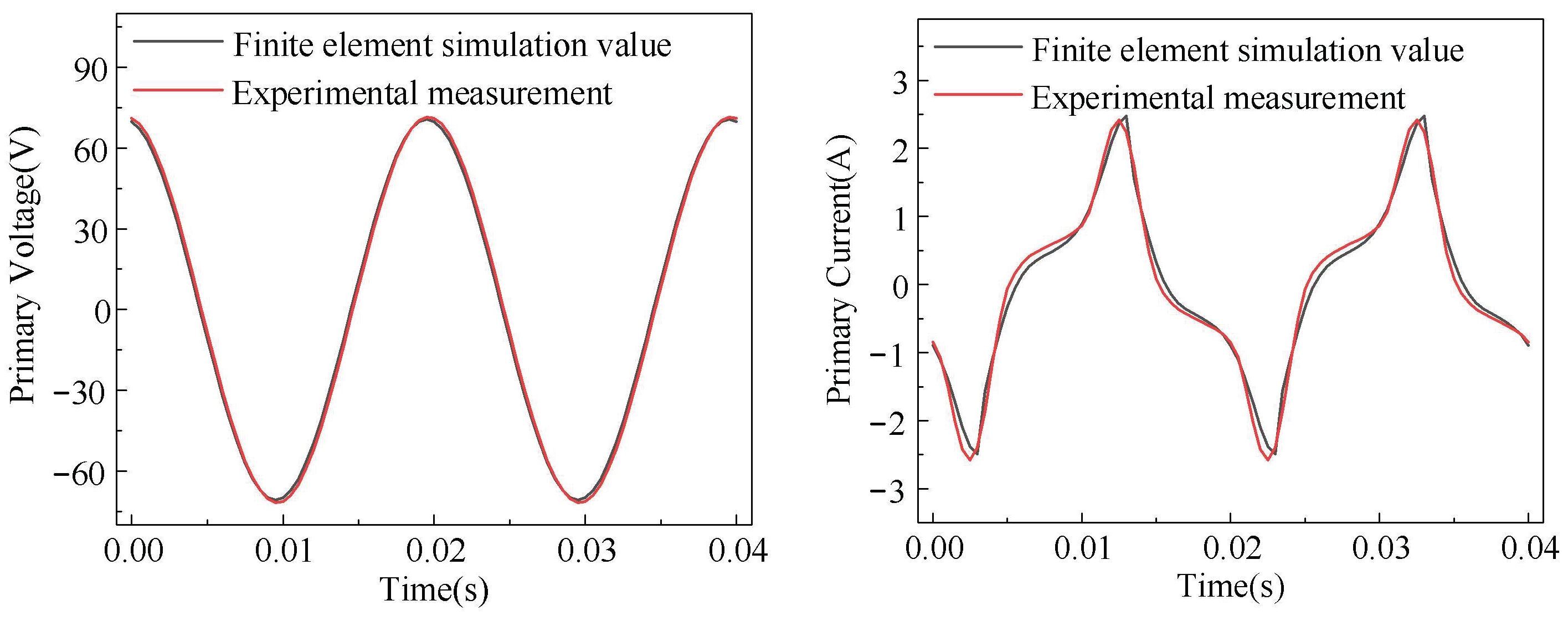

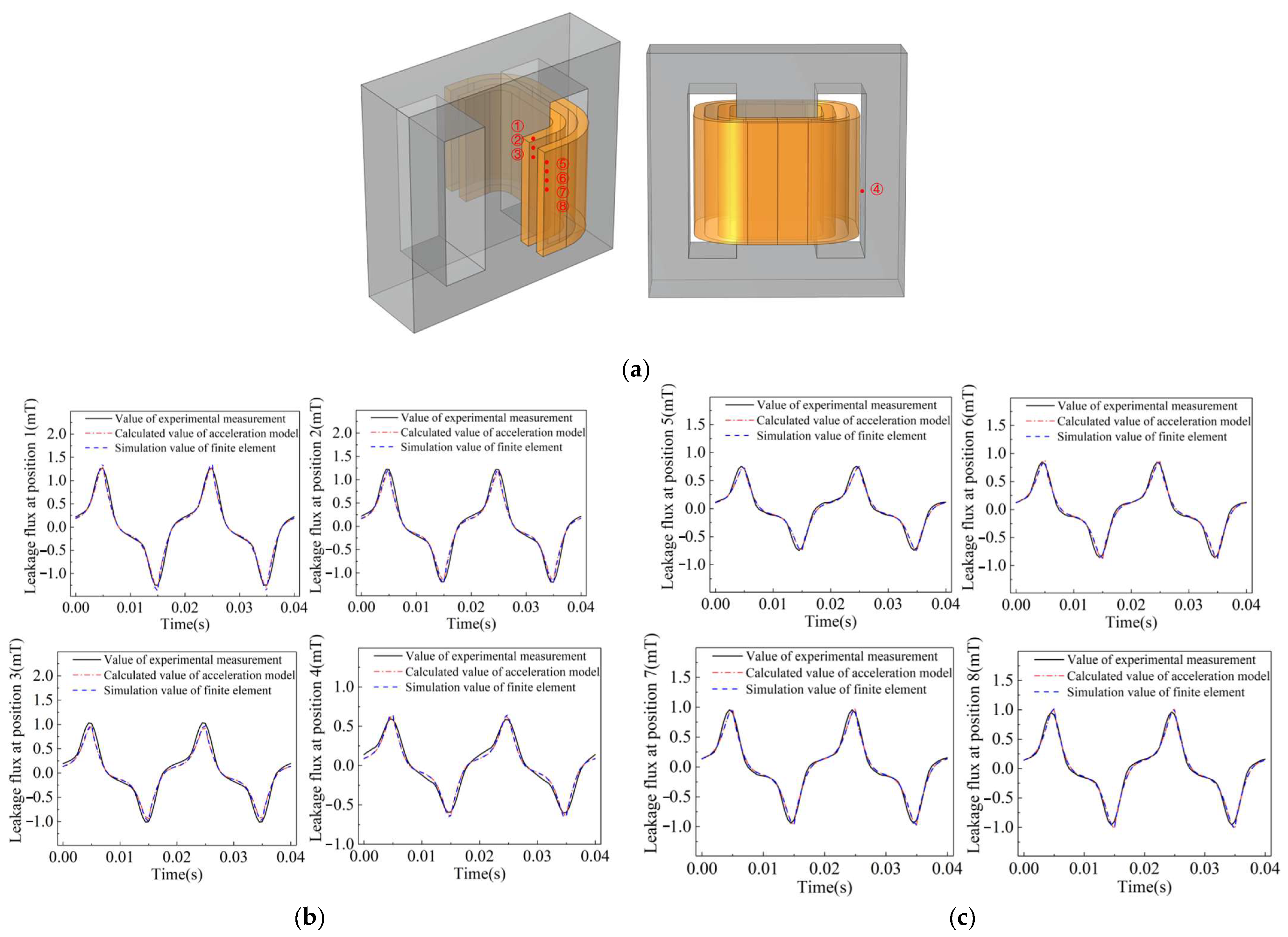

2.2. Construction and Verification of Finite Element Model for Single-Phase Transformer

- The influence of structural components, such as the iron core pull plates and the upper and lower clamps, is neglected;

- The gaps between the winding spacers, pressure plates, support bars, and winding discs are neglected;

- The laminated structure of the iron core is ignored and the core is modeled as a single solid body, which reduces computational complexity without affecting calculation accuracy.

3. Sample Data Construction for the Fast Calculation Model of Magnetic Fields

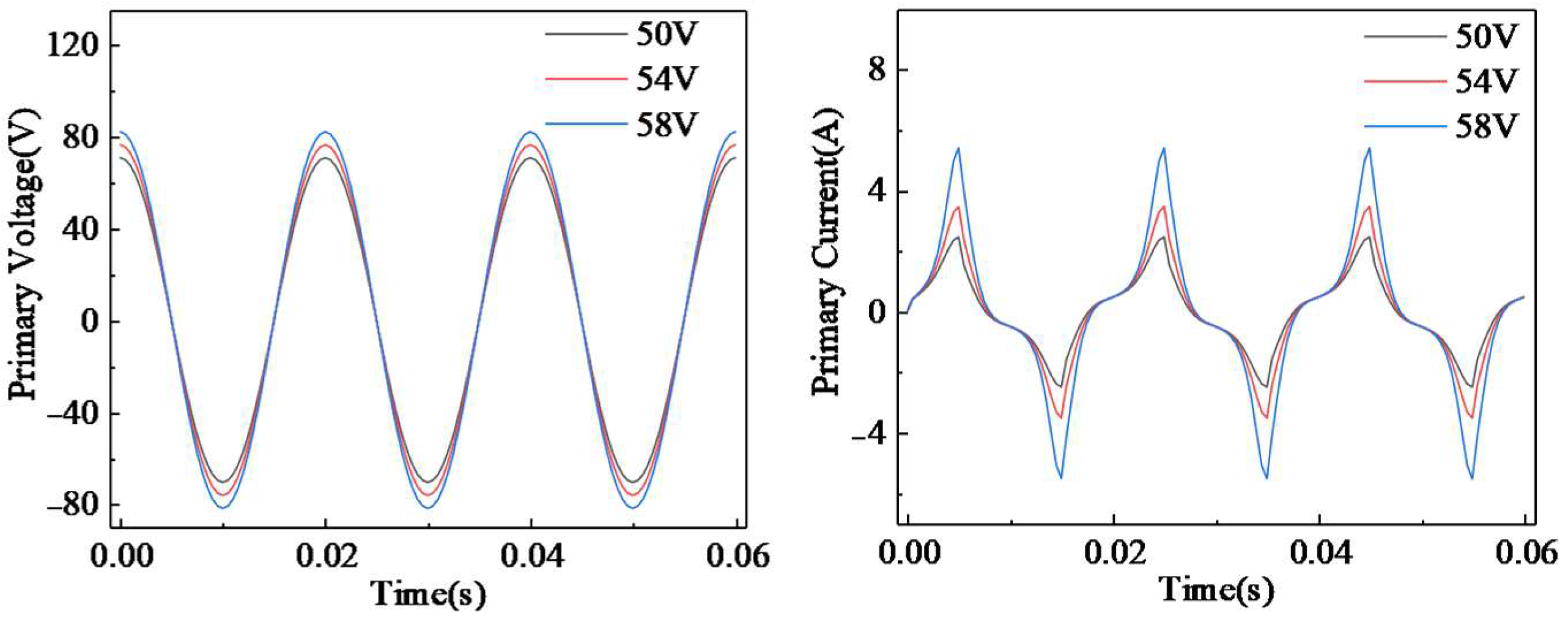

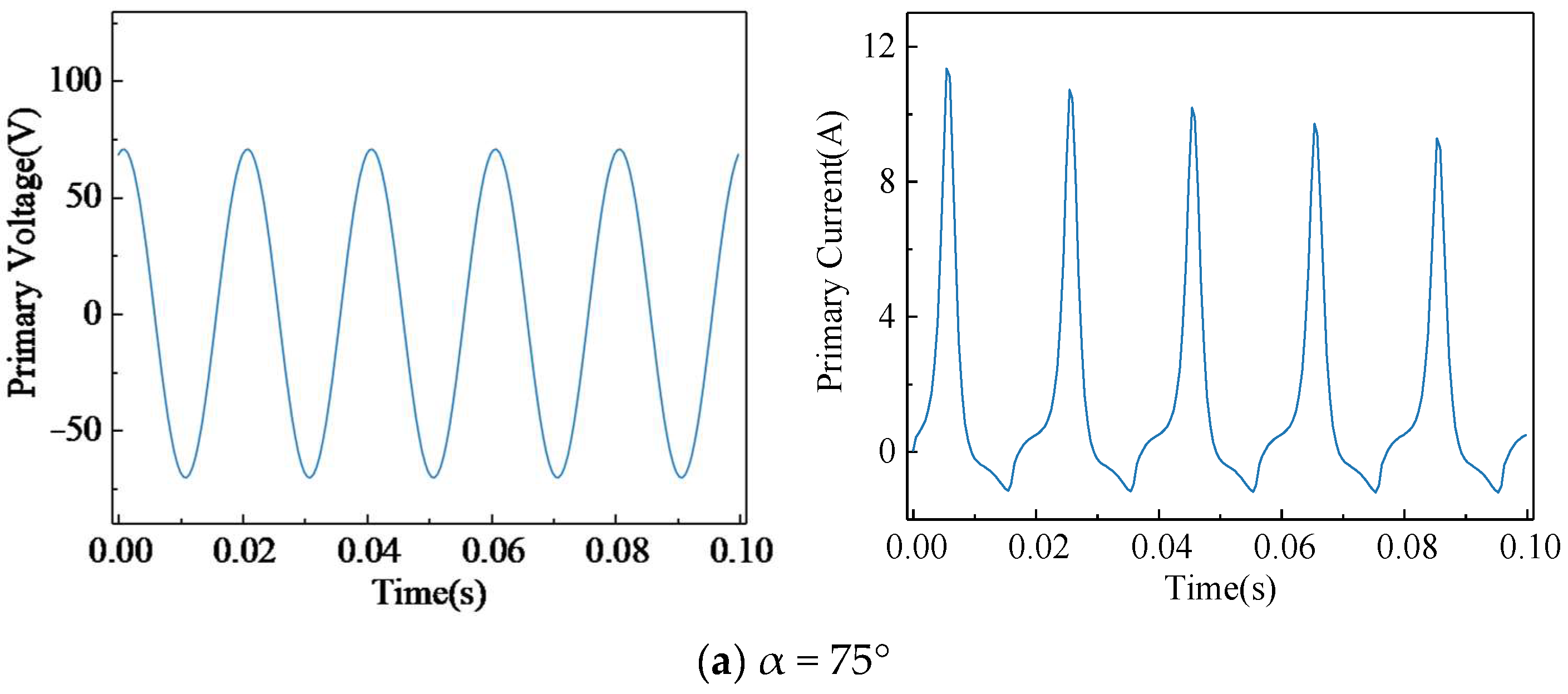

3.1. Multi-Condition Simulation of Single-Phase Transformers

3.2. Sample Data Construction

3.3. Data Preprocessing

4. Training of CNN-LSTM Magnetic Field Fast Calculation Model

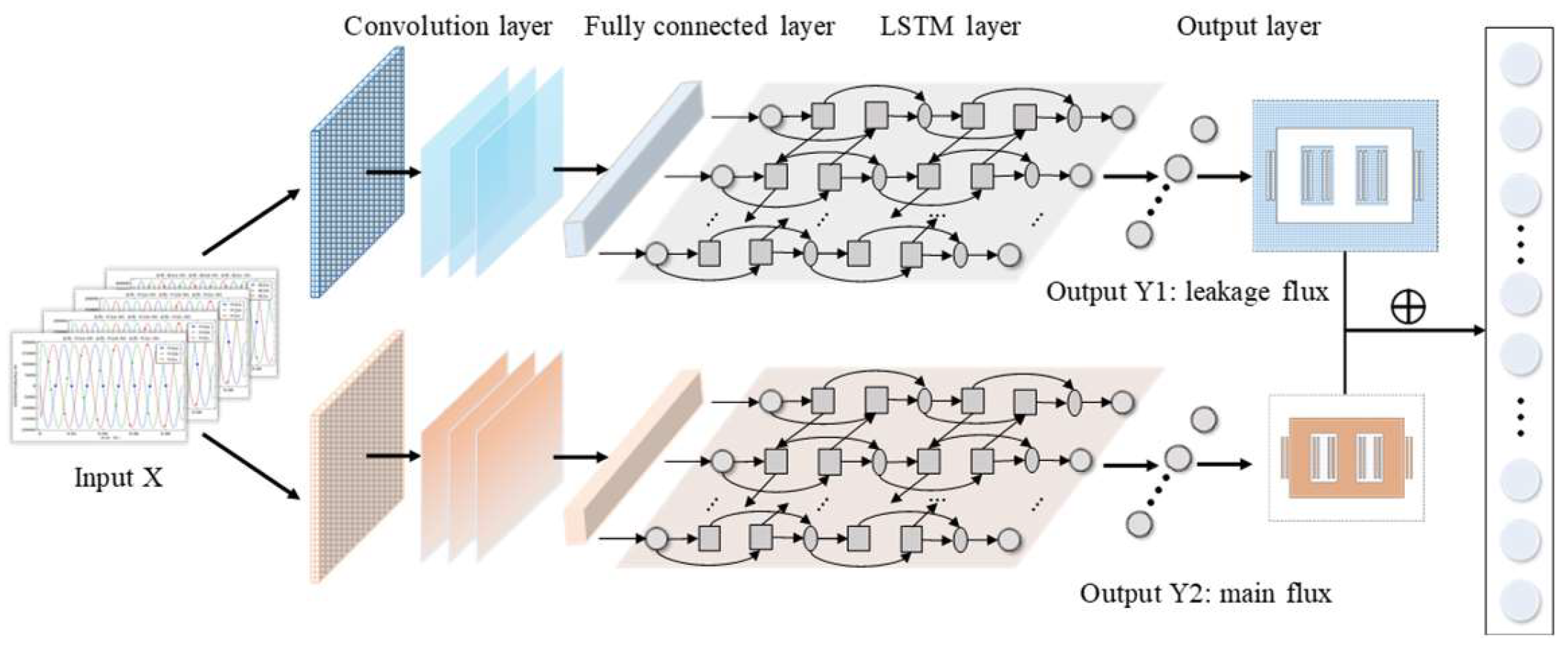

4.1. Construction of CNN-LSTM Network Model

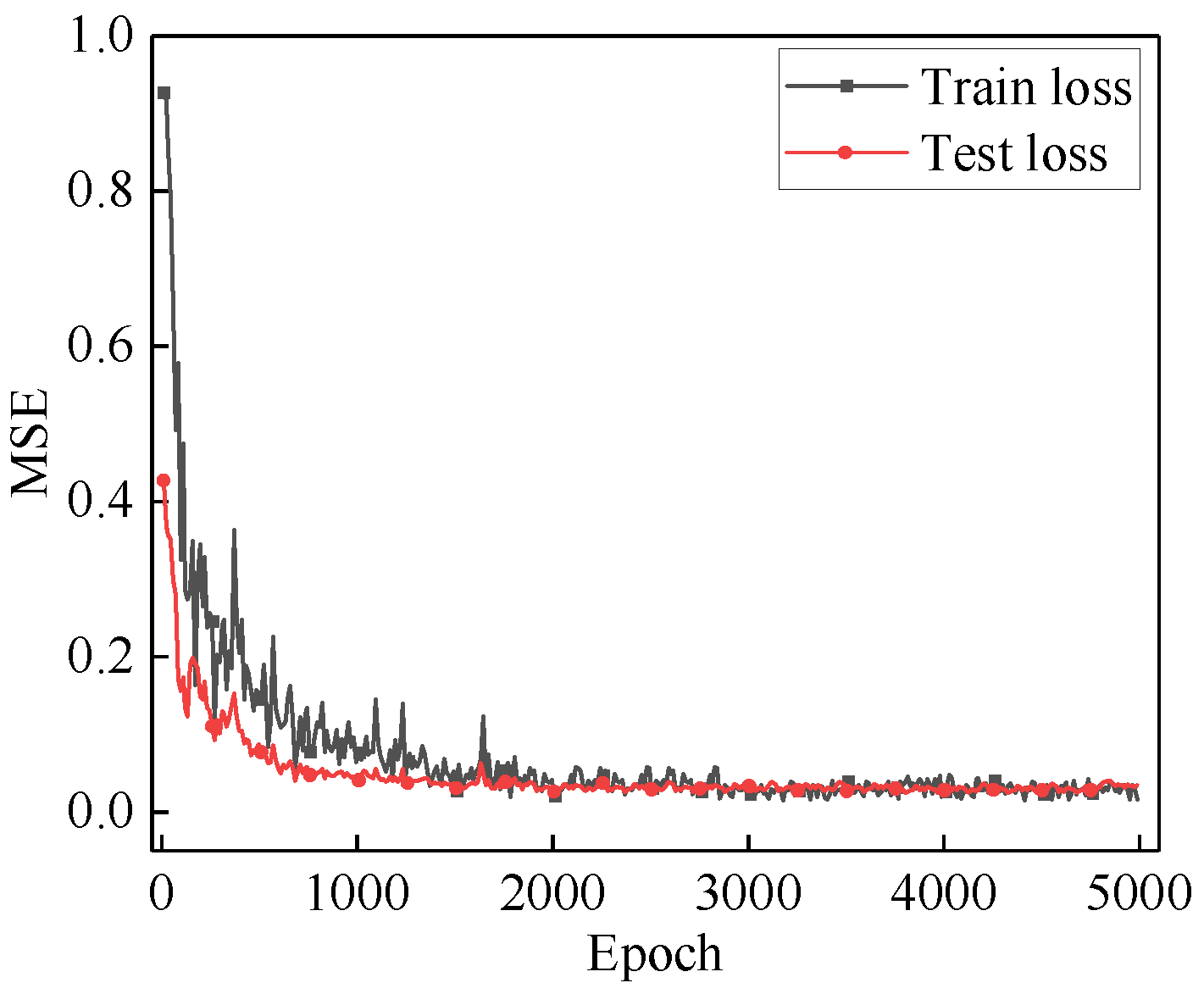

4.2. Training of CNN-LSTM Network Model

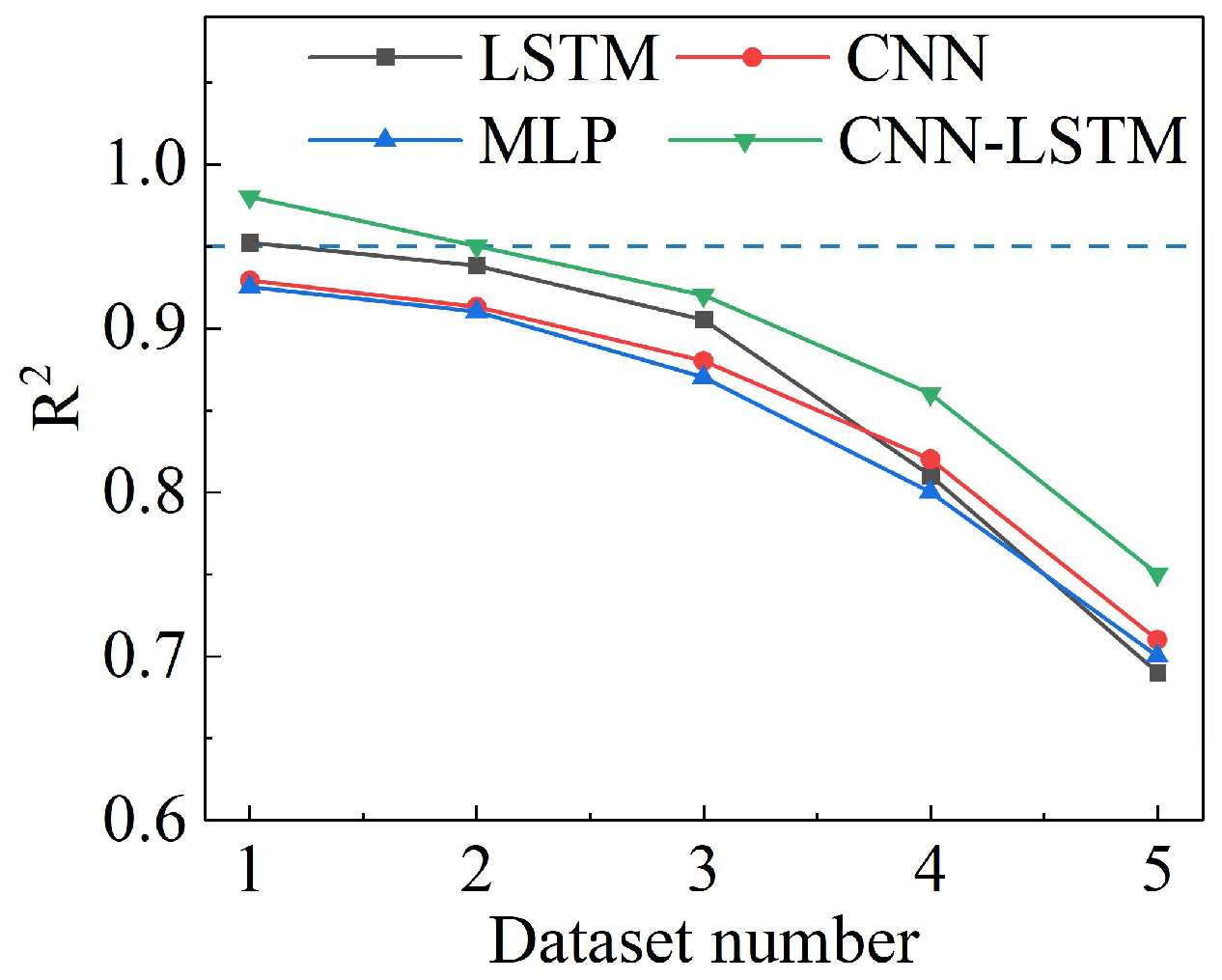

4.3. LSTM/CNN/MLP Network Comparison

5. Comparison and Verification of Fast Calculation Models for Magnetic Fields

5.1. Methodology

5.2. Comparison Validation

6. Conclusions

- A dual-branch fast calculation model for the spatial dynamic magnetic field is designed based on the CNN-LSTM. It divides the spatial magnetic field solving task into two subproblems, which avoids the fitting bias caused by the difference between the main and leakage magnetic flux and gives the model a degree of interpretability and accuracy.

- The model is trained on multi-condition finite element simulations that take into account the nonlinear characteristics of the iron core. It focuses on learning how the nonlinear behavior of the iron core affects the magnetic field distribution, in order to achieve faster and more accurate calculation of the magnetic field distribution within a digital twin framework.

- The trained model produces its output for a single time step in about 0.04 s, far less than the time required by finite element simulation. The calculated values agree well with the experimental measurements at different locations and time periods.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Parameters | Value |
|---|---|
| Saturation magnetization Ms (A/m) | 1.2166 × 10⁶ |
| Pinning loss coefficient k (A/m) | 68.626 |
| Reversibility of magnetization c | 0.0307 |
| Interdomain coupling α | 3.027 × 10⁻⁴ |
| Domain wall density a (A/m) | 134.7 |
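To illustrate how the J-A parameters in the table above enter a calculation, the following is a minimal sketch of the anhysteretic magnetization curve in the classical Jiles-Atherton formulation, M_an = Ms · L((H + αM_an)/a), where L is the Langevin function. It assumes that classical form; the exact J-A variant used for the finite element model is not stated here. The function names are hypothetical, and the parameters k and c, which only appear in the hysteresis differential equation, are not used.

```python
import math

# Parameters copied from the J-A parameter table.
MS = 1.2166e6     # saturation magnetization Ms, A/m
A_SHAPE = 134.7   # parameter a, A/m
ALPHA = 3.027e-4  # interdomain coupling (dimensionless)


def langevin(x: float) -> float:
    """Langevin function L(x) = coth(x) - 1/x, with a series fallback near zero."""
    if abs(x) < 1e-4:
        return x / 3.0
    return 1.0 / math.tanh(x) - 1.0 / x


def anhysteretic_magnetization(h: float, iterations: int = 80) -> float:
    """Solve M = Ms * L((H + alpha*M) / a) for M by bisection.

    Assumes the classical J-A anhysteretic equation (an assumption of this
    sketch). For H > 0 the residual M - Ms*L(...) is negative at M = 0 and
    positive at M = Ms, so the root is bracketed by [0, Ms]. Negative fields
    are handled through the odd symmetry of the curve.
    """
    if h == 0.0:
        return 0.0
    sign = 1.0 if h > 0 else -1.0
    h_abs = abs(h)
    lo, hi = 0.0, MS
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        residual = mid - MS * langevin((h_abs + ALPHA * mid) / A_SHAPE)
        if residual > 0.0:
            hi = mid
        else:
            lo = mid
    return sign * 0.5 * (lo + hi)


if __name__ == "__main__":
    for field in (10.0, 100.0, 1000.0):
        print(field, anhysteretic_magnetization(field))
```

Bisection is used instead of plain fixed-point iteration because, with these parameter values, the fixed-point map contracts only slowly at low field strength.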

| Parameters | Value | Parameters | Value |
|---|---|---|---|
| Nominal capacity/VA | 50 | Turns ratio | 55:55 |
| Nominal voltage/V | 50 | Iron core material | DQ151-φ0.35 |
| Width of center leg/mm | 60 | Window height/mm | 124 |
| Iron core thickness/mm | 60 | Window width/mm | 36 |
| Width of side leg/mm | 30 | Winding height/mm | 90 |

| Working Condition | Load Situation | Parameterized Scanning | Number of Operating Conditions (Groups) |
|---|---|---|---|
| Power frequency overvoltage | Secondary side no-load | UN: 1.0–1.3 p.u., scanning step size 0.02 p.u. | 16 |
| Power frequency overvoltage | Secondary side load | UN: 1.0–1.3 p.u., scanning step size 0.02 p.u. | 16 |
| DC bias | Secondary side no-load | Is: 2 A–50 A, scanning step size 2 A | 20 |
| DC bias | Secondary side load | Is: 2 A–50 A, scanning step size 2 A | 20 |
| Inrush current | Secondary side no-load | φ: 0°–120°, scanning step size 5° | 18 |
| Total operating conditions | | | 90 |
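The bookkeeping behind the operating-condition table above can be sketched as follows. The group counts are taken from the table as given. The `sweep` helper and the dictionary keys are hypothetical names, and only the overvoltage sweep is regenerated from its stated range and step size.

```python
from decimal import Decimal

# Group counts copied from the operating-condition table (taken as given).
CONDITION_GROUPS = {
    ("Power frequency overvoltage", "no-load"): 16,
    ("Power frequency overvoltage", "load"): 16,
    ("DC bias", "no-load"): 20,
    ("DC bias", "load"): 20,
    ("Inrush current", "no-load"): 18,
}


def sweep(start: str, stop: str, step: str) -> list[Decimal]:
    """Inclusive parameter sweep; Decimal avoids floating-point drift in the grid."""
    lo, hi, delta = Decimal(start), Decimal(stop), Decimal(step)
    n_steps = int((hi - lo) / delta)
    return [lo + i * delta for i in range(n_steps + 1)]


# Overvoltage levels in p.u.: 1.0 to 1.3 in steps of 0.02.
overvoltage_levels = sweep("1.0", "1.3", "0.02")
total_groups = sum(CONDITION_GROUPS.values())

if __name__ == "__main__":
    print(len(overvoltage_levels), "overvoltage levels")
    print(total_groups, "operating-condition groups in total")
```

Passing the bounds as strings keeps the grid points exact, which matters when the same values are later used as lookup keys for simulation results.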

| Training Layer Type | Parameter Structure | Training Layer Type | Parameter Structure |
|---|---|---|---|
| Convolutional layer weights | (32, 1, 3) | LSTM layer bias 2 | (256) |
| Convolutional layer bias | (32) | Fully connected layer 1 weight | (1291, 1024) |
| LSTM layer weight 1 | (256, 32) | Fully connected layer 1 bias | (1291) |
| LSTM layer weight 2 | (256, 64) | Fully connected layer 2 weight | (17202, 1024) |
| LSTM layer bias 1 | (256) | Fully connected layer 2 bias | (17202) |
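As a quick check on the model size implied by the parameter structures in the table above, the trainable parameters can be tallied directly from the listed shapes. This is a minimal sketch: the dictionary keys are hypothetical labels, not the authors' variable names. Reading the LSTM shapes as four stacked gates with a hidden size of 64 is an interpretation based on the common LSTM weight layout, not something the table states.

```python
from math import prod

# Shapes copied from the network parameter table; key names are hypothetical.
LAYER_SHAPES = {
    "conv.weight": (32, 1, 3),
    "conv.bias": (32,),
    "lstm.weight_1": (256, 32),
    "lstm.weight_2": (256, 64),
    "lstm.bias_1": (256,),
    "lstm.bias_2": (256,),
    "fc1.weight": (1291, 1024),
    "fc1.bias": (1291,),
    "fc2.weight": (17202, 1024),
    "fc2.bias": (17202,),
}

# Number of scalar parameters per tensor, and the overall total.
param_counts = {name: prod(shape) for name, shape in LAYER_SHAPES.items()}
total_params = sum(param_counts.values())

# Interpretation (assumption): weight 2 is the recurrent matrix, so its second
# dimension is the hidden size, and the first dimension stacks the gates.
lstm_hidden = LAYER_SHAPES["lstm.weight_2"][1]
lstm_gates = LAYER_SHAPES["lstm.weight_1"][0] // lstm_hidden

if __name__ == "__main__":
    for name, count in param_counts.items():
        print(f"{name:14s} {count:>12,d}")
    print(f"{'total':14s} {total_params:>12,d}")
    print("hidden size:", lstm_hidden, "gates:", lstm_gates)
```

The tally shows that the two fully connected output layers account for almost all of the parameters, with the second (17202 outputs) being by far the largest.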

| Number | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Δu/p.u. | 0.02 | 0.04 | 0.06 | 0.08 | 0.1 |
| ΔI/A | 2 | 4 | 6 | 8 | 10 |
| Δφ/° | 5 | 10 | 15 | 20 | 25 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Peng, Q.; Zhu, X.; Hong, Z.; Zou, D.; Guo, R.; Chu, D. Research into the Fast Calculation Method of Single-Phase Transformer Magnetic Field Based on CNN-LSTM. Energies 2024, 17, 3913. https://doi.org/10.3390/en17163913
