Comparison of Optimal SASS (Sparsity-Assisted Signal Smoothing) and Linear Time-Invariant Filtering Techniques Dedicated to 200 MW Generating Unit Signal Denoising

Abstract

1. Introduction

2. Overview of 200 MW Generating Unit and Measured Transient Signals

3. Disturbance Test and Analysis of the Measured Signals

4. Mathematical Model of the Measured Signals

- The base frequency of the power system, which is equal to 50 Hz (only the generator field voltage Efd contains this component).

- Harmonic components from various sources. These interferences can be observed in the generator field voltage Efd (1000 Hz), the exciter field current Ife (500 Hz and 1000 Hz), and the generator field current Ifd.

- Wideband noise, which is present in all signals.

- sP(t)—the measured signal (with noise and interferences);

- s(t)—signal free of any interferences (the clean signal);

- h(t)—harmonic interferences, which can be described as a sum of sinusoidal components indexed by the harmonic number i;

- n(t)—wideband noise.

5. Review of Existing Filtering/Approximation Methods and General Assumptions

- The first category comprises methods that try to filter out the interferences and noise (h(t) and n(t) in (1)). The number of such methods is very large. Some of the most popular are based on typical LTI digital filters (e.g., low-pass, band-stop, and notch filters), commonly used nonlinear filters (e.g., the median filter), or some manner of adaptive filter (like the Wiener filter) [33]. These methods demand relatively little computing power, and their design algorithms are relatively simple. There are also much more complex methods that require much more computing power, like those based on neural networks [34], deep learning [35], or Sparsity-Assisted Signal Smoothing (SASS) [36]. The choice of method depends on many factors, but one of the most important is the character of the interferences in comparison with the clean signal. Where it is difficult to separate the interferences from the clean signal (e.g., if they occupy the same bandwidth), the standard LTI approach might not be sufficient. It is also well known that filters can introduce additional artifacts (like ringing and overshoot in the time domain). These artifacts can change the shape of the signal significantly, which is usually unacceptable in dynamical systems modeling.

- The second category comprises methods that try to approximate the clean signal s(t) from the measured signal s′(t). This can be a simple Savitzky-Golay polynomial approximation [37], a more advanced method using some manner of signal transform (for example, the wavelet transform [38]), or a model-based Bayesian filter (like the extended Kalman filter [39]). In this approach, the most difficult part is finding an approximating function that fits the shape of the s(t) signal well. This can be a challenging task, especially if the signals contain discontinuities and steep slopes. This group of methods can also introduce artifacts into the signal (for example, wavelet denoising is well known for its pseudo-Gibbs effect [40]). It is worth noting that, in practice, the approximation methods are very often implemented as FIR (Finite Impulse Response) or IIR (Infinite Impulse Response) digital filters. The Savitzky-Golay method is implemented as FIR filters [41], and the discrete wavelet transform is usually implemented using quadrature mirror filters (QMF) [42].

- The time alignment of the clean signal should not be changed.

- Steep transition slopes, as well as jump and removable discontinuities, should be preserved.

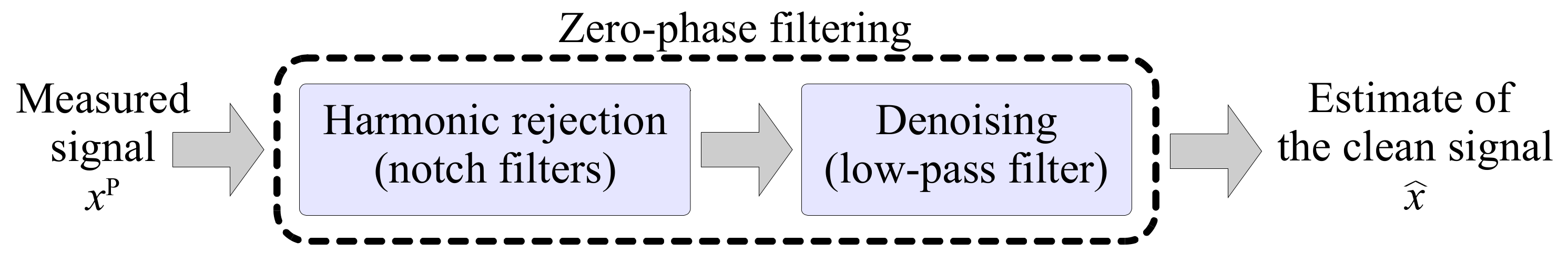

6. Sparsity-Assisted Signal Smoothing (SASS) and Reference LTI Filtering Methods

- , , and regularization parameter λ > 0.

- Zero phase distortion.

- A filter transfer function equal to the squared magnitude of the original filter transfer function.

- An effective filter order that is double the order of the given filter.

- Because the zero-phase filtering is implemented by reversing the input signal samples order, this method cannot be used in real-time applications (the whole signal must be known before the filtering is started). This makes the presented methods suitable for off-line analysis only.
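The forward-backward scheme behind the properties listed above can be sketched in a few lines. This is only an illustration under stated assumptions: a first-order recursive low-pass stands in for the Butterworth and notch designs used in the study, and the names `one_pole_lowpass` and `zero_phase` are hypothetical.

```python
import math

def one_pole_lowpass(x, fc, fs):
    """Causal first-order IIR low-pass: y[n] = y[n-1] + a * (x[n] - y[n-1])."""
    a = 1.0 - math.exp(-2.0 * math.pi * fc / fs)  # assumed coefficient design
    y, state = [], x[0]  # start from the first sample to limit the start-up transient
    for sample in x:
        state += a * (sample - state)
        y.append(state)
    return y

def zero_phase(x, fc, fs):
    """Filter forward, reverse the samples, filter again, and reverse back."""
    forward = one_pole_lowpass(x, fc, fs)
    backward = one_pole_lowpass(forward[::-1], fc, fs)
    return backward[::-1]

fs = 4000.0                          # sampling rate, Hz
step = [0.0] * 200 + [1.0] * 200     # idealized step placed mid-record
causal = one_pole_lowpass(step, 100.0, fs)
symmetric = zero_phase(step, 100.0, fs)
```

In this sketch the causal output only starts moving at the step and lags behind it, whereas the forward-backward output is centered on the step. It also begins to rise before the step occurs, which is why the whole record has to be available in advance.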

7. Test Signal Synthesis

- Initially, reference (clean) signals were generated from a model of the power-generating unit operating under the same disturbance test conditions as the actual generator during the measurements. These clean signals correspond to the s(t) component in Equation (1) and serve as a baseline for assessing the filtering/approximation quality of all tested methods.

- In the subsequent step, white Gaussian noise was introduced to the clean signals, corresponding to the n(t) component in Equation (1). The signal-to-noise ratio (SNR) was adjusted to closely match that of the measured signals. To maintain the amplitude spectrum profile of the noise consistent with the measurements, it was generated at a sampling rate of 32 kHz and then downsampled to 4 kHz using the same antialiasing filter applied to the measured signals.

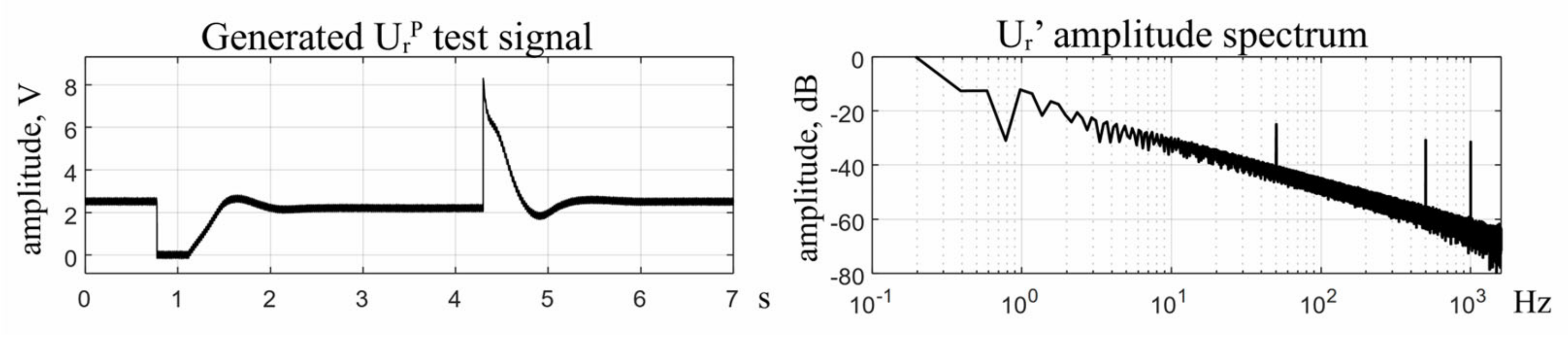

- In the last step, three harmonic components (represented by h(t) in Equation (1)) were added to the signals. The frequencies of the components are 50 Hz, 500 Hz, and 1 kHz, and the amplitudes (measured above the noise floor) are approximately 18 dB, 22 dB, and 25 dB, respectively. These harmonic components were introduced under a worst-case scenario, where their amplitudes matched the maximum amplitudes observed in all of the measured signals. An example of the Ur’ signal, including noise and harmonic components, is illustrated in Figure 5.
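The three synthesis steps above can be sketched as follows. This is a simplified illustration, not the authors' procedure: the clean signal is a placeholder step rather than the output of the generating unit model, the noise is generated directly at 4 kHz (the 32 kHz generation and antialiasing stage is omitted), and the linear amplitudes and noise level are arbitrary assumed values rather than the dB figures quoted in the text. The function name `synthesize` is hypothetical.

```python
import math
import random

def synthesize(clean, fs, noise_std, harmonics, seed=0):
    """Return clean + sinusoidal interferences + white Gaussian noise.

    harmonics: list of (frequency_hz, amplitude) pairs (illustrative values).
    """
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    noisy = []
    for n, s in enumerate(clean):
        t = n / fs
        h = sum(a * math.sin(2.0 * math.pi * f * t) for f, a in harmonics)
        noisy.append(s + h + rng.gauss(0.0, noise_std))
    return noisy

fs = 4000.0                                  # sampling rate of the stored signals, Hz
clean = [0.0] * 2000 + [1.0] * 2000          # placeholder step, not the model output
harmonics = [(50.0, 0.05), (500.0, 0.08), (1000.0, 0.11)]  # assumed amplitudes
measured = synthesize(clean, fs, noise_std=0.01, harmonics=harmonics)
```

Keeping the clean signal separate from the added components is what makes the later error measurements possible, since every filtered result can be compared against a known reference.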

8. Optimization Problem Definition and the Proposed Optimal SASS Method

- P—set of parameters of the chosen filtering/approximation method (a detailed set of parameters for each method is shown in Table 2);

- f(P)—goal function.

- eRMS—the RMS (root mean square) error between the reference and distorted signal;

- eMAX—the maximal error between the reference and distorted signal.

- s[n]—reference (clean) signal, sF[n]—filtered distorted (noisy) signal, N—number of samples (length) of the signal.
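The two error measures can be computed as in the sketch below. The normalization to the unfiltered case is an assumption inferred from the "No filtering" rows of the result tables, which read 100%. The goal function f(P) is not reproduced because its definition is not available in this text. All function names are hypothetical.

```python
import math

def rms_error(reference, filtered):
    """Root mean square of the sample-wise difference."""
    n = len(reference)
    return math.sqrt(sum((r - f) ** 2 for r, f in zip(reference, filtered)) / n)

def max_error(reference, filtered):
    """Largest absolute sample-wise difference."""
    return max(abs(r - f) for r, f in zip(reference, filtered))

def relative_percent(value, baseline):
    """Express an error as a percentage of the unfiltered error (assumed convention)."""
    return 100.0 * value / baseline

reference = [0.0, 0.0, 1.0, 1.0]     # s[n], clean signal
noisy = [0.2, -0.2, 1.2, 0.8]        # distorted signal before filtering
filtered = [0.1, -0.1, 1.1, 0.9]     # sF[n], toy filtered result

e_rms = relative_percent(rms_error(reference, filtered), rms_error(reference, noisy))
e_max = relative_percent(max_error(reference, filtered), max_error(reference, noisy))
```

With these toy values both measures come out at about 50%, because every sample error is halved. A value above 100% would mean that the filter made that particular measure worse than leaving the signal unfiltered.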

9. Comparison of Optimization Results for All Test Signals

| Filtering Method | Considered Parameters | Parameters’ Starting Values | Parameters’ Range (Optimization Bounds) |
|---|---|---|---|
| Low-pass Butterworth filter | Optimized parameters are the cut-off frequency fl and the filter order | Starting cut-off frequency is 10 Hz, and the starting filter order is 1 | Cut-off frequency range is 0.1 Hz to 1500 Hz, and tested filter orders range from 1 to 4 |
| Notch filters | The notch frequencies are fixed (50 Hz, 500 Hz, and 1 kHz), and only the width of each filter is optimized (fw50, fw500, fw1000) | Starting values were set to fw50 = 1 Hz, fw500 = 5 Hz, and fw1000 = 10 Hz | The allowed width range for each filter is very wide: 0.1 Hz to 1000 Hz |
| Optimal SASS | Optimized parameters are the filter cut-off frequency fc and the regularization parameter λ. All optimizations were performed for chosen derivative orders and filter orders. | Starting values for fc and λ were set experimentally each time by careful observation of the filtered signal and the SASS derivative signal. The fc was set to filter out the noise in the smooth signal parts, and the λ was set so that the derivative signal has a significant value around the discontinuity points. | The ranges for fc and λ were set from 0.1 to 10 times the starting value. The optimization procedure was repeated for the following combinations of derivative order and filter order: (1, 1), (1, 2), (2, 1), (2, 2). |
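The role of the starting values and bounds can be illustrated with a deliberately simple search. The sketch below swaps in a log-spaced grid search for the simulated annealing, gradient, and Nelder-Mead procedures used in the study, and a forward-backward first-order low-pass for the tested filters. The bounds follow the 0.1 to 10 times the starting value rule quoted for SASS. All names and numeric values are assumptions made for illustration.

```python
import math
import random

def zero_phase_lowpass(x, fc, fs):
    """Forward-backward first-order low-pass (a stand-in for the tested filters)."""
    a = 1.0 - math.exp(-2.0 * math.pi * fc / fs)
    def run(samples):
        out, state = [], samples[0]
        for v in samples:
            state += a * (v - state)
            out.append(state)
        return out
    return run(run(x)[::-1])[::-1]

def rms_error(reference, filtered):
    return math.sqrt(sum((r - f) ** 2 for r, f in zip(reference, filtered)) / len(reference))

def grid_search_cutoff(clean, noisy, fs, start_fc, points=25):
    """Scan cut-offs log-spaced over [0.1 * start_fc, 10 * start_fc]."""
    best_fc, best_err = None, float("inf")
    for k in range(points):
        fc = 0.1 * start_fc * 100.0 ** (k / (points - 1))
        err = rms_error(clean, zero_phase_lowpass(noisy, fc, fs))
        if err < best_err:
            best_fc, best_err = fc, err
    return best_fc, best_err

fs = 4000.0
rng = random.Random(1)                       # fixed seed for reproducibility
clean = [0.0] * 1000 + [1.0] * 1000          # placeholder reference signal
noisy = [s + rng.gauss(0.0, 0.05) for s in clean]
best_fc, best_err = grid_search_cutoff(clean, noisy, fs, start_fc=10.0)
```

A grid is far less efficient than the optimizers named in the paper, but it makes the trade-off visible: a low cut-off removes more noise and smears the step, while a high cut-off preserves the step and leaves more noise in place.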

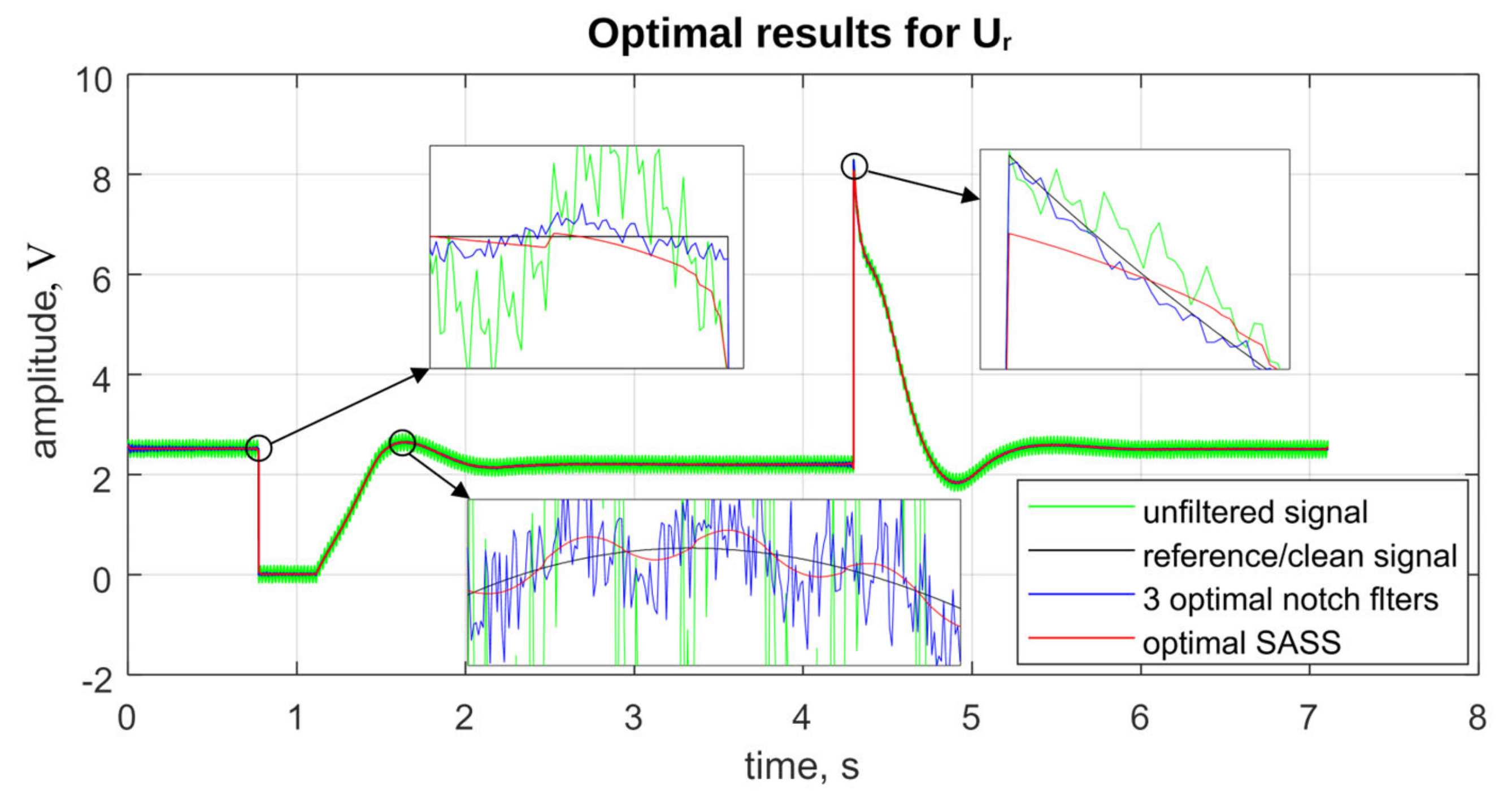

9.1. Regulator Output Voltage Ur

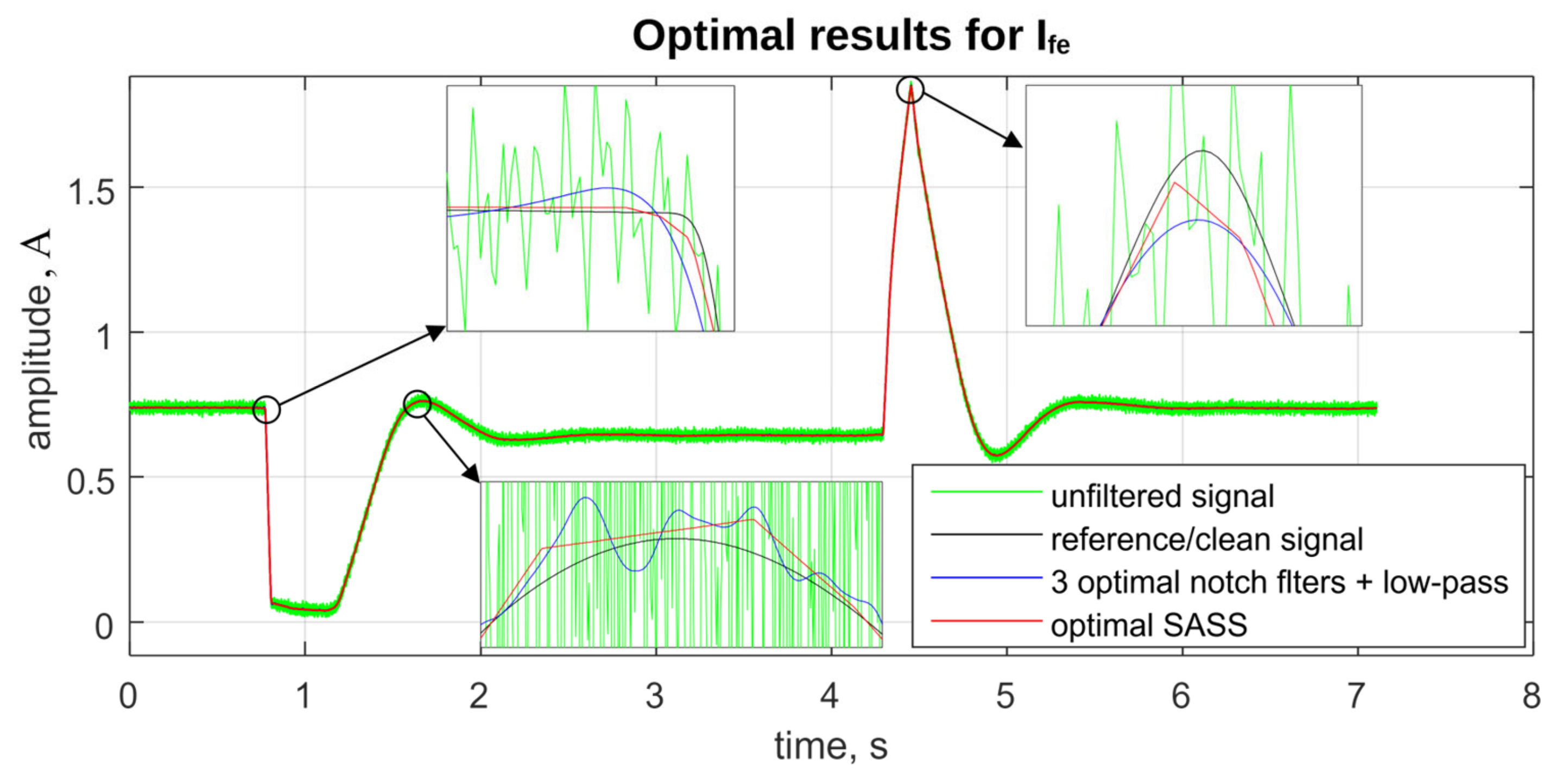

9.2. Exciter Field Current Ife

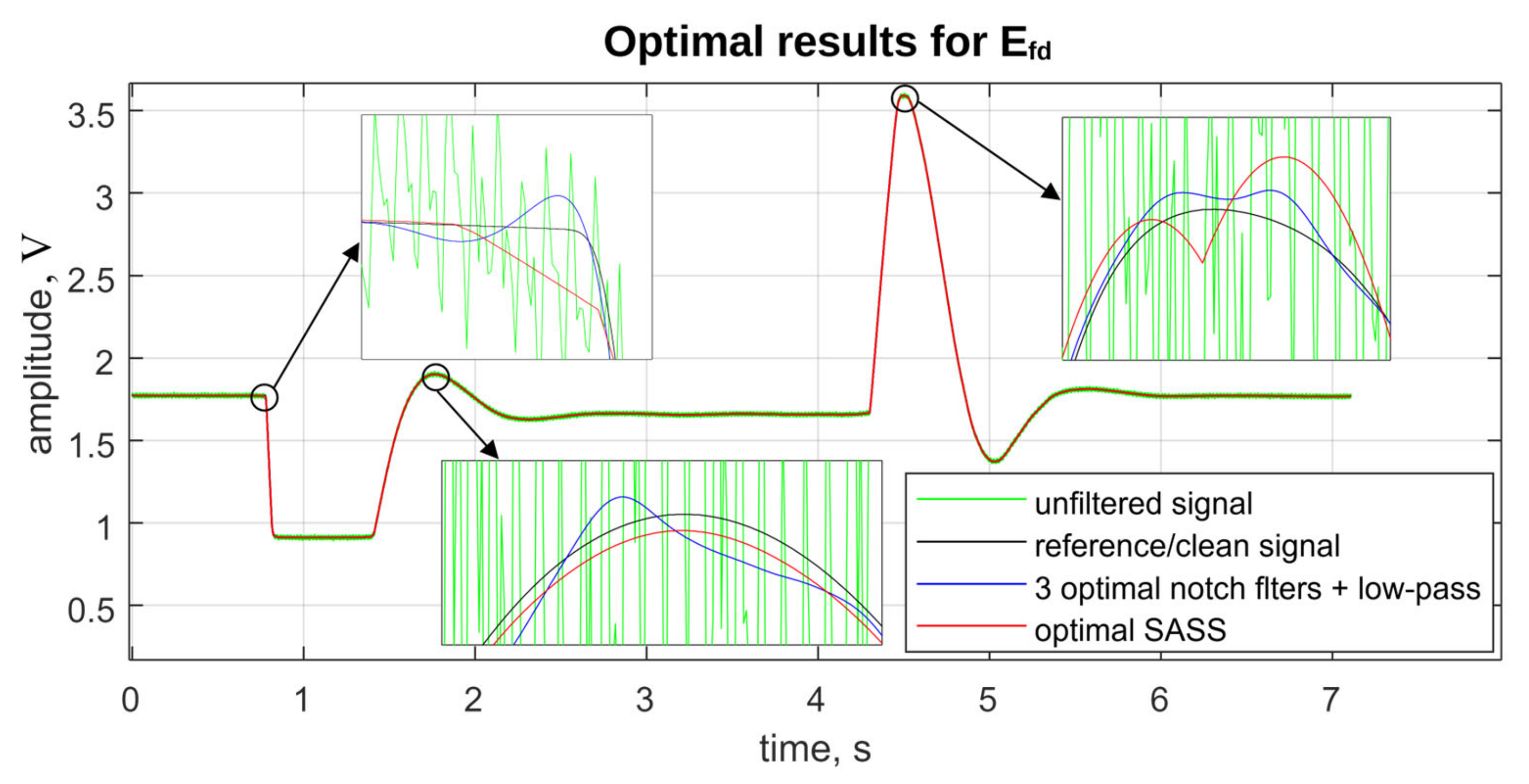

9.3. Generator Field Voltage Efd

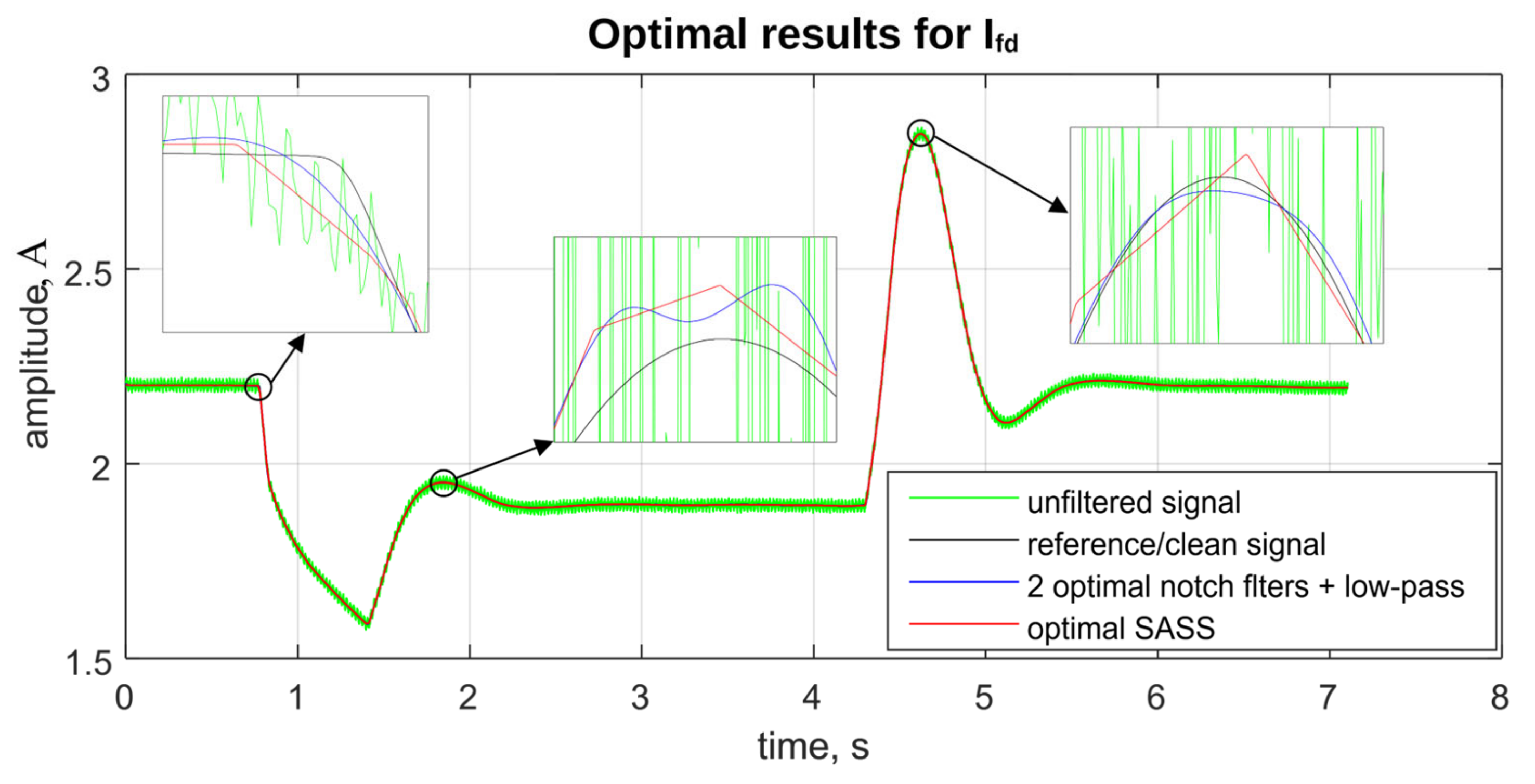

9.4. Generator Field Current Ifd

9.5. Summary of Optimization Results

10. Summary and Conclusions

11. Future Work

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Jiang, H.; Zhang, Y.; Muljadi, E. New Technologies for Power System Operation and Analysis; Jiang, H., Zhang, Y., Muljadi, E., Eds.; Academic Press: Cambridge, MA, USA, 2021.

- Sodhi, R. Simulation and Analysis of Modern Power Systems; McGraw Hill Education: New York, NY, USA, 2021.

- Goud, B.S.; Kalyan, C.N.S.; Rao, G.S.; Reddy, B.N.; Kumar, Y.A.; Reddy, C.R. Combined LFC and AVR Regulation of Multi Area Interconnected Power System Using Energy Storage Devices. In Proceedings of the IEEE 2nd International Conference on Sustainable Energy and Future Electric Transportation (SeFeT), Hyderabad, India, 4–6 August 2022; pp. 1–6.

- Machowski, J.; Lubośny, Z.; Białek, J.; Bumby, J. Power System Dynamics: Stability and Control, 3rd ed.; Wiley: Hoboken, NJ, USA, 2020; pp. 1–888.

- Vittal, V.; McCalley, J.D.; Anderson, P.M.; Fouad, A.A. Power System Control and Stability, 3rd ed.; Wiley-IEEE Press: Hoboken, NJ, USA, 2019; p. 832.

- Paszek, S.; Boboń, A.; Berhausen, S.; Majka, Ł.; Nocoń, A.; Pruski, P. Synchronous Generators and Excitation Systems Operating in a Power System: Measurement Methods and Modelling; Lecture Notes in Electrical Engineering; Springer: Cham, Switzerland, 2020; p. 631.

- Majka, Ł.; Baron, B.; Zydroń, P. Measurement-based stiff equation methodology for single phase transformer inrush current computations. Energies 2022, 15, 7651.

- IEEE Std 421.5—2016; IEEE Recommended Practice for Excitation System Models for Power System Stability Studies. Energy Development and Power Generation Committee of the IEEE Power and Energy Society: New York, NY, USA, 2016.

- Máslo, K.; Kasembe, A. Extended long term dynamic simulation of power system. In Proceedings of the 52nd International Universities Power Engineering Conference (UPEC), Heraklion, Greece, 28–31 August 2017; pp. 1–5.

- Verrelli, C.M.; Marino, R.; Tomei, P.; Damm, G. Nonlinear Robust Coordinated PSS-AVR Control for a Synchronous Generator Connected to an Infinite Bus. IEEE Trans. Autom. Control. 2022, 67, 1414–1422.

- Imai, S.; Novosel, D.; Karlsson, D.; Apostolov, A. Unexpected Consequences: Global Blackout Experiences and Preventive Solutions. IEEE Power Energy Mag. 2023, 21, 16–29.

- Almas, M.S.; Vanfretti, L. RT-HIL Testing of an Excitation Control System for Oscillation Damping using External Stabilizing Signals. In Proceedings of the IEEE Power & Energy Society General Meeting, Denver, CO, USA, 26–30 July 2015.

- Reliability Guideline: Power Plant Model Verification and Testing for Synchronous Machines; North American Electric Reliability Corporation (NERC): Atlanta, GA, USA, 2018; p. 30326.

- Dynamic Model Acceptance Test Guideline; Version 2; Australian Energy Market Operator Limited (AEMO): Melbourne, Australia, 2021.

- Bérubé, G.R.; Hajagos, L.M. Testing & Modeling of Generator Controls; KESTREL POWER ENGINEERING, ENTRUST Solutions Group: Warrenville, IL, USA, 2003.

- Pruski, P.; Paszek, S. Location of generating units most affecting the angular stability of the power system based on the analysis of instantaneous power waveforms. Arch. Control. Sci. 2020, 30, 273–293.

- IEEE Std 421.2—2014; IEEE Guide for Identification, Testing, and Evaluation of the Dynamic Performance of Excitation Control Systems. Energy Development and Power Generation Committee of the IEEE Power and Energy Society: New York, NY, USA, 2014.

- Monti, A.; Benigni, A. Modeling and Simulation of Complex Power Systems; Institution of Engineering and Technology (IET): Stevenage, UK, 2022; p. 320.

- Máslo, K.; Kasembe, A.; Kolcun, M. Simplification and unification of IEEE standard models for excitation systems. Electr. Power Syst. Res. 2016, 140, 132–138.

- Majka, Ł. Using fractional calculus in an attempt at modeling a high frequency AC exciter. In Advances in Non-Integer Order Calculus and Its Applications, Proceedings of the 10th International Conference on Non-Integer Order Calculus and Its Applications, Bialystok, Poland, 20–21 September 2018; Lecture Notes in Electrical Engineering; Springer: Berlin/Heidelberg, Germany, 2020; Volume 559, pp. 55–71.

- Sowa, M.; Majka, Ł.; Wajda, K. Excitation system voltage regulator modeling with the use of fractional calculus. AEU Int. J. Electron. Commun. 2023, 159, 154471.

- Lewandowski, M.; Majka, Ł. Combining optimal SASS (Sparsity Assisted Signal Smoothing) and notch filters for transient measurement signals denoising of large power generating unit. Measurement 2024, 237, 115174.

- Pruski, P.; Paszek, S. Calculations of power system electromechanical eigenvalues based on analysis of instantaneous power waveforms at different disturbances. Appl. Math. Comput. 2018, 319, 104–114.

- Lewandowski, M.; Majka, Ł.; Świetlicka, A. Effective estimation of angular speed of synchronous generator based on stator voltage measurement. Int. J. Electr. Power Energy Syst. 2018, 100, 391–399.

- Układy Rejestracji—Kared Sp. z o.o. Oficjalna Strona Firmy. Available online: https://kared.pl/produkty/uklady-rejestracji/ (accessed on 30 May 2024).

- Micev, M.; Ćalasan, M.; Stipanović, D.; Radulović, M. Modeling the relation between the AVR setpoint and the terminal voltage of the generator using artificial neural networks. Eng. Appl. Artif. Intell. 2023, 120, 105852.

- How to Perform a Step Test. Control Station, Inc., 642 Hilliard Street, Suite 2301, Manchester, Connecticut 06042, United States. 19 July 2016. Available online: https://controlstation.com/blog/perform-step-test/ (accessed on 30 May 2024).

- Kumar, K.; Singh, A.K.; Singh, R.P. Power System Stabilization Tuning and Step Response Test of AVR: A Case Study. In Proceedings of the 6th International Conference on Advanced Computing and Communication Systems (ICACCS), Coimbatore, India, 6–7 March 2020; pp. 482–485.

- Energotest Sp. z o.o., Cyfrowe Układy Wzbudzenia i Regulacji Napięcia Typu ETW. Available online: https://www.spie-energotest.pl/media/k-etw.pdf (accessed on 30 May 2024).

- Bethoux, O. PID Controller Design, Encyclopedia of Electrical and Electronic Power Engineering; Elsevier: Amsterdam, The Netherlands, 2023; pp. 261–267.

- Bingul, Z.; Karahan, O. A novel performance criterion approach to optimum design of PID controller using cuckoo search algorithm for AVR system. J. Frankl. Inst. 2018, 355, 5534–5559.

- Jumani, T.A.; Mustafa, M.W.; Hussain, Z.; Rasid, M.M.; Saeed, M.S.; Memon, M.M.; Khan, I.; Nisar, K.S. Jaya optimization algorithm for transient response and stability enhancement of a fractional-order PID based automatic voltage regulator system. Alex. Eng. J. 2020, 59, 2429–2440.

- Tan, L.; Jiang, J. Digital Signal Processing Fundamentals and Applications, 3rd ed.; Elsevier: Amsterdam, The Netherlands, 2019.

- Fan, G.; Li, J.; Hao, H. Vibration signal denoising for structural health monitoring by residual convolutional neural networks. Measurement 2020, 157, 107651.

- Tian, C.; Fei, L.; Zheng, W.; Xu, Y.; Zuo, W.; Lin, C.-W. Deep learning on image denoising: An overview. Neural Netw. 2020, 131, 251–275.

- Selesnick, I. Sparsity-assisted signal smoothing (revisited). In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 4546–4550.

- Niedźwiecki, M.; Ciołek, M. Fully Adaptive Savitzky-Golay Type Smoothers. In Proceedings of the 27th European Signal Processing Conference (EUSIPCO), A Coruna, Spain, 2–6 September 2019; pp. 1–5.

- Kozlowski, B. Time series denoising with wavelet transform. J. Telecommun. Inf. Technol. 2005, 3, 91–95.

- Chatterjee, S.; Thakur, R.S.; Yadav, R.N.; Gupta, L.; Raghuvanshi, D.K. Review of noise removal techniques in ECG signals. IET Signal Process. 2020, 14, 569–590.

- Selesnick, I.W.; Arnold, S.; Dantham, V.R. Polynomial Smoothing of Time Series With Additive Step Discontinuities. IEEE Trans. Signal Process. 2012, 60, 6305–6318.

- Savitzky, A.; Golay, M.J.E. Smoothing and Differentiation of Data by Simplified Least Squares Procedures. Anal. Chem. 1964, 36, 1627–1639.

- Vetterli, M.; Herley, C. Wavelets and Filter Banks: Theory and Design. IEEE Trans. Signal Process. 1992, 40, 2207–2232.

- Orfanidis, S.J. Introduction to Signal Processing; Prentice Hall Inc.: Saddle River, NJ, USA, 2009; Chapter 6, Section 6.4.3.

- Parks, T.W.; Burrus, C.S. Digital Filter Design; John Wiley & Sons: Hoboken, NJ, USA, 1987; Chapter 7, Section 7.3.3.

| Filtering Method | Optimization Algorithm | Optimized Parameters |
|---|---|---|
| Butterworth low-pass filter | Gradient | Filter cut-off frequency |
| 3 notch filters | Simulated annealing + gradient | Filter widths |
| 3, 2, or 1 notch filter + low-pass Butterworth | Simulated annealing + gradient | Notch filter width, low-pass filter cut-off frequency |
| Optimal SASS | Simulated annealing + Nelder-Mead simplex | Low-pass cut-off frequency, regularization parameter |

| Filtering Method | eRMS | eMAX | f | Low-pass order | Low-pass fl, Hz | Notch width fw50, Hz | Notch width fw500, Hz | Notch width fw1000, Hz |
|---|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - | - |
| Low-pass filter | 87% | 1381% | 93% | 1 | 86 | - | - | - |
| 3 notch filters | 14% | 48% | 14% | - | - | 0.48 | 2.6 | 6.5 |
| 3 notch + low-pass | 14% | 97% | 14% | 1 | 1500 | 0.48 | 2.4 | 4.6 |
| 2 notch + low-pass | 18% | 482% | 21% | 4 | 898 | 0.48 | 2.0 | - |
| 1 notch + low-pass | 27% | 962% | 35% | 4 | 449 | 0.48 | - | - |

| Filtering Method | eRMS | eMAX | f | SASS derivative order | SASS filter order | fc, Hz | λ |
|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - |
| Optimal SASS | 11% | 140% | 11% | 1 | 1 | 11 | 1.06 |
| Optimal SASS | 7% | 110% | 7% | 1 | 2 | 20 | 1.11 |
| Optimal SASS | 40% | 1261% | 49% | 2 | 1 | 21 | 7.52 |
| Optimal SASS | 40% | 1259% | 49% | 2 | 2 | 32 | 7.67 |

| Filtering Method | eRMS | eMAX | f | Low-pass order | Low-pass fl, Hz | Notch width fw50, Hz | Notch width fw500, Hz | Notch width fw1000, Hz |
|---|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - | - |
| Low-pass filter | 13% | 68% | 13% | 4 | 38 | - | - | - |
| 3 notch filters | 19% | 28% | 19% | - | - | 2.18 | 983.3 | 986.0 |
| 3 notch + low-pass | 9.8% | 41.0% | 9.9% | 2 | 63 | 1.53 | 83.3 | 98.3 |
| 2 notch + low-pass | 9.8% | 42.5% | 9.9% | 2 | 61 | 1.40 | 115.5 | - |
| 1 notch + low-pass | 9.8% | 41.3% | 9.9% | 2 | 63 | 1.51 | - | - |

| Filtering Method | eRMS | eMAX | f | SASS derivative order | SASS filter order | fc, Hz | λ |
|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - |
| Optimal SASS | 16.6% | 63.9% | 16.8% | 1 | 1 | 21 | 0.07 |
| Optimal SASS | 12.8% | 72.7% | 13.0% | 1 | 2 | 31 | 0.08 |
| Optimal SASS | 4.6% | 21.5% | 4.7% | 2 | 1 | 1.86 | 3.82 |
| Optimal SASS | 4.8% | 18.5% | 4.8% | 2 | 2 | 12 | 2.33 |

| Filtering Method | eRMS | eMAX | f | Low-pass order | Low-pass fl, Hz | Notch width fw50, Hz | Notch width fw500, Hz | Notch width fw1000, Hz |
|---|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - | - |
| Low-pass filter | 15% | 80% | 16% | 4 | 40 | - | - | - |
| 3 notch filters | 24% | 29% | 24% | - | - | 2.1 | 303 | 381 |
| 3 notch + low-pass | 8.9% | 32.2% | 8.99% | 3 | 77 | 1.3 | 116 | 112 |
| 2 notch + low-pass | 9.0% | 30.3% | 9.04% | 3 | 82 | 1.5 | 119 | - |
| 1 notch + low-pass | 8.9% | 32.1% | 9.00% | 3 | 77 | 1.27 | - | - |

| Filtering Method | eRMS | eMAX | f | SASS derivative order | SASS filter order | fc, Hz | λ |
|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - |
| Optimal SASS | 24.4% | 96.6% | 24.6% | 1 | 1 | 32 | 0.04 |
| Optimal SASS | 14.5% | 70.7% | 14.7% | 1 | 2 | 32 | 0.05 |
| Optimal SASS | 7.1% | 25.0% | 7.1% | 2 | 1 | 0.41 | 2.23 |
| Optimal SASS | 6.8% | 27.8% | 6.9% | 2 | 2 | 12 | 0.92 |

| Filtering Method | eRMS | eMAX | f | Low-pass order | Low-pass fl, Hz | Notch width fw50, Hz | Notch width fw500, Hz | Notch width fw1000, Hz |
|---|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - | - |
| Low-pass filter | 6% | 35% | 6% | 3 | 26 | - | - | - |
| 3 notch filters | 7% | 37% | 7% | - | - | 13.0 | 998 | 999 |
| 3 notch + low-pass | 5.0% | 24.6% | 5.1% | 4 | 35 | 5.58 | 137 | 131 |
| 2 notch + low-pass | 5.0% | 23.7% | 5.0% | 3 | 37 | 3.20 | 69.2 | - |
| 1 notch + low-pass | 5.0% | 23.9% | 5.1% | 3 | 36 | 2.76 | - | - |

| Filtering Method | eRMS | eMAX | f | SASS derivative order | SASS filter order | fc, Hz | λ |
|---|---|---|---|---|---|---|---|
| No filtering | 100% | 100% | 100% | - | - | - | - |
| Optimal SASS | 16.3% | 71.6% | 16.4% | 1 | 1 | 19 | 0.10 |
| Optimal SASS | 7.2% | 45.8% | 7.4% | 1 | 2 | 22 | 0.89 |
| Optimal SASS | 5.5% | 39.2% | 5.6% | 2 | 1 | 0.34 | 4.52 |
| Optimal SASS | 6.0% | 39.2% | 6.1% | 2 | 2 | 12 | 3.48 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Łukaniszyn, M.; Lewandowski, M.; Majka, Ł. Comparison of Optimal SASS (Sparsity-Assisted Signal Smoothing) and Linear Time-Invariant Filtering Techniques Dedicated to 200 MW Generating Unit Signal Denoising. Energies 2024, 17, 4976. https://doi.org/10.3390/en17194976
