1. Introduction

Nanoscale constrictions (sometimes referred to as point contacts or nanojunctions) are unique objects for the generation and investigation of ballistic electron transport in solids. Studies of such systems have been inspired by the pioneering investigations of Sharvin in the mid-1960s [

1]. Today, advances in fabrication techniques like direct growth of branched nanostructures [

2], electron beam irradiation [

3], thermal and electrical welding [

4], or atomic force microscope [

5] have allowed controlling the size and composition of nanojunctions for creating devices with desired functionalities. In this respect, a number of nanodevices based on nanojunctions like single electron transistors [

6,

7], field effect transistors [

8,

9], and heterostructure nanowires [

10,

11] have been recently reported, which promise great performance in terms of miniaturization and power consumption.

In the design of these nanostructures, simulation tools constitute a valuable alternative to the expensive and time-consuming test-and-error experimental procedure. A number of quantum electron transport simulators are available to the scientific community [

12,

13,

14,

15,

16]. The amount of information that these simulators can provide, however, is mainly restricted to the stationary regime, and therefore, their predicting capabilities are still far from those of the traditional Monte Carlo solution of the semi-classical Boltzmann transport equation [

17]. This limitation poses a serious problem in the near future as electron devices are foreseen to operate in the terahertz (THz) regime. At these frequencies, the discrete nature of electrons in the active region is expected to generate unavoidable fluctuations of the current that could interfere with the correct operation of such devices both for analog and digital applications [

18].

A formally exact approach to electron transport beyond the quasi-stationary regime relies in the modeling of the active region of electron devices as an open quantum system [

19,

20]. As such, one can then borrow any state-of-the-art mathematical tool developed to study open quantum systems [

21,

22]. A preferred technique has been the stochastic Schrödinger equation (SSE) approach [

23,

24,

25,

26,

27,

28,

29,

30]. Instead of directly solving equations of motion for the reduced density matrix, the SSE approach exploits the state vector nature of the so-called conditional states to alleviate some computational burden [

31].

As an example of the practical utility of the SSE, a Monte Carlo simulation scheme to describe quantum electron transport in open systems that is valid both for Markovian or non-Markovian regimes guaranteeing a dynamical map that preserves complete positivity has been recently proposed [

32]. The resulting algorithm for quantum transport simulations reformulates the traditional “curse of dimensionality” that plagues all state-of-the-art techniques for solving the time-dependent Schrödinger equation (TDSE). Specifically, the algorithm consists of the solution of an ensemble of single-particle SSEs that are coupled, one to each other, through effective Coulombic potentials [

33,

34,

35]. Furthermore, the simulation technique accounts for dissipation [

36] and guarantees charge and current conservation through the use of self-consistent time-dependent boundary conditions [

37,

38,

39] that partially incorporate exchange correlation [

39,

40]. Solving a large number of three-dimensional (3D) single-particle TDSEs, however, may still be a very time-consuming task. Therefore, the above technique would greatly benefit from the possibility of further reducing the dimensionality of the associated numerical problem.

It is the purpose of this work to derive and discuss a method that allows solving the 3D TDSE in terms of an ensemble of one-dimensional (1D) TDSEs. The technique is inspired by the so-called Born–Huang ansatz [

41], which is a fundamental tool in the context of ab-initio molecular dynamics that allows separating fast and slow degrees of freedom in an effective way [

42]. Here, we consider an analogous ansatz to separate transport and confinement directions. As it will be shown, the resulting technique allows to describe arbitrary geometric correlations in terms of a coupled set of 1D TDSEs. Therefore, while we have motivated the development of this method in the context of the simulation of (non-Markovian) quantum transport in open systems, the method presented here could be of great utility in many research fields where the reduction of the computational cost associated with the solution of an ensemble of SSEs may be advantageous. This includes, for example, the description of spin thermal transport [

43,

44], thermal relaxation dynamics [

19,

45], ionic motion [

46,

47], or Bose–Einstein condensates [

48,

49,

50] in terms of SSEs.

The manuscript is structured as follows. In

Section 2, we introduce a Born–Huang-like ansatz that allows expanding the 3D single-particle TDSE in terms of an infinite set of (transverse) eigenstates weighted by (longitudinal) complex coefficients. The equations of motion for the coefficients are found to be coupled and obey a non-unitary partial differential equation. In

Section 3, we apply the method to a typical 2D constriction.

Section 3.1 is devoted to finding analytical expressions for the effective potentials that appear in the equation of motion of the coefficients. A discussion on the geometrical dependence of these effective potentials is provided. In

Section 3.2, we illustrate the performance of the method to describe the dynamics of an electron across a 2D nanojunction. In

Section 4, we provide a thorough discussion on the advantages and potential drawbacks of the method. We conclude in

Section 5.

2. Single-Electron Time-Dependent Schrödinger Equation in a Born–Huang-Like Basis Expansion

As we explained in the Introduction, it is our goal to reduce the computational burden associated with the solution of an ensemble of effective single-electron 3D SSE [

32]. We consider our starting point to be the 3D TDSE of a single (spin-less) electron in the position basis, i.e.,

where we have used atomic units,

x,

y, and

z represent the three spatial coordinates, and

is a well normalized wavefunction, i.e.,

. In Equation (1),

is the full Hamiltonian of the system:

which has been assumed to be time-independent for simplicity. The time-dependence of the scalar potentials

and

will be discussed in later sections. In Equation (2),

and

are, respectively, the kinetic energy and the scalar potential associated with the longitudinal degree of freedom

x, while

and

are the kinetic energies associated with the transversal degrees of freedom

y and

z. The scalar potential

includes any other scalar potential that is not purely longitudinal, which is responsible for making the solution of Equation (1) non-separable.

It is convenient at this point to rewrite the Hamiltonian in Equation (2) in terms of longitudinal and transverse components as:

where

is the transverse Hamiltonian defined as:

An eigenvalue equation associated with the transverse Hamiltonian can now be introduced as follows:

where

and

are the corresponding eigenvalues and eigenstates respectively, and

. The eigenstates

form a complete orthonormal basis in which to expand the Hilbert space spanned by the variables

x,

y, and

z. Therefore, the 3D wavefunction in Equation (1) can be expressed in terms of transverse eigenstates

as:

where

are complex longitudinal coefficients associated with the transverse eigenstate

. Unless otherwise stated, all integrals are evaluated from

to ∞. It is important to note that since the longitudinal variable

x appears as a parameter in Equation (5), the transverse eigenstates obey the following normalization condition:

In addition, since the full wavefunction

is well normalized, then the longitudinal complex coefficients

in Equation (6) fulfill the condition:

The wavefunction expansion in Equation (6) can now be introduced into Equation (1) to obtain an equation of motion for the coefficients

(see

Appendix A):

where

are effective potential energies (that correspond to the eigenvalues in Equation (5)) and

and

are geometric (first and second order) coupling terms, which read:

Since the transverse eigenstates are the energy eigenstates of a bounded system and can always be chosen to be real, the term is zero by construction. The other terms in Equation (10) dictate the transfer of the probability presence between different longitudinal coefficients and, therefore, will be called geometric non-adiabatic couplings (GNACs). Accordingly, one can distinguish between two different dynamic regimes in Equation (9):

- (i)

Geometric adiabatic regime: This is the regime where and are both negligible. Thus, the solution of Equation (9) can be greatly simplified because it involves only one transverse eigenstate.

- (ii)

Geometric non-adiabatic regime: This is the regime where either or both and are important. Thus, the solution of Equation (9) involves the coupling between different longitudinal coefficients and hence more than one transverse eigenstate.

Interestingly, the prevalence of either the regimes (i) or (ii) can be estimated by rewriting the first order coupling terms

as (see

Appendix B for an explicit derivation):

That is, the importance of non-adiabatic transitions between transverse eigenstates depends on the interplay between the transverse potential-energy differences

and the magnitude of the classical force field, which is proportional to

. The geometric adiabatic regime (i) is reached either when the classical force field is very small or the energy differences

are large enough. In the adiabatic regime, only the diagonal terms,

, are retained, which induce a global shift of the potential-energies

felt by the longitudinal coefficients

. In this approximation, the longitudinal degree of freedom moves in the potential-energy of a single transverse state, i.e.,

. This regime is analogous to the so-called Born–Oppenheimer approximation in the context of molecular dynamics [

51], where the term

is often called the Born–Oppenheimer diagonal correction [

52]. As will be shown in our numerical example, the evolution of the system can be governed either by the geometric adiabatic or nonadiabatic regime depending on the particular spatial region where the dynamics occurs.

Let us notice at this point that the time-dependence of the Hamiltonian in Equation (2) may arise either due to a purely longitudinal time-dependent scalar potential or through the time-dependence of the non-separable potential . If the time-dependence is added only through , then nothing changes in the above development. On the contrary, if a time-dependence is included in , then the eigenstate problem in Equation (5) changes with time and so do the effective potential-energies and the first and second order GNACs and . As it will be shown later, in this circumstance, Equation (5) should be solved self-consistently with Equation (9).

Before we move to a practical example implementing the above reformulation of the 3D TDSE, let us emphasize that it is the main goal of the set of coupled equations in Equation (9) to allow the evaluation of relevant observables in terms of 1D wavefunctions only. In this respect, let us take, for example, the case of the reduced probability density

. Using the basis expansion in Equation (6),

can be written as:

and using the condition in Equation (7), the above expression reduces to:

Therefore, according to Equation (13), the reduced (longitudinal) density is simply the sum of the absolute squared value of the longitudinal coefficients

, which is in accordance with Equation (8), i.e.,

. Similarly, other relevant observables, such as the energy can easily be derived using the expansion in Equation (6) (see

Appendix C).

3. Application of the Method to a Typical Constriction

The above formulation can be cast in the form of a numerical scheme to solve the 3D TDSE, which we will call, hereafter, geometrically correlated 1D TDSE (GC-TDSE). The scheme can be divided into two different parts corresponding to their distinct mathematical nature. The first part involves the solution of the eigenvalue problem in Equation (5), which allows evaluating the transverse eigenstates and eigenvalues , as well as geometric non-adiabatic couplings and in Equation (10). These quantities are required in the second part of the algorithm to solve the equation of motion of the longitudinal coefficients in Equation (9), which ultimately allow us to evaluate the observables of interest.

In what follows, we discuss these two aspects of the algorithm for a typical 2D geometric constriction whose geometry does not change in time. We consider one degree of freedom in the transport direction and one degree of freedom in the transverse (or confinement) direction. The generalization to a 3D system, i.e., with two transverse degrees of freedom, is straightforward and does not add any physical insight with respect to the 2D case. As will be shown, the transverse eigenstates and eigenvalues, as well as the geometric non-adiabatic couplings are, for a time-independent constriction, functions that are computed only once. That is, the effective potential-energies and the non-adiabatic couplings and are computed only at the very beginning of the GC-TDSE propagation scheme. For more general time-dependent constrictions, possibly with no analytical form of , the only change in the algorithm is that Equation (5) has to be solved, self-consistently, together with Equation (9) at each time step.

3.1. Evaluation of Transverse Eigenstates (and Values) and Geometric Non-Adiabatic Couplings

Let us consider the case of a 2D nanojunction represented by the scalar potential:

where

and

define the shape of the constriction. The corresponding 2D Hamiltonian reads

where

. The wavefunction for a 2D constriction in terms of the Born–Huang expansion can be written as

, and the transverse states

are solutions of a free particle in a 1D box whose width depends on the longitudinal variable

x, i.e.,

The associated eigenvalues are given by:

where we have defined

. These energies, parametrically dependent on the longitudinal variable

x, define the effective potential-energies on which the coefficients

evolve.

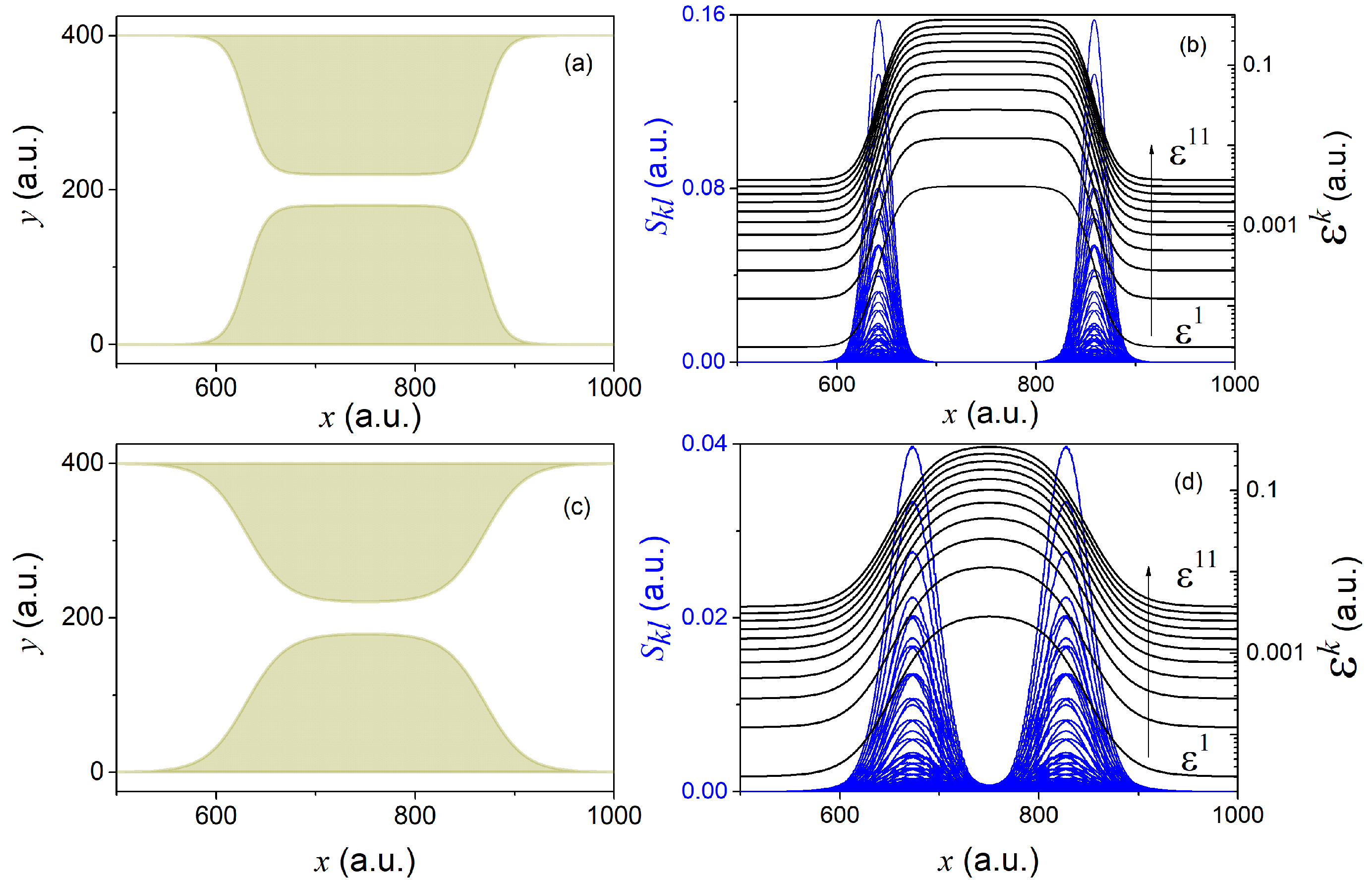

To evaluate the first and second order coupling terms

and

, we need to rely on a particular form of the constriction. Depending on the specific form of

and

, different constrictions can be conceived (see for example panels (a) and (c) of

Figure 1). Given the states in Equation (16) and a particular shape of the constriction (defined in Equation (A18a,b) of

Appendix D), it is then easy to evaluate the non-adiabatic coupling terms

and

(see panels (b) and (d) of

Figure 1).

The two different constrictions in

Figure 1 serve well to gain some insight into the general form and dependence of the effective potential-energies

, as well as of the GNACs in Equation (10). Geometries changing more abruptly lead to sharper effective potential-energies

and more peaked non-adiabatic coupling terms

and

. Sharper constrictions are thus expected to cause larger non-adiabatic transitions and hence to involve a larger number of transverse eigenstates requiring a larger number of longitudinal coefficients in order to reconstruct the reduced (longitudinal) density in Equation (12). On the contrary, smoother constrictions should yield softer non-adiabatic transitions and hence involve a smaller number of transverse eigenstates.

3.2. Time-Dependent Propagation of the Longitudinal Coefficients

Given the effective potential-energies

and the non-adiabatic couplings

and

, one can then easily find a solution for the longitudinal coefficients in Equation (9). Here, we consider the dynamics of an electron that impinges upon a constriction defined by Equations (14) and (A18a,b) in

Appendix D using the particular set of parameters,

, which corresponds to panel (c) of

Figure 1. Due to the symmetry of the states defined in Equation (16), transitions between odd and even states are forbidden, i.e.,

We will consider two different initial states. On the one hand, the initial wavefunction

will be described by:

where

is the transverse ground state defined in Equation (16), and

is a minimum uncertainty (Gaussian) wave packet with initial momentum and dispersion

a.u. and

a.u., respectively, and centered at

a.u. (while

is a normalization constant). On the other hand, we will consider the initial state to be defined by:

where now both

and

(defined above) are Gaussian wave packets. In particular,

with

a.u.,

a.u. (and

a normalization constant). The probability densities

associated with the above two initial states can be seen, respectively, in panels (a) and (b) of

Figure 2. Given the initial states in Equations (19) and (20), we can then evaluate the corresponding longitudinal coefficients as follows:

While the initial state in Equation (19) corresponds to , the state in Equation (20) involves a number of transverse eigenstates. Note that this second initial condition may be more realistic in practical situations, as large enough reservoirs may imply a quasi-continuum of transverse states according to Equation (17).

Starting either from Equation (19) or (20), we then propagate the resulting longitudinal coefficients at the initial time according to Equation (9). Specifically, we used a fourth order Runge–Kutta method with a time-step size of

a.u. and a spatial grid of 1500 points with a grid spacing

a.u. In the left panels of

Figure 3 and

Figure 4, we show the time-dependent reduced density of Equation (13) evaluated from the full 2D wavefunction (dashed green line), as well as the reduced density

evaluated using the GC-TDSE scheme for a finite number of transversal states

, i.e.,

In addition, we also show the absolute squared value of the longitudinal coefficients, i.e.,

, evaluated using the GC-TDSE. Alternatively, in the right panels of

Figure 3 and

Figure 4, we plot the population of each transverse state,

as a function of time using the GC-TDSE.

The initial state in Equation (19) yields

. This can be seen in the right-hand panel of

Figure 3. This value stays constant until the wave packet hits the constriction at around

a.u. At this moment, non-adiabatic transitions between different transverse states start to occur that lead to complicated interference patterns at later times (see, e.g., the reduced density

at

a.u.). The number of significantly populated transverse states increases up to six (while up to eleven states are required to reproduce the exact reduced density up to a 0.1% error). Among these states, only odd transverse states are accessible due to the symmetry of the initial state (as we noted in Equation (18)). Since the mean energy of the initial state in Equation (19) (

a.u.) is higher than the barrier height of the first effective potential-energy in

Figure 1d (

a.u.), one could naively expect a complete transmission of the wave packet

. However, due to the effect of the non-adiabatic coupling terms

and

,

loses a major part of its population in favor of higher energy transverse components that are reflected by much higher effective potential-energy barriers.

Starting with the second initial state in Equation (20), there are up to seven transverse states populated at the initial time, all of which are again odd states due to the symmetry of the initial conditions (see

Figure 4d). Once the wave packet hits the constriction at

a.u, States 3, 7, and 9 become more dominant than the previously dominant States 1, 3, and 5. Overall, up to 15 states become important to reproduce the exact reduced longitudinal density within a 0.1% error. Given the above two examples, it seems clear that the specific form of the impinging wavefunction does play a significant role in the scaling of the number of relevant transverse states

required to evaluate Equation (9) numerically.

Let us finally consider the effect that an external bias along the longitudinal direction might have on the number

of transverse eigenstates required to reproduce the solution of the full 2D TDSE. For that, starting with the state in Equation (20), we consider the transmission coefficient

for different values of the external potential

in Equation (15). Written in terms of the Born–Huang expansion in Equation (6), the transmission coefficient

T reads:

where

is the center of the nanojunction in the longitudinal direction (i.e., with numerical value 750 a.u.) and

is the time at which no probability density (i.e., less than 0.1%) remains inside the constriction. In

Figure 5, we show results for applied bias

and two different number of states

and

. As expected, a higher applied bias leads to a more vigorous collision of the wave packet against the constriction due to a higher longitudinal momentum/energy, which allows higher energy transverse states to be populated.

4. General Discussion

The GC-TDSE algorithm discussed in the previous sections has a clear computational advantage over the solution of the full 2D TDSE. This is particularly so when the quantities , , and , involved in the equation of motion of the longitudinal coefficients , are time-independent functions. For a time-independent transverse Hamiltonian, the quantities , , and are computed only once before the propagation of the longitudinal coefficients, and thus, the computational cost of the GC-TDSE resides, mainly, on the propagation of the 1D longitudinal coefficients.

Let us provide some numbers to estimate the numerical efficiency of the GC-TDSE algorithm. Consider the numerical solution of the full 2D TDSE in a grid. For a number of grid points , the resulting Hamiltonian has a dimension . Alternatively, the size of the Hamiltonian involved in the propagation of the longitudinal coefficients of the GC-TDSE algorithm is , where is the number of transverse eigenstates. One can then estimate the numerical efficiency of one method over the other by simply evaluating the ratio . Thus, for time-independent transverse potentials , the computational reduction associated with the GC-TDSE is . Note that the benefits of the GC-TDSE would be even more noticeable when applied to a 3D problem, for which the above ratio would become .

As we have seen in the above section, the number of required transverse states

is a function of the abruptness/smoothness of the constriction, but also of the energy of the impinging wave packet. Therefore, the computational advantage of the GC-TDSE method over the full dimensional TDSE is clearly system-dependent. Slow wave packets impinging upon smooth constrictions would maximize the benefits of the GC-TDSE. Contrarily, very energetic electrons colliding against abrupt constrictions would certainly minimize its benefits. In this respect, we must note that the GNACs have a clear dependence on the profile of the constriction. In particular, the second order coupling terms

will be sharply peaked for very abrupt constrictions (see the important differences in the size and sharpness of the

in

Figure 1 for two different constrictions). Therefore, due to the non-unitary character of the equations of motion of the longitudinal coefficients, very abrupt constrictions may demand very fine grids in practice.

Finally, let us mention that whenever the transverse Hamiltonian in Equation (4) is time-dependent, the advantage of the GC-TDSE method compared to the solution of the full dimensional TDSE is not so obvious. As we have already noticed, for a time-dependent transverse potential , the eigenvalue problem in Equation (5) must be solved self-consistently with Equation (9), i.e., at each time step. Then, a comparison of the GC-TDSE and the full dimensional TDSE in terms of numerical efficiency will depend on the specific performance of the eigensolver utilized to evaluate the transverse eigenvalues, , and eigenstates .