Artificial Neural Network Structure Optimisation in the Pareto Approach on the Example of Stress Prediction in the Disk-Drum Structure of an Axial Compressor

Abstract

1. Introduction

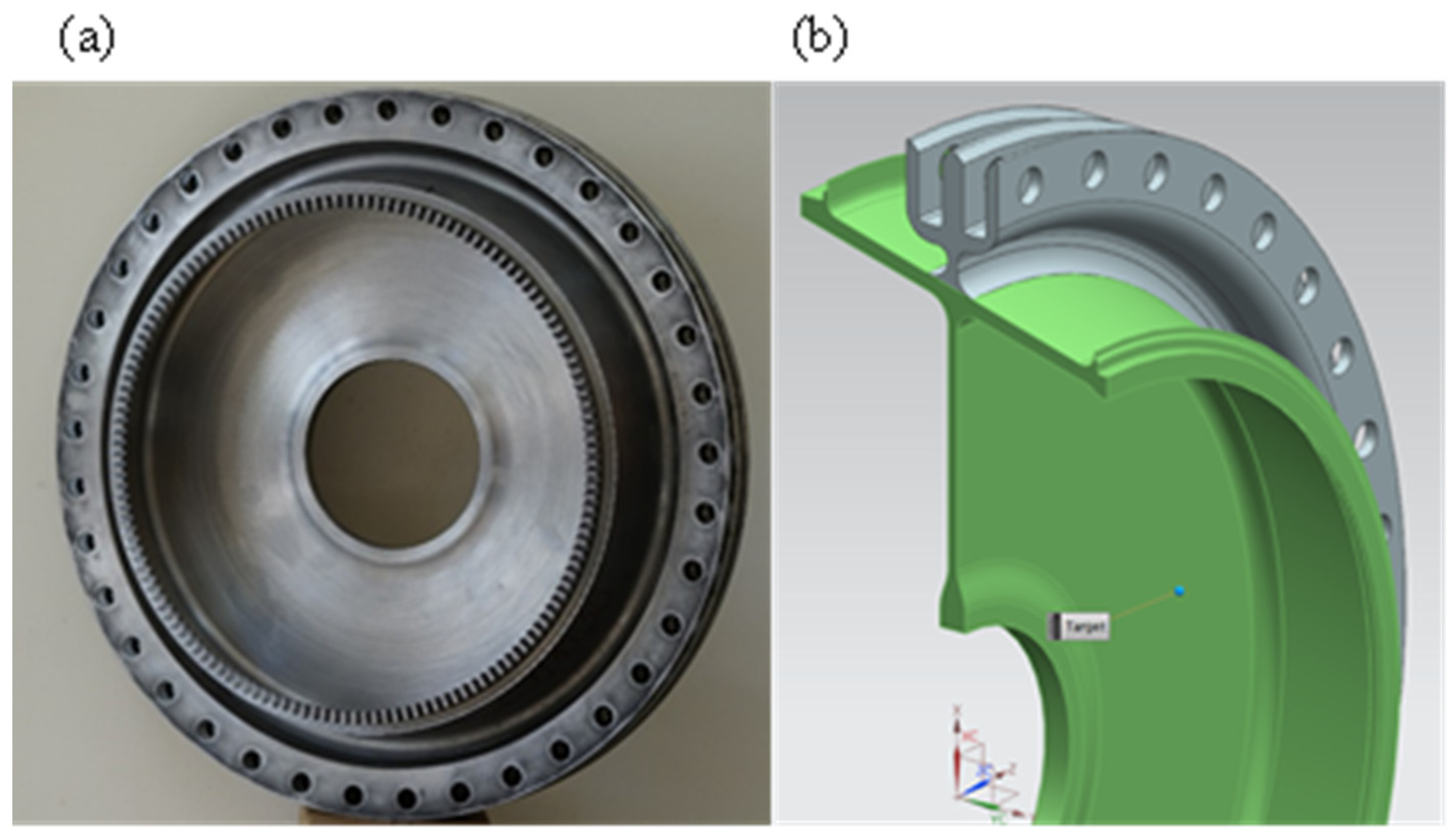

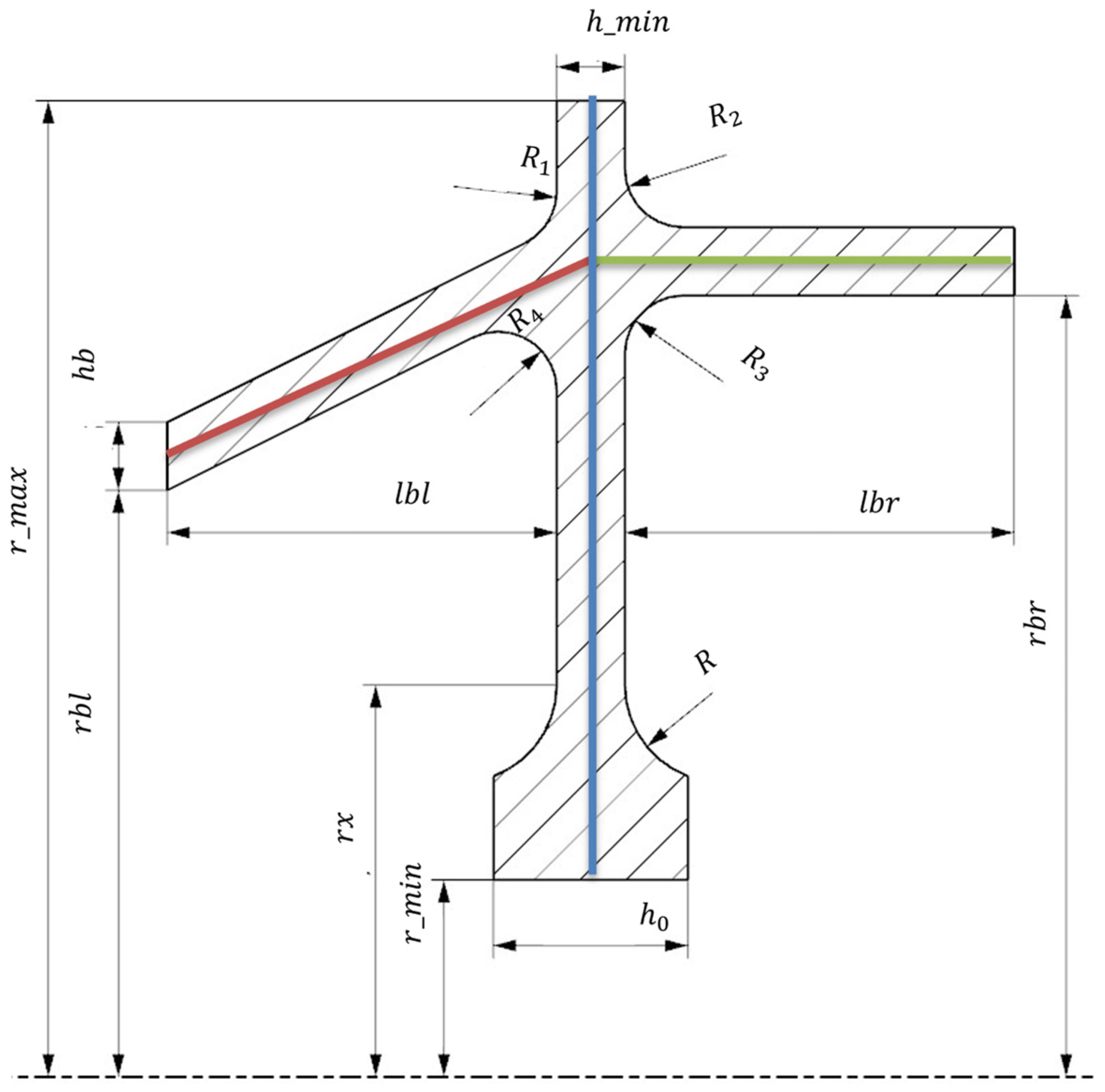

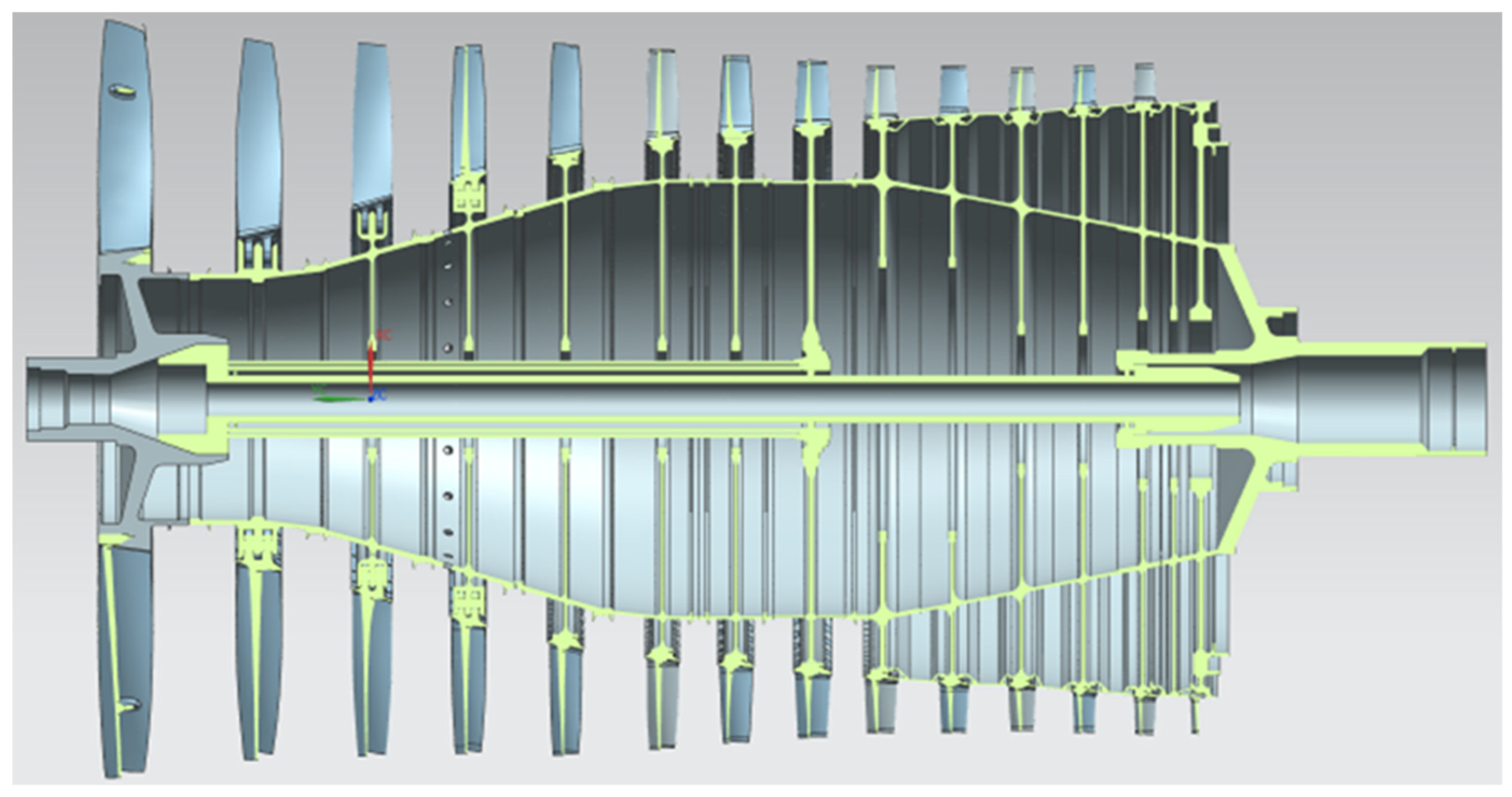

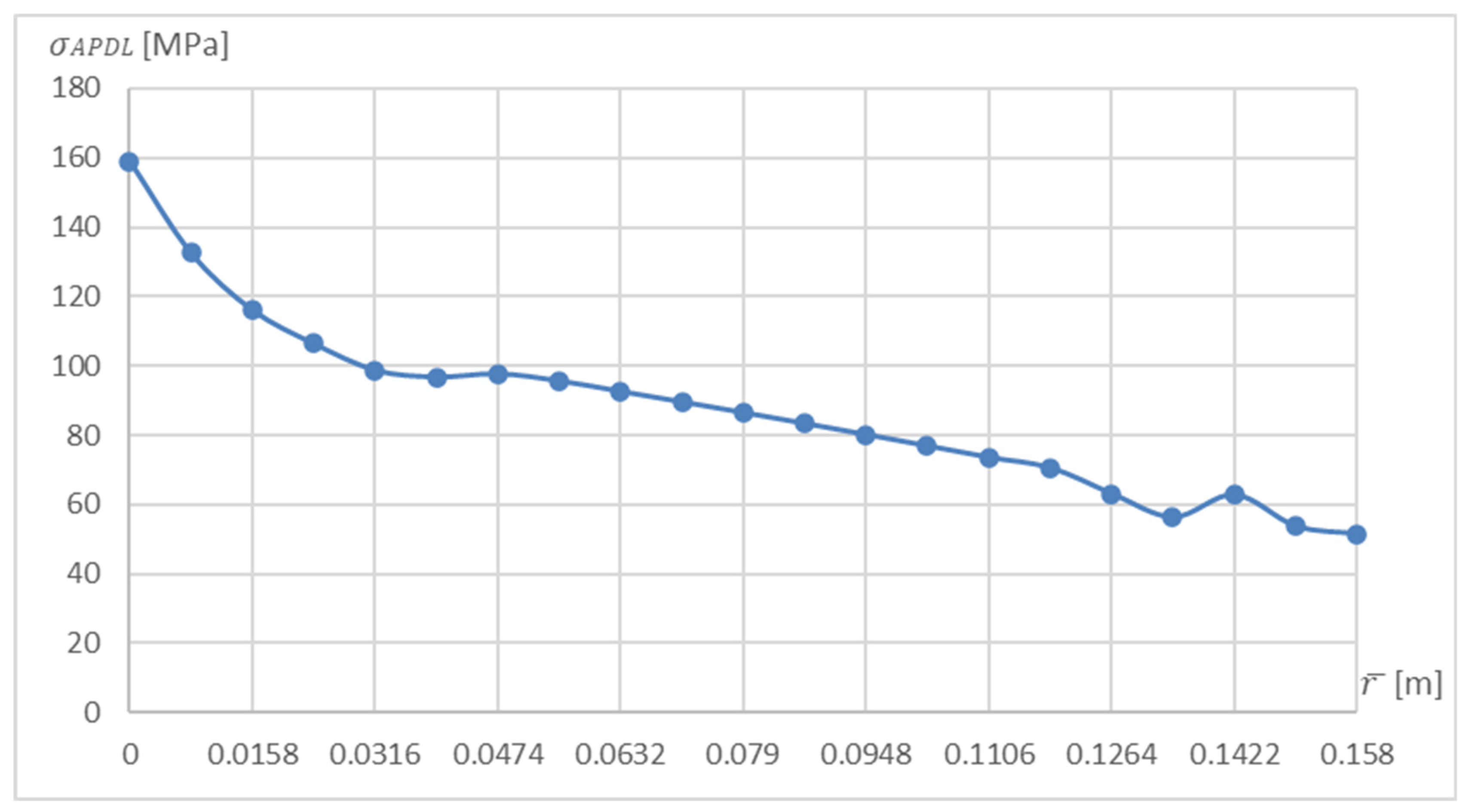

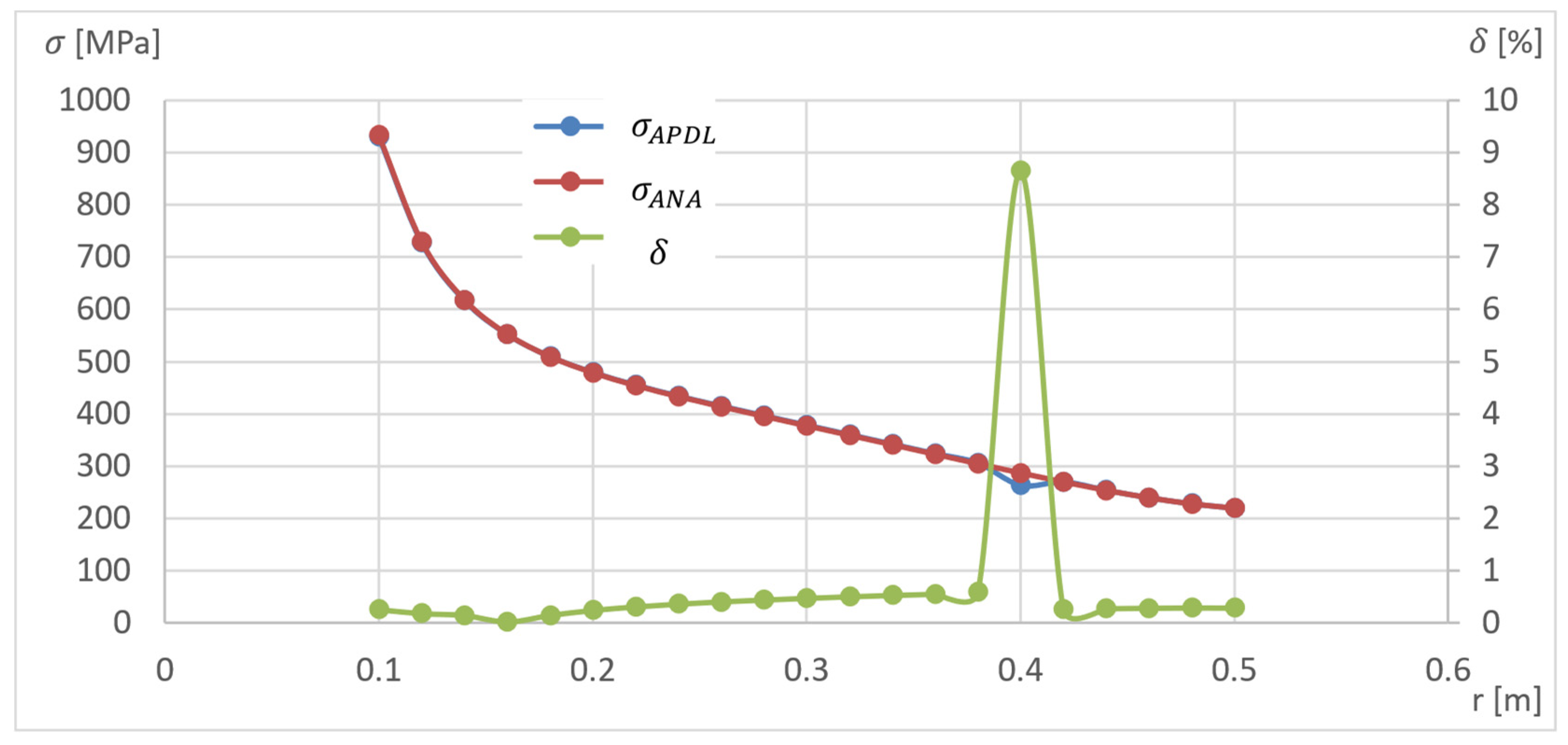

2. Use of the APDL Language to Determine the Disk Stresses

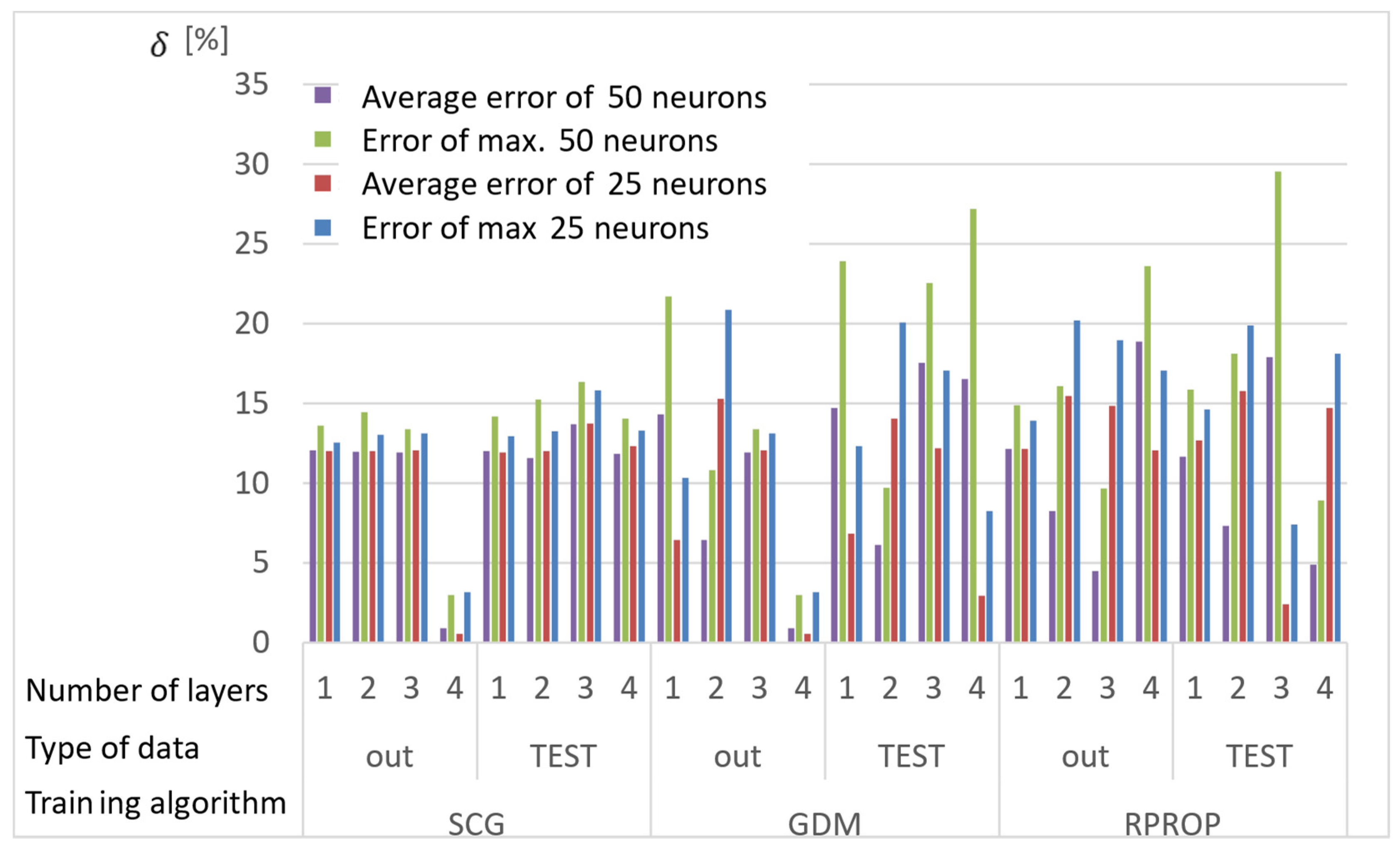

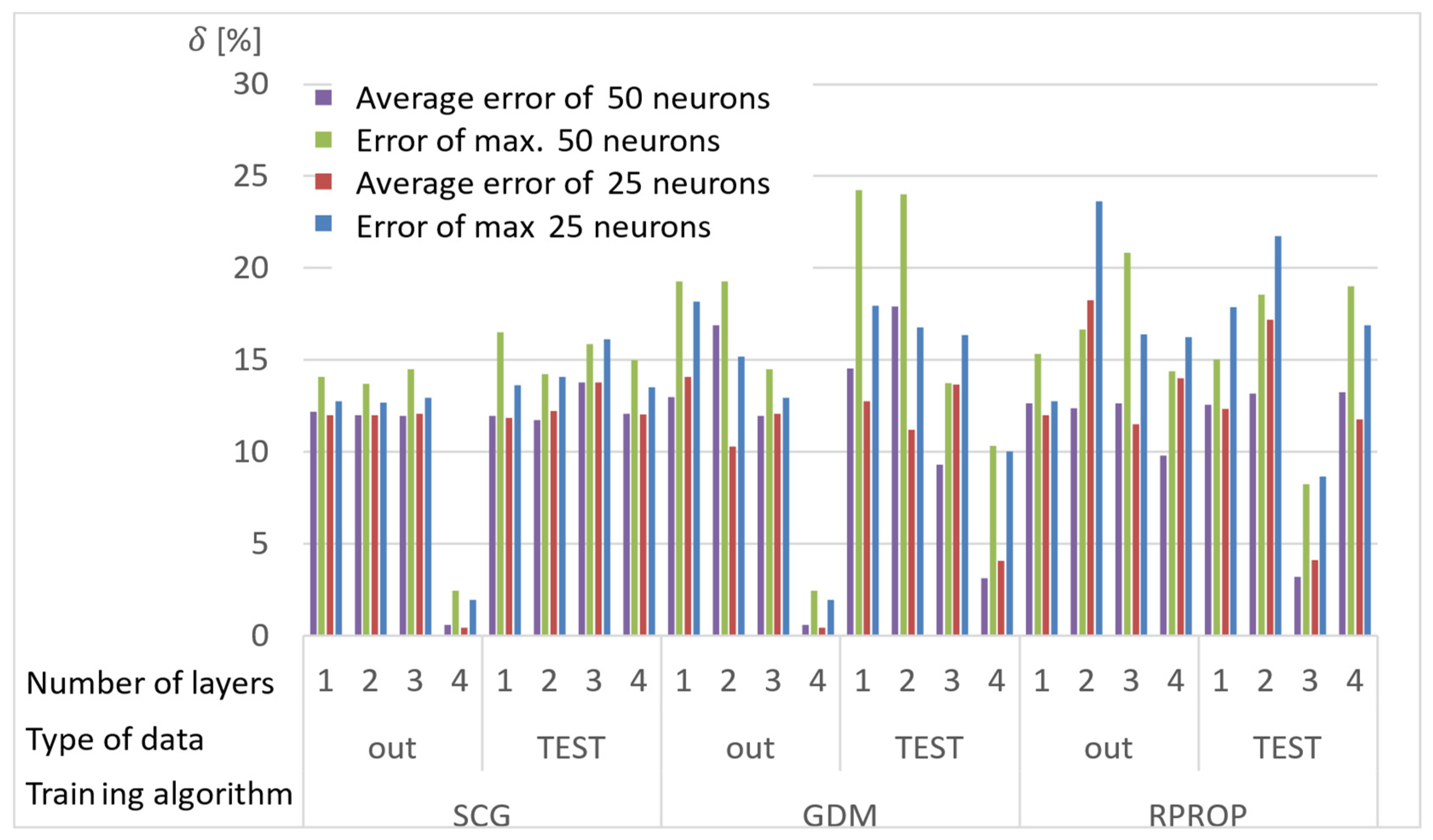

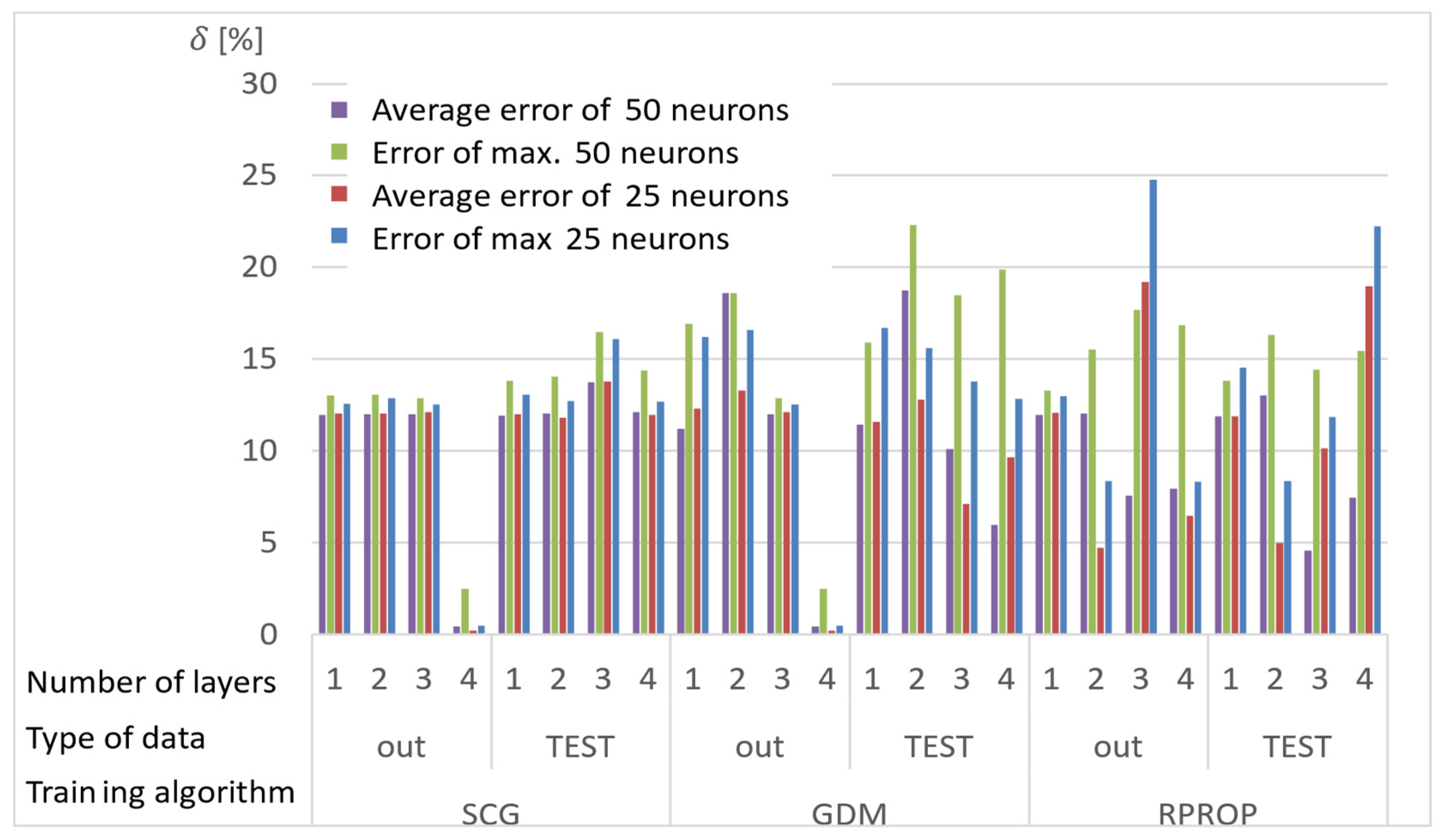

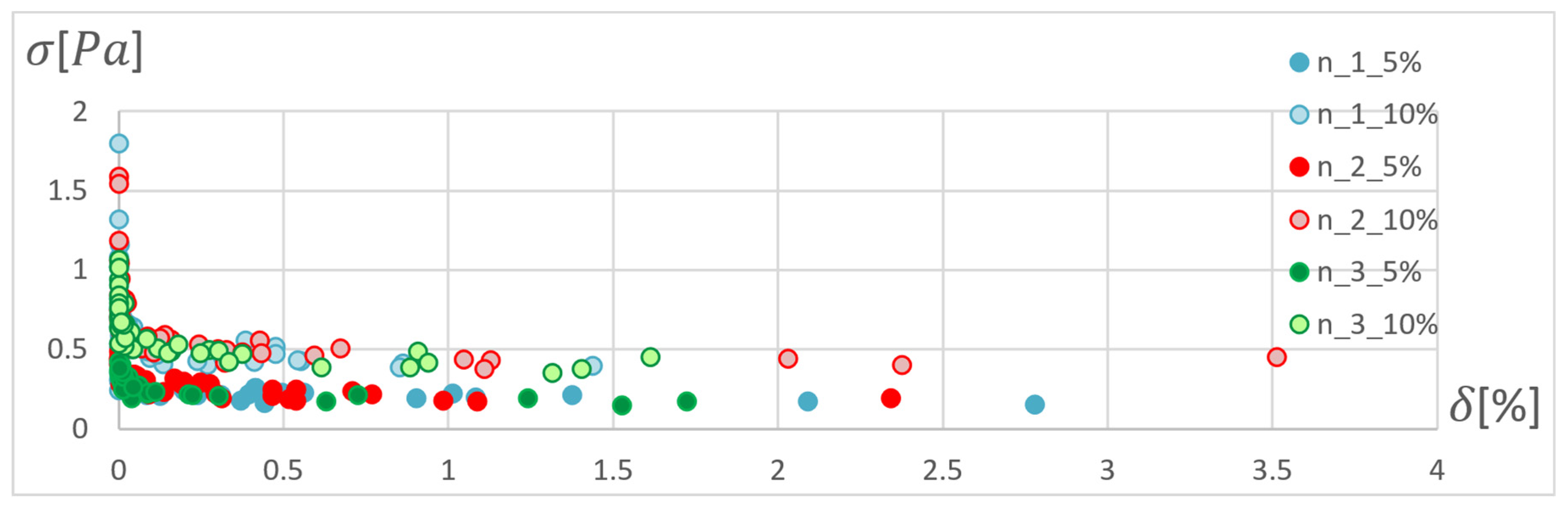

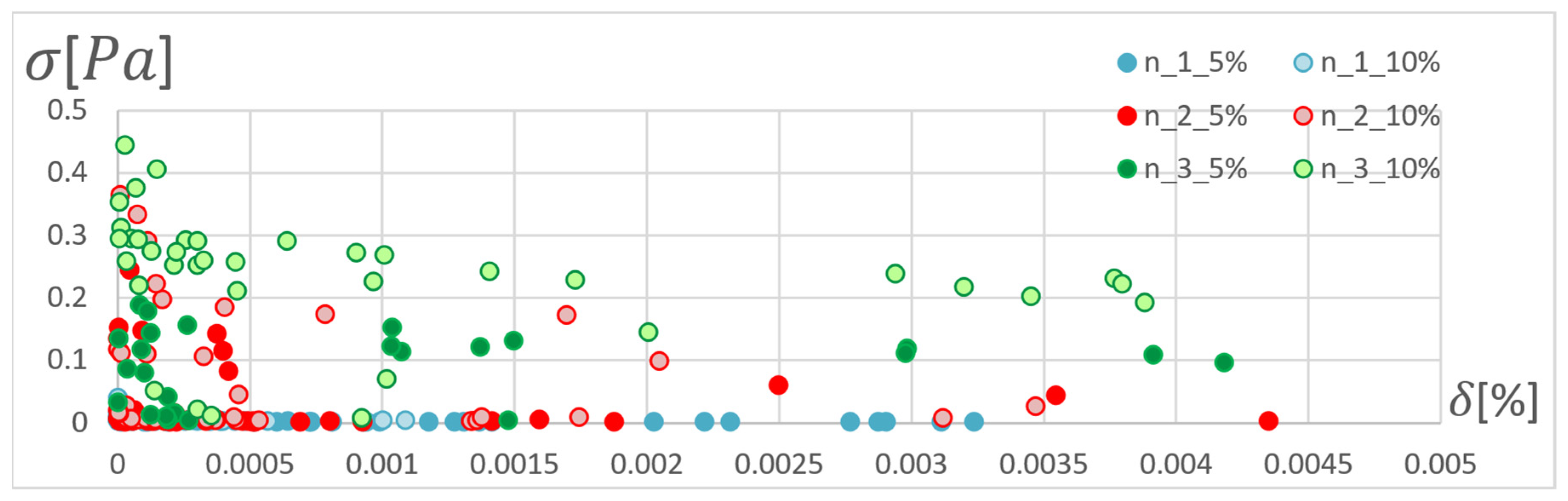

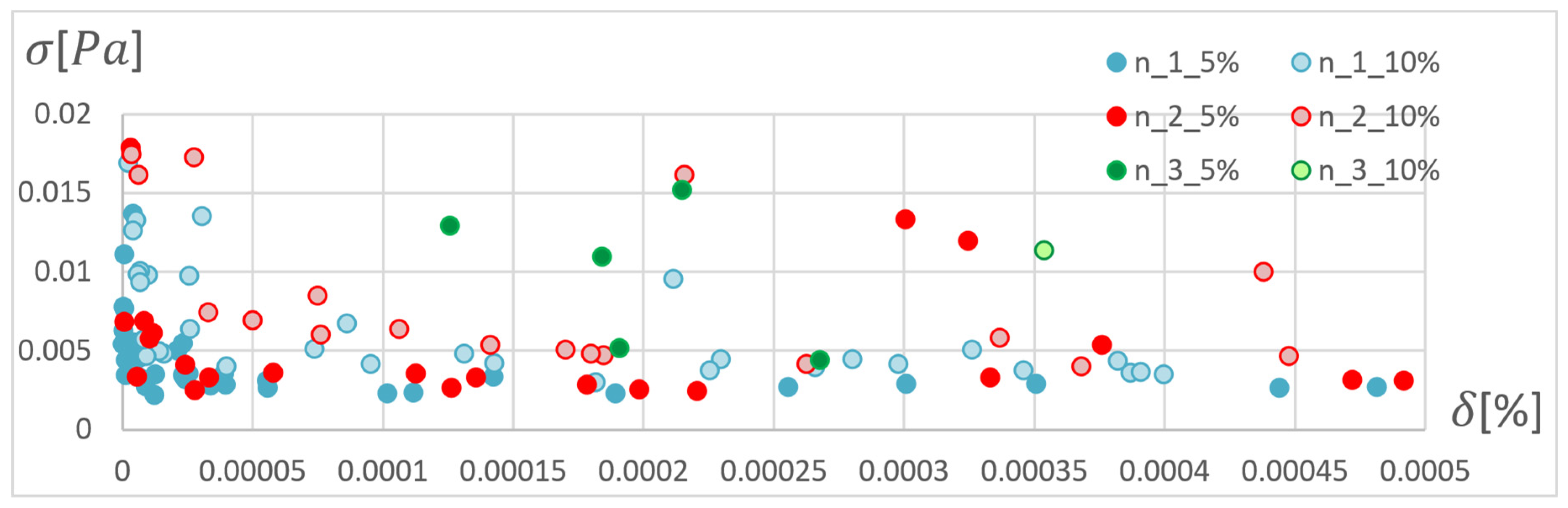

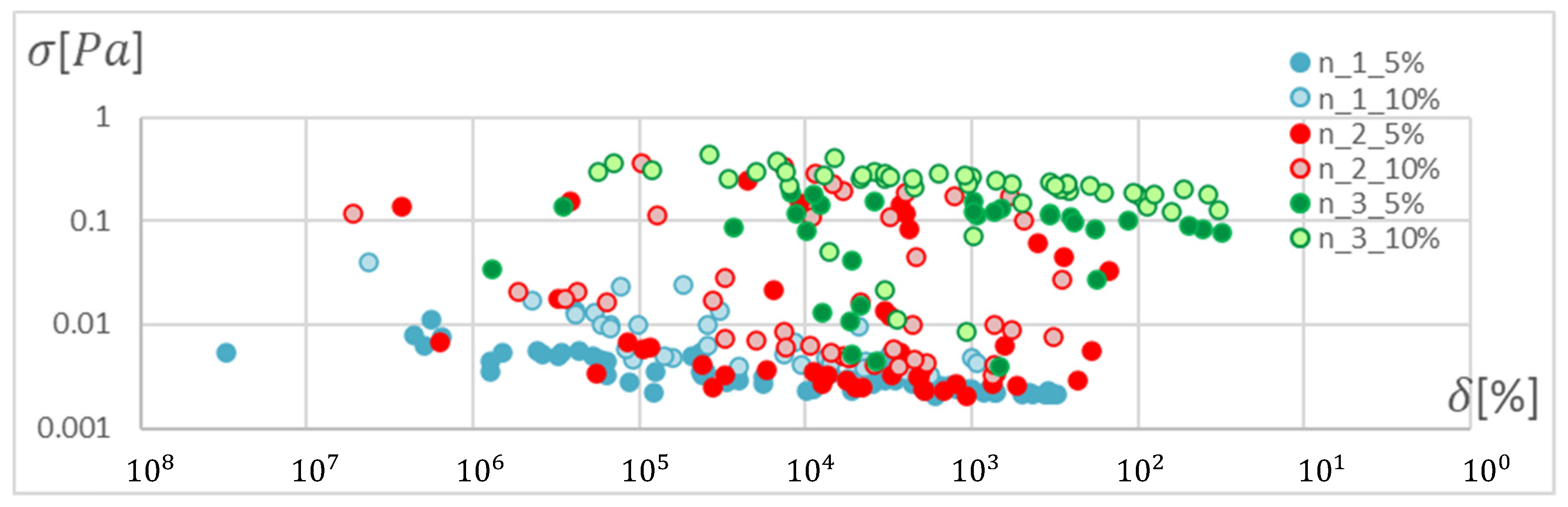

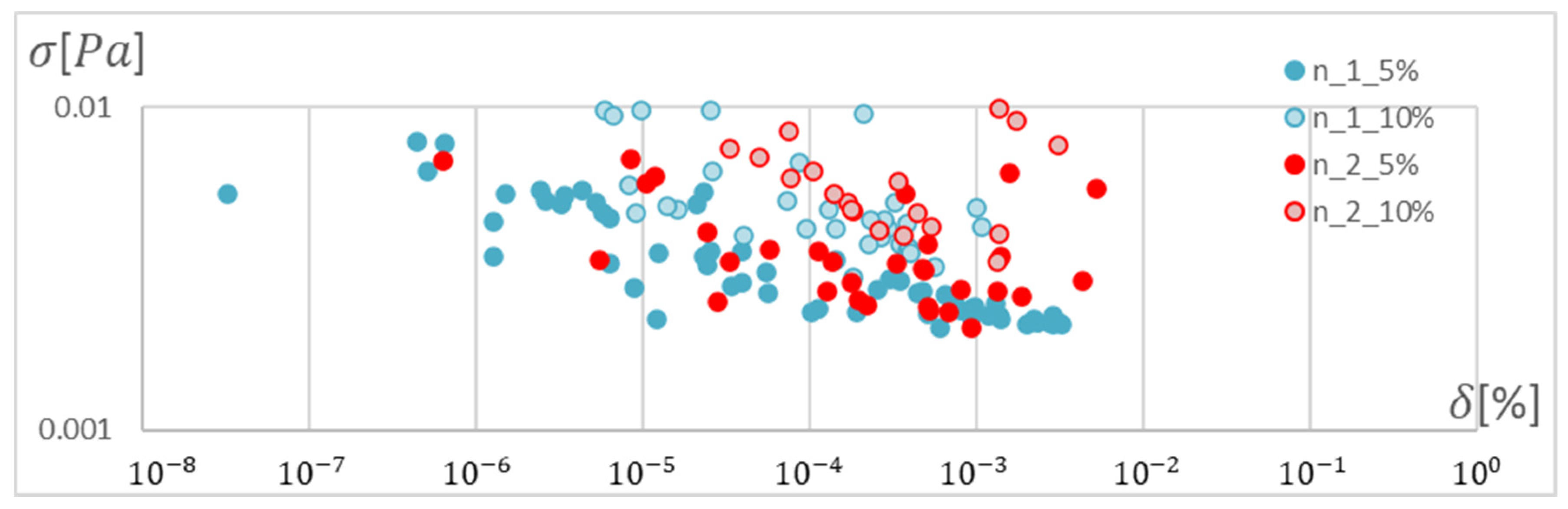

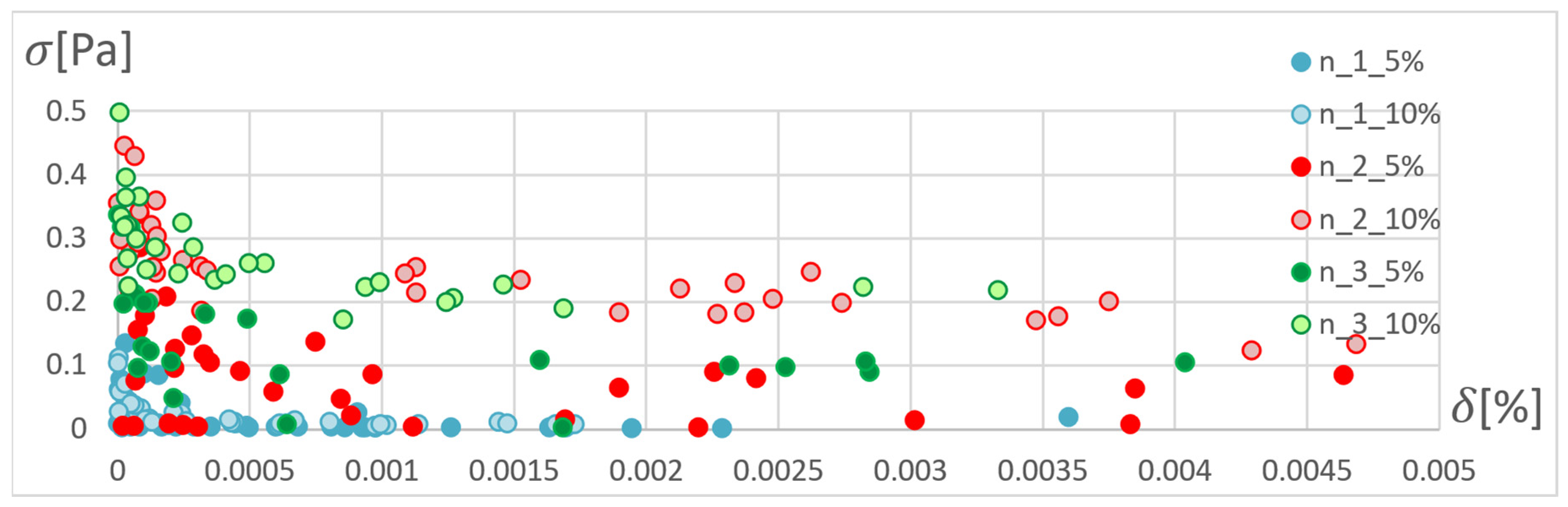

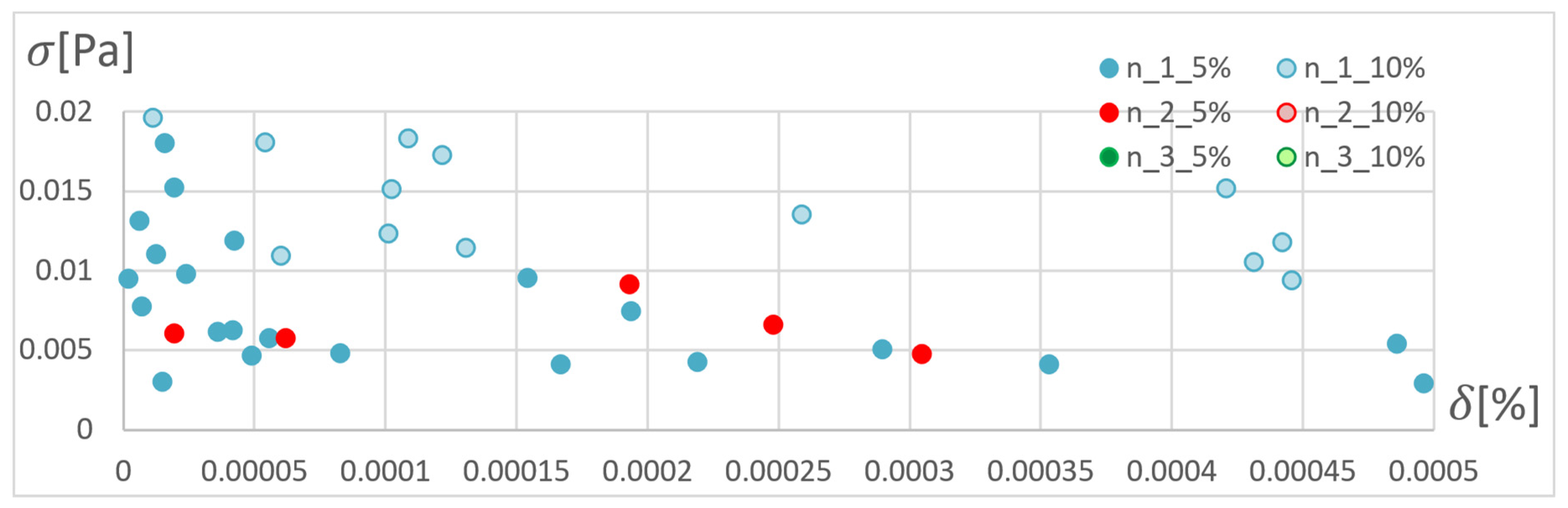

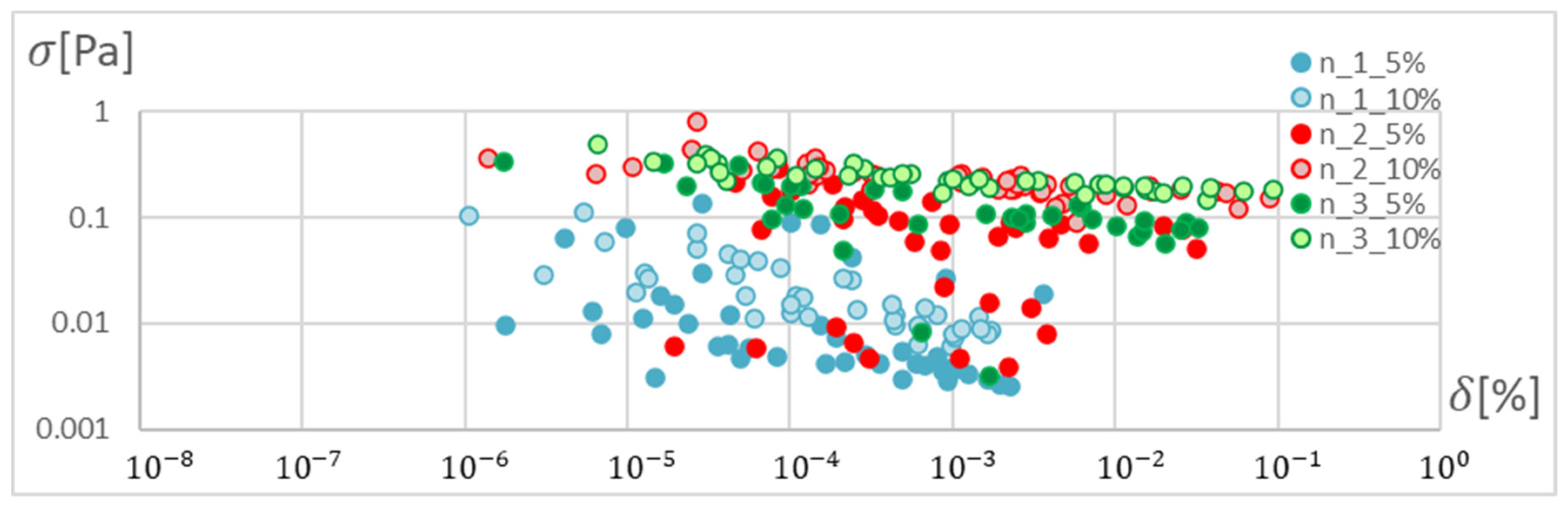

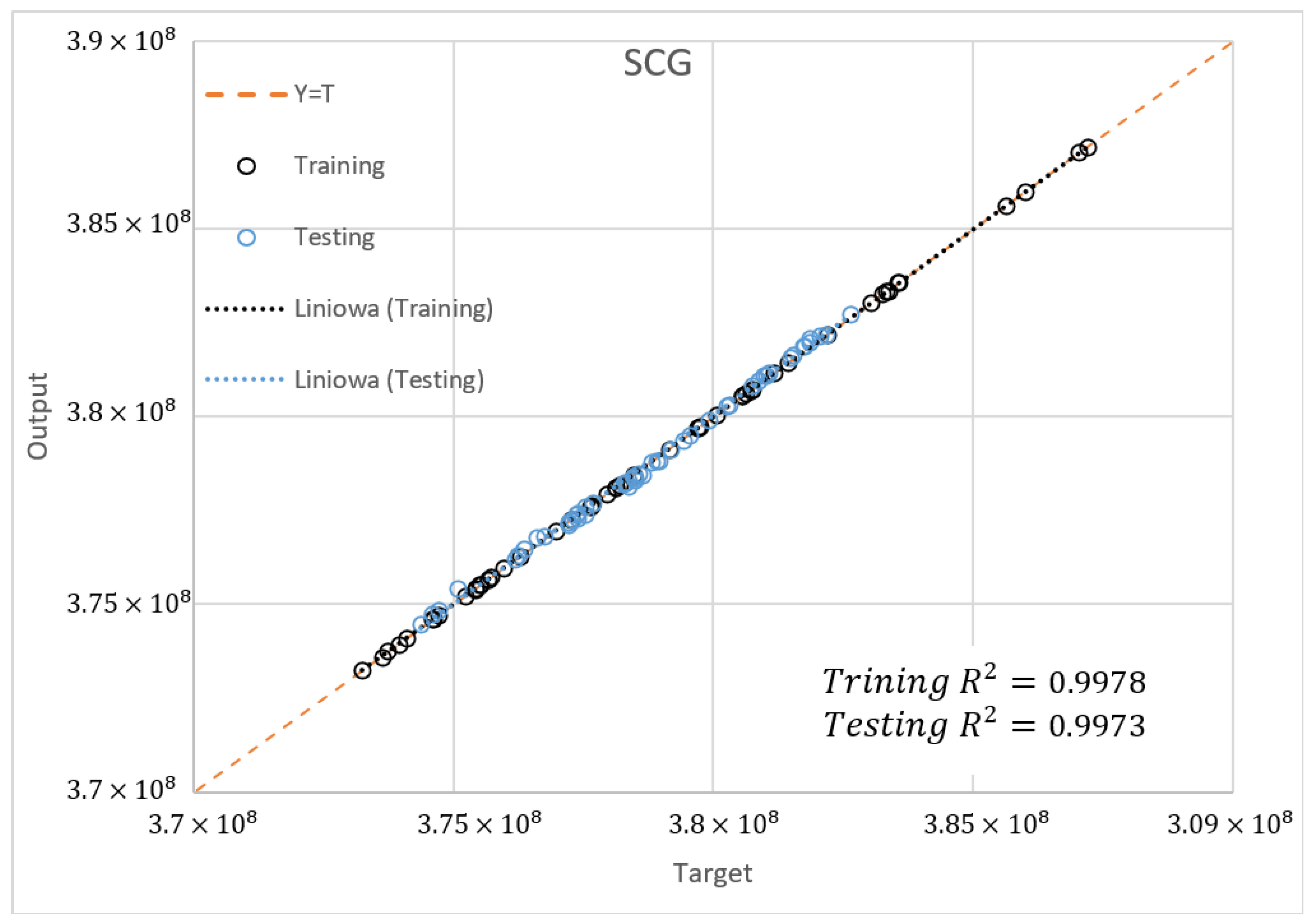

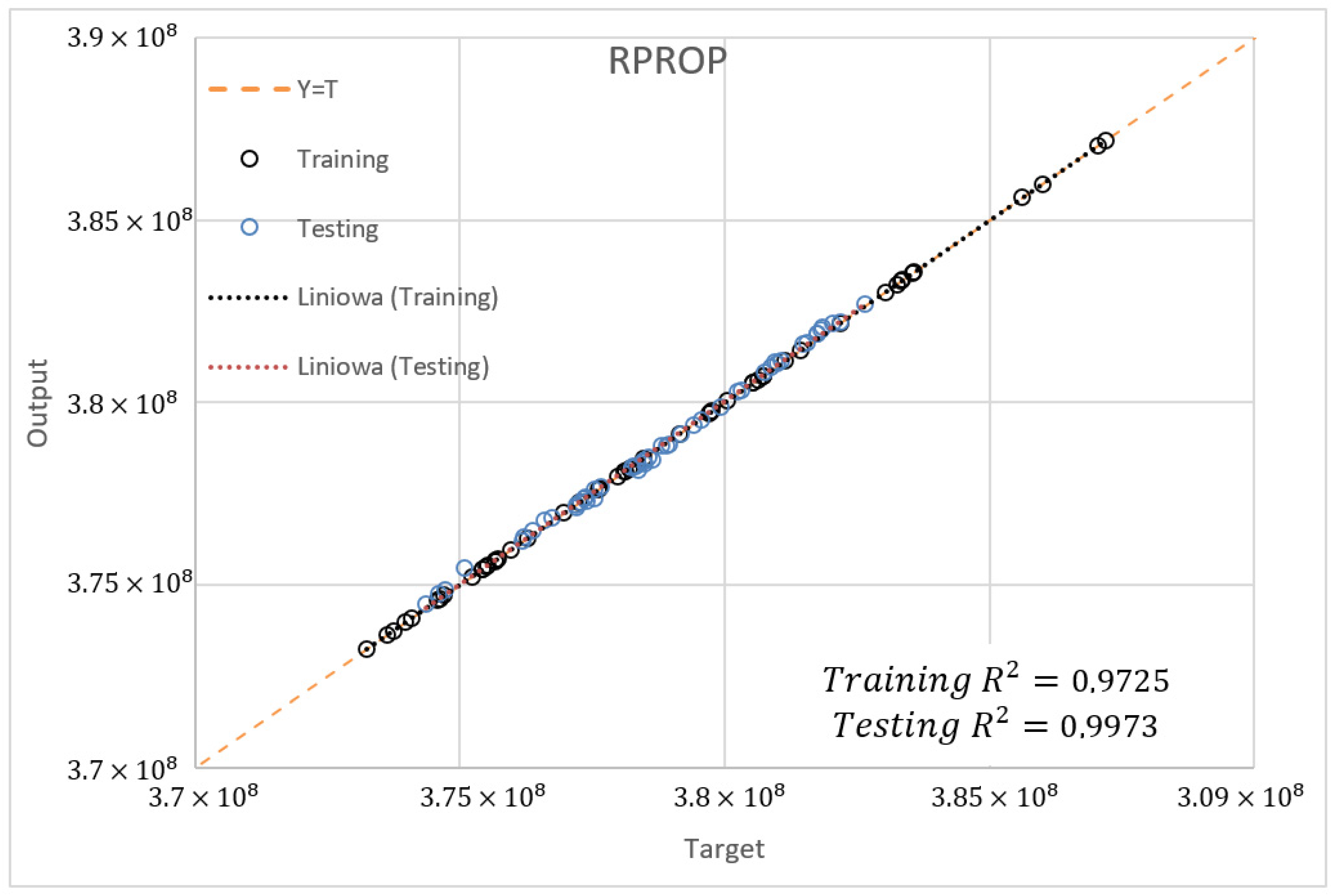

3. Selection of the Training Method

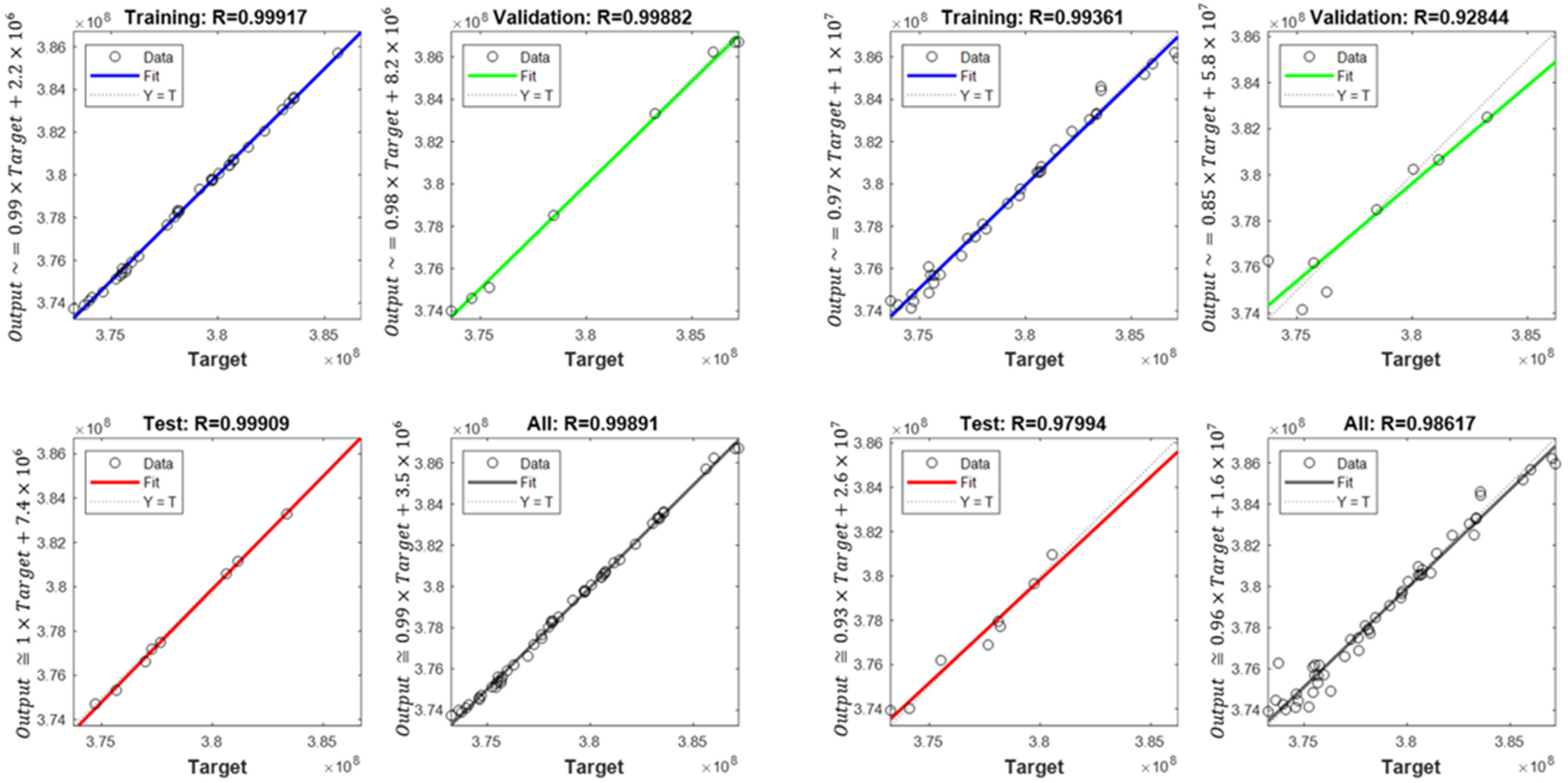

- Almost all networks produce higher errors when the layer contains 50 neurons;

- Repeated training of the network affects the quality of the results most strongly for the RPROP algorithm;

- The accuracy of the RPROP algorithm varies considerably with the number of hidden layers, and especially with whether that number is odd or even;

- For networks with one hidden layer, the SCG and RPROP algorithms give similar errors in the first iteration of the training process;

- The SCG and GDM algorithms show a rapid drop in error for four-layer networks;

- The GDM algorithm has low sensitivity to the number of hidden layers (except for the four-layer network) and to repetition of the training process.
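
For readers unfamiliar with RPROP (resilient backpropagation), the following is a minimal sketch of its sign-based weight update, written as a generic minimiser rather than as the training code used in this study. It follows the common iRPROP- variant; the function name `rprop_minimise`, the step factors 1.2 and 0.5, and the step bounds are widely used defaults assumed here, not values taken from the article.

```python
def rprop_minimise(grad, w, steps=200, step_init=0.1,
                   eta_plus=1.2, eta_minus=0.5,
                   step_min=1e-6, step_max=50.0):
    """Minimise a function with an iRPROP- style update.

    grad: callable returning the gradient as a list (hypothetical interface).
    Only the sign of each partial derivative is used, never its magnitude.
    """
    w = list(w)
    step = [step_init] * len(w)   # one adaptive step size per weight
    prev_g = [0.0] * len(w)       # gradient from the previous iteration
    for _ in range(steps):
        g = list(grad(w))
        for i in range(len(w)):
            same_sign = g[i] * prev_g[i]
            if same_sign > 0:
                # Same direction as before: accelerate.
                step[i] = min(step[i] * eta_plus, step_max)
            elif same_sign < 0:
                # Sign flipped, so a minimum was overshot: shrink the step
                # and skip the update for this weight in this iteration.
                step[i] = max(step[i] * eta_minus, step_min)
                g[i] = 0.0
            if g[i] > 0:
                w[i] -= step[i]
            elif g[i] < 0:
                w[i] += step[i]
            prev_g[i] = g[i]
    return w


# Toy usage on a quadratic bowl with its minimum at (3, -1).
w_opt = rprop_minimise(lambda w: [2 * (w[0] - 3), 20 * (w[1] + 1)], [0.0, 0.0])
```

Because the update ignores gradient magnitude, the two coordinates of the toy problem converge at a similar pace even though their curvatures differ by a factor of ten.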

4. Selection of an ANN Structure
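
A Pareto-based selection keeps only those candidate structures that no other candidate beats on every criterion at once. The sketch below is a minimal illustration, assuming two criteria that are both minimised (number of neurons and prediction error). The pairing of criteria, the function name `pareto_front`, and the sample values are hypothetical and are not results from this study.

```python
def dominates(b, a):
    """True if b is at least as good as a on every criterion and strictly
    better on at least one (all criteria are minimised)."""
    return (all(x <= y for x, y in zip(b, a))
            and any(x < y for x, y in zip(b, a)))


def pareto_front(candidates):
    """Return the candidates that are not dominated by any other candidate."""
    return [a for i, a in enumerate(candidates)
            if not any(dominates(b, a)
                       for j, b in enumerate(candidates) if j != i)]


# Hypothetical (neurons, error) pairs, for illustration only.
candidates = [(2, 0.30), (3, 0.20), (4, 0.25), (5, 0.10), (6, 0.10)]
front = pareto_front(candidates)
```

In this toy set, `(4, 0.25)` is dropped because `(3, 0.20)` is both smaller and more accurate, and `(6, 0.10)` is dropped because `(5, 0.10)` reaches the same error with fewer neurons.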

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


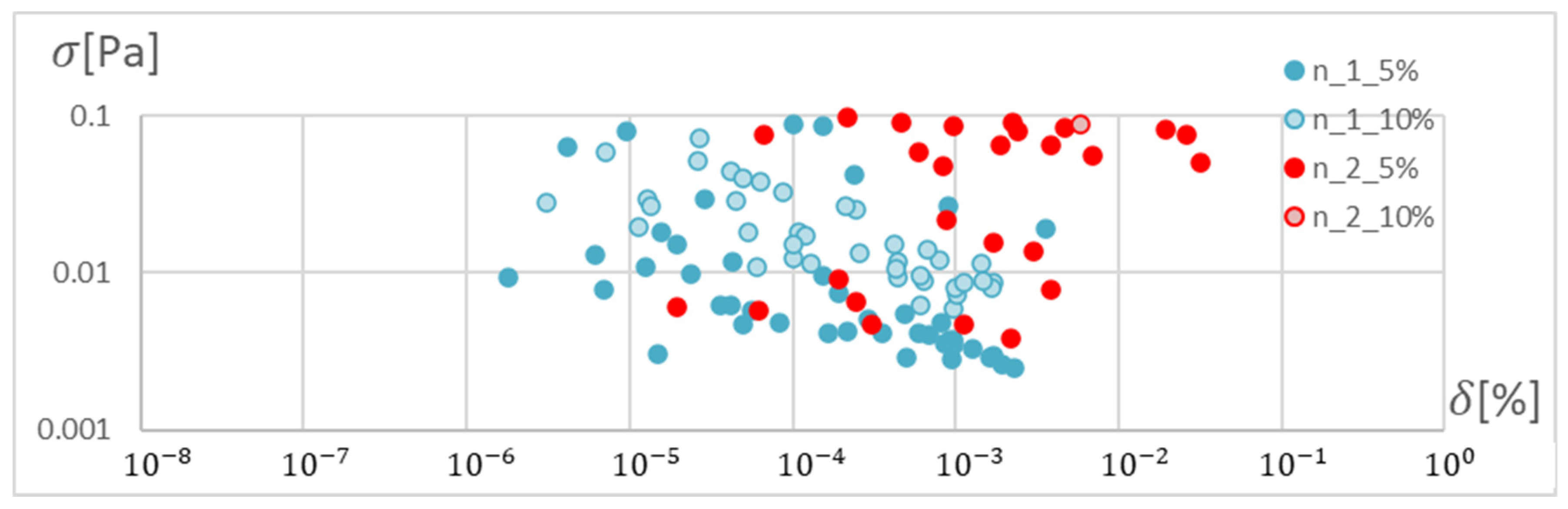

| Number of Hidden Layers | | 1 | 1 | 1 | 1 | 2 | 2 | 2 | 2 | 3 | 3 | 3 | 3 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Database span | | 5% | 5% | 10% | 10% | 5% | 5% | 10% | 10% | 5% | 5% | 10% | 10% |
| Average number of neurons in a layer | SCG | 2 | 3 | 5 | 8 | 4 | 7 | 23 | 27 | 8 | 21 | 19 | 5 |
| Average number of neurons in a layer | RPROP | 3 | 3 | 12 | 6 | 16 | 6 | 17 | 22 | 9 | 33 | 23 | 6 |

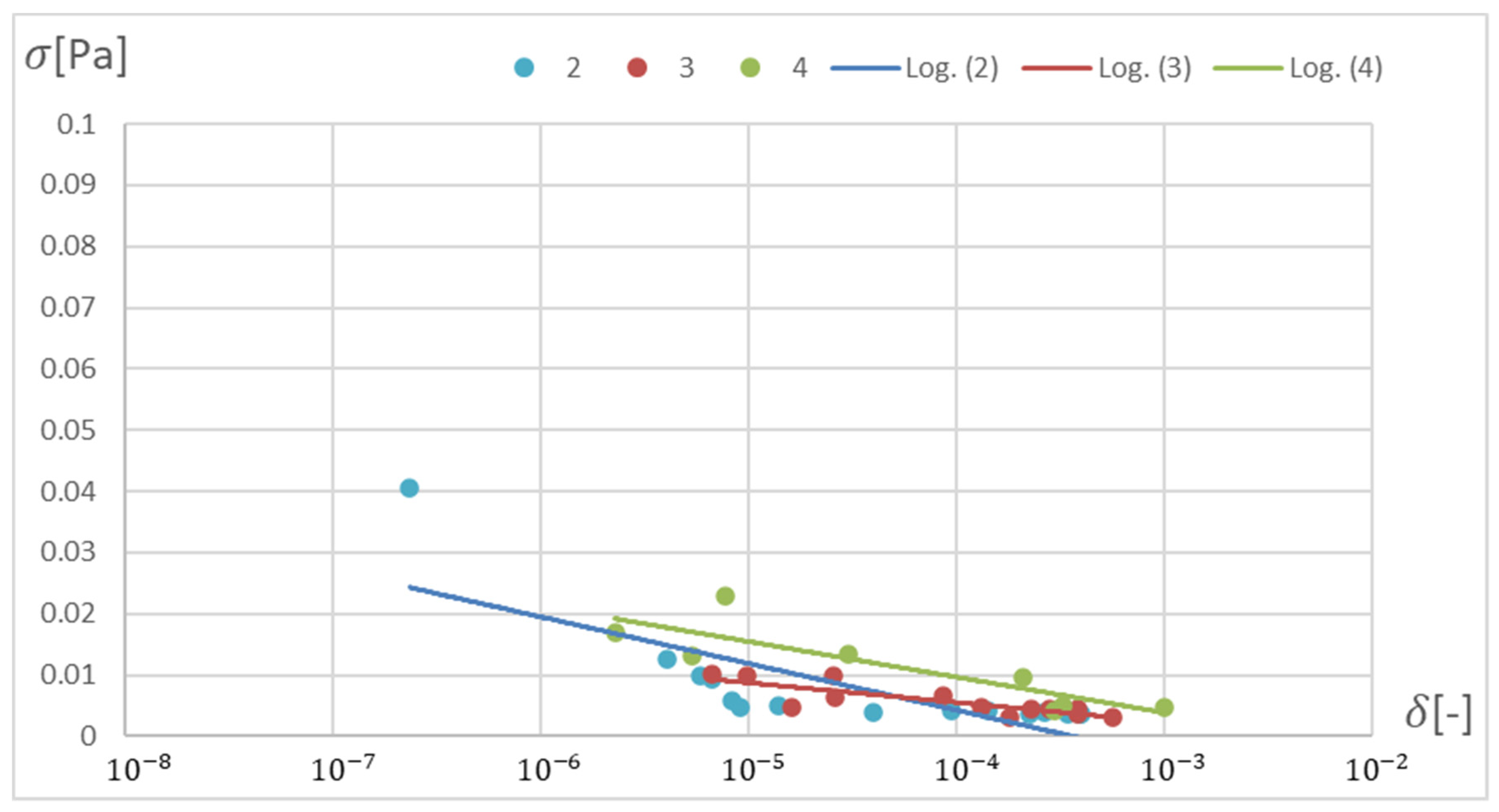

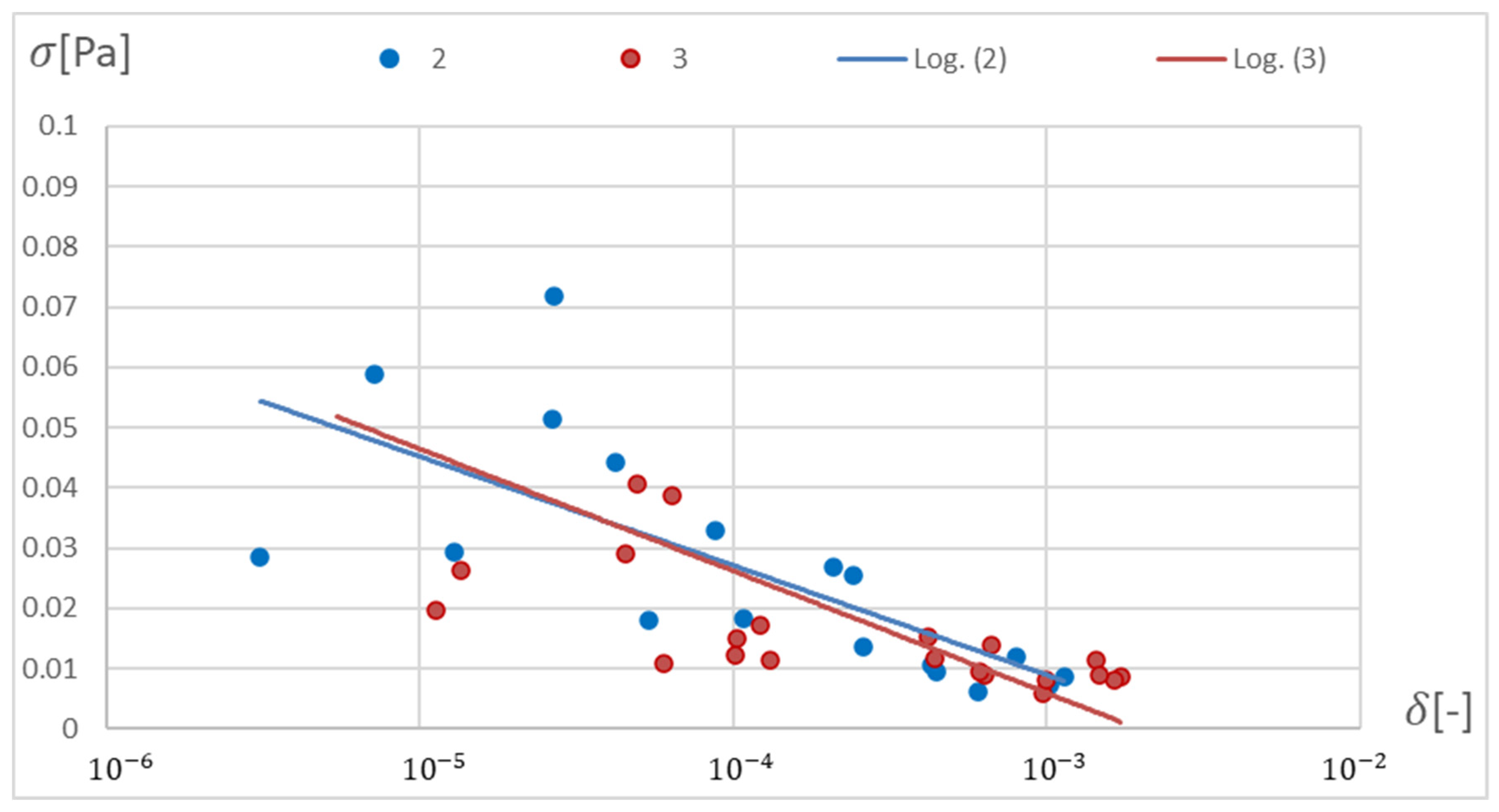

| Number of Neurons | SCG | SCG | RPROP | RPROP |
|---|---|---|---|---|
| 2 | 1.30 × 10⁻⁴ | 1.30 × 10⁻⁴ | 3.07 × 10⁻⁴ | 2.53 × 10⁻² |
| 3 | 1.79 × 10⁻⁴ | 1.79 × 10⁻⁴ | 5.34 × 10⁻⁴ | 5.34 × 10⁻⁴ |
| 4 | 3.30 × 10⁻⁴ | 3.30 × 10⁻⁴ | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kozakiewicz, A.; Kieszek, R. Artificial Neural Network Structure Optimisation in the Pareto Approach on the Example of Stress Prediction in the Disk-Drum Structure of an Axial Compressor. Materials 2022, 15, 4451. https://doi.org/10.3390/ma15134451
