Prediction of Compressive Strength of Concrete Specimens Based on Interpretable Machine Learning

Abstract

:1. Introduction

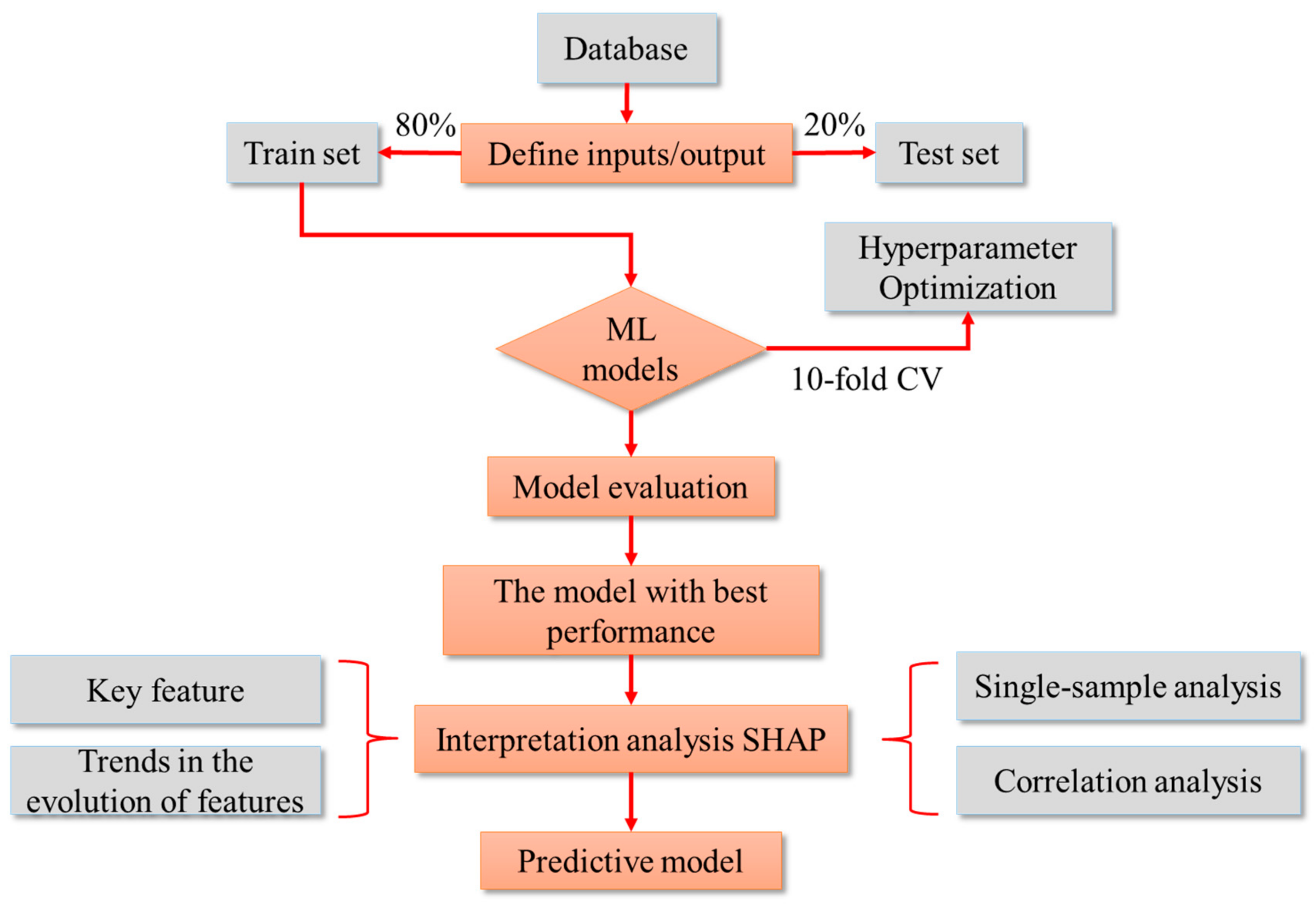

2. Research Methods and Modeling Process

2.1. Algorithmic Principle

2.2. Evaluation Metrics

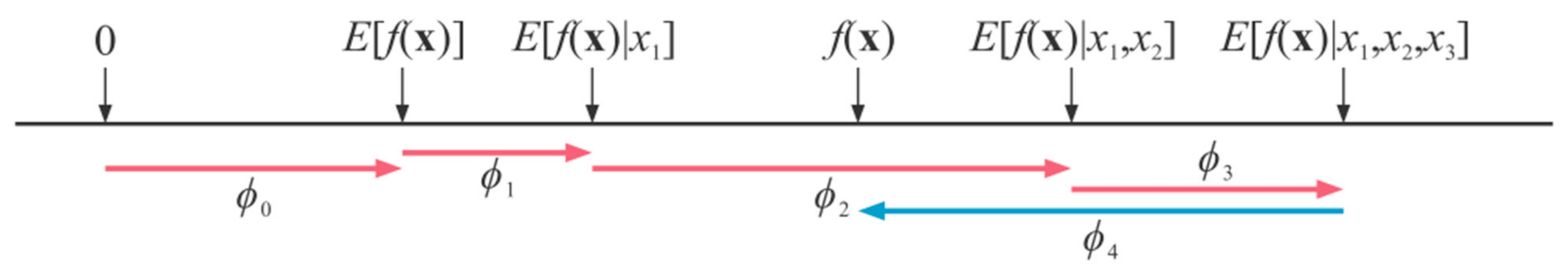

2.3. The Interpretable Method

2.4. Implementation of ML Methods

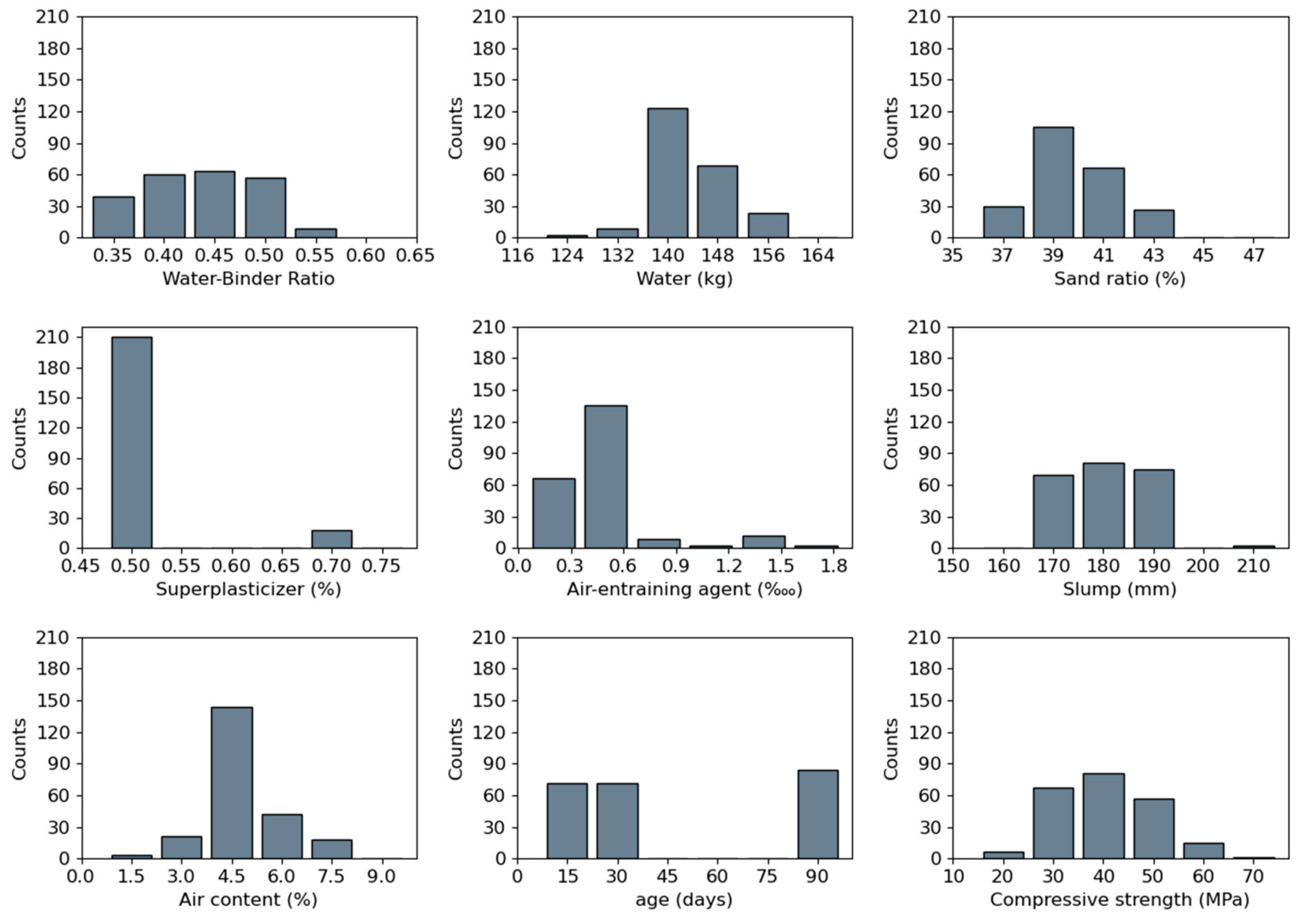

3. Experimental Database

3.1. Selection of Input and Output Features

3.2. Details of the Database

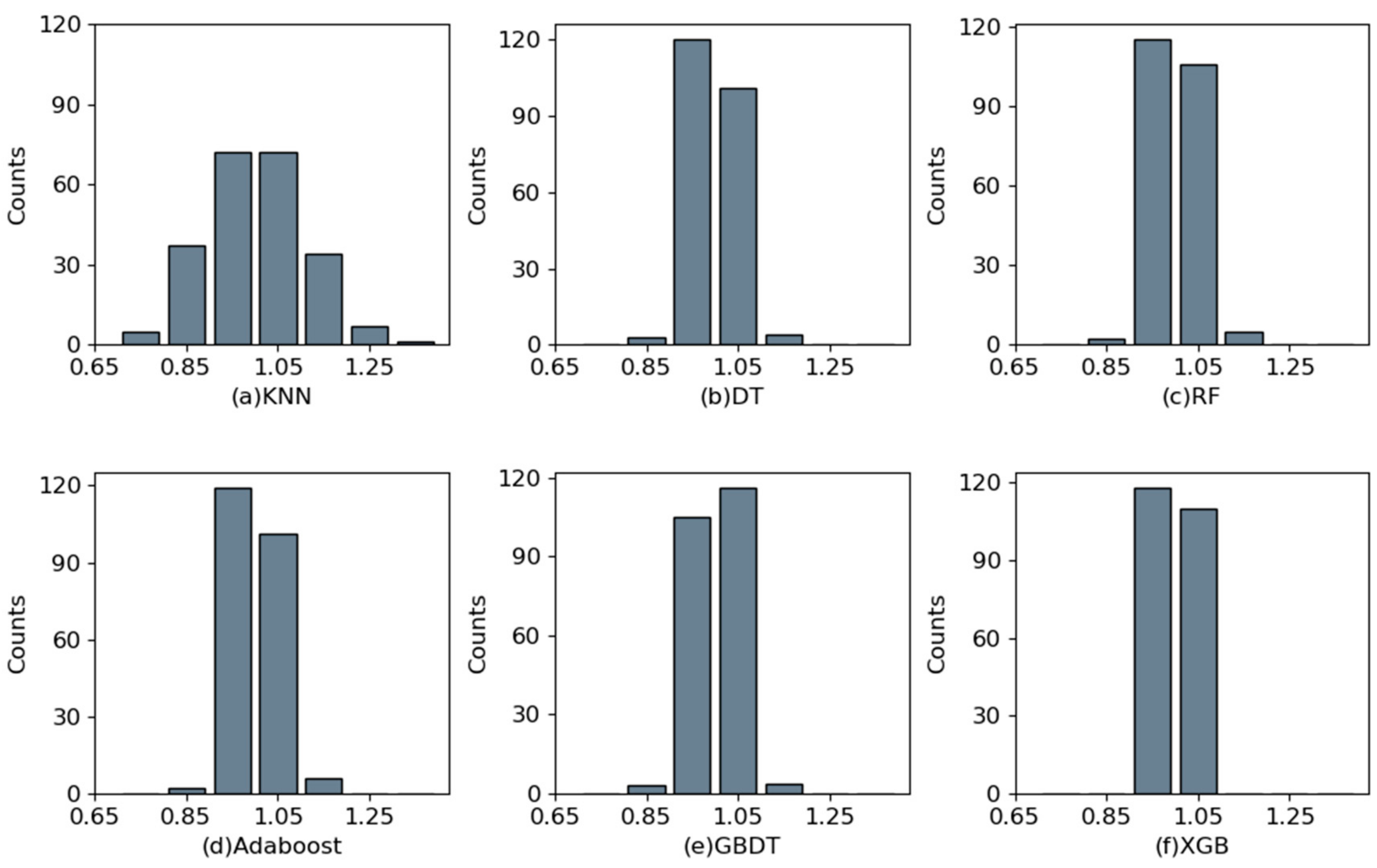

4. Model Results and Discussion

5. Model’s Interpretable Analysis

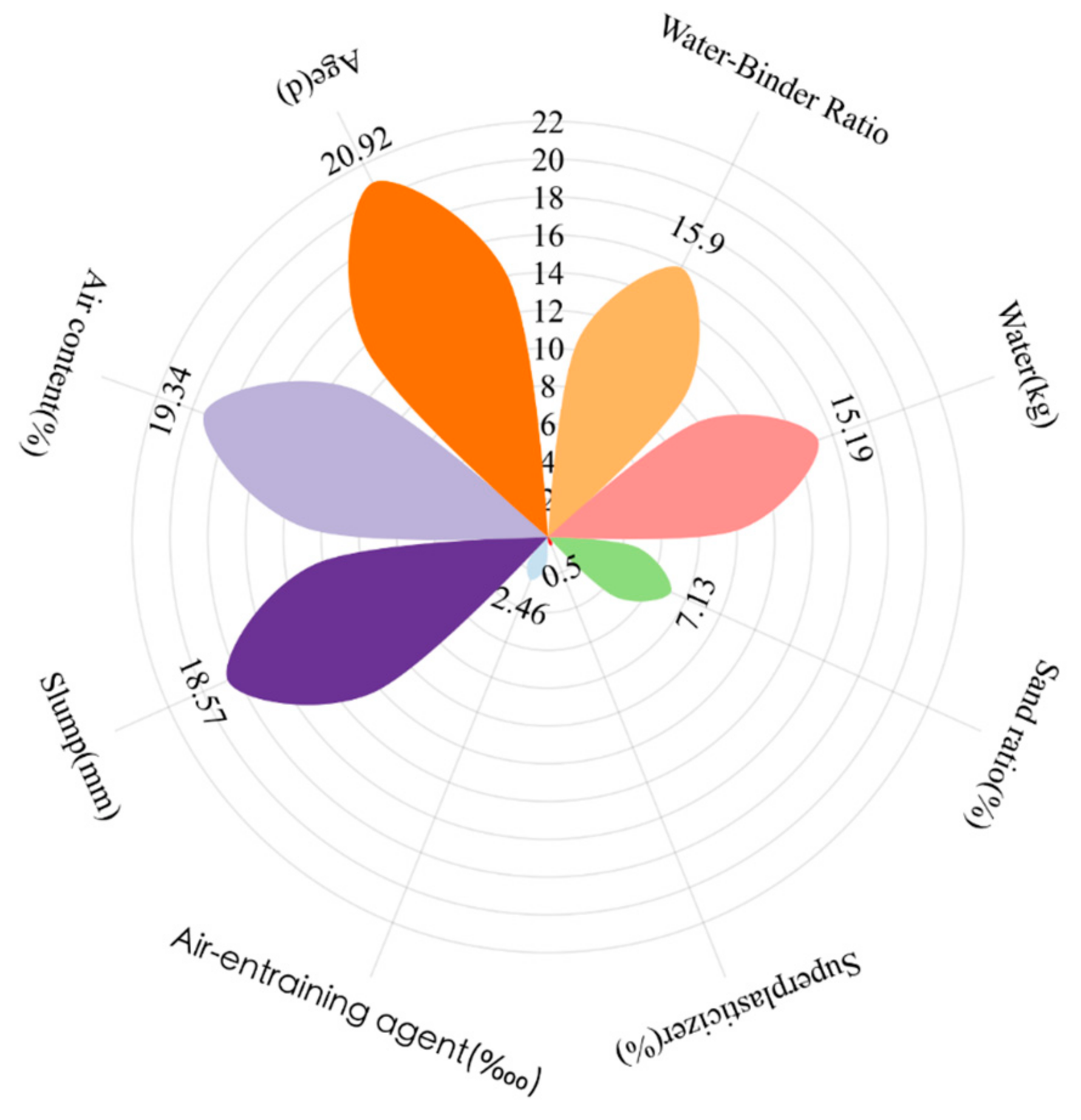

5.1. Key Features

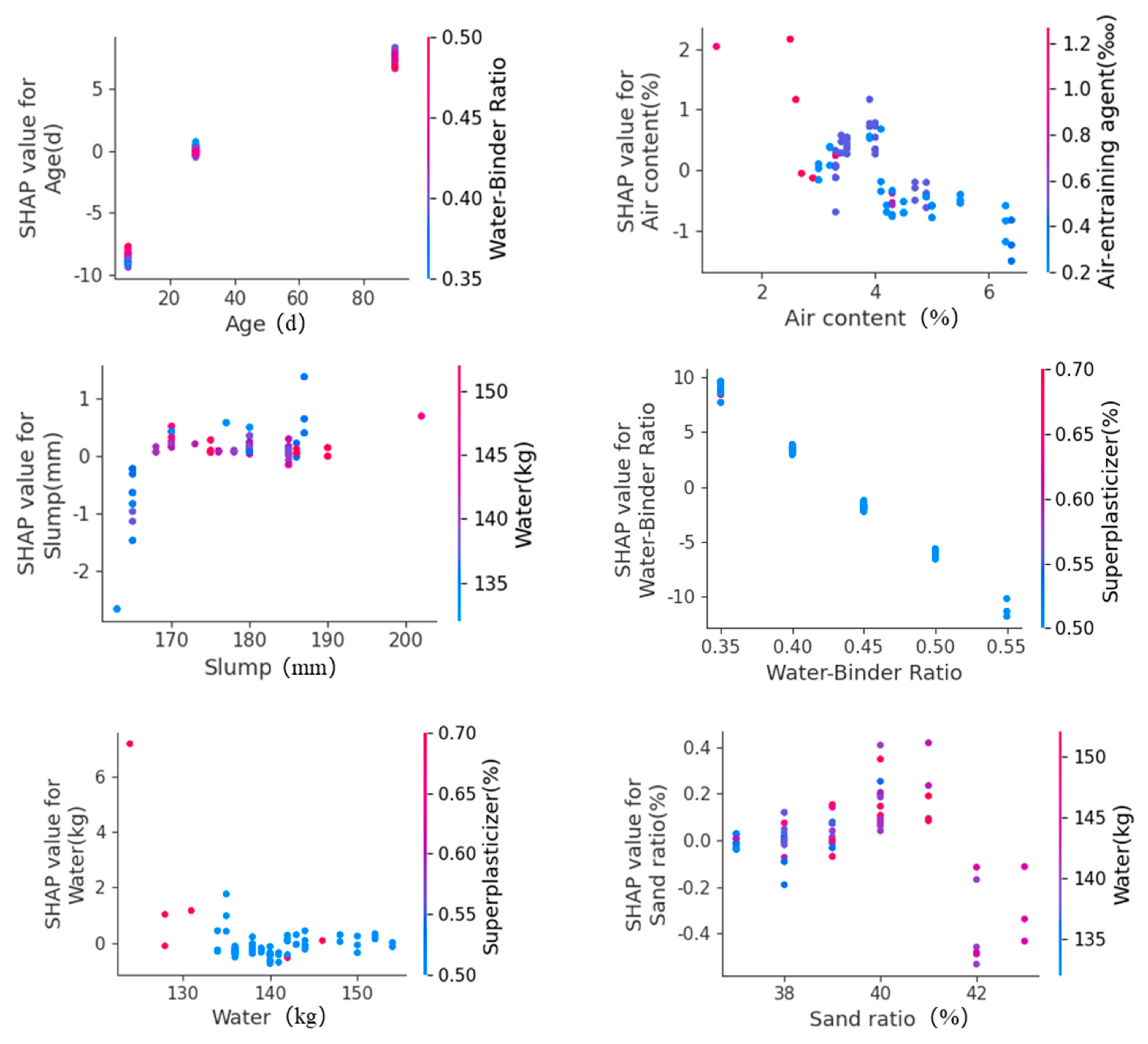

5.2. Trends in the Evolution of Features

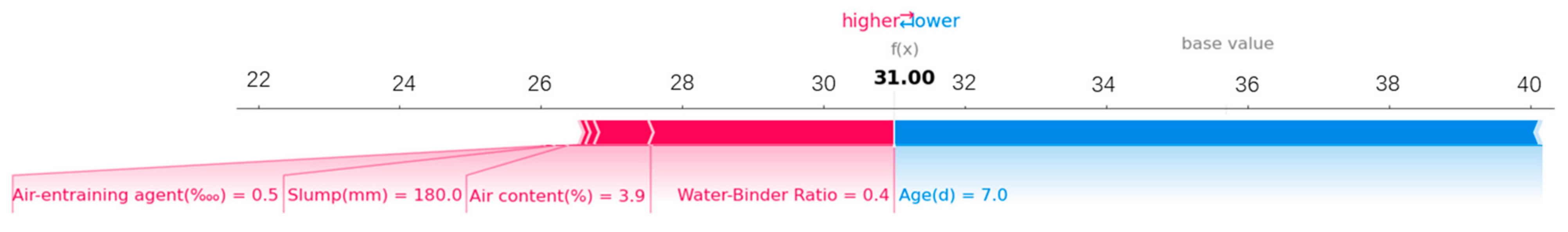

5.3. Single-Sample Analysis

5.4. Correlation Analysis

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ranjbar, I.; Toufigh, V.; Boroushaki, M. A combination of deep learning and genetic algorithm for predicting the compressive strength of high-performance concrete. Struct. Concr. 2022, 23, 2405–2418. [Google Scholar] [CrossRef]

- Adhikary, B.B.; Mutsuyoshi, H. Prediction of shear strength of steel fiber RC beams using neural networks. Constr. Build. Mater. 2006, 20, 801–811. [Google Scholar] [CrossRef]

- Mansouri, I.; Kisi, O. Prediction of debonding strength for masonry elements retrofitted with FRP composites using neuro-fuzzy and neural network approaches. Compos. B Eng. 2015, 70, 247–255. [Google Scholar] [CrossRef]

- Luo, H.; Paal, S.G. Machine learning-based backbone curve model of reinforced concrete columns subjected to cyclic loading reversals. J. Comput. Civ. Eng. 2018, 32, 04018042. [Google Scholar] [CrossRef]

- Feng, D.C.; Wang, W.J.; Mangalathu, S.; Hu, G.; Wu, T. Implementing ensemble learning methods to predict the shear strength of RC deep beams with/without web reinforcements. Eng. Struct. 2021, 235, 111979. [Google Scholar] [CrossRef]

- Mangalathua, S.; Karthikeyanb, K.; Feng, D.C.; Jeon, J.S. Machine-learning interpretability techniques for seismic performance assessment of infrastructure systems. Eng. Struct. 2022, 250, 112883. [Google Scholar] [CrossRef]

- Mangalathua, S.; Shinb, H.; Choic, E.; Jeon, J.S. Explainable machine learning models for punching shear strength estimation of flat slabs without transverse reinforcement. J. Build. Eng. 2021, 39, 102300. [Google Scholar] [CrossRef]

- Vakhshouri, B.; Nejadi, S. Prediction of compressive strength of self-compacting concrete by ANFIS models. Neurocomputing 2018, 280, 13–22. [Google Scholar] [CrossRef]

- Namyong, J.; Sangchun, Y.; Hongbum, C. Prediction of compressive strength of in-situ concrete based on mixture proportions. J. Asian Archit. Build. Eng. 2004, 3, 9–16. [Google Scholar] [CrossRef]

- Hlobil, M.; Smilauer, V.; Chanvillard, G. Micromechanical multiscale fracture model for compressive strength of blended cement pastes. Cem. Concr. Res. 2016, 83, 188–202. [Google Scholar] [CrossRef]

- Kabir, A.; Hasan, M.; Miah, K. Predicting 28 days compressive strength of concrete from 7 days test result. In Proceedings of the International Conference on Advances in Design and Construction of Structures, Bangalore, India, 19–20 October 2012; pp. 18–22. [Google Scholar]

- Zain MF, M.; Abd, S.M. Multiple regression model for compressive strength prediction of high performance concrete. J. Appl. Sci. 2009, 9, 155–160. [Google Scholar] [CrossRef]

- Hamid, Z.N.; Jamali, A.; Narimanzadeh, N.; Akbarzadeh, H. A polynomial model for concrete compressive strength prediction using GMDH-type neural networks and genetic algorithm. In Proceedings of the 5th WSEAS International Conference on System Science and Simulation in Engineering, Tenerife, Canary Islands, Spain, 16–18 December 2006; pp. 13–18. [Google Scholar]

- Li, Q.F.; Song, Z.M. High-performance concrete strength prediction based on ensemble learning. Constr. Build. Mater. 2022, 324, 126694. [Google Scholar] [CrossRef]

- Moon, S.; Munira, C.A. Utilization of prior information in neural network training for improving 28-day concrete strength prediction. J. Constr. Eng. Manag. 2021, 147, 04021028. [Google Scholar] [CrossRef]

- Feng, D.C.; Liu, Z.T.; Wang, X.D.; Chen, Y.; Chang, J.Q.; Wei, D.F.; Jiang, Z.M. Machine learning-based compressive strength prediction for concrete: An adaptive boosting approach. Constr. Build. Mater. 2020, 230, 117000. [Google Scholar] [CrossRef]

- Wang, M.; Zhao, G.; Liang, W.; Wang, N. A comparative study on the development of hybrid SSA-RF and PSO-RF models for predicting the uniaxial compressive strengthof rocks. Case Stud. Constr. Mater. 2023, 18, e02191. [Google Scholar]

- Ni, H.-G.; Wang, J.-Z. Prediction of compressive strength of concrete by neural networks. Cem. Concr. Res. 2000, 30, 1245–1250. [Google Scholar] [CrossRef]

- Tran, V.Q.; Dang, V.Q.; Ho, L. Evaluating compressive strength of concrete made with recycled concreteaggregates using machine learning approach. Constr. Build. Mater. 2022, 323, 126578. [Google Scholar] [CrossRef]

- Dutta, S.; Samui, P.; Kim, D. Comparison of machine learning techniques to predict compressive strength of concrete. Comput. Concr. 2018, 21, 463–470. [Google Scholar]

- Zhang, B.W.; Geng, X.L. Prediction of concrete compressive strength based on tuna swarm algorithm optimization extreme learning machine. Appl. Res. Comput. 2024, 41, 444–449. [Google Scholar] [CrossRef]

- Xue, G.B.; Hu, A.L.; Wei, Y.; Fneg, Y.J.; Liang, K.; Li, L.H. Compressive Strength Predict. Concr. Based Cost-Sensitive Coefficients. J. Xi’an Univ. Technol. 2022, 38, 588–593. [Google Scholar] [CrossRef]

- Al-Shamiri, A.K.; Kim, J.H.; Yuan, T.-F.; Yoon, Y.S. Modeling the compressive strength of high-strength concrete: An extreme learning approach. Constr. Build. Mater. 2019, 208, 204–219. [Google Scholar] [CrossRef]

- Bentz, D.P. Modelling cement microstructure: Pixels, particles, and property prediction. Mater. Struct. Constr. 1999, 32, 187–195. [Google Scholar] [CrossRef]

- Karni, J. Prediction of compressive strength of concrete. Mater. Constr. 1974, 73, 197–200. [Google Scholar] [CrossRef]

- Silva, R. Use of Recycled Aggregates from Construction and Demolition Waste in the Production of Structural Concrete. Res. Net. 2015. [Google Scholar] [CrossRef]

- De Brito, J.; Kurda, R.; da Silva, P.R. Can we truly predict the compressive strength of concrete without knowing the properties of aggregates? Appl. Sci. 2018, 8, 1095. [Google Scholar] [CrossRef]

- Bentz, D.P.; Garboczi, E.J.; Bullard, J.W.; Ferraris, C.F.; Martys, N.S.; Stutzman, P.E. Virtual testing of cement and concrete. In Significance of Tests and Properties of Concrete and Concrete-Making Materials; ASTM: West Conshohocken, PA, USA, 2006. [Google Scholar]

- Mechling, J.M.; Lecomte, A.; Diliberto, C. Relation between cement composition and compressive strength of pure pastes. Cem. Concr. Compos. 2009, 31, 255–262. [Google Scholar] [CrossRef]

- Cheng, M.Y.; Prayogo, D.; Wu, Y.W. Novel Genetic Algorithm-based Evolutionary Support Vector Machine for Optimizing High-performance Concrete Mixture. J. Comput. Civ. Eng. 2014, 28, 06014003. [Google Scholar] [CrossRef]

- Taffese, W.Z.; Sistonen, E. Machine learning for durability and service-life assessment of reinforced concrete structures: Recent advances and future directions. Autom. Constr. 2017, 77, 1–14. [Google Scholar] [CrossRef]

- Deng, F.; He, Y.; Zhou, S.; Yu, Y.; Cheng, H.; Wu, X. Compressive strength prediction of recycled concrete based on deep learning. Constr. Build. Mater. 2018, 175, 562–569. [Google Scholar] [CrossRef]

- Abuodeh, O.R.; Abdalla, J.A.; Hawileh, R.A. Assessment of compressive strength of ultra-high performance concrete using deep machine learning techniques. Appl. Soft Comput. 2020, 95, 106552. [Google Scholar] [CrossRef]

- Rubene, S.; Vilnitis, M.; Noviks, J. Frequency analysis and measurements of moisture content of AAC masonry constructions by EIS. Procedia Eng. 2015, 123, 471–478. [Google Scholar] [CrossRef]

- Usmen, M.A.; Vilnitis, M. Evaluation of safety, quality and productivity in construction. IOP Conf. Ser. Mater. Sci. Eng. 2015, 96, 012061. [Google Scholar] [CrossRef]

- Barbosa, A.A.R.; Vilnītis, M. Innovation and construction management in Brazil: Challenges of companies in times of quality and productivity. IOP Conf. Ser. Mater. Sci. Eng. 2017, 251, 012040. [Google Scholar] [CrossRef]

- Cover, T.; Hart, P. Nearest neighbor pattern classification. IEEE Trans. Inf. Theory 1967, 13, 21–27. [Google Scholar] [CrossRef]

- Hang, L. Statistical Learning Method; Tsinghua University Press: Beijing, China, 2012. [Google Scholar]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V.; et al. Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32. [Google Scholar] [CrossRef]

- Breiman, L. Bagging predictors. Mach. Learn. 1996, 24, 123–140. [Google Scholar] [CrossRef]

- Freund, Y.; Schapire, R.E. A short introduction to boosting. J.-Jpn. Soc. Artif. Intell. 1999, 14, 771–780. [Google Scholar]

- Friedman, J.H. Greedy function approximation: A gradient boosting machine. Ann. Stat. 2001, 29, 1189–1232. [Google Scholar] [CrossRef]

- Schapire, R.E.; Singer, Y. Improved boosting algorithms using confidence-rated predictions. Mach. Learn. 1999, 37, 297–336. [Google Scholar] [CrossRef]

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016. [Google Scholar]

- Schapire, R.E. The strength of weak learnability. Mach. Learn. 1990, 5, 197–227. [Google Scholar] [CrossRef]

- Bentéjac, C.; Csörgő, A.; Martínez-Muñoz, G. A comparative analysis of gradient boosting algorithms. Artif. Intell. Rev. 2021, 54, 1937–1967. [Google Scholar] [CrossRef]

- Lundberg, S.M.; Lee, S.I. A unified approach to interpreting model predictions. In Proceedings of the 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Lundberg, S.M.; Erion, G.G.; Lee, S.I. Consistent individualized feature attribution for tree ensembles. arXiv 2018, arXiv:1802.03888. [Google Scholar]

- Molnar, C. Interpretable Machine Learning; Lulu Press: Morrisville, NC, USA, 2020. [Google Scholar]

| Variables | Unit | Min | Max | Mean | Median | SD |

|---|---|---|---|---|---|---|

| Water–Binder Ratio | - | 0.35 | 0.55 | 0.44 | 0.45 | 0.06 |

| Water | kg/L | 124.00 | 154.00 | 140.30 | 140.00 | 5.78 |

| Sand ratio | % | 37.00 | 43.00 | 39.25 | 39.00 | 1.59 |

| Superplasticizer | % | 0.50 | 0.75 | 0.52 | 0.50 | 0.06 |

| Air-entraining agent | ‱ | 0.20 | 1.50 | 0.45 | 0.50 | 0.28 |

| Slump | mm | 163 | 202 | 177.87 | 180.00 | 8.10 |

| Air content | % | 1.20 | 6.40 | 4.08 | 4.00 | 1.00 |

| Age | d | 7.00 | 90.00 | 44.21 | 28.00 | 35.95 |

| Compressive strength | MPa | 17.10 | 61.40 | 35.69 | 35.85 | 9.58 |

| Algorithm | Hyperparameter Optimization |

|---|---|

| KNN | n_neighbors = 5 |

| DT | criterion = ‘mse’, splitter = ‘best’, min_samples_split = 2 |

| RF | n_estimators = 68, random_state = 90, max_depth = 12 |

| GBDT | learning_rate = 0.2, n_estimators = 5, max_depth = 3 |

| Adaboost | max_depth = 19, learning_rate = 0.9,n_estimators = 40 |

| XGBoost | n_estimators = 42, max_depth = 5, gamma = 0.2, learning_rate = 0.2 |

| Models | Sets | Measures | |||

|---|---|---|---|---|---|

| R2 | RMSE | MAE | MAPE(%) | ||

| KNN | Training | 0.848 | 3.699 | 2.944 | 8.291 |

| Testing | 0.725 | 5.079 | 4.334 | 12.133 | |

| DT | Training | 0.982 | 1.266 | 0.929 | 2.621 |

| Testing | 0.943 | 2.308 | 1.735 | 4.802 | |

| RF | Training | 0.980 | 1.337 | 1.007 | 2.858 |

| Testing | 0.950 | 2.167 | 1.628 | 4.409 | |

| GBDT | Training | 0.979 | 1.371 | 1.025 | 2.906 |

| Testing | 0.956 | 2.034 | 1.534 | 4.138 | |

| AdaBoost | Training | 0.980 | 1.329 | 0.970 | 2.741 |

| Testing | 0.940 | 2.368 | 1.811 | 5.038 | |

| XGBoost | Training | 0.982 | 1.266 | 0.929 | 2.622 |

| Testing | 0.966 | 2.307 | 1.734 | 4.801 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, W.; Zhong, Y.; Liao, G.; Ding, Q.; Zhang, T.; Li, X. Prediction of Compressive Strength of Concrete Specimens Based on Interpretable Machine Learning. Materials 2024, 17, 3661. https://doi.org/10.3390/ma17153661

Wang W, Zhong Y, Liao G, Ding Q, Zhang T, Li X. Prediction of Compressive Strength of Concrete Specimens Based on Interpretable Machine Learning. Materials. 2024; 17(15):3661. https://doi.org/10.3390/ma17153661

Chicago/Turabian StyleWang, Wenhu, Yihui Zhong, Gang Liao, Qing Ding, Tuan Zhang, and Xiangyang Li. 2024. "Prediction of Compressive Strength of Concrete Specimens Based on Interpretable Machine Learning" Materials 17, no. 15: 3661. https://doi.org/10.3390/ma17153661