A Multi-Layer Multi-Pass Weld Bead Cross-Section Morphology Extraction Method Based on Row–Column Grayscale Segmentation

Abstract

1. Introduction

2. Materials and Methods

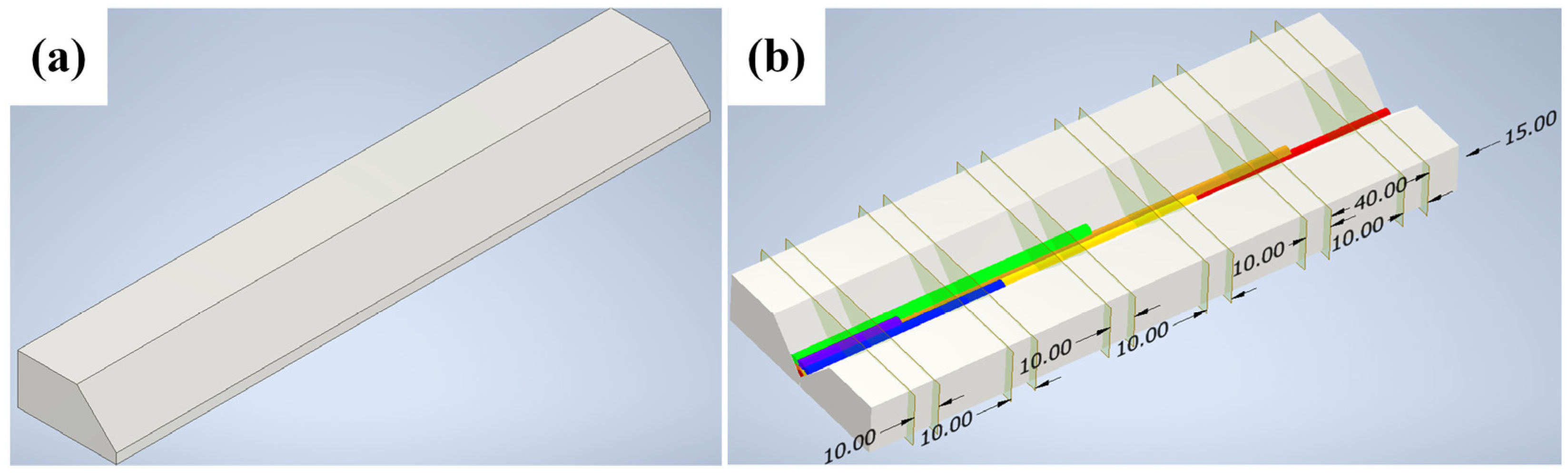

2.1. Materials Preparation

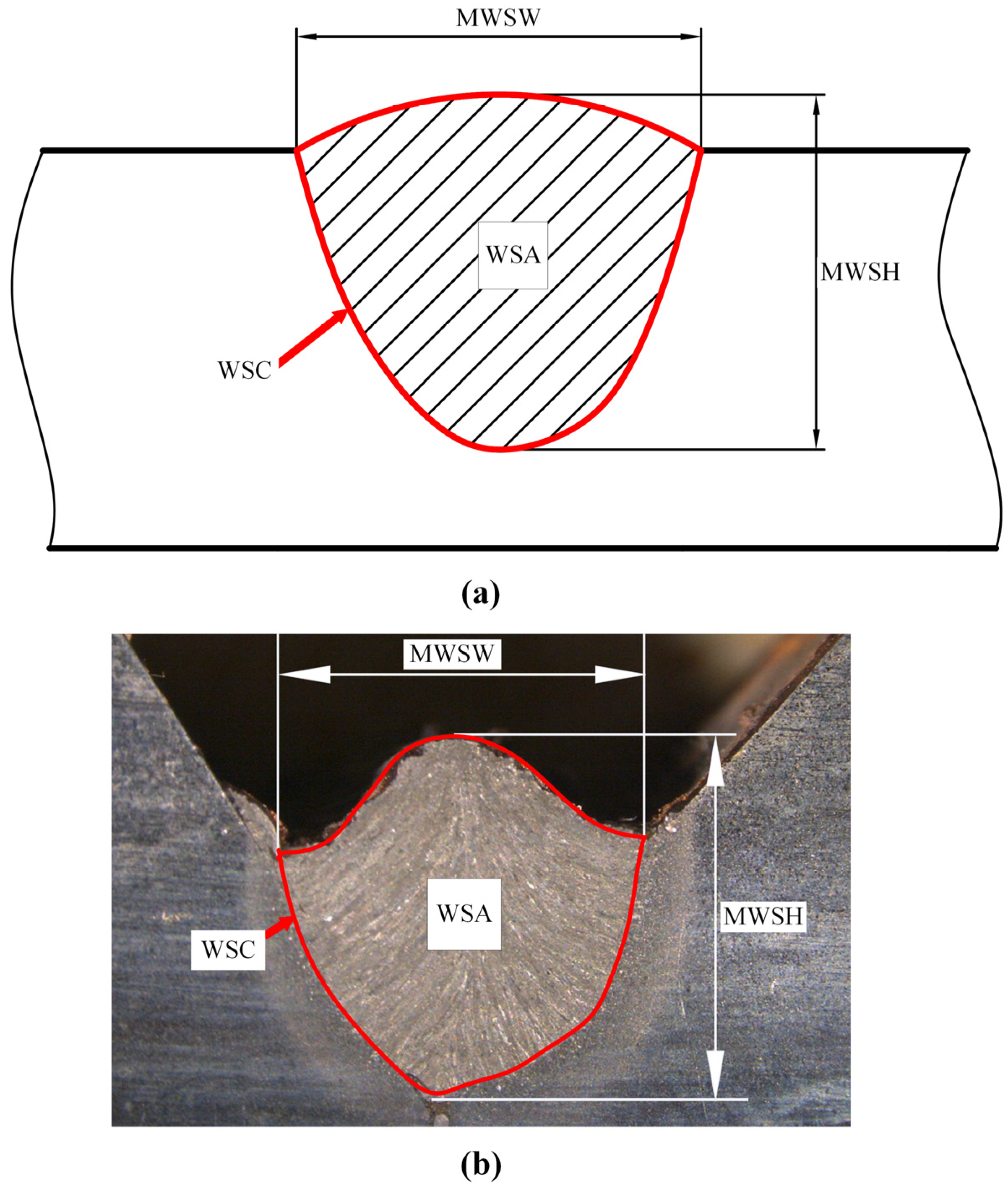

2.2. Features of Weld Bead Cross-Section Morphology

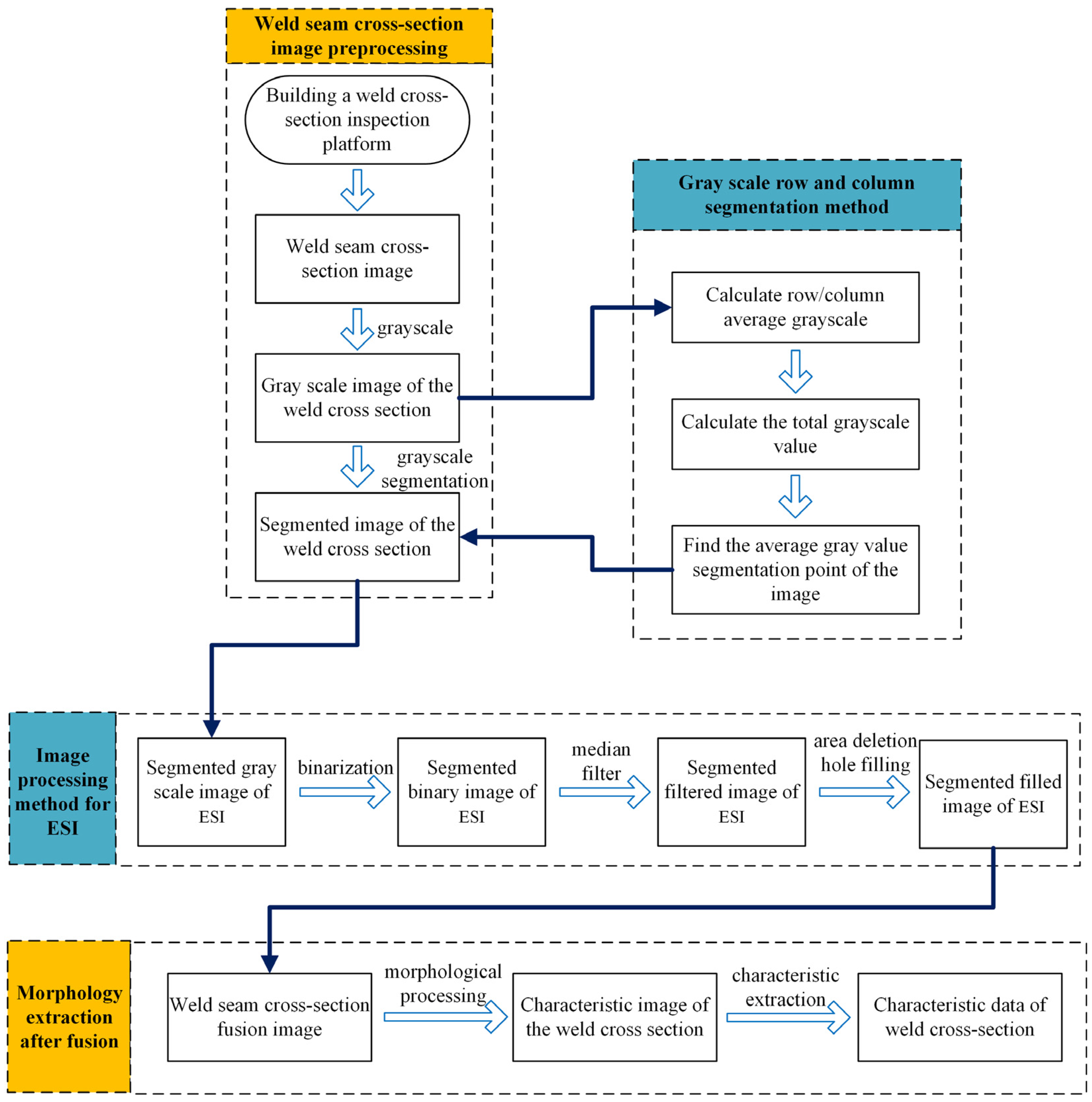

2.3. Grayscale Segmentation Method for Weld Bead Sectional Images

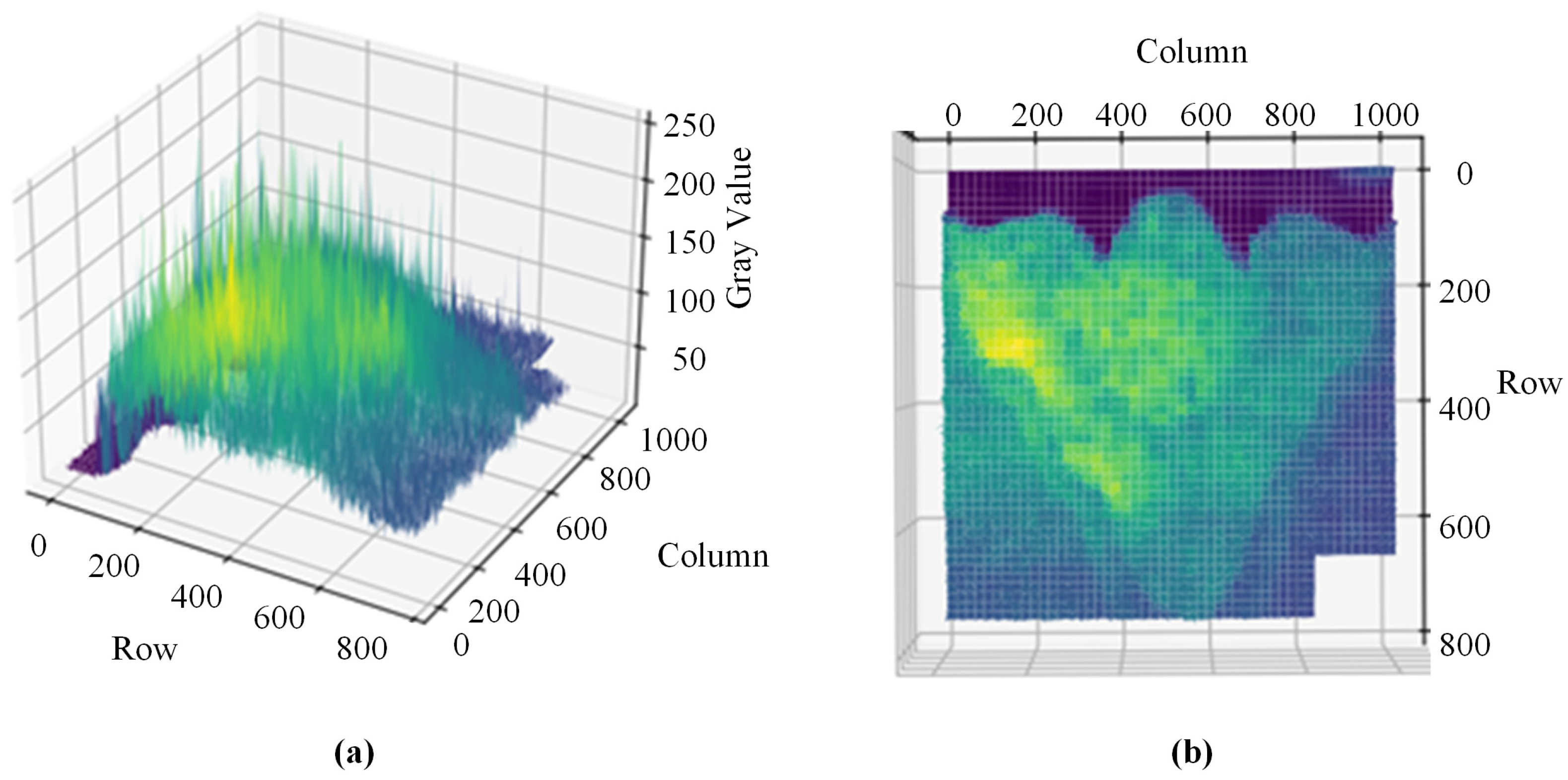

2.4. Grayscale Analysis of a Weld Bead Cross-Section Image

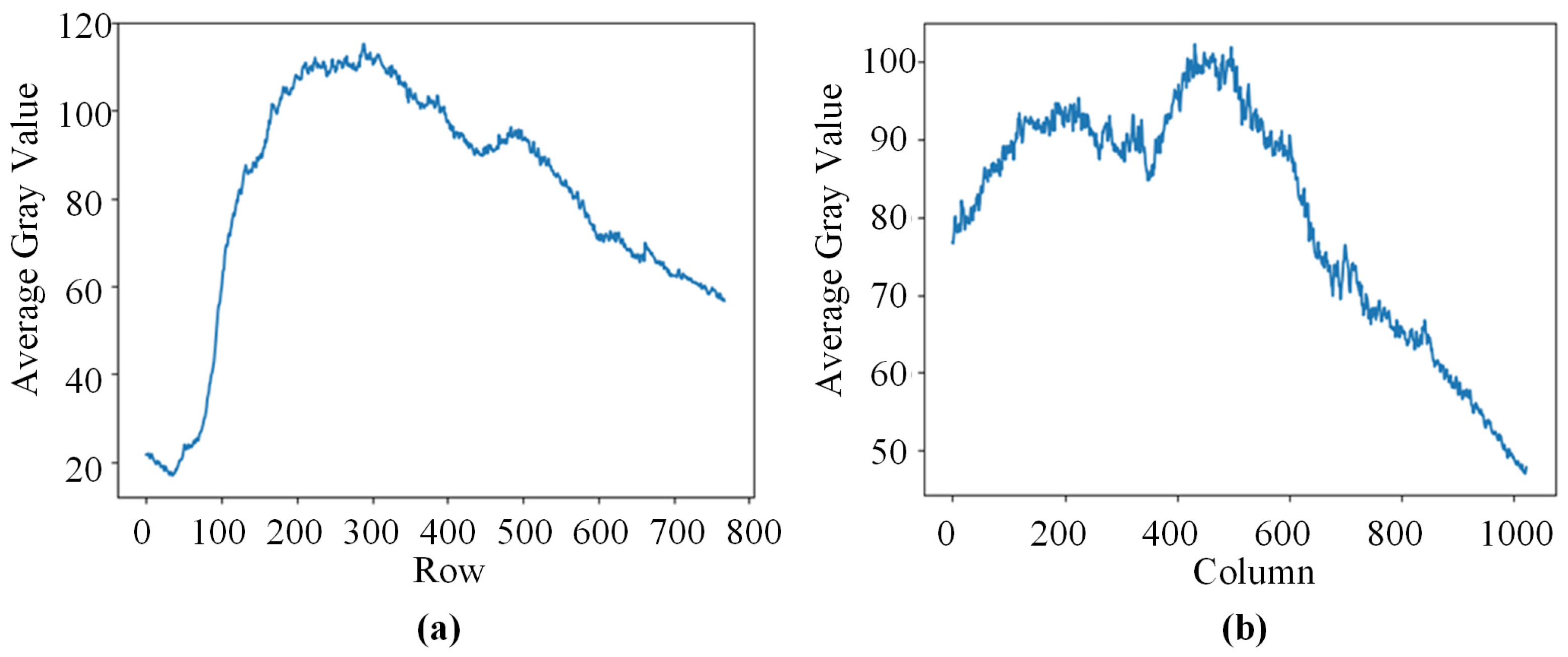

2.5. Principle of Row and Column Average Gray Value Segmentation

2.6. Weld Bead Cross-Section Image Processing Methods

2.6.1. Basic Image Processing Methods for Weld Bead Cross-Sections

1. Binarize the image after row and column grayscale segmentation.
2. Apply median filtering to the binary image.
3. Obtain the maximum contour of the filtered image.
4. Fill the contour image to obtain the weld profile image.
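
The four steps above can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the threshold of 128, the 3×3 median window, and the use of the largest 4-connected region as a stand-in for the maximum contour are all assumptions, and every function name is hypothetical.

```python
from collections import deque
from statistics import median_low


def binarize(gray, threshold):
    """Step 1: pixels brighter than `threshold` become 1, all others 0."""
    return [[1 if v > threshold else 0 for v in row] for row in gray]


def median_filter3(img):
    """Step 2: 3x3 median filter; the window is clipped at the image border."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [img[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = median_low(window)  # ties resolve to background (0)
    return out


def flood(img, seeds, target):
    """Return the 4-connected pixels equal to `target` reachable from `seeds`."""
    h, w = len(img), len(img[0])
    seen = {s for s in seeds if img[s[0]][s[1]] == target}
    queue = deque(seen)
    while queue:
        y, x = queue.popleft()
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
            if (0 <= ny < h and 0 <= nx < w
                    and (ny, nx) not in seen and img[ny][nx] == target):
                seen.add((ny, nx))
                queue.append((ny, nx))
    return seen


def largest_component(img):
    """Step 3 (assumed stand-in for the maximum contour): keep the largest region."""
    h, w = len(img), len(img[0])
    best, visited = set(), set()
    for y in range(h):
        for x in range(w):
            if img[y][x] == 1 and (y, x) not in visited:
                comp = flood(img, [(y, x)], 1)
                visited |= comp
                if len(comp) > len(best):
                    best = comp
    return [[1 if (y, x) in best else 0 for x in range(w)] for y in range(h)]


def fill_holes(img):
    """Step 4: background that cannot be reached from the border is filled."""
    h, w = len(img), len(img[0])
    border = [(y, x) for y in range(h) for x in range(w)
              if y in (0, h - 1) or x in (0, w - 1)]
    outside = flood(img, border, 0)
    return [[0 if (y, x) in outside else 1 for x in range(w)] for y in range(h)]


def weld_profile(gray, threshold=128):
    """Run steps 1 to 4 in order on a grayscale image given as a list of rows."""
    binary = binarize(gray, threshold)
    return fill_holes(largest_component(median_filter3(binary)))
```

In practice an image library would normally provide these operations; the pure-Python form is used here only so that each step is visible.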

2.6.2. Edge Detection and Feature Extraction of Weld Bead Cross-Section Image

3. Results

3.1. Preparation for Sectional Image Row–Column Gray Segmentation

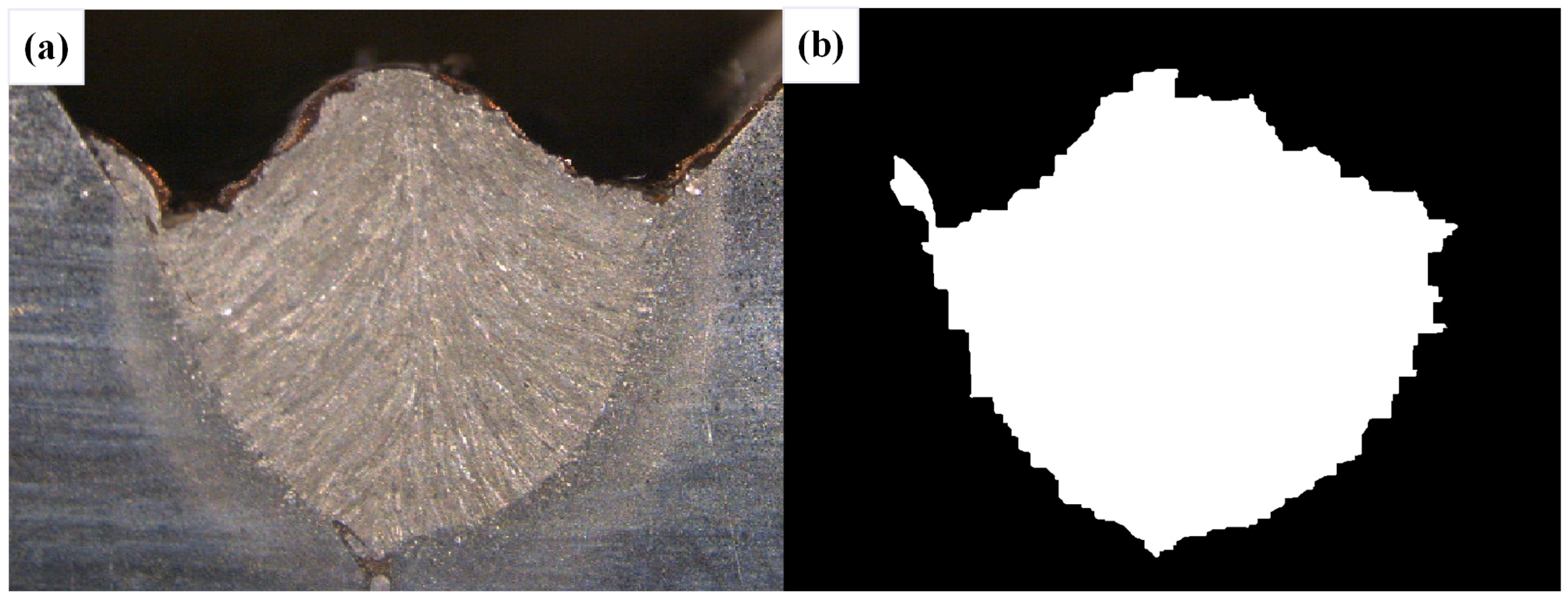

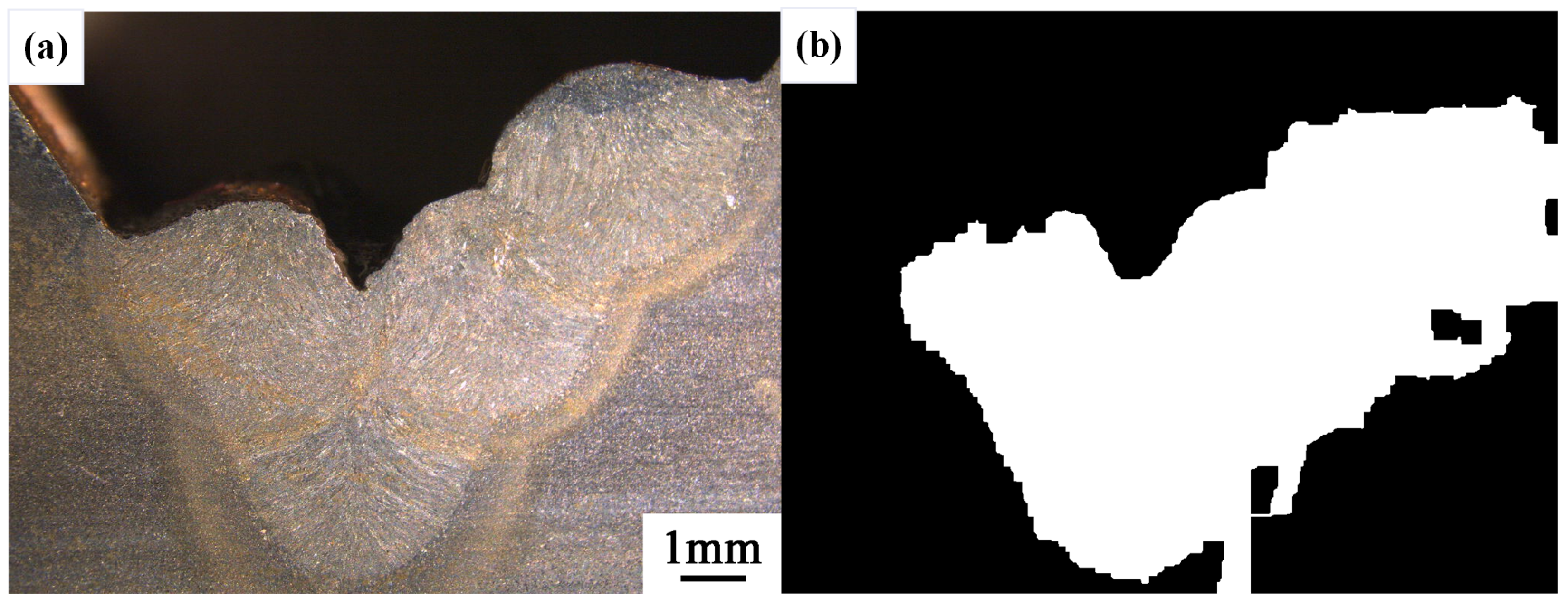

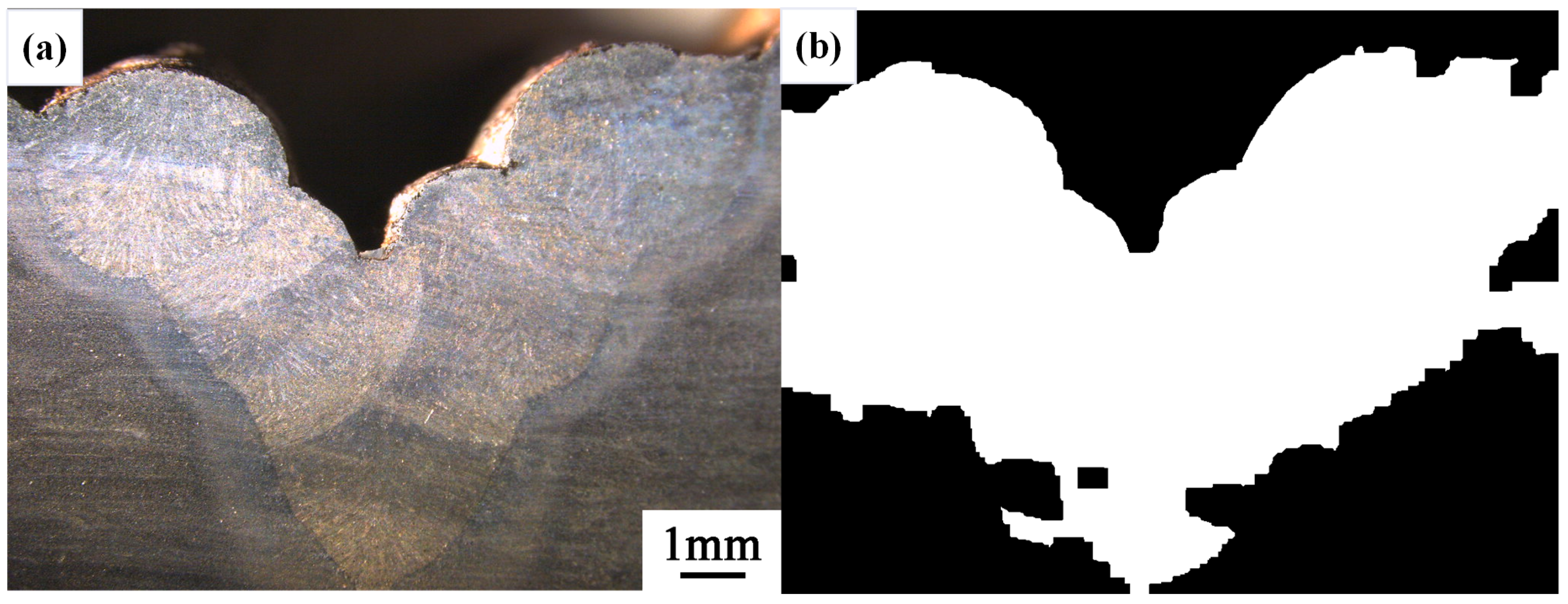

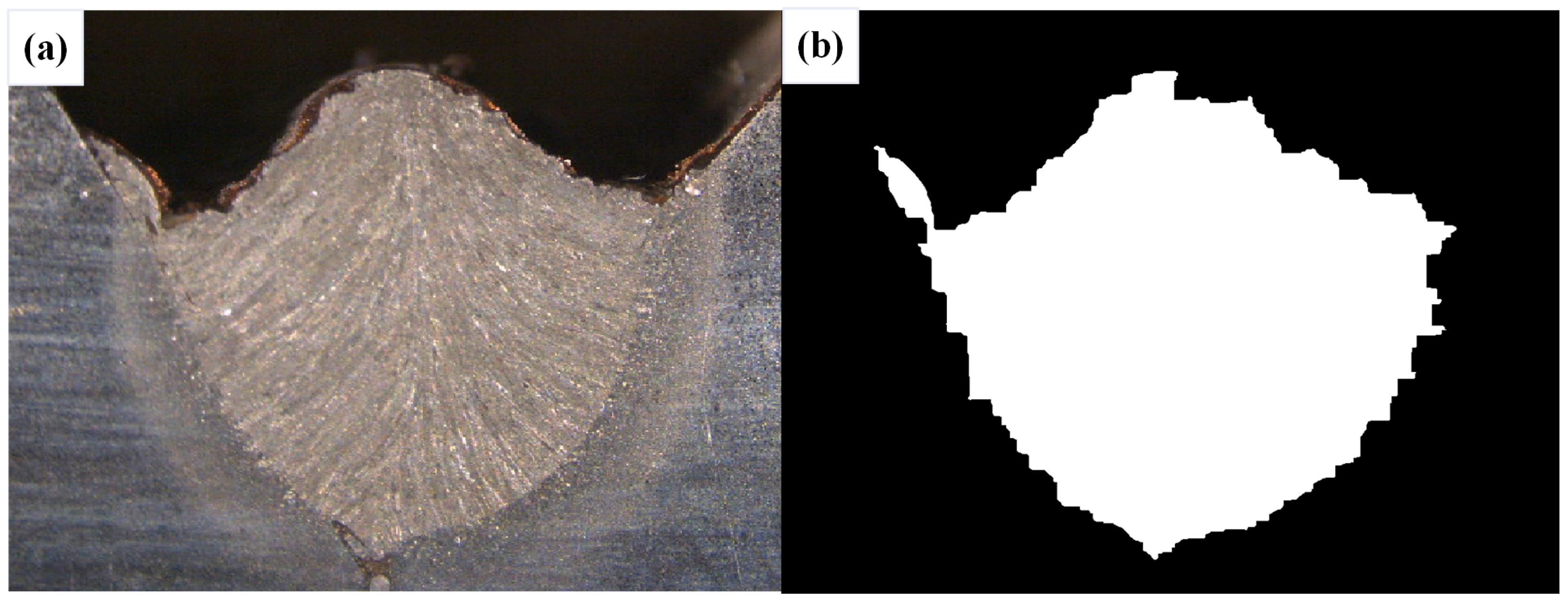

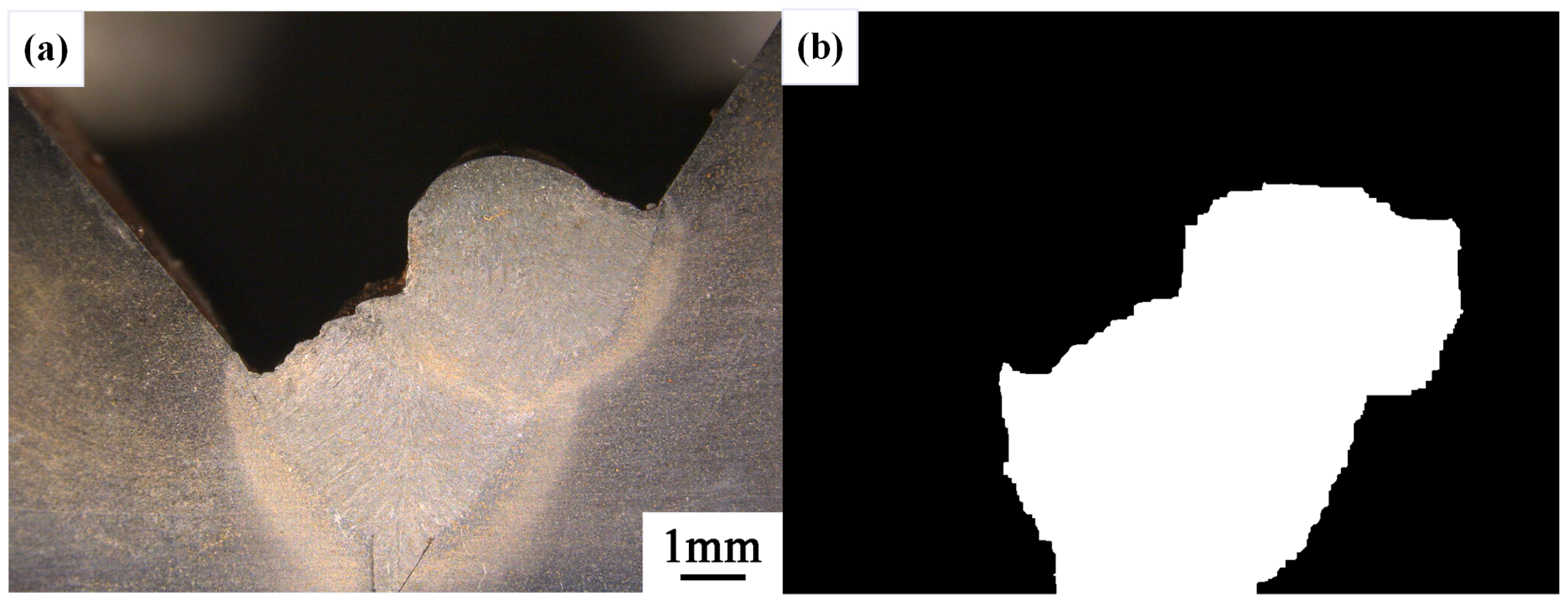

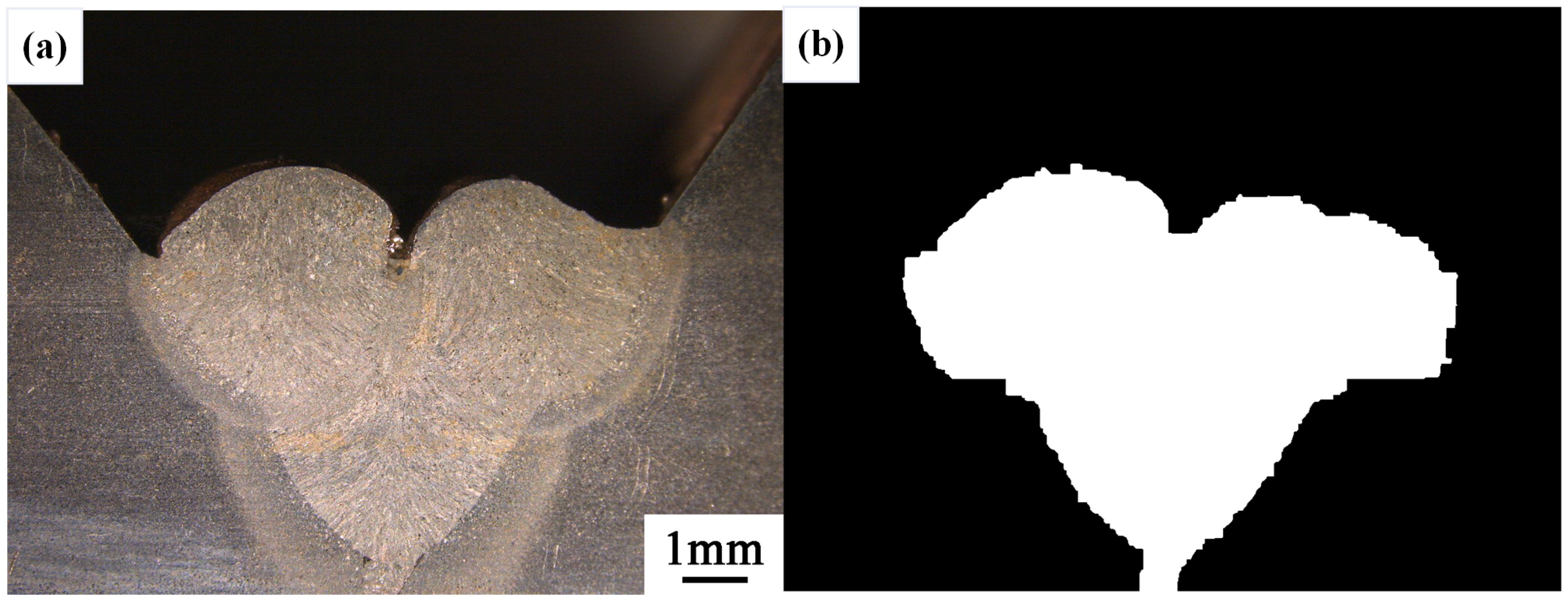

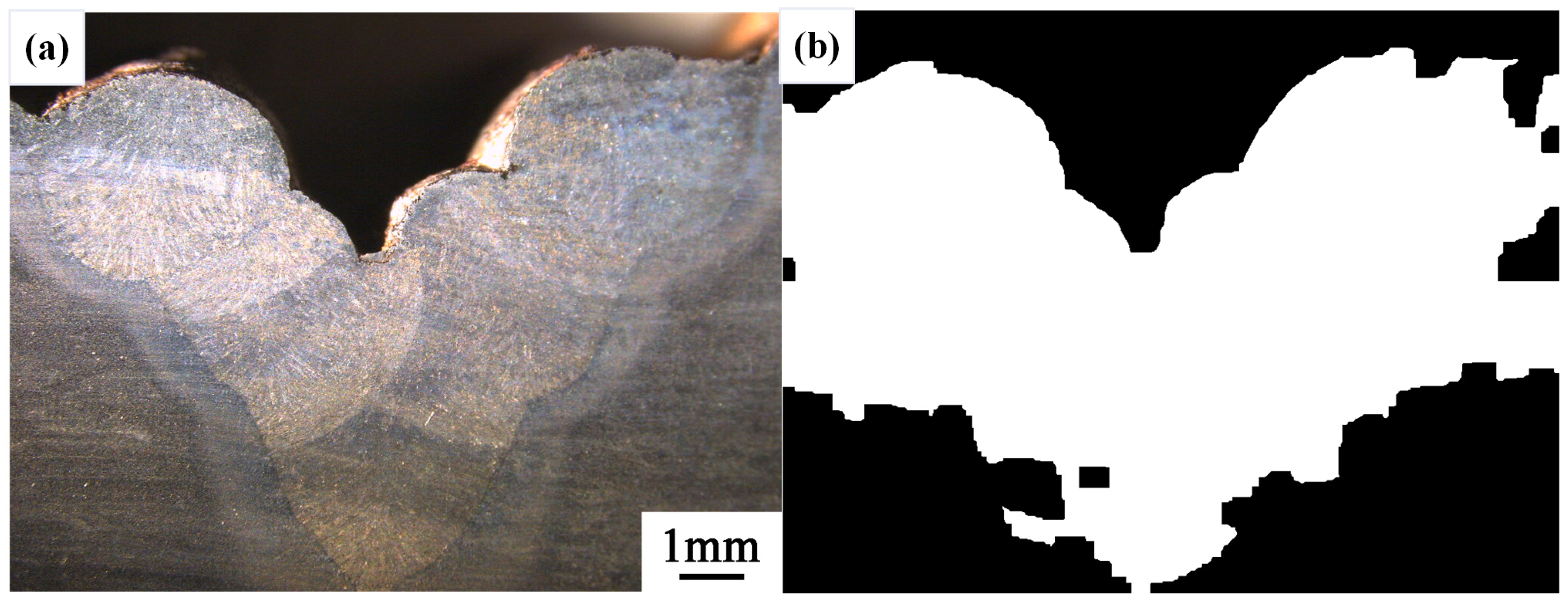

3.1.1. The Original Weld Bead Image Data

3.1.2. Sectional Image Gray Segmentation Analysis

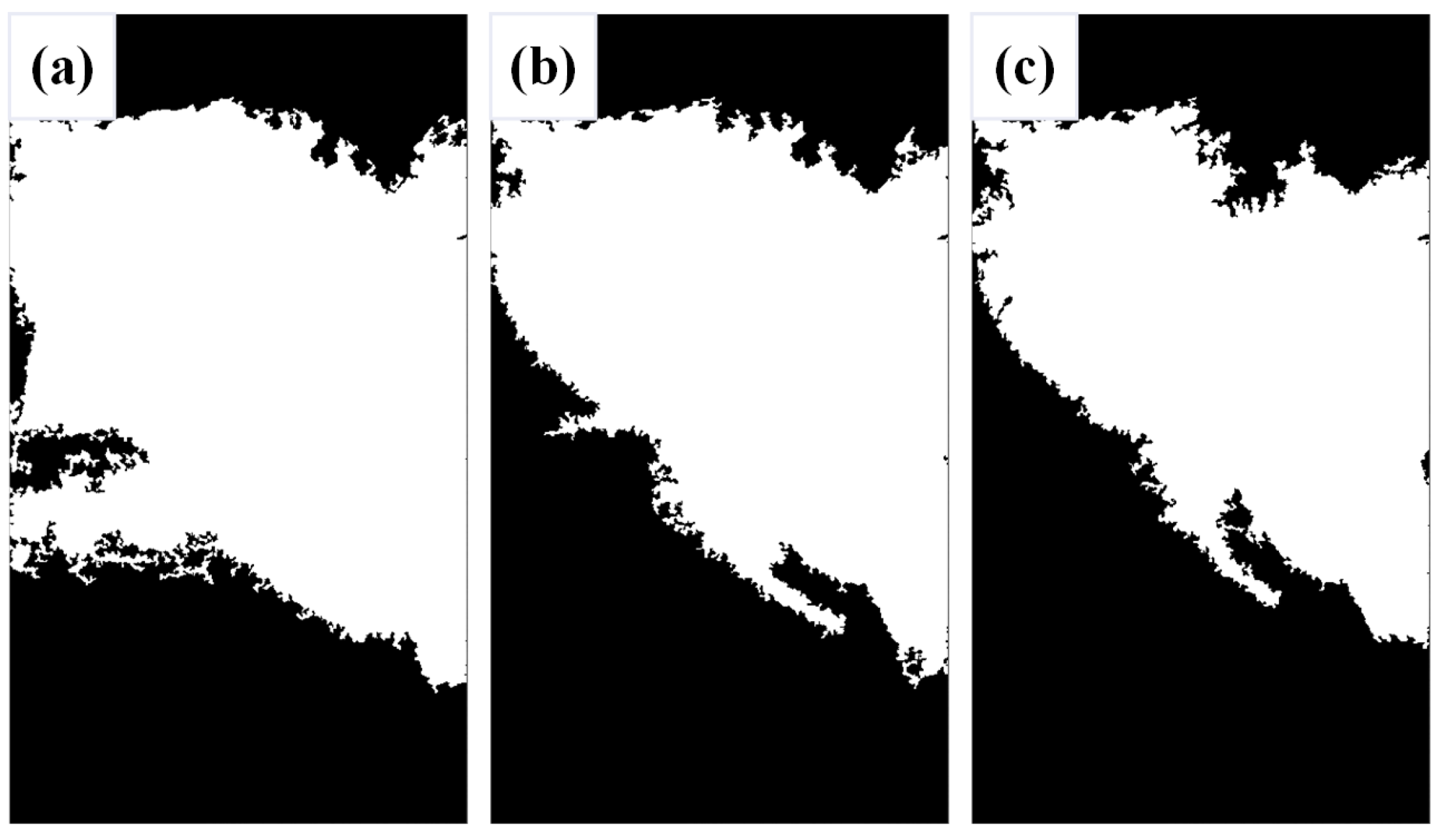

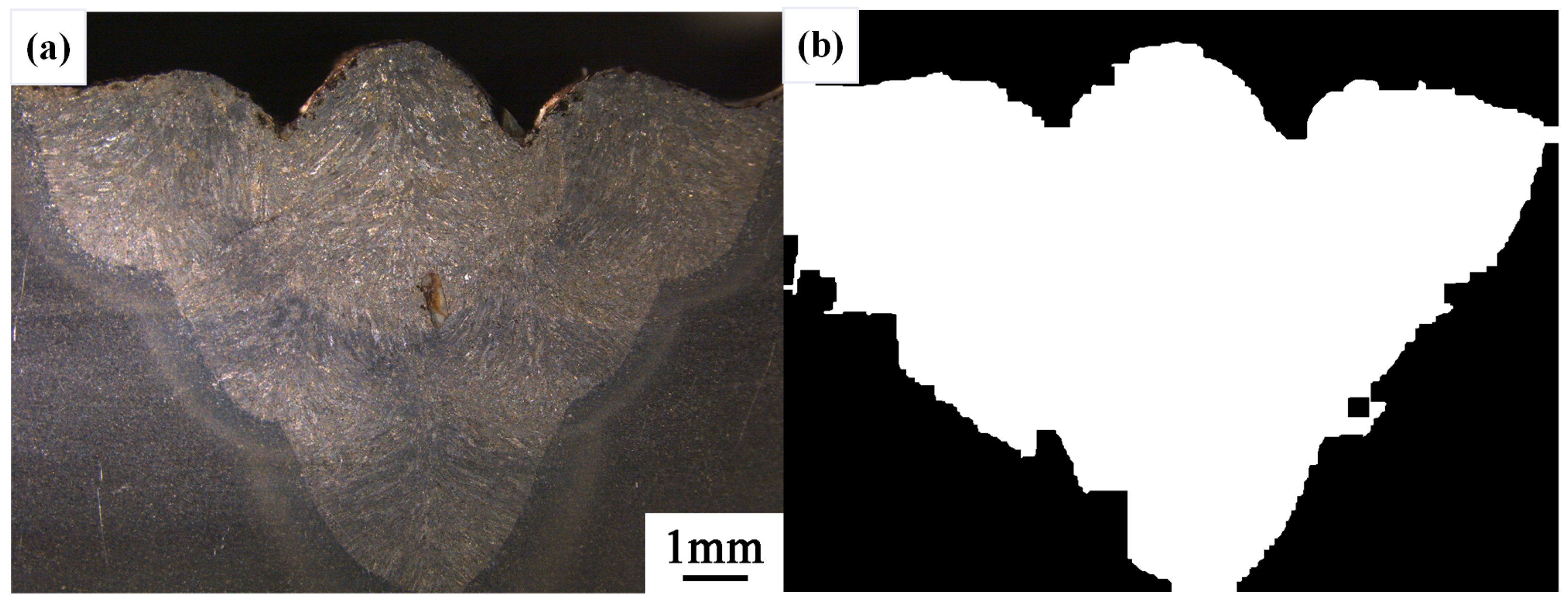

3.2. Gray Average Binarized Image

3.2.1. Binarized Method Threshold Analysis

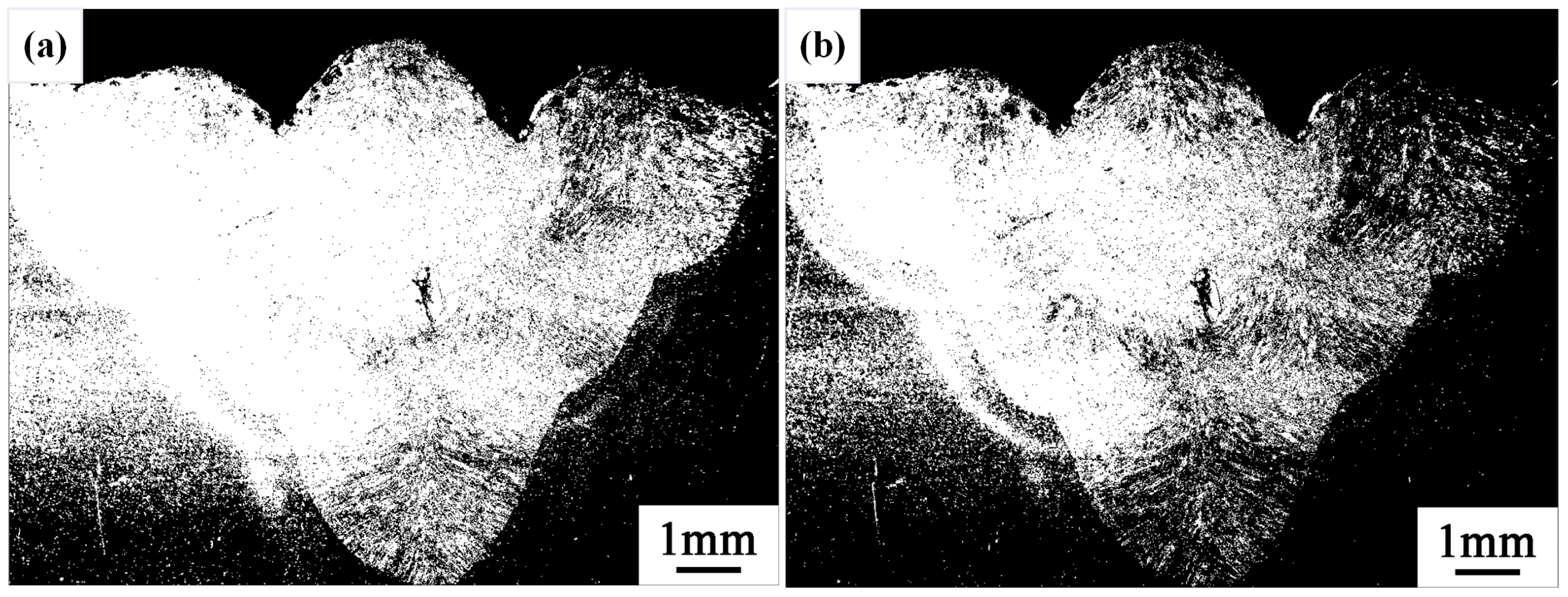

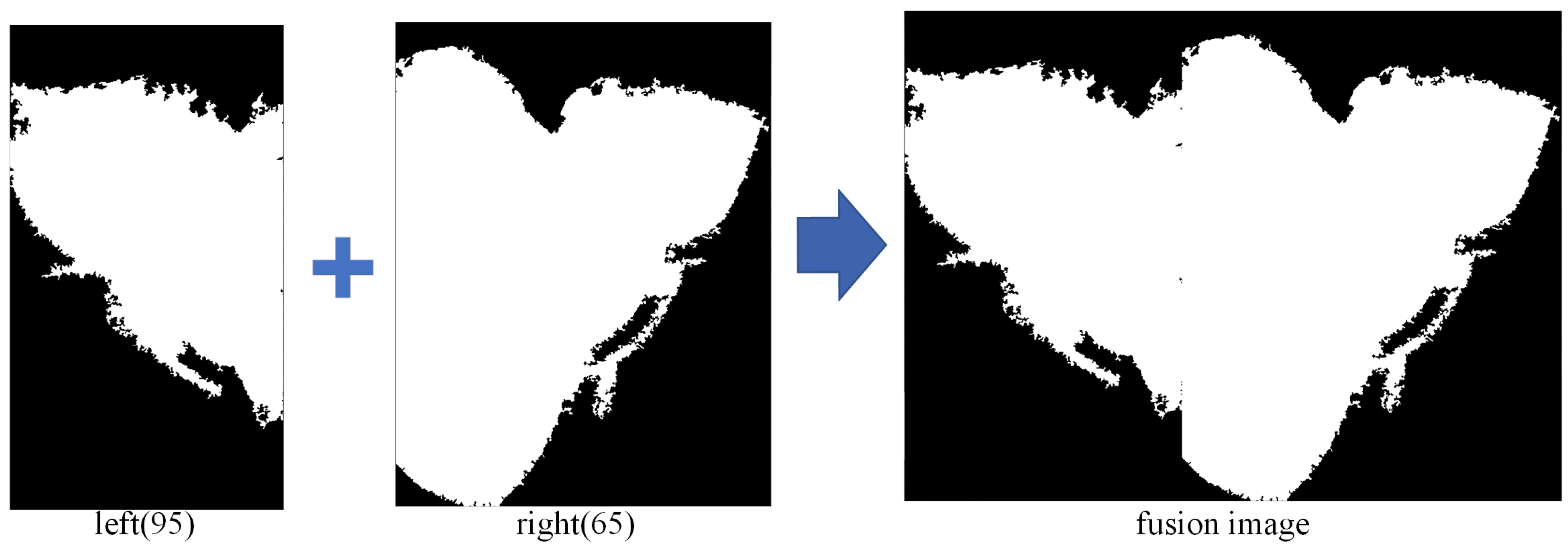

3.2.2. Fusion of Left and Right Profile Images

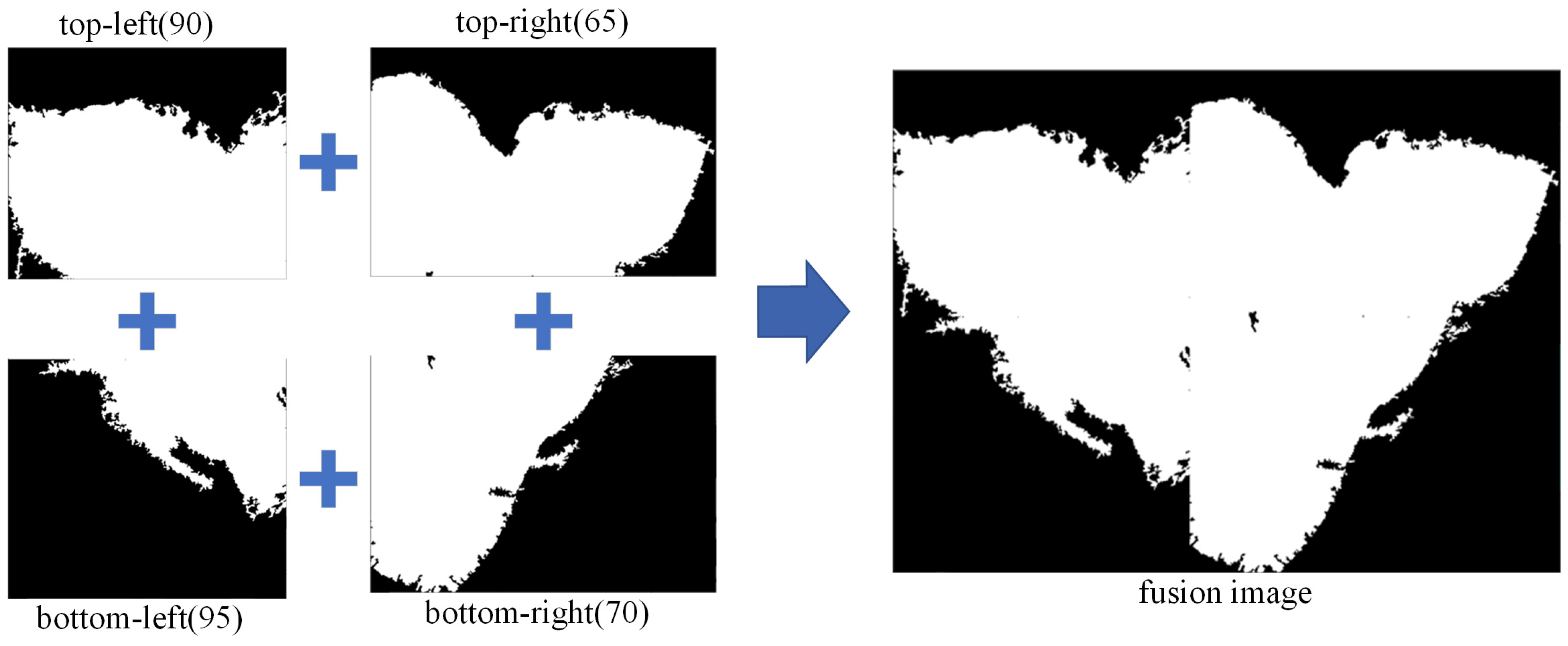

3.3. Grayscale Averaging Quadripartite Image

3.3.1. Quadripartite Method Threshold Analysis

3.3.2. Morphological Threshold Analysis

4. Discussion

4.1. Repeatability Tests

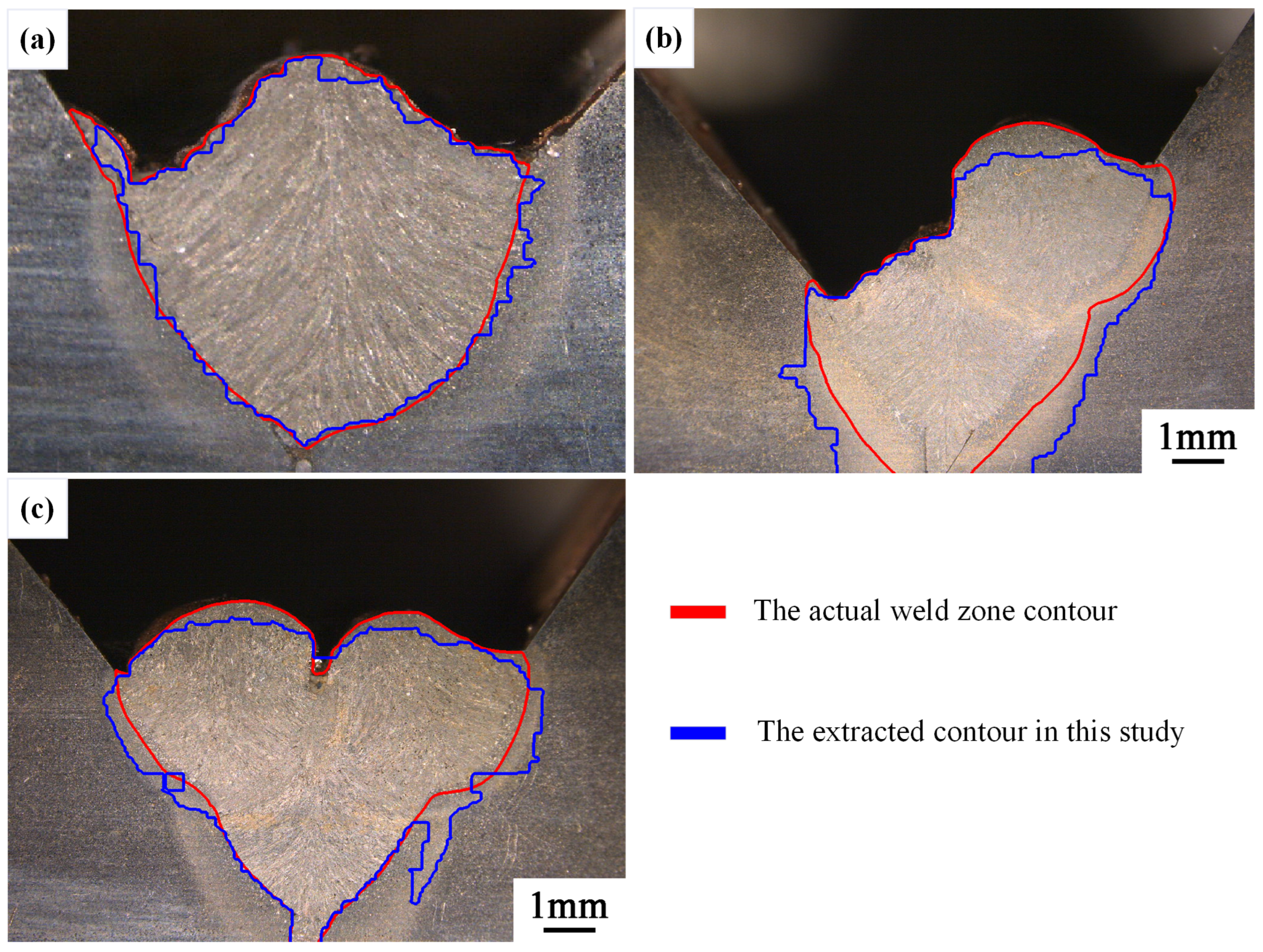

4.1.1. Repeatability Tests of the Binary Segmentation Method for Multi-Layer Multi-Pass Weld Bead Sectional Images

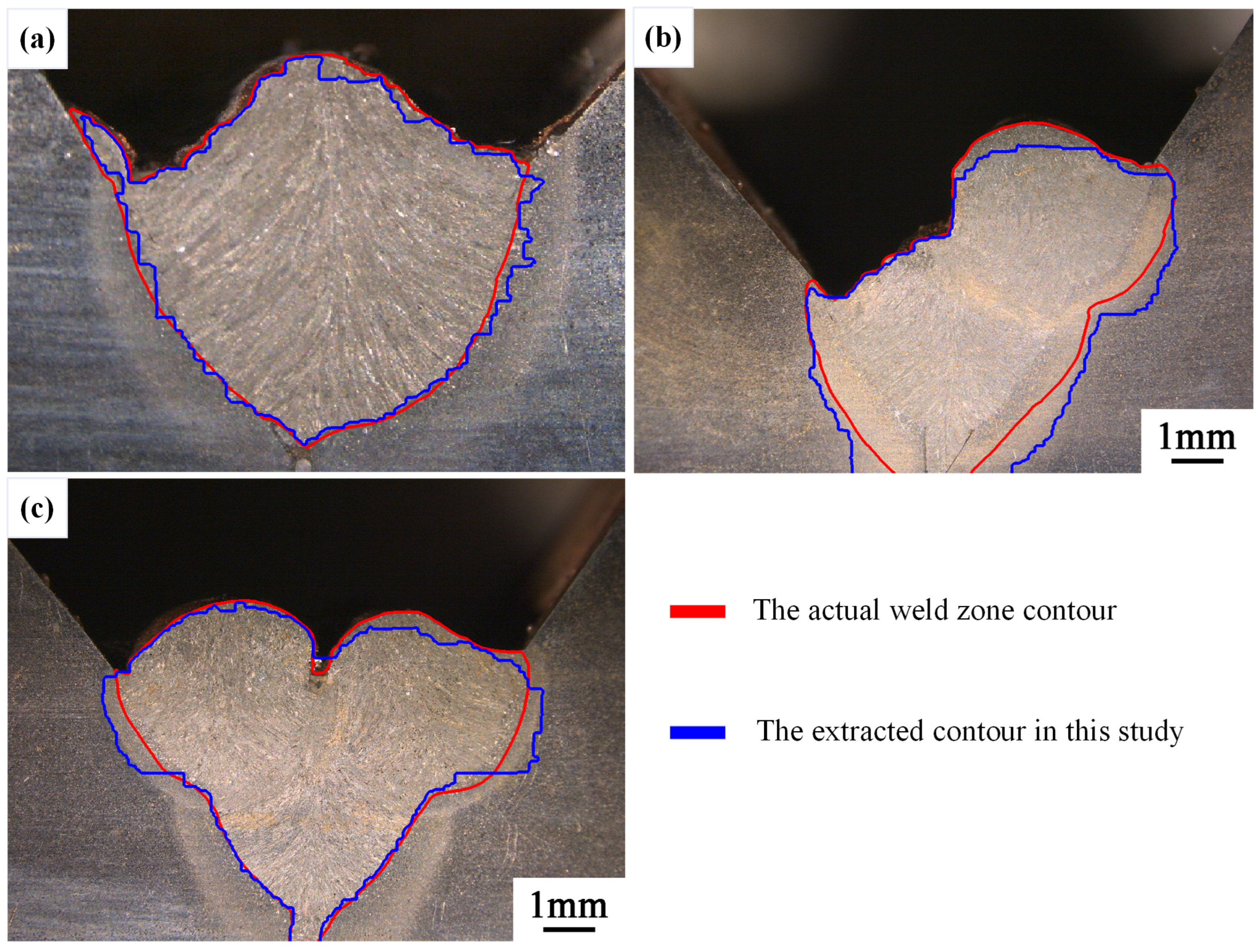

4.1.2. Repeatability Tests of Quadripartite Segmentation Method for Multi-Layer Multi-Pass Weld Bead Sectional Images

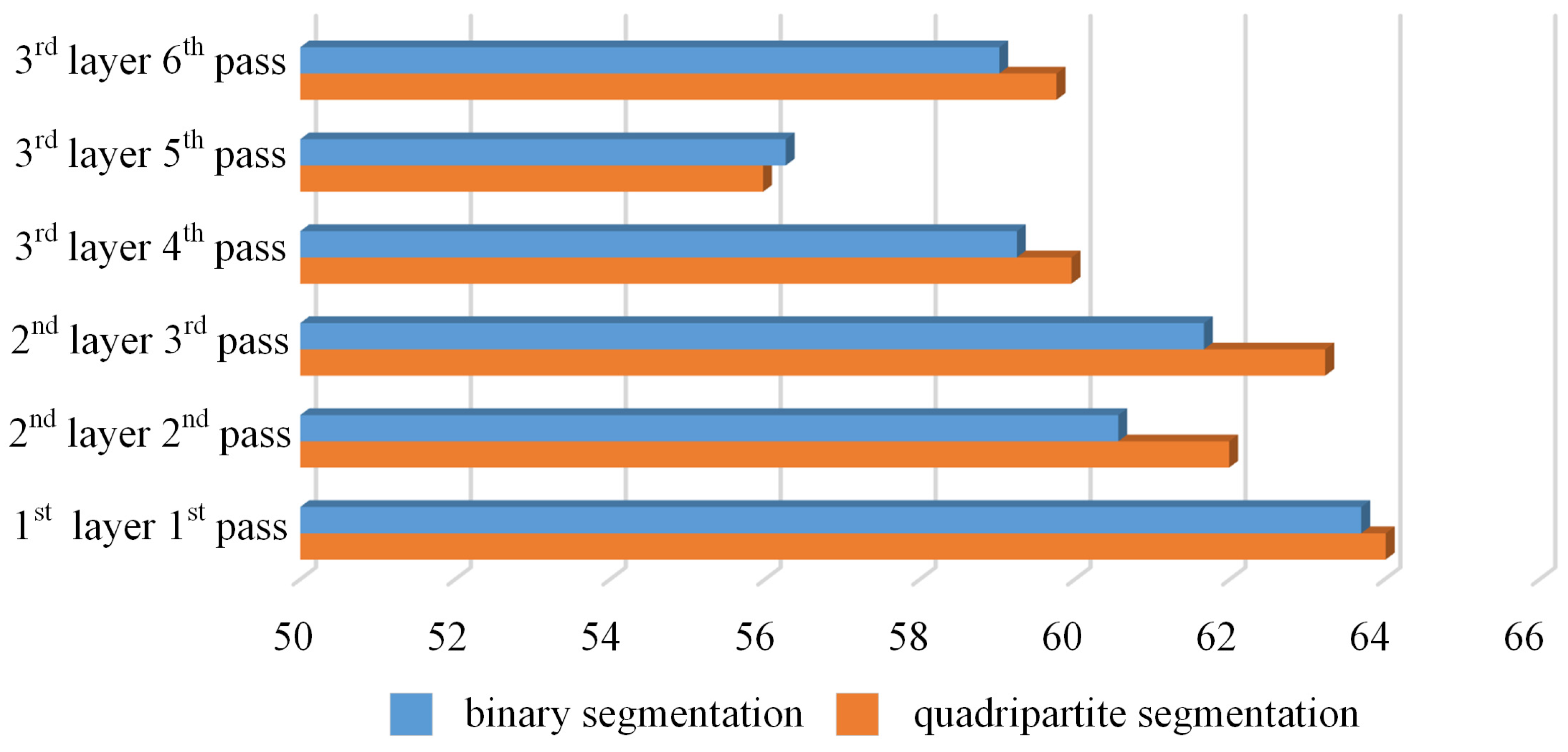

4.1.3. Comparison of Weld Contour Results

4.2. Analysis and Discussion

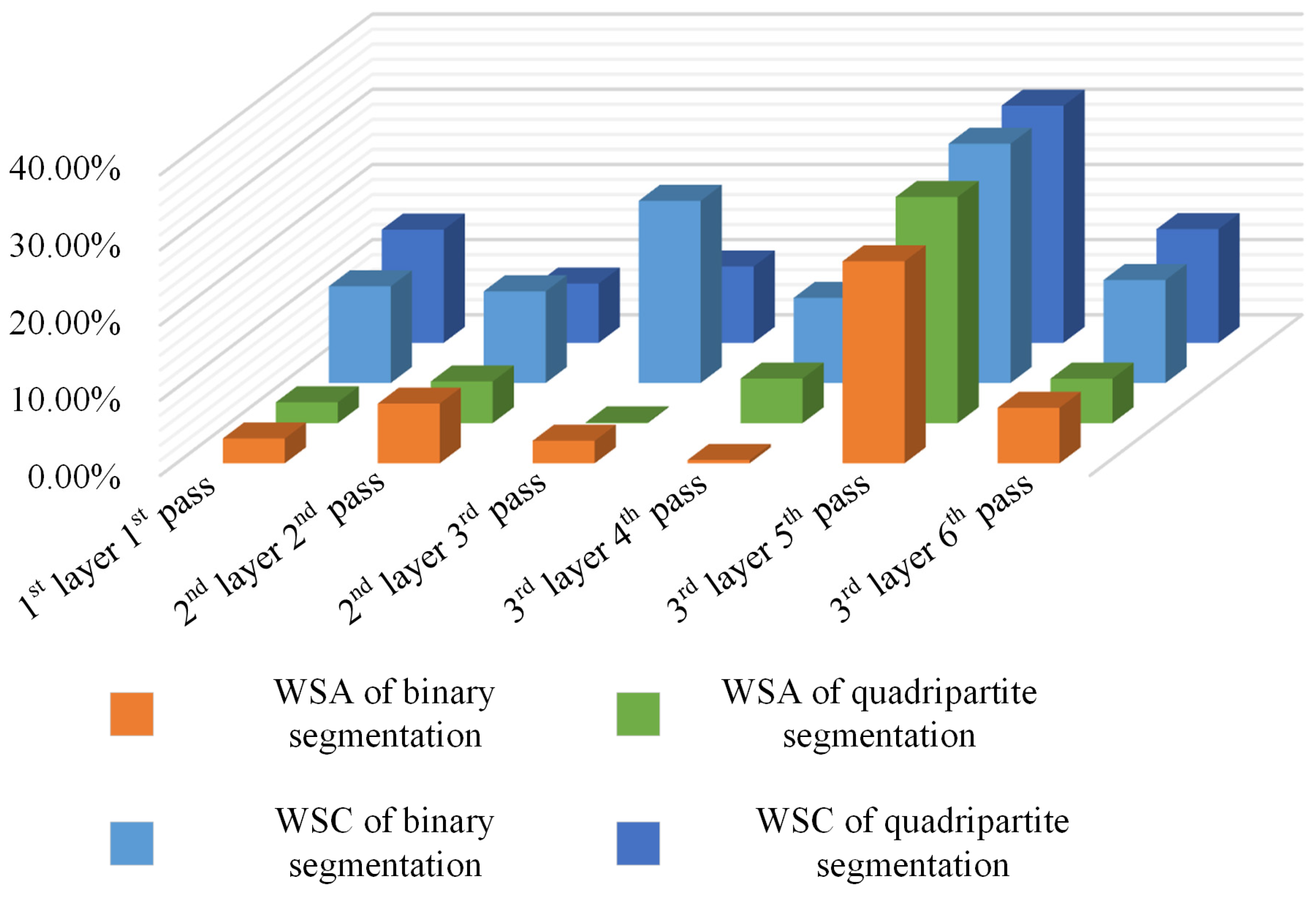

4.2.1. Comparison Analysis of Weld Bead Cross-Sectional Feature Data between the Binary Segmentation Method and the Quadripartite Segmentation Method

4.2.2. Comparison Analysis of the Quality of Weld Bead Cross-Sectional Images between the Binary Segmentation Method and the Quadripartite Segmentation Method

5. Conclusions

- (1) The main innovation is to divide the weld bead cross-section image into separate regions according to gray value before image processing. The ESI has its own threshold, so the weld bead cross-section morphology can be extracted region by region. The individually extracted regions are then fused to obtain the complete weld bead cross-section morphology.

- (2) A method for extracting segmentation points from the average gray values of the rows and columns is proposed. It reduces the unevenness of the gray-value distribution in the weld image after segmentation and provides more favorable conditions for subsequent processing.

- (3) Multi-layer multi-pass weld bead cross-section morphology extraction experiments were conducted from the first-layer first pass to the third-layer sixth pass. The relative errors in WSA are within 10% for every pass except the third-layer fifth pass, whereas the relative errors in WSC are larger, ranging from about 8% to 32%; the extracted MWSW and MWSH are close to the true values. The image quality assessment falls within a reasonable range, with an average SSIM above 0.9 and an average PSNR above 60. This indicates that the multi-layer multi-pass weld feature extraction method based on image processing, while requiring a certain level of image quality, can identify the general contour of the weld bead and extract its shape parameters. In future work, more sophisticated methods could be integrated to further improve the accuracy and precision of identification, with the eventual aim of replacing manual identification.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Threshold | Original Figure | (15, 15) | (25, 25) | (35, 35) |
|---|---|---|---|---|
| WSC (pixel) | 3289 | 4169 | 3787 | 3604 |
| WSA (pixel²) | 404,529.5 | 422,026.5 | 428,483.0 | 432,633.5 |
| MWSH (pixel) | 728 | 726 | 726 | 726 |
| MWSW (pixel) | 1024 | 1024 | 1024 | 1024 |

| Original Image | 1st-Layer 1st-Pass | 2nd-Layer 2nd-Pass | 2nd-Layer 3rd-Pass | 3rd-Layer 4th-Pass | 3rd-Layer 5th-Pass | 3rd-Layer 6th-Pass |
|---|---|---|---|---|---|---|
| WSC (pixel) | 2507.2 | 2027.4 | 2224.6 | 2927.0 | 3419.6 | 3289.1 |
| WSA (pixel²) | 286,721.5 | 200,675.0 | 223,407.0 | 307,748.0 | 347,121.5 | 404,529.5 |
| MWSH (pixel) | 651 | 578 | 565 | 701 | 729 | 728 |
| MWSW (pixel) | 761 | 609 | 684 | 902 | 1024 | 1024 |

| Binary Segmentation | 1st-Layer 1st-Pass | 2nd-Layer 2nd-Pass | 2nd-Layer 3rd-Pass | 3rd-Layer 4th-Pass | 3rd-Layer 5th-Pass | 3rd-Layer 6th-Pass |
|---|---|---|---|---|---|---|
| WSC (pixel) | 2829.7 | 2274.7 | 2763.7 | 3258.5 | 4508.1 | 3739.7 |
| WSA (pixel²) | 277,245.0 | 216,625.0 | 230,121.0 | 309,118.5 | 440,427.0 | 434,406.5 |
| MWSH (pixel) | 643 | 535 | 539 | 657 | 721 | 726 |
| MWSW (pixel) | 748 | 649 | 728 | 867 | 1024 | 1024 |

| Quadripartite Segmentation | 1st-Layer 1st-Pass | 2nd-Layer 2nd-Pass | 2nd-Layer 3rd-Pass | 3rd-Layer 4th-Pass | 3rd-Layer 5th-Pass | 3rd-Layer 6th-Pass |
|---|---|---|---|---|---|---|
| WSC (pixel) | 2884.9 | 2187.4 | 2450.4 | 3215.2 | 4498.5 | 3786.6 |
| WSA (pixel²) | 278,755.0 | 211,807.0 | 223,177.0 | 289,484.5 | 451,563.5 | 428,483.0 |
| MWSH (pixel) | 643 | 543 | 562 | 624 | 719 | 726 |
| MWSW (pixel) | 767 | 613 | 731 | 908 | 1024 | 1024 |

| Binary Segmentation Error | 1st-Layer 1st-Pass | 2nd-Layer 2nd-Pass | 2nd-Layer 3rd-Pass | 3rd-Layer 4th-Pass | 3rd-Layer 5th-Pass | 3rd-Layer 6th-Pass |
|---|---|---|---|---|---|---|
| WSC | 12.86% | 12.20% | 24.23% | 11.32% | 31.83% | 13.70% |
| WSA | 3.31% | 7.95% | 3.01% | 0.45% | 26.88% | 7.39% |

| Quadripartite Segmentation Error | 1st-Layer 1st-Pass | 2nd-Layer 2nd-Pass | 2nd-Layer 3rd-Pass | 3rd-Layer 4th-Pass | 3rd-Layer 5th-Pass | 3rd-Layer 6th-Pass |
|---|---|---|---|---|---|---|
| WSC | 15.07% | 7.89% | 10.15% | 9.85% | 31.55% | 15.12% |
| WSA | 2.78% | 5.55% | 0.10% | 5.93% | 30.09% | 5.92% |
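
The error percentages appear consistent with a relative error taken against the value measured on the original image, |measured − reference| / reference. That formula is an assumption inferred from the tabulated numbers, not a definition stated in the text. The short check below applies it to the first-layer first-pass column of the binary segmentation results; `relative_error` is a hypothetical helper name.

```python
def relative_error(measured, reference):
    """Assumed definition: absolute deviation divided by the reference value."""
    return abs(measured - reference) / reference


# First-layer first-pass values: binary segmentation vs. original image.
wsc = relative_error(2829.7, 2507.2)        # WSC in pixels
wsa = relative_error(277245.0, 286721.5)    # WSA in pixel^2

print(f"WSC: {wsc:.2%}, WSA: {wsa:.2%}")    # WSC: 12.86%, WSA: 3.31%
```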

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lei, T.; Gong, S.; Wu, C. A Multi-Layer Multi-Pass Weld Bead Cross-Section Morphology Extraction Method Based on Row–Column Grayscale Segmentation. Materials 2024, 17, 4683. https://doi.org/10.3390/ma17194683
