1. Introduction

Linear models that contain both fixed and random effects are referred to as variance components or linear-mixed models (LMMs). They arise in numerous applications, such as genetics [1], biology, economics, epidemiology and medicine. A broad coverage of existing methodologies and applications of these models can be found in the textbooks [2, 3].

In the simplest variance component setup, we observe a response vector

and a predictor matrix

and assume that

is an outcome of a normal random variable

, where the covariance is of the form,

The matrices

are fixed positive semi-definite matrices, and

is non-singular. The unknown mean effects

and variance component parameters

can be estimated by maximizing the log-likelihood function,

If

is known, the maximum likelihood estimator (MLE) for

is given by

To simplify the MLE estimate for

, one can adopt the restricted MLE (REML) method [

4] to remove the mean effect in the likelihood expression by projecting

onto the null space of

. Let

and suppose we have the QR decomposition,

where

is an

upper triangular matrix,

is an

zero matrix,

is an

matrix,

is

, and

and

both have orthogonal columns. If we take the Cholesky decomposition

of the matrix

, then the transformation,

removes the mean from the response, and we obtain

, where

and

. After this transformation, the restricted likelihood

will not depend on

.
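As a small numerical illustration of the projection step only (not the full REML transformation), the following Python sketch checks that projecting the response onto the null space of the transposed predictor matrix removes the dependence on the mean effect. The dimensions, the random data and the names `Q2`, `y_a` and `y_b` are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(1)
n, p = 8, 3
X = rng.standard_normal((n, p))        # predictor matrix (hypothetical data)
beta = np.array([2.0, -1.0, 0.5])      # mean effects (hypothetical values)
noise = rng.standard_normal(n)

# Full QR: the last n - p columns of Q span the null space of X'.
Q, _ = np.linalg.qr(X, mode="complete")
Q2 = Q[:, p:]

y_a = X @ beta + noise
y_b = X @ (beta + 10.0) + noise        # same noise, very different mean effect

# After projection, both responses coincide: the mean effect has been removed.
proj_a = Q2.T @ y_a
proj_b = Q2.T @ y_b
max_leak = np.abs(Q2.T @ X).max()      # numerically zero
```

Because the projected response no longer involves the mean effects, any likelihood built from it depends on the variance components alone.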

Henceforth, and without loss of generality, we assume that such a transformation has been performed so that we can focus on minimizing an objective function of the form:

There exists extensive literature on optimization methods for the log-likelihood expression (

2), including Newton’s Method [

5], Fisher Scoring Method [

6], and the EM and MM algorithms [

7,

8,

9]. Newton’s method is known to scale poorly as

n, or the number of variance components

, increase due to the cost of

flops required to invert a Hessian matrix at each update. Both the EM and MM algorithms have simple updating steps; however, numerical experience shows that they are slow to identify the active set

, where

is the MLE.

One class of algorithms yet to be applied to this problem is coordinate-descent (CD) algorithms. These algorithms successively minimize the objective function along coordinate directions and can be effective when the optimization for each sub-problem can be made sufficiently simple. Furthermore, only a few assumptions are needed to prove that accumulation points of the iterative sequence are stationary points of the objective function. CD algorithms have been used to solve optimization problems for many years, and their popularity has grown considerably in the past few decades because of their usefulness in data science and machine learning tasks. Further in-depth discussions of CD algorithms can be found in the articles [

10,

11,

12].
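To make the idea of successive coordinate-wise minimization concrete, the following Python sketch applies cyclic CD to a simple convex quadratic, for which each one-dimensional sub-problem has a closed-form minimizer. The quadratic objective and all names are illustrative assumptions; this is not the variance-component objective studied in this paper.

```python
import numpy as np

def coordinate_descent_quadratic(A, b, n_cycles=200):
    """Cyclic CD for f(x) = 0.5 x'Ax - b'x with A symmetric positive definite."""
    x = np.zeros_like(b, dtype=float)
    for _ in range(n_cycles):
        for i in range(len(b)):
            # Exact minimizer of f along coordinate i with the others held fixed.
            residual = b[i] - A[i] @ x + A[i, i] * x[i]
            x[i] = residual / A[i, i]
    return x

rng = np.random.default_rng(0)
M = rng.standard_normal((5, 5))
A = M @ M.T + 5 * np.eye(5)            # well-conditioned SPD matrix
b = rng.standard_normal(5)

x_cd = coordinate_descent_quadratic(A, b)
x_exact = np.linalg.solve(A, b)        # minimizer of the quadratic
```

In this toy setting, every sub-problem has a unique minimizer, which is the kind of property that makes convergence analysis of CD straightforward.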

In this paper, we show that a basic implementation of CD is costly for large-scale problems and does not easily succumb to standard convergence analysis. In contrast, our novel coordinate-descent algorithm called parameter-expanded coordinate descent (PX-CD) is computationally faster and more amenable to theoretical guarantees.

The PX-CD is computationally cheaper to run than the basic CD implementation because the first and second derivatives for each sub-problem can be evaluated efficiently with the conjugate gradient (CG) algorithm [

13], whereas the basic CD implementation requires repeated Cholesky factorizations for each coordinate update, each with a complexity of

. Moreover, it is often the case that the

are low-rank, and we can take advantage of this by employing the well-known Woodbury matrix identity or QR transformation within PX-CD to reduce the computational cost of each univariate minimization.

In PX-CD, the extended parameters are treated as a block of coordinates, which is updated at each iteration by searching through a coordinate hyper-plane rather than single-coordinate directions. We provide an alternative version of PX-CD, which we call parameter expanded-immediate coordinate descent (PXI-CD), where the extended coordinate block is updated multiple times within each cycle of the original parameters. We observe numerically that, for large-scale models, the reduction in the number of iterations needed to converge more than offsets the additional computational cost of each coordinate cycle. As a result, the overall convergence time is better than that of PX-CD.

From a theoretical point of view, we show that the accumulation points of the iterative sequence generated by both the PX-CD and PXI-CD are coordinate-wise minimum points of (

2).

We remark that the improved efficiency of the PX-CD algorithm is similar to the well-known superior performance of the PX-DA (parameter-expanded data-augmentation) algorithm [

14,

15] in the Markov-chain Monte Carlo (MCMC) context—namely, the PX-DA algorithm is often much faster to converge than a basic data-augmentation Gibbs algorithm. This similarity is also the reason for using the same prefix “PX” in our nomenclature.

The remainder of the paper is structured as follows. In

Section 2, we describe the basic implementation of CD and provide examples for which it performs unsatisfactorily. In

Section 3, we introduce the PX-CD and PXI-CD and show that accumulation points of the iterations are coordinate-wise minima for the optimization. We then discuss their practical implementation and detail how to reduce the computational cost when the

are low-rank. We also extend the PX-CD algorithm for penalized estimation to perform variable selection. Then, in

Section 4, we provide numerical results when

are computer simulated and when

are constructed from a real-world genetic data set. We have made our code for these simulations available on GitHub (

https://github.com/anantmathur44/CD-for-variance-components) (accessed on 1 July 2022).

3. Parameter-Expanded CD

Since the basic CD Algorithm 1 is both expensive per coordinate update and not amenable to standard convergence analysis [

16,

17], we consider an alternative called the parameter-expanded CD or PX-CD. We argue that our novel coordinate-descent algorithm is both faster to converge and also amenable to simple convergence analysis because the existence and uniqueness assumption holds. This constitutes our main contribution.

In the PX-CD, we use the supporting hyper-plane (first-order Taylor approximation) to the concave matrix function

, where

. The supporting hyper-plane gives the bound [

9]:

where

is an arbitrary PSD matrix, and equality is achieved if and only if

. Replacing the log-determinant term in

G with the above upper bound, we obtain the surrogate function,

The surrogate function

H has

as an extra variable in our optimization, which we set to be of the form:

where

are latent parameters. Similar to the MM algorithmic recipe [

9], we then jointly minimize the surrogate function

H with respect to both

and

using CD.
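The supporting hyper-plane bound used above can be checked numerically. The Python sketch below assumes that the concave function is the log-determinant and that the bound is its first-order Taylor expansion around an arbitrary positive-definite matrix; the names `S`, `S0` and `logdet_upper_bound` are illustrative and not taken from the paper.

```python
import numpy as np

def logdet(S):
    # slogdet returns (sign, log|det|); S is positive definite here.
    return np.linalg.slogdet(S)[1]

def random_spd(rng, n):
    M = rng.standard_normal((n, n))
    return M @ M.T + np.eye(n)

def logdet_upper_bound(S, S0):
    """First-order Taylor expansion of log det at S0, evaluated at S."""
    return logdet(S0) + np.trace(np.linalg.solve(S0, S - S0))

rng = np.random.default_rng(2)
S0 = random_spd(rng, 6)

# The bound should never fall below the true log-determinant.
gaps = []
for _ in range(20):
    S = random_spd(rng, 6)
    gaps.append(logdet_upper_bound(S, S0) - logdet(S))
min_gap = min(gaps)

# Equality holds at the expansion point itself.
gap_at_S0 = logdet_upper_bound(S0, S0) - logdet(S0)
```

The gap is non-negative everywhere and vanishes at the expansion point, which is what allows the surrogate to touch the original objective after each block update.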

The most apparent way of selecting our coordinates is to cyclically update in the order:

where the last update is a block update of the entire block

. In other words, the expanded parameters

are treated as a block of coordinates that is updated in each cycle by searching through the coordinate hyper-plane rather than the single-coordinate directions. We refer to a full completion of updates in a single ordering as a “cycle” of updates. Suppose the initial guess for the parameters is

, then, at the end of cycle

t, we denote the updated parameters as

. In Theorem 1, we state that under certain conditions, the sequence

generated by PX-CD has limit-points, which are coordinate-wise minima for

G.

Let

be the updated covariance matrix after the

original parameters have been updated in cycle

t. Then, as the inequality in (

9) achieves equality if and only if

the update for the expanded block parameters

in cycle

t is,

In practice, we simply store at the end of each cycle.

Minimizing

H with respect to the

k-th component of the original parameter

yields a function of the form:

One of the main advantages of the PX-CD procedure over the basic coordinate descent in Algorithm 1 is that the optimization along each coordinate has a unique minimum.

Lemma 1. has a unique minimizer for .

Proof. We now show that on , the function is either strictly convex or a linear function with a strictly positive gradient.

We first consider the case where

is invertible. From [

13], we have the GEV decomposition:

where

is a diagonal matrix with non-negative entries and

is invertible. In a similar fashion to the simplified basic CD expression (

7), let

and

. Then,

can be simplified to,

We then obtain the first and second derivatives,

where

. If there exists j such that

, then

for

. Then,

h is strictly convex and attains a unique global minimizer

. Suppose that

for

then

. If we can show that

, then

is strictly increasing on

, and

is the unique global minimizer for

.

We note that the matrix

is positive-definite since

is invertible and positive semi-definite. Therefore, the symmetric square root factorization,

exists, and

due to the invariant cyclic nature of the trace. The matrix

is positive semi-definite as

for all

. Since

is a non-zero matrix and positive-semi-definite,

and

has a strictly positive slope, which implies that

is the unique global minimizer for

.

Consider the case

. Assuming

, then

will be invertible except when

. When

is singular, a simplified expression in the form of (

11) may be difficult to find. Instead, we take the singular value decomposition (SVD) of the symmetric matrix

,

where

are the real-positive eigenvalues of

, and the matrix

is orthogonal. Then, we can express the inverse as

If we assume

, then

. Then,

and

when

. Therefore,

still attains a unique minimizer as the function is strongly convex for

. □

The result of this lemma ensures the existence and uniqueness condition (

8), and thus we can ensure that accumulation or limit points of the CD iteration are also coordinate-wise minimal points. The details of the optimization follow.

3.1. Univariate Minimization via Newton’s Method

Unlike the basic CD Algorithm 1, for which each coordinate update costs , here we show that a coordinate update for the PX-CD algorithm costs only for some constant j where typically .

The function

can be minimized via the second-order Newton’s method, which numerically finds the root of

. The basic algorithm starts with an initial guess

of the root, and then

are successively better approximations. The algorithm can be terminated once successive iterates are sufficiently close together. The first and second derivatives of

h are given as

where we used differentiation of a matrix inverse, which implies that

Similar to the basic CD implementation, computing the algebraic expression in (

11) via GEV is expensive. Evaluating (

15) and (16) by explicitly calculating

is also expensive for large

n and is of time complexity

. Instead, we utilize the conjugate gradient (CG) algorithm [

13] to efficiently solve linear systems. At each iteration of Newton’s method, we approximately solve,

and store the solution in

and

, respectively, via the CG algorithm. Generally,

and

can be made small with

iterations, where each iteration requires a matrix-vector-multiplication operation with a

matrix. The CG algorithm has complexity

and can be easily implemented with standard Linear Algebra packages. With the stored approximate solutions, we evaluate the first and second derivatives as

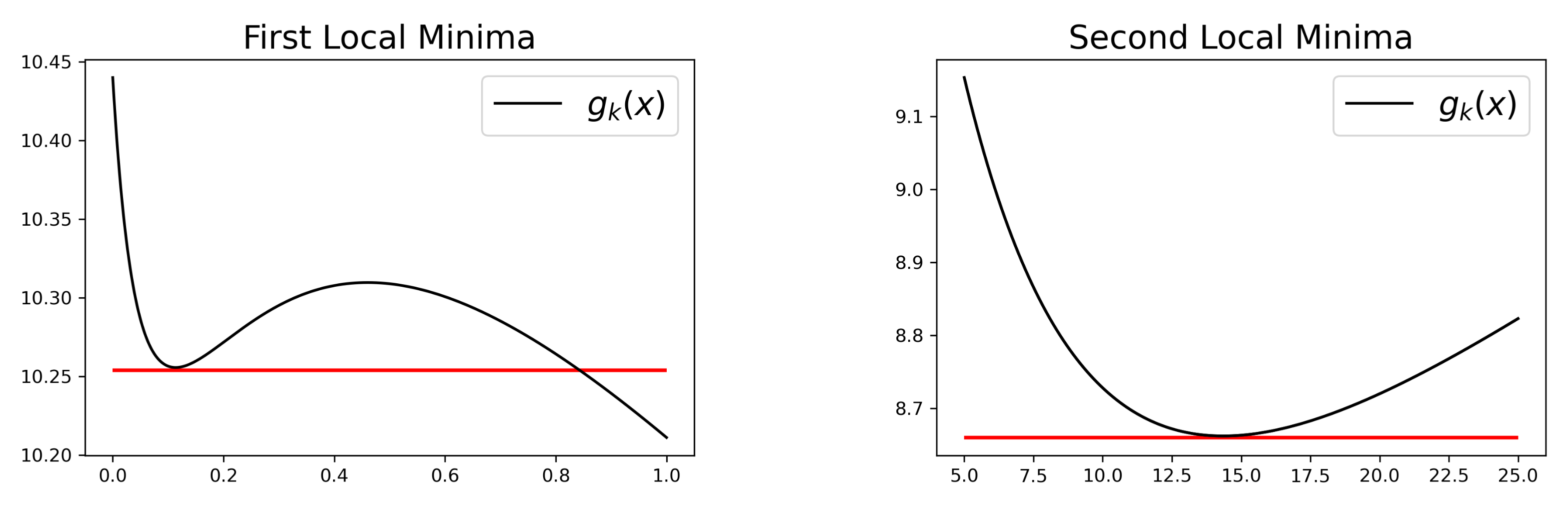

Before initiating Newton’s method, we can check if k is in the active constraint set . Following from Lemma 1, if , then is non-decreasing on . Then, is the global minimum for , and we let if we are in cycle of PX-CD. If , we initiate Newton’s method at the current value of the variance component, . If , we require so that is invertible.

In this case,

cannot be in the active constraint set and we immediately initiate Newton’s method at the starting point

. In rare cases

is sufficiently flat at

and (

14) may significantly overstep the location of the minimizer and return an approximation

. In this case, we dampen the step size until

.
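The procedure of this subsection can be sketched in Python as follows. The sketch assumes a univariate surrogate of the form h(s) = y'(A + sV)^{-1}y + c·s, where A is the positive-definite part of the covariance that is held fixed and c is the trace term supplied by the expanded block; constant factors may differ from the expressions in the paper, and the function names are hypothetical. The CG routine is a plain textbook implementation.

```python
import numpy as np

def conjugate_gradient(S, rhs, tol=1e-12, max_iter=None):
    """Textbook CG for S x = rhs with S symmetric positive definite."""
    x = np.zeros_like(rhs)
    r = rhs.copy()
    p = r.copy()
    rs = r @ r
    threshold = tol * np.sqrt(rs)
    for _ in range(max_iter or 5 * len(rhs)):
        if np.sqrt(rs) <= threshold:
            break
        Sp = S @ p
        alpha = rs / (p @ Sp)
        x += alpha * p
        r -= alpha * Sp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
    return x

def newton_coordinate_update(y, A, V, c, s0, tol=1e-10, max_newton=50):
    def derivatives(s):
        S = A + s * V
        a = conjugate_gradient(S, y)       # a = S^{-1} y
        Va = V @ a
        b = conjugate_gradient(S, Va)      # b = S^{-1} V a
        return c - a @ Va, 2.0 * (Va @ b)  # h'(s), h''(s)

    # Active-constraint check: a non-negative slope at zero means zero is optimal.
    slope0, _ = derivatives(0.0)
    if slope0 >= 0.0:
        return 0.0
    s = s0
    for _ in range(max_newton):
        g, H = derivatives(s)
        step = g / H
        while s - step < 0.0:
            step *= 0.5                    # dampen steps that overshoot below zero
        s_new = s - step
        if abs(s_new - s) < tol:
            return s_new
        s = s_new
    return s

rng = np.random.default_rng(3)
n, r = 30, 3
Z = rng.standard_normal((n, r))
V = Z @ Z.T                                # low-rank PSD matrix (hypothetical data)
A = np.eye(n)
y = np.linalg.cholesky(A + 2.0 * V) @ rng.standard_normal(n)
s_prev = 1.0                               # current value of the variance component
c = np.trace(np.linalg.solve(A + s_prev * V, V))

s_hat = newton_coordinate_update(y, A, V, c, s0=s_prev)

# Dense check of the slope at the returned point.
a_check = np.linalg.solve(A + s_hat * V, y)
slope_at_solution = c - a_check @ V @ a_check
```

Each Newton step costs two CG solves, so no matrix inverse or factorization is formed explicitly.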

3.2. Updating Regime

We now consider an alternative to the cyclic ordering of updates. Suppose we update the block

after every coordinate update; that is, the updating order of one complete cycle is

This ordering regime satisfies the “essentially cyclic” condition whereby, in every stretch of updates, each component is updated at least once. We refer to CD with this ordering as parameter expanded-immediate coordinate descent (PXI-CD).
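The difference between the two orderings can be summarized with a short Python sketch. The labels `"sigma"` and `"block"` are hypothetical names for an original-coordinate update and an expanded-block update, respectively.

```python
def pxcd_order(m):
    """One PX-CD cycle: each original coordinate once, then the expanded block."""
    return [("sigma", k) for k in range(1, m + 1)] + [("block", None)]

def pxicd_order(m):
    """One PXI-CD cycle: the expanded block is refreshed after every coordinate."""
    order = []
    for k in range(1, m + 1):
        order.append(("sigma", k))
        order.append(("block", None))
    return order

cycle_px = pxcd_order(3)
cycle_pxi = pxicd_order(3)
```

In both orderings, every coordinate and the block appear at least once per cycle, so the essentially cyclic condition is met with a window of one cycle.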

In practice, this ordering implies updating the matrix after every update made to each . Since the expression for requires one to evaluate and is updated after every coordinate, we must re-compute for each k. This implies that each cycle in PXI-CD will be more expensive than PX-CD. However, we observe that in situations where is full rank and n is sufficiently large, the number of cycles needed to converge is significantly less than that required for PX-CD and basic CD.

This results in PXI-CD being the most time-efficient algorithm when the scale of the problem is large. In

Section 3.3, we show that, when

is low-rank, re-computing

comes at no additional cost through the use of the Woodbury matrix identity. However, in this particular scenario, where

are low-rank, the performance gain from PXI-CD is not as significant as when

are full rank, and both PXI-CD and PX-CD show similar performance. Algorithm 2 summarizes both PX-CD and PXI-CD methods to obtain

.

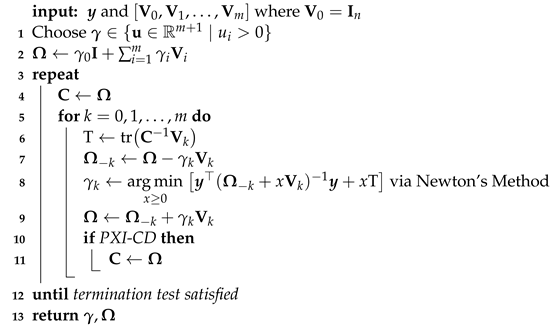

Algorithm 2: PX-CD and PXI-CD (pseudocode listing shown as an image).

As mentioned previously, the novel parameter-expanded coordinate-descent algorithms, PX-CD and PXI-CD, are both amenable to standard convergence analysis.

Theorem 1 (PX-CD and PXI-CD Limit Points). For both PX-CD and PXI-CD in Algorithm 2, let be the coordinate-descent sequence. Then, either , or every limit-point of is a coordinate-wise minimum of (3). If we further assume that , then the sequence is bounded and is ruled out.

Proof. Recall that

and

. Denote

and

as well as

. We can rewrite the optimization problem (

3) in the penalized form:

where

and

We can then apply the results in [

18], which state that every limit point of

is a coordinate-wise minimum provided that:

Each function , and has a unique minimum. For this has already been verified in Lemma 1. For , we simply recall that with equality achieved if and only if , or equivalently .

Each is lower semi-continuous. This is clearly true for because, at the point of discontinuity, we have . For , we simply check that for a given is a closed set.

The domain of is a Cartesian product and is continuous on its domain. Clearly, the domain is the Cartesian product and is continuous on its effective domain .

The updating rule is essentially cyclic—that is, there exists a constant such that every block in is updated at least once between the r-th iteration and the -th iteration for all r. In our case, each block is updated at least once in one iteration of Algorithm 2 so that we satisfy the essentially cyclic condition. In the PXI-CD, we actually update times the block .

Thus, we can conclude by Proposition 5.1 in [

18] that either

or the limit points of

are coordinate-wise minima of

.

If we further assume that

, then we can show that the set

is compact, which ensures that the sequence

is bounded and rules out the possibility that

. To see that this is the case, note that

provides a lower bound to

, and this is sufficient to show that

is compact for any

under the assumption that

and

. However, these are precisely the conditions of Lemma 3 in [

9], which ensure that

is compact for a likelihood

.

Finally, note that since we update the entire block simultaneously (in both PX-CD and PXI-CD), this means that a coordinate-wise minimum of is also a coordinate-wise minimum for . □

We again emphasize that, as with all the competitor methods, the theorem does not guarantee convergence of the coordinate-descent sequence

or that the convergence will be to a local minimum. The only thing we can say for sure is that, when the sequence converges, then the limit will be a coordinate-wise minimum (which could be a saddle point in some special cases). Nevertheless, our numerical experience in

Section 4 is that the sequence always converges to a coordinate-wise minimum and that the coordinate-wise minimum is in fact a (local) minimum.

3.3. Linear Mixed Model Implementation

We now show that, for Linear Mixed Models (LMM), we can reduce the computational complexity of each sub-problem to

, for some constants

and

. As shown in

Section 3.1, solving each univariate sub-problem can be simplified to implementing Newton’s method, where at each Newton’s update, we solve two

n-dimensional linear systems.

In settings where

, for

, we are able to reduce the dimensions of the linear system that is required to be solved. To see this, let us first specify the general variance-component model (also known as the general mixed ANOVA model) [

19]. Suppose,

where

is an

matrix of known fixed numbers,

;

is an

vector of unknown constants;

is a full-rank

matrix of known fixed numbers,

;

is an

vector of independent variables from

, which are unknown and

is an

vector of independent errors from

that are unknown. In this setup,

, are the variance component parameters that are to be estimated,

and

, where

We now provide two methods that take advantage of

being low-rank. Let,

In the first method, we utilize a QR factorization that reduces the computational complexity when , i.e., the column rank of is less than n. In the second method, we use the Woodbury matrix identity to reduce the complexity when for , i.e., the column rank of each of the matrices is less than n.

3.3.1. QR Method

The following QR factorization can be viewed as a data pre-processing step that allows all PX-CD and PXI-CD computations to be

c-dimensional instead of

n-dimensional. The QR factorization only needs to be computed once initially with a cost of

operations. Let the QR factorization of

be

where

is an

upper triangular matrix,

is an

zero matrix,

is an

matrix,

is

, and

and

both have orthogonal columns. The matrix

is partitioned such that the number of columns in

is equal to the number of columns in

. Let

, where

are the first

c elements of

and

are the last

elements of

. Then,

where we define:

,

,

,

and

. The details of this derivation are provided in Appendix A. To implement PX-CD or PXI-CD after this transformation, we run Algorithm 2 with inputs

and

. In this simplification of

H, we have the additional term

, which is dependent on

. Therefore, when we update the parameter

and implement Newton’s method we must also add

to the corresponding derivatives derived for Newton’s method in

Section 3.1.
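The dimension reduction achieved by the QR method can be verified numerically. The Python sketch below assumes a covariance of the form Z D Z' + s·I, with a diagonal matrix D of random-effect variances and an error variance s; these names and values are illustrative assumptions. It checks that both the quadratic form and the log-determinant split into a c-dimensional part plus an extra term that depends only on the error variance.

```python
import numpy as np

rng = np.random.default_rng(4)
n, c = 40, 6
Z = rng.standard_normal((n, c))        # stacked design matrix (hypothetical data)
d = rng.uniform(0.5, 2.0, size=c)      # per-column variances (hypothetical values)
s_err = 0.7                            # error variance (hypothetical value)
y = rng.standard_normal(n)

Sigma = Z @ np.diag(d) @ Z.T + s_err * np.eye(n)

# Full QR of Z: Z = Q [R; 0], with R a c x c upper triangular matrix.
Q, R_full = np.linalg.qr(Z, mode="complete")
R = R_full[:c, :]
y_rot = Q.T @ y
y1, y2 = y_rot[:c], y_rot[c:]

Sigma_small = R @ np.diag(d) @ R.T + s_err * np.eye(c)

# Quadratic form: c-dimensional part plus a term depending only on s_err.
quad_full = y @ np.linalg.solve(Sigma, y)
quad_reduced = y1 @ np.linalg.solve(Sigma_small, y1) + (y2 @ y2) / s_err

# Log-determinant: c-dimensional part plus (n - c) log(s_err).
logdet_full = np.linalg.slogdet(Sigma)[1]
logdet_reduced = np.linalg.slogdet(Sigma_small)[1] + (n - c) * np.log(s_err)
```

After the one-off factorization, all subsequent linear systems involve only c-dimensional matrices, while the extra terms contribute only to the update of the error variance.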

3.3.2. Woodbury Matrix Identity

Alternatively, if

c is large (say

) but individually

, for

, we can use the Woodbury identity to reduce each linear system to

dimensions (instead of

n dimensions) when updating the component

. Suppose we are in cycle

of either PX-CD or PXI-CD and we wish to update the parameter

, where

, then we can simplify the optimization,

by viewing

as a low-rank perturbation to the matrix

. The Woodbury identity gives the expression for the inverse,

which contains the unperturbed inverse matrix

and the inverse of a smaller

matrix. In this implementation of PXI-CD and PX-CD we re-compute and store the matrix

after each coordinate update. Let

, then the line search along the

k-th component (

) of the function

H simplifies to

When implementing PXI-CD, there is no additional cost when using the Woodbury identity, as the update

is made after every coordinate update and the trace term in the line search can be evaluated cheaply because

is known. We can now implement Newton’s method to find the minimum of

. Let

If we then solve the

-dimensional linear systems

and

with CG and store the solution in the vectors

and

, respectively, we can evaluate the first and second derivatives of

as

and implement the Newton steps (

14). The derivation of these derivative expressions is provided in Appendix B. After each coordinate

k is updated we evaluate and store the updated inverse covariance matrix,

using (

20), where we invert a smaller

matrix only. When

, no reduction in complexity can be made as the perturbation to

is full-rank and we update

as we did in

Section 3.1. If

, then we can use an alternate form of the Woodbury identity,

where

to update

after

has been updated. If

, we invert the full

updated covariance matrix to obtain

using a Cholesky factorization at cost

. This

cost for updating

is a disadvantage for this implementation if

; however, numerical simulations suggest that, when

for

, the Woodbury implementation is the fastest implementation.
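As a quick check of the low-rank update, the following Python sketch compares the Woodbury form of the inverse with a direct inversion. It assumes that the updated component enters the covariance as s·ZZ' with a thin matrix Z; the names `A`, `Z` and `s` and the chosen sizes are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
n, r = 50, 4
M = rng.standard_normal((n, n))
A = M @ M.T / n + np.eye(n)            # stands in for the unperturbed covariance
A_inv = np.linalg.inv(A)               # assumed to be stored from the previous update
Z = rng.standard_normal((n, r))
s = 1.3                                # variance component being updated (hypothetical)

# Woodbury: (A + s Z Z')^{-1} = A^{-1} - A^{-1} Z (I/s + Z' A^{-1} Z)^{-1} Z' A^{-1}
AZ = A_inv @ Z
small = np.eye(r) / s + Z.T @ AZ       # only an r x r system is solved
woodbury_inv = A_inv - AZ @ np.linalg.solve(small, AZ.T)

direct_inv = np.linalg.inv(A + s * Z @ Z.T)
max_abs_diff = np.abs(woodbury_inv - direct_inv).max()
```

Only the small r-by-r system depends on the new value of s, which is why the stored inverse can be refreshed cheaply after each coordinate update.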

3.4. Variable Selection

When the number of variance components is large, performing variable selection can enhance the model interpretation and provide more stable parameter estimation. To impose sparsity when estimating

, a lasso or ridge penalty can be added to the negative log-likelihood [

20]. The MM implementation [

9] provides modifications to the MM algorithm such that both lasso and ridge penalized expressions can be minimized. We now show that, with PX-CD, we can minimize the penalized negative log-likelihood when using the

penalty. Consider the penalized negative log-likelihood expression,

We then have the surrogate function,

If we use PX-CD to minimize

J, we need to repeatedly minimize the one-dimensional function along each coordinate,

. Here, we implement Newton’s method as before with the only difference now being that the derivative is increased by the constant

,

It follows from Lemma 1 that is either strictly convex or linear with a strictly positive gradient for . We check if to determine if is the global minimizer for . If it is, we let if we are in cycle . If , we initiate Newton’s method at the current value of the variance component . The larger the parameter is, the more often this active-constraint condition will be met, and therefore the more variance components will be set to zero.

4. Numerical Results

In this section, we assess the efficiency of PX-CD and PXI-CD via simulation and compare them against the best current alternative method, the MM algorithm [

9]. In [

9] the MM algorithm is found to be superior to both the EM and Fisher Scoring Method in terms of performance. This superior performance was also described in [

21].

In our experiments, we additionally include the Expectation–Maximization (EM) and Fisher-Scoring (FS) methods in the small-scale problem only, where

. We exclude the EM and FS methods for more difficult problems, as they are too slow to be practical. The MM, EM and FS methods are executed with the Julia implementation in [

9]. We provide results in three settings. First, we simulate data from the model (

Section 3.3), where

, i.e., when the matrices

are low-rank. Secondly, we simulate when

, i.e., the matrices

are full-rank, and finally, we simulate data from model (

Section 3.3), where the matrices

are constructed from a real data-set containing genetic variants of mice.

4.1. Simulations

For the following simulations, we simulate data from the model (

Section 3.3). Since the fixed effects

can always be eliminated from the model using the REML, we focus solely on the estimation of the variance component parameters. In other words, the value of

in our simulations is irrelevant. In each simulation, we generate the fixed matrices

as

where

and

is the Frobenius matrix norm. The rank of each

is equal to the parameter

r, which we vary.

In each simulation, for , we draw the m true variance components as where . Then, we simulate the response from and estimate the vector . We vary the value of from the set and keep .
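A minimal Python sketch of this simulation setup is given below. It assumes that each fixed matrix is built from an n-by-r matrix with standard normal entries and scaled to unit Frobenius norm, and that the last matrix is the identity; the variance-component values are hypothetical placeholders rather than the draws used in our experiments.

```python
import numpy as np

def simulate_V(rng, n, r):
    """Rank-r PSD matrix with unit Frobenius norm (assumed construction)."""
    Z = rng.standard_normal((n, r))    # assumption: i.i.d. standard normal entries
    V = Z @ Z.T
    return V / np.linalg.norm(V, "fro")

rng = np.random.default_rng(6)
n, m, r = 30, 4, 5

# Assumption: the last matrix is the identity, matching an i.i.d. error term.
Vs = [simulate_V(rng, n, r) for _ in range(m - 1)] + [np.eye(n)]
sigma2 = np.array([0.5, 0.0, 2.0, 1.0])   # hypothetical true variance components

# Covariance of the response and one simulated response vector y ~ N(0, Sigma).
Sigma = sum(s * V for s, V in zip(sigma2, Vs))
y = np.linalg.cholesky(Sigma) @ rng.standard_normal(n)
```

Setting one of the true components to zero, as in this sketch, gives a case in which the active constraint set is non-empty.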

4.1.1. Low-Rank

We now present the results for where are generated as stated above and . As are low-rank, we run the Woodbury implementation of PXI-CD and exclude PX-CD as it has the same computational cost as PXI-CD for each update and exhibits almost identical performance. First, PXI-CD is run until the relative change is less than and we store the final objective value as . We then run the algorithms MM, EM and FS and terminate once . We initialize all algorithms to start from the point . Each simulation scenario is replicated 10 times. The mean running time is reported along with the standard error of the mean running time provided in parentheses.

The results of the low-rank simulations are given in

Table 1,

Table 2 and

Table 3. The results indicate that, apart from the smallest scale problem when

, our PXI-CD algorithm outperforms the MM algorithm, and increasingly so as the scale of the model (both

m and

r) increases.

4.1.2. Full-Rank

We now present the results when . We implement the standard PX-CD, PXI-CD and MM algorithms, since neither the Woodbury nor the QR method can be used. Initially, PX-CD is run until the relative change is less than , and we store the final objective value as . The other algorithms terminate once . For the following simulations, we consider one iteration of a CD algorithm as a single cycle of updates. Each simulation scenario is replicated 10 times. The mean running time and mean iteration number are reported, with their standard errors provided in parentheses.

The results of the full-rank simulations are given in

Table 4,

Table 5 and

Table 6. PX-CD and PXI-CD both significantly outperform the MM and the basic CD algorithms in these examples. We observe that as the number of components

m increases the problem becomes increasingly difficult for the MM algorithm. An intuitive explanation for this performance gap is that the CD algorithms are able to identify the active constraint set

in only a few cycles.

When and and m is large (, ), PXI-CD is the fastest algorithm, even though it is computationally the most expensive per cycle. When and , PXI-CD is the fastest algorithm. In fact, as the problem size grows, the number of iterations that PXI-CD requires to converge is less than that of the basic CD. This simulation indicates that PXI-CD is well-suited to problems with large and when are full-rank. The basic CD algorithm, while numerically inferior to the PX-CD and PXI-CD algorithms, still outperforms the MM algorithm in these simulations.

4.2. Genetic Data

We now present simulation results when

are constructed from the mouse single nucleotide polymorphism (SNP) array data set (https://openmendel.github.io/SnpArrays.jl/latest/#Example-data, accessed on 1 July 2022) available from the Open Mendel project [

21]. The dataset consists of

, an

matrix consisting of

c genetic variants for

n individual mice. For this experiment,

c = 10,200 and

. We artificially generate

m different genetic regions by partitioning the columns of

into

gene matrices, where

. Then, we can compose our fixed matrices

as,

We simulate

and

as we did in

Section 4.1. In this case,

mimics a vector of quantitative trait measurements of the

n mice. This data set is well-suited for testing our method when

m is large (

. In these cases, we observe that, when initialized at the same point, the MM and PXI-CD methods converge to different solutions, i.e., they may converge to different stationary points. Therefore, we run all algorithms until the relative change

is less than

. Since

, we implement PXI-CD utilizing the Woodbury identity. Each simulation scenario is replicated 10 times. The mean running time and mean iteration number are reported with the standard error of the mean running time and mean iteration provided in parentheses.

The results of the genetic study simulation are provided in

Table 7. We observe that PXI-CD outperforms the MM algorithm for all values of

m and

r for this data set in both the number of iterations and running time until convergence. When

, we observe that the MM and PXI-CD methods converge to noticeably different objective values. We suspect that this is because when

the likelihood in (

2) exhibits many more local minima. On average, PXI-CD converges to a stationary point with a better objective value when

m is large and

.

5. Conclusions

The MLE solution for variance-component models requires the optimization of a non-convex log-likelihood function. In this paper, we showed that a basic implementation of the cyclic CD algorithm is computationally expensive to run and is not amenable to traditional convergence analysis.

To remedy this, we proposed a novel parameter-expanded CD (PX-CD) algorithm, which is both computationally faster and also subject to theoretical guarantees. PX-CD optimizes a higher-dimensional surrogate function that attains a coordinate-wise minimum with respect to each of the variance component parameters. The extra speed is derived from the fact that required quantities (such as first and second-order derivatives) are evaluated via the conjugate-gradient algorithm.

Additionally, we proposed an alternative updating regime called PXI-CD, where the expanded block parameters are updated immediately after each coordinate update. This new updating regime requires more computation for each iteration as compared to PX-CD. However, numerically, we observed that, for large-scale models, where the number of variance components is large and are full-rank, the reduction in the number of iterations needed to converge more than offsets the additional computational cost per coordinate update cycle.

Our numerical experiments suggest that PX-CD and PXI-CD outperform the best current alternative, the MM algorithm. When the number of variance components m is large, we observed that PXI-CD was significantly faster than the MM algorithm and tended to converge to stationary points with better objective values.

A potential extension of this work is to apply parameter-expanded CD algorithms to the multivariate-response variance-component model. Instead of the univariate response, one considers the multivariate response model with a

response matrix

. In this setup,

where

is a

matrix. The

covariance matrix is of the form

where

are unknown

variance components and

are the known

covariance matrices. The challenging aspect of this problem is that each optimization with respect to a parameter

is not univariate but is rather a search over positive semi-definite matrices—itself, a difficult optimization problem.