Pendulum Search Algorithm: An Optimization Algorithm Based on Simple Harmonic Motion and Its Application for a Vaccine Distribution Problem

Abstract

1. Introduction

2. Related Works

3. Pendulum Search Algorithm

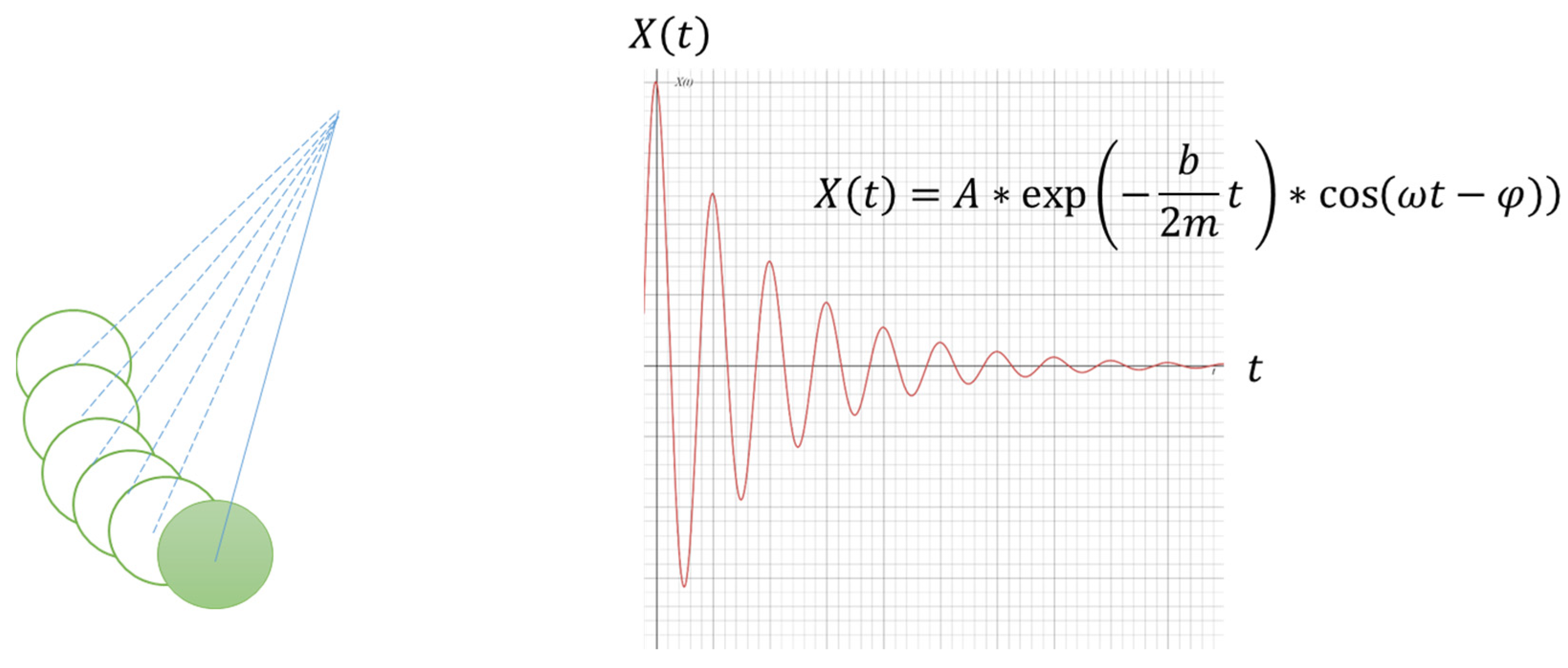

3.1. Source of Inspiration

3.2. The Algorithm

| Algorithm 1. Pseudocode of PSA |
|---|
| Initialize the agents’ parameters and positions randomly. |
| For i = 1 to maximum iteration |
| For each agent |
| Update the agent using Equations (1) and (2) |
| Evaluate the agent’s fitness |
| End (for each agent) |
| Identify the best agent |
| End (for i) |
| Solution: best agent |
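The loop in Algorithm 1 can be sketched in Python as follows. Equations (1) and (2) are not reproduced in this text, so the update rule below is an assumption: each coordinate moves relative to the best agent by a damped cosine coefficient, `pend = 2 * exp(-t / t_max) * cos(2 * pi * rand)`. The function name `pendulum_search`, its parameters, the bound clamping, and the best-so-far bookkeeping are illustrative choices rather than details taken from the paper.

```python
import math
import random


def pendulum_search(objective, dim, lower, upper, n_agents=30, max_iter=500, seed=0):
    """Minimize `objective` over [lower, upper]^dim with a PSA-style loop (sketch)."""
    rng = random.Random(seed)

    # Initialize the agents' positions randomly inside the search bounds.
    agents = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_agents)]
    fitness = [objective(a) for a in agents]
    idx = min(range(n_agents), key=fitness.__getitem__)
    best, best_fit = agents[idx][:], fitness[idx]

    for t in range(1, max_iter + 1):
        damping = 2.0 * math.exp(-t / max_iter)  # amplitude shrinks over time
        for i, x in enumerate(agents):
            for j in range(dim):
                # ASSUMED form of Equation (2): damped cosine coefficient.
                pend = damping * math.cos(2.0 * math.pi * rng.random())
                # ASSUMED form of Equation (1): swing relative to the best agent.
                x[j] += pend * (best[j] - x[j])
                # Keep the agent inside the bounds (illustrative choice).
                x[j] = min(max(x[j], lower), upper)
            fitness[i] = objective(x)

        # Identify the best agent, keeping the best solution seen so far.
        idx = min(range(n_agents), key=fitness.__getitem__)
        if fitness[idx] < best_fit:
            best, best_fit = agents[idx][:], fitness[idx]

    return best, best_fit


if __name__ == "__main__":
    def sphere(x):
        return sum(v * v for v in x)

    solution, value = pendulum_search(sphere, dim=2, lower=-5.0, upper=5.0)
    print(solution, value)
```

The sphere function in the usage block is only a stand-in objective; any callable that maps a list of floats to a scalar cost can be passed instead.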

4. Experiment, Results & Discussion

4.1. Optimization of Benchmark Problems

4.1.1. Number of Agents

4.1.2. PSA vs PSO and SCA

4.2. PSA for Vaccine Distribution Optimization

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Function | Name | Ideal Fitness |
|---|---|---|
| f4 | Shifted and Rotated Rosenbrock’s Function | 400 |
| f5 | Shifted and Rotated Ackley’s Function | 500 |
| f6 | Shifted and Rotated Weierstrass Function | 600 |
| f7 | Shifted and Rotated Griewank’s Function | 700 |
| f8 | Shifted Rastrigin’s Function | 800 |
| f9 | Shifted and Rotated Rastrigin’s Function | 900 |
| f10 | Shifted Schwefel’s Function | 1000 |
| f11 | Shifted and Rotated Schwefel’s Function | 1100 |
| f12 | Shifted and Rotated Katsuura Function | 1200 |
| f13 | Shifted and Rotated HappyCat Function | 1300 |
| f14 | Shifted and Rotated HGBat Function | 1400 |
| f15 | Shifted and Rotated Expanded Griewank’s plus Rosenbrock’s Function | 1500 |
| f16 | Shifted and Rotated Expanded Scaffer’s F6 Function | 1600 |

| Function | 10 agents | 20 agents | 30 agents | 40 agents | 50 agents |
|---|---|---|---|---|---|
| f4 | 400.0557 | 400.0003 | 400.001 | 400.0008 | 400.0013 |
| f5 | 519.997 | 519.996 | 519.9923 | 519.983 | 519.9913 |
| f6 | 601.5922 | 601.0678 | 600.3217 | 600.3587 | 600.0771 |
| f7 | 700.0595 | 700.027 | 700.0418 | 700.0591 | 700.0517 |
| f8 | 801.9904 | 800 | 800 | 800 | 800 |
| f9 | 905.9708 | 905.9698 | 904.9748 | 905.9698 | 904.9748 |
| f10 | 1007.031 | 1000.375 | 1000.25 | 1003.602 | 1000.25 |
| f11 | 1247.431 | 1226.78 | 1140.238 | 1222.493 | 1131.949 |
| f12 | 1200.059 | 1200.006 | 1200.012 | 1200.01 | 1200.017 |
| f13 | 1300.138 | 1300.132 | 1300.1 | 1300.043 | 1300.137 |
| f14 | 1400.171 | 1400.144 | 1400.123 | 1400.079 | 1400.052 |
| f15 | 1500.94 | 1500.575 | 1500.499 | 1500.578 | 1500.417 |
| f16 | 1602.263 | 1602.072 | 1602.032 | 1601.511 | 1601.108 |
| Friedman Rank | 5 | 2.9615 | 2.3462 | 2.5769 | 2.1154 |
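The Friedman Rank row is the mean, over the 13 functions, of each configuration's within-function rank (1 = lowest fitness, ties share the average rank). The sketch below recomputes it from the values as printed in the table; the helper names `average_ranks` and `friedman_mean_ranks` are illustrative. Because the table shows rounded values, the result need not match the published row exactly: the 20-agent and 40-agent columns are tied on f9 at the displayed precision, so their recomputed ranks can differ slightly from the reported ones.

```python
AGENT_COUNTS = [10, 20, 30, 40, 50]

# Fitness values copied from the table above (one row per function).
RESULTS = {
    "f4": [400.0557, 400.0003, 400.001, 400.0008, 400.0013],
    "f5": [519.997, 519.996, 519.9923, 519.983, 519.9913],
    "f6": [601.5922, 601.0678, 600.3217, 600.3587, 600.0771],
    "f7": [700.0595, 700.027, 700.0418, 700.0591, 700.0517],
    "f8": [801.9904, 800, 800, 800, 800],
    "f9": [905.9708, 905.9698, 904.9748, 905.9698, 904.9748],
    "f10": [1007.031, 1000.375, 1000.25, 1003.602, 1000.25],
    "f11": [1247.431, 1226.78, 1140.238, 1222.493, 1131.949],
    "f12": [1200.059, 1200.006, 1200.012, 1200.01, 1200.017],
    "f13": [1300.138, 1300.132, 1300.1, 1300.043, 1300.137],
    "f14": [1400.171, 1400.144, 1400.123, 1400.079, 1400.052],
    "f15": [1500.94, 1500.575, 1500.499, 1500.578, 1500.417],
    "f16": [1602.263, 1602.072, 1602.032, 1601.511, 1601.108],
}


def average_ranks(values):
    """Rank values ascending (1 = smallest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next value equals the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        mean_rank = (pos + end) / 2 + 1  # mean of the 1-based positions pos+1..end+1
        for k in order[pos:end + 1]:
            ranks[k] = mean_rank
        pos = end + 1
    return ranks


def friedman_mean_ranks(results):
    """Average each column's rank over all rows (functions)."""
    rows = [average_ranks(row) for row in results.values()]
    n_cols = len(rows[0])
    return [sum(row[c] for row in rows) / len(rows) for c in range(n_cols)]


if __name__ == "__main__":
    for count, rank in zip(AGENT_COUNTS, friedman_mean_ranks(RESULTS)):
        print(f"{count} agents: mean rank {rank:.4f}")
```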

| i | Number of Agents | p | Holm |
|---|---|---|---|
| 4 | 10 | 0.000003 | 0.0125 |
| 3 | 20 | 0.172447 | 0.016667 |
| 2 | 40 | 0.45675 | 0.025 |
| 1 | 30 | 0.709815 | 0.05 |
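The Holm column equals 0.05 / i, which is the threshold sequence of Holm's step-down procedure at a significance level of 0.05. A minimal sketch of that procedure, applied to the p-values in the table, is given below. The function name `holm_step_down` is illustrative, and the reading that the 50-agent configuration serves as the control is an assumption based on its absence from the table.

```python
def holm_step_down(p_values, alpha=0.05):
    """Holm's step-down test.

    Returns {label: (p, threshold, rejected)}. Hypotheses are visited from the
    smallest p-value upward; once one fails its threshold, all later ones are
    retained as well.
    """
    ordered = sorted(p_values.items(), key=lambda item: item[1])
    m = len(ordered)
    outcome = {}
    still_rejecting = True
    for k, (label, p) in enumerate(ordered):
        threshold = alpha / (m - k)  # alpha/m, alpha/(m-1), ..., alpha/1
        still_rejecting = still_rejecting and p <= threshold
        outcome[label] = (p, threshold, still_rejecting)
    return outcome


# p-values copied from the table above, keyed by number of agents.
P_VALUES = {10: 0.000003, 20: 0.172447, 40: 0.45675, 30: 0.709815}

if __name__ == "__main__":
    for agents, (p, threshold, rejected) in holm_step_down(P_VALUES).items():
        verdict = "significant" if rejected else "not significant"
        print(f"{agents} agents: p={p:.6f}, threshold={threshold:.6f}, {verdict}")
```

Under this reading, only the 10-agent configuration differs significantly from the control; the 20-, 30-, and 40-agent configurations do not.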

| Function | PSA Min | PSA Max | PSA Mean | PSA Std Dev | PSO Min | PSO Max | PSO Mean | PSO Std Dev | SCA Min | SCA Max | SCA Mean | SCA Std Dev |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| f4 | 400.01 | 438.72 | 421.30 | 17.32 | 400.15 | 442.94 | 429.58 | 16.54 | 431.09 | 490.65 | 458.46 | 14.61 |
| f5 | 519.83 | 520.00 | 519.99 | 0.03 | 520.11 | 520.45 | 520.29 | 0.08 | 517.81 | 520.56 | 520.32 | 0.48 |
| f6 | 600.03 | 606.29 | 602.33 | 1.46 | 600.00 | 606.87 | 601.35 | 1.51 | 604.51 | 609.45 | 606.50 | 1.30 |
| f7 | 700.03 | 700.66 | 700.22 | 0.16 | 700.03 | 700.23 | 700.13 | 0.06 | 706.04 | 718.41 | 711.08 | 3.57 |
| f8 | 800.00 | 802.98 | 800.83 | 0.79 | 800.99 | 803.98 | 802.50 | 0.96 | 827.23 | 855.40 | 839.57 | 7.99 |
| f9 | 902.98 | 924.87 | 911.18 | 5.96 | 903.98 | 916.47 | 909.30 | 3.21 | 935.95 | 957.31 | 943.53 | 6.08 |
| f10 | 1003.41 | 1140.72 | 1026.81 | 40.76 | 1003.72 | 1365.82 | 1133.64 | 113.59 | 1744.35 | 2388.44 | 1994.61 | 173.71 |
| f11 | 1225.66 | 2166.99 | 1574.51 | 214.47 | 1106.89 | 1881.69 | 1482.65 | 198.60 | 1658.50 | 2876.79 | 2400.37 | 279.23 |
| f12 | 1200.03 | 1200.24 | 1200.11 | 0.06 | 1200.08 | 1201.40 | 1200.61 | 0.38 | 1200.91 | 1201.72 | 1201.30 | 0.19 |
| f13 | 1300.13 | 1300.48 | 1300.29 | 0.11 | 1300.06 | 1300.22 | 1300.13 | 0.04 | 1300.50 | 1300.84 | 1300.62 | 0.08 |
| f14 | 1400.07 | 1400.84 | 1400.32 | 0.20 | 1400.07 | 1400.32 | 1400.15 | 0.06 | 1400.38 | 1401.52 | 1400.92 | 0.34 |
| f15 | 1500.45 | 1503.31 | 1501.54 | 0.76 | 1500.49 | 1502.69 | 1501.16 | 0.45 | 1505.01 | 1513.12 | 1507.42 | 1.64 |
| f16 | 1601.24 | 1603.22 | 1602.39 | 0.56 | 1600.74 | 1603.14 | 1602.15 | 0.48 | 1602.72 | 1603.79 | 1603.37 | 0.22 |
| Friedman Rank | 1.3846 | | | | 1.7692 | | | | 2.8462 | | | |

| i | Algorithm | p | Holm |
|---|---|---|---|
| 2 | SCA | 0.000194 | 0.025 |
| 1 | PSO | 0.3268 | 0.05 |

| | | | | |
|---|---|---|---|---|
| 8.27 | 1.395 | 4.165 | 1.51 | 0.715 |
| 1.395 | 5.65 | 2.385 | 1.83 | 0.895 |
| 4.167 | 2.385 | 6.55 | 3.425 | 1.383 |
| 1.51 | 1.83 | 3.425 | 4.2 | 2.055 |
| 0.715 | 0.895 | 1.383 | 2.055 | 2.66 |
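The 5 × 5 table above appears to be a contact matrix between the five age groups; that reading is an assumption, since the caption and the row and column labels did not survive. If the matrix is intended to be symmetric, a quick check such as the sketch below lists the entry pairs that disagree. The names `CONTACTS` and `asymmetric_entries` are illustrative.

```python
# Values copied from the table above, row by row.
CONTACTS = [
    [8.27, 1.395, 4.165, 1.51, 0.715],
    [1.395, 5.65, 2.385, 1.83, 0.895],
    [4.167, 2.385, 6.55, 3.425, 1.383],
    [1.51, 1.83, 3.425, 4.2, 2.055],
    [0.715, 0.895, 1.383, 2.055, 2.66],
]


def asymmetric_entries(matrix, tol=1e-9):
    """Return (i, j, m[i][j], m[j][i]) for every pair i < j that differs by more than tol."""
    n = len(matrix)
    return [
        (i, j, matrix[i][j], matrix[j][i])
        for i in range(n)
        for j in range(i + 1, n)
        if abs(matrix[i][j] - matrix[j][i]) > tol
    ]


if __name__ == "__main__":
    for i, j, upper, lower in asymmetric_entries(CONTACTS):
        print(f"rows {i + 1} and {j + 1}: {upper} vs {lower}")
```

With the values as printed, the only mismatch is between the first and third groups (4.165 versus 4.167); whether that difference is intentional cannot be determined from the table alone.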

| Age Group | | | | |
|---|---|---|---|---|
| 0.94 m | 0.434 | 0.25 | 0.334 | |
| 0.94 m | 0.158 | 0.25 | 0.334 | |
| 2.30 m | 0.118 | 0.25 | 0.334 | |
| 1.86 m | 0.046 | 0.25 | 0.334 | |
| 0.85 m | 0.046 | 0.25 | 0.334 | |

| Parameter | Value |
|---|---|
| No. of vaccines | 0.3 m (5%), 0.6 m (10%), 1.2 m (20%) |
| Administered day | 50 |
| Outbreak duration | 300 |
| | {0, 30, 0, 0, 0} |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ab. Aziz, N.A.; Ab. Aziz, K. Pendulum Search Algorithm: An Optimization Algorithm Based on Simple Harmonic Motion and Its Application for a Vaccine Distribution Problem. Algorithms 2022, 15, 214. https://doi.org/10.3390/a15060214
