Abstract

With the rapid development of autonomous driving technology, ensuring the safety and reliability of vehicles under various complex and adverse conditions has become increasingly important. Although autonomous driving algorithms perform well in regular driving scenarios, they still face significant challenges when dealing with adverse weather conditions, unpredictable traffic rule violations (such as jaywalking and aggressive lane changes), inadequate blind spot monitoring, and emergency handling. This review aims to comprehensively analyze these critical issues, systematically review current research progress and solutions, and propose further optimization suggestions. By deeply analyzing the logic of autonomous driving algorithms in these complex situations, we hope to provide strong support for enhancing the safety and reliability of autonomous driving technology. Additionally, we will comprehensively analyze the limitations of existing driving technologies and compare Advanced Driver Assistance Systems (ADASs) with Full Self-Driving (FSD) to gain a thorough understanding of the current state and future development directions of autonomous driving technology.

1. Introduction

The development of autonomous driving technology has made significant strides over the past few years. However, the reliability and safety of these systems under adverse weather conditions remain critical challenges. Adverse weather, such as rain, snow, and fog, can significantly impact the performance of environmental perception sensors, thereby increasing the operational risks of autonomous driving systems [1,2]. In particular, optical sensors, such as cameras and LiDAR, experience a marked decline in perception capabilities under these conditions, leading to reduced accuracy in object detection and decision-making robustness [3,4]. Studies have shown that under conditions like heavy rain, snowstorms, or dense fog, LiDAR sensors may mistakenly detect airborne droplets or snowflakes, which not only affects the overall accuracy of the perception system but can also lead the autonomous driving system to make erroneous decisions [5,6]. Additionally, adverse weather conditions can cause slippery road surfaces, increasing braking distances and further complicating the operation of autonomous driving systems [7].

To address these challenges, recent research has focused on developing new algorithms and technologies to enhance the performance of autonomous driving systems in complex environments. For instance, multi-sensor data fusion techniques, which combine data from different sensors, have significantly improved object detection accuracy in adverse weather conditions [8,9]. Moreover, deep learning models such as ResNet-50 and YOLOv8 have demonstrated excellent performance in processing images and point cloud data under challenging weather conditions [10,11,12]. Building on multi-sensor fusion, vehicle-to-infrastructure cooperative perception systems are considered a critical approach to improving the perception capabilities of autonomous driving systems in the future [13,14]. By integrating perception data from vehicles and infrastructure, this method can significantly expand the scope of environmental perception and provide more stable perception information under adverse weather conditions [15]. Research has shown that cooperative perception systems outperform traditional single-sensor systems in snowy and rainy conditions [16,17].

Table 1 compares the algorithmic focus and capabilities across different levels of autonomous driving, from Level 0 (fully manual) to Level 5 (full automation). As the level of autonomy increases, the complexity of the algorithms involved also increases, shifting from essential driver assistance features at Level 1 to advanced deep learning and AI-based decision-making at Levels 4 and 5 [10,11,12,13,14,15]. The table highlights the progression from simple rule-based systems to sophisticated AI-driven models capable of handling a wide range of driving tasks independently, reflecting the increasing reliance on machine learning, sensor fusion, and predictive control as vehicles move toward full autonomy.

Table 1.

Comparison of algorithms across autonomous driving levels.

Despite these technological advancements, further research is needed to tackle the multiple challenges of complex environments. For example, optimizing multi-sensor data fusion algorithms, designing more robust deep learning models, and developing training datasets specifically tailored to adverse weather are critical future research directions [18,19,20]. Moreover, validating perception systems in real-world adverse weather conditions is crucial. Researchers have developed several datasets, such as SID and DAWN2020, to test and validate these algorithms in complex environments [21,22,23]. These datasets encompass various road, vehicle, and pedestrian scenarios under different weather conditions, providing a solid foundation for algorithm training and evaluation [24,25,26]. The reliability and safety of autonomous driving technology under adverse weather conditions remain critical challenges for its widespread adoption. By conducting in-depth research and optimization on these issues, a more solid technical foundation can be provided for the safe operation of autonomous driving systems under extreme conditions [27,28,29,30].

This study aims to thoroughly explore the performance and challenges of autonomous driving technology under adverse weather conditions. Although autonomous driving systems can perform well in ideal environments, their reliability and safety are still significantly challenged when faced with complex environmental factors such as heavy rain, snow, and fog. These adverse conditions further impact the accuracy of the system’s decision-making and driving safety. The primary goal of this study is to comprehensively evaluate the performance of vital sensors and algorithms used in autonomous driving systems under adverse weather, identifying their strengths and limitations in various extreme conditions. Based on this, the study will propose optimization strategies through deep learning models and multi-sensor data fusion techniques to enhance autonomous driving systems’ perception and decision-making capabilities in complex environments. Finally, the study will validate the proposed algorithms using the latest datasets to ensure their applicability and robustness in real-world scenarios. Through these efforts, this study aims to provide strong support for improving the reliability of autonomous driving technology under adverse weather conditions and promoting its broader application and development.

The remainder of the paper is structured as follows: Section 2 delves into the challenges and solutions for autonomous driving in adverse weather conditions. Section 3 addresses algorithms for managing unpredictable traffic violations and concentrates on blind spot monitoring and object detection, suggesting improvements for greater accuracy. Section 4 investigates emergency maneuver strategies and their real-time decision-making processes. Section 5 provides a comparative analysis of Advanced Driver Assistance Systems (ADASs) and Full Self-Driving (FSD) systems, highlighting key differences in logic and outcomes. Finally, Section 6 offers conclusions and outlines potential future research directions.

2. Autonomous Driving in Adverse Weather Conditions

Adverse weather conditions pose significant challenges to the reliable and safe operation of autonomous vehicles. These conditions, including rain, snow, fog, and extreme temperatures, can severely impact the performance of various sensors and systems crucial for autonomous driving. This section explores the effects of adverse weather on different sensor types and discusses the implications for autonomous vehicle operation.

2.1. Impact of Adverse Weather on Sensor Performance

2.1.1. Optical Sensors (Cameras, LiDAR) in Rain, Snow, and Fog

Optical sensors, particularly cameras and Light Detection and Ranging (LiDAR) systems, are fundamental components of autonomous vehicle perception systems. However, their performance can be significantly compromised in adverse weather conditions.

Cameras: Visual cameras are susceptible to various weather-related challenges. Rasshofer et al. [31] developed a method to model and simulate rain for testing automotive sensor systems, highlighting the need for robust testing environments. Yang et al. [32] found that snow on the car can significantly reduce visual performance, potentially impacting camera-based systems in autonomous vehicles.

LiDAR: LiDAR systems, which use laser pulses to measure distances and create 3D maps of the environment, also face significant challenges in adverse weather. Han et al. [33] conducted a comprehensive study on the influence of weather on automotive LiDAR sensors, demonstrating that different weather conditions can significantly affect LiDAR performance.

Fog presents a particular challenge for LiDAR systems. Lee et al. [34] performed benchmark tests for LiDAR sensors in fog, revealing that detection capabilities can break down under dense fog conditions. Ehrnsperger et al. [35] further investigated the influences of various weather phenomena on automotive laser radar systems, providing insights into the limitations of these sensors in adverse conditions.

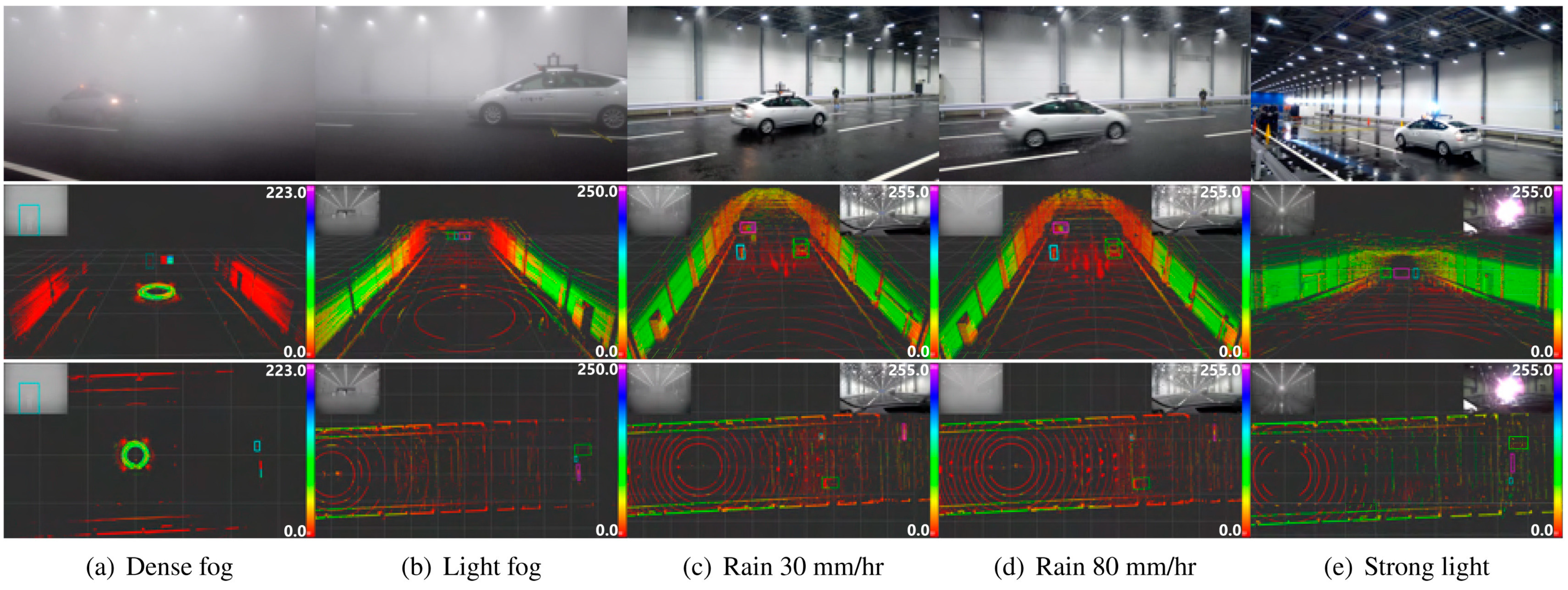

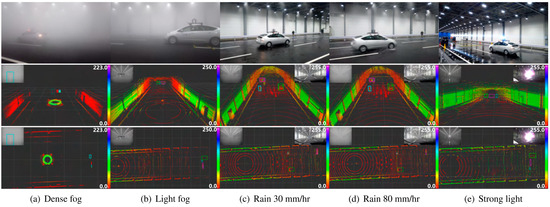

Rain also poses significant challenges for LiDAR systems. Chowdhuri et al. [37] developed a method to predict the influence of rain on LiDAR in Advanced Driver Assistance Systems (ADASs), emphasizing the need for adaptive algorithms in autonomous driving systems.

Figure 1 compares the perception capabilities of autonomous driving systems across various adverse weather conditions. It shows real-world scenes (top row), visual perception results (middle row), and LiDAR point cloud data (bottom row) for five scenarios: (a) dense fog, (b) light fog, (c) moderate rain (30 mm/h), (d) heavy rain (80 mm/h), and (e) intense light. The visual representations demonstrate how different weather conditions affect the system’s ability to detect and interpret its environment, highlighting the challenges in developing robust autonomous driving technologies for diverse weather conditions [36].

Figure 1.

Perception capabilities of autonomous driving systems in adverse weather conditions [36].
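The spurious LiDAR returns caused by airborne droplets or snowflakes tend to be sparse and isolated, whereas returns from solid objects form dense clusters. The following is a minimal sketch of a radius-based outlier filter that exploits this difference. It is an illustrative technique, not a method taken from the studies cited above; the function name, the `radius` and `min_neighbors` values, and the sample point cloud are all assumptions.

```python
import math


def radius_outlier_filter(points, radius=0.5, min_neighbors=2):
    """Keep points with at least `min_neighbors` other points within `radius` metres.

    Brute-force O(n^2) search, adequate only for small illustrative clouds.
    """
    kept = []
    for i, p in enumerate(points):
        neighbors = 0
        for j, q in enumerate(points):
            if i != j and math.dist(p, q) <= radius:
                neighbors += 1
                if neighbors >= min_neighbors:
                    break
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept


# Hypothetical cloud: a dense cluster (e.g., a vehicle surface) plus two
# isolated returns standing in for snowflakes or droplets.
cloud = [
    (10.0, 0.0, 0.5), (10.1, 0.0, 0.5), (10.0, 0.1, 0.5), (10.1, 0.1, 0.6),
    (3.0, 2.0, 1.5), (6.0, -1.0, 2.0),
]
filtered = radius_outlier_filter(cloud)
print(len(cloud), "points in,", len(filtered), "points kept")
```

A fixed radius is a simplification: point density falls with distance from the sensor, so a practical filter would likely scale the radius with range.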

2.1.2. Radar Performance in Extreme Weather

Radar (Radio Detection and Ranging) systems generally exhibit better resilience to adverse weather conditions than optical sensors. Brooker et al. compared HDIF LiDAR, radar, and millimeter-wave radar sensors in the rain, demonstrating that while all of them are affected by rain to some degree, radar systems are partially immune to weather-related performance degradation [38,39].

Wang et al. [40] investigated the performance of automotive millimeter-wave radar systems in the presence of rain, finding that while these systems are more robust than optical sensors, heavy rain can still cause attenuation and affect detection capabilities.
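Rain attenuation of radio signals is often approximated by a power law in the rain rate, the form used in ITU-R Recommendation P.838. The sketch below applies that form to a two-way radar path. The coefficients `K_PLACEHOLDER` and `ALPHA_PLACEHOLDER` are placeholders chosen only to make the example run; they are not values from Wang et al. [40] or from any standard table, and real coefficients depend on frequency and polarization. The 150 m target range is likewise an assumption.

```python
def specific_attenuation_db_per_km(rain_rate_mm_h, k, alpha):
    """Power-law specific attenuation: gamma = k * R**alpha (dB/km)."""
    return k * rain_rate_mm_h ** alpha


def two_way_rain_loss_db(rain_rate_mm_h, target_range_m, k, alpha):
    """Radar signals cross the rain twice (out and back), so the loss doubles."""
    gamma = specific_attenuation_db_per_km(rain_rate_mm_h, k, alpha)
    return 2.0 * gamma * (target_range_m / 1000.0)


# Placeholder coefficients (assumptions, not measured or tabulated values).
K_PLACEHOLDER = 1.0
ALPHA_PLACEHOLDER = 0.7

# Rain rates matching the moderate and heavy rain scenarios of Figure 1.
for rate in (30.0, 80.0):
    loss = two_way_rain_loss_db(rate, 150.0, K_PLACEHOLDER, ALPHA_PLACEHOLDER)
    print(f"{rate:.0f} mm/h over 150 m: {loss:.2f} dB two-way loss")
```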

2.1.3. Other Sensor Types and Their Weather-Related Limitations

Global Navigation Satellite System (GNSS): While not directly affected by weather conditions at the vehicle level, GNSS accuracy can be impaired during severe weather events due to atmospheric disturbances. Gamba et al. [41] discussed the challenges of using GNSS in various conditions, including their integration into multi-sensor systems for detect-and-avoid applications.

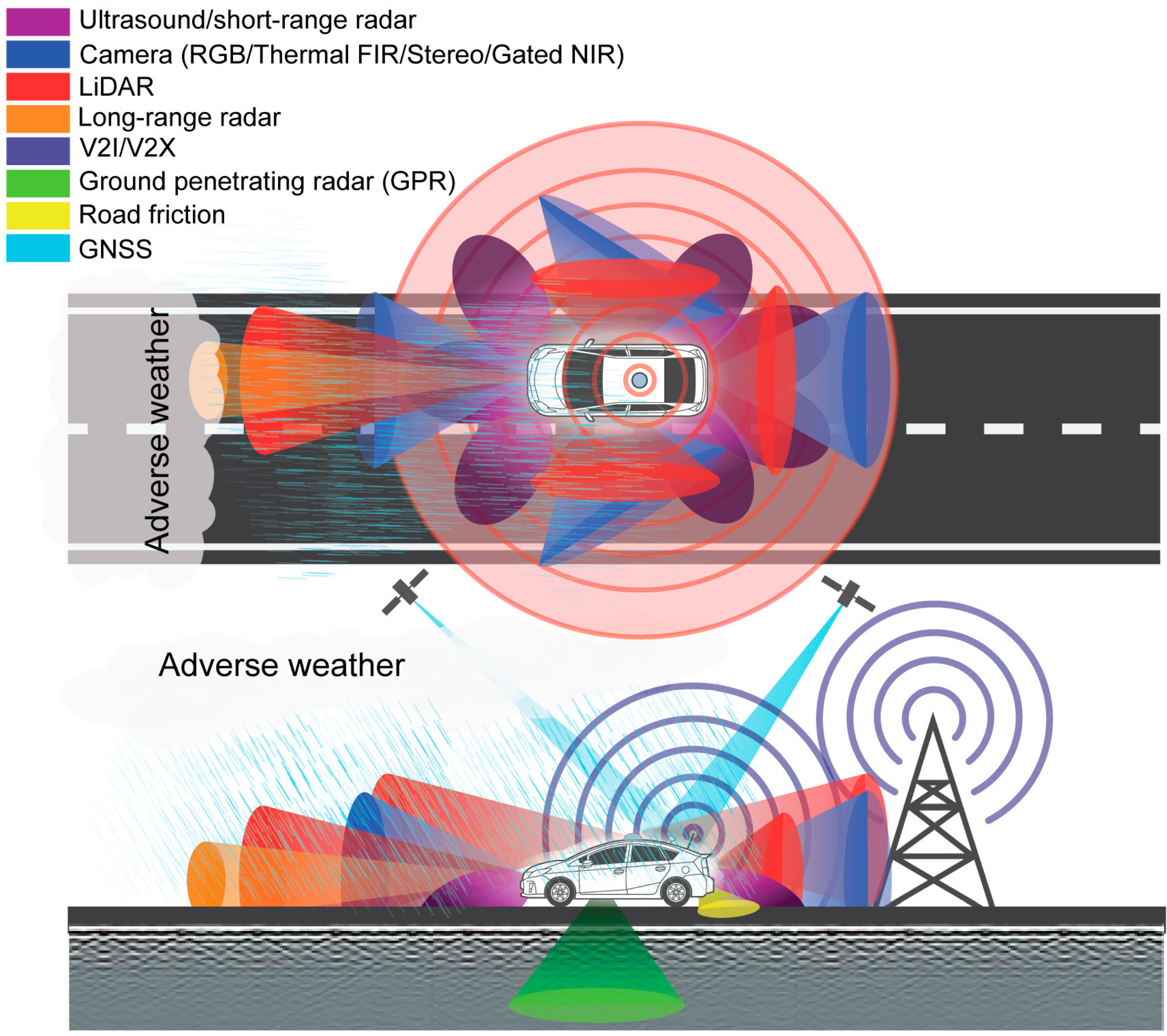

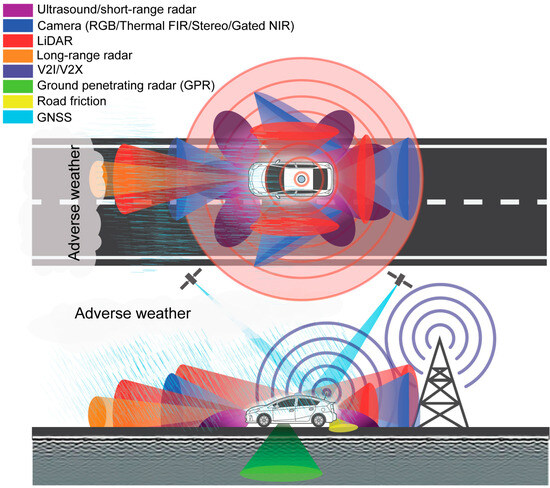

Figure 2 illustrates the comprehensive sensor array deployed in modern vehicles with Advanced Driver Assistance Systems (ADASs) [36]. It showcases how various sensor types (including radar systems, LiDAR systems, cameras, and ultrasonic sensors) work in concert to provide a wide range of safety and assistance features. From adaptive cruise control and emergency braking to parking assistance and blind spot detection, the image demonstrates how these technologies create a 360-degree sensory field around the vehicle, enhancing the safety and driving experience through multi-layered environmental awareness and responsiveness.

Adverse weather conditions present significant challenges to the sensor systems relied upon by autonomous vehicles. While some sensors, like radars, show greater resilience, others, particularly optical sensors, can experience severe performance degradation. This variability underscores the importance of sensor fusion and adaptive algorithms in developing robust autonomous driving systems capable of operating safely in diverse weather conditions.

Figure 2.

Integrated sensor systems in advanced driver assistance technologies [36].

2.2. Algorithmic Challenges in Adverse Weather

Adverse weather conditions present significant challenges to autonomous driving systems, affecting various aspects of perception and decision-making. This section explores the critical challenges in object detection, lane detection, and path planning under adverse weather conditions.

2.2.1. Object Detection and Classification Issues

Object detection and classification become particularly challenging in adverse weather conditions such as heavy rain, snow, or fog. These conditions can obscure or distort the appearance of objects, making them harder to detect and classify accurately. Grigorescu et al. [42] addressed this challenge by developing DriveNet, a robust multi-task, multi-domain neural network for autonomous driving. DriveNet demonstrates improved performance across various weather conditions by leveraging a unified architecture that simultaneously handles multiple perception tasks.

The impact of adverse weather on object detection is not limited to visual sensors. Rasouli et al. [43] investigated the effects of adverse weather on various sensor modalities, including cameras, LiDAR systems, and radar systems. They developed a deep multimodal sensor fusion approach that can effectively “see through fog” even without labeled training data for foggy conditions. This work highlights the importance of combining different sensor modalities to overcome the limitations of individual sensors in challenging weather.

2.2.2. Lane Detection and Road Boundary Identification

Lane detection and road boundary identification are critical for safe autonomous driving but become significantly more challenging in adverse weather. Rain, snow, or even strong sunlight can obscure lane markings, while wet roads can create reflections that confuse traditional lane detection algorithms. Cui et al. [44] addressed these challenges by developing 3D-LaneNet+, an anchor-free lane detection method using a semi-local representation. Their approach showed improved robustness in various weather conditions, demonstrating the potential of advanced algorithms to overcome weather-related challenges in lane detection.

2.2.3. Path Planning and Decision-Making Complexities

Adverse weather not only affects perception but also complicates path planning and decision-making. Reduced visibility, decreased road friction, and the unpredictable behavior of other road users in bad weather all make these tasks more complex. Xu et al. [45] provided a comprehensive survey of autonomous driving, including the challenges in path planning and decision-making under adverse conditions. They highlighted the need for adaptive algorithms that adjust their behavior based on current weather conditions and the associated uncertainties.
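The effect of decreased road friction on planning can be made concrete with the textbook stopping-distance relation, d = v·t + v²/(2μg), where v is speed, t is the system reaction time, μ is the tire-road friction coefficient, and g is gravitational acceleration. The sketch below is only an illustration of that relation: the friction coefficients and the 0.5 s reaction time are rough assumed values, not figures from the surveyed work.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance_m(speed_kmh, friction, reaction_time_s=0.5):
    """Reaction distance plus braking distance under constant friction."""
    v = speed_kmh / 3.6  # convert km/h to m/s
    return v * reaction_time_s + v * v / (2.0 * friction * G)


# Illustrative friction coefficients (assumptions, not measured values).
SURFACES = {"dry": 0.7, "wet": 0.4, "snow": 0.2}

for name, mu in SURFACES.items():
    d = stopping_distance_m(80.0, mu)
    print(f"{name:>4}: {d:.1f} m to stop from 80 km/h")
```

A planner that adapts to weather could use such an estimate to widen its following distance or lower its target speed as the estimated friction drops.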

2.3. Current Solutions and Advancements

2.3.1. Multi-Sensor Data Fusion Techniques

Multi-sensor data fusion has emerged as a crucial technique for improving perception in adverse weather. Hasirlioglu et al. [46] conducted a comprehensive survey on multi-sensor fusion in automated driving, covering both traditional and deep learning-based approaches. They highlighted the potential of sensor fusion in overcoming the limitations of individual sensors, particularly in adverse weather conditions where certain sensor types may be more affected than others.
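One of the simplest ways to see why fusion helps when some sensors degrade more than others is inverse-variance weighting, in which each sensor's estimate is weighted by the reciprocal of its noise variance. The sketch below is a generic illustration under the assumption of independent, unbiased measurements; it is not the method of any particular surveyed paper, and the sensor readings and variances are invented for the example.

```python
def fuse_inverse_variance(measurements):
    """Fuse independent estimates of the same quantity.

    measurements: list of (value, variance) pairs.
    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical range estimates (metres) to the same obstacle in fog:
# the camera is assumed to be the noisiest sensor, the radar the least noisy.
readings = [
    (42.0, 9.0),  # camera
    (40.5, 4.0),  # LiDAR
    (40.0, 1.0),  # radar
]
fused_range, fused_var = fuse_inverse_variance(readings)
print(f"fused range: {fused_range:.2f} m, variance: {fused_var:.2f}")
```

Because the fused variance is never larger than the smallest input variance, a degraded sensor contributes less without having to be discarded outright.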

2.3.2. Deep Learning Models for Adverse Weather

Deep learning models have shown great promise in tackling the challenges posed by adverse weather. Zhu et al. [47] provided a comprehensive survey of deep learning techniques for autonomous driving, including their application in adverse weather conditions. They discussed various architectures, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs), highlighting their potential to improve perception and decision-making in challenging environments.

2.3.3. Vehicle-to-Infrastructure Cooperative Perception Systems

Vehicle-to-Infrastructure (V2I) cooperative perception systems are emerging as a powerful tool for enhancing autonomous driving capabilities in adverse weather. Ferranti et al. [48] introduced SafeVRU, a research platform for studying the interaction between self-driving vehicles and vulnerable road users, which includes considerations for adverse weather conditions. This work demonstrates the potential of cooperative systems to improve safety and reliability in challenging environments.

2.4. Future Research Directions

While significant progress has been made, there are still many areas that require further research and development to fully address the challenges of autonomous driving in adverse weather conditions.

2.4.1. Optimizing Multi-Sensor Fusion Algorithms

Future research should focus on further optimizing multi-sensor fusion algorithms to better handle adverse weather conditions. This includes developing more adaptive fusion techniques that can dynamically adjust to changing weather conditions and sensor reliability. Hasirlioglu et al. [46] highlighted the need for real-time adaptive fusion strategies that can quickly respond to changing environmental conditions.

2.4.2. Developing Robust Deep Learning Models for Extreme Weather

There is a need for more robust deep-learning models that can maintain high performance across a wide range of weather conditions, including extreme weather scenarios. Grigorescu et al. [47] emphasized the importance of developing models that can generalize well to unseen weather conditions, possibly through advanced training techniques or novel architectural designs.

2.4.3. Creating Specialized Datasets for Adverse Weather Conditions

Developing specialized datasets for adverse weather conditions is crucial for training and evaluating autonomous driving algorithms. Mozaffari et al. [49] reviewed cooperative perception methods in autonomous driving, discussing the need for diverse datasets that include challenging weather scenarios. Such datasets would enable more effective training and benchmarking of algorithms designed for adverse weather operations.

Creating these datasets poses unique challenges, including the difficulty of capturing data in extreme weather conditions and the need for accurate labeling of weather-affected scenes. However, the potential benefits are significant, as they would allow for more robust testing and validation of autonomous driving systems under a wide range of real-world conditions.

Looking ahead, the field of autonomous driving in adverse weather conditions continues to evolve rapidly. Wang et al. [50] highlighted the integration of advanced sensing technologies, algorithms, and novel approaches like generative AI, which promises to push the boundaries of what is possible. As research progresses in these areas, we can expect to see autonomous vehicles that are increasingly capable of safe and reliable operation across various weather conditions.

Ongoing work, such as that conducted by Hasirlioglu et al. [46] on testing methodologies for rain influence on automotive surround sensors, continues to push the boundaries of sensor technology and testing procedures for autonomous vehicles in adverse weather. These efforts are crucial for developing robust and reliable autonomous driving systems capable of operating safely in all weather conditions.

2.5. Dataset Analysis for Adverse Weather Challenges in Autonomous Driving

In autonomous driving research, achieving reliable performance under adverse weather conditions is crucial. Challenges like rain, snow, fog, and low light can significantly impair sensors and object detection algorithms, affecting the safety and robustness of autonomous systems. This section reviews critical datasets, algorithmic approaches, and sensor fusion techniques, providing an in-depth analysis of their advantages and limitations.

2.5.1. Datasets for Adverse Weather Conditions

To evaluate and enhance the performance of autonomous driving systems under different weather conditions, researchers have developed several specialized datasets:

IDD-AW (Indian Driving Dataset-Adverse Weather): This dataset captures unstructured driving conditions across urban, rural, and hilly areas in India, focusing on scenarios like rain, fog, snow, and low light. It provides over 5000 pairs of high-resolution RGB and Near-Infrared (NIR) images, designed to test model robustness with a metric called Safe mean Intersection over Union (Safe-mIoU), which prioritizes safety in model predictions [51]. The dataset’s emphasis on real-world diversity makes it ideal for evaluating autonomous vehicle performance in complex weather conditions.

SID (Stereo Image Dataset): SID is tailored for depth estimation and 3D perception in challenging environments such as rain, snow, and nighttime scenarios. It includes over 178,000 stereo image pairs, offering a robust foundation for training models that require precise depth information under adverse conditions [52].

DAWN (Vehicle Detection in Adverse Weather Nature): The DAWN dataset emphasizes vehicle detection under diverse conditions like fog, rain, and snow. With around 1000 annotated images, it is instrumental in evaluating object detection models in environments where visibility is greatly reduced [53].

WEDGE (Weather-Enhanced Driving Environment): Using generative models, WEDGE provides synthetic weather conditions, such as heavy rain and dust storms. This dataset consists of 3360 images, allowing researchers to evaluate model robustness and generalization across synthetic and real-world data, offering valuable insights into performance variability under different weather conditions [54].
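For context on the Safe-mIoU metric mentioned for IDD-AW, the sketch below computes the standard mean Intersection over Union (mIoU) on which such segmentation metrics build. The safety-oriented weighting of Safe-mIoU is not specified in this text and is not reproduced here; the label arrays are invented for the example.

```python
def mean_iou(pred, target, num_classes):
    """Standard mIoU over flattened per-pixel class labels.

    Classes absent from both prediction and ground truth are skipped.
    """
    ious = []
    for c in range(num_classes):
        inter = sum(1 for p, t in zip(pred, target) if p == c and t == c)
        union = sum(1 for p, t in zip(pred, target) if p == c or t == c)
        if union > 0:
            ious.append(inter / union)
    return sum(ious) / len(ious) if ious else 0.0


# Hypothetical six-pixel image with three classes.
target = [0, 0, 1, 1, 2, 2]
pred = [0, 1, 1, 1, 2, 0]
score = mean_iou(pred, target, num_classes=3)
print(f"mIoU = {score:.3f}")
```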

Table 2 summarizes these four datasets: IDD-AW (over 5000 pairs of high-resolution RGB and NIR images) [51], SID (over 178,000 stereo image pairs) [52], DAWN (around 1000 annotated images) [53], and WEDGE (3360 synthetic images) [54]. Together, they support the development of resilient autonomous driving models for complex weather conditions.

Table 2.

Summary of key datasets for adverse weather conditions in autonomous driving.

The IDD-AW dataset is particularly valuable for training models that need to operate in less structured environments, such as rural or suburban roads, where conditions can vary significantly. In contrast, nuScenes and the Waymo Open Dataset provide comprehensive coverage of urban scenarios, including a variety of lighting conditions, from day to night. These datasets are especially suitable for training models that integrate multiple sensor modalities, such as cameras, LiDAR systems, and radar systems [55,56].

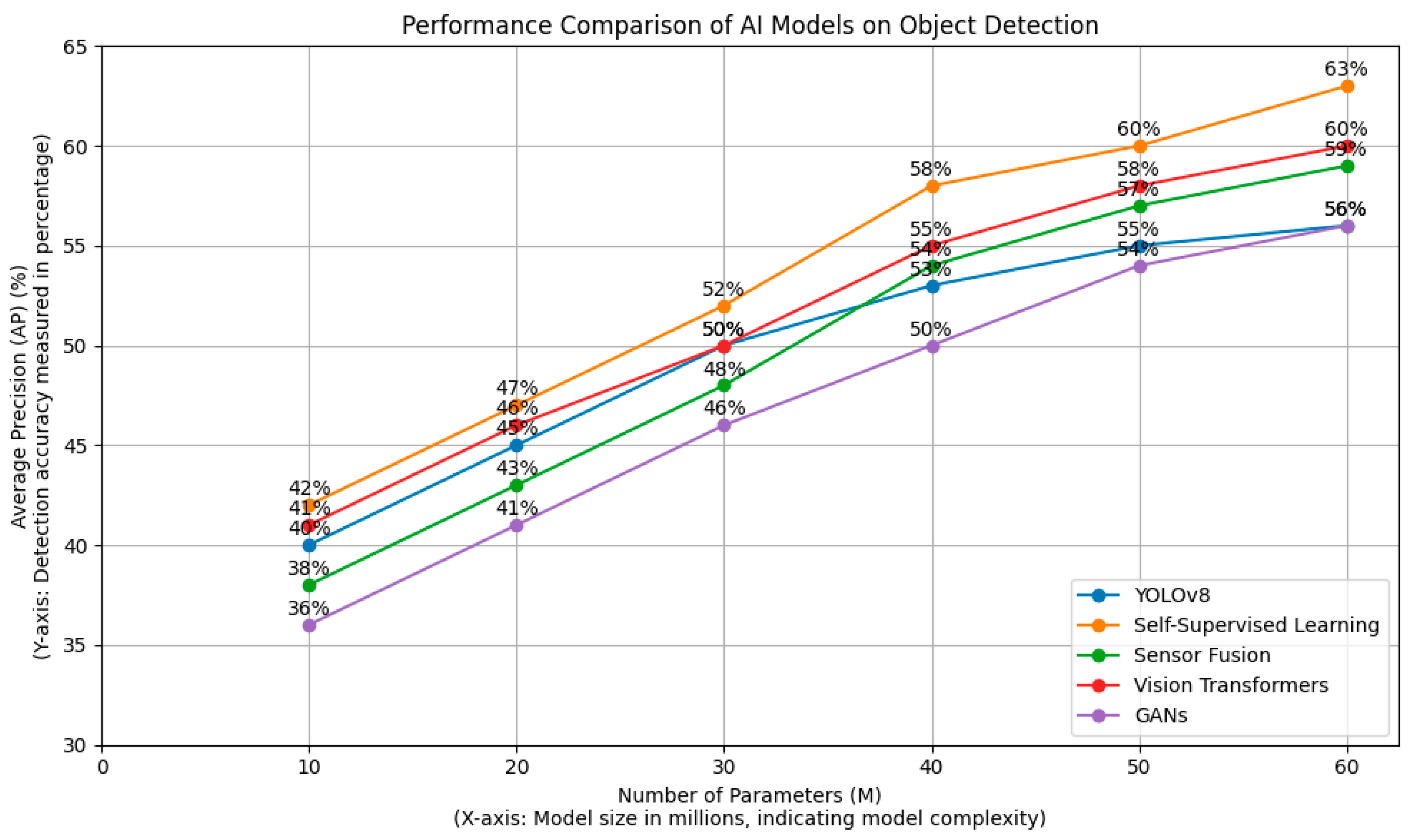

2.5.2. Analysis of Algorithm Performance

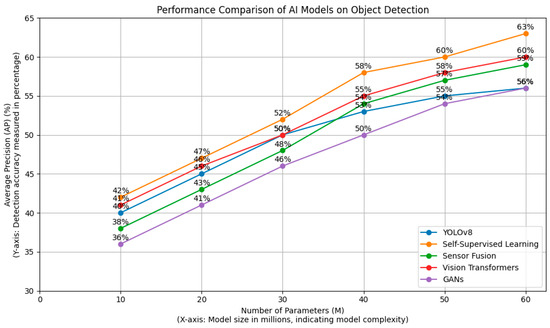

The performance of autonomous driving algorithms is highly dependent on the complexity of the model and the quality of training data. In this analysis, we examine five popular approaches (YOLOv8, self-supervised learning, sensor fusion, Vision Transformers, and GAN-based models) and evaluate their average precision (AP) under varying levels of model complexity. Figure 3 presents a comparative analysis of these algorithms, showing how their AP varies with the number of parameters (in millions). The analysis is conducted using standardized object detection benchmarks, focusing on their robustness in adverse conditions such as fog, rain, and snow.

The X-axis of Figure 3 indicates the number of parameters (in millions), representing the model’s complexity. Models with a higher parameter count are typically more capable of capturing intricate patterns in data, but they may also require more computational resources. The Y-axis represents the average precision (AP), a metric widely used to assess object detection accuracy. A higher AP indicates better detection performance, reflecting the model’s ability to correctly identify objects under varying conditions.
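To make the AP metric concrete, the sketch below computes it for a single class as the area under the precision-recall curve, using the common all-point interpolation. It assumes each detection has already been matched to the ground truth (for example, by an IoU threshold) and flagged as a true or false positive; the detection list is invented, and benchmark-specific details such as averaging over classes or IoU thresholds are omitted.

```python
def average_precision(detections, num_ground_truth):
    """AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of annotated objects of this class.
    """
    if num_ground_truth == 0:
        return 0.0
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Replace each precision with the best precision at any higher recall.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


# Hypothetical detections for a class with two ground-truth objects.
dets = [(0.9, True), (0.8, False), (0.7, True), (0.6, False)]
ap = average_precision(dets, num_ground_truth=2)
print(f"AP = {ap:.3f}")
```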

Figure 3.

Performance comparison of AI models on object detection.

2.5.3. Insights from the Comparative Analysis

The analysis of the performance data reveals several key insights, each offering guidance for selecting the appropriate model and dataset combination for specific use cases:

YOLOv8 demonstrates high efficiency, achieving an AP of 56% at 60 million parameters. It excels in real-time object detection due to its streamlined architecture, making it suitable for use cases where processing speed is critical. However, its performance tends to plateau with increased complexity, suggesting diminishing returns at higher parameter counts [57].

Self-supervised learning methods show a strong ability to adapt to new environments, with an AP of 63% at 60 million parameters. These models benefit from their ability to learn from large volumes of unlabeled data, making them highly effective in scenarios with diverse and dynamic weather conditions [58]. This adaptability makes self-supervised learning a promising approach for environments where labeled data are scarce or expensive to obtain.

Sensor fusion techniques, which integrate data from LiDAR systems, cameras, and radar systems, reach an AP of 59%. The combination of multiple sensors allows these models to maintain high depth perception and spatial awareness in low-visibility conditions like dense fog or heavy rain [55,59]. However, the increased complexity and computational demands of sensor fusion models can limit their applicability in real-time scenarios.

Vision Transformers provide a balance between accuracy and computational efficiency, achieving up to 60% AP. Their ability to capture long-range dependencies in image data allows them to perform well in complex urban scenarios where context is crucial [60]. This makes Vision Transformers suitable for applications that require a detailed understanding of the environment but can afford higher computational costs.

GAN-based models, while not directly competitive in AP, play a crucial role in data augmentation. By generating synthetic training data, these models enable other algorithms to improve their robustness against rare weather events, such as blizzards or sandstorms. Their ability to simulate extreme conditions makes them valuable for training models that must operate in environments where real-world data are difficult to obtain.

2.5.4. Implications for Model Selection

Selecting the appropriate combination of datasets and algorithms is critical for optimizing autonomous driving systems under varying conditions. The findings indicate that datasets like nuScenes and Waymo Open are ideal for models that require detailed scene understanding and robust object tracking capabilities [55,56]. These datasets provide rich multimodal data, making them suitable for complex tasks such as sensor fusion.

On the other hand, smaller datasets such as DAWN and WEDGE are particularly effective for refining models that need to handle rare or extreme weather conditions [53,54]. These datasets, although limited in scale, offer high-quality annotations that are invaluable for training models focused on specific challenges like low-visibility detection.

The results provide a roadmap for researchers and engineers to select the most suitable datasets and models for different autonomous driving challenges. For real-time applications, lightweight models like YOLOv8 are recommended, while scenarios that require deeper environmental analysis may benefit from self-supervised learning methods or Vision Transformers. The strategic use of GAN-generated data can further enhance the adaptability of these models in unpredictable environments.

3. Algorithms for Managing Complex Traffic Scenarios and Violations

3.1. Types of Complex Traffic Scenarios and Violations

Autonomous driving systems face a myriad of complex traffic scenarios and potential violations that challenge their decision-making capabilities. These scenarios often involve unpredictable human behavior and dynamic environments that require sophisticated algorithms to navigate safely.

3.1.1. Unpredictable Pedestrian Behavior

One of the most challenging aspects of urban driving is dealing with unpredictable pedestrian behavior, particularly jaywalking. Zhu et al. [47] highlighted this challenge in their study on human-like autonomous car-following models, noting that pedestrians often make sudden decisions that deviate from expected patterns, requiring autonomous vehicles to have rapid detection and response capabilities.

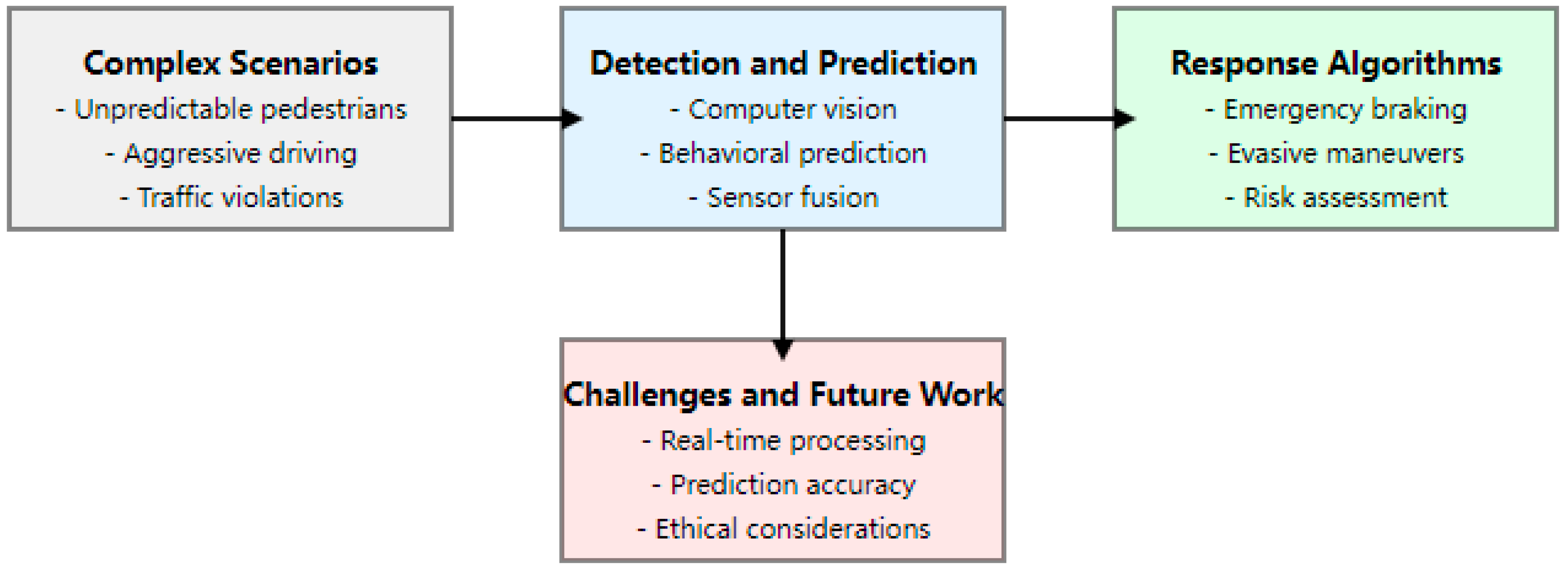

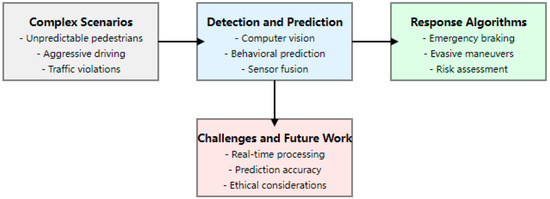

Figure 4 illustrates the algorithmic framework for managing complex traffic scenarios in autonomous driving systems. This flowchart outlines four key components: complex scenarios encountered on the road, methods for detection and prediction, response algorithms, and ongoing challenges. The diagram shows how autonomous systems progress from identifying challenging situations to executing appropriate responses while highlighting the continuous need for improvement in areas such as real-time processing and ethical decision-making. This visual representation provides a concise overview of the intricate processes of navigating complex traffic environments, emphasizing the interconnected nature of perception, prediction, and action in autonomous driving technology.

Numerous factors, including individual psychology, cultural norms, and environmental conditions, influence pedestrian behavior. Rasouli and Tsotsos [48] analyzed pedestrian behavior at crosswalks and found that factors such as group size, age, and even parked vehicles significantly affect crossing decisions. This complexity makes it difficult for autonomous systems to predict pedestrian intentions accurately.

Figure 4.

Algorithms for managing complex traffic scenarios and violations.

3.1.2. Aggressive Driving and Sudden Lane Changes

Aggressive driving behaviors, including sudden lane changes, tailgating, and speeding, pose significant challenges to autonomous systems. Mozaffari et al. [49] reviewed deep learning-based vehicle behavior prediction methods, emphasizing the importance of anticipating aggressive maneuvers to maintain safety in autonomous driving applications.

Alahi et al. [61] proposed a lane change intention prediction model based on long short-term memory (LSTM) networks, which demonstrated the potential to anticipate lane changes up to 3 s in advance with an accuracy of over 90%. However, the challenge lies not just in predicting these behaviors but in responding to them safely.

3.1.3. Traffic Signal and Sign Violations

Violations of traffic signals and signs by other road users represent another critical challenge. Yurtsever et al. [62] discussed the need for robust algorithms to detect and respond to these violations, which often occur with little warning and require immediate action.

Aoude et al. [63] developed a threat assessment algorithm for detecting red light runners using Hidden Markov Models and Support Vector Machines. Their approach demonstrated the potential to predict violations up to 1.2 s before they occur, giving autonomous vehicles crucial time to respond.
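As a rough illustration of how such an early warning can be derived, the sketch below uses a much simpler kinematic test than the HMM/SVM classifier of [63]: it computes the constant deceleration an approaching vehicle would need in order to stop at the stop line and flags the vehicle when that value exceeds a plausibility threshold. The function names and the 3 m/s^2 threshold are illustrative assumptions, not values taken from the cited work.

```python
def required_deceleration(speed_mps: float, distance_to_stop_line_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop exactly at the stop line."""
    if distance_to_stop_line_m <= 0.0:
        # Already at or past the line: a moving vehicle cannot stop in time.
        return float("inf") if speed_mps > 0.0 else 0.0
    # From v^2 = 2 * a * d for uniform deceleration to a standstill.
    return speed_mps ** 2 / (2.0 * distance_to_stop_line_m)


def likely_red_light_runner(
    speed_mps: float,
    distance_to_stop_line_m: float,
    max_plausible_decel: float = 3.0,  # assumed threshold, not from [63]
) -> bool:
    """Flag a vehicle whose required braking exceeds the assumed threshold."""
    return required_deceleration(speed_mps, distance_to_stop_line_m) > max_plausible_decel
```

For example, a vehicle travelling at 20 m/s with 50 m to the stop line would need 4 m/s^2 of braking and is flagged under the assumed threshold, whereas one travelling at 10 m/s is not.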

3.1.4. Blind Spot Challenges and Multiple Moving Objects

Managing blind spots and tracking multiple moving objects simultaneously is crucial for safe autonomous driving. Grigorescu et al. [42] highlighted the complexity of monitoring blind spots and predicting the trajectories of multiple objects in dynamic traffic environments.

Wang et al. [64] developed a multi-object tracking and segmentation network that can simultaneously detect, segment, and track multiple objects in real time, demonstrating the potential of deep learning in addressing this complex task.

3.2. Detection and Prediction Methods

To address these complex scenarios, various detection and prediction methods have been developed and refined.

3.2.1. Computer Vision-Based Approaches

Computer vision plays a crucial role in detecting and classifying objects and behaviors in the driving environment. Xu et al. [65] demonstrated an end-to-end learning approach for driving models using large-scale video datasets, showcasing the potential of computer vision in understanding complex traffic scenarios.

YOLO (You Only Look Once) and its variants have been particularly influential in real-time object detection. YOLOv5, introduced by Jocher et al. [66], achieved state-of-the-art performance in object detection tasks, with the ability to process images at over 100 frames per second on modern GPUs.
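Detectors in the YOLO family typically emit many overlapping candidate boxes, which are then pruned by non-maximum suppression (NMS) based on intersection over union (IoU). The following is a minimal pure-Python sketch of that post-processing step, assuming boxes in (x_min, y_min, x_max, y_max) format and an illustrative IoU threshold of 0.5; it is not the YOLOv5 implementation.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0.0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept
```

The greedy loop is quadratic in the number of boxes, which is acceptable for a sketch; practical detectors use vectorized or GPU implementations of the same idea.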

3.2.2. Behavioral Prediction Models

Predicting the behavior of other road users is essential for proactive decision-making [67]. Alahi et al. [61] introduced Social LSTM, a model that can predict pedestrian trajectories by considering the movements of people in a scene. This approach captures the complex social interactions influencing pedestrian behavior, leading to more accurate predictions.

For vehicle behavior prediction, Shalev-Shwartz et al. [68] proposed an Intention-aware Reciprocal Velocity Obstacles (IRVO) model that predicts vehicle trajectories by inferring drivers’ intentions. This model demonstrated improved accuracy in predicting lane change behaviors and complex intersection maneuvers.

3.2.3. Sensor Fusion for Improved Detection

Combining data from multiple sensors enhances the robustness and accuracy of detection systems. Hang et al. [69] proposed PointFusion, a sensor fusion framework that combines LiDAR point clouds with RGB images for 3D object detection. This approach leverages the strengths of both sensors: the precise depth information from LiDAR and the rich texture information from cameras.
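A common preprocessing step in camera-LiDAR fusion is to project LiDAR points into the image plane so that each 3D point can be associated with pixel data. The sketch below shows this with a pinhole camera model. It assumes the points have already been transformed into the camera frame (z pointing forward), and the intrinsics fx, fy, cx, and cy are placeholder parameters; it illustrates the geometric association only, not the PointFusion network itself.

```python
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in the camera frame, metres


def project_to_image(
    points_cam: Iterable[Point3D],
    fx: float, fy: float, cx: float, cy: float,  # assumed pinhole intrinsics
    width: int, height: int,
) -> List[Tuple[float, float, float]]:
    """Return (u, v, depth) for every point that lands inside the image."""
    pixels = []
    for x, y, z in points_cam:
        if z <= 0.0:
            continue  # behind the camera, cannot be imaged
        u = fx * x / z + cx
        v = fy * y / z + cy
        if 0.0 <= u < width and 0.0 <= v < height:
            pixels.append((u, v, z))
    return pixels
```

Each returned triple pairs a pixel location with a LiDAR depth, which is the raw material that fusion networks then combine with image features.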

3.2.4. Machine Learning for Object Classification and Movement Prediction

Convolutional neural networks (CNNs) remain the backbone of many object classification systems in autonomous driving. ResNet and its variants, such as ResNeXt introduced by Xie et al. [70], have demonstrated exceptional performance in classifying a wide range of objects relevant to driving scenarios.

3.3. Response Algorithms and Decision-Making Processes

Once potential hazards or violations are detected, autonomous vehicles must respond appropriately [71] and make accurate decisions in real time.

3.3.1. Emergency Braking Systems and Evasive Maneuver Planning

Rjoub et al. [72] developed an adaptive method for generating safety-critical scenarios, which can be used to test and improve emergency braking and evasive maneuver algorithms under various violation scenarios. Their approach uses adversarial learning to generate challenging scenarios, helping to create more robust response algorithms.
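The idea of searching for scenarios in which a braking strategy fails can be illustrated with a deliberately simple stand-in. Instead of adversarial learning, the sketch below exhaustively enumerates a small grid of initial speeds, gaps to an obstacle, and road friction coefficients, and reports the combinations in which the stopping distance exceeds the available gap. The point-mass braking model, the 0.5 s system latency, and all parameter values are assumptions chosen for illustration.

```python
import itertools
from typing import List, Sequence, Tuple

G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance(speed_mps: float, latency_s: float, friction: float) -> float:
    """Distance covered during the latency plus full braking at friction * g."""
    return speed_mps * latency_s + speed_mps ** 2 / (2.0 * friction * G)


def failing_scenarios(
    speeds: Sequence[float],
    gaps: Sequence[float],
    frictions: Sequence[float],
    latency_s: float = 0.5,  # assumed perception-to-actuation delay
) -> List[Tuple[float, float, float]]:
    """Enumerate (speed, gap, friction) combinations that end in a collision."""
    return [
        (v, gap, mu)
        for v, gap, mu in itertools.product(speeds, gaps, frictions)
        if stopping_distance(v, latency_s, mu) > gap
    ]
```

With a speed of 20 m/s and a 40 m gap, the model stops in time on a dry surface (friction 0.8) but not on a wet one (friction 0.4), which is the kind of boundary case a scenario generator is meant to surface.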

3.3.2. Risk Assessment and Mitigation Strategies

Risk assessment is crucial for safe autonomous driving. Lefèvre et al. [73] developed a comprehensive framework for risk assessment at road intersections, combining probabilistic predictions of vehicle trajectories with a formal definition of dangerous situations. This approach allows autonomous vehicles to quantify and mitigate risks in complex intersection scenarios.
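The combination of probabilistic trajectory predictions with an explicit definition of danger can be sketched as follows. Each hypothesis for another vehicle carries a probability and a time-aligned trajectory; the risk is the total probability of the hypotheses that bring the two vehicles closer than a danger radius. The 2 m radius, the data layout, and the function names are assumptions for illustration and do not reproduce the formal model in [73].

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def min_separation(traj_a: Sequence[Point], traj_b: Sequence[Point]) -> float:
    """Smallest distance between two trajectories sampled at the same times."""
    return min(math.dist(p, q) for p, q in zip(traj_a, traj_b))


def collision_risk(
    ego_traj: Sequence[Point],
    hypotheses: List[Tuple[float, Sequence[Point]]],  # (probability, trajectory)
    danger_radius_m: float = 2.0,  # assumed definition of a dangerous situation
) -> float:
    """Total probability of hypotheses that enter the danger radius."""
    return sum(
        prob
        for prob, traj in hypotheses
        if min_separation(ego_traj, traj) < danger_radius_m
    )
```

A planner could compare the returned value against an acceptable risk level and slow down or yield when it is exceeded.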

3.3.3. Ethical Considerations in Decision-Making

Ethical decision-making in autonomous vehicles [74] is a complex and controversial topic. Awad et al. [75] conducted a global survey on moral decisions made by autonomous vehicles, revealing significant cultural variations in ethical preferences. This highlights the need for flexible ethical frameworks that can adapt to different cultural contexts.

3.4. Challenges and Future Work

Despite significant advancements, several challenges remain in managing complex traffic scenarios and violations. Improving the speed and accuracy of detection, prediction, and response algorithms remains a crucial challenge. Djuric et al. [76] proposed a multi-task learning approach that simultaneously performs detection, tracking, and motion forecasting, demonstrating improved efficiency and accuracy compared to separate models for each task.

Improving the accuracy of behavioral prediction models, especially in diverse and complex environments, is crucial. Chandra et al. [77] introduced RobustTP, a trajectory prediction framework that uses attention mechanisms to capture complex interactions between road users. It shows improved performance in challenging urban environments. Yurtsever et al. [62] emphasized integrating various autonomous driving subsystems to create a cohesive and robust driving platform. Future research should focus on developing standardized interfaces and protocols for the seamless integration of perception, decision-making, and control systems.

The development of more advanced AI-driven detection systems remains a crucial direction for future research. Grigorescu et al. [42] highlighted the potential of unsupervised and self-supervised learning techniques in reducing the dependence on large labeled datasets and improving the adaptability of autonomous driving systems to new and unseen scenarios.

4. Emergency Maneuver Strategies and Blind Spot Management

4.1. Types of Emergency Scenarios and Blind Spot Challenges

Autonomous vehicles must be prepared to handle a variety of emergency scenarios and blind spot challenges that can arise suddenly during operation. These situations often require immediate and precise responses to ensure safety.

One of the most critical emergency scenarios involves the sudden appearance of obstacles in the vehicle’s path. These can include pedestrians, animals, fallen objects, or other vehicles [78]. Schwarting et al. [79] developed a probabilistic prediction and planning framework that enables autonomous vehicles to navigate safely around obstacles, even in scenarios with limited visibility.

System failures, such as sensor malfunctions or actuator errors, can also lead to emergency situations. Shalev-Shwartz et al. [68] proposed a reinforcement learning approach for developing robust driving policies that can gracefully handle multiple types of system failures.

Complex emergency scenarios involving multiple vehicles require sophisticated coordination and decision-making [80,81]. Choi et al. [82] introduced a cooperative collision avoidance system based on vehicle-to-vehicle (V2V) communication, enabling coordinated emergency maneuvers among multiple autonomous vehicles.

Blind spots pose unique challenges in emergency scenarios, as they limit the vehicle’s awareness of its surroundings. Yang et al. [83] developed an integrated perception system that combines data from multiple sensors to enhance blind spot detection and reduce the risk of collisions in these critical areas.

4.2. Current Technologies and Algorithms for Emergency Response and Blind Spot Management

To address these emergency scenarios and blind spot challenges, various technologies and algorithms [84] have been developed and refined.

4.2.1. Emergency Response Technologies

Emergencies often require rapid replanning of the vehicle’s trajectory. Galceran et al. [85] proposed a model predictive control (MPC) approach for real-time trajectory optimization in the presence of dynamic obstacles, enabling quick and smooth evasive maneuvers.

Ensuring system reliability is crucial for handling emergencies. Benderiu et al. [86] discussed the importance of redundancy in critical systems and proposed a fail-safe operational architecture for autonomous driving that can maintain safe operation even in the event of component failures.
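One common way to realize redundancy is to vote across replicated sensor channels. The sketch below, which is a generic illustration rather than the architecture proposed in [86], takes the median of the available readings, discards channels that disagree with it by more than a tolerance, and returns None (signalling that a fallback behavior is needed) when fewer than two channels agree. The tolerance value and function name are hypothetical.

```python
from statistics import median
from typing import Optional, Sequence


def vote(readings: Sequence[Optional[float]], tolerance: float) -> Optional[float]:
    """Fuse redundant channels; None means no trustworthy value is available."""
    valid = [r for r in readings if r is not None]  # drop silent channels
    if len(valid) < 2:
        return None
    mid = median(valid)
    agreeing = [r for r in valid if abs(r - mid) <= tolerance]
    if len(agreeing) < 2:
        return None  # channels disagree too much to trust any of them
    return sum(agreeing) / len(agreeing)
```

With three channels, this scheme tolerates one silent or wildly wrong channel; a caller that receives None would be expected to trigger a degraded or minimal-risk mode.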

4.2.2. Blind Spot Detection and Monitoring

Radar technology plays a crucial role in blind spot detection due to its ability to function in various weather conditions. Patole et al. [87] comprehensively reviewed automotive radars, highlighting their effectiveness in blind spot monitoring and collision avoidance applications.

Camera systems offer high-resolution visual data for blind spot monitoring. Liu et al. [88] developed a deep learning-based approach for blind spot detection using surround-view cameras, demonstrating improved accuracy in identifying vehicles and other objects in blind spots.

Ultrasonic sensors provide reliable close-range detection, which is particularly useful for parking scenarios and low-speed maneuvering. Choi et al. [82] proposed an integrated ultrasonic sensor system for enhanced object detection and classification in blind spots and other close-proximity areas.
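At its simplest, blind spot monitoring reduces to testing whether a tracked object lies inside a zone beside and slightly behind the vehicle. The sketch below assumes a vehicle-fixed frame with x pointing forward and y pointing left, both measured from the vehicle center; the zone dimensions are illustrative assumptions, not values from the cited works.

```python
from typing import Optional


def blind_spot_side(
    x_m: float,
    y_m: float,
    rear_m: float = 5.0,   # assumed zone extent behind the vehicle center
    front_m: float = 1.0,  # assumed zone extent ahead of the vehicle center
    inner_m: float = 1.0,  # assumed lateral start of the zone
    outer_m: float = 4.0,  # assumed lateral end of the zone
) -> Optional[str]:
    """Return 'left' or 'right' if the object is in a blind spot, else None."""
    if not (-rear_m <= x_m <= front_m):
        return None
    if inner_m <= y_m <= outer_m:
        return "left"
    if -outer_m <= y_m <= -inner_m:
        return "right"
    return None
```

The object position would come from whichever sensor (radar, camera, or ultrasonic) tracks the target, after conversion into the vehicle frame.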

4.3. Advanced Algorithmic Approaches

Recent advancements in artificial intelligence and machine learning have led to more sophisticated approaches for emergency handling and blind spot management.

Machine learning techniques can help classify and respond to various emergency scenarios. Hu et al. [89] developed a deep reinforcement learning framework for emergency decision-making in autonomous vehicles, capable of handling a wide range of critical situations.

Predicting the movement of objects in blind spots is crucial for proactive safety measures. Galceran et al. [85] introduced a probabilistic approach for predicting the intentions and trajectories of surrounding vehicles, including those in blind spots, to enable more informed decision-making.

Combining data from multiple sensor types can provide a more complete picture of the vehicle’s surroundings, including blind spots. Feng et al. [90] proposed an adaptive sensor fusion algorithm that dynamically adjusts the weighting of different sensors based on environmental conditions and sensor reliability.
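One simple way to realize condition-dependent weighting is inverse-variance fusion in which each sensor's nominal measurement variance is inflated by a factor that depends on the current weather. The sketch below fuses range estimates this way. The sensor names, the inflation factors in NOISE_SCALE, and the variances are invented for illustration and are not taken from [90].

```python
from typing import Dict, Tuple

# Hypothetical variance inflation factors per weather condition and sensor.
NOISE_SCALE: Dict[str, Dict[str, float]] = {
    "clear": {"camera": 1.0, "radar": 1.0},
    "fog": {"camera": 10.0, "radar": 1.0},
}


def fuse_range(readings: Dict[str, Tuple[float, float]], condition: str) -> float:
    """Fuse {sensor: (range_m, nominal_variance)} with inverse-variance weights."""
    weights = {
        sensor: 1.0 / (variance * NOISE_SCALE[condition][sensor])
        for sensor, (_, variance) in readings.items()
    }
    total = sum(weights.values())
    return sum(weights[s] * readings[s][0] for s in readings) / total
```

With a camera estimate of 10 m and a radar estimate of 12 m at equal nominal variance, the fused range is 11 m in clear weather and shifts toward the radar value in fog, because the camera's variance is inflated.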

Quick and accurate decision-making is essential in emergency scenarios. Dixit et al. [91] developed a hierarchical decision-making framework that combines rule-based systems with deep learning to enable rapid responses to critical situations while maintaining an overall driving strategy.
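A hierarchical arrangement of this kind can be sketched as a rule layer that takes priority over a learned policy. In the example below, a time-to-collision (TTC) rule forces emergency braking when the threat is imminent, and otherwise the decision is delegated to a learned policy, represented here by an arbitrary callable. The 1.5 s threshold, the action names, the observation keys, and the ordering of the two layers are assumptions for illustration rather than details of [91].

```python
import math
from typing import Callable, Dict


def time_to_collision(gap_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact at the current closing speed (inf if not closing)."""
    if closing_speed_mps <= 0.0:
        return math.inf
    return gap_m / closing_speed_mps


def decide(
    observation: Dict[str, float],
    learned_policy: Callable[[Dict[str, float]], str],  # stand-in for a trained model
    ttc_brake_s: float = 1.5,  # assumed safety threshold
) -> str:
    """Rule layer first; fall through to the learned policy when no rule fires."""
    ttc = time_to_collision(observation["gap_m"], observation["closing_speed_mps"])
    if ttc < ttc_brake_s:
        return "emergency_brake"
    return learned_policy(observation)
```

Keeping the rule layer small and explicit makes its behavior easy to verify, while the learned component handles the non-critical part of the driving strategy.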

4.4. Testing, Validation, and Future Directions

Rigorous testing and validation are crucial to ensure the reliability and safety of emergency maneuver strategies and blind spot management systems.

Virtual simulations provide a safe and controllable environment for testing emergency scenarios. Kiran et al. [92] introduced CARLA, an open-source simulator for autonomous driving research, which enables the creation and testing of complex emergency scenarios.

While simulations are valuable, real-world testing [93] is essential for validating system performance. Zhao et al. [94] described a comprehensive closed-course testing methodology for evaluating autonomous vehicle safety, including protocols for assessing emergency maneuver capabilities.

Collecting and analyzing data from emergency events is crucial for improving system performance. Wang et al. [95] proposed a framework for extracting and analyzing critical scenarios from large-scale naturalistic driving data, providing insights for enhancing emergency response algorithms. Emergency systems must also function reliably in all weather conditions. Bijelic et al. [96] conducted a comprehensive study on the performance of various sensor technologies in adverse weather, providing valuable insights for designing robust emergency response systems.

Looking to the future, several promising directions for research and development emerge. Improving sensor capabilities is crucial for enhancing blind spot detection. Petrovskaya and Thrun [97] discussed the potential of next-generation LiDAR systems in providing high-resolution 3D mapping of vehicle surroundings, including blind spots.

Vehicle-to-everything (V2X) communication can significantly enhance situational awareness. Boban et al. [98] explored the potential of 5G and beyond technologies in enabling high-bandwidth, low-latency V2X communication for improved cooperative perception and emergency response.

Future systems must be capable of handling a wide range of emergency scenarios. Kiran et al. [92] proposed a meta-learning approach for autonomous driving that can quickly adapt to new and unseen emergency situations, potentially leading to more robust and versatile emergency response systems.

While significant progress has been made in emergency maneuver strategies and blind spot management for autonomous vehicles, numerous challenges and opportunities remain for improvement. Continued research and development in areas such as advanced sensor technologies, AI-driven decision-making algorithms, and comprehensive testing methodologies will be crucial in creating safer and more reliable autonomous driving systems capable of handling the complex and unpredictable nature of real-world driving environments.

5. Comparative Analysis and Future Outlook

5.1. ADAS vs. Full Self-Driving (FSD) Systems

The automotive industry is gradually transitioning from Advanced Driver Assistance Systems (ADASs) to Full Self-Driving (FSD) systems. This section compares these technologies, highlighting their capabilities, limitations, and performance in challenging conditions.

Advanced Driver Assistance Systems (ADASs) have made substantial strides in enhancing vehicle safety and driver comfort, contributing to a more secure driving environment. These technologies, which include a range of functionalities, are designed to support the driver by automating certain aspects of vehicle operation while keeping the human driver in control. Bengler et al. [99] extensively reviewed ADAS functionalities, highlighting key features such as adaptive cruise control, lane-keeping assistance, and automatic emergency braking. Automatic emergency braking systems are designed to detect potential collisions and apply the brakes if the driver fails to respond in time, thereby preventing accidents or mitigating their severity.

Autonomous driving technologies must balance safety and efficiency across diverse scenarios. Table 3 summarizes the challenges and solutions, highlighting environmental impacts such as reduced visibility in adverse weather and sensor performance in complex traffic and emergencies. It details the use of various sensor fusion techniques and algorithms such as DeepWet-Net and YOLOv5. Testing in both simulated and real-world environments, supported by benchmark datasets like BDD100K, underscores the complexity of developing robust systems capable of handling these challenges.

Table 3.

Comprehensive comparison of autonomous vehicle systems and technologies.

In contrast, Full Self-Driving systems aim to operate vehicles without human intervention. Yurtsever et al. [62] surveyed the current state of autonomous driving technologies, discussing the progress towards Level 4 and Level 5 autonomy as defined by SAE International. They highlighted significant advancements in perception, planning, and control algorithms but also noted persistent challenges in handling edge cases and unpredictable scenarios.

Both ADASs and FSD systems face challenges in adverse weather and complex traffic scenarios. Kuutti et al. [100] conducted a comparative study of ADASs and autonomous driving systems in various environmental conditions. While FSD systems generally outperform ADASs in ideal conditions, the gap narrows significantly in challenging scenarios, highlighting the need for further improvements in both technologies.

The transition from ADASs to FSD systems raises important questions about human-machine interaction. Mueller et al. [101] investigated driver behavior and trust in different levels of vehicle automation. They found that the clear communication of system capabilities and limitations is crucial for maintaining appropriate driver engagement and trust.

5.2. Algorithmic Differences and Development Trajectories

The algorithmic approaches used in ADASs and FSD systems differ significantly, reflecting their distinct objectives and operational domains. This section explores these differences and the potential for integration of these technologies.

FSD systems require more complex decision-making processes compared to ADASs. Hou et al. [102] analyzed the decision-making architectures in autonomous vehicles, highlighting the shift from rule-based systems, the common standard in ADASs, to more sophisticated machine learning approaches in FSD systems. The environmental understanding capabilities of FSD systems are generally more comprehensive than those of ADASs. Chen et al. [103] compared the perception systems used in ADASs and FSD systems, noting that FSD systems typically employ more advanced sensor fusion techniques and have a broader spatial and temporal understanding of the driving environment.

One of the key challenges in developing FSD systems is handling edge cases and unpredictable scenarios. Zhao [104] discussed the concept of “operational design domain” (ODD) and its implications for autonomous driving, emphasizing the need for FSD systems to handle a more comprehensive range of scenarios compared to ADASs.

There is growing interest in integrating ADASs and FSD technologies to create more robust and versatile systems. Fagnant et al. [105] proposed a hierarchical framework that combines the reliability of ADASs with the advanced capabilities of FSD systems, potentially offering a pathway to safer and more capable autonomous vehicles.

5.3. Regulatory and Ethical Considerations

The development and deployment of autonomous driving technologies raise significant regulatory and ethical questions. This section explores the current regulatory landscape, ethical frameworks for decision-making, and liability issues in autonomous systems.

The regulatory landscape for autonomous vehicles is rapidly evolving. Taeihagh and Lim [106] provided an overview of global regulatory approaches to autonomous vehicles, highlighting the challenges in balancing innovation with safety concerns. They noted significant variations in regulatory frameworks across different countries and regions.

Ethical decision-making in autonomous vehicles remains a contentious issue. Awad et al. [75] conducted a global survey on moral decisions made by autonomous vehicles, revealing significant cultural variations in ethical preferences. This highlights the need for flexible ethical frameworks that can adapt to different cultural contexts.

As vehicles become more autonomous, questions of liability and responsibility become increasingly complex [107]. De Bruyne and Werbrouck [108] examined the legal implications of autonomous vehicles, discussing potential shifts in liability from drivers to manufacturers and software developers.

5.4. Future Research Directions and Societal Impact

The field of autonomous driving continues to evolve rapidly, with several key areas emerging as priorities for future research and development. This section outlines a roadmap for future advancements and discusses the potential societal impacts of autonomous vehicles.

Achieving higher levels of autonomy requires advancements in multiple areas. Badue et al. [109] outlined a roadmap for autonomous driving research, emphasizing the need for improvements in perception, decision-making, and control algorithms, as well as advancements in hardware and infrastructure. Zhao et al. [104] identified several critical areas for future research, including improved sensor fusion, more robust machine-learning models, and enhanced human-machine interfaces.

The widespread adoption of autonomous vehicles is expected to have significant societal and industrial impacts [110,111]. Fagnant and Kockelman [105] analyzed the potential effects of autonomous vehicles on traffic safety, congestion, and urban planning. They projected substantial benefits in terms of reduced accidents and improved mobility, but also noted potential challenges such as job displacement [112,113] in the transportation sector.

As autonomous driving technologies advance, the critical evaluation of current algorithms is essential. Muhammad et al. [114] proposed a framework for assessing the safety and reliability of AI-based autonomous driving systems, emphasizing the need for rigorous testing and validation processes. Despite significant progress, several major research gaps and challenges still need to be addressed [115,116]. Litman [117] identified vital areas requiring further research, including improving performance in adverse weather conditions, enhancing cybersecurity measures, and developing more sophisticated methods [118] for handling complex urban environments.

6. Conclusions

The field of autonomous driving is at a critical juncture, with ADAS technologies becoming increasingly sophisticated and FSD systems on the horizon. While significant progress has been made, numerous challenges remain in algorithmic robustness, ethical decision-making, and regulatory frameworks. Future research should focus on bridging the gap between ADASs and FSD systems, improving performance in challenging conditions, and addressing the broader societal implications of autonomous vehicles. As these technologies continue to evolve, they have the potential to revolutionize transportation, enhancing safety, efficiency, and mobility for millions of people worldwide.

Funding

This research received no external funding.

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Acknowledgments

The authors would like to thank the creators of the datasets IDD-AW, SID, DAWN, WEDGE, nuScenes, and Waymo Open Dataset for providing invaluable data that supported this research. In preparing the manuscript, the author utilized generative AI and AI-assisted technologies for literature organization and grammar revision. Specifically, ChatGPT and Claude were used to assist with organizing references and refining the language and phrasing of the manuscript. However, the author solely authored the core content and research insights, with generative AI and AI-assisted technologies used only for auxiliary tasks such as language optimization and structural adjustments.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sezgin, F.; Vriesman, D.; Steinhauser, D.; Lugner, R.; Brandmeier, T. Safe Autonomous Driving in Adverse Weather: Sensor Evaluation and Performance Monitoring. In Proceedings of the IEEE Intelligent Vehicles Symposium, Anchorage, AK, USA, 4–7 June 2023.

- Dey, K.C.; Mishra, A.; Chowdhury, M. Potential of intelligent transportation systems in mitigating adverse weather impacts on road mobility: A review. IEEE Trans. Intell. Transp. Syst. 2015, 16, 1107–1119.

- Yang, G.; Song, X.; Huang, C.; Deng, Z.; Shi, J.; Zhou, B. Drivingstereo: A large-scale dataset for stereo matching in autonomous driving scenarios. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Wang, W.; You, X.; Chen, L.; Tian, J.; Tang, F.; Zhang, L. A Scalable and Accurate De-Snowing Algorithm for LiDAR Point Clouds in Winter. Remote Sens. 2022, 14, 1468.

- Musat, V.; Fursa, I.; Newman, P.; Cuzzolin, F.; Bradley, A. Multi-weather city: Adverse weather stacking for autonomous driving. In Proceedings of the IEEE International Conference on Computer Vision, Virtual, 11–17 October 2021.

- Ha, M.H.; Kim, C.H.; Park, T.H. Object Recognition for Autonomous Driving in Adverse Weather Condition Using Polarized Camera. In Proceedings of the 2022 10th International Conference on Control, Mechatronics and Automation, ICCMA 2022, Luxembourg, 9–12 November 2022.

- Kim, Y.; Shin, J. Efficient and Robust Object Detection Against Multi-Type Corruption Using Complete Edge Based on Lightweight Parameter Isolation. IEEE Trans. Intell. Veh. 2024, 9, 3181–3194.

- Bijelic, M.; Gruber, T.; Ritter, W. Benchmarking Image Sensors under Adverse Weather Conditions for Autonomous Driving. In Proceedings of the IEEE Intelligent Vehicles Symposium, Changshu, China, 26–30 June 2018.

- Wu, C.H.; Tai, T.C.; Lai, C.F. Semantic Image Segmentation in Similar Fusion Background for Self-driving Vehicles. Sens. Mater. 2022, 34, 467–491.

- Du, Y.; Yang, T.; Chang, Q.; Zhong, W.; Wang, W. Enhancing Lidar and Radar Fusion for Vehicle Detection in Adverse Weather via Cross-Modality Semantic Consistency. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2024.

- Wang, J.; Wu, Z.; Liang, Y.; Tang, J.; Chen, H. Perception Methods for Adverse Weather Based on Vehicle Infrastructure Cooperation System: A Review. Sensors 2024, 24, 374.

- Mu, M.; Wang, C.; Liu, X.; Bi, H.; Diao, H. AI monitoring and warning system for low visibility of freeways using variable weight combination model. Adv. Control Appl. Eng. Ind. Syst. 2024, e195.

- Almalioglu, Y.; Turan, M.; Trigoni, N.; Markham, A. Deep learning-based robust positioning for all-weather autonomous driving. Nat. Mach. Intell. 2022, 4, 749–760.

- Sun, P.P.; Zhao, X.M.; Jiang, Y.D.; Wen, S.Z.; Min, H.G. Experimental Study of Influence of Rain on Performance of Automotive LiDAR. Zhongguo Gonglu Xuebao/China J. Highw. Transp. 2022, 35, 318–328.

- Nahata, D.; Othman, K. Exploring the challenges and opportunities of image processing and sensor fusion in autonomous vehicles: A comprehensive review. AIMS Electron. Electr. Eng. 2023, 7, 271–321.

- Al-Haija, Q.A.; Gharaibeh, M.; Odeh, A. Detection in Adverse Weather Conditions for Autonomous Vehicles via Deep Learning. AI 2022, 3, 303–317.

- Sheeny, M.; de Pellegrin, E.; Mukherjee, S.; Ahrabian, A.; Wang, S.; Wallace, A. Radiate: A Radar Dataset for Automotive Perception in Bad Weather. In Proceedings of the IEEE International Conference on Robotics and Automation, Xi’an, China, 30 May–5 June 2021.

- Gupta, H.; Kotlyar, O.; Andreasson, H.; Lilienthal, A.J. Robust Object Detection in Challenging Weather Conditions. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024.

- Yoneda, K.; Suganuma, N.; Yanase, R.; Aldibaja, M. Automated driving recognition technologies for adverse weather conditions. IATSS Res. 2019, 43, 253–262.

- Hannan Khan, A.; Tahseen, S.; Rizvi, R.; Dengel, A. Real-Time Traffic Object Detection for Autonomous Driving. 31 January 2024. Available online: https://arxiv.org/abs/2402.00128v2 (accessed on 24 October 2024).

- Hassaballah, M.; Kenk, M.A.; Muhammad, K.; Minaee, S. Vehicle Detection and Tracking in Adverse Weather Using a Deep Learning Framework. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4230–4242.

- Chougule, A.; Chamola, V.; Sam, A.; Yu, F.R.; Sikdar, B. A Comprehensive Review on Limitations of Autonomous Driving and Its Impact on Accidents and Collisions. IEEE Open J. Veh. Technol. 2024, 5, 142–161.

- Scheiner, N.; Kraus, F.; Appenrodt, N.; Dickmann, J.; Sick, B. Object detection for automotive radar point clouds—A comparison. AI Perspect. 2021, 3, 6. [Google Scholar] [CrossRef]

- Aloufi, N.; Alnori, A.; Basuhail, A. Enhancing Autonomous Vehicle Perception in Adverse Weather: A Multi Objectives Model for Integrated Weather Classification and Object Detection. Electronics 2024, 13, 3063.

- Gehrig, S.; Schneider, N.; Stalder, R.; Franke, U. Stereo vision during adverse weather—Using priors to increase robustness in real-time stereo vision. Image Vis. Comput. 2017, 68, 28–39. [Google Scholar] [CrossRef]

- Peng, L.; Wang, H.; Li, J. Uncertainty Evaluation of Object Detection Algorithms for Autonomous Vehicles. Automot. Innov. 2021, 4, 241–252.

- Hasirlioglu, S.; Doric, I.; Lauerer, C.; Brandmeier, T. Modeling and simulation of rain for the test of automotive sensor systems. In Proceedings of the IEEE Intelligent Vehicles Symposium, Gothenburg, Sweden, 19–22 June 2016.

- Bernardin, F.; Bremond, R.; Ledoux, V.; Pinto, M.; Lemonnier, S.; Cavallo, V.; Colomb, M. Measuring the effect of the rainfall on the windshield in terms of visual performance. Accid. Anal. Prev. 2014, 63, 83–88.

- Heinzler, R.; Schindler, P.; Seekircher, J.; Ritter, W.; Stork, W. Weather influence and classification with automotive lidar sensors. In Proceedings of the IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019.

- Bijelic, M.; Gruber, T.; Ritter, W. A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down? In Proceedings of the IEEE Intelligent Vehicles Symposium, Changshu, Suzhou, China, 26–30 June 2018.

- Rasshofer, R.H.; Spies, M.; Spies, H. Influences of weather phenomena on automotive laser radar systems. Adv. Radio Sci. 2011, 9, 49–60.

- Yang, H.; Ding, M.; Carballo, A.; Zhang, Y.; Ohtani, K.; Niu, Y.; Ge, M.; Feng, Y.; Takeda, K. Synthesizing Realistic Snow Effects in Driving Images Using GANs and Real Data with Semantic Guidance. In Proceedings of the 2023 IEEE Intelligent Vehicles Symposium (IV), Anchorage, AK, USA, 4–7 June 2023.

- Han, C.; Huo, J.; Gao, Q.; Su, G.; Wang, H. Rainfall Monitoring Based on Next-Generation Millimeter-Wave Backhaul Technologies in a Dense Urban Environment. Remote Sens. 2020, 12, 1045.

- Lee, S.; Lee, D.; Choi, P.; Park, D. Accuracy–power controllable lidar sensor system with 3d object recognition for autonomous vehicle. Sensors 2020, 20, 5706.

- Ehrnsperger, M.G.; Siart, U.; Moosbühler, M.; Daporta, E.; Eibert, T.F. Signal degradation through sediments on safety-critical radar sensors. Adv. Radio Sci. 2019, 17, 91–100.

- Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

- Chowdhuri, S.; Pankaj, T.; Zipser, K. MultiNet: Multi-Modal Multi-Task Learning for Autonomous Driving. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision, WACV, Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1496–1504. Available online: https://arxiv.org/abs/1709.05581v4 (accessed on 24 October 2024).

- Huang, Z.; Lv, C.; Xing, Y.; Wu, J. Multi-Modal Sensor Fusion-Based Deep Neural Network for End-to-End Autonomous Driving with Scene Understanding. IEEE Sens. J. 2021, 21, 11781–11790.

- Efrat, N.; Bluvstein, M.; Oron, S.; Levi, D.; Garnett, N.; Shlomo, B.E. 3D-LaneNet+: Anchor Free Lane Detection using a Semi-Local Representation. arXiv 2020, arXiv:2011.01535.

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868.

- Gamba, M.T.; Marucco, G.; Pini, M.; Ugazio, S.; Falletti, E.; Lo Presti, L. Prototyping a GNSS-Based Passive Radar for UAVs: An Instrument to Classify the Water Content Feature of Lands. Sensors 2015, 15, 28287–28313.

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Rasouli, A.; Tsotsos, J.K. Autonomous vehicles that interact with pedestrians: A survey of theory and practice. IEEE Trans. Intell. Transp. Syst. 2020, 21, 900–918.

- Cui, G.; Zhang, W.; Xiao, Y.; Yao, L.; Fang, Z. Cooperative Perception Technology of Autonomous Driving in the Internet of Vehicles Environment: A Review. Sensors 2022, 22, 5535.

- Xu, M.; Niyato, D.; Chen, J.; Zhang, H.; Kang, J.; Xiong, Z.; Mao, S.; Han, Z. Generative AI-Empowered Simulation for Autonomous Driving in Vehicular Mixed Reality Metaverses. IEEE J. Sel. Top. Signal Process. 2023, 17, 1064–1079.

- Hasirlioglu, S.; Kamann, A.; Doric, I.; Brandmeier, T. Test methodology for rain influence on automotive surround sensors. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Rio de Janeiro, Brazil, 1–4 November 2016.

- Zhu, M.; Wang, X.; Wang, Y. Human-like autonomous car-following model with deep reinforcement learning. Transp. Res. Part C Emerg. Technol. 2018, 97, 348–368.

- Ferranti, L.; Brito, B.; Pool, E.; Zheng, Y.; Ensing, R.M.; Happee, R.; Shyrokau, B.; Kooij, J.F.P.; Alonso-Mora, J.; Gavrila, D.M. SafeVRU: A research platform for the interaction of self-driving vehicles with vulnerable road users. In Proceedings of the IEEE Intelligent Vehicles Symposium, Paris, France, 9–12 June 2019.

- Mozaffari, S.; Al-Jarrah, O.Y.; Dianati, M.; Jennings, P.; Mouzakitis, A. Deep Learning-Based Vehicle Behavior Prediction for Autonomous Driving Applications: A Review. IEEE Trans. Intell. Transp. Syst. 2022, 23, 33–47.

- Wang, C.; Sun, Q.; Li, Z.; Zhang, H. Human-like lane change decision model for autonomous vehicles that considers the risk perception of drivers in mixed traffic. Sensors 2020, 20, 2259.

- Shaik, F.A.; Malreddy, A.; Billa, N.R.; Chaudhary, K.; Manchanda, S.; Varma, G. IDD-AW: A Benchmark for Safe and Robust Segmentation of Drive Scenes in Unstructured Traffic and Adverse Weather. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2024; pp. 4602–4611.

- El-Shair, Z.A.; Abu-Raddaha, A.; Cofield, A.; Alawneh, H.; Aladem, M.; Hamzeh, Y.; Rawashdeh, S.A. SID: Stereo Image Dataset for Autonomous Driving in Adverse Conditions. In Proceedings of the NAECON 2024-IEEE National Aerospace and Electronics Conference, Dayton, OH, USA, 15–18 July 2024; pp. 403–408.

- Kenk, M.A.; Hassaballah, M. DAWN: Vehicle Detection in Adverse Weather Nature. arXiv 2020, arXiv:2008.05402.

- Marathe, A.; Ramanan, D.; Walambe, R.; Kotecha, K. WEDGE: A Multi-Weather Autonomous Driving Dataset Built from Generative Vision-Language Models. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Vancouver, BC, Canada, 17–24 June 2023.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. NuScenes: A Multimodal Dataset for Autonomous Driving. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Sun, P.; Kretzschmar, H.; Dotiwalla, X.; Chouard, A.; Patnaik, V.; Tsui, P.; Guo, J.; Zhou, Y.; Chai, Y.; Caine, B.; et al. Scalability in Perception for Autonomous Driving: Waymo Open Dataset. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Wang, Y.; Zheng, K.; Tian, D.; Duan, X.; Zhou, J. Pre-Training with Asynchronous Supervised Learning for Reinforcement Learning Based Autonomous Driving. Front. Inf. Technol. Electron. Eng. 2021, 22, 673–686.

- Tahir, N.U.A.; Zhang, Z.; Asim, M.; Chen, J.; ELAffendi, M. Object Detection in Autonomous Vehicles Under Adverse Weather: A Review of Traditional and Deep Learning Approaches. Algorithms 2024, 17, 103.

- Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. Commun. ACM 2020, 63, 139–144.

- Bavirisetti, D.P.; Martinsen, H.R.; Kiss, G.H.; Lindseth, F. A Multi-Task Vision Transformer for Segmentation and Monocular Depth Estimation for Autonomous Vehicles. IEEE Open J. Intell. Transp. Syst. 2023, 4, 909–928.

- Alahi, A.; Goel, K.; Ramanathan, V.; Robicquet, A.; Li, F.F.; Savarese, S. Social LSTM: Human Trajectory Prediction in Crowded Spaces. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Yurtsever, E.; Lambert, J.; Carballo, A.; Takeda, K. A Survey of Autonomous Driving: Common Practices and Emerging Technologies. IEEE Access 2020, 8, 58443–58469.

- Aoude, G.S.; Desaraju, V.R.; Stephens, L.H.; How, J.P. Driver Behavior Classification at Intersections and Validation on Large Naturalistic Data Set. IEEE Trans. Intell. Transp. Syst. 2012, 13, 724–736.

- Wang, Z.; Zheng, L.; Liu, Y.; Li, Y.; Wang, S. Towards Real-Time Multi-Object Tracking. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2020.

- Xu, H.; Gao, Y.; Yu, F.; Darrell, T. End-to-End Learning of Driving Models from Large-Scale Video Datasets. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017.

- Jocher, G. ultralytics/yolov5: v7.0-YOLOv5 SOTA Real-Time Instance Segmentation (v7.0). Available online: https://github.com/ultralytics/yolov5/tree/v7.0 (accessed on 24 October 2024).

- Han, H.; Xie, T. Lane Change Trajectory Prediction of Vehicles in Highway Interweaving Area Using Seq2Seq-Attention Network. Zhongguo Gonglu Xuebao/China J. Highw. Transp. 2020, 33, 106–118.

- Shalev-Shwartz, S.; Shammah, S.; Shashua, A. Safe, Multi-Agent, Reinforcement Learning for Autonomous Driving. arXiv 2016, arXiv:1610.03295v1.

- Hang, P.; Lv, C.; Huang, C.; Cai, J.; Hu, Z.; Xing, Y. An Integrated Framework of Decision Making and Motion Planning for Autonomous Vehicles Considering Social Behaviors. IEEE Trans. Veh. Technol. 2020, 69, 14458–14469.

- Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR 2017), Honolulu, HI, USA, 21–26 July 2017.

- Xu, X.; Wang, X.; Wu, X.; Hassanin, O.; Chai, C. Calibration and Evaluation of the Responsibility-Sensitive Safety Model of Autonomous Car-Following Maneuvers Using Naturalistic Driving Study Data. Transp. Res. Part C Emerg. Technol. 2021, 123, 102988.

- Rjoub, G.; Wahab, O.A.; Bentahar, J.; Bataineh, A.S. Improving Autonomous Vehicles Safety in Snow Weather Using Federated YOLO CNN Learning. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2021.

- Lefèvre, S.; Vasquez, D.; Laugier, C. A Survey on Motion Prediction and Risk Assessment for Intelligent Vehicles. ROBOMECH J. 2014, 1, 1.

- Bolano, A. “Moral Machine Experiment”: Large-Scale Study Reveals Regional Differences in Ethical Preferences for Self-Driving Cars. Sci. Trends 2018.

- Awad, E.; Dsouza, S.; Kim, R.; Schulz, J.; Henrich, J.; Shariff, A.; Bonnefon, J.-F.; Rahwan, I. The Moral Machine Experiment. Nature 2018, 563, 59–64.

- Djuric, N.; Radosavljevic, V.; Cui, H.; Nguyen, T.; Chou, F.C.; Lin, T.H.; Schneider, J. Motion Prediction of Traffic Actors for Autonomous Driving Using Deep Convolutional Networks. arXiv 2018, arXiv:1808.05819.

- Chandra, R.; Bhattacharya, U.; Bera, A.; Manocha, D. Traphic: Trajectory Prediction in Dense and Heterogeneous Traffic Using Weighted Interactions. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Li, S.; Shu, K.; Chen, C.; Cao, D. Planning and Decision-Making for Connected Autonomous Vehicles at Road Intersections: A Review. Chin. J. Mech. Eng. 2021, 34, 1–18.

- Schwarting, W.; Alonso-Mora, J.; Rus, D. Planning and Decision-Making for Autonomous Vehicles. Annu. Rev. Control Robot. Auton. Syst. 2018, 1, 187–210.

- Wang, J.; Wu, J.; Li, Y. The Driving Safety Field Based on Driver-Vehicle-Road Interactions. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2203–2214.

- Jahromi, B.S.; Tulabandhula, T.; Cetin, S. Real-Time Hybrid Multi-Sensor Fusion Framework for Perception in Autonomous Vehicles. Sensors 2019, 19, 4357.

- Choi, D.; An, T.H.; Ahn, K.; Choi, J. Driving Experience Transfer Method for End-to-End Control of Self-Driving Cars. arXiv 2018, arXiv:1809.01822.

- Li, G.; Yang, Y.; Zhang, T.; Qu, X.; Cao, D.; Cheng, B.; Li, K. Risk Assessment Based Collision Avoidance Decision-Making for Autonomous Vehicles in Multi-Scenarios. Transp. Res. Part C Emerg. Technol. 2021, 122, 102820.

- Ye, B.-L.; Wu, W.; Gao, H.; Lu, Y.; Cao, Q.; Zhu, L. Stochastic Model Predictive Control for Urban Traffic Networks. Appl. Sci. 2017, 7, 588.

- Galceran, E.; Cunningham, A.G.; Eustice, R.M.; Olson, E. Multipolicy Decision-Making for Autonomous Driving via Change-Point-Based Behavior Prediction: Theory and Experiment. Auton. Robot. 2017, 41, 1367–1382.

- Benderius, O.; Berger, C.; Malmsten Lundgren, V. The Best Rated Human-Machine Interface Design for Autonomous Vehicles in the 2016 Grand Cooperative Driving Challenge. IEEE Trans. Intell. Transp. Syst. 2018, 19, 1302–1307.

- Patole, S.M.; Torlak, M.; Wang, D.; Ali, M. Automotive Radars: A Review of Signal Processing Techniques. IEEE Signal Process. Mag. 2017, 34, 22–35.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot Multibox Detector. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2016.

- Hu, X.; Chen, L.; Tang, B.; Cao, D.; He, H. Dynamic Path Planning for Autonomous Driving on Various Roads with Avoidance of Static and Moving Obstacles. Mech. Syst. Signal Process. 2018, 100, 482–500.

- Feng, D.; Rosenbaum, L.; Timm, F.; Dietmayer, K. Leveraging Heteroscedastic Aleatoric Uncertainties for Robust Real-Time LiDAR 3D Object Detection. In Proceedings of the 2019 IEEE Intelligent Vehicles Symposium (IV), Paris, France, 9–12 June 2019.

- Dixit, V.V.; Chand, S.; Nair, D.J. Autonomous Vehicles: Disengagements, Accidents and Reaction Times. PLoS ONE 2016, 11, e0168054.

- Kiran, B.R.; Sobh, I.; Talpaert, V.; Mannion, P.; Sallab, A.A.A.; Yogamani, S.; Perez, P. Deep Reinforcement Learning for Autonomous Driving: A Survey. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4909–4926.

- Malik, S.; Khan, M.A.; Aadam; El-Sayed, H.; Iqbal, F.; Khan, J.; Ullah, O. CARLA+: An Evolution of the CARLA Simulator for Complex Environment Using a Probabilistic Graphical Model. Drones 2023, 7, 111.

- Zhao, D.; Lam, H.; Peng, H.; Bao, S.; LeBlanc, D.J.; Nobukawa, K.; Pan, C.S. Accelerated Evaluation of Automated Vehicles Safety in Lane-Change Scenarios Based on Importance Sampling Techniques. IEEE Trans. Intell. Transp. Syst. 2017, 18, 595–607.

- Wang, J.; Zhang, L.; Zhang, D.; Li, K. An Adaptive Longitudinal Driving Assistance System Based on Driver Characteristics. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1–12.

- Bijelic, M.; Gruber, T.; Mannan, F.; Kraus, F.; Ritter, W.; Dietmayer, K.; Heide, F. Seeing through Fog without Seeing Fog: Deep Multimodal Sensor Fusion in Unseen Adverse Weather. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020.

- Petrovskaya, A.; Thrun, S. Model-Based Vehicle Detection and Tracking for Autonomous Urban Driving. Auton. Robot. 2009, 26, 123–139.

- Boban, M.; Kousaridas, A.; Manolakis, K.; Eichinger, J.; Xu, W. Connected Roads of the Future: Use Cases, Requirements, and Design Considerations for Vehicle-To-Everything Communications. IEEE Veh. Technol. Mag. 2018, 13, 110–123.

- Bengler, K.; Dietmayer, K.; Farber, B.; Maurer, M.; Stiller, C.; Winner, H. Three Decades of Driver Assistance Systems: Review and Future Perspectives. IEEE Intell. Transp. Syst. Mag. 2014, 6, 6–22.

- Kuutti, S.; Bowden, R.; Jin, Y.; Barber, P.; Fallah, S. A Survey of Deep Learning Applications to Autonomous Vehicle Control. IEEE Trans. Intell. Transp. Syst. 2021, 22, 712–733.