Abstract

This study presents a hybrid evolutionary algorithm that combines the Arctic Puffin Optimization (APO) algorithm with the JADE dynamic differential evolution framework. The APO algorithm, inspired by the foraging patterns of Arctic puffins, has several weaknesses: a tendency to converge prematurely to local minima, a slow convergence rate, and an insufficient balance between exploration and exploitation. To mitigate these drawbacks, the proposed hybrid approach incorporates the dynamic features of JADE, which improve the exploration–exploitation trade-off through adaptive parameter control and an external archive. By combining APO's search mechanisms, modeled after the foraging behavior of Arctic puffins, with JADE's adaptive strategies, the integration significantly improves global search efficiency and accelerates convergence. The effectiveness of APO-JADE is demonstrated on the unimodal and multimodal functions of the IEEE CEC 2022 benchmark suite, where it outperforms 32 compared optimization algorithms. APO-JADE is further applied to complex engineering design problems, including the optimization of engineering structures and mechanisms, demonstrating its practical utility in the challenging, multi-dimensional search spaces typical of real-world engineering problems. The results confirm that APO-JADE outperformed all of the compared optimizers, effectively handling the unknown and complex search spaces encountered in engineering design optimization.

1. Introduction

In the field of engineering design, the quest for optimal solutions represents a formidable challenge. This difficulty arises primarily from the complex, multi-dimensional nature of design spaces, where multiple variables and interdependencies must be navigated simultaneously [1]. Moreover, the intricate constraints that govern these spaces, ranging from material properties and environmental considerations to budgetary and time constraints, further complicate the optimization process [2]. Traditional optimization methods, such as gradient-based techniques and linear programming, often suffer from several limitations that undermine their effectiveness in such complex scenarios [3].

One of the most significant limitations of traditional methods is their tendency to converge to local optima rather than global ones. This issue is particularly problematic in complex design landscapes that feature numerous feasible solutions, separated by suboptimal regions [4]. Furthermore, traditional methods often entail high computational costs, especially as the dimensionality of the problem increases, which can make them impractical for large-scale applications or for use in real-time scenarios [5]. Additionally, these methods typically rely on the availability and accuracy of gradient information, which may not be obtainable for all types of problems, such as those involving discontinuous or non-differentiable spaces [6].

In recent years, there has been significant growth in the development of metaheuristic algorithms to address these challenges [7]. These algorithms provide a powerful, adaptable, and efficient approach to solving optimization problems in diverse fields [8]. Unlike traditional methods, metaheuristics operate independently of gradient information, employing strategies inspired by natural or social processes to locate optimal solutions [9]. Their flexibility enables their application across a broad spectrum of problems, ranging from abstract mathematical models to real-world engineering design challenges [10,11]. Additionally, metaheuristics are highly regarded for their capability to overcome local optima, ensuring a more thorough exploration of the solution space and enhancing the probability of identifying global optima [12]. Due to their stochastic and dynamic nature, these algorithms are particularly well suited for addressing the complexities and uncertainties characteristic of engineering design, offering innovative and efficient solutions in a rapidly advancing technological landscape [13].

The Arctic Puffin Optimization (APO) algorithm, introduced in [14], is a recently developed metaheuristic inspired by the distinctive foraging strategies of Arctic puffins. This algorithm draws on the puffins' ability to navigate and adapt to the harsh and variable conditions of the Arctic, balancing its exploration and exploitation phases. Despite its potential, APO, like many nature-inspired algorithms, can benefit from enhancements that allow it to address complex, multimodal engineering design problems more effectively.

In this study, we propose an innovative hybrid algorithm that synergizes the strengths of Arctic Puffin Optimization with JADE, an advanced differential evolution algorithm characterized by its dynamic self-adaptive parameter control mechanism [15]. JADE’s dynamic parameter tuning significantly accelerates convergence and improves the precision of the evolutionary process, making it particularly advantageous for continuous optimization tasks. By integrating JADE’s adaptive strategies with the robust exploratory capabilities of APO, the resulting hybrid algorithm is designed to enhance performance in tackling challenging engineering design problems.

This research introduces the Hybrid Arctic Puffin Optimization with JADE (APO-JADE) algorithm, demonstrating its application in solving engineering design problems. Through comprehensive comparative evaluations with established optimization techniques, the results underscore the superior efficiency, convergence speed, and solution quality of APO-JADE. Furthermore, the versatility of the proposed algorithm is validated through its application to real-world engineering problems, highlighting its potential as a robust and effective tool for complex design optimization challenges.

1.1. Research Contribution

The primary contributions of this research are outlined as follows:

- Proposal of the Hybrid APO-JADE Algorithm: This study introduces the Hybrid Arctic Puffin Optimization with JADE (APO-JADE) algorithm, integrating the exploratory capabilities of APO with the adaptive evolutionary mechanisms of JADE to enhance optimization performance and reliability.

- Superior Performance on Standardized Benchmarks: Through extensive evaluations on the CEC2022 benchmark functions, APO-JADE is shown to outperform existing algorithms, achieving superior convergence speed, solution accuracy, and computational efficiency.

- Practical Applications in Engineering Design: The APO-JADE algorithm has been effectively applied to optimize the design of planetary gear trains and three-bar truss structures, validating its robustness and practical utility in solving complex engineering optimization problems.

- Statistical Analysis and Comparison with the State of the Art: This research includes a thorough statistical comparison of APO-JADE with other state-of-the-art optimization techniques, highlighting its superior performance across various metrics.

- Versatility and Applicability: The adaptability of APO-JADE is showcased through its ability to handle diverse optimization landscapes, emphasizing its potential as a general-purpose optimization technique for a variety of industrial applications.

1.2. Paper Structure

This paper is structured as follows: The Literature Review section examines advancements in engineering design optimization, with a particular focus on hybrid algorithms developed to address the limitations of standalone optimization methods. This section emphasizes improvements in solution accuracy, convergence rates, and the ability to overcome local optima by leveraging the complementary strengths of multiple algorithms. The Hybrid Arctic Puffin Optimization with JADE (APO-JADE) section introduces the conceptual framework of the APO-JADE algorithm, elaborating on the integration of APO’s nature-inspired mechanisms with JADE’s adaptive parameter control and external archive strategies. The Mathematical Model section presents the mathematical formulations underpinning the APO-JADE algorithm, encompassing initialization, population dynamics, hybridization mechanisms, and the equations guiding its exploration and exploitation phases.

The Results and Discussion section provides a comprehensive performance analysis of APO-JADE based on rigorous testing using CEC2022 benchmark functions. This analysis includes statistical evaluations, convergence behavior, search dynamics, fitness assessments, diversity metrics, and box plot visualizations. The Engineering Design Optimization Applications section demonstrates the practical applicability of APO-JADE by addressing two real-world engineering optimization problems: the design of a planetary gear train and a three-bar truss structure. This section includes detailed problem formulations, fitness functions, constraints, and optimization outcomes. Finally, the Conclusion section summarizes the key findings, underscores the significant enhancements achieved by APO-JADE, and proposes potential avenues for future research and development.

2. Literature Review

In the domain of engineering design optimization, a variety of hybrid algorithms have been developed to address the limitations of standalone optimization methods. These hybrid strategies aim to enhance solution precision, accelerate convergence rates, and improve the capability to evade local optima by integrating the complementary strengths of multiple algorithms.

Hu et al. [16] proposed the Dynamic Hybrid Dandelion Optimizer (DETDO), which incorporates dynamic tent chaotic mapping, differential evolution (DE), and dynamic t-distribution perturbation. This method addresses the original dandelion optimizer's shortcomings, such as limited exploitation capabilities and slow convergence rates. Experimental findings reveal that DETDO delivers superior optimization accuracy and faster convergence, establishing its suitability for real-world engineering challenges. In a different application domain, Saberi et al. [17] introduced a biomimetic electrospun cartilage decellularized matrix (CDM)/chitosan nanofiber hybrid material for tissue engineering, optimized using the Box–Behnken design to achieve optimal mechanical properties and structural characteristics. This hybrid material demonstrated improved cell proliferation and enhanced nanofiber properties, making it a promising solution for tissue engineering applications.

Verma and Parouha [18] developed the haDEPSO algorithm, a hybrid approach combining advanced differential evolution (aDE) and particle swarm optimization (aPSO). This algorithm achieves a balance between global and local search capabilities, leading to superior solutions for intricate engineering optimization problems. Similarly, Hashim et al. [19] introduced AOA-BSA, a hybrid optimization algorithm that merges the Archimedes Optimization Algorithm (AOA) with the Bird Swarm Algorithm (BSA). This integration enhances the exploitation phase while maintaining a balance between exploration and exploitation, demonstrating exceptional performance in solving both constrained and unconstrained engineering problems. Zhang et al. [20] presented the CSDE hybrid algorithm, which combines Cuckoo Search (CS) with differential evolution (DE). By segmenting the population into subgroups and independently applying CS and DE, the algorithm avoids premature convergence and locates better global optima for constrained engineering problems.

Sun [21] proposed a hybrid role-engineering optimization method that integrates natural language processing with integer linear programming to construct optimal role-based access control systems, significantly improving security. Verma and Parouha [22] further extended their work on haDEPSO to constrained function optimization, demonstrating its effectiveness in solving complex engineering challenges by employing a multi-population strategy. This approach combines advanced differential evolution with Particle Swarm Optimization, outperforming other state-of-the-art algorithms. Panagant et al. [23] developed the HMPANM algorithm, which integrates the Marine Predators Optimization Algorithm with the Nelder–Mead method. This hybrid algorithm has proven highly effective in optimizing structural design problems within the automotive industry, showcasing its practical application in industrial component optimization.

Yildiz and Mehta [24] proposed the HTSSA-NM and MRFO algorithms to optimize the structural and shape parameters of automobile brake pedals. These algorithms demonstrated strong performance in achieving lightweight, efficient designs, outperforming several established metaheuristics. Similarly, Duan and Yu [25] introduced a collaboration-based hybrid GWO-SCA optimizer (cHGWOSCA), which integrates the Grey Wolf Optimizer (GWO) and the Sine Cosine Algorithm (SCA). This hybrid method enhances global exploration and local exploitation, achieving notable success in global optimization and solving constrained engineering design problems. Barshandeh et al. [26] developed the HMPA, a hybrid multi-population algorithm that combines artificial ecosystem-based optimization with Harris Hawks Optimization. By dynamically exchanging solutions among sub-populations, this approach effectively balances exploration and exploitation, solving a wide array of engineering optimization challenges.

Uray et al. [27] presented a hybrid harmony search algorithm augmented by the Taguchi method to optimize algorithm parameters for engineering design problems. This combination improves the robustness and effectiveness of the optimization process by leveraging statistical methods for parameter estimation. Varaee et al. [28] introduced a hybrid algorithm that combines Particle Swarm Optimization (PSO) with the Generalized Reduced Gradient (GRG) algorithm, achieving a balance between exploration and exploitation. This method exhibited competitive results when applied to benchmark optimization problems and constrained engineering challenges. Fakhouri et al. [29] proposed a novel hybrid evolutionary algorithm that integrates PSO, the Sine Cosine Algorithm (SCA), and the Nelder–Mead Simplex (NMS) optimization method, significantly enhancing the search process and demonstrating superior performance in solving engineering design problems.

Dhiman [30] introduced the SSC algorithm, a hybrid metaheuristic combining sine–cosine functions with the Spotted Hyena Optimizer’s attack strategy and the Chimp Optimization Algorithm. This approach proved effective in addressing real-world complex problems and engineering applications. Kundu and Garg [31] developed the LSMA-TLBO algorithm, which integrates the Slime Mould Algorithm (SMA) with Teaching–Learning-Based Optimization (TLBO) and employs Lévy flight-based mutation. This hybrid approach achieved remarkable performance in numerical optimization and engineering design problems. Yang et al. [32] optimized a ladder-shaped hybrid anode for GaN-on-Si Schottky Barrier Diodes, achieving reduced reverse leakage current and exceptional electrical characteristics.

Yang et al. [33] proposed a hybrid proxy model for optimizing engineering parameters in deflagration fracturing for shale reservoirs. This model effectively balances reservoir failure degree with stimulation range, providing an efficient solution for multi-objective optimization in deflagration fracturing engineering. Zhong et al. [34] introduced the Hybrid Remora Crayfish Optimization Algorithm (HRCOA), designed to address continuous optimization problems and wireless sensor network coverage optimization. The algorithm demonstrated scalability and effectiveness across various optimization scenarios. Yildiz and Erdaş [35] developed the Hybrid Taguchi–Salp Swarm Optimization Algorithm (HTSSA), specifically aimed at enhancing the optimization of structural design problems in industry. This algorithm achieved superior results in shape optimization challenges when compared to recent optimization techniques.

Cheng et al. [36] proposed a robust optimization methodology for engineering structures with hybrid probabilistic and interval uncertainties. By incorporating stochastic and interval uncertain system parameters, the approach utilized a multi-layered refining Latin hypercube sampling-based Monte Carlo simulation and a novel genetic algorithm to solve robust optimization problems. The method was validated through complex engineering structural applications. Huang and Hu [37] developed the Hybrid Beluga Whale Optimization Algorithm (HBWO), which integrates Quasi-Oppositional-Based Learning (QOBL), dynamic and spiral predation strategies, and the Nelder–Mead Simplex search method. The HBWO algorithm demonstrated exceptional feasibility and effectiveness in solving practical engineering problems.

Tang et al. [38] introduced the Multi-Strategy Particle Swarm Optimization Hybrid Dandelion Optimization Algorithm (PSODO), which addresses challenges such as slow convergence rates and susceptibility to local optima. This algorithm demonstrated substantial improvements in global optimization accuracy, convergence speed, and computational efficiency. Similarly, Chagwiza et al. [39] developed a hybrid matheuristic algorithm by integrating the Grotschel–Holland and Max–Min Ant System algorithms. This approach proved effective in solving complex design and network engineering problems by increasing the certainty of achieving optimal solutions. Liu et al. [40] proposed a hybrid algorithm that combines the Seeker Optimization Algorithm with Particle Swarm Optimization, achieving superior performance on benchmark functions and in constrained engineering optimization scenarios.

Adegboye and Ülker [41] presented the AEFA-CSR, a hybrid algorithm that integrates the Cuckoo Search Algorithm with Refraction Learning into the Artificial Electric Field Algorithm. This integration enhances convergence rates and solution precision, yielding promising results across benchmark functions and engineering applications. Wang et al. [42] proposed the Improved Hybrid Aquila Optimizer and Harris Hawks Algorithm (IHAOHHO), which showed exceptional performance in standard benchmark functions and industrial engineering design problems. Kundu and Garg [43] introduced the TLNNABC, a hybrid algorithm combining the Artificial Bee Colony (ABC) algorithm with the Neural Network Algorithm (NNA) and Teaching–Learning-Based Optimization (TLBO). This approach demonstrated remarkable effectiveness in reliability optimization and engineering design applications.

Knypiński et al. [44] employed hybrid variants of the Cuckoo Search (CS) and Grey Wolf Optimization (GWO) algorithms to optimize the steady-state functional parameters of line-start permanent magnet synchronous motors (LSPMSMs) subject to non-linear constraint functions. The primary goal was to optimize the design parameters that govern motor performance, such as efficiency and operational stability. The hybridization leveraged the exploratory capabilities of one algorithm and the exploitative strengths of the other, resulting in a balanced search mechanism capable of escaping local optima and improving overall optimization outcomes.

Dhiman [45] proposed the Emperor Salp Algorithm (ESA), a hybrid bio-inspired metaheuristic optimization method that integrates the strengths of the Emperor Penguin Optimizer with the Salp Swarm Algorithm. This hybrid approach demonstrated superior robustness and the ability to achieve optimal solutions, outperforming several competing algorithms in comparative evaluations.

2.1. Overview of Arctic Puffin Optimization (APO)

The Arctic Puffin Optimization (APO) algorithm [14] is a bio-inspired metaheuristic approach developed to address complex engineering design optimization challenges. This algorithm draws inspiration from the survival strategies and foraging behaviors of Arctic puffins, incorporating two distinct phases: aerial flight (exploration) and underwater foraging (exploitation). These phases are meticulously designed to achieve a balance between global exploration and local exploitation, thereby improving the algorithm’s efficiency in locating optimal solutions [14].

The conceptual foundation of the APO algorithm is rooted in the behavior of Arctic puffins, which exhibit highly coordinated flight patterns and group foraging strategies to enhance their hunting efficiency. These birds fly at low altitudes, dive underwater to capture prey, and dynamically adapt their behaviors based on environmental conditions. When food resources are scarce, puffins adjust their underwater positions strategically to maximize foraging success and use signaling mechanisms to communicate with one another, thereby minimizing risks from predators [14].

2.2. Mathematical Model

2.2.1. Population Initialization

In the Arctic Puffin Optimization (APO) algorithm, each Arctic puffin symbolizes a candidate solution. The initial positions of these solutions are generated randomly within predefined bounds, as described by Equation (1) [14]:

$$ X_i = \mathrm{rand} \cdot (ub - lb) + lb, \quad i = 1, 2, \dots, N \tag{1} $$

Here, $X_i$ represents the position of the i-th puffin, rand denotes a random value uniformly distributed between 0 and 1, ub and lb indicate the upper and lower bounds of the search space, respectively, and N corresponds to the total population size.
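
The initialization in Equation (1) can be sketched in plain Python as follows. This is a minimal illustration, assuming per-dimension bounds passed as lists and an independent uniform draw for every coordinate; the function name `init_population` is hypothetical. The same routine also covers the JADE initialization in Equation (11).

```python
import random


def init_population(n, lb, ub, rng=random):
    """Equation (1): X_i = rand * (ub - lb) + lb for i = 1..n.

    lb and ub are per-dimension lists; a fresh uniform number in [0, 1)
    is drawn for every coordinate of every puffin.
    """
    return [[rng.random() * (u - l) + l for l, u in zip(lb, ub)] for _ in range(n)]


# Usage: five puffins in a 2-D box.
pop = init_population(5, [-10.0, 0.0], [10.0, 1.0], random.Random(0))
```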

2.2.2. Aerial Flight Stage (Exploration)

This stage emulates the coordinated flight and searching behaviors exhibited by Arctic puffins, employing techniques such as Lévy flight and velocity factors to enable efficient exploration of the solution space.

Aerial Search Strategy

The positions of the puffins are updated using the Lévy flight mechanism, as represented in Equation (2) [14]:

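$$ Y_i^{t+1} = X_i^t + \left(X_i^t - X_r^t\right) \cdot L(D) + R, \qquad R = \mathrm{round}\left(0.5 \cdot (0.05 + \mathrm{rand})\right) \cdot \alpha \tag{2} $$

In this equation, $X_i^t$ denotes the position of the i-th puffin at iteration t, $X_r^t$ is a randomly selected puffin, $L(D)$ represents a random value generated from the Lévy flight distribution, and R incorporates a normally distributed factor $\alpha \sim N(0, 1)$ to introduce stochasticity and enhance exploration.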

Swooping Predation Strategy

The swooping predation strategy, modeled by Equation (3), adjusts the displacement of the puffin to simulate a rapid dive for capturing prey [14]:

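$$ Z_i^{t+1} = Y_i^{t+1} \cdot S, \qquad S = \tan\left((\mathrm{rand} - 0.5) \cdot \pi\right) \tag{3} $$

where S is a velocity coefficient.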
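
A minimal sketch of the aerial flight stage (Equations (2) and (3)) is given below. The Lévy generator is an assumption: Mantegna's algorithm with β = 1.5, a common choice in Lévy-flight metaheuristics, is swapped in here. Drawing R and the Lévy number independently per dimension is also an assumption, and the names `levy_step`, `aerial_search`, and `swoop` are hypothetical.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """One Levy-distributed number using Mantegna's algorithm (assumed generator)."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.gauss(0.0, sigma_u)
    v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def aerial_search(x_i, x_r, rng=random):
    """Equation (2): Y_i = X_i + (X_i - X_r) * L(D) + R, applied per dimension."""
    y = []
    for xi, xr in zip(x_i, x_r):
        # round(0.5 * (0.05 + rand)) is 1 only when rand >= 0.95; floor(x + 0.5)
        # rounds half up like MATLAB's round (Python's round is half-to-even).
        r = math.floor(0.5 * (0.05 + rng.random()) + 0.5) * rng.gauss(0.0, 1.0)
        y.append(xi + (xi - xr) * levy_step(rng=rng) + r)
    return y


def swoop(y_i, rng=random):
    """Equation (3): Z_i = Y_i * S with velocity coefficient S = tan((rand - 0.5) * pi)."""
    s = math.tan((rng.random() - 0.5) * math.pi)
    return [yj * s for yj in y_i]
```

Because S is a single Cauchy-like scalar per puffin, swooping rescales the whole position vector, occasionally by a very large factor; a bound-repair step is therefore needed afterwards.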

2.2.3. Merging Candidate Positions

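The positions obtained from the exploration and exploitation stages are combined and ranked based on their fitness values. From this sorted pool, the top N individuals are selected to constitute the updated population. This merging and selection process is mathematically represented by Equations (4)–(6) [14]:

$$ \mathbf{new} = \mathbf{Y} \cup \mathbf{Z} \tag{4} $$

$$ \mathbf{new} = \mathrm{sort}\left(f(\mathbf{new})\right) \tag{5} $$

$$ \mathbf{X}^{t+1} = \mathbf{new}(1{:}N) \tag{6} $$

Here, Y and Z denote the sets of candidate positions generated by the preceding update strategies, f is the fitness function, and new(1:N) selects the N best-ranked individuals.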
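
Equations (4)–(6) amount to a union followed by truncation selection. The sketch below assumes minimization and list-based candidates; `merge_and_select` is a hypothetical name.

```python
def merge_and_select(candidates_y, candidates_z, fitness, n):
    """Equations (4)-(6) for a minimisation problem."""
    pool = list(candidates_y) + list(candidates_z)  # Eq. (4): union of both sets
    pool.sort(key=fitness)                          # Eq. (5): rank by fitness, best first
    return pool[:n]                                 # Eq. (6): keep the N best individuals


def sphere(x):
    return sum(v * v for v in x)


print(merge_and_select([[3.0], [1.0]], [[0.5], [2.0]], sphere, 2))  # [[0.5], [1.0]]
```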

2.3. Underwater Foraging Stage (Exploitation)

The underwater foraging stage involves strategies that enhance the algorithm’s local search capabilities. These include gathering foraging, intensifying search, and avoiding predators.

2.3.1. Gathering Foraging

In the gathering foraging strategy, puffins update their positions based on cooperative behavior, as shown in Equation (7):

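$$ W_i^{t+1} = \begin{cases} X_{r1}^t + F \cdot L(D) \cdot \left(X_{r2}^t - X_{r3}^t\right), & \mathrm{rand} \ge 0.5 \\ X_{r1}^t + F \cdot \left(X_{r2}^t - X_{r3}^t\right), & \mathrm{rand} < 0.5 \end{cases} \tag{7} $$

where $X_{r1}^t$, $X_{r2}^t$, and $X_{r3}^t$ are randomly selected puffins, $L(D)$ is the Lévy flight operator, and F is the cooperative factor, set to 0.5.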
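
The gathering-foraging update of Equation (7) is sketched below. Assumptions: the three partners are distinct and differ from puffin i, the Lévy-scaled branch is taken with probability 0.5, and Lévy numbers come from Mantegna's algorithm (β = 1.5). The helper names are hypothetical.

```python
import math
import random


def levy_step(beta=1.5, rng=random):
    """Levy-distributed number via Mantegna's algorithm (assumed generator)."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    return rng.gauss(0.0, sigma_u) / abs(rng.gauss(0.0, 1.0)) ** (1 / beta)


def gathering_foraging(pop, i, F=0.5, rng=random):
    """Equation (7): W_i = X_r1 + F * [L(D) *] (X_r2 - X_r3)."""
    # Three distinct partners, excluding puffin i (an assumption of this sketch).
    r1, r2, r3 = rng.sample([k for k in range(len(pop)) if k != i], 3)
    use_levy = rng.random() >= 0.5  # Levy-scaled branch with probability 0.5
    w = []
    for a, b, c in zip(pop[r1], pop[r2], pop[r3]):
        step = F * (b - c)
        if use_levy:
            step *= levy_step(rng=rng)  # one Levy draw per dimension, i.e. L(D)
        w.append(a + step)
    return w
```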

2.3.2. Intensifying Search

The intensifying search strategy adjusts the search strategy when food resources are depleted, as described by Equations (8) and (9):

$$ Y_i^{t+1} = W_i^{t+1} \cdot (1 + f) \tag{8} $$

$$ f = 0.1 \cdot (\mathrm{rand} - 1) \cdot \frac{T - t}{T} \tag{9} $$

In these equations, T represents the total number of iterations, while t denotes the current iteration. The factor f is dynamically adjusted based on the iteration progress and incorporates a random component to enhance search intensification.
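
Because (rand − 1) is never positive, f in Equation (9) is non-positive and its magnitude shrinks as t approaches T, so Equation (8) scales a candidate by a factor between 0.9 and 1 that approaches 1 as the search proceeds. A minimal sketch, with the hypothetical function name `intensify`:

```python
import random


def intensify(w_i, t, T, rng=random):
    """Equations (8)-(9): Y_i = W_i * (1 + f), f = 0.1 * (rand - 1) * (T - t) / T."""
    f = 0.1 * (rng.random() - 1.0) * (T - t) / T  # f lies in [-0.1 * (T - t) / T, 0]
    return [wj * (1.0 + f) for wj in w_i]


# Early on, a coordinate can shrink by up to 10%; at t = T it is left unchanged.
print(intensify([2.0, -4.0], 10, 10))  # [2.0, -4.0]
```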

2.3.3. Avoiding Predators

The strategy for avoiding predators is modeled by Equation (10):

Here, the random coefficient in Equation (10) is uniformly distributed within the range [0, 1].

The Arctic Puffin Optimization (APO) algorithm leverages a variety of exploration and exploitation mechanisms inspired by the natural behaviors of Arctic puffins. By incorporating Lévy flight for efficient global exploration, swooping predation for accelerated search, and adaptive underwater foraging strategies for intensified local search, the algorithm achieves a well-calibrated balance between exploration and exploitation. Additionally, the merging and selection processes further enhance the refinement of solutions, establishing APO as a highly effective approach for addressing complex optimization challenges.

2.4. Overview of Dynamic Differential Evolution with Optional External Archive (JADE)

The JADE optimizer (Dynamic Differential Evolution with Optional External Archive) [15] is a sophisticated extension of the traditional differential evolution (DE) algorithm. This advanced variant integrates dynamic parameter control mechanisms alongside an optional external archive to maintain and enhance population diversity. These features enable JADE to effectively address complex optimization challenges by achieving a balanced trade-off between exploration and exploitation [15].

2.5. Inspiration and Motivation

The development of the JADE optimizer stems from the objective of enhancing the conventional differential evolution algorithm by incorporating dynamic parameter adaptation and preserving diversity through the use of an external archive. This innovative approach significantly improves the algorithm’s efficiency and effectiveness in addressing a wide range of optimization challenges [15].

2.6. Mathematical Model

2.6.1. Population Initialization

In the JADE optimizer, the initial population is randomly generated within specified bounds, as represented by Equation (11) [15]:

$$ x_i = \mathrm{rand} \cdot (ub - lb) + lb, \quad i = 1, 2, \dots, N \tag{11} $$

Here, $x_i$ denotes the position of the i-th individual, rand is a random value uniformly distributed between 0 and 1, ub and lb represent the upper and lower bounds, respectively, and N is the population size.

2.6.2. Mutation Strategy

JADE employs a current-to-pbest mutation strategy, described by Equation (12):

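$$ v_i = x_i + F \cdot \left(x_{pbest} - x_i\right) + F \cdot \left(x_{r1} - \tilde{x}_{r2}\right) \tag{12} $$

In this equation, $v_i$ represents the mutant vector, $x_i$ is the current vector, $x_{pbest}$ is a vector randomly selected from the top 100p% of the population, $x_{r1}$ is a randomly chosen vector from the population, $\tilde{x}_{r2}$ is randomly chosen from the union of the population and the external archive, and F is the scaling factor.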
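
The current-to-pbest/1 mutation of Equation (12) can be sketched as follows, assuming minimization, list-based vectors, at least three individuals, and p = 0.05 (a commonly used setting); the function name is hypothetical. The archive enters only through the second difference vector.

```python
import random


def current_to_pbest(pop, fit, archive, i, F, p=0.05, rng=random):
    """Equation (12): v_i = x_i + F (x_pbest - x_i) + F (x_r1 - x~_r2), minimisation."""
    n = len(pop)
    top = sorted(range(n), key=lambda k: fit[k])[:max(1, round(p * n))]
    x_pbest = pop[rng.choice(top)]                     # random member of the best 100p%
    r1 = rng.choice([k for k in range(n) if k != i])  # from the population, r1 != i
    union = pop + archive                             # population united with archive
    r2 = rng.choice([k for k in range(len(union)) if k not in (i, r1)])
    return [x + F * (pb - x) + F * (a - b)
            for x, pb, a, b in zip(pop[i], x_pbest, pop[r1], union[r2])]
```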

2.6.3. Crossover Strategy

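JADE applies binomial crossover to generate the trial vector, as expressed in Equation (13):

$$ u_{i,j} = \begin{cases} v_{i,j}, & \mathrm{rand}_j \le CR_i \ \text{or} \ j = j_{rand} \\ x_{i,j}, & \text{otherwise} \end{cases} \tag{13} $$

Here, $u_i$ represents the trial vector, $v_i$ is the mutant vector, $x_i$ is the current vector, $\mathrm{rand}_j$ is a uniformly distributed random value, $CR_i$ denotes the crossover rate, and $j_{rand}$ is a randomly chosen index that ensures at least one element of the mutant vector is selected.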
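
Binomial crossover (Equation (13)) takes only a few lines; `binomial_crossover` is a hypothetical name, and CR is passed in already sampled.

```python
import random


def binomial_crossover(x, v, cr, rng=random):
    """Equation (13): take v_j if rand_j <= CR or j == j_rand, else keep x_j."""
    j_rand = rng.randrange(len(x))  # guarantees at least one mutant component
    return [v[j] if (rng.random() <= cr or j == j_rand) else x[j] for j in range(len(x))]


# With CR = 0, (almost surely) only the j_rand component comes from the mutant.
u = binomial_crossover([0.0] * 5, [1.0] * 5, 0.0, random.Random(0))
```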

2.6.4. Selection Strategy

The selection strategy determines the individuals for the next generation based on fitness evaluation, as expressed in Equation (14) [15]:

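$$ x_i^{g+1} = \begin{cases} u_i^g, & f(u_i^g) \le f(x_i^g) \\ x_i^g, & \text{otherwise} \end{cases} \tag{14} $$

Here, f represents the fitness function used to evaluate the solutions (for a minimization problem), and g is the generation index.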

2.6.5. Parameter Adaptation

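JADE dynamically adjusts the parameters F and CR using historical data and a learning process, as modeled by Equations (15) and (16):

$$ CR_i = \mathrm{randn}_i\left(\mu_{CR}, 0.1\right) \tag{15} $$

$$ F_i = \mathrm{randc}_i\left(\mu_F, 0.1\right) \tag{16} $$

In these equations, $\mathrm{randn}_i(\mu_{CR}, 0.1)$ denotes a normal distribution with mean $\mu_{CR}$ and standard deviation 0.1, and $\mathrm{randc}_i(\mu_F, 0.1)$ denotes a Cauchy distribution with location $\mu_F$ and scale 0.1. $CR_i$ is truncated to [0, 1], while $F_i$ is regenerated if it is not positive and truncated to 1 if it exceeds 1. After each generation, $\mu_{CR}$ and $\mu_F$ are moved toward the arithmetic mean of the successful CR values and the Lehmer mean of the successful F values, respectively, with learning rate c.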
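
The sampling rules in Equations (15) and (16), together with JADE's learning step (moving $\mu_{CR}$ toward the arithmetic mean and $\mu_F$ toward the Lehmer mean of the values that produced successful trial vectors), can be sketched as follows. The Cauchy draw uses the inverse-CDF formula; c = 0.1 is a typical learning rate rather than a value stated in this paper, and all names are hypothetical.

```python
import math
import random


def sample_cr(mu_cr, rng=random, sd=0.1):
    """Equation (15): CR_i ~ N(mu_CR, 0.1), truncated to [0, 1]."""
    return min(1.0, max(0.0, rng.gauss(mu_cr, sd)))


def sample_f(mu_f, rng=random, scale=0.1):
    """Equation (16): F_i ~ Cauchy(mu_F, 0.1); redraw if <= 0, truncate to 1."""
    while True:
        f = mu_f + scale * math.tan(math.pi * (rng.random() - 0.5))  # inverse-CDF Cauchy draw
        if f > 0.0:
            return min(f, 1.0)


def update_means(mu_cr, mu_f, s_cr, s_f, c=0.1):
    """Move mu_CR toward the arithmetic mean of successful CR values and
    mu_F toward the Lehmer mean of successful F values (learning rate c).
    Empty success sets leave the means unchanged (a common implementation choice)."""
    if s_cr:
        mu_cr = (1 - c) * mu_cr + c * sum(s_cr) / len(s_cr)
    if s_f:
        mu_f = (1 - c) * mu_f + c * sum(f * f for f in s_f) / sum(s_f)
    return mu_cr, mu_f
```

The Lehmer mean weights larger successful F values more heavily, which counteracts the tendency of the arithmetic mean to drift toward small step sizes.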

2.6.6. External Archive

JADE incorporates an external archive that stores parent solutions recently replaced by better trial vectors. These inferior solutions enhance population diversity and supply one of the difference vectors in the mutation process. Whenever the archive size exceeds the population size, randomly selected solutions are removed from it.
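
Selection (Equation (14)) and archive maintenance fit naturally into one helper. This is a sketch assuming minimization; following JADE [15], the replaced parent is archived and a random member is evicted once the archive exceeds the population size. `select_and_archive` is a hypothetical name.

```python
import random


def select_and_archive(x, u, fx, fu, archive, max_size, rng=random):
    """Equation (14) plus JADE's archive update (minimisation).

    If the trial u is at least as good as the parent x, u survives and the
    replaced parent is stored in the archive; when the archive grows beyond
    max_size (the population size in JADE), a random member is removed.
    """
    if fu <= fx:
        archive.append(x)
        if len(archive) > max_size:
            archive.pop(rng.randrange(len(archive)))
        return u, fu
    return x, fx
```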

3. Hybrid Arctic Puffin Optimization (APO) with JADE

The hybrid Arctic Puffin Optimization (APO) with JADE represents a novel optimization algorithm that combines the complementary strengths of two distinct methods: Arctic Puffin Optimization (APO) and JADE (Dynamic Differential Evolution with Optional External Archive). APO draws inspiration from the natural behaviors of Arctic puffins, particularly their foraging and predation strategies, which are translated into exploration and exploitation mechanisms within the optimization framework [14]. Conversely, JADE enhances the traditional differential evolution (DE) algorithm by introducing dynamic parameter control and an external archive, significantly improving diversity maintenance and convergence efficiency.

By integrating these approaches, the hybrid algorithm benefits from JADE’s adaptive mechanisms, which dynamically adjust control parameters and incorporate an external archive of inferior solutions to enhance population diversity. Simultaneously, it capitalizes on APO’s robust exploration and exploitation strategies, inspired by the puffins’ efficient foraging behaviors. This combination results in a hybrid algorithm capable of navigating complex optimization landscapes, escaping local optima, and converging to high-quality solutions.

The development of the APO-JADE algorithm is motivated by the need for a more robust and efficient optimization tool that integrates the strengths of APO and JADE. The hybrid approach recognizes that different optimization strategies offer unique benefits, which, when combined, can effectively address their respective limitations.

JADE contributes to the hybrid algorithm through its dynamic parameter control and external archive mechanisms. The dynamic parameter control adjusts the crossover rate (CR) and scaling factor (F) based on the evolving search environment, ensuring adaptability and maintaining a balance between exploration (searching new regions) and exploitation (refining current solutions). Furthermore, the external archive stores inferior solutions, which can reintroduce diversity into the population, preventing premature convergence and enabling the algorithm to escape local optima.

APO enhances the hybrid algorithm through its bio-inspired mechanisms, which mimic the natural behaviors of Arctic puffins. These behaviors include collective foraging and dynamic adjustments to search strategies based on environmental feedback. During the exploration phase, modeled after puffins’ aerial flight, Lévy flights are employed to facilitate long-distance jumps in the solution space, enabling a broad search. In the exploitation phase, inspired by underwater foraging, the algorithm fine-tunes solutions around the best-found candidates, ensuring effective utilization of promising regions within the search space.

By integrating these complementary strategies, the APO-JADE algorithm achieves a comprehensive search capability that effectively balances exploration and exploitation. This results in a robust optimization process capable of addressing complex, high-dimensional problem spaces and discovering high-quality solutions.

3.1. Mathematical Model

3.1.1. JADE Parameters

The JADE parameters are initialized to ensure effective adaptation and diversity:

If the upper bound (ub) and lower bound (lb) are scalar values, they are expanded into vectors with a dimension equal to the problem’s dimensionality (dim). This ensures consistent boundary constraints across all dimensions of the search space.
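
Expanding scalar bounds takes one line per bound; a sketch with a hypothetical `expand_bounds` helper:

```python
def expand_bounds(lb, ub, dim):
    """Broadcast scalar bounds to length-dim lists; pass vector bounds through."""
    if isinstance(lb, (int, float)):
        lb = [float(lb)] * dim
    if isinstance(ub, (int, float)):
        ub = [float(ub)] * dim
    if len(lb) != dim or len(ub) != dim:
        raise ValueError("bounds must be scalars or sequences of length dim")
    return list(lb), list(ub)


print(expand_bounds(-100, 100, 3))  # ([-100.0, -100.0, -100.0], [100.0, 100.0, 100.0])
```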

3.1.2. Population Initialization

The population is generated using an initialization function that creates a random distribution of candidate solutions within the specified bounds:

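$$ P = \mathrm{rand}(N, dim) \odot (ub - lb) + lb $$

Here, N represents the population size, dim denotes the dimensionality of the problem, rand(N, dim) is an N × dim matrix of random numbers uniformly distributed in [0, 1], and ub and lb correspond to the upper and lower bounds, respectively.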

3.1.3. Hybrid JADE-APO Loop

The optimization process alternates between the mechanisms of JADE and APO, iteratively refining the solution. This loop continues for a specified maximum number of iterations ($T_{\max}$).

JADE Mechanism

During the initial half of the iterations, the algorithm operates under the JADE mechanism:

Evaluate the fitness of the current population:

$$ fit_i = f\left(P(i, :)\right), \quad i = 1, 2, \dots, N $$

where $fit_i$ represents the fitness value of the i-th individual in the population P, computed using the fitness function f. The notation P(i, :) denotes the i-th individual across all dimensions.

Dynamically update CR and F using normal and Cauchy distributions:

$$ CR_i \sim \mathcal{N}\left(\mu_{CR}, \sigma_{CR}^2\right), \quad CR_i \in [0, 1] $$

$$ F_i \sim \mathcal{C}\left(\mu_F, \gamma_F\right), \quad F_i \in (0, 1] $$

As outlined in the equations, $CR_i$ denotes the crossover probability for the i-th individual, sampled from a normal distribution with mean $\mu_{CR}$ and standard deviation $\sigma_{CR}$. Similarly, $F_i$ represents the scaling factor for differential mutation, sampled from a Cauchy distribution with location $\mu_F$ and scale $\gamma_F$. Both parameters are constrained to remain within their valid ranges.

Mutant vectors are generated using the current-to-pbest mutation strategy, as described in Equation (27):

Here, the mutant vector of the i-th individual at the current generation is built from the current position of the i-th individual, the position of a p-best individual, and the positions of two randomly selected individuals, with F serving as the scaling factor for mutation.
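Because Equation (27) is not reproduced in this text, the sketch below assumes the current-to-pbest/1 form of the original JADE: $v_i = x_i + F_i(x_{pbest} - x_i) + F_i(x_{r1} - \tilde{x}_{r2})$. Here $x_{pbest}$ is drawn from the best $100p\%$ of the population, and $\tilde{x}_{r2}$ may come from the union of the population and the external archive. The function name and the default p = 0.05 are illustrative assumptions; minimization is assumed.

```python
import random


def current_to_pbest(pop, fitness, i, F, p=0.05, archive=(), rng=random):
    """JADE-style DE/current-to-pbest/1 mutant for individual i (minimization)."""
    n = len(pop)
    ranked = sorted(range(n), key=lambda k: fitness[k])
    top = ranked[:max(1, round(p * n))]           # indices of the best 100p% individuals
    pbest = pop[rng.choice(top)]
    r1 = rng.choice([k for k in range(n) if k != i])
    union = pop + list(archive)                   # population united with the archive
    r2 = rng.choice([k for k in range(len(union)) if k not in (i, r1)])
    x, a, b = pop[i], pop[r1], union[r2]
    return [x[j] + F * (pbest[j] - x[j]) + F * (a[j] - b[j]) for j in range(len(x))]


rng = random.Random(1)
pop = [[float(k), float(-k)] for k in range(10)]
fit = [sum(v * v for v in ind) for ind in pop]
mutant = current_to_pbest(pop, fit, i=3, F=0.5, rng=rng)
```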

Crossover is applied to generate trial vectors, as expressed in Equation (28):

In this equation, the trial vector for the i-th individual is generated by combining the mutant vector and the current vector according to the crossover probability. A randomly selected dimension index ensures that at least one dimension is taken from the mutant vector.
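A minimal sketch of the binomial crossover described above follows. It assumes the standard DE rule $u_j = v_j$ if $\mathrm{rand}_j \le CR$ or $j = j_{rand}$, and $u_j = x_j$ otherwise. The function name is hypothetical.

```python
import random


def binomial_crossover(target, mutant, cr, rng=random):
    """Build a trial vector: u_j = v_j if rand_j <= CR or j == j_rand, else x_j."""
    dim = len(target)
    j_rand = rng.randrange(dim)  # guarantees at least one component from the mutant
    return [mutant[j] if (rng.random() <= cr or j == j_rand) else target[j]
            for j in range(dim)]


rng = random.Random(7)
trial = binomial_crossover([0.0] * 5, [1.0] * 5, cr=0.5, rng=rng)
```

With CR = 0 the trial vector still inherits exactly one mutant component (the one at `j_rand`), and with CR = 1 it equals the mutant.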

The fitness of trial vectors is evaluated, and the population is updated as shown in Equation (29):

Here, the updated position of the i-th individual is determined by greedy selection: if the fitness value of the trial vector is better than or equal to the fitness of the current vector, the trial vector replaces the current vector; otherwise, the current vector is retained.
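The selection step, together with the external archive mentioned in Section 3.2, can be sketched as follows for a minimization problem. The sketch assumes the JADE convention in which a replaced parent is stored in the archive and the archive is randomly trimmed to at most N entries. The helper names are hypothetical.

```python
import random


def greedy_select(target, trial, f_target, f_trial, archive):
    """Return (survivor, fitness, replaced); the trial wins ties (minimization).

    A replaced parent is appended to the external archive, as in JADE."""
    if f_trial <= f_target:
        archive.append(target)
        return trial, f_trial, True
    return target, f_target, False


def trim_archive(archive, max_size, rng=random):
    """Randomly remove archived solutions until len(archive) <= max_size."""
    while len(archive) > max_size:
        archive.pop(rng.randrange(len(archive)))


archive = []
survivor, f_survivor, replaced = greedy_select([1.0, 2.0], [0.5, 0.5], 5.0, 0.5, archive)
```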

APO Mechanism

During the second half of the iterations, the algorithm employs the APO mechanism.

Here, B represents the factor that modulates the balance between exploration and exploitation, rand is a uniformly distributed random number, and l is the current iteration, measured against the maximum number of iterations.
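Because Equation (30) is not reproduced in this text, the sketch below assumes the form $B = 2\ln(1/\mathrm{rand})\,(1 - l/T)$, where T is the maximum number of iterations, and an exploration threshold C = 0.5. This is how the original APO is commonly described, but both the expression and the threshold should be treated as assumptions here. As l approaches T, B shrinks toward zero, so the exploitation branch becomes increasingly likely.

```python
import math
import random


def conversion_factor(l, max_iter, rng=random):
    """Assumed form: B = 2 * ln(1 / rand) * (1 - l / max_iter)."""
    r = 1.0 - rng.random()  # r lies in (0, 1], so ln(1 / r) is finite and >= 0
    return 2.0 * math.log(1.0 / r) * (1.0 - l / max_iter)


def phase(B, C=0.5):
    """Exploration when B > C, exploitation otherwise (threshold C assumed)."""
    return "explore" if B > C else "exploit"


rng = random.Random(0)
phases = [phase(conversion_factor(l, 100, rng)) for l in (0, 50, 99)]
```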

Positions are updated using Lévy flight and swooping strategies, as expressed in Equation (31):

Here, Y represents the updated position and X is the current position; the update also uses the Lévy flight operator, a randomly selected position, and randn, a normally distributed random number.

The updated positions are bounded within the search space using Equation (32):

Here, X represents the adjusted position, and the SpaceBound function ensures that all positions remain within the predefined upper (ub) and lower (lb) bounds.

The APO-JADE algorithm effectively integrates the dynamic parameter control and external archive features of JADE with the exploration and exploitation strategies of APO. This hybridization ensures a robust optimization process capable of addressing complex and multi-dimensional optimization problems. The APO mechanism also includes detailed behavioral modeling equations inspired by the natural foraging behaviors of Arctic puffins.

3.1.4. Behavioral Conversion Factor

The behavioral conversion factor B transitions between exploration and exploitation phases, as shown in Equation (33):

3.1.5. Levy Flight

The Levy flight mechanism enables large, random jumps in the search space to enhance global exploration, as described in Equation (34):

Here, u and v are random variables sampled from a normal distribution, and the stability parameter controls the step size.
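Equation (34) is not reproduced in this text. A common way to generate such steps is Mantegna's algorithm, which computes $s = u/|v|^{1/\beta}$ with $u \sim N(0, \sigma_u^2)$ and $v \sim N(0, 1)$. The sketch below uses it with an assumed stability parameter β = 1.5. Some implementations also multiply the step by a small constant such as 0.01; that scaling is omitted here.

```python
import math
import random


def mantegna_sigma(beta):
    """Standard deviation of u in Mantegna's algorithm for stability parameter beta."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(dim, beta=1.5, rng=random):
    """Levy-distributed step: s_j = u_j / |v_j|^(1/beta), u ~ N(0, sigma^2), v ~ N(0, 1)."""
    sigma = mantegna_sigma(beta)
    step = []
    for _ in range(dim):
        u = rng.gauss(0.0, sigma)
        v = rng.gauss(0.0, 1.0)
        step.append(u / max(abs(v), 1e-12) ** (1 / beta))  # guard against v == 0
    return step


rng = random.Random(3)
step = levy_step(10, rng=rng)
```

Most components of such a step are small, but occasional very large ones occur. These rare long jumps are what give Lévy flights their global-exploration character.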

3.1.6. Swooping Strategy

The swooping strategy mimics rapid predation behavior, facilitating local exploitation, as expressed in Equation (35):

In this equation, R is a randomly generated vector, and tan denotes the tangent function used to simulate sharp directional changes.
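Equation (35) is not reproduced in this text. The sketch below assumes that the swooping update multiplies each component of the aerial-search position Y by $\tan(\pi(R_j - 0.5))$, with $R_j$ uniform on [0, 1). This produces heavy-tailed, sign-changing scale factors. This exact form is an assumption. Because the factor can be very large when $R_j$ is near 0, the result should be passed through the bounding step of Section 3.1.7.

```python
import math
import random


def swoop(y, rng=random):
    """Assumed swooping form: Z_j = Y_j * tan(pi * (R_j - 0.5)), R_j ~ U(0, 1)."""
    return [yj * math.tan(math.pi * (rng.random() - 0.5)) for yj in y]


rng = random.Random(5)
z = swoop([1.0, -2.0, 0.5], rng)
```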

3.1.7. Bounding Positions

To ensure feasibility, the positions of individuals are bounded within the predefined search space, as shown in Equation (36):

Here, SpaceBound is a function that adjusts X to ensure it remains within the upper bound (ub) and lower bound (lb).
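The exact behavior of SpaceBound is not specified in the text. A minimal sketch of one common variant is shown below: any component outside its bounds is re-sampled uniformly inside them. Simple clipping to the nearest bound is included as an alternative. Both function names are hypothetical.

```python
import random


def space_bound(x, lb, ub, rng=random):
    """Re-sample every out-of-range component uniformly inside [lb_j, ub_j]."""
    return [xj if lb[j] <= xj <= ub[j] else lb[j] + rng.random() * (ub[j] - lb[j])
            for j, xj in enumerate(x)]


def clip(x, lb, ub):
    """Alternative: project each component onto [lb_j, ub_j]."""
    return [min(max(xj, lb[j]), ub[j]) for j, xj in enumerate(x)]


rng = random.Random(2)
lb, ub = [0.0, 0.0, 0.0], [1.0, 1.0, 1.0]
bounded = space_bound([-5.0, 0.5, 7.0], lb, ub, rng)
```

Re-sampling avoids piling many individuals onto the boundary, which clipping tends to do. Clipping, on the other hand, preserves more of the original step direction.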

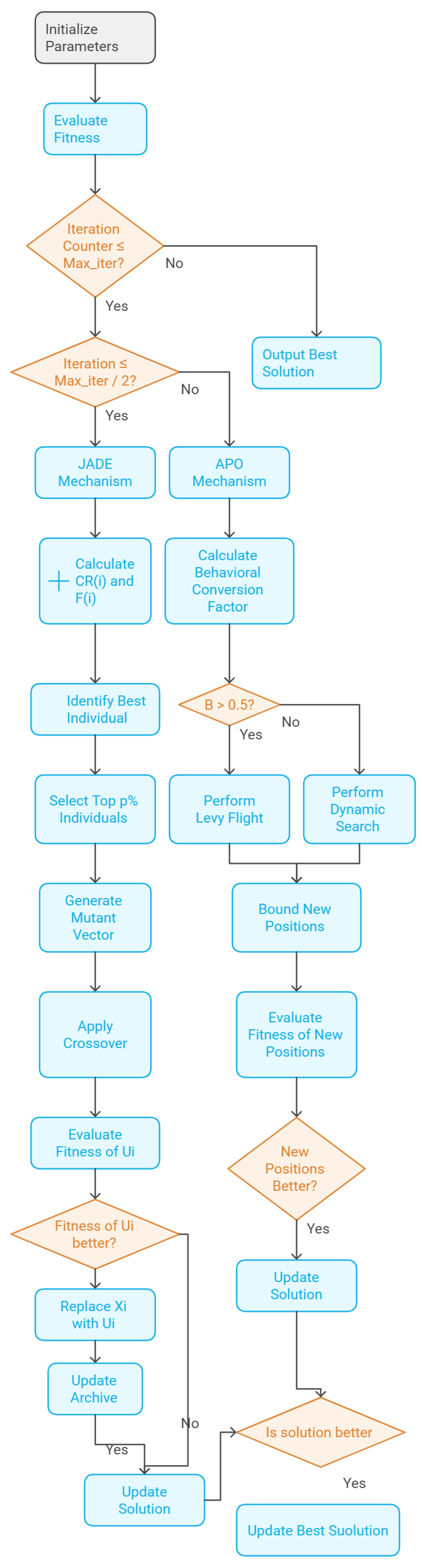

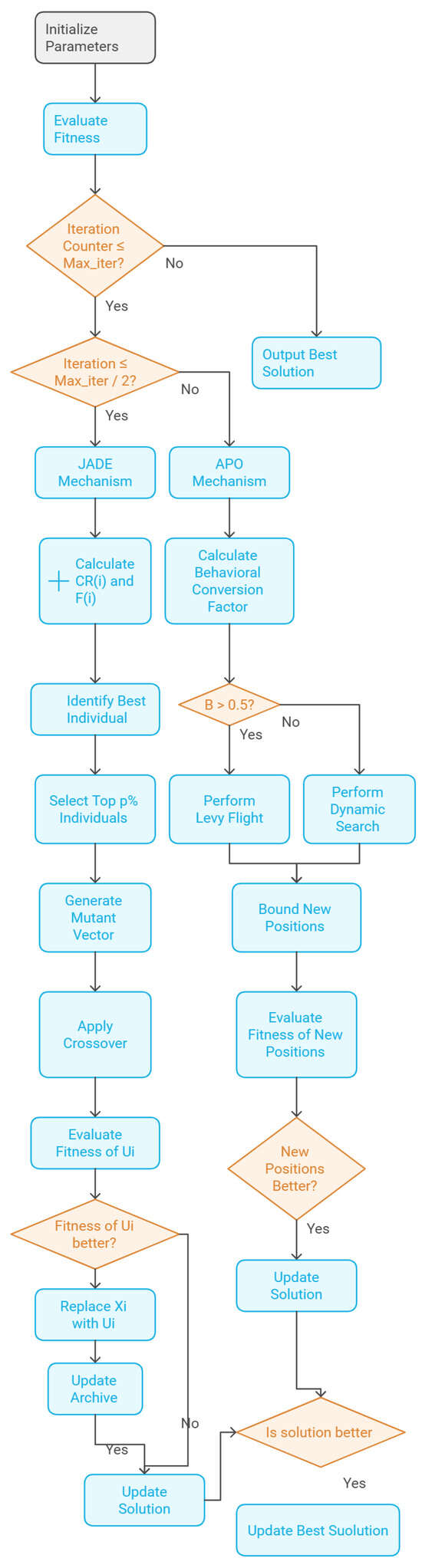

3.2. Hybrid APO-JADE Algorithm

The Hybrid APO-JADE algorithm (see Algorithm 1) enhances the optimization process by dynamically adjusting the crossover rate and the scaling factor (F) using normal and Cauchy distributions, respectively. Additionally, it employs an external archive to maintain diversity and mitigate premature convergence. The algorithm alternates between the JADE and APO mechanisms in two distinct phases, balancing exploration and exploitation to navigate complex optimization landscapes and converge to high-quality solutions. The steps of the algorithm are shown in Figure 1.

Algorithm 1.

Hybrid APO-JADE algorithm.
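The body of Algorithm 1 is not reproduced in this text. The following compact sketch shows one way the two phases might be chained. It makes several assumptions: scalar bounds, minimization, the original JADE settings ($\mu_{CR} = \mu_F = 0.5$, c = 0.1, p = 0.05), and a simplified APO phase in which each individual greedily accepts a Lévy aerial-search candidate or a swooping candidate if either improves it. The APO part in particular is an illustrative simplification, not the authors' implementation, and the sphere function serves only as a test objective.

```python
import math
import random


def apo_jade(f, lb, ub, dim, n=30, max_iter=200, seed=0):
    """Illustrative hybrid loop: JADE for the first half, simplified APO for the second."""
    rng = random.Random(seed)

    def bound(x):  # re-sample out-of-range components inside [lb, ub]
        return [v if lb <= v <= ub else lb + rng.random() * (ub - lb) for v in x]

    pop = [[lb + rng.random() * (ub - lb) for _ in range(dim)] for _ in range(n)]
    fit = [f(x) for x in pop]
    mu_cr, mu_f, c, p, archive = 0.5, 0.5, 0.1, 0.05, []
    beta = 1.5
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)

    for l in range(max_iter):
        if l < max_iter // 2:  # JADE phase (generational update)
            top = sorted(range(n), key=fit.__getitem__)[:max(1, round(p * n))]
            new_pop, new_fit, s_cr, s_f = pop[:], fit[:], [], []
            for i in range(n):
                cr = min(1.0, max(0.0, rng.gauss(mu_cr, 0.1)))
                F = 0.0
                while F <= 0.0:  # Cauchy(mu_f, 0.1), regenerated until positive
                    F = mu_f + 0.1 * math.tan(math.pi * (rng.random() - 0.5))
                F = min(F, 1.0)
                x, pb = pop[i], pop[rng.choice(top)]
                r1 = rng.choice([k for k in range(n) if k != i])
                union = pop + archive
                r2 = rng.choice([k for k in range(len(union)) if k not in (i, r1)])
                v = [x[j] + F * (pb[j] - x[j]) + F * (pop[r1][j] - union[r2][j])
                     for j in range(dim)]
                j_rand = rng.randrange(dim)
                u = bound([v[j] if (rng.random() <= cr or j == j_rand) else x[j]
                           for j in range(dim)])
                fu = f(u)
                if fu <= fit[i]:  # greedy selection; parent goes to the archive
                    archive.append(x)
                    new_pop[i], new_fit[i] = u, fu
                    s_cr.append(cr)
                    s_f.append(F)
            pop, fit = new_pop, new_fit
            while len(archive) > n:
                archive.pop(rng.randrange(len(archive)))
            if s_cr:
                mu_cr = (1 - c) * mu_cr + c * sum(s_cr) / len(s_cr)
                mu_f = (1 - c) * mu_f + c * sum(g * g for g in s_f) / sum(s_f)
        else:  # simplified APO phase (in-place greedy update)
            for i in range(n):
                r = rng.choice([k for k in range(n) if k != i])
                levy = [rng.gauss(0.0, sigma_u) / max(abs(rng.gauss(0.0, 1.0)), 1e-12) ** (1 / beta)
                        for _ in range(dim)]
                y = bound([pop[i][j] + (pop[i][j] - pop[r][j]) * levy[j] for j in range(dim)])
                z = bound([yj * math.tan(math.pi * (rng.random() - 0.5)) for yj in y])
                for cand in (y, z):
                    fc = f(cand)
                    if fc <= fit[i]:
                        pop[i], fit[i] = cand, fc

    best = min(range(n), key=fit.__getitem__)
    return pop[best], fit[best]


def sphere(x):
    return sum(v * v for v in x)


x_best, f_best = apo_jade(sphere, -100.0, 100.0, dim=10)
print(f"best fitness: {f_best:.3e}")
```

Splitting the iteration budget at `max_iter // 2` mirrors the two-phase schedule of Section 3.1.3. Writing JADE offspring to a copy of the population and updating the APO phase in place are design choices of this sketch only.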

Figure 1.

Flowchart of the Hybrid APO-JADE algorithm.

4. Exploration and Exploitation

The APO-JADE algorithm employs a strategic combination of exploration and exploitation to efficiently navigate the search space and identify optimal solutions.

Exploration involves investigating diverse regions of the search space to identify promising solutions. This is achieved through mechanisms like Levy flights, which enable large, random jumps, facilitating escape from local optima (Equation (34)). The behavioral conversion factor B dynamically adjusts the focus on exploration based on the iteration progress (Equation (30)).

Exploitation refines the identified promising solutions by focusing on specific regions of the search space. This is accomplished through the swooping strategy, which intensively searches around the best solutions (Equation (35)), and JADE's dynamic parameter control, which fine-tunes the crossover probability and F based on algorithm performance (Equations (28) and (29)).

5. Experimental Setup

All optimization algorithms were assessed using the benchmark functions provided in the Congress on Evolutionary Computation (CEC) 2022 suite. For each function, every algorithm was executed over 30 independent runs with a population size of N. The initial population for all algorithms was randomly initialized within the specified boundaries of each function, and the same random seed was used across all experiments to ensure consistency.

The performance metrics reported include the mean, standard deviation (Std), and standard error of the mean (SEM), which are defined as follows:

- Mean: The arithmetic mean of the best fitness values obtained over the 30 runs.

- Standard deviation (Std): A measure of the variability in the fitness values across the 30 runs.

- Standard error of the mean (SEM): Computed as $\mathrm{SEM} = \mathrm{Std}/\sqrt{n}$, where $n = 30$ is the number of independent runs.
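The three statistics can be computed from the per-run best fitness values as sketched below. The sketch assumes the sample (n - 1) standard deviation, since the text does not state which estimator was used. The three input values are illustrative, not results from this study.

```python
import math
import statistics


def summarize(best_values):
    """Return (mean, std, sem) of the best fitness values from n runs.

    Uses the sample (n - 1) standard deviation (an assumption) and SEM = Std / sqrt(n)."""
    n = len(best_values)
    mean = statistics.mean(best_values)
    std = statistics.stdev(best_values)
    return mean, std, std / math.sqrt(n)


mean, std, sem = summarize([300.0, 302.0, 304.0])
print(mean, std, round(sem, 4))  # 302.0 2.0 1.1547
```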

IEEE CEC2022 Benchmark Functions

The CEC2022 benchmark suite (see Table 1) comprises 12 standard optimization functions that are widely recognized for evaluating the performance of optimization algorithms. These functions present a diverse range of mathematical challenges, including unimodal and multimodal landscapes, as well as hybrid and composition functions. Each function is defined within a 10-dimensional search space with boundaries $[-100, 100]^{10}$. The suite includes simpler functions such as Zakharov (F1) and Rosenbrock (F2), alongside more complex problems like Rastrigin's (F4), Levy (F5), and a series of hybrid (F6–F8) and composition functions (F9–F12). The known minimum objective values for these functions vary, offering a spectrum of difficulties designed to assess the robustness and efficiency of optimization algorithms.

Table 1.

CEC2022 benchmark functions.

6. Results and Discussion

This section presents a comprehensive evaluation of the proposed APO-JADE algorithm, benchmarked against an extensive set of state-of-the-art optimization methods, including FLO, STOA, SOA, SPBO, AO, SSOA, TTHHO, ChOA, CPO [46], and ROA [47], as summarized in Table 2; the parameter settings of the optimizers are presented in Table 3. These well-established approaches draw upon a wide array of evolutionary paradigms, ranging from natural and biological inspirations to principles grounded in physical or mathematical processes. The analysis relies on standard performance metrics derived from the CEC 2022 benchmark suite, ensuring a consistent and rigorous comparison of algorithmic effectiveness. Table 4, Table 5 and Table 6 present a detailed statistical comparison of APO-JADE's performance.

Table 2.

Compared optimizers.

Table 3.

Optimizers with parameter settings.

Table 4.

Statistical comparison results of Congress on Evolutionary Computation (CEC) 2022.

Table 5.

Statistical comparison results of Congress on Evolutionary Computation (CEC) 2022.

Table 6.

Statistical comparison results of Congress on Evolutionary Computation (CEC) 2022.

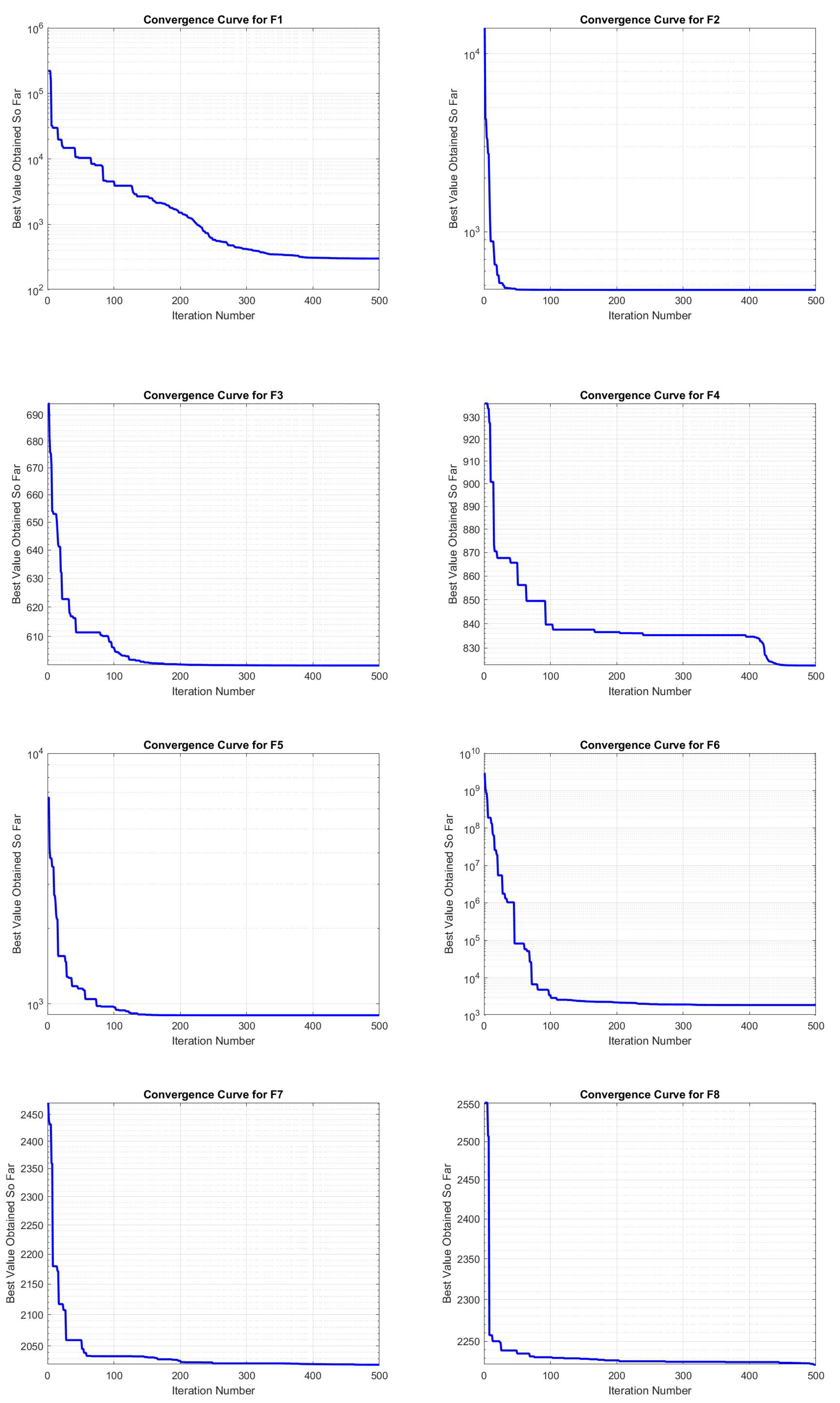

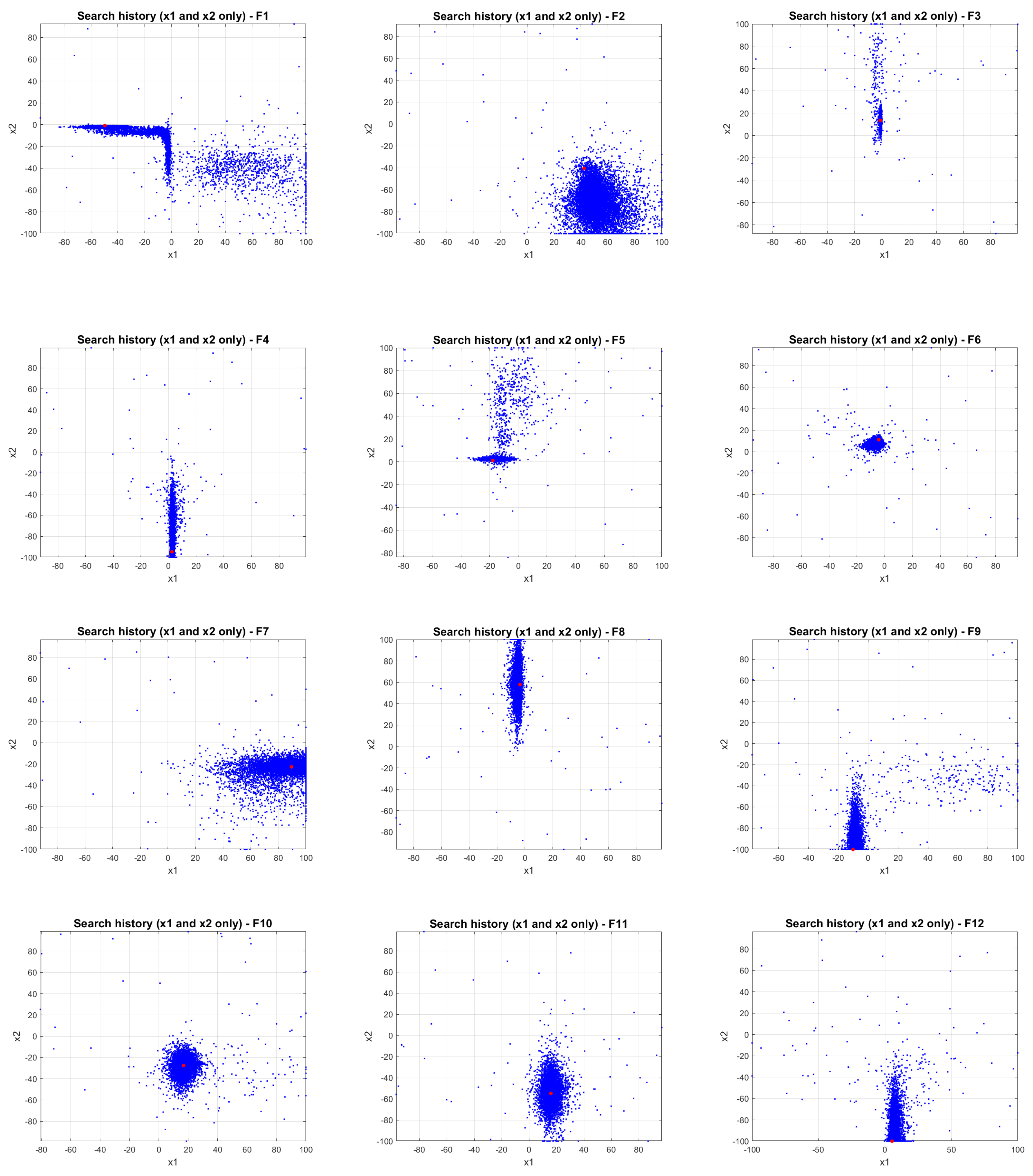

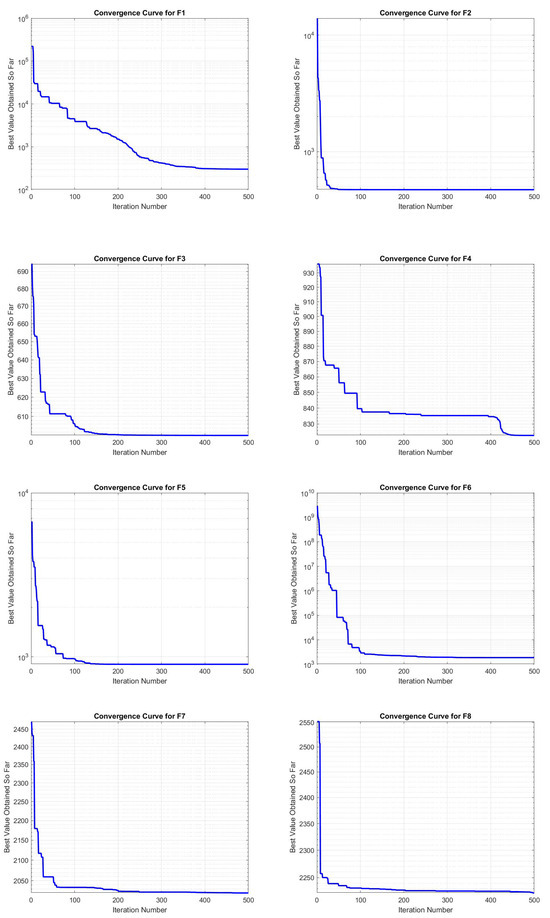

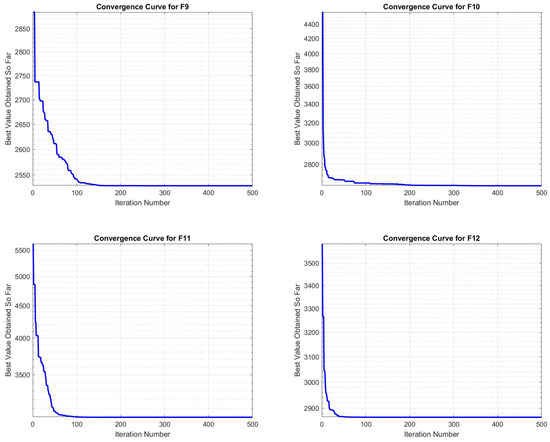

6.1. Convergence Curve

As illustrated in Figure 2 and Figure 3, the convergence curves of the APO-JADE algorithm for the CEC2022 benchmark functions (F1–F12) highlight its ability to navigate the search space and reach high-quality solutions. For F1, the curve exhibits a rapid initial decline, signifying that the algorithm quickly identifies a promising region of the search space, with convergence observed around iteration 400. Similarly, for F2, a sharp decrease is evident in the early iterations, and the algorithm stabilizes quickly, maintaining the best solution found after approximately 100 iterations. In the case of F3, the curve shows a consistent reduction in the best fitness value, with steady improvements culminating in convergence around iteration 250. As with F1, the F4 curve exhibits rapid initial improvement followed by gradual flattening, suggesting effective exploration followed by exploitation. The F5 curve shows a very steep initial decline, reaching near-optimal solutions within the first 50 iterations, followed by minimal further improvement. The F6 curve demonstrates a steep initial drop with gradual improvement, stabilizing around iteration 300. For F7, the curve indicates rapid early improvement and a steady decline, flattening around iteration 200, which highlights effective exploration and exploitation. The F9 curve exhibits a sharp initial decline and stabilizes early, indicating efficient identification and maintenance of good solutions. The F11 curve shows a steep initial decline followed by gradual stabilization around iteration 200, suggesting robust exploration and fine-tuning. Lastly, the F12 curve shows rapid initial improvement and early stabilization, indicating quick convergence to a good solution.

Figure 2.

Convergence curve analysis with CEC2022 benchmark functions (F1–F8).

Figure 3.

Convergence curve analysis with CEC2022 benchmark functions (F9–F12).

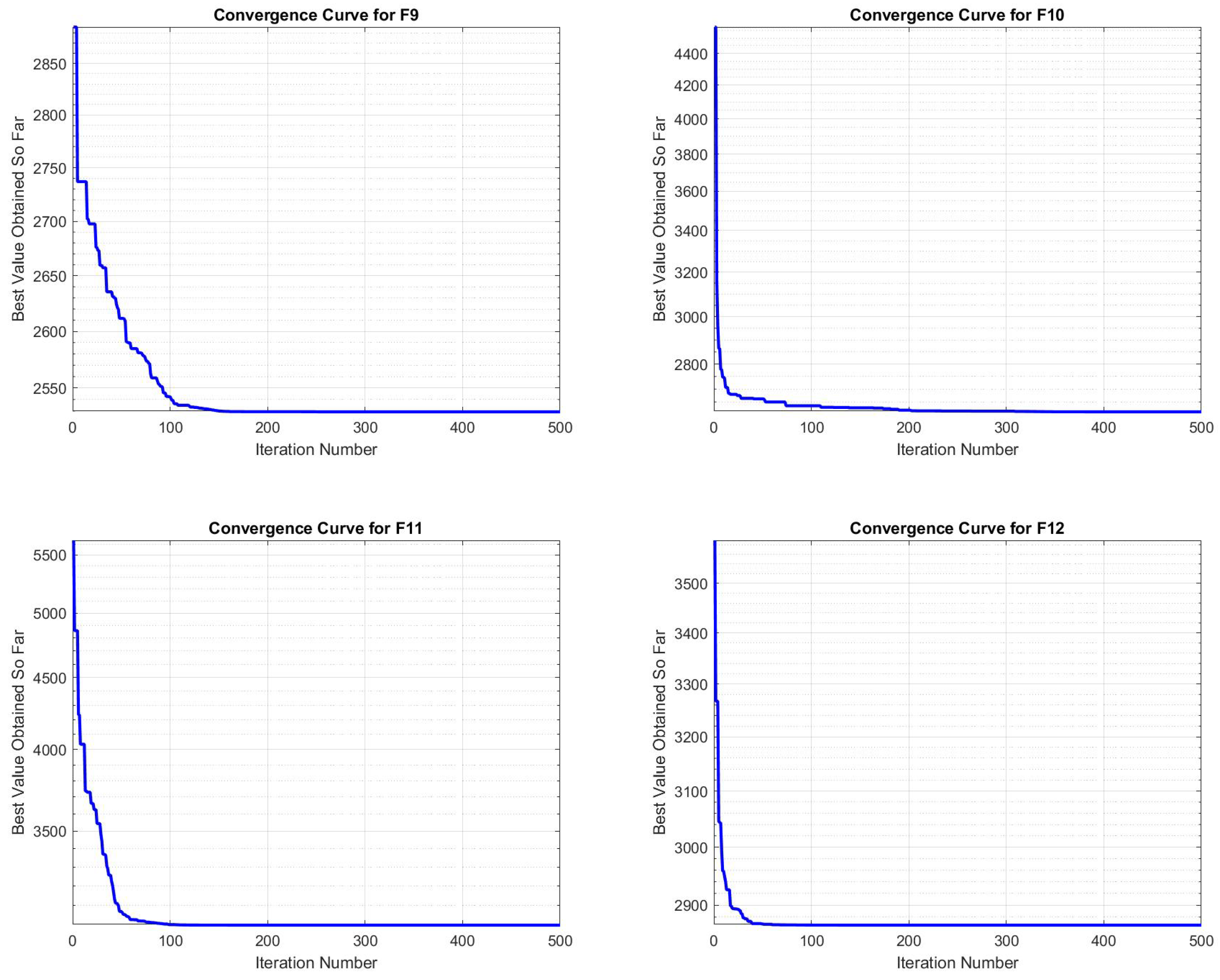

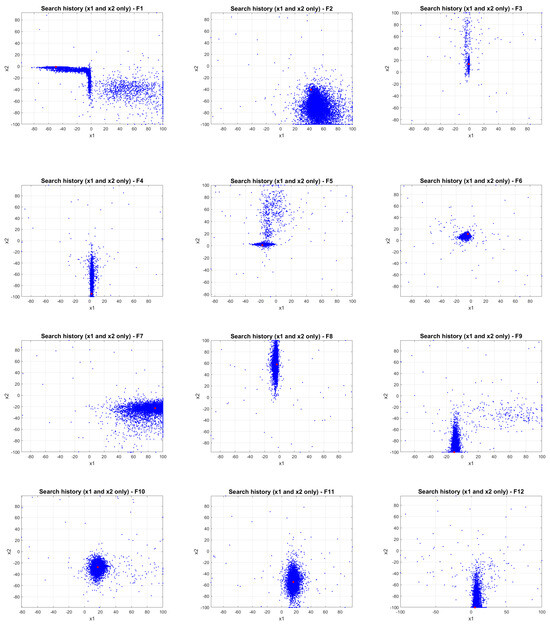

6.2. JADE APO Search History Diagram

As depicted in Figure 4, the search history plots for the Hybrid APO-JADE algorithm across the CEC2022 test functions (F1–F12) provide insight into its exploration and exploitation dynamics. For F1, the search history reveals a dense cluster of points around the best solution identified, indicative of intensive exploitation, while the horizontal and vertical spread of points shows that the search space was also broadly explored. In the case of F2, the search history exhibits a pronounced focus around the optimal solutions, with a tightly concentrated cluster, reflecting effective exploitation following an initial exploratory phase. For F3, the search history shows a vertically aligned distribution of points, signifying a concentrated search along a specific dimension, likely influenced by the structural characteristics of the function. Similarly, the search history for F4 displays vertical clustering, emphasizing intensive exploitation around the optimal region. The F6 search history illustrates a dense central cluster with some dispersed points, demonstrating a well-balanced approach between exploration and exploitation. For F8, the search history indicates strong vertical clustering with minimal dispersion, highlighting a thorough and targeted search in specific regions of the solution space. The F9 search history shows a concentrated cluster around the optimal solution, indicating efficient exploitation after initial exploration. The F10 search history presents a circular cluster around the best solutions, highlighting effective exploitation with minimal exploration outside the optimal region. For F11, the search history demonstrates a focused search with some dispersion, indicating both exploration and exploitation activities. Finally, the F12 search history shows a dense cluster around the optimal solution with a few dispersed points, suggesting a strong focus on exploitation after initial exploration.

Figure 4.

Search history analysis for CEC2022 (F1–F12).

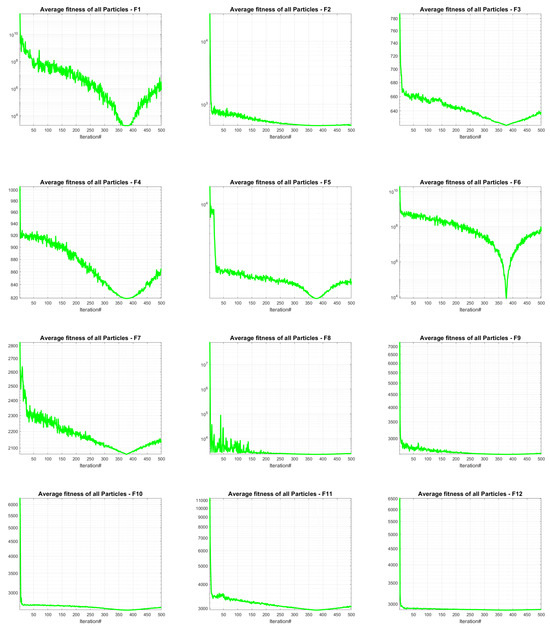

6.3. JADE APO Average Fitness Diagram

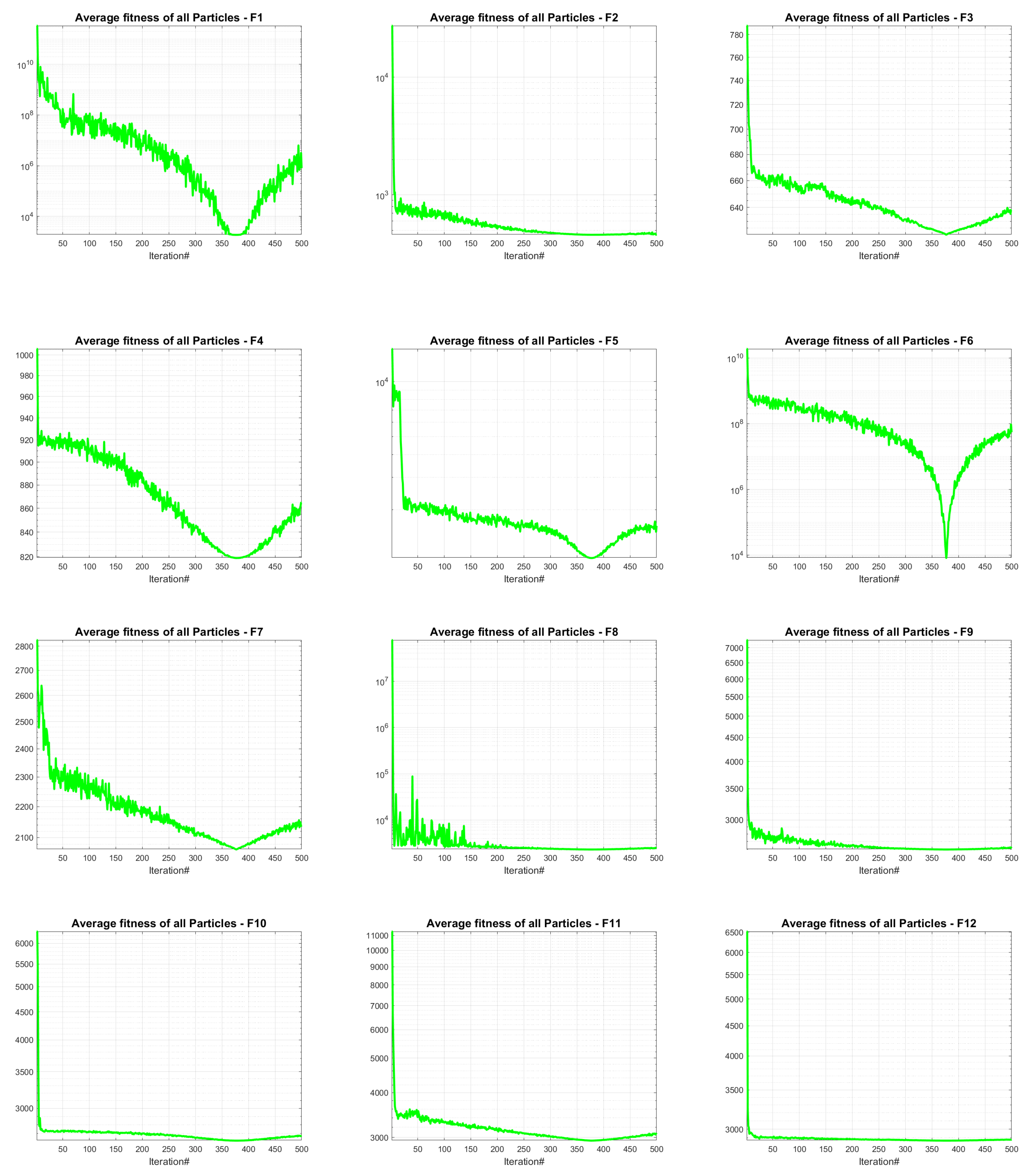

As illustrated in Figure 5, the average fitness curves of the APO-JADE algorithm over the CEC2022 benchmark functions provide valuable insights into its performance and convergence characteristics. For F1, the average fitness begins at a high value and exhibits a significant drop during the early iterations, signifying rapid initial improvement. This is followed by a steady decline and a slight increase towards the end, reflecting the transition from exploration to exploitation. In F2, the average fitness experiences a sharp decline within the first 50 iterations and stabilizes at a low value, indicating efficient convergence to a near-optimal solution in the early stages. The curve for F3 follows a similar trajectory, with an initial steep drop and a gradual decrease, highlighting effective exploitation of the search space. The curve for F4 demonstrates a consistent reduction, reaching a low point around iteration 350, indicative of a delayed yet robust convergence process. For F5 and F6, the curves exhibit significant reductions in average fitness early on, followed by a plateau and a final dip, underscoring the algorithm’s capacity to refine solutions progressively over iterations. In the case of F8, the average fitness decreases rapidly before stabilizing, showcasing efficient convergence. The curves for F9 and F10 display an initial steep decline, followed by minor oscillations and eventual stabilization, reflecting a balance between exploration and fine-tuning. Finally, the curve for F12 mirrors the trends observed in other functions, with a rapid decrease in fitness values, early stabilization, and sustained robustness, demonstrating the algorithm’s effective convergence behavior.

Figure 5.

Average Fitness analysis for CEC2022 (F1–F12).

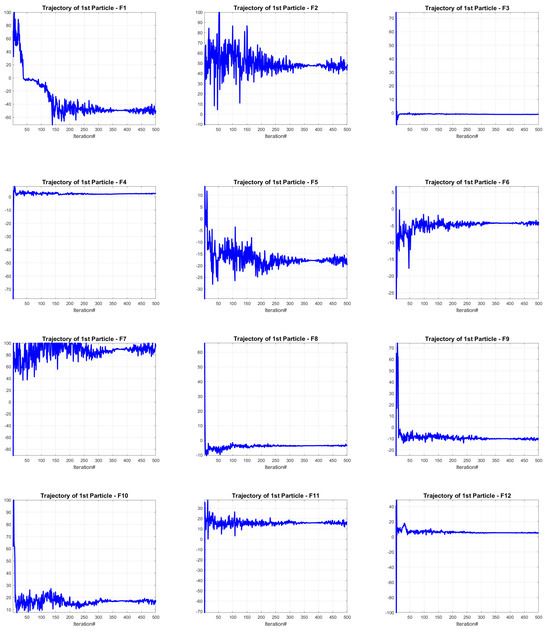

6.4. APO-JADE First Particle Trajectory Diagram

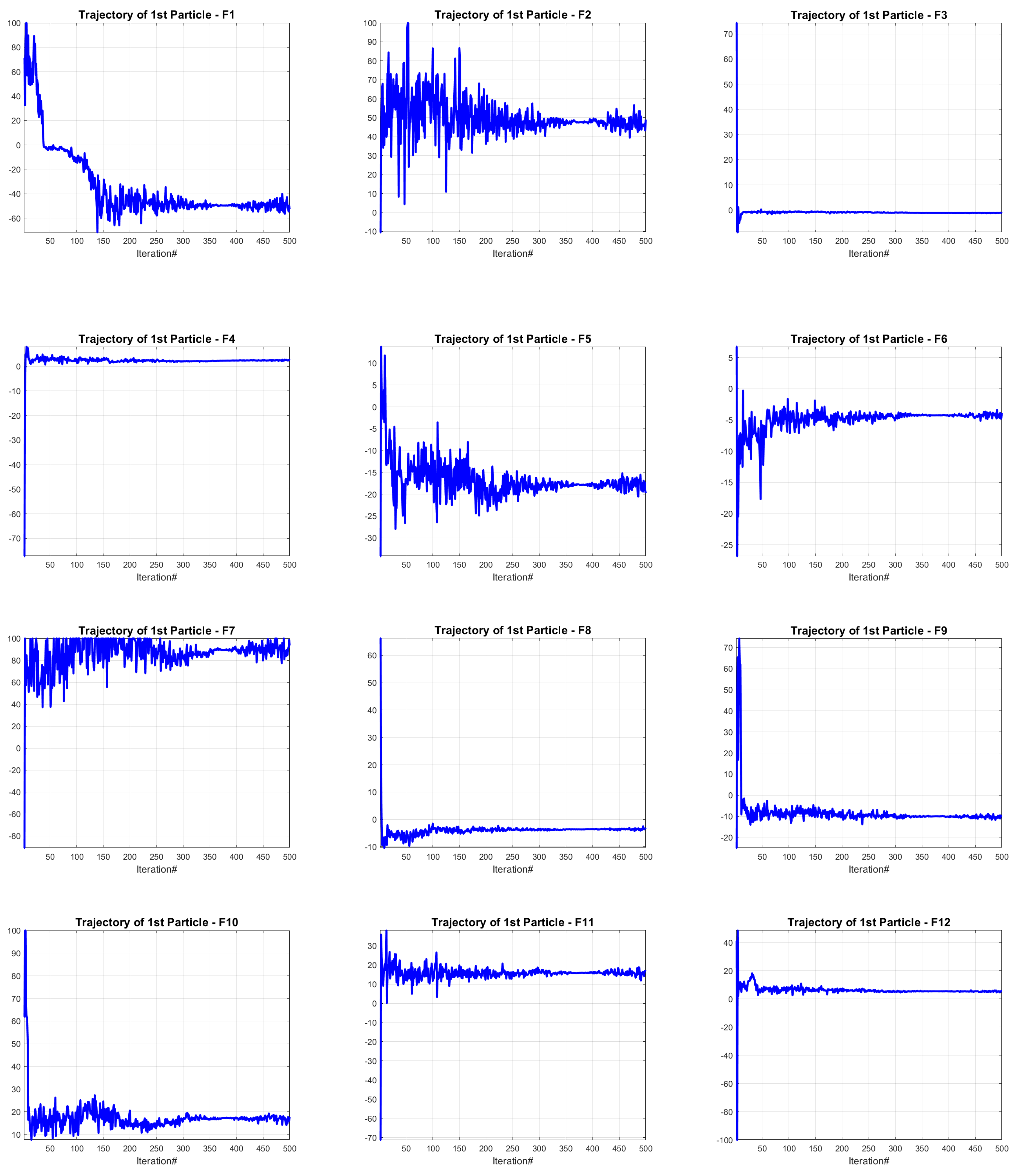

As illustrated in Figure 6, the trajectory curves of the first particle in the APO-JADE algorithm for various functions within the CEC2022 benchmark offer valuable insights into the algorithm's search dynamics. For function F1, the particle exhibits a trajectory characterized by high variance during the initial stages, reflecting extensive exploration. This variance diminishes progressively as the algorithm converges toward the global optimum, indicating a transition from exploration to exploitation. Similarly, in F2, the trajectory exhibits large oscillations initially, signifying broad coverage of the search space before settling into a more refined search pattern as the iterations progress. For F3 and F4, the trajectories show rapid convergence towards the global optimum with minimal oscillations, reflecting a quick and steady exploitation phase. The trajectory for F5 demonstrates a combination of exploration and exploitation phases, with initial large oscillations followed by a steady approach towards the optimum. F6 and F7 display significant initial oscillations, indicating extensive exploration, which later stabilize as the particle converges. Functions F9 and F11 exhibit similar patterns of high initial variance with eventual stabilization, highlighting the algorithm's ability to transition effectively from exploration to exploitation. Finally, for F12, the trajectory shows rapid convergence with minimal oscillations, indicating an efficient search process.

Figure 6.

APO-JADE first particle trajectory diagram for CEC2022 (F1–F12).

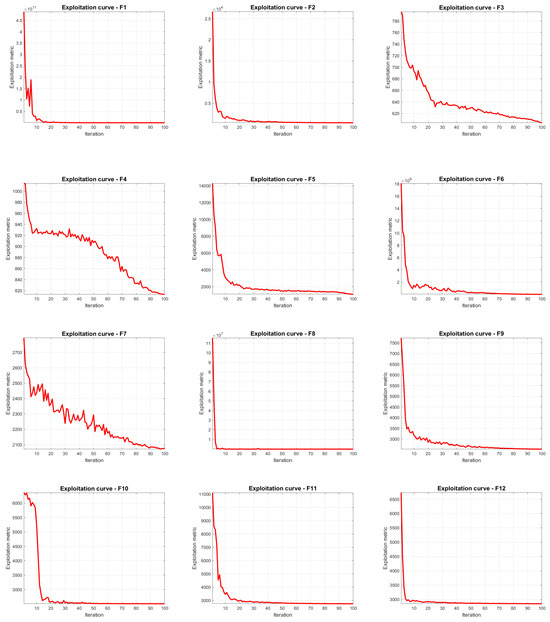

6.5. APO-JADE Exploitation Diagram

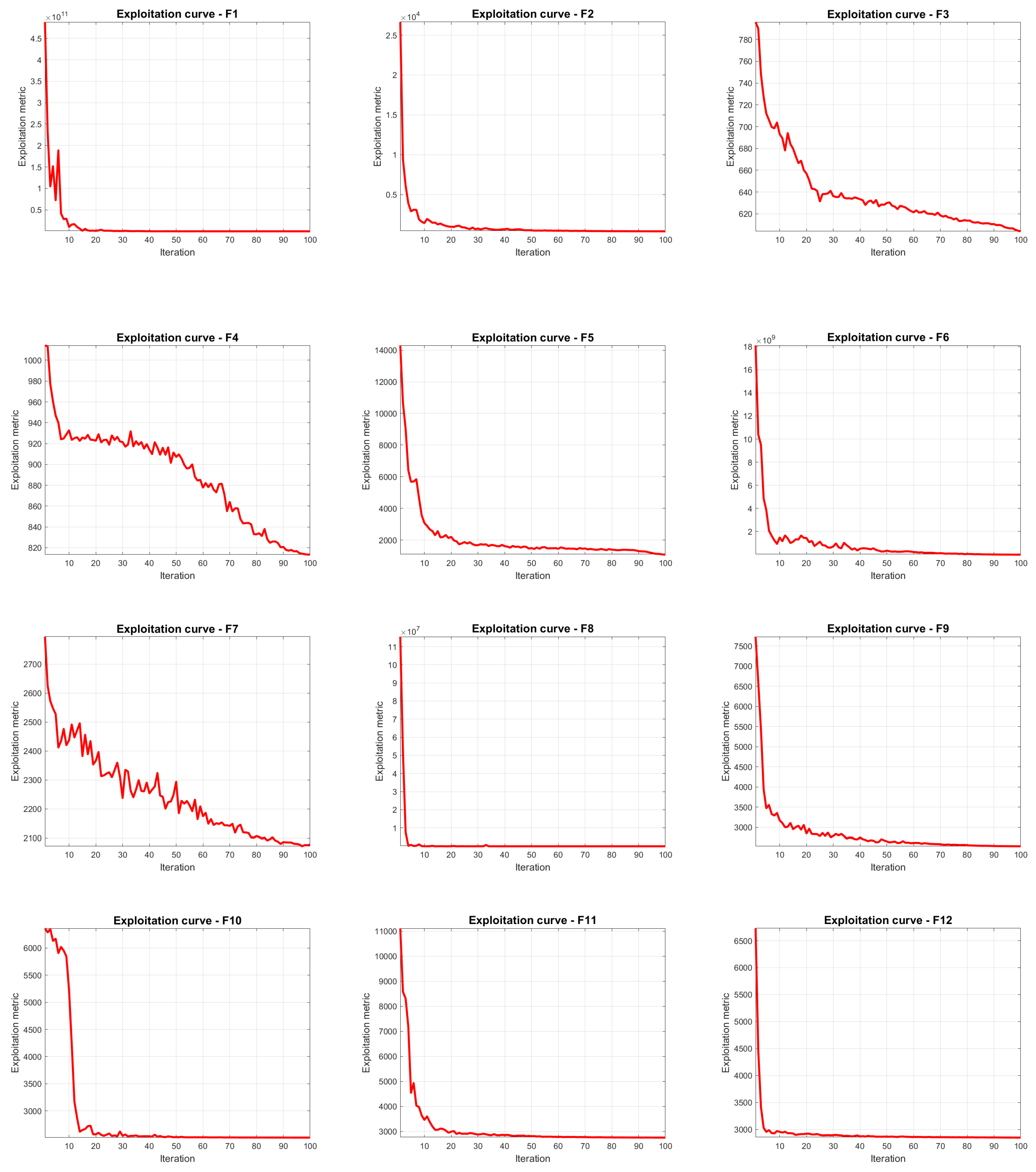

As illustrated in Figure 7, the exploitation curves of the APO-JADE algorithm across the CEC2022 benchmark functions reveal its proficiency in concentrating search efforts on promising regions of the solution space over successive iterations. For function F1, the exploitation metric exhibits a rapid decrease within the first 10 iterations, stabilizing near a lower bound. This behavior indicates efficient early exploration of the search space. Similarly, functions F2, F3, and F4 demonstrate a steep decline in the exploitation metric during the initial iterations, reflecting the algorithm’s swift convergence toward local optima. Notably, for functions F2 and F6, the exploitation metric undergoes a pronounced drop, emphasizing the algorithm’s robust ability to exploit high-potential regions effectively. The patterns observed in other functions, such as F8, F9, F10, and F11, also show a rapid initial reduction in the exploitation metric, followed by stabilization. This trend underscores the algorithm’s consistent capacity to exploit viable solutions across diverse problem landscapes. The uniform pattern of an early, rapid decrease followed by a plateau across all benchmark functions suggests that the APO-JADE algorithm efficiently narrows the search space at the outset, enabling a focused refinement process in subsequent iterations. The algorithm’s ability to maintain this behavior across various optimization challenges highlights its adaptability and effectiveness in balancing exploration and exploitation, ultimately leading to convergence on optimal solutions.

Figure 7.

APO-JADE exploitation diagram for CEC2022 (F1–F12).

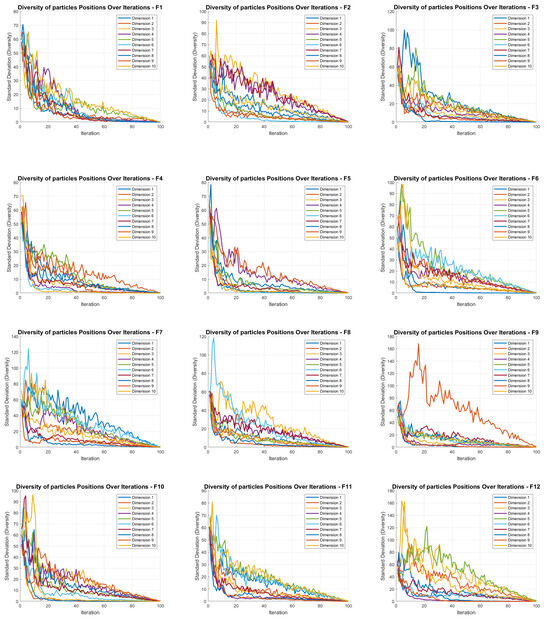

6.6. JADE APO Diversity Diagram

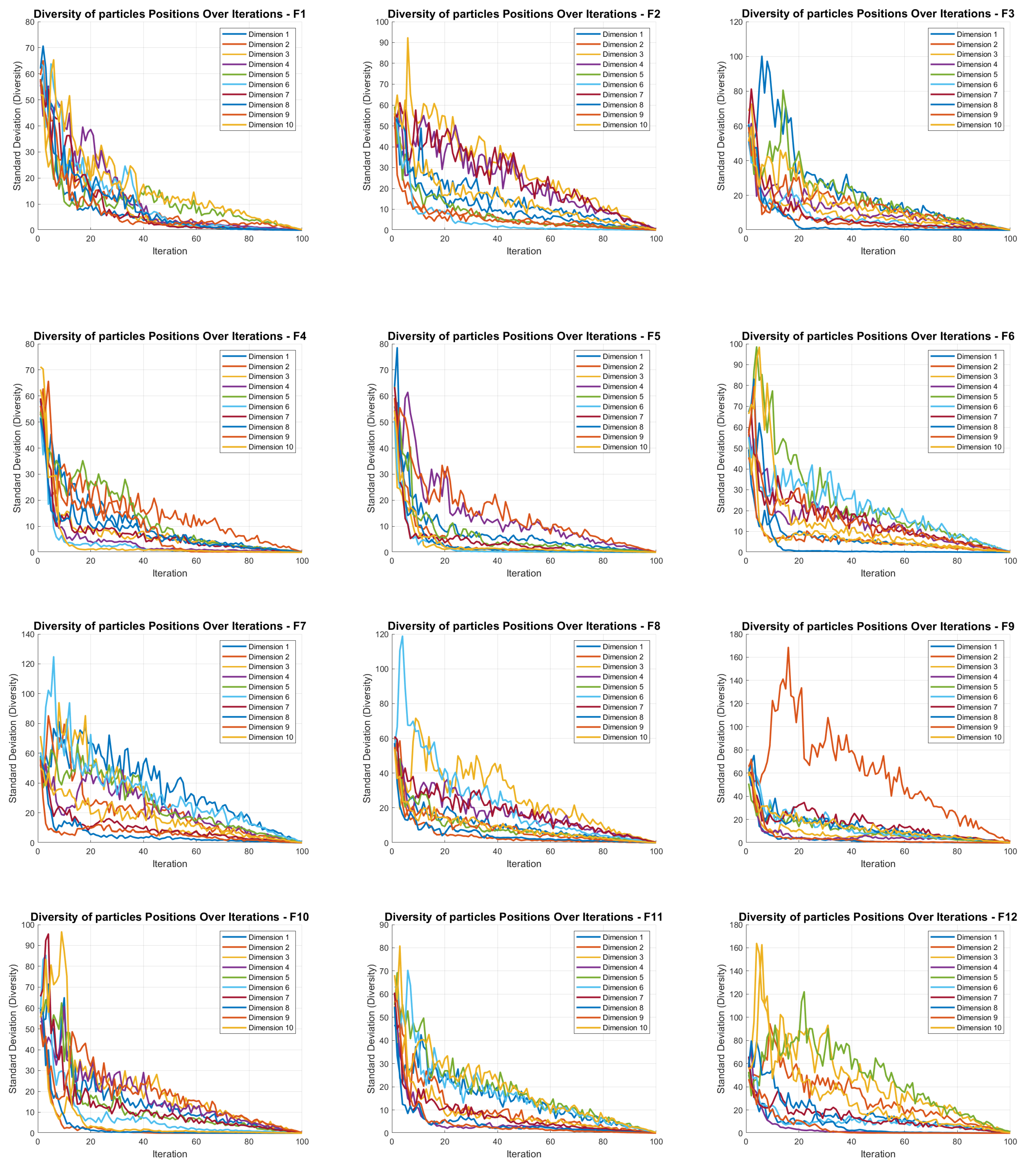

As illustrated in Figure 8, the diversity curves of the APO-JADE algorithm across the CEC2022 benchmark functions reveal a distinct pattern of diminishing diversity over successive iterations. At the outset, the standard deviation of particle positions across all dimensions is relatively high, indicating extensive exploration of the search space. However, as the optimization process advances, a rapid decline in diversity is observed within the first 20 to 30 iterations. This reduction signifies that the particles are converging toward promising regions within the search space, reflecting a transition from broad exploration to focused exploitation. This rapid convergence phase is followed by a more gradual reduction in diversity, stabilizing around a low value after approximately 60 iterations. Each dimension follows a similar pattern, although the extent of diversity and the rate of decrease can vary slightly among different dimensions. This consistent pattern across all benchmark functions indicates that APO-JADE effectively balances exploration and exploitation, quickly narrowing down the search to optimal regions while maintaining enough diversity to avoid premature convergence. The final low but non-zero diversity suggests that the algorithm still retains some exploration capacity, potentially aiding in fine-tuning the final solutions.

Figure 8.

Diversity analysis for CEC2022 (F1–F12).

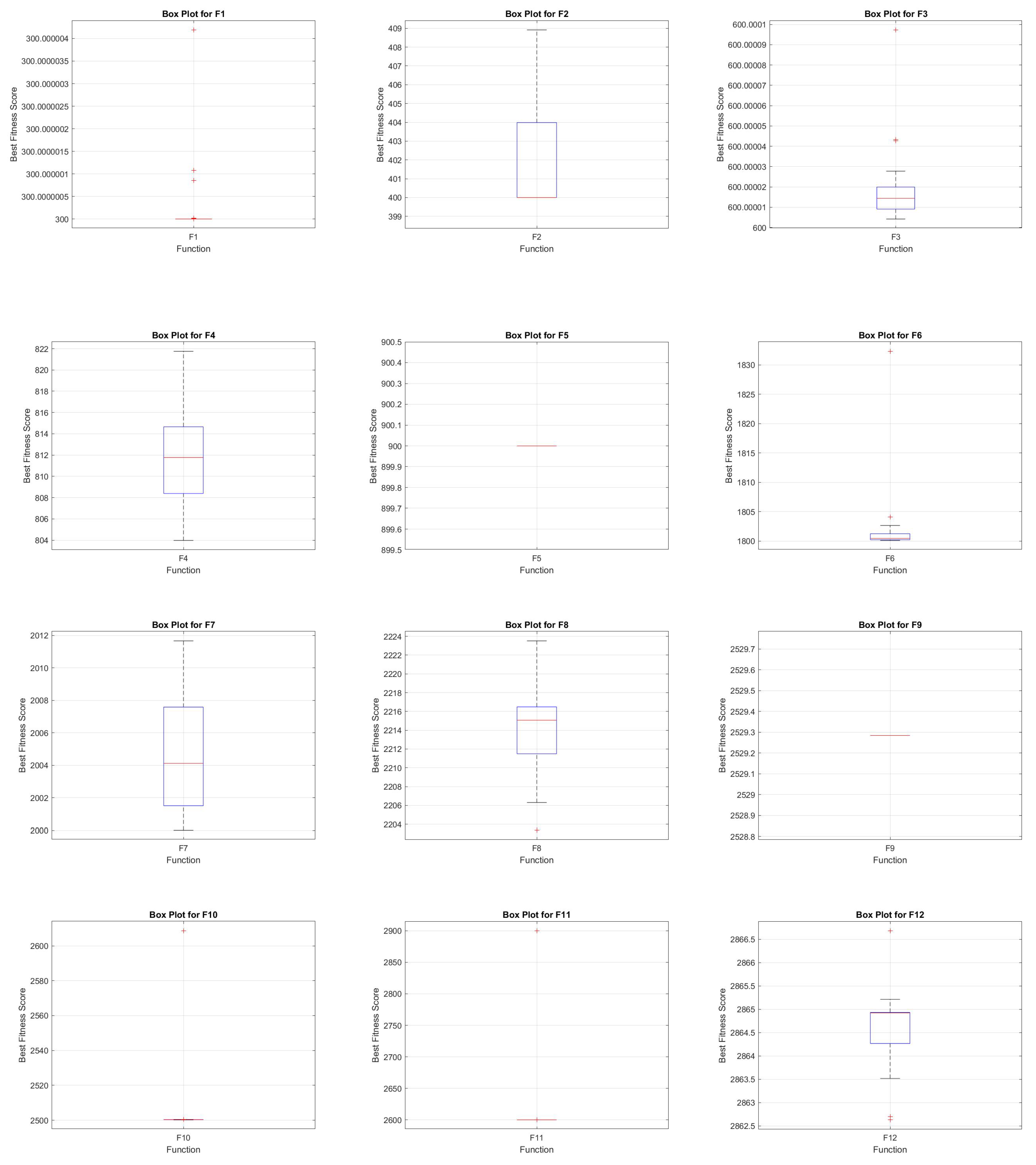

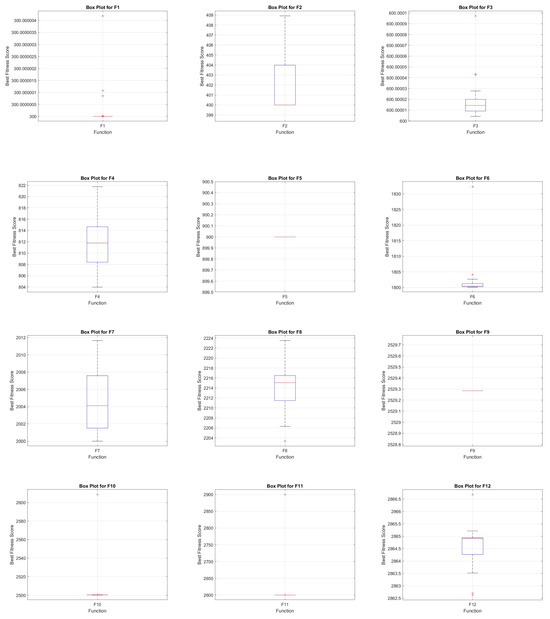

6.7. JADE APO Box Plot Analysis

As shown in Figure 9, the box plot of fitness scores provides a statistical overview of the fitness values obtained by the algorithm across various functions. For instance, the box plot for F1 demonstrates minimal variance and a lack of significant outliers, indicating consistent performance. In contrast, F2 exhibits a broader range of fitness values, suggesting some variability in the algorithm’s performance. For F3, the box plot shows a narrow range of fitness scores concentrated near 600, with a few minor outliers slightly exceeding this value. This reflects a high degree of consistency, with occasional deviations. Similarly, F4 displays a compact distribution with a median around 812, signifying stable performance with slight variability in the upper range. The F5 box plot highlights almost no variability, with fitness values tightly clustered around 900, showcasing exceptional consistency. In the case of F8, the interquartile range is wider, and some outliers are present, with the median fitness value around 2216. This suggests greater variability and occasional suboptimal runs. The occurrence of outliers in functions such as F3 and F12 indicates occasional deviations, which may be attributed to the complexity of the search space or the presence of multiple local optima.

Figure 9.

Box plot analysis of CEC2022 (F1–F12).

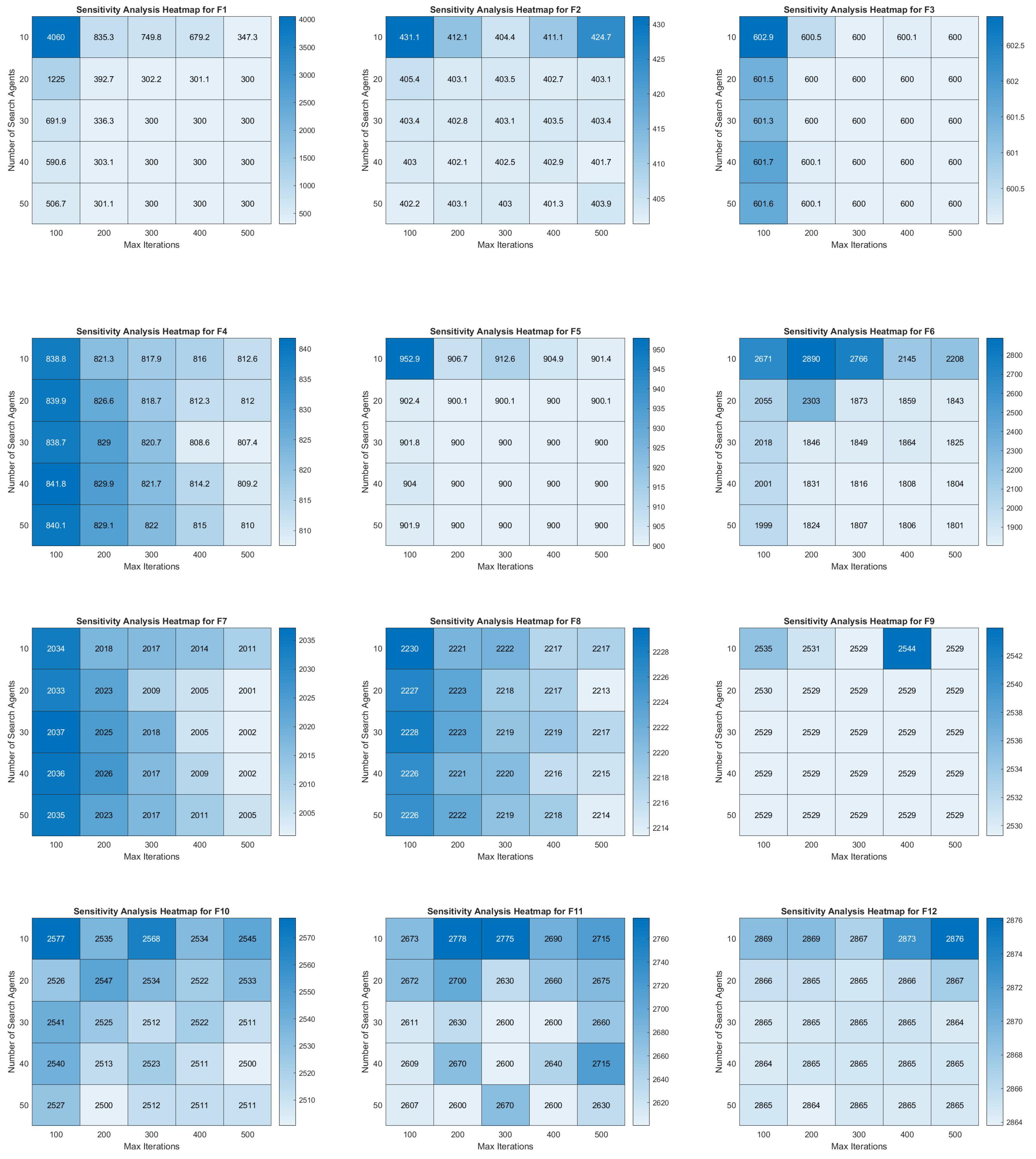

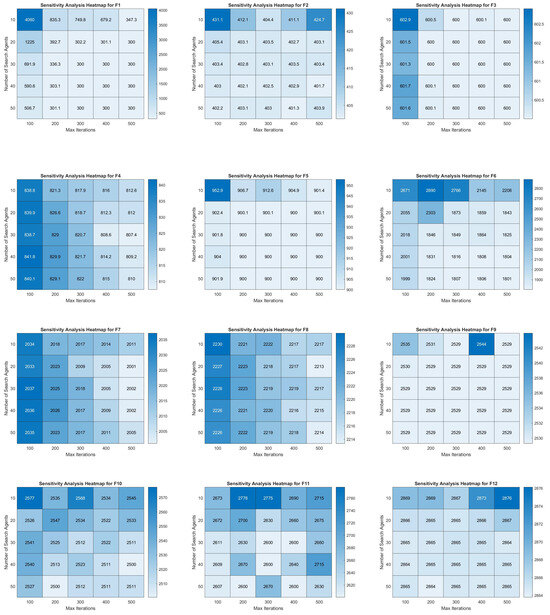

6.8. JADE APO Heat Map Analysis

As depicted in Figure 10, the sensitivity analysis heat maps for the APO-JADE algorithm applied to the CEC2022 benchmark functions illustrate performance variations based on the number of search agents and the maximum number of iterations. For the F1 function, the heat map shows substantial performance improvement as the number of iterations increases, particularly when the number of search agents exceeds 20, resulting in optimal values around 300. Similarly, for the F2 function, a stable region emerges where increasing iterations beyond 200 provides negligible performance gains, maintaining a best fitness score near 400. The F3 function demonstrates consistent performance across varying numbers of agents and iterations, with fitness values stabilizing around 600. For the F4 function, the heat map indicates that increasing both the number of agents and iterations generally enhances performance, with best fitness scores improving to approximately 810 as configurations are optimized. The F5 function exhibits strong sensitivity to the number of agents and iterations, with scores converging near 900. In contrast, the F6 function reveals a more gradual improvement in performance, achieving optimal scores of approximately 1800 with more iterations and agents. The F7 function demonstrates stability, with slight performance improvements as iterations increase, stabilizing around 2000. For the F8 function, the sensitivity analysis indicates consistent performance around 2215, showcasing robust behavior across varying configurations. Finally, the F10 and F12 functions reveal that performance improves with increasing iterations and agents, with fitness scores stabilizing at approximately 2500 and 2865, respectively. These heat maps provide valuable insights into the impact of algorithm parameters on performance, highlighting the importance of balancing search agents and iterations for different functions.

Figure 10.

Sensitivity analysis of CEC2022 (F1–F12).

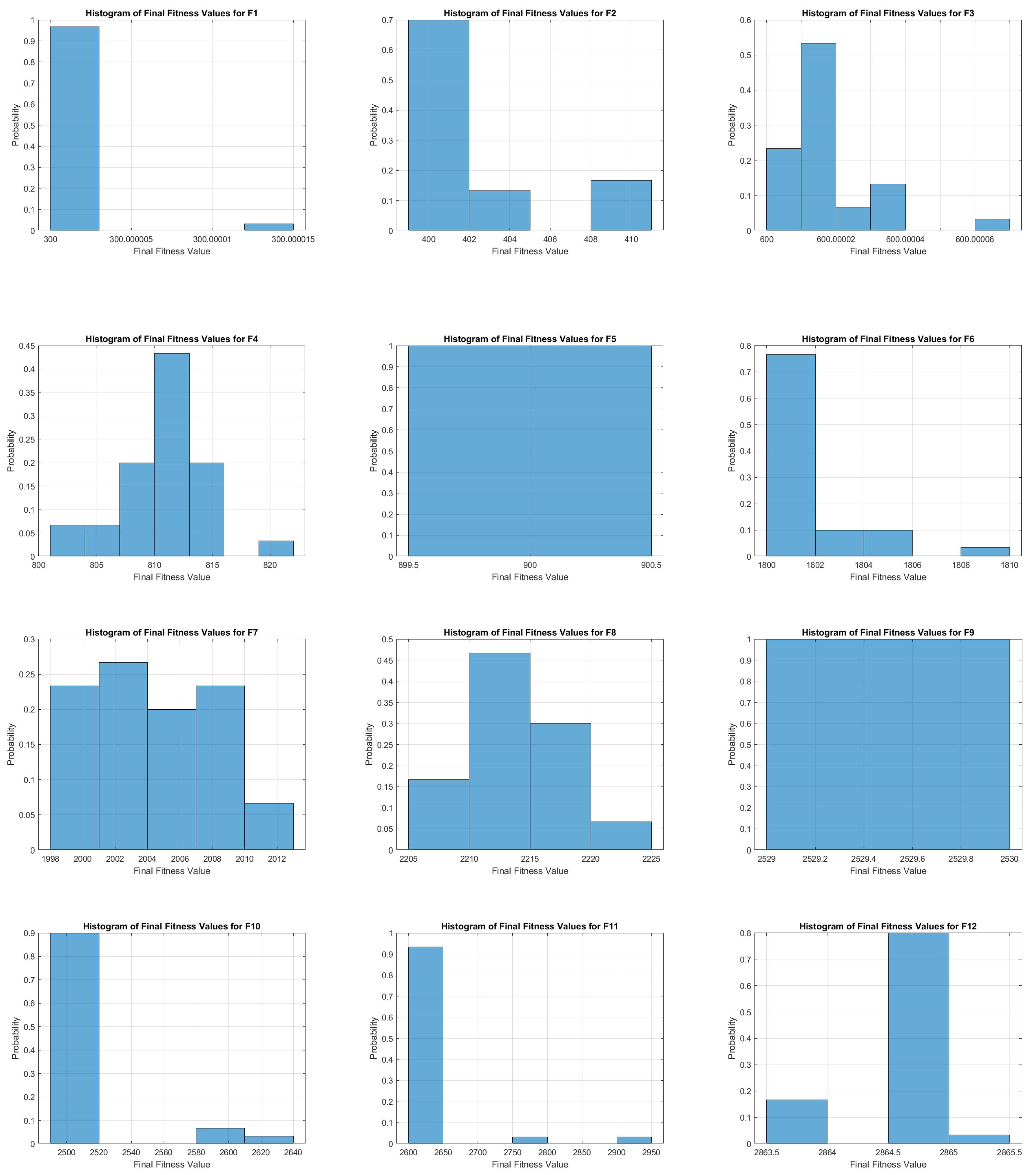

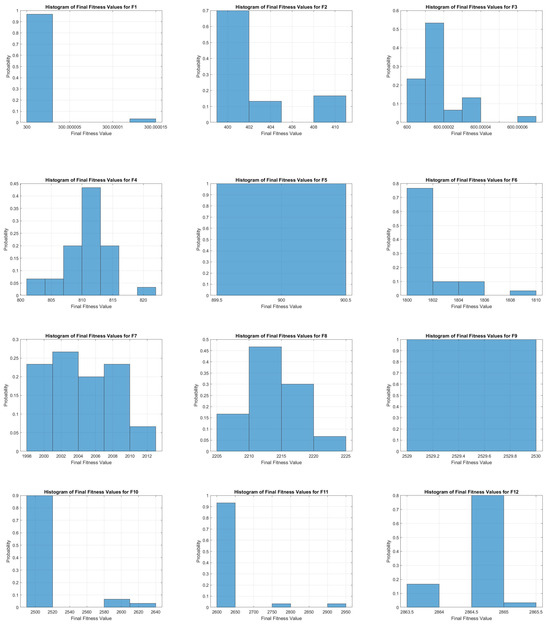

6.9. JADE APO Histogram Analysis

As illustrated in Figure 11, the histogram analysis of final fitness values for functions F1–F12 using the APO-JADE algorithm reveals distinct distributions, showcasing the variability in optimization outcomes for each function. For F1, the fitness values are highly concentrated around 300, with a prominent probability peak, indicating consistent optimization performance. In contrast, F2 demonstrates a bimodal distribution with peaks near 400 and 410, reflecting variability in the optimization process. The fitness values for F3 exhibit a skewed distribution leaning toward 600, whereas F4 presents a more symmetric distribution centered around 810. The histogram for F5 demonstrates a narrow peak around 900, indicating highly consistent optimization results. F6 shows a predominant peak at 1800 with a few higher values, reflecting occasional deviations in the optimization outcome. F8 has a broad distribution with a peak around 2210, indicating variability in the final fitness values. The distribution for F9 is extremely narrow around 2530, similar to F5, reflecting consistent optimization results. Finally, F11 and F12 show broader distributions centered around 2600 and 2864, respectively, indicating some variability in the final fitness values.

Figure 11.

Histogram analysis of CEC2022 (F1–F12).

7. Application of APO-JADE for Planetary Gear Train Design Optimization Problem

The planetary gear train design model (see Figure 12) aims to optimize the gear ratios of a planetary gear train while ensuring compliance with mechanical and geometric constraints. This model incorporates multiple variables, a defined fitness function, a set of constraints, and a penalty function to address any violations of the constraints [64].

Figure 12.

Planetary gear train design.

7.1. Parameter Initialization

The design variables include the number of teeth on each gear and the module of gear pairs, which are initialized as follows [64]:

7.2. Fitness Function

The fitness function is formulated to minimize the deviation between the actual gear ratios and their desired target values [64]:

Equation (43) calculates the maximum deviation of actual gear ratios from their desired values.

7.3. Constraints

The design must satisfy several geometric and operational constraints:

7.4. Penalty Function

A penalty function is applied to heavily penalize constraint violations, as shown in Equation (48):

where the indicator term equals 1 if the corresponding constraint is violated and 0 otherwise.

7.5. Overall Fitness Function

The overall fitness function combines the deviation in gear ratios with a penalty for any constraint violations. Since the deviation f is minimized (Section 7.2), the penalty term is added so that constraint violations increase the overall fitness value. The modified fitness function is defined as

Equation (49) defines the fitness of a design, which is minimized during the optimization process, so that designs with small gear-ratio deviations and no constraint violations are preferred.

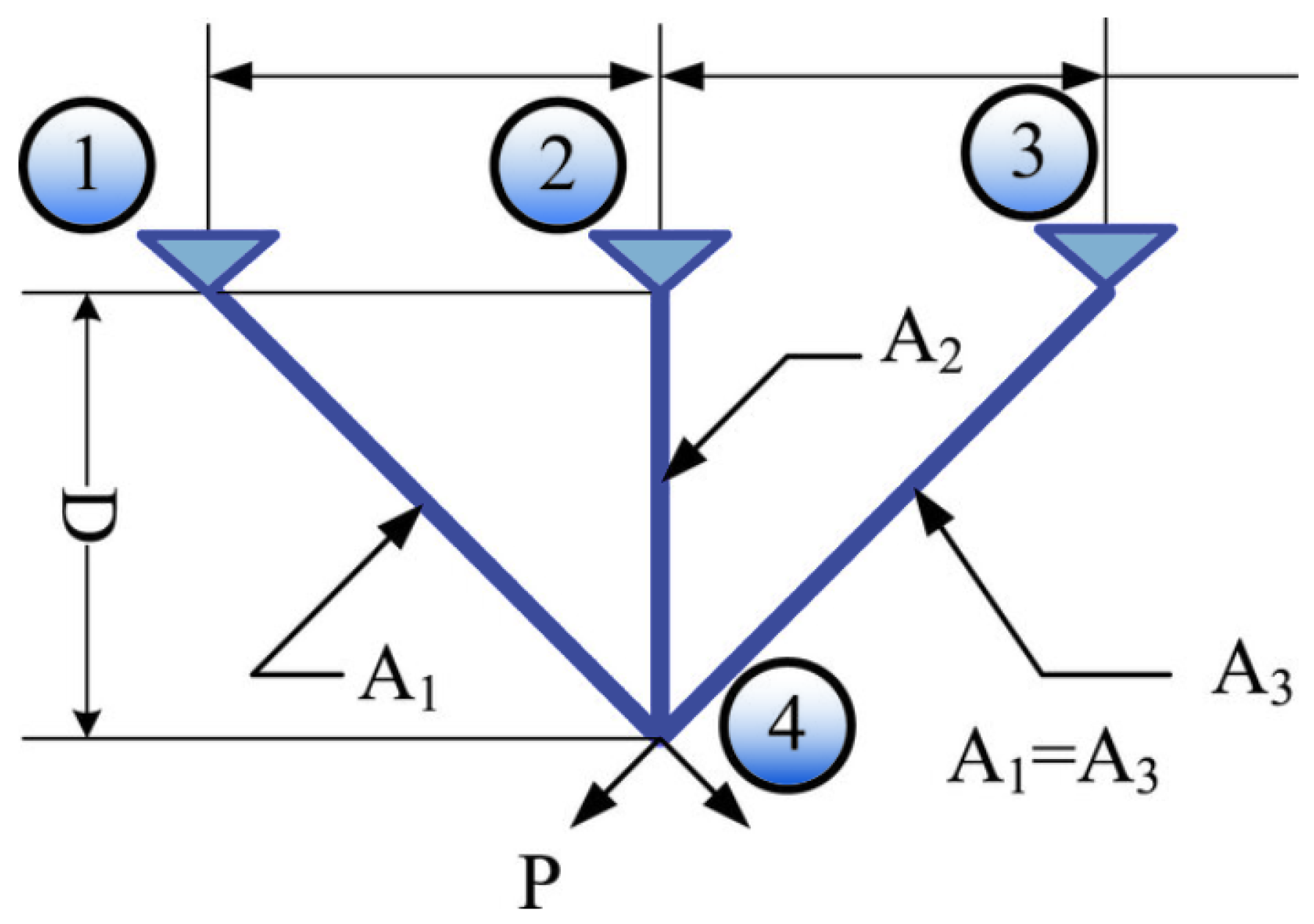

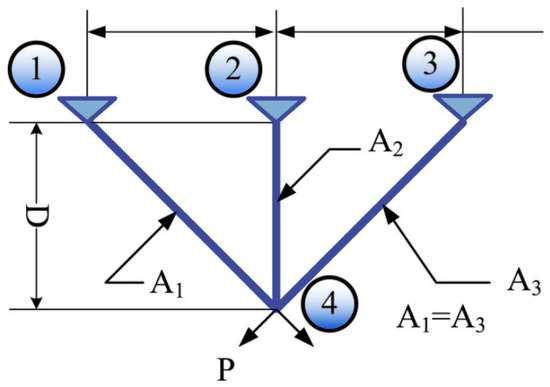

8. Three-Bar Truss Design Optimization Problem

The Three-Bar Truss Design problem (refer to Figure 13) focuses on optimizing the material distribution within a truss system to minimize the total material length while adhering to stress constraints under an applied load. This structural optimization task aims to determine the optimal cross-sectional areas that can withstand the specified loading conditions without exceeding the permissible material stress limits.

Figure 13.

Three-Bar Truss Design.

8.1. Variables

- x1: Cross-sectional area of the horizontal bar.

- x2: Cross-sectional area of the diagonal bars.

8.2. Fitness Function

The objective is to minimize the total length of material used, as shown in Equation (50):

where l is the length of each truss member, assumed to be 100 units for simplification.

8.3. Constraints

8.4. Penalty Function

To ensure compliance with the constraints, a penalty function is incorporated into the fitness function. This function imposes a significant penalty when any constraints are violated, as expressed in Equation (54):

Here, the indicator function evaluates to 1 if the corresponding constraint is violated and 0 otherwise. The penalty parameter is a large number chosen to ensure significant penalization.

8.5. Overall Fitness Function

The overall fitness function that needs to be minimized combines the fitness function and the penalty for any constraint violations, as shown in Equation (55):

Table 7 and Table 8 show the results of the various optimization algorithms. APO-JADE achieved the best fitness value (BestF) of 1.339959, with design variables x1 = 6.017209 and x2 = 5.316636, indicating that it found the best truss design among the compared algorithms. FOX closely followed APO-JADE with a fitness value of 1.339962, while the Grey Wolf Optimizer (GWO) and SMA also performed well with slightly higher fitness values. Other algorithms, such as AVOA, were competitive, achieving values close to that of APO-JADE. Further down the ranking, algorithms such as AO, HHO, SA, and ChOA have higher BestF values, indicating less effective designs. Substantially higher BestF values for SCA, COA, OOA, WOA, BO, and GA indicate poorer performance compared to APO-JADE.

Table 7.

Results of Three-Bar Truss Design optimization problem.

Table 8.

Statistical results of Three-Bar Truss Design optimization problem.

9. Planetary Gear Train Design Optimization Problem

This section describes the implementation of the planetary gear train design optimization problem. The objective is to optimize the gear ratios and dimensions while adhering to all specified constraints. The components of the implementation are explained in detail below.

The implementation first defines a very large penalty factor, which is used to impose substantial penalties within the fitness function for any violations of the constraints.

9.1. Parameter Initialization

The variables are initialized based on the input vector x:

- x is rounded to ensure integer values, as gears must have integer numbers of teeth.

- Two predefined arrays hold the admissible values for the number of planets and the module sizes, respectively.

- Six variables represent the numbers of teeth on the different gears.

- p is the number of planets, and the two module sizes for the different gear pairs are selected from the predefined module-size array.
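The decoding step described above can be sketched as follows. The option arrays and the variable layout are hypothetical, since the text does not give them: six tooth counts, followed by an index for the number of planets and two indices for the module sizes. Indices are rounded and clamped into range. Note that Python's `round()` rounds halves to even, which differs from MATLAB's `round`.

```python
# Hypothetical option arrays for illustration only; the arrays used in the
# study are not given in the text.
PLANET_OPTIONS = (3, 4, 5)
MODULE_OPTIONS = (1.75, 2.0, 2.25, 2.5, 2.75, 3.0)


def pick(options, value):
    """Round value to a 1-based index and clamp it into the options range."""
    idx = min(max(int(round(value)), 1), len(options))
    return options[idx - 1]


def decode(x):
    """Decode a 9-component vector into (teeth, p, m1, m2) under an assumed layout:
    x[0:6] tooth counts, x[6] planet index, x[7] and x[8] module indices."""
    teeth = [int(round(v)) for v in x[:6]]
    p = pick(PLANET_OPTIONS, x[6])
    m1 = pick(MODULE_OPTIONS, x[7])
    m2 = pick(MODULE_OPTIONS, x[8])
    return teeth, p, m1, m2


teeth, p, m1, m2 = decode([40.2, 20.7, 20, 20, 20, 70.4, 2.1, 1.0, 6.4])
```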

9.2. Fitness Function

The fitness function is designed to minimize the deviation between the actual and desired gear ratios, as follows:

- The first ratio is the gear ratio of the first stage.

- The second ratio is the gear ratio of the second stage.

- The third ratio is the gear ratio of the ring gear.

- Each of the three ratios has a corresponding desired (target) value.

The fitness function f is formulated to capture the maximum deviation among the three gear ratios.
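The maximum-deviation objective can be sketched as shown below. The notation $f = \max_k |i_k - i_{0k}|$, with $i_k$ the actual and $i_{0k}$ the desired ratios, is introduced here for illustration. The ratio values in the example are hypothetical.

```python
def max_ratio_deviation(actual, desired):
    """f = max_k |i_k - i0_k| over the first-stage, second-stage and ring-gear ratios."""
    return max(abs(a - d) for a, d in zip(actual, desired))


# Hypothetical ratios, for illustration only.
f = max_ratio_deviation([2.0, 1.5, -2.5], [2.2, 1.5, -2.4])
```

Minimizing the maximum (rather than the sum) of the deviations keeps any single ratio from drifting far from its target while the others are matched closely.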

9.3. Constraints

Various constraints are defined to ensure the gear design is feasible:

- A maximum allowable diameter is specified.

- Six predefined constants represent allowable deviations.

- An angle is calculated based on the gear geometry.

The eleven constraints are defined as follows:

- Three constraints on the maximum diameter, each involving a different combination of gear variables.

- A constraint on the compatibility of two gear sizes.

- Three constraints on the minimum tooth addendum, each for a different pair of gears.

- A geometric constraint involving the calculated angle, ensuring that the gear arrangement is physically feasible.

- Two constraints on the positioning of one gear relative to two other gears.

- A constraint ensuring that a tooth-count quantity is a multiple of p.

9.4. Penalty Calculation

A penalty term is calculated to heavily penalize any violation of the constraints:

- The penalty term accumulates the squared violations of each constraint, scaled by the penalty factor.

- The GetInequality function (assumed to be defined elsewhere) likely returns a boolean indicating if a constraint is violated.
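A minimal sketch of the penalized fitness follows. It assumes the convention that a constraint value g_j > 0 signals a violation, which plays the role of the indicator returned by GetInequality. The default `penalty_factor` is a placeholder for the paper's large constant, not its actual value.

```python
def penalized_fitness(f_value, constraints, penalty_factor=1e10):
    """F = f + penalty_factor * sum of g_j**2 over violated constraints (g_j > 0)."""
    violation = sum(g * g for g in constraints if g > 0)
    return f_value + penalty_factor * violation


feasible = penalized_fitness(0.5, [-1.0, -0.2])  # no violation: F equals f
infeasible = penalized_fitness(0.5, [0.1, -1.0], penalty_factor=100.0)
```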

9.5. Overall Fitness Function

The final fitness function integrates the fitness function f and a penalty term, as expressed in Equation (56):

This formulation ensures that any design violating the constraints incurs a significantly higher fitness value due to the large penalty factor, effectively discouraging such solutions. The objective of the optimization process is to minimize this value, thereby identifying a planetary gear train design that matches the desired gear ratios as closely as possible while satisfying all imposed constraints.

As presented in Table 9 and Table 10, the results for the planetary gear train design optimization problem show the performance of the various algorithms, measured by the best fitness value (BestF) achieved, alongside the corresponding design variables.

Table 9.

Results of planetary gear train design optimization problem.

Table 10.

Statistical results of planetary gear train design optimization problem.

The APO-JADE algorithm attained the best fitness value (BestF) of 0.525769; the corresponding design variables are listed in Table 9. This outcome indicates that APO-JADE identified the best solution among the compared algorithms, minimizing the deviation in gear ratios while adhering to all constraints.

The Grey Wolf Optimizer (GWO) closely followed APO-JADE, achieving a fitness value of 0.526281, indicating competitive performance but with a slightly higher deviation. The Slime Mould Algorithm (SMA), with a fitness value of 0.537059, performed marginally worse than APO-JADE and GWO, indicating a larger deviation from the target gear ratios. The Whale Swarm Optimization (WSO) and Whale Optimization Algorithm (WOA) both achieved fitness values around 0.53, demonstrating reasonable performance, though less optimal compared to APO-JADE.

Other algorithms, such as COOT and ChOA, produced fitness values close to that of SMA (around 0.537059). In contrast, the Owl Optimization Algorithm (OOA) and Binary Wolf Optimization (BWO) reported substantially higher values of 0.774379 and 0.868333, respectively, indicating suboptimal solutions compared with APO-JADE.
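The differences between these best fitness values are easier to compare as relative gaps to the best result. The short sketch below uses only the values quoted in the text. It omits WSO, WOA, COOT, and ChOA because only approximate values are given for them. The function name `relative_gaps` is illustrative.

```python
# Best fitness values quoted in the text for the planetary gear train problem.
REPORTED = {
    "APO-JADE": 0.525769,
    "GWO": 0.526281,
    "SMA": 0.537059,
    "OOA": 0.774379,
    "BWO": 0.868333,
}


def relative_gaps(values: dict) -> dict:
    """Percentage by which each value exceeds the smallest (best) value."""
    best = min(values.values())
    return {name: 100.0 * (v - best) / best for name, v in values.items()}


# Print algorithms from best to worst with their gap to the best result.
gaps = relative_gaps(REPORTED)
for name in sorted(REPORTED, key=REPORTED.get):
    print(f"{name:9s} {REPORTED[name]:.6f} {gaps[name]:6.2f}%")
```

On these numbers, GWO lies within about 0.1% of APO-JADE and SMA is about 2.1% worse. OOA and BWO are roughly 47% and 65% worse, respectively.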

10. Conclusions

The Hybrid Arctic Puffin Optimization with JADE (APO-JADE) algorithm developed in this study advances engineering optimization. It combines the exploration strategies of Arctic Puffin Optimization (APO) with the adaptive parameter control and external archive of the JADE algorithm. In doing so, APO-JADE addresses key limitations of the standalone APO: premature convergence, slow convergence, and an imbalance between exploration and exploitation. Our extensive testing on the IEEE CEC 2022 benchmark functions shows that APO-JADE outperforms the compared algorithms in terms of convergence speed, accuracy, and computational efficiency. Moreover, APO-JADE consistently delivered superior solutions when applied to real-world engineering problems, highlighting its ability to navigate and optimize complex, multimodal design spaces. This capability is crucial for engineering applications in which design parameters are highly interdependent and optimal solutions are difficult to identify using standard methods.

Future research will focus on refining APO-JADE’s adaptability and exploring its application across a broader range of industrial problems. Additionally, integrating machine learning techniques to predict algorithm parameters dynamically could further enhance its performance and robustness.

Author Contributions

H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; methodology, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; software, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; validation, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; formal analysis, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; investigation, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; writing—original draft preparation, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; writing—review and editing, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; visualization, H.N.F., M.S.A., N.N.S., F.H. and S.N.F.; supervision, H.N.F.; project administration, H.N.F.; funding acquisition, H.N.F., M.S.A., N.N.S., F.H. and S.N.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Fogel, L.J.; Owens, A.J.; Walsh, M.J. Artificial Intelligence Through Simulated Evolution; Wiley: New York, NY, USA, 1966.

- Koza, J. Genetic Programming as a Means for Programming Computers by Natural Selection. Stat. Comput. 1994, 4, 87–112.

- Lučić, P.; Teodorović, D. Computing with Bees: Attacking Complex Transportation Engineering Problems. Int. J. Artif. Intell. Tools 2003, 12, 375–394.

- Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by Simulated Annealing. Science 1983, 220, 671–680.

- Rabanal, P.; Rodríguez, I.; Rubio, F. Using River Formation Dynamics to Design Heuristic Algorithms. In Unconventional Computation; Springer: Berlin/Heidelberg, Germany, 2007; pp. 163–177.

- Rashedi, E.; Nezamabadi-pour, H.; Saryazdi, S. GSA: A Gravitational Search Algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Fakhouri, H.N.; Alawadi, S.; Awaysheh, F.M.; Hamad, F. Novel hybrid success history intelligent optimizer with gaussian transformation: Application in CNN hyperparameter tuning. Clust. Comput. 2023, 27, 3717–3739.

- Civicioglu, P. Transforming Geocentric Cartesian Coordinates to Geodetic Coordinates by Using Differential Search Algorithm. Comput. Geosci. 2012, 46, 229–247.

- Fakhouri, H.N.; Al-Shamayleh, A.S.; Ishtaiwi, A.; Makhadmeh, S.N.; Fakhouri, S.N.; Hamad, F. Hybrid Four Vector Intelligent Metaheuristic with Differential Evolution for Structural Single-Objective Engineering Optimization. Algorithms 2024, 17, 417.

- Jung, S.H. Queen-bee Evolution for Genetic Algorithms. Electron. Lett. 2003, 39, 575–576.

- Fakhouri, H.N.; Alawadi, S.; Awaysheh, F.M.; Alkhabbas, F.; Zraqou, J. A cognitive deep learning approach for medical image processing. Sci. Rep. 2024, 14, 4539.

- Fakhouri, H.; Awaysheh, F.; Alawadi, S.; Alkhalaileh, M.; Hamad, F. Four vector intelligent metaheuristic for data optimization. Computing 2024, 106, 2321–2359.

- Karaboga, D.; Basturk, B. A powerful and efficient algorithm for numerical function optimization: Artificial bee colony (ABC) algorithm. J. Glob. Optim. 2007, 39, 459–471.

- Wang, W.c.; Tian, W.c.; Xu, D.m.; Zang, H.f. Arctic puffin optimization: A bio-inspired metaheuristic algorithm for solving engineering design optimization. Adv. Eng. Softw. 2024, 195, 103694.

- Zhang, J.; Sanderson, A.C. JADE: Adaptive differential evolution with optional external archive. IEEE Trans. Evol. Comput. 2009, 13, 945–958.

- Hu, G.; Zheng, Y.; Abualigah, L.; Hussien, A.G. DETDO: An adaptive hybrid dandelion optimizer for engineering optimization. Adv. Eng. Inform. 2023, 57, 102004.

- Saberi, A.; Khodaverdi, E.; Kamali, H.; Movaffagh, J.; Mohammadi, M.; Yari, D.; Moradi, A.; Hadizadeh, F. Fabrication and Characterization of Biomimetic Electrospun Cartilage Decellularized Matrix (CDM)/Chitosan Nanofiber Hybrid for Tissue Engineering Applications: Box-Behnken Design for Optimization. J. Polym. Environ. 2024, 32, 1573–1592.

- Verma, P.; Parouha, R.P. Engineering Design Optimization Using an Advanced Hybrid Algorithm. Int. J. Swarm Intell. Res. (IJSIR) 2022, 13, 18.

- Hashim, F.A.; Khurma, R.A.; Albashish, D.; Amin, M.; Hussien, A.G. Novel hybrid of AOA-BSA with double adaptive and random spare for global optimization and engineering problems. Alex. Eng. J. 2023, 73, 543–577.

- Zhang, Z.; Ding, S.; Jia, W. A hybrid optimization algorithm based on cuckoo search and differential evolution for solving constrained engineering problems. Eng. Appl. Artif. Intell. 2019, 85, 254–268.

- Sun, W. Hybrid Role-Engineering Optimization with Multiple Cardinality Constraints Using Natural Language Processing and Integer Linear Programming Techniques. Mob. Inf. Syst. 2022, 2022, 3453041.

- Verma, P.; Parouha, R.P. An advanced hybrid algorithm for constrained function optimization with engineering applications. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 8185–8217.

- Panagant, N.; Yıldız, M.; Pholdee, N.; Yıldız, A.R.; Bureerat, S.; Sait, S.M. A novel hybrid marine predators-Nelder-Mead optimization algorithm for the optimal design of engineering problems. Mater. Test. 2021, 63, 453–457.

- Yildiz, A.R.; Mehta, P. Manta ray foraging optimization algorithm and hybrid Taguchi salp swarm-Nelder-Mead algorithm for the structural design of engineering components. Mater. Test. 2022, 64, 706–713.

- Duan, Y.; Yu, X. A collaboration-based hybrid GWO-SCA optimizer for engineering optimization problems. Expert Syst. Appl. 2023, 213, 119017.

- Barshandeh, S.; Piri, F.; Sangani, S.R. HMPA: An innovative hybrid multi-population algorithm based on artificial ecosystem-based and Harris Hawks optimization algorithms for engineering problems. Eng. Comput. 2022, 38, 1581–1625.

- Uray, E.; Carbas, S.; Geem, Z.W.; Kim, S. Parameters Optimization of Taguchi Method Integrated Hybrid Harmony Search Algorithm for Engineering Design Problems. Mathematics 2022, 10, 327.

- Varaee, H.; Safaeian Hamzehkolaei, N.; Safari, M. A hybrid generalized reduced gradient-based particle swarm optimizer for constrained engineering optimization problems. J. Soft Comput. Civ. Eng. 2021, 5, 86–119.

- Fakhouri, H.N.; Hudaib, A.; Sleit, A. Hybrid Particle Swarm Optimization with Sine Cosine Algorithm and Nelder–Mead Simplex for Solving Engineering Design Problems. Arab. J. Sci. Eng. 2020, 45, 3091–3109.

- Dhiman, G. SSC: A hybrid nature-inspired meta-heuristic optimization algorithm for engineering applications. Knowl.-Based Syst. 2021, 222, 106926.

- Kundu, T.; Garg, H. LSMA-TLBO: A hybrid SMA-TLBO algorithm with lévy flight based mutation for numerical optimization and engineering design problems. Adv. Eng. Softw. 2022, 172, 103185.

- Yang, C.Y.; Wu, J.H.; Chung, C.H.; You, J.Y.; Yu, T.C.; Ma, C.J.; Lee, C.T.; Ueda, D.; Hsu, H.T.; Chang, E.Y. Optimization of Forward and Reverse Electrical Characteristics of GaN-on-Si Schottky Barrier Diode Through Ladder-Shaped Hybrid Anode Engineering. IEEE Trans. Electron Devices 2022, 69, 6644–6649.

- Yang, X.; Guo, T.; Yu, M.; Chen, M. Optimization of engineering parameters of deflagration fracturing in shale reservoirs based on hybrid proxy model. Geoenergy Sci. Eng. 2023, 231, 212318.

- Zhong, R.; Fan, Q.; Zhang, C.; Yu, J. Hybrid remora crayfish optimization for engineering and wireless sensor network coverage optimization. Clust. Comput. 2024, 27, 10141–10168.

- Yildiz, A.R.; Erdaş, M.U. A new Hybrid Taguchi-salp swarm optimization algorithm for the robust design of real-world engineering problems. Mater. Test. 2021, 63, 157–162.

- Cheng, J.; Lu, W.; Liu, Z.; Wu, D.; Gao, W.; Tan, J. Robust optimization of engineering structures involving hybrid probabilistic and interval uncertainties. Struct. Multidiscip. Optim. 2021, 63, 1327–1349.

- Huang, J.; Hu, H. Hybrid beluga whale optimization algorithm with multi-strategy for functions and engineering optimization problems. J. Big Data 2024, 11, 1–55.

- Tang, W.; Cao, L.; Chen, Y.; Chen, B.; Yue, Y. Solving Engineering Optimization Problems Based on Multi-Strategy Particle Swarm Optimization Hybrid Dandelion Optimization Algorithm. Biomimetics 2024, 9, 298.

- Chagwiza, G.; Jones, B.; Hove-Musekwa, S.; Mtisi, S. A new hybrid matheuristic optimization algorithm for solving design and network engineering problems. Int. J. Manag. Sci. Eng. Manag. 2018, 13, 11–19.

- Liu, H.; Duan, S.; Luo, H. A hybrid engineering algorithm of the seeker algorithm and particle swarm optimization. Mater. Test. 2022, 64, 1051–1089.

- Adegboye, O.R.; Deniz Ülker, E. Hybrid artificial electric field employing cuckoo search algorithm with refraction learning for engineering optimization problems. Sci. Rep. 2023, 13, 4098.

- Wang, S.; Jia, H.; Abualigah, L.; Liu, Q.; Zheng, R. An improved hybrid aquila optimizer and harris hawks algorithm for solving industrial engineering optimization problems. Processes 2021, 9, 1551.

- Kundu, T.; Garg, H. A hybrid TLNNABC algorithm for reliability optimization and engineering design problems. Eng. Comput. 2022, 38, 5251–5295.

- Knypiński, Ł.; Devarapalli, R.; Gillon, F. The hybrid algorithms in constrained optimization of the permanent magnet motors. IET Sci. Meas. Technol. 2024, 18, 455–461.

- Dhiman, G. ESA: A hybrid bio-inspired metaheuristic optimization approach for engineering problems. Eng. Comput. 2021, 37, 323–353.

- Jia, H.; Rao, H.; Wen, C.; Mirjalili, S. Crayfish optimization algorithm. Artif. Intell. Rev. 2023, 56, 1919–1979.

- Jia, H.; Peng, X.; Lang, C. Remora optimization algorithm. Expert Syst. Appl. 2021, 185, 115665.

- Falahah, I.A.; Al-Baik, O.; Alomari, S.; Bektemyssova, G.; Gochhait, S.; Leonova, I.; Malik, O.P.; Werner, F.; Dehghani, M. Frilled Lizard Optimization: A Novel Bio-Inspired Optimizer for Solving Engineering Applications. Comput. Mater. Contin. 2024, 79, 3631–3678.

- Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-Qaness, M.A.; Gandomi, A.H. Aquila optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

- Alzoubi, S.; Abualigah, L.; Sharaf, M.; Daoud, M.S.; Khodadadi, N.; Jia, H. Synergistic swarm optimization algorithm. CMES-Comput. Model. Eng. Sci. 2024, 139, 2557.

- Su, H.; Zhao, D.; Heidari, A.A.; Liu, L.; Zhang, X.; Mafarja, M.; Chen, H. RIME: A physics-based optimization. Neurocomputing 2023, 532, 183–214.

- Fakhouri, H.N.; Hamad, F.; Alawamrah, A. Success history intelligent optimizer. J. Supercomput. 2022, 78, 6461–6502.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Aribowo, W.; Suprianto, B.; Kartini, U.T.; Prapanca, A. Dingo optimization algorithm for designing power system stabilizer. Indones. J. Electr. Eng. Comput. Sci. (IJEECS) 2023, 29, 1–7.

- Heidari, A.A.; Mirjalili, S.; Faris, H.; Aljarah, I.; Mafarja, M.; Chen, H. Harris hawks optimization: Algorithm and applications. Future Gener. Comput. Syst. 2019, 97, 849–872.

- Seyyedabbasi, A.; Kiani, F. Sand Cat swarm optimization: A nature-inspired algorithm to solve global optimization problems. Eng. Comput. 2023, 39, 2627–2651.

- Fan, J.; Li, Y.; Wang, T. An improved African vultures optimization algorithm based on tent chaotic mapping and time-varying mechanism. PLoS ONE 2021, 16, e0260725.