An Efficient Optimization of the Monte Carlo Tree Search Algorithm for Amazons

Abstract

1. Introduction


- We propose MG-PEO, an effective optimization of the Monte Carlo tree search algorithm for the Amazons game that combines the Move Groups strategy with the Parallel Evaluation strategy.


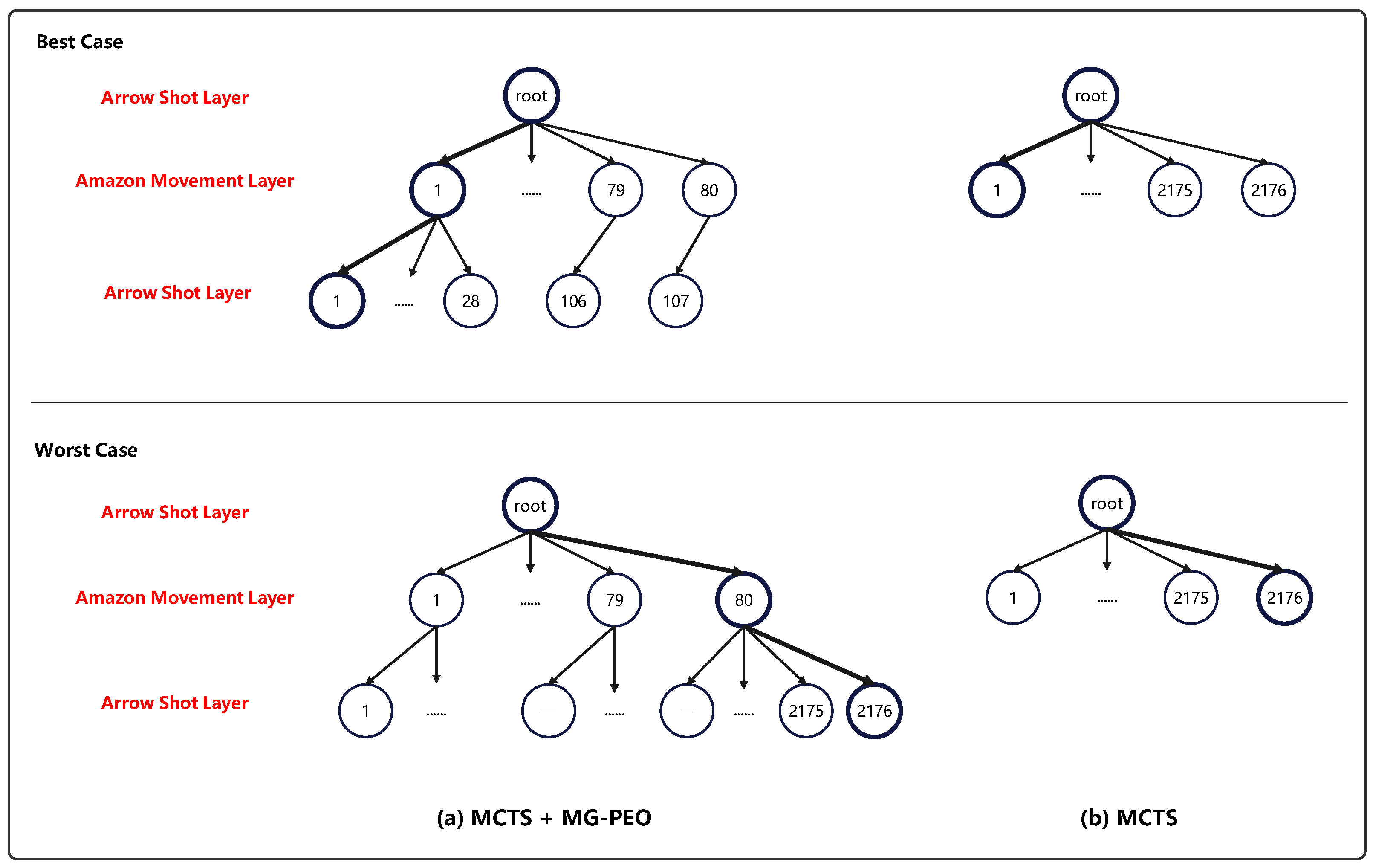

- We provide a performance analysis of the Move Groups strategy in Amazons. By defining a new criterion, the winning convergence distance, we explain why the strategy is highly efficient, and we also highlight its shortcomings. The Move Groups strategy refines the rules of the Amazons game by splitting each move into an Amazon movement and an arrow shot, and uses simulations to assign value scores to the resulting additional layer of nodes. By combining these value scores with a selection policy such as Upper Confidence Bounds applied to Trees (UCT) [15], the search can prune effectively and save time. However, the extra layer means that, in the worst case where no pruning occurs, more nodes must be traversed and evaluated, which can increase the time overhead. This overhead is incurred while the algorithm searches for a locally optimal solution.


- We propose using a Parallel Evaluation mechanism to address the potential shortcomings of the Move Groups strategy. By parallelizing the evaluation function for the Amazons game, we aim to accelerate the expansion process of the Monte Carlo tree search and minimize the likelihood of the Move Groups strategy falling into a local optimum.

2. Related Work

2.1. Amazons Playing Algorithm

2.2. Monte Carlo Tree Search Algorithm

2.3. Evaluation Function

3. Research Methodology

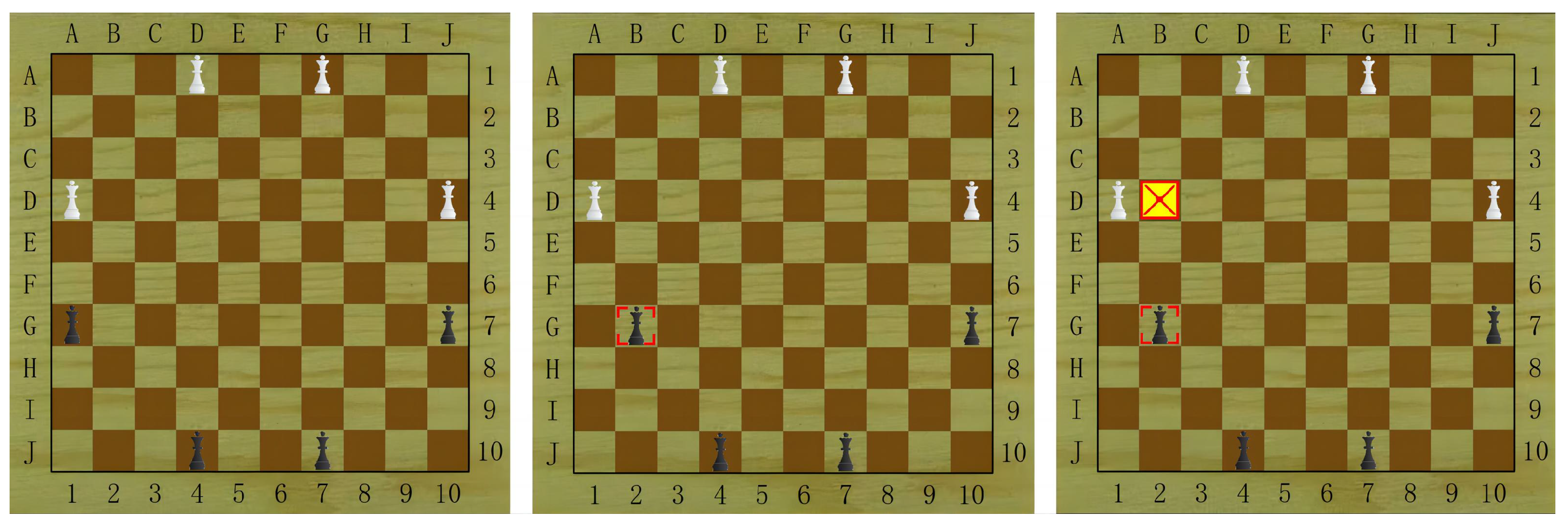

3.1. Overall Framework of Algorithm

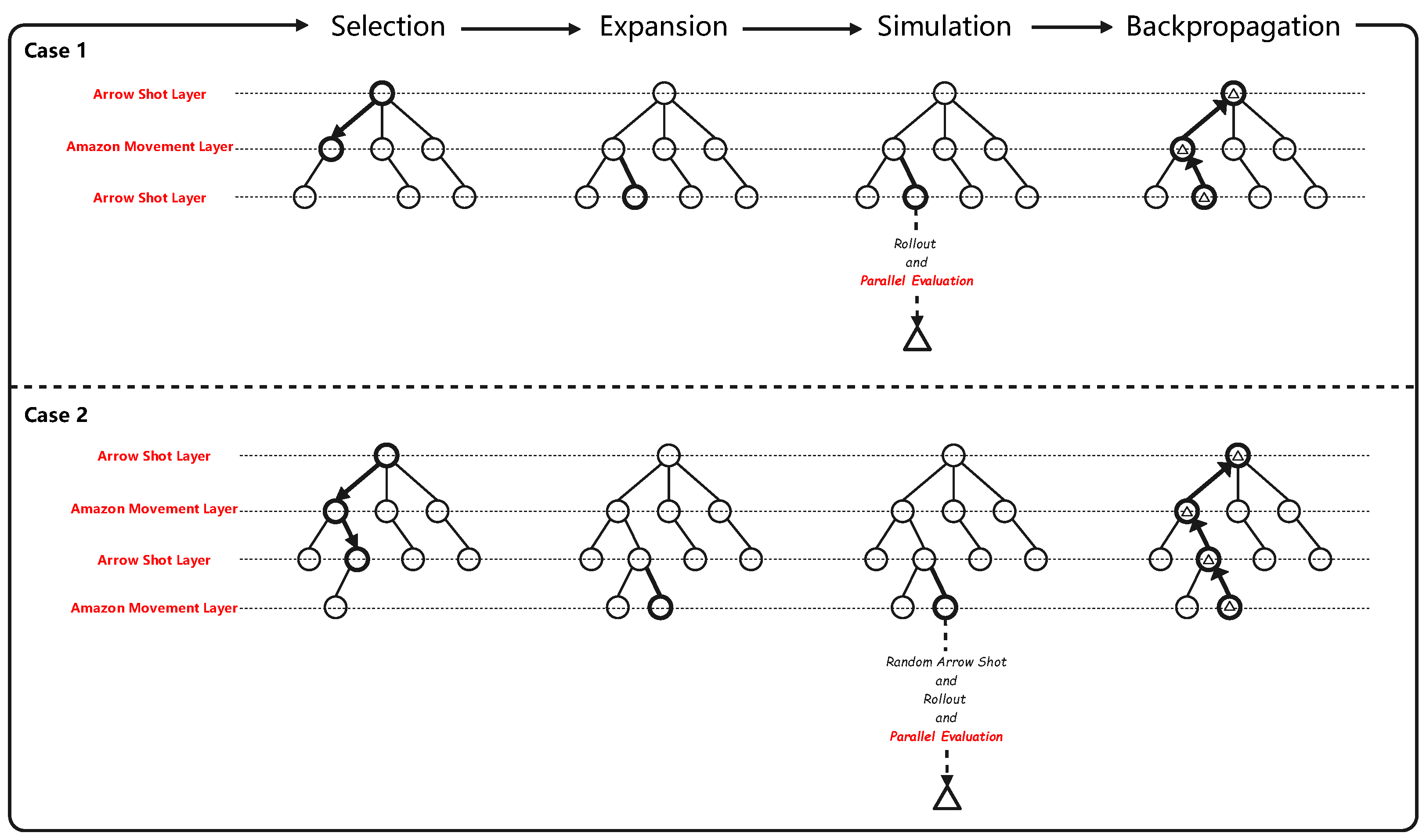

- (1)

- Selection: Starting from the root node, a tree policy is applied recursively to descend through the tree until an expandable node is selected. A node is expandable if it represents a non-terminal state and has unvisited children.

- (2)

- Expansion: Expand the tree by adding one (or more) child node(s), depending on the available operations.

- (3)

- Simulation: Run a simulation from a new node to produce results according to the default strategy.

- (4)

- Backpropagation: Back up the simulation results through the selected nodes to update their statistics.

| Algorithm 1: General Algorithm Framework |

| Input: s₀ (the current game state) |

| Output: Optimal decision |

| 1: function MCTSSEARCH(s₀) |

| 2: create root node v₀ with state s₀ |

| 3: while within the computational budget do |

| 4: vₗ ← Selection(v₀) |

| 5: vₗ ← Expansion(vₗ, sₗ) |

| 6: Δ ← Simulation(vₗ, sₗ) |

| 7: Backup(vₗ, Δ) |

| 8: return BESTCHILD(v₀) |
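As a rough illustration of the loop in Algorithm 1, the sketch below runs plain MCTS (UCT selection, one-node expansion, random simulation, backup) on a hypothetical toy game of Nim rather than Amazons, and without the MG-PEO enhancements. The class and function names, the exploration constant `c = 1.4`, the iteration count and the choice of the most-visited root child as the final decision are all assumptions of this sketch.

```python
import math
import random


class NimState:
    """Hypothetical toy game standing in for Amazons: players alternately
    remove 1 or 2 stones, and whoever takes the last stone wins."""

    def __init__(self, stones, player=0):
        self.stones = stones
        self.player = player  # player to move (0 or 1)

    def legal_moves(self):
        return [m for m in (1, 2) if m <= self.stones]

    def play(self, move):
        return NimState(self.stones - move, 1 - self.player)

    def is_terminal(self):
        return self.stones == 0

    def winner(self):
        # The player who just moved took the last stone.
        return 1 - self.player


class Node:
    def __init__(self, state, parent=None, move=None):
        self.state = state
        self.parent = parent
        self.move = move
        self.children = []
        self.untried = state.legal_moves()
        self.visits = 0     # N(v)
        self.value = 0.0    # Q(v), credited to the player who moved into v


def uct(child, parent_visits, c=1.4):
    if child.visits == 0:
        return math.inf  # unvisited children are tried first
    exploit = child.value / child.visits
    explore = c * math.sqrt(math.log(parent_visits) / child.visits)
    return exploit + explore


def selection(node):
    # Descend through fully expanded, non-terminal nodes using UCT.
    while not node.state.is_terminal() and not node.untried:
        parent = node
        node = max(parent.children, key=lambda ch: uct(ch, parent.visits))
    return node


def expansion(node, rng):
    # Add one untried child; terminal nodes are returned unchanged.
    if node.state.is_terminal():
        return node
    move = node.untried.pop(rng.randrange(len(node.untried)))
    child = Node(node.state.play(move), parent=node, move=move)
    node.children.append(child)
    return child


def simulation(state, rng):
    # Default policy: uniformly random moves until the game ends.
    while not state.is_terminal():
        state = state.play(rng.choice(state.legal_moves()))
    return state.winner()


def backup(node, winner):
    # Walk back to the root, updating N(v) and Q(v) on the way.
    while node is not None:
        node.visits += 1
        mover = 1 - node.state.player
        node.value += 1.0 if winner == mover else 0.0
        node = node.parent


def mcts_search(root_state, iterations=2000, seed=0):
    rng = random.Random(seed)
    root = Node(root_state)
    for _ in range(iterations):
        leaf = selection(root)
        child = expansion(leaf, rng)
        backup(child, simulation(child.state, rng))
    # Final decision: the most-visited child of the root.
    return max(root.children, key=lambda ch: ch.visits).move
```

With these settings, `mcts_search(NimState(4))` should settle on removing one stone, which leaves the opponent a losing pile of three.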

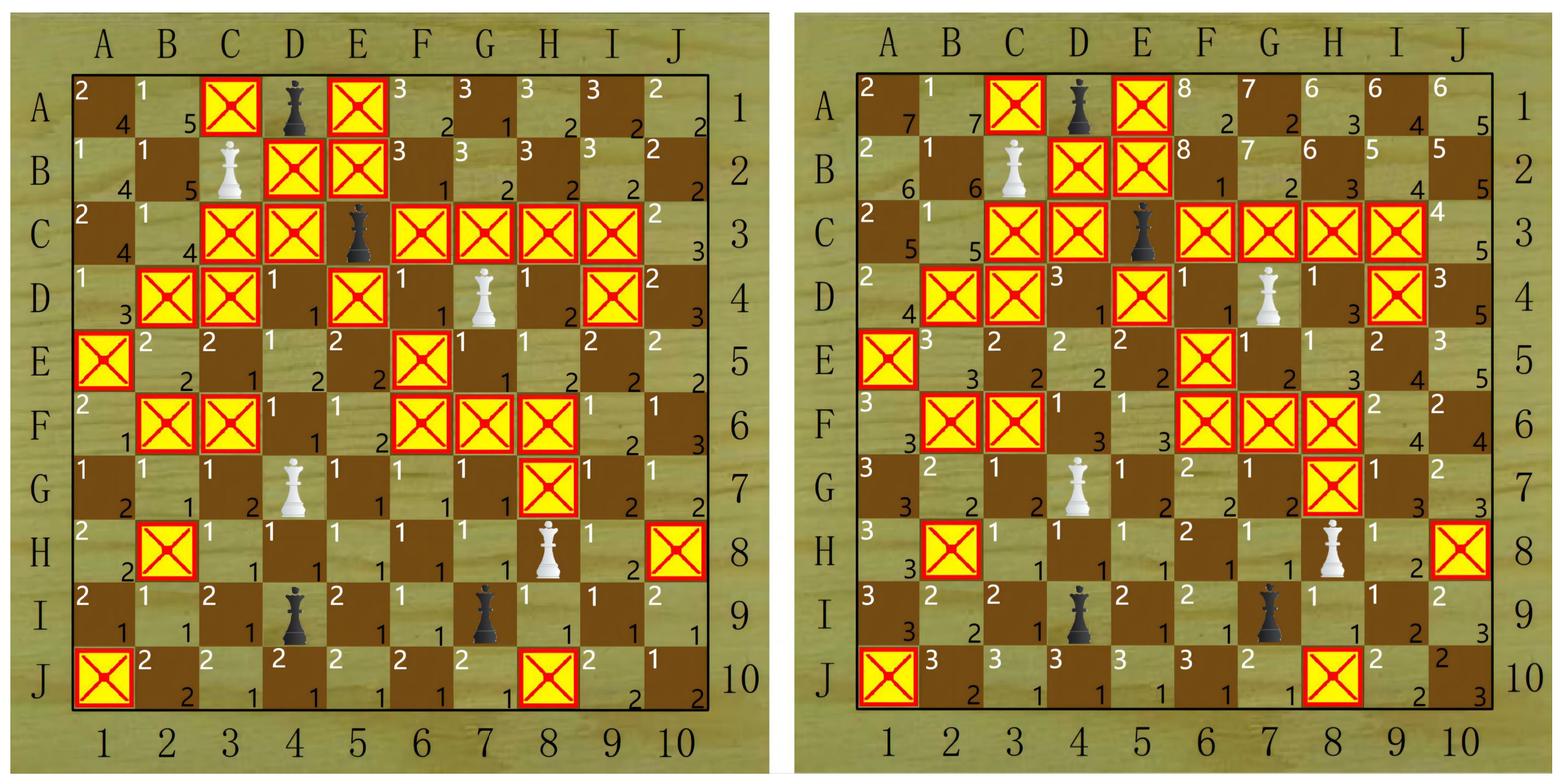

3.2. Move Groups Strategy in Algorithm

3.3. Parallel Evaluation Strategy in Algorithm

| Algorithm 2: SituationEvaluation |

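| Input: s (the current game state) |

| Output: Situation Evaluation Value |

| 1: function SituationEvaluation(s) |

| 2: t₁, t₂, p₁, p₂, m ← ParallelEvaluationMetrics(s) |

| 3: Value ← weighted sum of t₁, t₂, p₁, p₂, m using the weight coefficients of the current game stage |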

| 4: return Value |
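Assuming, as the stage-dependent weight table in this article suggests, that line 3 of Algorithm 2 is a linear combination of the five metrics, the final step can be sketched as follows. The metric order, the stage labels and the function name are assumptions of this sketch; only the weight values are copied from the table.

```python
# Weight coefficients per game stage, copied from the weight table in this
# article. Column order (an assumption of this sketch): territory factor 1,
# territory factor 2, position factor 1, position factor 2, mobility.
STAGE_WEIGHTS = {
    "opening": (0.14, 0.37, 0.13, 0.13, 0.20),
    "middle": (0.30, 0.25, 0.20, 0.20, 0.05),
    "ending": (0.80, 0.10, 0.05, 0.05, 0.00),
}


def situation_evaluation(metrics, stage):
    """Combine the five metrics from ParallelEvaluationMetrics into one value.

    `metrics` holds (t1, t2, p1, p2, m) in the same order as the weights.
    How the current stage is determined (for example by move count) is
    not specified here and is left to the caller.
    """
    weights = STAGE_WEIGHTS[stage]
    if len(metrics) != len(weights):
        raise ValueError("expected five metric values")
    return sum(w * x for w, x in zip(weights, metrics))
```

With every metric equal to 1, the middle and ending rows both give 1.00, while the opening row gives 0.97.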

- 1.

- “Shallow Rollout Strategy”: The utility value is calculated not only from the next move but also from the positions reached after randomly simulating several further moves. In this algorithm, we set the number of rollouts to two, with depths of 4 and 5. Specifically, after expanding a complete decision node (Amazon Movement plus Arrow Shot), we perform two rollouts from the current position. In each rollout, we simulate two steps, the opponent’s next decision (Amazon Movement plus Arrow Shot) and our subsequent decision (Amazon Movement plus Arrow Shot), to obtain a more forward-looking utility value. See the pseudo-code in Algorithm 3 for details, and Appendix A for an analysis of the relevant parameters.

- 2.

- “Tree-based Upper Confidence Interval”: As shown in Equation (8), the UCT value of a child v′ of the current node v, UCT(v′) = Q(v′)/N(v′) + C·√(ln N(v) / N(v′)), consists of two components. The first term, Q(v′)/N(v′), represents the utility value of the information in the constructed search tree, and the second term represents the exploration value of less-visited nodes. The constant C adjusts the trade-off between the two values. Note that for an unvisited child (N(v′) = 0) the exploration term is infinite, which ensures that every unvisited child of the current node is visited at least once.
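The shallow rollout described above can be sketched as a fixed number of random steps followed by a single call to the evaluation function. This is a minimal sketch, not the article's implementation: `random_step`, `evaluate` and `is_terminal` are hypothetical callables supplied by the caller, one step corresponds to one `depth ← depth - 1` decrement in Algorithm 3, and averaging the results of the depth-4 and depth-5 rollouts is an assumption, since the text does not state how the two values are combined.

```python
def shallow_rollout(state, depth, random_step, is_terminal):
    """Advance `state` by at most `depth` random steps, stopping early at a
    terminal position."""
    for _ in range(depth):
        if is_terminal(state):
            break
        state = random_step(state)
    return state


def shallow_rollout_value(state, evaluate, random_step, is_terminal,
                          depths=(4, 5)):
    """Run one independent rollout per depth and average the evaluations.

    Averaging the rollout values is an assumption of this sketch.
    """
    values = [evaluate(shallow_rollout(state, d, random_step, is_terminal))
              for d in depths]
    return sum(values) / len(values)
```

Keeping the rollouts this short bounds the cost of each simulation, so the evaluation function, not the playout, dominates the time per iteration.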

| Algorithm 3: MCTS with the MG-PEO strategy |

| 1: function Selection(v) |

| 2: while v is nonTerminal do |

| 3: if v is not fully expanded then |

| 4: return v |

| 5: else |

| 6: v ← BESTCHILD(v) |

| 7: return v |

| 8: function Expansion(v, &s) |

| 9: if v is an Amazon Movement Node then |

| 10: v′ ← randomly create an Arrow Shot Node |

| 11: else if v is an Arrow Shot Node then |

| 12: v′ ← randomly create an Amazon Movement Node |

| 13: s ← update the state of the board |

| 14: return v′ |

| 15: function Simulation(v, s) |

| 16: S ← s |

| 17: //* depth = 4 and 5 (two independent rollouts) |

| 18: S ← Rollout(S, depth) |

| 19: Δ ← SituationEvaluation(S) |

| 20: return Δ |

| 21: function Rollout(S, depth) |

| 22: repeat |

| 23: if isTerminal(S) then |

| 24: break |

| 25: if v is the Amazon Movement Node then |

| 26: a ← RandomArrow(S) |

| 27: S ← doAction(v, a) |

| 28: depth ← depth - 1 |

| 29: else if v is the Arrow Shot Node then |

| 30: m ← parent of v |

| 31: S ← doAction(m, v) |

| 32: depth ← depth - 1 |

| 33: until depth = 0 or gameover |

| 34: return S |

| 35: function Backup(v, Δ) |

| 36: N(v) ← N(v) + 1 |

| 37: Q(v) ← Q(v) + Δ |

| 38: v ← parent of v |

| 39: function BESTCHILD(v) |

| 40: return argmax over children v′ of v of Q(v′)/N(v′) + C·√(ln N(v) / N(v′)) |
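Algorithm 3 alternates between Amazon Movement nodes and Arrow Shot nodes. The sketch below shows, under simplified assumptions, how grouping flat (movement, arrow) pairs by their movement creates that extra layer, and why it can cost more nodes in the worst case but fewer when only some groups are expanded. The move notation (for example `"a1-a4"`) and the helper names are hypothetical.

```python
from collections import defaultdict


def group_moves(moves):
    """Group flat (movement, arrow) pairs by their Amazon movement.

    Each distinct movement becomes one node of the extra Move Groups layer;
    its children are the arrow shots available after that movement.
    """
    groups = defaultdict(list)
    for movement, arrow in moves:
        groups[movement].append(arrow)
    return dict(groups)


def tree_sizes(moves, expanded_movements=None):
    """Return (flat_size, grouped_size) for one decision.

    flat_size:    children of a plain MCTS node (one per complete move).
    grouped_size: movement nodes plus the arrow nodes under the movements
                  that were actually expanded (all of them by default,
                  which is the worst case with no pruning).
    """
    groups = group_moves(moves)
    if expanded_movements is None:
        expanded_movements = groups.keys()
    arrows = sum(len(groups[m]) for m in expanded_movements)
    return len(moves), len(groups) + arrows
```

For a decision with N complete moves spread over G distinct movements, the fully expanded grouped tree has G + N nodes below the decision node instead of N, which is the worst-case overhead discussed in the contributions; expanding only promising movements keeps the count well below N.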

4. MG-PEO Performance Analysis

4.1. Performance of the Move Groups Strategy in Amazons

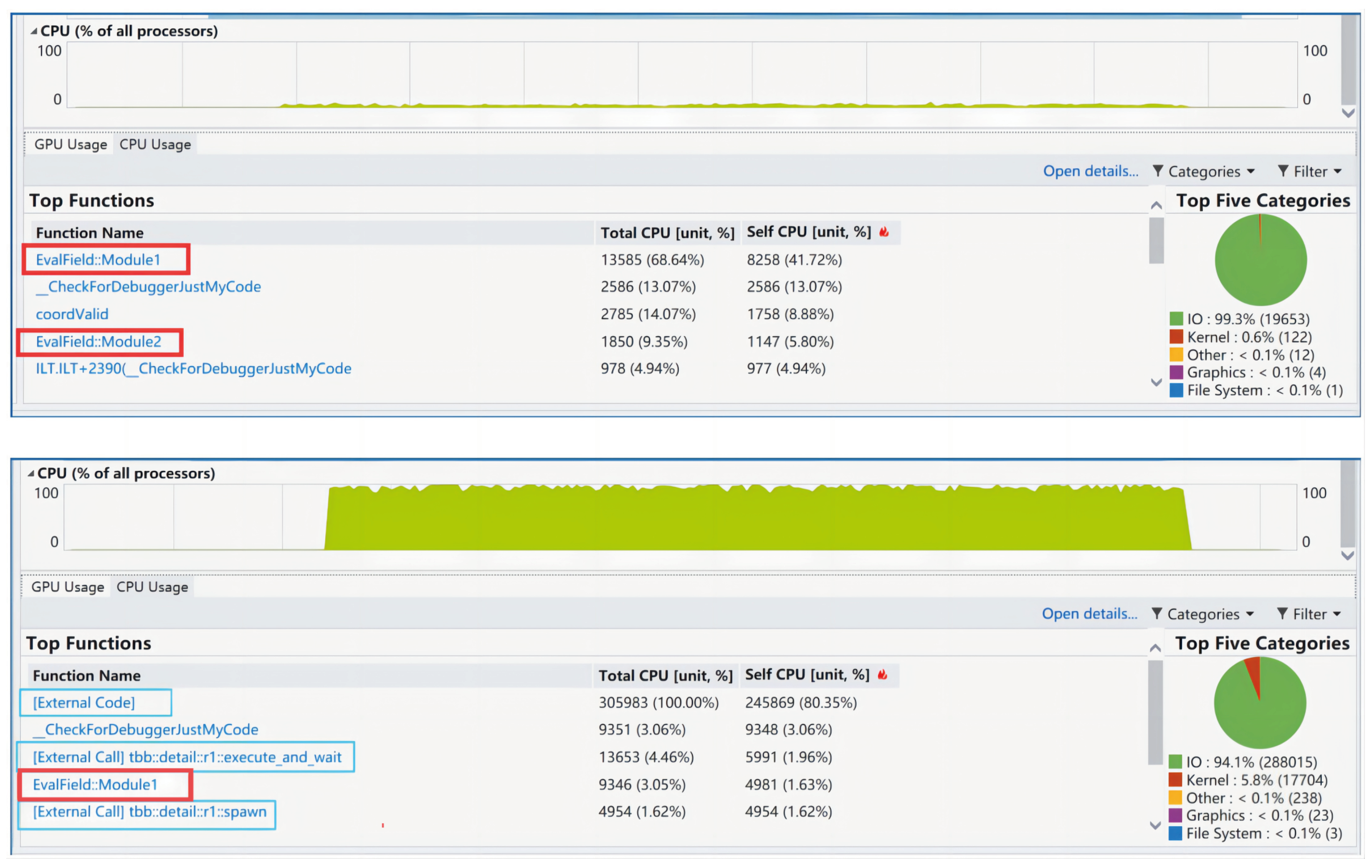

4.2. Performance of Parallel Evaluation Strategy

| Algorithm 4: Parallel Evaluation Metrics |

| Input: s (the current game state) |

| Output: t₁, t₂, p₁, p₂, m |

| 1: function ParallelEvaluationMetrics(s) |

| 2: //* The Territory() and Position() metrics are computed by calling the same independent function four times, based on the King Move and Queen Move methods, once for black and once for white. |

| 3: //* We optimize its external calls in parallel. |

| 4: Module1 = lambda (range) |

| 5: for i in range: |

| 6: ← |

| 7: ← |

| 8: CalTP(,) |

| 9: parallel_for(block_range(0,2),Module1) |

| 10: //* The Mobility() metric is computed by a single function whose internal logic processes each board position (GridSize = 100) independently. |

| 11: //* We optimize its inner loop processing logic in parallel. |

| 12: Module2 = lambda (range) |

| 13: for i in range: |

| 14: ← |

| 15: ← |

| 16: CalEveryBoardPosition() |

| 17: parallel_for(block_range(0,GridSize),Module2) |
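The two parallel regions of Algorithm 4 can be mirrored with a thread pool. This is a sketch of the decomposition only: `cal_tp` and `cal_cell` are hypothetical stand-ins for `CalTP` and `CalEveryBoardPosition`, and each of the four King/Queen × black/white calls is submitted as its own task (an assumption; the pseudo-code splits this work into two blocks). In CPython, threads do not speed up pure-Python CPU-bound code because of the global interpreter lock; the article's implementation appears to rely on Intel TBB `parallel_for` in C++.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

GRID_SIZE = 100  # board positions processed by Module2 (10 x 10 board)


def parallel_evaluation_metrics(board, cal_tp, cal_cell, workers=4):
    """Run the two independent workloads of Algorithm 4 on a thread pool.

    cal_tp(method, color)  -> territory/position data for one side and one
                              move method (hypothetical stand-in for CalTP).
    cal_cell(board, index) -> mobility contribution of one board position
                              (hypothetical stand-in for CalEveryBoardPosition).
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Module1: four independent calls, King/Queen move x black/white.
        combos = list(product(("king", "queen"), ("black", "white")))
        tp = dict(zip(combos, pool.map(lambda mc: cal_tp(*mc), combos)))
        # Module2: each board position is evaluated independently.
        mobility = sum(pool.map(lambda i: cal_cell(board, i), range(GRID_SIZE)))
    return tp, mobility
```

Combining the per-side results into the five metrics t₁, t₂, p₁, p₂ and m is deliberately left out, since the article does not spell that step out.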

5. Experiment and Results

5.1. Experimental Environment Information

5.2. Quantitative and Qualitative Experiments

5.2.1. Winning Percentage Statistics and Analysis

5.2.2. Winning Convergence Distance Visualization and Analysis

5.2.3. Significance Test

5.3. Ablation Experiment

5.4. Generalization Analysis

- 1.

- Move Groups strategy: In some games the branching factor is very large, but many moves are similar. The Move Groups strategy creates an additional decision layer in which related actions are grouped together, allowing the large branching factor to be handled more efficiently. The strategy has also proven beneficial in other settings, such as Go [39] and multi-armed bandit problems with move groups [40]. We believe it merits further exploration and investigation.

- 2.

- Parallel Evaluation strategy: Because the strategy only parallelizes the independent parts of the evaluation function, it can in principle accelerate any game whose evaluation decomposes into independent computations, from simple rule-based games such as Gomoku to more complex games such as Chess and Go, as well as modern board games. Its core advantage is that a faster evaluation lets the search explore more of the decision tree within the same time budget, a common requirement across board games.

6. Conclusions and Future Works

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Rollout Depth | 1 | 2 | 3 | 4 | 5 | 6 | 7–10 | 4 and 5 |

|---|---|---|---|---|---|---|---|---|

| Match Point Rounds | 40.25 | 40.14 | 40.0 | 39.9 | 39.84 | 40.04 | 40.1 | 39.68 |

Appendix B

Appendix C

| ID | First Player | Second Player | Result | Match Point Rounds | Winner |

|---|---|---|---|---|---|

| 1 | Amazons based on Qt’s QMainWindow framework | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 2 | Divine Move (ours) | Amazons based on Qt’s QMainWindow framework | 3:0 | 39 | Divine Move (ours) |

| 3 | Gomoku | Divine Move (ours) | 0:3 | 39 | Divine Move (ours) |

| 4 | Divine Move (ours) | Gomoku | 3:0 | 36 | Divine Move (ours) |

| 5 | Othello | Divine Move (ours) | 0:3 | 35 | Divine Move (ours) |

| 6 | Divine Move (ours) | Othello | 3:0 | 33 | Divine Move (ours) |

| 7 | God’s Algorithm | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 8 | Divine Move (ours) | God’s Algorithm | 3:0 | 42 | Divine Move (ours) |

| 9 | Amazons Gaming System | Divine Move (ours) | 0:3 | 39 | Divine Move (ours) |

| 10 | Divine Move (ours) | Amazons Gaming System | 3:0 | 37 | Divine Move (ours) |

| 11 | Advance Little Queens | Divine Move (ours) | 0:3 | 40 | Divine Move (ours) |

| 12 | Divine Move (ours) | Advance Little Queens | 3:0 | 37 | Divine Move (ours) |

| 13 | Checkmate | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 14 | Divine Move (ours) | Checkmate | 3:0 | 38 | Divine Move (ours) |

| 15 | Cliffhanger Amazons | Divine Move (ours) | 0:3 | 40 | Divine Move (ours) |

| 16 | Divine Move (ours) | Cliffhanger Amazons | 3:0 | 37 | Divine Move (ours) |

| 17 | Qi Kaide’s Victory | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 18 | Divine Move (ours) | Qi Kaide’s Victory | 3:0 | 36 | Divine Move (ours) |

| 19 | Super Amazon | Divine Move (ours) | 0:3 | 39 | Divine Move (ours) |

| 20 | Divine Move (ours) | Super Amazon | 3:0 | 36 | Divine Move (ours) |

| 21 | God’s Algorithm | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 22 | Divine Move (ours) | God’s Algorithm | 3:0 | 37 | Divine Move (ours) |

| 23 | Traveler Amazons | Divine Move (ours) | 0:3 | 40 | Divine Move (ours) |

| 24 | Divine Move (ours) | Traveler Amazons | 3:0 | 35 | Divine Move (ours) |

| 25 | Amazon Supreme Chess | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 26 | Divine Move (ours) | Amazon Supreme Chess | 3:0 | 39 | Divine Move (ours) |

| 27 | Chess Troops Falling from Heaven | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 28 | Divine Move (ours) | Chess Troops Falling from Heaven | 3:0 | 41 | Divine Move (ours) |

| 29 | Clove Amazons | Divine Move (ours) | 0:3 | 40 | Divine Move (ours) |

| 30 | Divine Move (ours) | Clove Amazons | 3:0 | 37 | Divine Move (ours) |

| 31 | Shao Guang’s Amazons | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 32 | Divine Move (ours) | Shao Guang’s Amazons | 3:0 | 39 | Divine Move (ours) |

| 33 | AI plays chess | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 34 | Divine Move (ours) | AI plays chess | 3:0 | 39 | Divine Move (ours) |

| 35 | Canopus One | Divine Move (ours) | 0:3 | 39 | Divine Move (ours) |

| 36 | Divine Move (ours) | Canopus One | 3:0 | 35 | Divine Move (ours) |

| 37 | Win the Opening Team | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 38 | Divine Move (ours) | Win the Opening Team | 3:0 | 39 | Divine Move (ours) |

| 39 | Wukong Amazons | Divine Move (ours) | 0:3 | 34 | Divine Move (ours) |

| 40 | Divine Move (ours) | Wukong Amazons | 3:0 | 30 | Divine Move (ours) |

| 41 | Traveler Amazons | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 42 | Divine Move (ours) | Traveler Amazons | 3:0 | 40 | Divine Move (ours) |

| 43 | Yue | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 44 | Divine Move (ours) | Yue | 3:0 | 40 | Divine Move (ours) |

| 45 | Final Amazon | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 46 | Divine Move (ours) | Final Amazon | 3:0 | 38 | Divine Move (ours) |

| 47 | Information University - Monte Carlo | Divine Move (ours) | 1:2 | 42 | Divine Move (ours) |

| 48 | Divine Move (ours) | Information University - Monte Carlo | 3:0 | 40 | Divine Move (ours) |

| 49 | Haha Hi | Divine Move (ours) | 1:2 | 42 | Divine Move (ours) |

| 50 | Divine Move (ours) | Haha Hi | 3:0 | 39 | Divine Move (ours) |

| 51 | Dragon Victory | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 52 | Divine Move (ours) | Dragon Victory | 3:0 | 37 | Divine Move (ours) |

| 53 | Super Amazon | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 54 | Divine Move (ours) | Super Amazon | 3:0 | 38 | Divine Move (ours) |

| 55 | Base Pairing Team | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 56 | Divine Move (ours) | Base Pairing Team | 3:0 | 41 | Divine Move (ours) |

| 57 | Pass the Level | Divine Move (ours) | 3:0 | 47 | Pass the Level |

| 58 | Divine Move (ours) | Pass the Level | 0:3 | 47 | Pass the Level |

| 59 | Dalian Jiaotong University Amazons Team 1 | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 60 | Divine Move (ours) | Dalian Jiaotong University Amazons Team 1 | 3:0 | 39 | Divine Move (ours) |

| 61 | Get Ashore | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 62 | Divine Move (ours) | Get Ashore | 3:0 | 40 | Divine Move (ours) |

| 63 | Empty | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 64 | Divine Move (ours) | Empty | 3:0 | 41 | Divine Move (ours) |

| 65 | Bull | Divine Move (ours) | 0:3 | 42 | Divine Move (ours) |

| 66 | Divine Move (ours) | Bull | 3:0 | 40 | Divine Move (ours) |

| 67 | Wukong Amazons | Divine Move (ours) | 1:2 | 34 | Divine Move (ours) |

| 68 | Divine Move (ours) | Wukong Amazons | 3:0 | 31 | Divine Move (ours) |

| 69 | Gangzi Fans Support Team | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 70 | Divine Move (ours) | Gangzi Fans Support Team | 3:0 | 39 | Divine Move (ours) |

| 71 | Thai Pants Spicy | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 72 | Divine Move (ours) | Thai Pants Spicy | 3:0 | 41 | Divine Move (ours) |

| 73 | Green Grass Cake | Divine Move (ours) | 0:3 | 34 | Divine Move (ours) |

| 74 | Divine Move (ours) | Green Grass Cake | 3:0 | 30 | Divine Move (ours) |

| 75 | Failed to Grab the Air and Didn’t Grab the Plant Brain Hypoxia Team | Divine Move (ours) | 0:3 | 40 | Divine Move (ours) |

| 76 | Divine Move (ours) | Failed to Grab the Air and Didn’t Grab the Plant Brain Hypoxia Team | 3:0 | 37 | Divine Move (ours) |

| 77 | DG | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 78 | Divine Move (ours) | DG | 3:0 | 39 | Divine Move (ours) |

| 79 | Why is Tang Yang a God | Divine Move (ours) | 0:3 | 42 | Divine Move (ours) |

| 80 | Divine Move (ours) | Why is Tang Yang a God | 3:0 | 39 | Divine Move (ours) |

| 81 | Dream Team | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 82 | Divine Move (ours) | Dream Team | 3:0 | 39 | Divine Move (ours) |

| 83 | Horse Face Skirt Daily and Easy to Wear | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 84 | Divine Move (ours) | Horse Face Skirt Daily and Easy to Wear | 3:0 | 40 | Divine Move (ours) |

| 85 | Genshin Impact Expert | Divine Move (ours) | 0:3 | 41 | Divine Move (ours) |

| 86 | Divine Move (ours) | Genshin Impact Expert | 3:0 | 37 | Divine Move (ours) |

| 87 | Code Apprentice | Divine Move (ours) | 0:3 | 42 | Divine Move (ours) |

| 88 | Divine Move (ours) | Code Apprentice | 3:0 | 41 | Divine Move (ours) |

| 89 | Amazon Drift Notes | Divine Move (ours) | 0:3 | 39 | Divine Move (ours) |

| 90 | Divine Move (ours) | Amazon Drift Notes | 3:0 | 37 | Divine Move (ours) |

| 91 | Love Will Disappear, Right? | Divine Move (ours) | 0:3 | 38 | Divine Move (ours) |

| 92 | Divine Move (ours) | Love Will Disappear, Right? | 3:0 | 33 | Divine Move (ours) |

| 93 | Little Su and the Cat | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 94 | Divine Move (ours) | Little Su and the Cat | 3:0 | 42 | Divine Move (ours) |

| 95 | Don’t Want Marla | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 96 | Divine Move (ours) | Don’t Want Marla | 3:0 | 43 | Divine Move (ours) |

| 97 | Parameter Adjustment Team | Divine Move (ours) | 0:3 | 44 | Divine Move (ours) |

| 98 | Divine Move (ours) | Parameter Adjustment Team | 3:0 | 41 | Divine Move (ours) |

| 99 | AlphaAmazon | Divine Move (ours) | 0:3 | 43 | Divine Move (ours) |

| 100 | Divine Move (ours) | AlphaAmazon | 3:0 | 42 | Divine Move (ours) |

| ID | First Player | Second Player | Result | Match Point Rounds | Winner |

|---|---|---|---|---|---|

| 1 | Amazons based on Qt’s QMainWindow framework | MCTS (baseline) | 1:2 | 43 | MCTS (baseline) |

| 2 | MCTS (baseline) | Amazons based on Qt’s QMainWindow framework | 3:0 | 41 | MCTS (baseline) |

| 3 | Gomoku | MCTS (baseline) | 3:0 | 47 | Gomoku |

| 4 | MCTS (baseline) | Gomoku | 3:0 | 45 | MCTS (baseline) |

| 5 | Othello | MCTS (baseline) | 2:1 | 47 | Othello |

| 6 | MCTS (baseline) | Othello | 2:1 | 44 | MCTS (baseline) |

| 7 | God’s Algorithm | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 8 | MCTS (baseline) | God’s Algorithm | 3:0 | 40 | MCTS (baseline) |

| 9 | Amazons Gaming System | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 10 | MCTS (baseline) | Amazons Gaming System | 3:0 | 40 | MCTS (baseline) |

| 11 | Advance Little Queens | MCTS (baseline) | 3:0 | 47 | Advance Little Queens |

| 12 | MCTS (baseline) | Advance Little Queens | 3:0 | 43 | MCTS (baseline) |

| 13 | Checkmate | MCTS (baseline) | 2:1 | 47 | Checkmate |

| 14 | MCTS (baseline) | Checkmate | 3:0 | 40 | MCTS (baseline) |

| 15 | Cliffhanger Amazons | MCTS (baseline) | 3:0 | 47 | Cliffhanger Amazons |

| 16 | MCTS (baseline) | Cliffhanger Amazons | 3:0 | 44 | MCTS (baseline) |

| 17 | Qi Kaide’s Victory | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 18 | MCTS (baseline) | Qi Kaide’s Victory | 3:0 | 41 | MCTS (baseline) |

| 19 | Super Amazon | MCTS (baseline) | 2:1 | 47 | Super Amazon |

| 20 | MCTS (baseline) | Super Amazon | 2:1 | 43 | MCTS (baseline) |

| 21 | God’s Algorithm | MCTS (baseline) | 3:0 | 47 | God’s Algorithm |

| 22 | MCTS (baseline) | God’s Algorithm | 2:1 | 40 | MCTS (baseline) |

| 23 | Traveler Amazons | MCTS (baseline) | 2:1 | 47 | Traveler Amazons |

| 24 | MCTS (baseline) | Traveler Amazons | 3:0 | 42 | MCTS (baseline) |

| 25 | Amazon Supreme Chess | MCTS (baseline) | 0:3 | 40 | MCTS (baseline) |

| 26 | MCTS (baseline) | Amazon Supreme Chess | 3:0 | 41 | MCTS (baseline) |

| 27 | Chess Troops Falling from Heaven | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 28 | MCTS (baseline) | Chess Troops Falling from Heaven | 3:0 | 39 | MCTS (baseline) |

| 29 | Clove Amazons | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 30 | MCTS (baseline) | Clove Amazons | 3:0 | 40 | MCTS (baseline) |

| 31 | Shao Guang’s Amazons | MCTS (baseline) | 1:2 | 43 | MCTS (baseline) |

| 32 | MCTS (baseline) | Shao Guang’s Amazons | 3:0 | 40 | MCTS (baseline) |

| 33 | AI plays chess | MCTS (baseline) | 2:1 | 47 | AI plays chess |

| 34 | MCTS (baseline) | AI plays chess | 3:0 | 42 | MCTS (baseline) |

| 35 | Canopus One | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 36 | MCTS (baseline) | Canopus One | 2:1 | 40 | MCTS (baseline) |

| 37 | Win the Opening Team | MCTS (baseline) | 3:0 | 47 | Win the Opening Team |

| 38 | MCTS (baseline) | Win the Opening Team | 3:0 | 44 | MCTS (baseline) |

| 39 | Wukong Amazons | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 40 | MCTS (baseline) | Wukong Amazons | 3:0 | 39 | MCTS (baseline) |

| 41 | Traveler Amazons | MCTS (baseline) | 0:3 | 41 | MCTS (baseline) |

| 42 | MCTS (baseline) | Traveler Amazons | 3:0 | 36 | MCTS (baseline) |

| 43 | Yue | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 44 | MCTS (baseline) | Yue | 3:0 | 41 | MCTS (baseline) |

| 45 | Final Amazon | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 46 | MCTS (baseline) | Final Amazon | 3:0 | 37 | MCTS (baseline) |

| 47 | Information University - Monte Carlo | MCTS (baseline) | 3:0 | 47 | Information University - Monte Carlo |

| 48 | MCTS (baseline) | Information University - Monte Carlo | 0:3 | 47 | Information University - Monte Carlo |

| 49 | Haha Hi | MCTS (baseline) | 3:0 | 47 | Haha Hi |

| 50 | MCTS (baseline) | Haha Hi | 3:0 | 41 | MCTS (baseline) |

| 51 | Dragon Victory | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 52 | MCTS (baseline) | Dragon Victory | 3:0 | 38 | MCTS (baseline) |

| 53 | Super Amazon | MCTS (baseline) | 0:3 | 41 | MCTS (baseline) |

| 54 | MCTS (baseline) | Super Amazon | 3:0 | 38 | MCTS (baseline) |

| 55 | Base Pairing Team | MCTS (baseline) | 3:0 | 45 | Base Pairing Team |

| 56 | MCTS (baseline) | Base Pairing Team | 0:3 | 42 | Base Pairing Team |

| 57 | Pass the Level | MCTS (baseline) | 3:0 | 47 | Pass the Level |

| 58 | MCTS (baseline) | Pass the Level | 0:3 | 47 | Pass the Level |

| 59 | Dalian Jiaotong University Amazons Team 1 | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 60 | MCTS (baseline) | Dalian Jiaotong University Amazons Team 1 | 3:0 | 42 | MCTS (baseline) |

| 61 | Get Ashore | MCTS (baseline) | 3:0 | 47 | Get Ashore |

| 62 | MCTS (baseline) | Get Ashore | 0:3 | 47 | Get Ashore |

| 63 | Empty | MCTS (baseline) | 0:3 | 45 | MCTS (baseline) |

| 64 | MCTS (baseline) | Empty | 3:0 | 41 | MCTS (baseline) |

| 65 | Bull | MCTS (baseline) | 0:3 | 45 | MCTS (baseline) |

| 66 | MCTS (baseline) | Bull | 3:0 | 40 | MCTS (baseline) |

| 67 | Wukong Amazons | MCTS (baseline) | 2:1 | 47 | Wukong Amazons |

| 68 | MCTS (baseline) | Wukong Amazons | 3:0 | 39 | MCTS (baseline) |

| 69 | Gangzi Fans Support Team | MCTS (baseline) | 3:0 | 44 | Gangzi Fans Support Team |

| 70 | MCTS (baseline) | Gangzi Fans Support Team | 1:2 | 41 | Gangzi Fans Support Team |

| 71 | Thai Pants Spicy | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 72 | MCTS (baseline) | Thai Pants Spicy | 3:0 | 40 | MCTS (baseline) |

| 73 | Green Grass Cake | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 74 | MCTS (baseline) | Green Grass Cake | 3:0 | 37 | MCTS (baseline) |

| 75 | Failed to Grab the Air and Didn’t Grab the Plant Brain Hypoxia Team | MCTS (baseline) | 0:3 | 41 | MCTS (baseline) |

| 76 | MCTS (baseline) | Failed to Grab the Air and Didn’t Grab the Plant Brain Hypoxia Team | 3:0 | 40 | MCTS (baseline) |

| 77 | DG | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 78 | MCTS (baseline) | DG | 3:0 | 39 | MCTS (baseline) |

| 79 | Why is Tang Yang a God | MCTS (baseline) | 2:1 | 47 | Why is Tang Yang a God |

| 80 | MCTS (baseline) | Why is Tang Yang a God | 3:0 | 44 | MCTS (baseline) |

| 81 | Dream Team | MCTS (baseline) | 0:3 | 43 | MCTS (baseline) |

| 82 | MCTS (baseline) | Dream Team | 3:0 | 44 | MCTS (baseline) |

| 83 | Horse Face Skirt Daily and Easy to Wear | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 84 | MCTS (baseline) | Horse Face Skirt Daily and Easy to Wear | 3:0 | 40 | MCTS (baseline) |

| 85 | Genshin Impact Expert | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 86 | MCTS (baseline) | Genshin Impact Expert | 3:0 | 39 | MCTS (baseline) |

| 87 | Code Apprentice | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 88 | MCTS (baseline) | Code Apprentice | 3:0 | 40 | MCTS (baseline) |

| 89 | Amazon Drift Notes | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 90 | MCTS (baseline) | Amazon Drift Notes | 3:0 | 42 | MCTS (baseline) |

| 91 | Love Will Disappear, Right? | MCTS (baseline) | 0:3 | 40 | MCTS (baseline) |

| 92 | MCTS (baseline) | Love Will Disappear, Right? | 3:0 | 38 | MCTS (baseline) |

| 93 | Little Su and the Cat | MCTS (baseline) | 0:3 | 44 | MCTS (baseline) |

| 94 | MCTS (baseline) | Little Su and the Cat | 3:0 | 40 | MCTS (baseline) |

| 95 | Don’t Want Marla | MCTS (baseline) | 0:3 | 41 | MCTS (baseline) |

| 96 | MCTS (baseline) | Don’t Want Marla | 3:0 | 37 | MCTS (baseline) |

| 97 | Parameter Adjustment Team | MCTS (baseline) | 0:3 | 42 | MCTS (baseline) |

| 98 | MCTS (baseline) | Parameter Adjustment Team | 3:0 | 39 | MCTS (baseline) |

| 99 | AlphaAmazon | MCTS (baseline) | 0:3 | 41 | MCTS (baseline) |

| 100 | MCTS (baseline) | AlphaAmazon | 0:3 | 47 | AlphaAmazon |

References

1. Li, R.; Gao, M. Amazons search algorithm design based on CNN model. Digit. Technol. Appl. 2022, 40, 164–166.

2. Guo, Q.; Li, S.; Bao, H. Research on evaluation function computer game of Amazon. Comput. Eng. Appl. 2012, 48, 50–54.

3. Guo, T.; Qiu, H.; Tong, B.; Wang, Y. Optimization and Comparison of Multiple Game Algorithms in Amazons. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 6299–6304.

4. Quan, J.; Qiu, H.; Wang, Y.; Li, F.; Qiu, S. Application of UCT technologies for computer games of Amazon. In Proceedings of the 2016 Chinese Control and Decision Conference (CCDC), Yinchuan, China, 28–30 May 2016; pp. 6896–6899.

5. Ju, J.; Qiu, H.; Wang, F.; Wang, X.; Wang, Y. Research on Thread Optimization and Opening Library Based on Parallel PVS Algorithm in Amazons. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 28–30 May 2021; pp. 2207–2212.

6. Li, Z.; Ning, C.; Cao, J.; Li, Z. Amazons Based on UCT-PVS Hybrid Algorithm. In Proceedings of the 2021 33rd Chinese Control and Decision Conference (CCDC), Kunming, China, 28–30 May 2021; pp. 2179–2183.

7. Ding, M.; Bo, J.; Qi, Y.; Fu, Y.; Li, S. Design of Amazons Game System Based on Reinforcement Learning. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 6337–6342.

8. Tong, B.; Qiu, H.; Guo, T.; Wang, Y. Research and Application of Parallel Computing of PVS Algorithm Based on Amazon Human-Machine Game. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 6293–6298.

9. Chen, X.; Yang, L. Research on evaluation function in Amazons. Comput. Knowl. Technol. 2019, 15, 224–226.

10. Wang, C.; Ding, M. Interface design and implementation of personalized Amazons. Intell. Comput. Appl. 2017, 7, 78–80.

11. Zhang, L. Research on Amazons Game System Based on Minimax Search Algorithm. Master’s Thesis, Northeast University, Shenyang, China, 2010.

12. Metropolis, N.; Ulam, S. The Monte Carlo Method. J. Am. Stat. Assoc. 1949, 44, 335–341.

13. Coulom, R. Efficient Selectivity and Backup Operators in Monte-Carlo Tree Search. In Proceedings of the Computers and Games, Turin, Italy, 1–5 April 2007; pp. 72–83.

14. Gelly, S.; Kocsis, L.; Schoenauer, M.; Sebag, M.; Silver, D.; Szepesvári, C.; Teytaud, O. The grand challenge of computer Go: Monte Carlo tree search and extensions. Commun. ACM 2012, 55, 106–113.

15. Gelly, S.; Silver, D. Monte-Carlo tree search and rapid action value estimation in computer Go. Artif. Intell. 2011, 175, 1856–1875.

16. Vinyals, O.; Babuschkin, I.; Czarnecki, W.M.; Mathieu, M.; Dudzik, A.; Chung, J.; Choi, D.H.; Powell, R.; Ewalds, T.; Georgiev, P.; et al. Grandmaster level in StarCraft II using multi-agent reinforcement learning. Nature 2019, 575, 350–354.

17. Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the game of Go with deep neural networks and tree search. Nature 2016, 529, 484–489.

18. Berner, C.; Brockman, G.; Chan, B.; Cheung, V.; Dębiak, P.; Dennison, C.; Farhi, D.; Fischer, Q.; Hashme, S.; Hesse, C.; et al. Dota 2 with Large Scale Deep Reinforcement Learning. arXiv 2019, arXiv:1912.06680.

19. Browne, C.B.; Powley, E.; Whitehouse, D.; Lucas, S.M.; Cowling, P.I.; Rohlfshagen, P.; Tavener, S.; Perez, D.; Samothrakis, S.; Colton, S. A Survey of Monte Carlo Tree Search Methods. IEEE Trans. Comput. Intell. AI Games 2012, 4, 1–43.

20. Kloetzer, J.; Iida, H.; Bouzy, B. The Monte-Carlo Approach in Amazons. In Proceedings of the Computer Games Workshop, Amsterdam, The Netherlands, 15–17 June 2007; pp. 185–192.

21. Shannon, C.E. Programming a computer for playing chess. Lond. Edinb. Dublin Philos. Mag. J. Sci. 1950, 41, 256–275.

22. Chaslot, G.; Bakkes, S.; Szita, I.; Spronck, P. Monte-Carlo Tree Search: A New Framework for Game AI. In Proceedings of the AAAI Conference on Artificial Intelligence and Interactive Digital Entertainment, California, CA, USA, 22–24 October 2008; pp. 216–217.

23. Świechowski, M.; Godlewski, K.; Sawicki, B.; Mańdziuk, J. Monte Carlo Tree Search: A review of recent modifications and applications. Artif. Intell. Rev. 2023, 56, 2497–2562.

24. Kloetzer, J. Monte-Carlo Opening Books for Amazons. In Computers and Games: 7th International Conference (CG 2010), Kanazawa, Japan, September 24–26 2010, Revised Selected Papers 7; Springer: Berlin/Heidelberg, Germany, 2011; pp. 124–135.

25. Song, J.; Müller, M. An Enhanced Solver for the Game of Amazons. IEEE Trans. Comput. Intell. AI Games 2014, 7, 16–27.

26. Zhang, G.; Chen, X.; Chang, R.; Zhang, Y.; Wang, C.; Bai, L.; Wang, J.; Xu, C. Mastering the Game of Amazons Fast by Decoupling Network Learning. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

27. de Koning, J. INVADER Prolongs Amazons Title. ICGA J. 2011, 34, 96.

28. Kloetzer, J. INVADER Wins Amazons Tournament. ICGA J. 2009, 32, 112–113.

29. Lorentz, R. Invader Wins Eighth Amazons Gold Medal. ICGA J. 2017, 39, 228–229.

30. Guo, Q.; Li, S.; Bao, H. The Research of Searching Algorithm in Amazons Game. In Proceedings of the 2011 Chinese Control and Decision Conference (CCDC), Mianyang, China, 23–25 May 2011; pp. 1859–1862.

31. Li, X.; Hou, L.; Wu, L. UCT Algorithm in Amazons Human-Computer Games. In Proceedings of the 26th Chinese Control and Decision Conference (CCDC), Changsha, China, 31 May–2 June 2014; pp. 3358–3361.

32. Quan, J.; Qiu, H.; Wang, Y.; Li, Y.; Wang, X. Study the Performance of Search Algorithms in Amazons. In Proceedings of the 27th Chinese Control and Decision Conference (CCDC), Qingdao, China, 23–25 May 2015; pp. 5811–5813.

33. Lieberum, J. An Evaluation Function for the Game of Amazons. Theor. Comput. Sci. 2005, 349, 230–244.

34. Chai, Z.; Fang, Z.; Zhu, J. Amazons Evaluation Optimization Strategy Based on PSO Algorithm. In Proceedings of the 2019 Chinese Control And Decision Conference (CCDC), Nanchang, China, 3–5 June 2019; pp. 6334–6336.

35. Sun, Y.; Yuan, D.; Gao, M.; Zhu, P. GPU Acceleration of Monte Carlo Tree Search Algorithm for Amazons and Its Evaluation Function. In Proceedings of the 2022 International Conference on Artificial Intelligence, Information Processing and Cloud Computing (AIIPCC), Kunming, China, 21–23 June 2022; pp. 434–440.

36. Kocsis, L.; Szepesvari, C.; Willemson, J. Improved Monte-Carlo Search. Univ. Tartu Est. Tech. Rep. 2006, 1, 1–22.

37. Finnsson, H.; Björnsson, Y. Game-tree properties and MCTS performance. IJCAI 2011, 11, 23–30.

38. Pheatt, C. Intel® threading building blocks. J. Comput. Sci. Coll. 2008, 23, 164–166.

39. Childs, B.E.; Brodeur, J.H.; Kocsis, L. Transpositions and Move Groups in Monte Carlo Tree Search. In Proceedings of the 2008 IEEE Symposium on Computational Intelligence and Games, Perth, Australia, 15–18 December 2008; pp. 389–395.

40. Van Eyck, G.; Müller, M. Revisiting Move Groups in Monte-Carlo Tree Search. In Advances in Computer Games: 13th International Conference, ACG 2011, Tilburg, The Netherlands, November 20–22, 2011, Revised Selected Papers 13; Springer: Berlin/Heidelberg, Germany, 2012; pp. 13–23.

| Stage (weight coefficients) | Territory (factor 1) | Territory (factor 2) | Position (factor 1) | Position (factor 2) | Mobility |

|---|---|---|---|---|---|

| Opening | 0.14 | 0.37 | 0.13 | 0.13 | 0.20 |

| Middle | 0.30 | 0.25 | 0.20 | 0.20 | 0.05 |

| Ending | 0.80 | 0.10 | 0.05 | 0.05 | 0.00 |

| Algorithm | Best Case | Worst Case |

|---|---|---|

| MCTS + MG-PEO | 187 | 2256 |

| MCTS | 2176 | 2176 |

| Module | Serial Execution Time | Parallel Execution Time | Speed-Up Ratio |

|---|---|---|---|

| Module1 | 69 µs | 29 µs | 2.379 |

| Module2 | 45 µs | 23 µs | 1.956 |

| Whole Evaluation Function | 114 µs | 54 µs | 2.111 |
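The speed-up ratios in the table are serial execution time divided by parallel execution time, which can be checked directly from the listed timings:

```python
# Serial and parallel execution times (microseconds) from the table above.
TIMINGS_US = {
    "Module1": (69, 29),
    "Module2": (45, 23),
    "Whole Evaluation Function": (114, 54),
}


def speed_up(serial, parallel):
    """Speed-up ratio: serial time divided by parallel time."""
    return serial / parallel


for name, (serial, parallel) in TIMINGS_US.items():
    print(f"{name}: {speed_up(serial, parallel):.4f}")
```

This gives about 2.379, 1.957 and 2.111. The table's 1.956 for Module2 corresponds to 45/23 ≈ 1.9565 truncated, rather than rounded, to three decimals. The serial times of the two modules also add up to the whole-function serial time (69 + 45 = 114 µs).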

| Hardware and Constraints | Details |

|---|---|

| GPU | GeForce RTX 3070 |

| CPU | Intel Core i7-11800H |

| Decision-making time | 5 s |

| Number of matches against each opponent | 2 |

| Number of sets per match | 3 |

| Experimental Matching Platform | SAU GAME Platform |

| Visual Studio Runtime Mode | [Release x64] |

| Algorithm | Winning Percentage |

|---|---|

| MCTS | 74% |

| MCTS + MG-PEO | 97% |

| Algorithm | Mean Match Point Rounds |

|---|---|

| MCTS | 42.57 |

| MCTS + MG-PEO | 39.68 |

| Match Point Rounds Data | p-Value for Shapiro–Wilk Test | p-Value for Mann–Whitney U Test |

|---|---|---|

| MCTS | 0.0002 | |

| MCTS + MG-PEO | 0.0013 |
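Both Shapiro–Wilk p-values above are below 0.05, so the Match Point Rounds data are unlikely to be normally distributed, which is consistent with comparing the two algorithms with a rank-based test such as Mann–Whitney U. The following is a minimal pure-Python sketch of the U statistic with a normal approximation (tie-corrected, without continuity correction). It is an illustration, not the authors' analysis code, and its p-values may differ slightly from those of statistical libraries.

```python
import math


def midranks(values):
    """Return 1-based ranks, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of positions i..j, converted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Returns (U1, U2, p). The variance includes the standard tie correction.
    """
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = midranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    u2 = n1 * n2 - u1
    n = n1 + n2
    # Tie correction: sum of (t^3 - t) over groups of equal values.
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie = sum(t ** 3 - t for t in counts.values())
    var = n1 * n2 / 12 * ((n + 1) - tie / (n * (n - 1)))
    if var == 0:
        return u1, u2, 1.0  # all values tied: no evidence of a difference
    z = (u1 - n1 * n2 / 2) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return u1, u2, p
```

It can be applied to the two Match Point Rounds columns of Appendix C; for samples with many ties such as these, a library implementation is preferable when reproducing an exact reported p-value.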

| Algorithm | Winning Percentage | Number of MCTS Iterations within 1s |

|---|---|---|

| MCTS | 74% | 2176 |

| MCTS + PEO | 89% | 10,026 |

| MCTS + MG | 83% | 5663 |

| MCTS + MG-PEO | 97% | 45,536 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, L.; Zou, H.; Zhu, Y. An Efficient Optimization of the Monte Carlo Tree Search Algorithm for Amazons. Algorithms 2024, 17, 334. https://doi.org/10.3390/a17080334

Zhang L, Zou H, Zhu Y. An Efficient Optimization of the Monte Carlo Tree Search Algorithm for Amazons. Algorithms. 2024; 17(8):334. https://doi.org/10.3390/a17080334

Chicago/Turabian Style: Zhang, Lijun, Han Zou, and Yungang Zhu. 2024. "An Efficient Optimization of the Monte Carlo Tree Search Algorithm for Amazons" Algorithms 17, no. 8: 334. https://doi.org/10.3390/a17080334