Abstract

In this study, a systematic review of production scheduling based on reinforcement learning (RL) techniques has been carried out, relying mainly on bibliometric analysis. The aim of this work is, among other things, to point out the growing interest in this domain and to outline the influence of RL, as a type of machine learning, on production scheduling. To achieve this, the paper explores production scheduling using RL by investigating the descriptive metadata of pertinent publications contained in the Scopus, ScienceDirect, and Google Scholar databases. The study covers a wide spectrum of publications from 1996 to 2024. The findings can provide new insights for future research on production scheduling using RL techniques.

1. Introduction

Production scheduling is considered one of the most critical elements of manufacturing management: it aligns production activities with business objectives, ensures a smooth flow of goods and resources, and supports a company’s ability to remain competitive in the marketplace. Scheduling algorithms play an important role in enhancing production efficiency and effectiveness, and have therefore long been a subject of extensive research in various interdisciplinary domains, such as industrial engineering, automation, and management science [1]. Production scheduling tasks can be solved using three main types of procedures: exact algorithms, heuristic algorithms, and meta-heuristic algorithms [2,3]. Although an exact algorithm can theoretically guarantee the optimum solution, the NP-hardness of the major scheduling problems makes it impossible to solve them effectively and efficiently with exact methods [4]. Heuristics use a set of rules to create scheduling solutions quickly, but without consideration of global optimization. Furthermore, the design of these rules relies heavily on a thorough understanding of the particular problem [5,6,7,8]. Meta-heuristics can produce good scheduling solutions in a reasonable amount of computing time, but the design of their search operators likewise depends strongly on the specific problem at hand [9,10,11,12,13]. In addition, the iterative search process is time-consuming and hard to apply in real-time scenarios when dealing with large-scale problems. Scheduling approaches based on reinforcement learning (RL) have proven to be a useful tool in this regard. Reinforcement learning is a subfield of machine learning. RL is considered one of the most promising approaches for robust cooperative scheduling, as it allows production managers to interact with a complex manufacturing environment, learn from previous experience, and select optimal decisions.
RL involves an agent autonomously selecting and executing actions to accomplish a given task. The agent learns via experience and aims to maximize the rewards it receives in certain scenarios. The primary goal of RL is to optimize the cumulative reward obtained by an agent through the evaluation and selection of actions within a dynamic environment [14,15,16,17,18,19,20]. Recent developments in artificial intelligence have allowed the successful application of RL to sequential decision-making problems with multiple objectives, which is applicable to robot scheduling and control [21,22]. Since 1998, research on production scheduling using RL has been evolving as an advanced optimization technique compared to metaheuristics. RL significantly improves the computational efficiency of addressing scheduling problems. Numerous research articles on RL in production scheduling have been published since its inception in 1996, establishing a substantial and valuable foundation (see, e.g., [23,24,25,26,27]), but only two recent review papers [28,29] have paid attention to this subject. However, both papers, published in 2021, focused on different perspectives of RL-based scheduling algorithms than those presented in this paper. In other words, the content of this paper is disjoint from and complementary to the two mentioned studies.

The research question of this paper is multi-faceted, covering several aspects of this domain that require separate answers. The main questions that this paper addresses are: What are the main emerging research areas in the field? Which related topics are being covered in production scheduling based on RL? What are typical implementation domains within RL applied to production scheduling?

The novelty of this paper lies in providing additional analyses and assessments of RL in production scheduling. These include, e.g., the citation trend for RL in production scheduling, the most influential authors in this domain, the most relevant sources in the field, and a comparison of deterministic and uncertain types of scheduling methods.

2. Materials and Methods

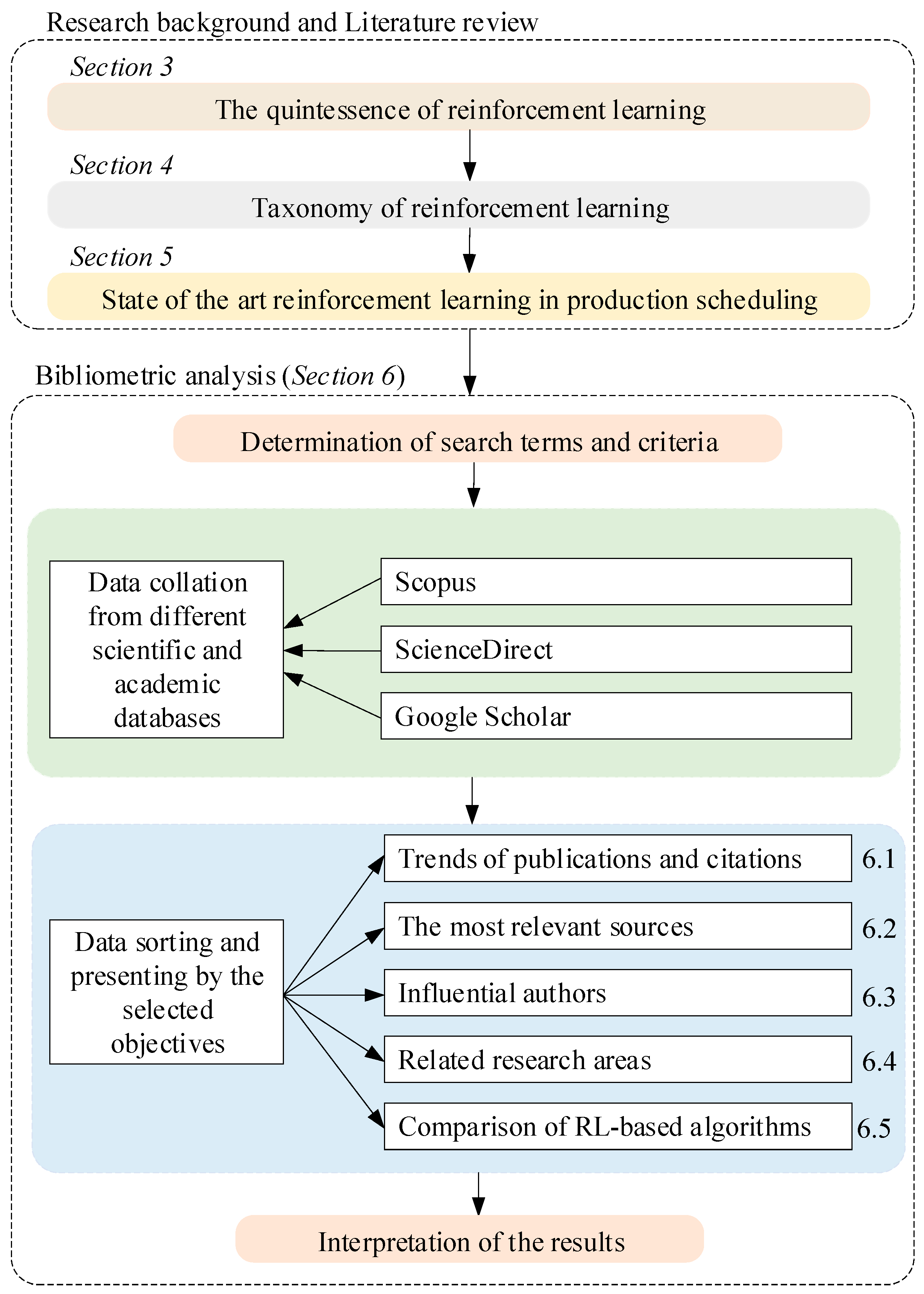

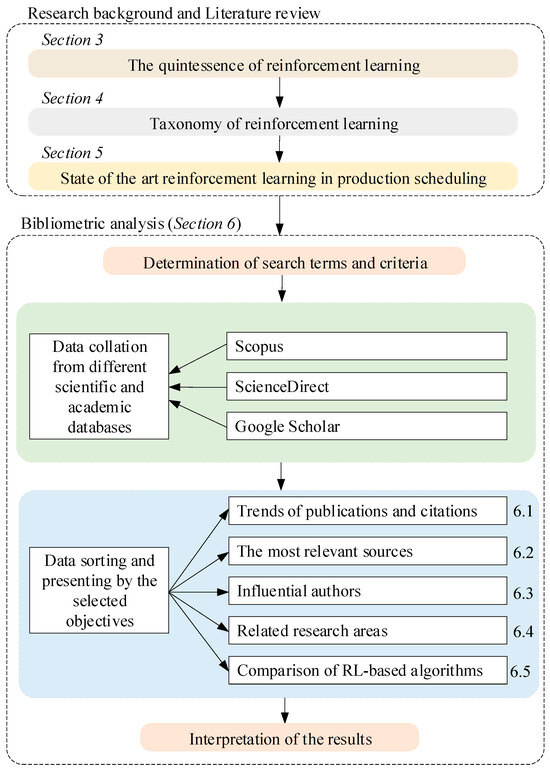

The bibliometric approach employed here as the main research method is a quantitative instrument for monitoring and representing scientific progress by examining and visualizing scientific information. The growing acceptance of bibliometric methods in several academic disciplines indicates that their use brings the expected effects [30,31,32,33]. The present investigation of RL-based production scheduling follows a procedure comprising five coherent phases, as depicted in Figure 1.

Figure 1.

Research methodology framework.

Moreover, this research follows an inferential approach, in which a sample of the population is explored to determine its characteristics [34,35]. In addition, the inferential concept of scientific representation proposed by Suárez [36] was applied here to formulate research outputs. Its essence is to employ alternative reasoning to reach results that differ from the isomorphic view of scientific representation, in the sense that empirical knowledge plays an important role in inductive reasoning [37,38].

3. The Quintessence of Reinforcement Learning

Artificial Intelligence (AI) has become an integral and significant aspect of our daily life as we approach the conclusion of this decade. AI has been present for the past seven decades but has gained significant momentum in the last three, leading to extensive research in this field [39]. AI refers to various techniques that allow computers to acquire knowledge and make choices by analyzing data. Of these techniques, machine learning (ML) has made significant advances in the past two decades, transitioning from a laboratory curiosity to a widely used practical technology in commercial applications. Within AI, ML has become the preferred approach for creating functional software in areas such as computer vision, natural language processing, speech recognition, robot control, and various other applications [40].

Many AI developers now acknowledge that, in several cases, it is more convenient to train a system by demonstrating ideal input-output behavior rather than manually programming it to anticipate the desired response for every potential input. ML has significantly impacted various areas of computer science and sectors that deal with data-driven problems. It includes consumer services, diagnosing defects in intricate systems, and managing logistics chains. ML approaches have had diverse effects on several empirical sciences, such as biology, cosmology, and social science. These approaches have been used creatively to examine vast experimental data [41].

Three major ML paradigms are supervised learning, unsupervised learning, and reinforcement learning [15]. Supervised learning entails the process of training a model using a dataset that has been labeled, meaning that each input is associated with its corresponding accurate output. The objective is to acquire knowledge about a transformation from given inputs to corresponding outputs, which can then be utilized to make accurate forecasts on novel, unobserved data. Unsupervised learning involves the identification of concealed patterns or inherent structures in unlabeled input data. The model aims to acquire knowledge about the fundamental distribution or grouping of the data without explicit instructions on the desired outcome [28]. RL is centered around teaching an agent to make numerous choices by engaging with an environment and receiving rewards or punishments as feedback. The agent acquires the ability to optimize its activities to maximize the total rewards it receives over a period [42].

Due to its dynamic and adaptive methodology, RL is highly effective for addressing problems in the manufacturing systems and robotics research domains [22,28]. RL is distinct from supervised learning, where a model is trained on a predetermined dataset, and from unsupervised learning, where the model discovers concealed patterns within data. In reinforcement learning, an agent is trained to make a series of decisions by engaging with an environment. The agent’s objective is to acquire a strategy, usually called a policy, that optimizes the total rewards obtained over time [43].

RL is a decision-making process influenced by behavioral psychology. It involves an agent learning to achieve a goal by acting and receiving feedback from the environment through rewards or penalties. This trial-and-error method is akin to how humans and animals learn from their environment. The critical components of RL are the agent, the environment, actions, states, and rewards. The agent observes the environment, takes actions, and receives rewards, which are used to guide future actions [14]. RL is particularly effective in tasks with uncertain outcomes, such as playing games, controlling robots, and managing resources. In these situations, the agent must balance exploration (trying new actions) and exploitation (using established actions that lead to significant rewards) to develop an optimal policy efficiently [42].
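The balance between exploration and exploitation described above is often illustrated with an ε-greedy rule. The following is a minimal sketch and not a method taken from the reviewed studies; the function name `epsilon_greedy`, the list `q_values` of estimated action values, and the parameter `epsilon` are assumptions introduced here for illustration.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return an action index: explore with probability epsilon, else exploit.

    q_values is a hypothetical list of estimated values, one per action.
    """
    if rng.random() < epsilon:
        # Exploration: try an action chosen uniformly at random.
        return rng.randrange(len(q_values))
    # Exploitation: take the action with the highest current estimate
    # (the first such action if several are tied).
    return max(range(len(q_values)), key=lambda a: q_values[a])
```

With `epsilon = 0` the rule is purely greedy, and with `epsilon = 1` it is purely random; in practice the value is often decreased over the course of training.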

The mathematical framework of RL is commonly represented using Markov Decision Processes (MDPs), which offer a systematic approach to modeling decision-making problems whose outcomes are partly random and partly controlled by the agent. Solving an MDP entails determining a policy that prescribes the optimal action to be taken in each state, aiming to maximize the expected cumulative reward. Recent progress in deep learning has dramatically improved the capabilities of RL [15,16]. The combination of deep neural networks and RL algorithms has resulted in the emergence of deep reinforcement learning (DRL), which has demonstrated exceptional achievements in intricate situations. Prominent instances include AlphaGo, developed by Google, which triumphed over human champions in the game of Go, as well as diverse implementations in autonomous driving, where DRL algorithms learn to navigate intricate and ever-changing surroundings successfully. Reinforcement learning remains a dynamic and swiftly advancing domain in machine learning, where ongoing research constantly pushes the limits of what artificial agents can accomplish. Work on RL continues to reveal opportunities for intelligent systems that can acquire knowledge and adjust their behavior in real time. RL paves the way for a future where computers can cooperate seamlessly with people and function autonomously in more advanced ways [42].

4. Taxonomy of Reinforcement Learning Algorithms

Sutton and Barto [42] emphasize the crucial role of the three essential components in the RL process:

- A policy determines the actions to be taken in each environmental state.

- A reward signal categorizes these actions as beneficial or detrimental based on the immediate outcome of transitioning between states.

- A value function assesses the long-term effectiveness of actions by considering not only a state’s immediate reward but also the anticipated future rewards.
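The distinction between the immediate reward signal and the long-term value function listed above can be written compactly. The notation below follows a common textbook convention and is an assumption of this sketch rather than notation taken from the source: $r_{t+1}$ is the reward received after acting at time $t$, $\gamma \in [0, 1)$ is a discount factor, $\pi$ is the policy, and $s_t$ is the state at time $t$.

$$
G_t = \sum_{k=0}^{\infty} \gamma^{k}\, r_{t+k+1},
\qquad
v_\pi(s) = \mathbb{E}_\pi\left[\, G_t \mid s_t = s \,\right]
$$

Here $G_t$ is the discounted cumulative reward from time $t$ onward, and $v_\pi(s)$ is the value of state $s$ under policy $\pi$, that is, the cumulative reward the agent can expect when starting in $s$ and following $\pi$.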

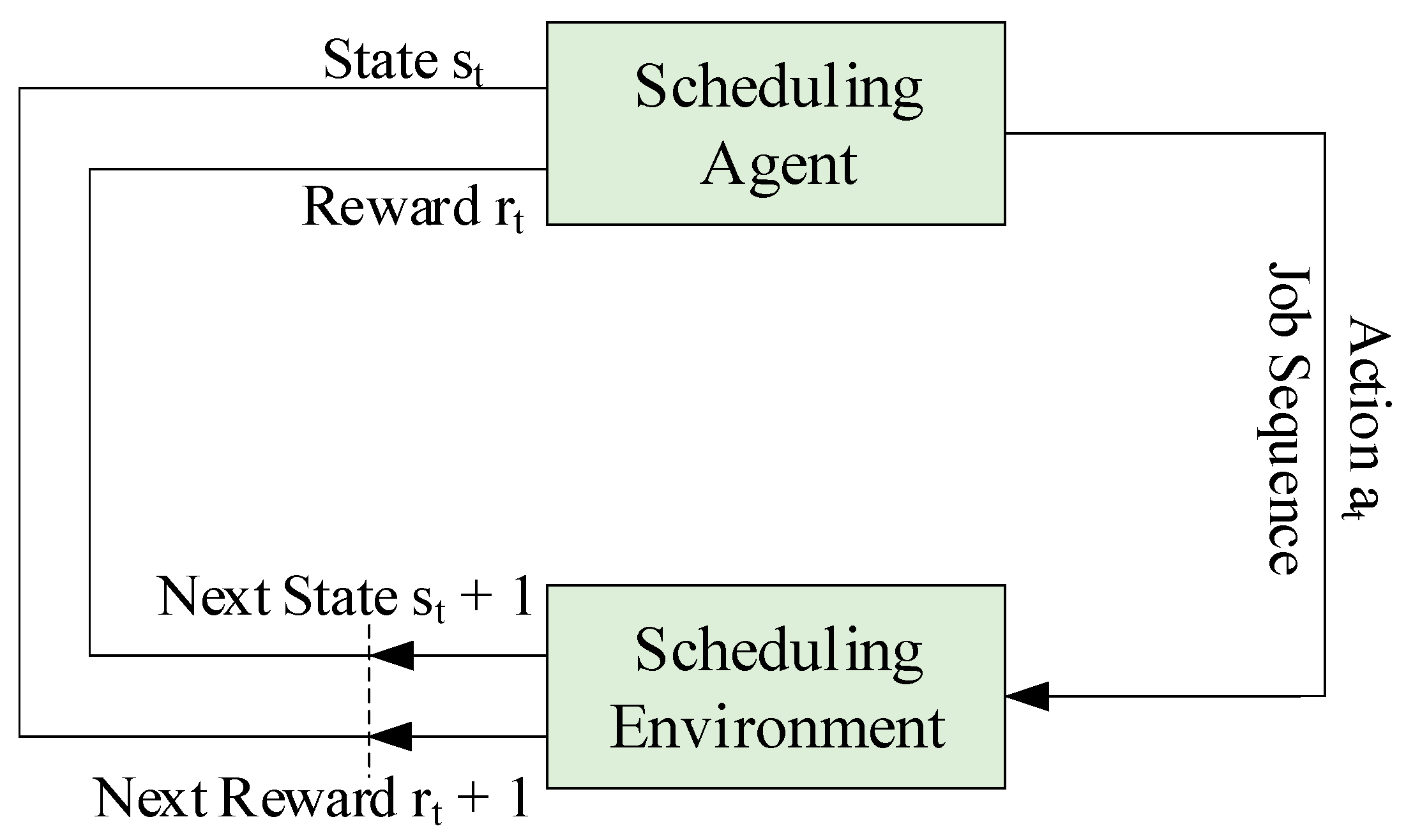

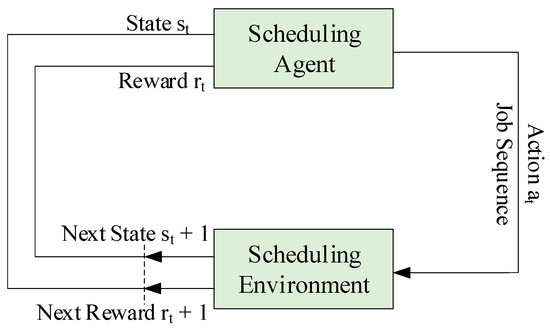

The primary objective of the agent is to optimize the cumulative reward. Consequently, the reward signal is the foundation for modifying the policy and the value function. Some RL systems include an additional, optional component known as a model of the environment. It replicates the behaviour of the environment or, more generally, allows inferences to be made about how the environment will behave. Models are used for planning, which involves deciding on a course of action by considering possible future situations before they are actually experienced [24]. Methods that solve reinforcement learning problems using models and planning are called model-based methods, in contrast to simpler model-free methods. Model-free methods are explicit trial-and-error learners and are considered almost the opposite of planning [26,27]. RL processes can be represented and analysed using a mathematical framework called the MDP. This stochastic model can be formalized as a 5-tuple $(s, a, p, r, \gamma)$, in which $s$ represents the finite set of all possible environment states, while $s_t$ is the state at a specific time $t$. Similarly, $a$ represents the set of all possible actions, while the action taken at time $t$ is denoted $a_t$. The symbol $p$ refers to the transition probability matrix, which defines the conditional probability of transitioning to a new state $s'$ with a reward $r$, given the current state $s$ and action $a$ (for all states and actions) [44]; $\gamma$ is the discount factor applied to future rewards. Figure 2 shows the RL production scheduling cycle.

Figure 2.

RL production scheduling cycle.
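The MDP formalization above can be made concrete with a small planning example. The sketch below applies value iteration, a standard model-based technique, to a toy problem; it is not taken from any of the reviewed studies. The two states, two actions, probabilities, and rewards are hypothetical, as are the names `q_value` and `value_iteration`.

```python
def q_value(s, a, P, R, gamma, V):
    """Expected return of taking action a in state s, then following values V."""
    return sum(prob * (R[s][a] + gamma * V[s_next])
               for s_next, prob in P[s][a].items())


def value_iteration(states, actions, P, R, gamma, tol=1e-8):
    """Return (V, policy) for a finite MDP given as plain dictionaries."""
    V = {s: 0.0 for s in states}
    while True:
        delta = 0.0
        for s in states:
            # Bellman optimality backup: best expected return over all actions.
            best = max(q_value(s, a, P, R, gamma, V) for a in actions)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:  # stop once a full sweep changes no value noticeably
            break
    # Greedy policy with respect to the converged values.
    policy = {s: max(actions, key=lambda a: q_value(s, a, P, R, gamma, V))
              for s in states}
    return V, policy


# Hypothetical toy problem: one job waits in a queue until it is processed.
states = ["queue", "done"]
actions = ["wait", "process"]
P = {  # P[s][a] maps each next state to its transition probability
    "queue": {"wait": {"queue": 1.0},
              "process": {"done": 0.8, "queue": 0.2}},  # processing may fail
    "done": {"wait": {"done": 1.0}, "process": {"done": 1.0}},
}
R = {  # R[s][a] is the immediate reward; each time step in the queue costs 1
    "queue": {"wait": -1.0, "process": -1.0},
    "done": {"wait": 0.0, "process": 0.0},
}
V, policy = value_iteration(states, actions, P, R, gamma=0.9)
```

Because the transition probabilities and rewards are given explicitly, this is planning with a known model; a model-free method would have to estimate the same values from sampled interactions instead.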

The learning methodologies of RL algorithms or agents can be classified into two distinct categories: model-based and model-free. Model-based RL is often known as indirect learning. The agent uses a predictive model to learn the control policy from the environment through a limited number of interactions. The agent then applies this model to subsequent episodes to obtain rewards [15]. Model-free RL, or direct learning, refers to learning in which an agent learns to make decisions without explicitly building a model of the environment. The agent acquires knowledge of the control policy through experiential learning from the environment, employing trial-and-error methods to optimize rewards without relying on any pre-existing model. This approach showcases the adaptability of RL algorithms, allowing them to learn and evolve in dynamic environments [45].

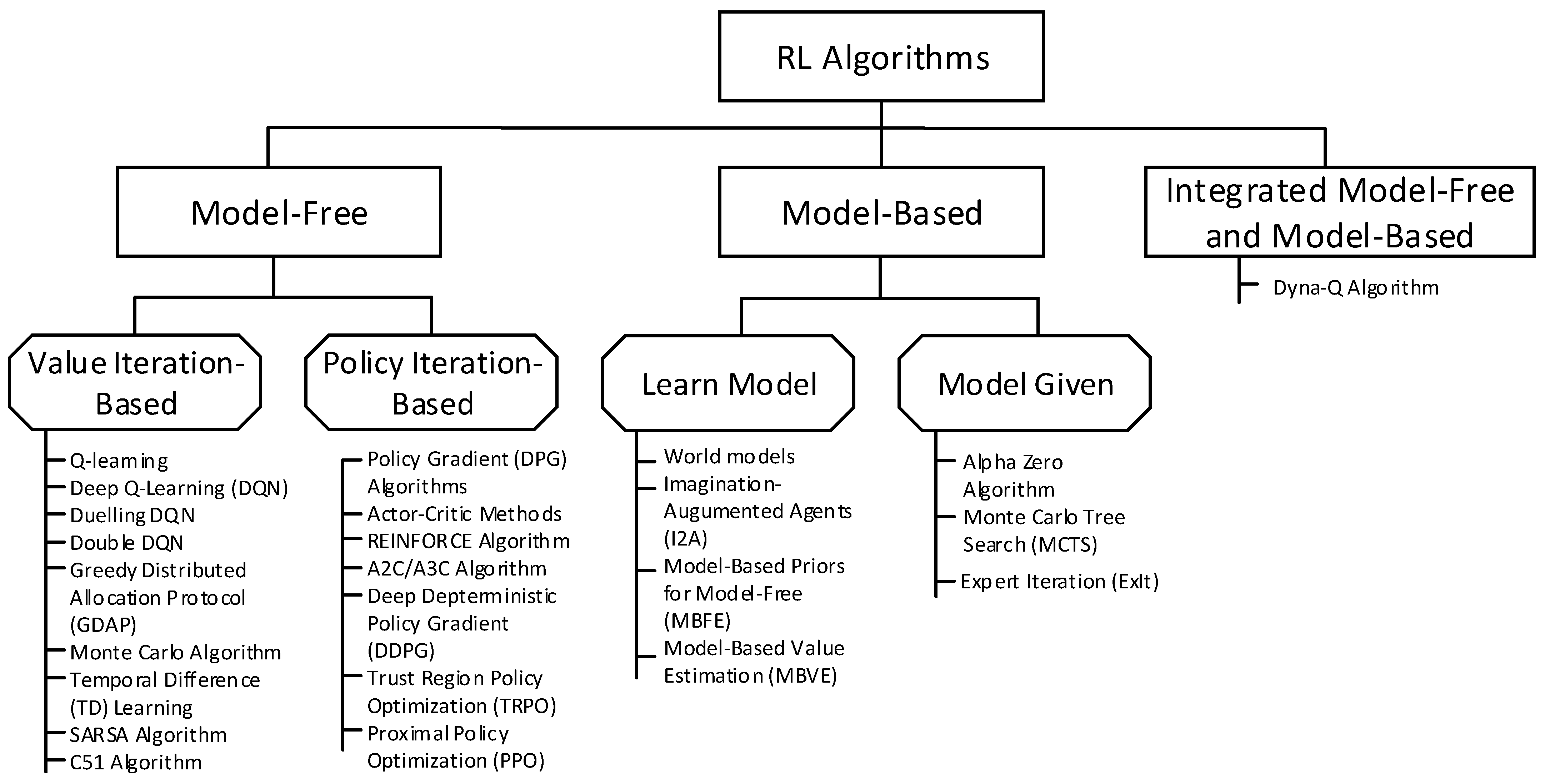

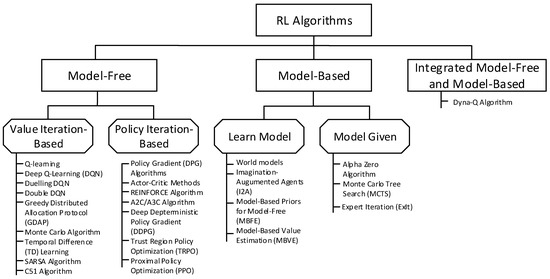

As there are many different RL algorithms, it is sensible to understand the differences among them. For this purpose, classification systems have been established to categorize them by different criteria. For instance, ALMahamid and Grolinger [46] proposed categorizing RL algorithms based on the environment type. RL algorithms can also be classified from the perspective of policy: on-policy vs. off-policy learning [47,48]. Most commonly, RL algorithms are classified according to their learning approaches [15,45]. In this regard, an updated classification of RL algorithms from the perspective of the learning approaches used is provided in Figure 3.

Figure 3.

Classification of RL algorithms.

5. State of the Art of Reinforcement Learning in Production Scheduling

Deterministic scheduling encompasses organizing and planning tasks under fixed parameters and known constraints. Its goal is to optimize production efficiency and minimize turnaround times. A comprehensive work [49] explores fundamental principles and advanced techniques in deterministic scheduling, providing insights into various algorithms and their applications. Pinedo’s work [50] thoroughly reviews deterministic scheduling theory, algorithms, and practical systems, highlighting their significance in manufacturing and service operations. Flowshop scheduling involves sequencing operations on multiple machines in a fixed order to minimize makespan or total completion time. Allahverdi [51] discusses various flowshop scheduling problems, including setups, and reviews algorithms and approaches to address them. Panwalkar and Smith [52] provide a seminal survey of classic and contemporary research on flowshop scheduling, covering both exact and heuristic methods.
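The makespan objective in flowshop scheduling mentioned above can be illustrated with a short calculation. The sketch below assumes a permutation flow shop, in which every job visits the machines in the same order and the job sequence is identical on all machines; the function name `flowshop_makespan` and the processing times are hypothetical.

```python
from itertools import permutations


def flowshop_makespan(proc_times, sequence):
    """Makespan of a permutation flow shop schedule.

    proc_times[j][m] is the processing time of job j on machine m;
    sequence is the order in which the jobs enter the first machine.
    """
    n_machines = len(proc_times[0])
    # completion[m]: finish time of the most recently scheduled job on machine m
    completion = [0] * n_machines
    for j in sequence:
        for m in range(n_machines):
            # A job can start on machine m only when the machine is free and
            # the job itself has finished on the previous machine.
            ready = completion[m - 1] if m > 0 else 0
            completion[m] = max(completion[m], ready) + proc_times[j][m]
    return completion[-1]


# Hypothetical instance: 3 jobs, 2 machines.
proc_times = [[3, 2], [1, 4], [2, 1]]
# Exhaustive search over all sequences; feasible only for very small instances.
best_sequence = min(permutations(range(3)),
                    key=lambda seq: flowshop_makespan(proc_times, seq))
```

For this instance the sequence (0, 1, 2) gives a makespan of 10, while the best sequence found by enumeration gives 8. The number of sequences grows factorially with the number of jobs, which is why enumeration does not scale.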

Reinforcement learning (RL) techniques have gained popularity, affirming the interest in agent-based models. Previous studies have primarily focused on using RL to solve job-shop scheduling challenges. The manufacturing sector faces challenges such as customer satisfaction, system degradation, sustainability, inventory, and efficiency, all of which affect plant sustainability and profitability. Industry 4.0 and smart manufacturing offer solutions for optimized operations and high-quality products. Paraschos et al. [53] integrate RL with lean green manufacturing to create a sustainable production environment, reducing environmental impact through minimized material consumption and lifecycle extension via pull production, predictive maintenance, and circular economy practices. Rigorous experimental analysis validates the effectiveness of this approach in enhancing sustainability and material reuse.

Recently, significant progress has been made in using RL to tackle several combinatorial optimization problems, including production scheduling, the Vehicle Routing Problem, and the Traveling Salesman Problem [54,55,56,57,58,59]. In RL, a production scheduling task can be viewed as an environment in which an agent operates, developing a policy through offline training by interacting with this environment. This approach offers a novel way to address scheduling challenges with stringent real-time constraints, such as dynamic job shop scheduling problems [22,60,61,62,63,64].

In production scheduling problems, value-based RL algorithms are commonly used, including Q-learning, the temporal difference TD(λ) algorithm, SARSA, ARL, informed Q-learning, dual Q-learning, approximate Q-learning, the gradient descent TD(λ) algorithm, revenue sharing, Q-III learning, relational RL, relaxed SMART, and TD(λ)-learning. In Deep Reinforcement Learning (DRL), many value-based approaches are employed, such as DQN (Deep Q-Learning Networks), loosely-coupled DRL, multiclass DQN, and the Q-network algorithm [43,44,65,66,67,68,69,70,71,72,73].
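To make the value-based family above more concrete, the sketch below shows the tabular update rules of two of the listed algorithms, Q-learning (off-policy) and SARSA (on-policy). It is an illustration under stated assumptions, not the implementation of any cited study: the table layout `Q[state][action]`, the state labels, and the use of dispatching rules ("spt", "fifo") as actions are hypothetical.

```python
def q_learning_update(Q, s, a, r, s_next, alpha, gamma):
    """Off-policy update: bootstrap from the best action in the next state."""
    best_next = max(Q[s_next].values())
    Q[s][a] += alpha * (r + gamma * best_next - Q[s][a])
    return Q[s][a]


def sarsa_update(Q, s, a, r, s_next, a_next, alpha, gamma):
    """On-policy update: bootstrap from the action actually taken next."""
    Q[s][a] += alpha * (r + gamma * Q[s_next][a_next] - Q[s][a])
    return Q[s][a]


def fresh_table():
    """Hypothetical Q-table: two shop states, two dispatching rules as actions."""
    return {
        "s0": {"spt": 0.0, "fifo": 0.0},
        "s1": {"spt": 1.0, "fifo": 2.0},
    }


# Same transition (s0, spt, reward -1, s1) under both rules.
q_new = q_learning_update(fresh_table(), "s0", "spt", -1.0, "s1",
                          alpha=0.5, gamma=0.9)
sarsa_new = sarsa_update(fresh_table(), "s0", "spt", -1.0, "s1", "spt",
                         alpha=0.5, gamma=0.9)
```

The two rules differ only in the bootstrap term: Q-learning uses the maximum value in the next state regardless of what the agent does next, whereas SARSA uses the value of the action the current policy actually selects.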

Qu et al. [25] applied multi-agent approximation Q-learning, demonstrating its effectiveness through numerical experiments in static and dynamic environments and various flow shop scenarios. The goal was to create and implement an efficient manufacturing schedule considering realistic interactions between labor skills and adaptive machinery. Luo [74] uses the DRL method to address dynamic flexible job shop scheduling, focusing on scenarios to reduce tardiness. Luo et al. [75] first employed ‘hierarchical multi-agent proximal policy optimization’ (HMAPPO) for the constantly changing partial-no-wait multi-objective flexible job shop problem (MOFJSP) with new job insertions and machine breakdowns.

Machine deterioration during production increases operational expenses and causes workflow disruptions, necessitating costly corrective maintenance. Preventive maintenance prolongs machine life but entails downtime and costs. The study [76] addresses these challenges by optimizing production and maintenance schedules in multi-machine systems, introducing knowledge-enhanced reinforcement learning to make RL more effective in guiding production decisions and fostering machine collaboration. Comparative evaluations in deterministic and stochastic scenarios highlight the algorithm’s ability to maximize business rewards and prevent failures.

According to Wang et al. [54], the production scheduling process involves manufacturing various items using a hybrid production pattern incorporating the multi-agent Deep Reinforcement Learning (MADRL) model. Popper et al. [60] proposed MADRL to optimize flexible production plants considering factors like efficiency and environmental targets. Du et al. [77] used the Deep Q-Network (DQN) method to address the flexible job shop scheduling problem (FJSP) amid changing processing rates, setup time, idle time, and task transportation. This approach integrates state indicators and actions to enhance the DQN component’s efficiency. Additionally, it includes a problem-driven exploratory data analysis (EDA) component to improve data exploration.

Li et al. [78] used a Deep Reinforcement Learning (DRL) method to solve the discrete flexible job shop problem with inter-tool reusability (DFJSP-ITR), addressing the multi-objective optimization problem of minimizing combined makespan and total energy consumption. The proposed solution includes a few generic state features, a genetic-programming-based action space, and a reward function. Zhou et al. [79] proposed using online scheduling strategies based on RL with composite reward functions to improve the effectiveness and robustness of industrial systems. The work [18] utilized an advanced DRL algorithm to optimize production scheduling in complex job shops, highlighting benefits like enhanced adaptability, global visibility, and optimization. Some authors have focused on developing various DRL algorithms capable of formulating complex strategies for production scheduling. For instance, Luo et al. [26] created an online rescheduling framework for the dynamic multi-objective flexible job shop scheduling problem, enabling minimization of total tardiness or maximization of machine usage rate. Zhou et al. [27] used the deep Q-learning technique to address dynamic scheduling in intelligent manufacturing. Another dynamic scheduling method using deep RL is proposed in [80], employing proximal policy optimization to determine the ideal scheduling policy. Wang and Usher [81] examined the use of the Q-learning algorithm for agent-based production scheduling. The integration of RL with production scheduling signifies a major advancement in optimizing manufacturing processes. As research and technology continue to evolve, it promises even greater efficiency and adaptability in the industry. Bibliometric data can provide valuable insights into research trends, influential works, and key contributors in this rapidly developing field.

Industry 4.0 and Smart Industry lead contemporary manufacturing and production. Industry 4.0 integrates advanced technologies like the Internet of Things (IoT), Artificial Intelligence (AI), Big Data, and Cyber-Physical Systems to create highly automated and networked production environments. The concept of intelligent industry emphasizes real-time data analytics, predictive maintenance, and adaptable manufacturing processes to improve efficiency, flexibility, and production [27]. Implementing RL algorithms is crucial in these paradigms, as it enables machines to learn and to predict future outcomes through interactions in the production environment. This enhances scheduling, resource allocation, and quality control, enabling firms to achieve high automation and precision in decision-making, leading to more resilient and responsive modern manufacturing [54]. Table 1 illustrates the contribution of RL algorithms to production scheduling.

Table 1.

Contributions of reinforcement learning on production scheduling.

6. Bibliometric Analysis of Studies on RL in Production Scheduling

The bibliometric analysis of RL in the context of production scheduling focuses here on its theoretical foundations and practical implications between the years 1996 and 2024. The first two phases involve the collection of bibliographic data, which was gathered via the Scopus database. The search was restricted to this database due to its prominence as a comprehensive repository of scientific literature and its frequent use in academic assessments. The inclusion criteria for the analysis presented in Section 6.1, Section 6.2, Section 6.3 and Section 6.4 encompassed publications that contained the terms “reinforcement learning” and “production” and “scheduling”. In addition, the terms “reinforcement learning” and “deterministic scheduling”, or “reinforcement learning” and “uncertain scheduling”, were used for the analysis carried out in Section 6.5. In the next two phases, data sorting and presentation, Microsoft Excel and VOSviewer 1.6.20 were employed to extract the necessary information, such as the annual scientific output, most relevant sources, most cited authors, and keyword co-occurrence.

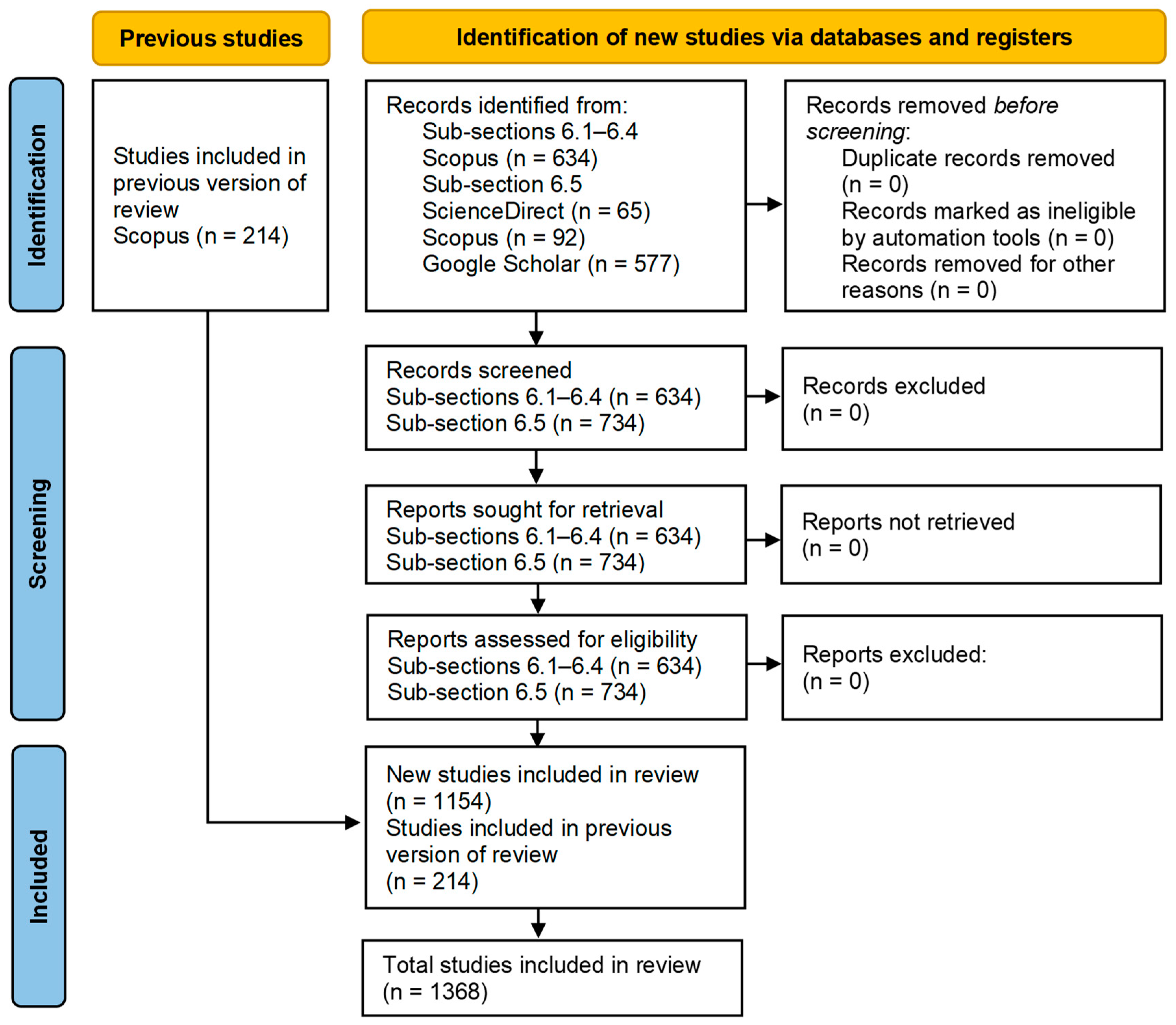

The study selection process for the research methodology described above is summarized in Figure 4, following the PRISMA guidelines.

Figure 4.

PRISMA 2020 flow diagram for updated systematic reviews which included searches of databases.

Using the above-specified keywords and search criteria (n = 634 publications for Section 6.1, Section 6.2, Section 6.3 and Section 6.4, and n = 734 publications for Section 6.5), a total of 1368 co-authored articles were found up to 27 May 2024, with all document types included in the review analysis (new studies n = 1154 and studies included in the previous version of the review n = 214).

The review analysis in Section 6.1, Section 6.2, Section 6.3 and Section 6.4 included 634 co-authored articles found by searching within “Article title, Abstract and Keywords”. The primary research areas that receive significant attention in the articles include Computer Science, Engineering, Mathematics, Decision Sciences, Business, Management and Accounting, Energy, Chemical Engineering, Materials Science, and other topics. The 634 publications in our sample were categorized into 19 distinct research topics. The eight primary research areas, along with their article distribution, are displayed in Table 2.

Table 2.

Associated research disciplines.

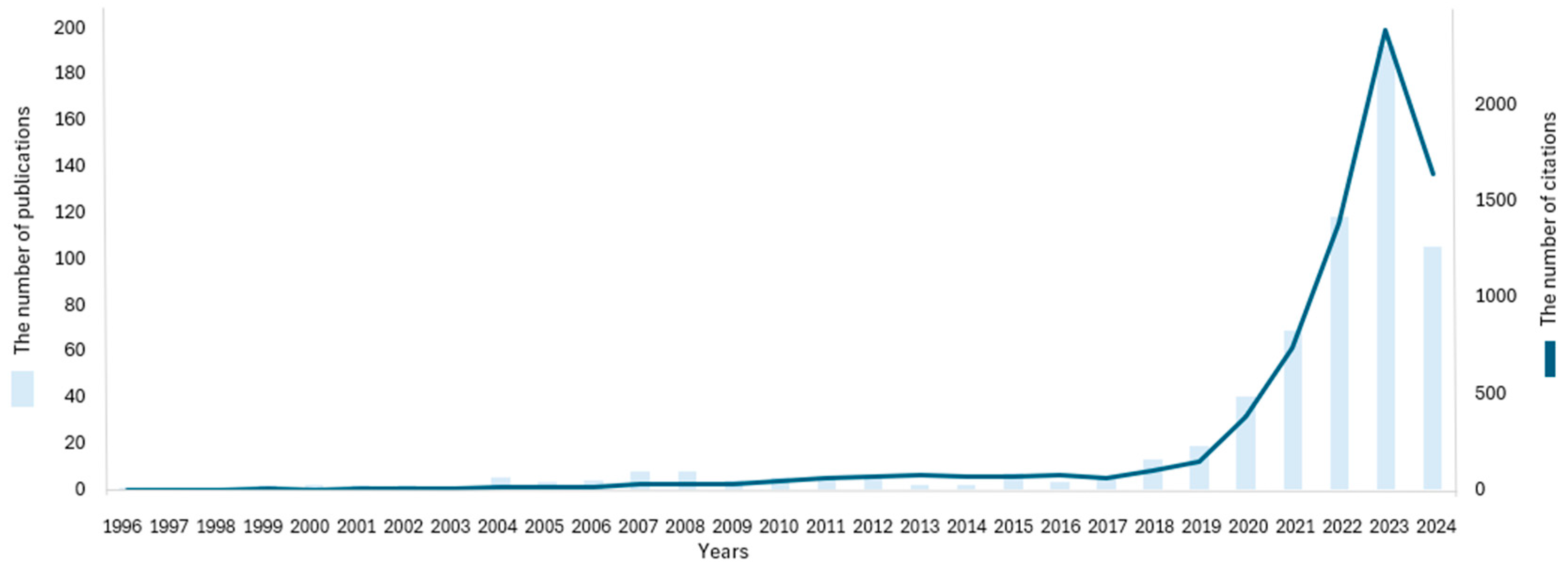

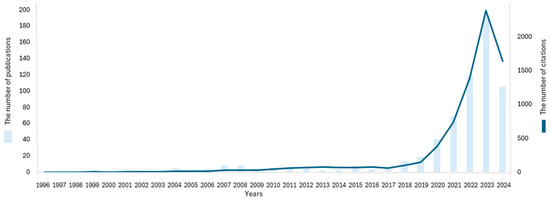

6.1. Trends of Publications and Citations

The numbers of published articles and their citations usually provide sufficiently reliable information to anticipate the further development of the examined research domain. A trend analysis graph was derived from the numbers of publications and citations in the field of production planning using learning algorithms over a period of around 29 years. For this purpose, the same search terms and keywords were applied as for the identification of the major research categories, but the search was extended to all document types. The reason for this change was to capture the initial research initiatives in this domain. The same search conditions were applied in the rest of the paper to include a larger sample of publications in the investigation. The annual distribution of publications (out of the total 634 items) and their citations from the same database during the period from 1996 to 2024 is illustrated in Figure 5.

Figure 5.

Publication and citation trends for RL in production scheduling field from 1996 to 2024.

An examination of the yearly scientific output between 1996 and 2018 shows a relatively stable, low number of articles published annually. Throughout these two decades, a need for RL in production scheduling under real conditions apparently had not yet emerged. During 2019–2023, there was a noticeable rise in the number of publications registered in the most recognized database for peer-reviewed content. This can be primarily attributed to advancements in artificial intelligence. If this exponential growth in the number of publications continues, as the data for 2024 suggest, one can anticipate that the importance of RL in manufacturing scheduling will increase significantly over the next decade.

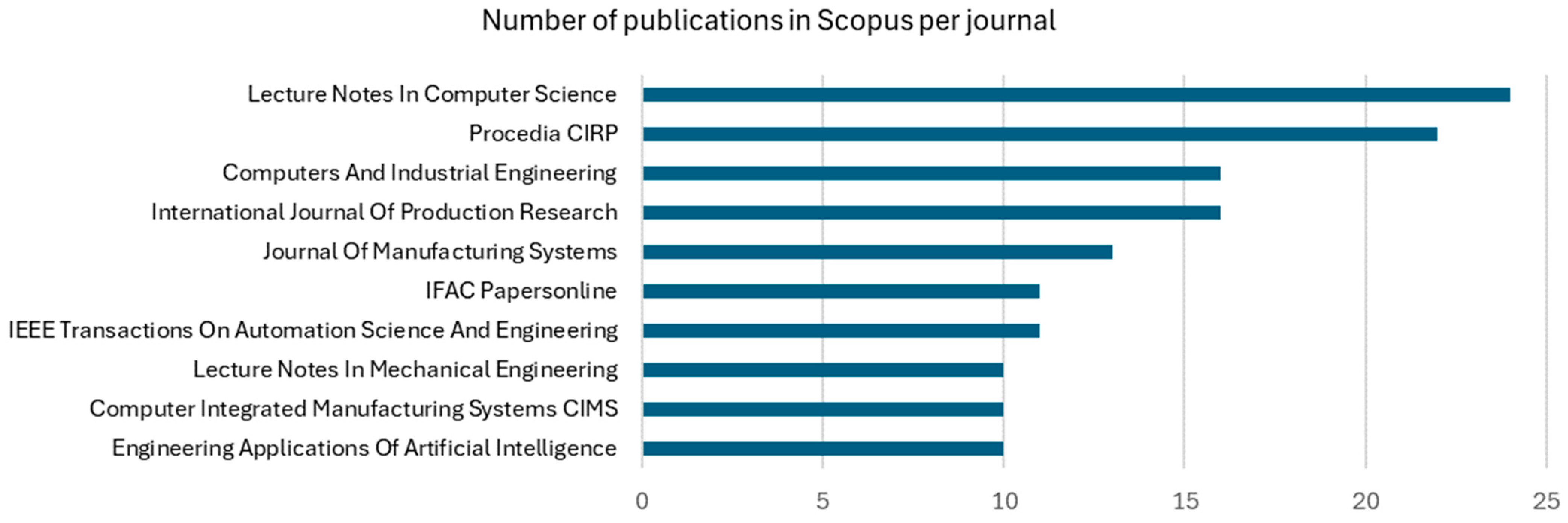

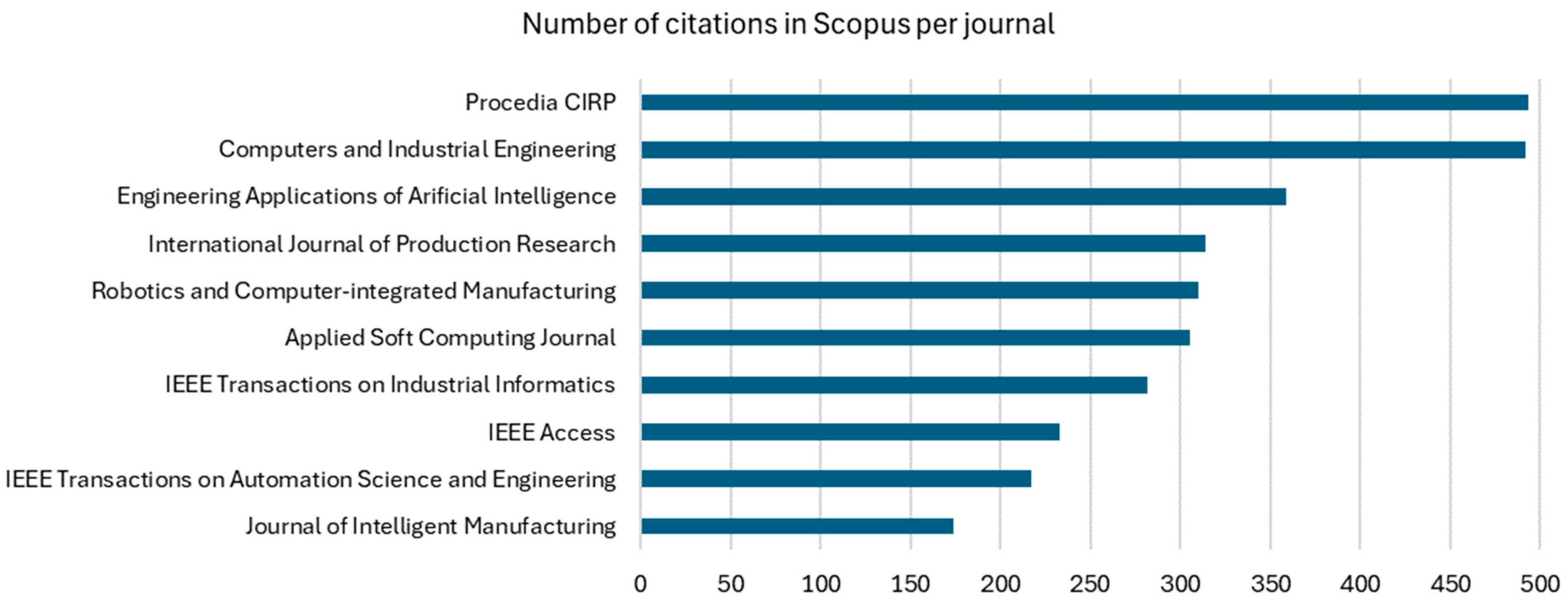

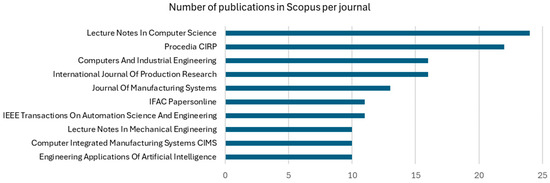

6.2. Most Relevant Sources

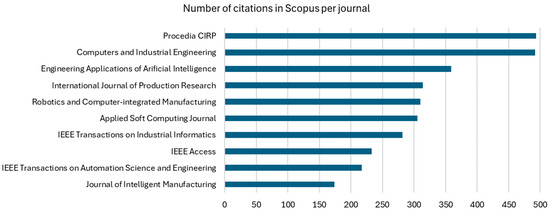

Identifying the most relevant publication sources in an initial dataset is a common approach in bibliometric research, since such sources usually publish influential research that attracts widespread interest. As a rule, the most productive journals have the greatest influence on the development of science in a particular field, since they publish more articles and generate more citations [77,78,79]. To assess the relevance of the literature in the explored field, the ten journals that have published the most articles are identified here. The ten most cited scientific journals are also presented in this sub-section. Figure 6 and Figure 7 rank journals according to these two criteria to show which sources are most relevant to RL in production scheduling.

Figure 6.

Top ten published journals for RL on production scheduling field from 1996 to 2024.

Figure 7.

Top ten cited journals for RL on production scheduling field from 1996 to 2024.

Lecture Notes in Computer Science, Procedia CIRP, and Computers and Industrial Engineering, along with twelve other journals, are considered the most relevant scientific publications in this field. It can also be noted that all fifteen journals listed in Figure 6 and Figure 7, regardless of the results of the metrics used, can be empirically ranked as widely recognized for disseminating advanced research on reinforcement learning applied to production scheduling. In addition, their scientific rigor is indicated by the fact that eight of the fifteen identified journals meet high quality standards, as they are indexed in Current Contents Connect, which covers journals with a verifiable impact on steering research practices and behaviors [80,81].

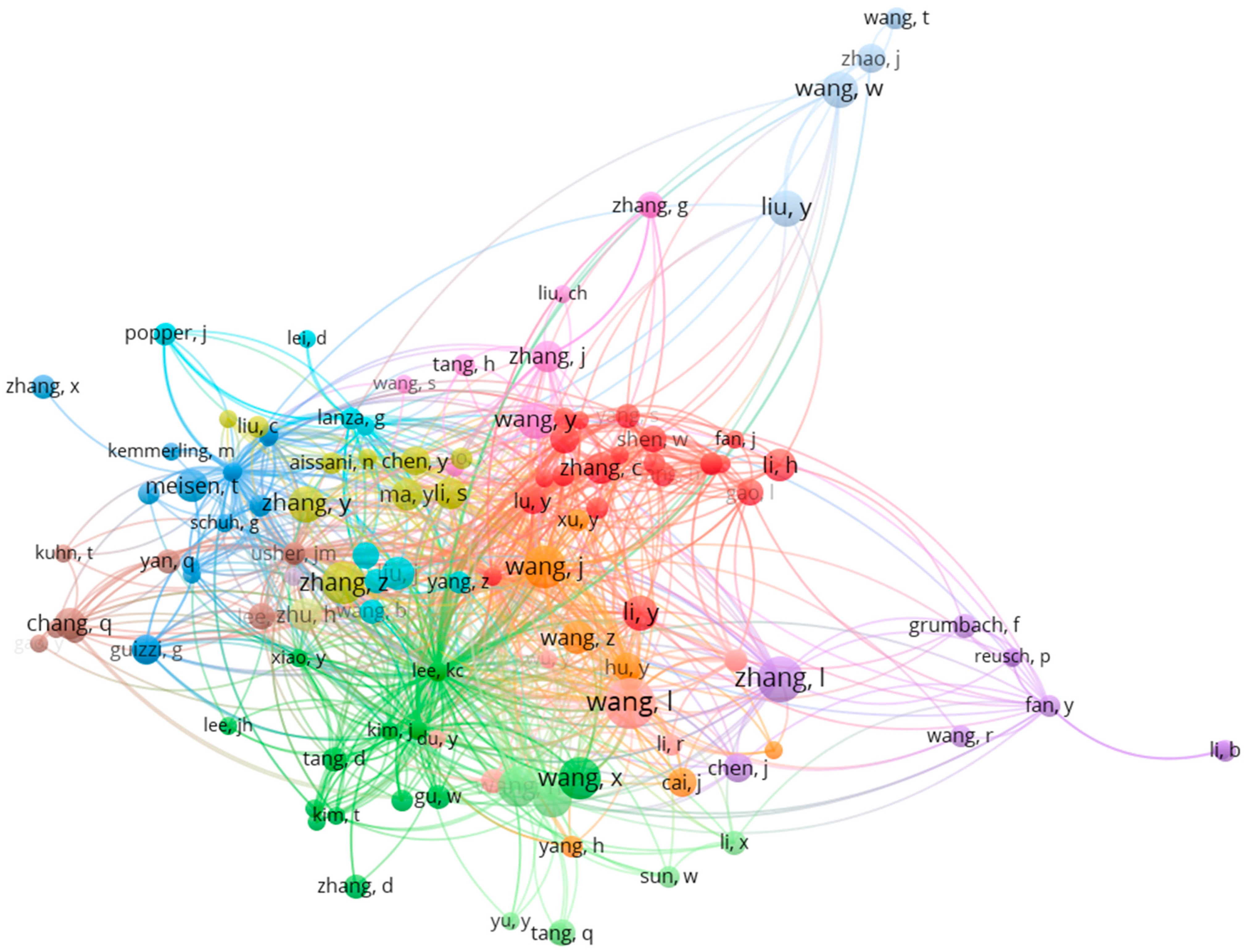

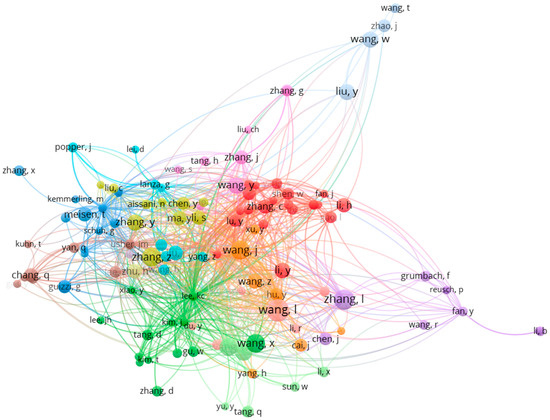

6.3. Most Cited Authors

Since 1996, many authors have made significant contributions to the development of this field. This sub-section presents some of the authors who made significant intellectual contributions to the research. The analysis of the most cited authors was performed using data from the Scopus database. In addition, co-citation analysis was carried out for each publication source (out of the total 634 items) to reveal the network between the studies. For this purpose, VOSviewer software [82] was used. To obtain relevant information and a clear graphic representation of complex relations, the following filters were employed. Filter 1: maximum number of authors per document = 15; Filter 2: minimum number of documents of an author = 3; Filter 3: minimum number of citations of an author = 10. Moreover, the full counting method was applied, meaning that publications with co-authors from multiple countries are counted as a full publication for each of those countries. The co-citation network of the selected sample of scholars using these settings is visualized in Figure 8.

Figure 8.

Co-citation network of the authors that have made significant contributions to the development of this field from 1996 to 2024.

This co-citation network map shows, among other things, scholars that have received the highest number of citations in the last 29 years. Of the 1376 cited authors, 138 meet the above-mentioned criteria. Each scholar from each included publication is represented by a node in this network. The size of each node indicates frequency of citation of the subject’s scholarly works. An edge is drawn between two nodes if the two scholars were cited by a common document. To rank influential scholars in the given domain based on their citation rates, the ten most cited authors were selected. Those ten authors are listed in Table 3.

Table 3.

Most influential authors.
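The co-citation relation behind the network in Figure 8 (an edge between two scholars whenever a common document cites both) can be sketched as a simple counting procedure. The example below is an illustration only and does not reproduce VOSviewer's internal implementation; the function name `cocitation_counts` and the author labels are hypothetical.

```python
from collections import Counter
from itertools import combinations


def cocitation_counts(reference_lists):
    """Count, for each pair of authors, how many documents cite both.

    reference_lists holds one list of cited author names per citing document.
    """
    counts = Counter()
    for cited_authors in reference_lists:
        # Each unordered pair is counted once per citing document.
        for a, b in combinations(sorted(set(cited_authors)), 2):
            counts[(a, b)] += 1
    return counts


# Hypothetical data: three citing documents and the authors they cite.
docs = [
    ["Author A", "Author B", "Author C"],
    ["Author A", "Author C"],
    ["Author B"],
]
edges = cocitation_counts(docs)
```

In this toy data, the pair (Author A, Author C) receives an edge weight of 2, because two documents cite both authors; a document that cites only one author contributes no edge.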

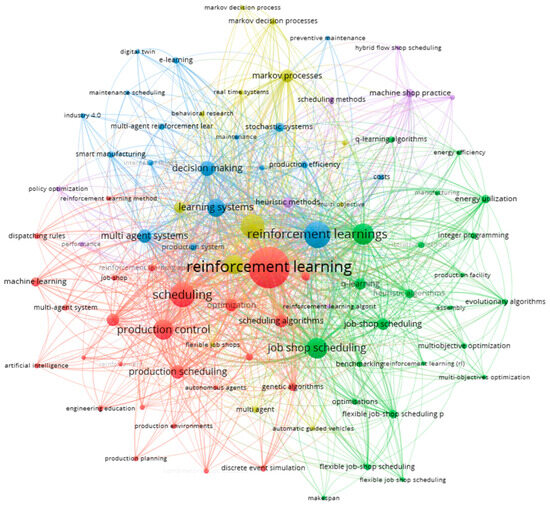

6.4. Identification of the Related Research Areas

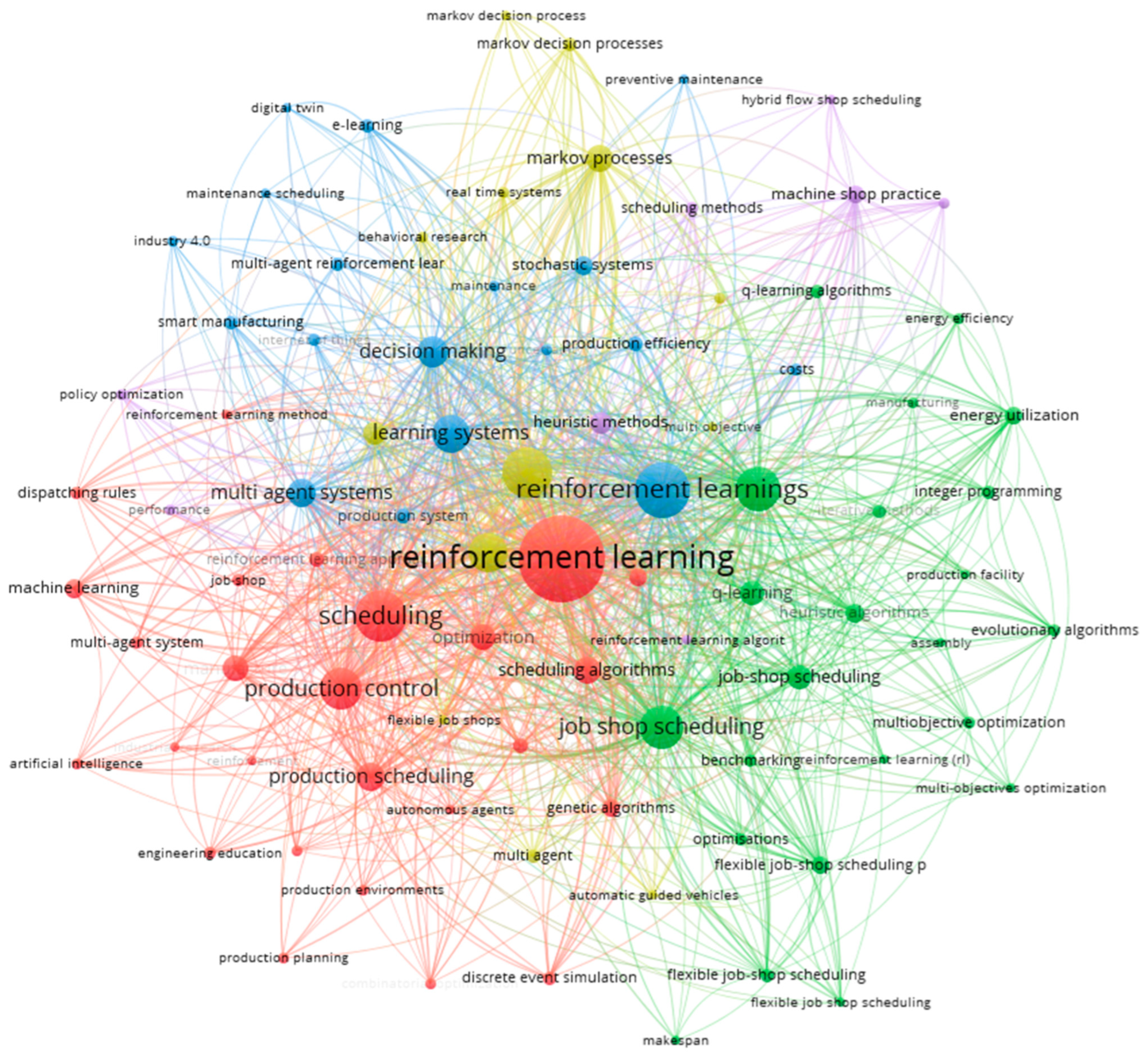

The goal of this part of the article is to identify areas of research in which production scheduling based on RL is of interest. For this purpose, keyword analysis was employed to separate important research themes that have received a high level of interest from researchers from less important ones. To obtain relevant and representative categories and exclude less significant ones, the following setting was used: minimum number of occurrences of a keyword = 14. With this restriction, 88 of the total 3767 keywords meet the threshold. The main keywords, which were produced automatically from the titles of the papers on production scheduling based on RL, are shown along with their occurrences in Figure 9.

Figure 9.

Keyword co-occurrences for the field of RL in production scheduling from 1996 to 2024.
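The keyword thresholding behind Figure 9 can be sketched in a few lines of Python. This is an illustrative approximation rather than the procedure VOSviewer runs internally; the function name, the lower-casing normalization, and the toy keyword lists are assumptions.

```python
from collections import Counter
from itertools import combinations


def keyword_cooccurrence(keyword_lists, min_occurrences=14):
    """Count keyword occurrences, apply the threshold, and count co-occurrences."""
    normalized = [{k.strip().lower() for k in kws} for kws in keyword_lists]

    # Number of documents in which each keyword occurs.
    occurrences = Counter()
    for kws in normalized:
        occurrences.update(kws)

    # Keep only keywords that reach the minimum number of occurrences.
    kept = {k for k, n in occurrences.items() if n >= min_occurrences}

    # A link is counted once per document that contains both keywords.
    links = Counter()
    for kws in normalized:
        links.update(combinations(sorted(kws & kept), 2))
    return occurrences, kept, links


# Hypothetical toy input; a low threshold is used so the example stays small.
toy_keywords = [
    ["Reinforcement Learning", "Scheduling"],
    ["reinforcement learning", "job shop"],
    ["Scheduling", "reinforcement learning "],
]
occurrences, kept, links = keyword_cooccurrence(toy_keywords, min_occurrences=2)
```

With the study's setting, `min_occurrences=14` would be passed instead, and the retained keywords would form the nodes of the co-occurrence map.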

As Figure 9 shows, the topics are divided by the clustering algorithm into five clusters, each displayed in a different color. The keyword co-occurrence map highlights these clusters: the darker the color, the greater the density value. The map makes it possible to identify relevant research topics and their mutual relationships. Based on the bibliometric results extracted from VOSviewer, ten topics closely related to the explored research domain were identified, as shown in Table 4.

Table 4.

Most related methodologies and implementation areas of production scheduling based on RL.

The results in Table 4 are consistent with practical reality and with the goals of operations research. For example, in recent years there has been an evident increase in interest in using reinforcement learning to optimize real-time job scheduling tasks [76,77,78,79,80,81,82,83,84,85,86]. This can be correlated with the continuing trend of mass customization in the production of consumer goods [87,88]. Mass customization is characterized by the need to meet dynamically changing user requirements in time, while customized products must be completed by different deadlines. Accordingly, efficient real-time job-scheduling algorithms based on deep reinforcement learning (DRL) become essential. The next important method ranked among the top 10 co-occurring keywords is production control, which uses different control techniques to meet production targets regarding schedules and quality (see, e.g., [89,90,91,92]). Another important co-occurring keyword in Table 4 is multi-agent systems. In general, incorporating multi-agent systems into reinforcement learning for production scheduling offers numerous advantages in terms of flexibility, scalability, and adaptability [93,94,95]. By enabling decentralized decision-making and continuous learning, these systems can effectively handle the complexities and dynamics of modern production environments, leading to more efficient and resilient scheduling solutions [96]. Among the co-occurring keywords, ‘smart manufacturing’ can also be highlighted; it represents the implementation domain of production scheduling based on RL. Even though smart manufacturing has become something of a buzzword, which has its drawbacks, the concept is gradually being established as the new manufacturing paradigm. On the other hand, the complexity of smart manufacturing network infrastructures keeps growing, and the uncertainty of such manufacturing environments is becoming a serious problem [25]. These facts lead to the necessity of applying advanced dynamic planning solutions, which include production scheduling using RL. This paragraph also answers the main research question formulated in Section 1.

Further, the application of RL in production scheduling is analyzed below through bibliometric means from the viewpoint of different scheduling problems.

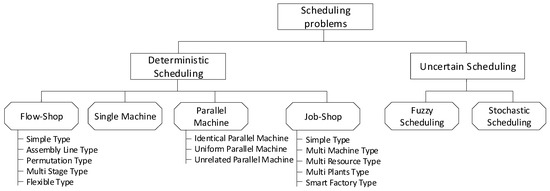

6.5. Comparison of Applications of RL in Different Scheduling Problems

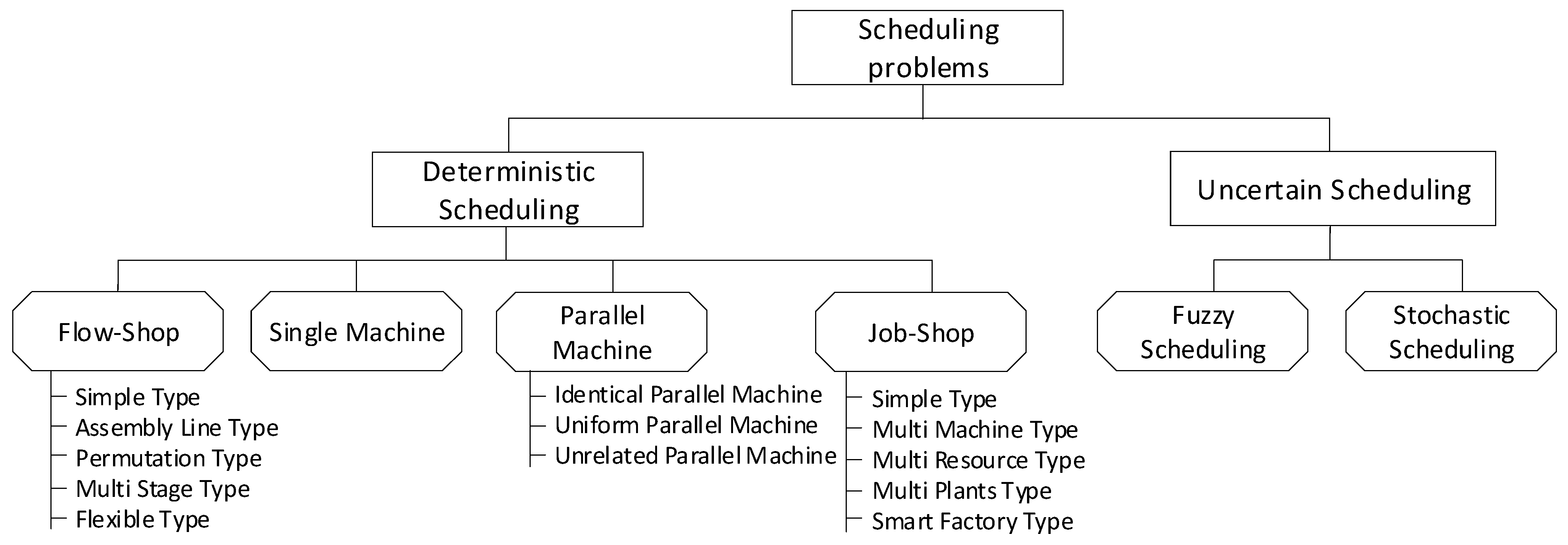

In general, scheduling methods are categorized, based on the time at which decisions are taken, into dynamic and static ones. Dynamic scheduling relates to real-time systems that must respond to changing demand requirements, while static scheduling is performed off-line and focuses on a short-term time horizon by setting a fixed timeline for process completion. Wang et al. [28] found that RL-based production scheduling approaches are largely adopted to solve dynamic scheduling problems. Scheduling problems can also be categorized by the nature of the scheduling environment as deterministic, when processing parameters are known and invariable, or non-deterministic, when input parameters are uncertain [97]. Production scheduling problems classified under this second criterion are shown in Figure 10.

Figure 10.

Basic classification of scheduling problems.

Understanding the differences among specific scheduling problems is crucial for selecting appropriate optimization and scheduling techniques with the aim of improving efficiency and productivity in various industries. The main differences can be seen, e.g., in routing flexibility and complexity. For instance, job-shop problems have high routing flexibility and complexity, flow-shop and single-machine problems typically have low flexibility, and parallel-machine problems have moderate routing flexibility and complexity [98,99]. The problems also differ in the application domains for which they are intended. For example, job-shop scheduling is typical for customized manufacturing [100,101], flow-shop scheduling problems are mostly considered for assembly lines [102,103], and open-shop scheduling is frequently applied in healthcare [104,105].
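The distinctions drawn above can be recorded as a small lookup structure, for instance when screening which problem class fits a given setting. The sketch below encodes only the levels and domains stated in the text; the dictionary and function names are hypothetical, and the open-shop entry has no flexibility level because none is given.

```python
# Routing flexibility and complexity per problem type, as stated in the text.
ROUTING_FLEXIBILITY = {
    "job shop": "high",
    "flow shop": "low",
    "single machine": "low",
    "parallel machine": "moderate",
}

# Typical application domain per problem type, as stated in the text.
TYPICAL_DOMAIN = {
    "job shop": "customized manufacturing",
    "flow shop": "assembly lines",
    "open shop": "healthcare",
}


def problems_with_flexibility(level):
    """Return the problem types with the given routing flexibility level."""
    return sorted(p for p, f in ROUTING_FLEXIBILITY.items() if f == level)


def typical_problem_for(domain):
    """Return the problem type usually associated with a domain, or None."""
    for problem, typical_domain in TYPICAL_DOMAIN.items():
        if typical_domain == domain:
            return problem
    return None


low_flexibility = problems_with_flexibility("low")
healthcare_problem = typical_problem_for("healthcare")
```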

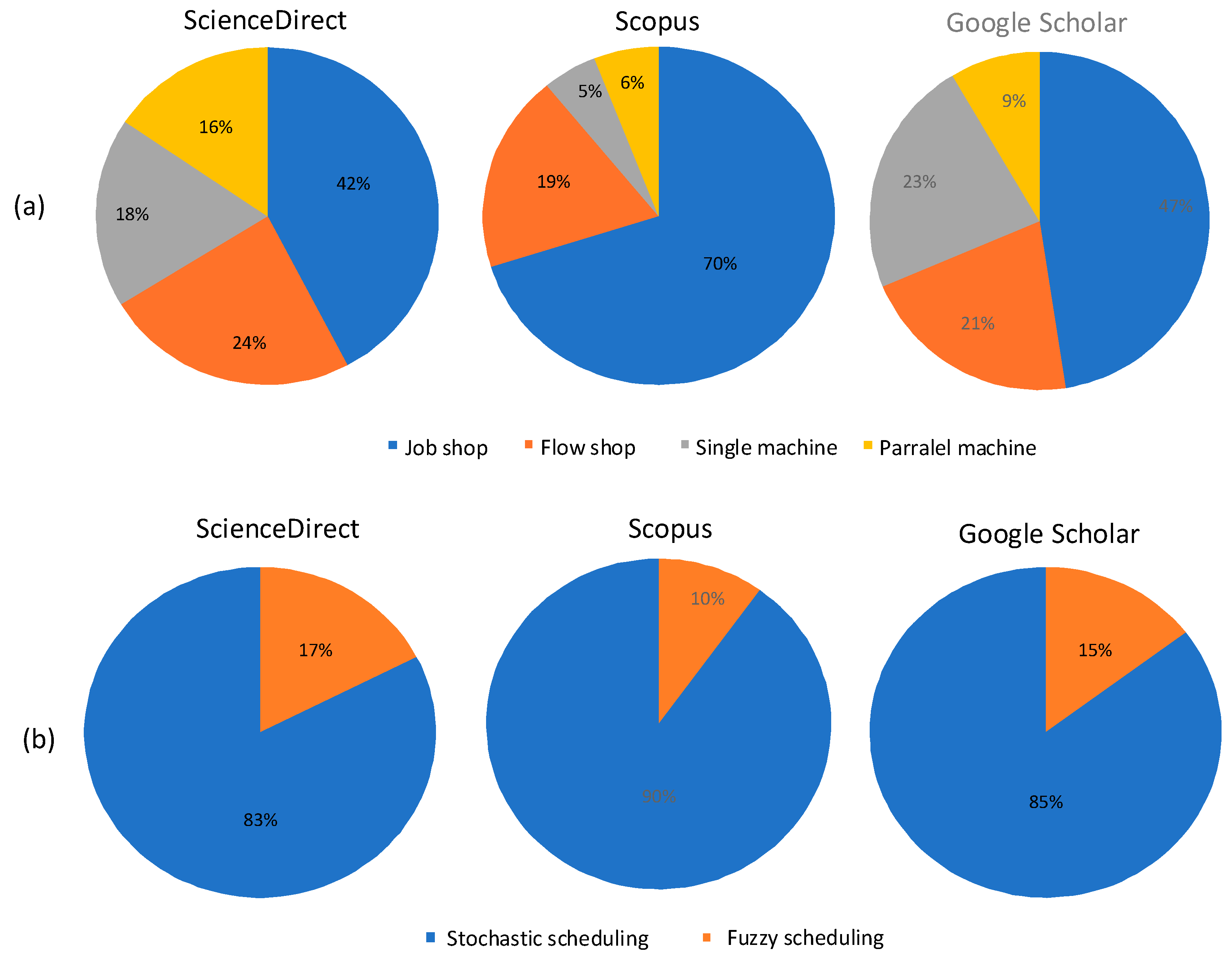

The classification is further used to explore how frequently RL is applied in the identified categories of scheduling problems. First, deterministic and uncertain scheduling are compared from this viewpoint. For this purpose, the search terms were defined by combining the keyword “Reinforcement Learning” with “Deterministic scheduling” or “Uncertain scheduling”, respectively. Data were collated, together with the inclusion criteria, by searching: (i) the ScienceDirect database (All fields, All years, and All document types); (ii) the Scopus database (All fields, All years, and All document types); and (iii) the Google Scholar database (All years and All document types). In total, 734 publications were analyzed. Web of Science was not used in this procedure because of the low occurrence of scientific works on these subjects. The data obtained in this way are provided in Table 5.

Table 5.

Comparison of deterministic and uncertain scheduling methods based on RL.

Table 5 makes clear that deterministic scheduling based on RL dominates over uncertain scheduling using RL. To identify the most promising area(s) of production scheduling in the context of RL, the deterministic and uncertain scheduling approaches are examined in more detail below. The keywords used for that purpose were:

- (a) Scheduling AND “reinforcement learning” AND specific deterministic scheduling problems (“job shop”, “flow shop”, “open shop”, “single machine”, and “parallel machines”).

- (b) “Reinforcement learning” AND specific uncertain scheduling problems (“fuzzy scheduling” and “stochastic scheduling”).

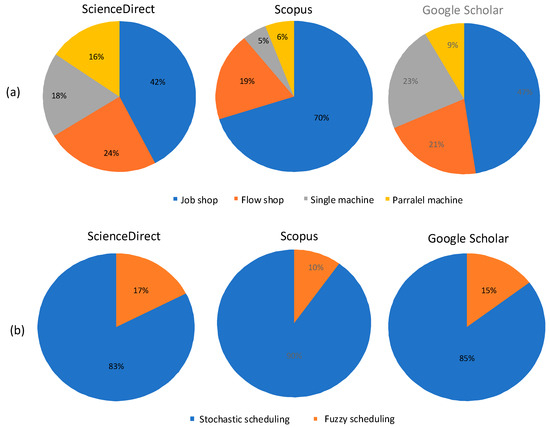

Data were retrieved from the ScienceDirect database using the filters ‘Research articles’ and ‘All years’; from the Scopus database using the filters ‘Article titles’, ‘Abstracts’, ‘Keywords’, All years, and All document types; and from the Google Scholar database using All years and All document types. The results obtained are presented graphically and compared as percentages in Figure 11.

Figure 11.

Comparison of (a) deterministic scheduling methods and (b) uncertain scheduling methods by their occurrences in the literature.
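The way the search strings were combined and the hit counts turned into the percentage shares compared in Figure 11 can be sketched as follows. The function names are hypothetical, the query strings only mirror keyword patterns (a) and (b) rather than any database's exact syntax, and the hit counts are placeholders, not the values found in the study.

```python
def build_query(problem, with_scheduling_term=True):
    """Combine the RL keyword with one scheduling problem using AND."""
    terms = ['"reinforcement learning"', f'"{problem}"']
    if with_scheduling_term:
        terms.insert(0, "Scheduling")  # pattern (a) adds the generic term
    return " AND ".join(terms)


def percentage_shares(hit_counts):
    """Convert raw hit counts into percentage shares of the total."""
    total = sum(hit_counts.values())
    if total == 0:
        raise ValueError("no hits to compare")
    return {name: 100.0 * count / total for name, count in hit_counts.items()}


deterministic = ["job shop", "flow shop", "open shop",
                 "single machine", "parallel machines"]
uncertain = ["fuzzy scheduling", "stochastic scheduling"]

queries_a = [build_query(p) for p in deterministic]
queries_b = [build_query(p, with_scheduling_term=False) for p in uncertain]

# Placeholder counts for illustration only; real counts come from the databases.
placeholder_hits = {"fuzzy scheduling": 1, "stochastic scheduling": 3}
shares = percentage_shares(placeholder_hits)
```

Expressing the results as shares of the total, rather than as raw counts, is what allows hits from databases of very different sizes to be compared in a single chart.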

Figure 11a shows that RL-based scheduling algorithms are mostly used to solve job shop scheduling problems [106], while the flow shop production environment is the second most frequent type of scheduling problem to which RL is applied. RL-based algorithms have also found application in other types of scheduling problems; the most noticeable are energy-efficient scheduling, multi-objective scheduling, and distributed scheduling. The last of these is exploited especially in intelligent manufacturing systems [107]. The results in Figure 11b show that RL is used predominantly in stochastic scheduling methods, compared with its application in fuzzy scheduling methods. Moreover, the effectiveness of stochastic scheduling using RL has also been demonstrated through benchmarking studies [29,108,109]. Although the application of RL in fuzzy scheduling is promising, it remains less common because of the additional complexities involved in integrating fuzzy logic with RL methods [110,111]. The results of this quantitative comparison of RL applications across scheduling problems provide some useful managerial insights into scheduling practice. Nevertheless, further research on the qualitative aspects of RL-based scheduling, particularly in the context of smart manufacturing, is required.

7. Comparison of the Presented Research and Previous Review Articles

As mentioned in the Introduction, this review paper continues the work of two earlier articles [28,29] by analyzing some new relevant aspects of production scheduling methods using RL and by providing updated information on recent developments in the field. The purpose of this section is to give a clear view of the differences and similarities among the three works in terms of their subject matter. Table 6 summarizes the investigation areas covered by these works.

Table 6.

Comparison of the review papers on RL in production scheduling.

From this table, it can be seen that the compared papers are mostly complementary to each other.

8. Conclusions

The existing body of research on production scheduling primarily consists of studies conducted within the domains of Computer Science, Engineering, Mathematics, Decision Sciences, Business, Management and Accounting, Energy, Chemical Engineering, and Materials Science. Of these scientific disciplines, ‘Engineering’ and ‘Computer Science’ in particular have exerted a significant influence on the development of production scheduling based on RL.

Bibliometric findings frequently indicate that an increase in the number of published articles is associated with the recognition of progressive trends in a subject. Partly for this reason, bibliometric analysis is becoming increasingly beneficial in a variety of academic fields, since it makes mapping scientific information and analyzing research development objective and repeatable. This method makes it possible to identify networks of scientific collaboration, to establish connections between novel study themes and research streams, and to show the connections between citations, co-citations, and publication productivity in the field.

The main contributions of this article can be summarized in two points:

- (i) This review brings additional insights into RL in production scheduling through the following new features:

- The citation trend for the field of RL in production scheduling from 1996 to 2024 was mapped (see Figure 5); it shows that the increase that started in 2019 has continued rapidly to the present.

- The most relevant sources were analyzed by their number of citations (see Figure 7) to identify the most impactful sources, where the latest knowledge in this field is available.

- The most influential authors in this domain were identified (see Table 3) to help determine the state of the art in RL-based production scheduling.

- A quantitative comparison of deterministic and uncertain scheduling methods based on RL was conducted (see Table 5). It showed that deterministic scheduling methods using RL dominate over RL-based uncertain scheduling techniques.

- A quantitative comparison of stochastic and fuzzy scheduling methods is given in Figure 11b. It shows that RL is used predominantly in stochastic scheduling methods, compared with its application in fuzzy scheduling methods.

- (ii) The contribution of this paper can also be seen in the following activities and resulting statements:

- The publication trend for the field of RL in production scheduling was updated by mapping the period from 1996 to 2024 (see Figure 5). It showed that the exponential growth in publications that started in 2019 [28] has continued rapidly.

- An updated list of the most relevant sources publishing research on RL in production scheduling was compiled in Figure 6. Five of the ten sources identified up to 2021 remain among the top ten sources identified up to 2024. This suggests that, with the growing interest in this research field, the number of journals covering the topic is also increasing.

- An updated quantitative comparison of the most frequent deterministic scheduling methods based on RL was provided in Figure 11a. It confirmed the previously identified trend that RL-based scheduling algorithms are mostly used to solve job shop scheduling problems, while flow shop production is the second most frequent type of scheduling problem.

- An updated categorization of newly developed RL algorithms was elaborated and presented in Figure 3; it can provide better implementation support for decision making in real-world problems.

- An updated classification of scheduling methods is provided in Figure 10. In principle, it can ease the selection of scheduling techniques for solving specific types of problems.

In addition to the above-mentioned findings, future work should address further challenges, such as manufacturing process planning with integrated support for knowledge sharing, the increasing demand for ubiquitous “smartness” in manufacturing processes, including the design and implementation of smart algorithms, and the need for robust scheduling tools for agile collaborative manufacturing systems.

Author Contributions

Conceptualization, V.M.; methodology, R.S. and A.B.; validation, Z.S.; formal analysis, V.M. and R.S.; investigation, V.M. and A.B.; writing—original draft preparation, A.B. and V.M.; writing—review and editing, R.S. and V.M.; visualization, Z.S.; project administration, V.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research has been funded by the project SME 5.0 with funding received from the European Union’s Horizon research and innovation program under the Marie Skłodowska-Curie Grant Agreement No. 101086487 and the KEGA (Cultural and Education Grant Agency) project No. 044TUKE-4/2023 granted by the Ministry of Education of the Slovak Republic.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Pinedo, M. Planning and Scheduling in Manufacturing and Services; Springer: New York, NY, USA, 2005. [Google Scholar]

- Beheshti, Z.; Shamsuddin, S.M.H. A review of population-based meta-heuristic algorithms. Int. J. Adv. Soft Comput. Appl. 2013, 5, 1–35. [Google Scholar]

- Xhafa, F.; Abraham, A. (Eds.) Metaheuristics for Scheduling in Industrial and Manufacturing Applications; Springer: Berlin/Heidelberg, Germany, 2008; Volume 128. [Google Scholar]

- Abdel-Kader, R.F. Particle swarm optimization for constrained instruction scheduling. VLSI Des. 2008, 2008, 930610. [Google Scholar] [CrossRef]

- Balamurugan, A.; Ranjitharamasamy, S.P. A Modified Heuristics for the Batch Size Optimization with Combined Time in a Mass-Customized Manufacturing System. Int. J. Ind. Eng. Theory Appl. Pract. 2023, 30, 1090–1115. [Google Scholar]

- Ghassemi Tari, F.; Olfat, L. Heuristic rules for tardiness problem in flow shop with intermediate due dates. Int. J. Adv. Manuf. Technol. 2014, 71, 381–393. [Google Scholar] [CrossRef]

- Modrak, V.; Pandian, R.S. Flow shop scheduling algorithm to minimize completion time for n-jobs m-machines problem. Teh. Vjesn. 2010, 17, 273–278. [Google Scholar]

- Thenarasu, M.; Rameshkumar, K.; Rousseau, J.; Anbuudayasankar, S.P. Development and analysis of priority decision rules using MCDM approach for a flexible job shop scheduling: A simulation study. Simul. Model. Pract. Theory 2022, 114, 102416. [Google Scholar] [CrossRef]

- Pandian, S.; Modrak, V. Possibilities, obstacles and challenges of genetic algorithm in manufacturing cell formation. Adv. Logist. Syst. 2009, 3, 63–70. [Google Scholar]

- Abdulredha, M.N.; Bara’a, A.A.; Jabir, A.J. Heuristic and meta-heuristic optimization models for task scheduling in cloud-fog systems: A review. Iraqi J. Electr. Electron. Eng. 2020, 16, 103–112. [Google Scholar] [CrossRef]

- Modrak, V.; Pandian, R.S.; Semanco, P. Calibration of GA parameters for layout design optimization problems using design of experiments. Appl. Sci. 2021, 11, 6940. [Google Scholar] [CrossRef]

- Keshanchi, B.; Souri, A.; Navimipour, N.J. An improved genetic algorithm for task scheduling in the cloud environments using the priority queues: Formal verification, simulation, and statistical testing. J. Syst. Softw. 2017, 124, 1–21. [Google Scholar] [CrossRef]

- Jans, R.; Degraeve, Z. Meta-heuristics for dynamic lot sizing: A review and comparison of solution approaches. Eur. J. Oper. Res. 2007, 177, 1855–1875. [Google Scholar] [CrossRef]

- Han, B.A.; Yang, J.J. A deep reinforcement learning based solution for flexible job shop scheduling problem. Int. J. Simul. Model. 2021, 20, 375–386. [Google Scholar] [CrossRef]

- Shyalika, C.; Silva, T.; Karunananda, A. Reinforcement Learning in Dynamic Task Scheduling: A Review. SN Comput. Sci. 2020, 1, 306. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, L.; Ren, L.; Xie, K.; Wang, K.; Ye, F.; Chen, Z. Brief Review on Applying Reinforcement Learning to Job Shop Scheduling Problems. J. Syst. Simul. 2022, 33, 2782–2791. [Google Scholar]

- Dima, I.C.; Gabrara, J.; Modrak, V.; Piotr, P.; Popescu, C. Using the expert systems in the operational management of production. In Proceedings of the 11th WSEAS International Conference on Mathematics and Computers in Business and Economics (MCBE’10), Iasi, Romania, 13–15 June 2010; WSEAS Press: Stevens Point, WI, USA, 2010. [Google Scholar]

- Waschneck, B.; Reichstaller, A.; Belzner, L.; Altenmüller, T.; Bauernhansl, T.; Knapp, A.; Kyek, A. Optimization of global production scheduling with deep reinforcement learning. Procedia CIRP 2018, 72, 1264–1269. [Google Scholar] [CrossRef]

- Yan, J.; Liu, Z.; Zhang, T.; Zhang, Y. Autonomous decision-making method of transportation process for flexible job shop scheduling problem based on reinforcement learning. In Proceedings of the 2021 International Conference on Machine Learning and Intelligent Systems Engineering, MLISE, Chongqing, China, 9–11 July 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA; pp. 234–238. [Google Scholar]

- Modrak, V.; Pandian, R.S. Operations Management Research and Cellular Manufacturing Systems; IGI Global: Hershey, PA, USA, 2010. [Google Scholar]

- Huang, Z.; Liu, Q.; Zhu, F. Hierarchical reinforcement learning with adaptive scheduling for robot control. Eng. Appl. Artif. Intell. 2023, 126, 107130. [Google Scholar] [CrossRef]

- Arviv, K.; Stern, H.; Edan, Y. Collaborative reinforcement learning for a two-robot job transfer flow-shop scheduling problem. Int. J. Prod. Res. 2016, 54, 1196–1209. [Google Scholar] [CrossRef]

- Wen, X.; Zhang, X.; Xing, H.; Ye, G.; Li, H.; Zhang, Y.; Wang, H. An improved genetic algorithm based on reinforcement learning for aircraft assembly scheduling problem. Comput. Ind. Eng. 2024, 193, 110263. [Google Scholar] [CrossRef]

- Aydin, M.E.; Öztemel, E. Dynamic job-shop scheduling using reinforcement learning agents. Robot. Auton. Syst. 2000, 33, 169–178. [Google Scholar] [CrossRef]

- Qu, S.; Wang, J.; Govil, S.; Leckie, J.O. Optimized Adaptive Scheduling of a Manufacturing Process System with Multi-skill Workforce and Multiple Machine Types: An Ontology-based, Multi-agent Reinforcement Learning Approach. Procedia CIRP 2016, 57, 55–60. [Google Scholar] [CrossRef]

- Luo, S.; Zhang, L.; Fan, Y. Dynamic multi-objective scheduling for flexible job shop by deep reinforcement learning. Comput. Ind. Eng. 2021, 159, 107489. [Google Scholar] [CrossRef]

- Zhou, L.; Zhang, L.; Horn, B.K.P. Deep reinforcement learning-based dynamic scheduling in smart manufacturing. Procedia CIRP 2020, 93, 383–388. [Google Scholar] [CrossRef]

- Wang, L.; Pan, Z.; Wang, J. A Review of Reinforcement Learning Based Intelligent Optimization for Manufacturing Scheduling. Complex Syst. Model. Simul. 2021, 1, 257–270. [Google Scholar] [CrossRef]

- Kayhan, B.M.; Yildiz, G. Reinforcement learning applications to machine scheduling problems: A comprehensive literature review. J. Intell. Manuf. 2021, 34, 905–929. [Google Scholar] [CrossRef]

- Broadus, R.N. Toward a Definition of “Bibliometrics”. Scientometrics 1987, 12, 373–379. [Google Scholar] [CrossRef]

- Arunmozhi, B.; Sudhakarapandian, R.; Sultan Batcha, Y.; Rajay Vedaraj, I.S. An inferential analysis of stainless steel in additive manufacturing using bibliometric indicators. Mater. Today Proc. 2023, in press. [CrossRef]

- Randhawa, K.; Wilden, R.; Hohberger, J. A bibliometric review of open innovation: Setting a research agenda. J. Prod. Innov. Manag. 2016, 33, 750–772. [Google Scholar] [CrossRef]

- van Raan, A. Advanced bibliometric methods as quantitative core of peer review based evaluation and foresight exercises. Scientometrics 1996, 36, 397–420. [Google Scholar] [CrossRef]

- Brandom, R.B. Articulating Reasons: An Introduction to Inferentialism; Harvard University Press: Cambridge, MA, USA, 2001. [Google Scholar]

- Kothari, C.R. Research Methodology: Methods and Techniques; New Age International: New Delhi, India, 2004. [Google Scholar]

- Suárez, M. An inferential conception of scientific representation. Philos. Sci. 2004, 71, 767–779. [Google Scholar] [CrossRef]

- Contessa, G. Scientific representation, interpretation, and surrogative reasoning. Philos. Sci. 2007, 74, 48–68. [Google Scholar] [CrossRef]

- Govier, T. Problems in Argument Analysis and Evaluation; University of Windsor: Windsor, ON, Canada, 2018; Volume 6. [Google Scholar]

- Munusamy, R.; Mukherjee, A.; Vasudevan, K.; Venkateswaran, B. Design and Simulation of an Artificial intelligence (AI) Brain for a 2D Vehicle Navigation System. INCAS Bull. 2022, 14, 53–64. [Google Scholar] [CrossRef]

- Dunjko, V.; Briegel, H.J. Machine learning & artificial intelligence in the quantum domain: A review of recent progress. Rep. Prog. Phys. 2018, 81, 074001. [Google Scholar]

- Horvitz, E.; Mulligan, D. Data, privacy, and the greater good. Science 2015, 349, 253–255. [Google Scholar] [CrossRef]

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction; MIT Press: Cambridge, MA, USA, 2018. [Google Scholar]

- Kuhnle, A.; May, M.C.; Schäfer, L.; Lanza, G. Explainable reinforcement learning in production control of job shop manufacturing system. Int. J. Prod. Res. 2022, 60, 5812–5834. [Google Scholar] [CrossRef]

- Esteso, A.; Peidro, D.; Mula, J.; Díaz-Madroñero, M. Reinforcement learning applied to production planning and control. Int. J. Prod. Res. 2023, 61, 5772–5789. [Google Scholar] [CrossRef]

- Khan, M.A.M.; Khan, M.R.J.; Tooshil, A.; Sikder, N.; Mahmud, M.P.; Kouzani, A.Z.; Nahid, A.A. A systematic review on reinforcement learning-based robotics within the last decade. IEEE Access 2020, 8, 176598–176623. [Google Scholar] [CrossRef]

- AlMahamid, F.; Grolinger, K. Reinforcement learning algorithms: An overview and classification. In Proceedings of the 2021 IEEE Canadian Conference on Electrical and Computer Engineering (CCECE), Kingston, ON, Canada, 12–17 September 2021; IEEE: Piscataway, NJ, USA; pp. 1–7. [Google Scholar]

- Akalin, N.; Loutfi, A. Reinforcement learning approaches in social robotics. Sensors 2021, 21, 1292. [Google Scholar] [CrossRef]

- Zhang, H.; Yu, T. Taxonomy of reinforcement learning algorithms. In Deep Reinforcement Learning: Fundamentals, Research and Applications; Springer: Singapore, 2020; pp. 125–133. [Google Scholar]

- Baker, K.R.; Trietsch, D. Principles of Sequencing and Scheduling; John Wiley & Sons: Hoboken, NJ, USA, 2009. [Google Scholar]

- Pinedo, M.L. Scheduling: Theory, Algorithms, and Systems; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Allahverdi, A.; Ng, C.T.; Cheng, T.C.E.; Kovalyov, M.Y. A survey of scheduling problems with setup times or costs. Eur. J. Oper. Res. 2008, 187, 985–1032. [Google Scholar] [CrossRef]

- Panwalkar, S.S.; Smith, M.L. Survey of flow shop scheduling research. Oper. Res. 1977, 25, 45–84. [Google Scholar] [CrossRef]

- Paraschos, P.D.; Koulinas, G.K.; Koulouriotis, D.E. Reinforcement Learning-Based Optimization for Sustainable and Lean Production within the Context of Industry 4.0. Algorithms 2024, 17, 98. [Google Scholar] [CrossRef]

- Wang, S.; Li, J.; Luo, Y. Smart Scheduling for Flexible and Hybrid Production with Multi-Agent Deep Reinforcement Learning. In Proceedings of the 2021 IEEE 2nd International Conference on Information Technology, Big Data and Artificial Intelligence, ICIBA, Chongqing, China, 17–19 December 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA; pp. 288–294. [Google Scholar]

- Tang, J.; Haddad, Y.; Salonitis, K. Reconfigurable manufacturing system scheduling: A deep reinforcement learning approach. Procedia CIRP 2022, 107, 1198–1203. [Google Scholar] [CrossRef]

- Shahrabi, J.; Adibi, M.A.; Mahootchi, M. A reinforcement learning approach to parameter estimation in dynamic job shop scheduling. Comput. Ind. Eng. 2017, 110, 75–82. [Google Scholar] [CrossRef]

- Yang, J.; You, X.; Wu, G.; Hassan, M.M.; Almogren, A.; Guna, J. Application of reinforcement learning in UAV cluster task scheduling. Future Gener. Comput. Syst. 2019, 95, 140–148. [Google Scholar] [CrossRef]

- Yuan, X.; Pan, Y.; Yang, J.; Wang, W.; Huang, Z. Study on the application of reinforcement learning in the operation optimization of HVAC system. In Building Simulation; Tsinghua University Press: Beijing, China, 2021; Volume 14, pp. 75–87. [Google Scholar]

- Kurinov, I.; Orzechowski, G.; Hämäläinen, P.; Mikkola, A. Automated excavator based on reinforcement learning and multibody system dynamics. IEEE Access 2020, 8, 213998–214006. [Google Scholar] [CrossRef]

- Popper, J.; Motsch, W.; David, A.; Petzsche, T.; Ruskowski, M. Utilizing multi-agent deep reinforcement learning for flexible job shop scheduling under sustainable viewpoints. In Proceedings of the International Conference on Electrical, Computer, Communications and Mechatronics Engineering 2021, ICECCME, Mauritius, Mauritius, 7–8 October 2021; Institute of Electrical and Electronics Engineers Inc.: Piscataway, NJ, USA. [Google Scholar]

- Xiong, H.; Fan, H.; Jiang, G.; Li, G. A simulation-based study of dispatching rules in a dynamic job shop scheduling problem with batch release and extended technical precedence constraints. Eur. J. Oper. Res. 2017, 257, 13–24. [Google Scholar] [CrossRef]

- Palacio, J.C.; Jiménez, Y.M.; Schietgat, L.; Van Doninck, B.; Nowé, A. A Q-Learning algorithm for flexible job shop scheduling in a real-world manufacturing scenario. Procedia CIRP 2022, 106, 227–232. [Google Scholar] [CrossRef]

- Chang, J.; Yu, D.; Hu, Y.; He, W.; Yu, H. Deep Reinforcement Learning for Dynamic Flexible Job Shop Scheduling with Random Job Arrival. Processes 2022, 10, 760. [Google Scholar] [CrossRef]

- Liu, R.; Piplani, R.; Toro, C. Deep reinforcement learning for dynamic scheduling of a flexible job shop. Int. J. Prod. Res. 2022, 60, 4049–4069. [Google Scholar] [CrossRef]

- Samsonov, V.; Kemmerling, M.; Paegert, M.; Lütticke, D.; Sauermann, F.; Gützlaff, A.; Schuh, G.; Meisen, T. Manufacturing control in job shop environments with reinforcement learning. In Proceedings of the 13th International Conference on Agents and Artificial Intelligence (ICAART 2021), Online, 4–6 February 2021; SciTePress: Setúbal, Portugal; pp. 589–597. [Google Scholar]

- Cunha, B.; Madureira, A.M.; Fonseca, B.; Coelho, D. Deep reinforcement learning as a job shop scheduling solver: A literature review. In Proceedings of the 18th International Conference on Hybrid Intelligent Systems (HIS 2018), Porto, Portugal, 13–15 December 2018; Madureira, A.M., Abraham, A., Gandhi, N., Varela, M.L., Eds.; Springer International Publishing: Cham, Switzerland, 2018. [Google Scholar]

- Wang, X.; Zhang, L.; Lin, T.; Zhao, C.; Wang, K.; Chen, Z. Solving job scheduling problems in a resource preemption environment with multi-agent reinforcement learning. Robot. Comput. Integr. Manuf. 2022, 77, 102324. [Google Scholar] [CrossRef]

- Oh, S.H.; Cho, Y.I.; Woo, J.H. Distributional reinforcement learning with the independent learners for flexible job shop scheduling problem with high variability. J. Comput. Des. Eng. 2022, 9, 1157–1174. [Google Scholar] [CrossRef]

- Zhang, Y.; Zhu, H.; Tang, D.; Zhou, T.; Gui, Y. Dynamic job shop scheduling based on deep reinforcement learning for multi-agent manufacturing systems. Robot. Comput. Integr. Manuf. 2022, 78, 102412. [Google Scholar] [CrossRef]

- Liang, Y.; Sun, Z.; Song, T.; Chou, Q.; Fan, W.; Fan, J.; Rui, Y.; Zhou, Q.; Bai, J.; Yang, C.; et al. Lenovo Schedules Laptop Manufacturing Using Deep Reinforcement Learning. Interfaces 2022, 52, 56–68. [Google Scholar] [CrossRef]

- Chen, Y.; Guo, W.; Liu, J.; Shen, S.; Lin, J.; Cui, D. A multi-setpoint cooling control approach for air-cooled data centers using the deep Q-network algorithm. Meas. Control 2024, 57, 782–793. [Google Scholar] [CrossRef]

- Théate, T.; Ernst, D. An application of deep reinforcement learning to algorithmic trading. Expert Syst. Appl. 2021, 173, 114632.

- Sanaye, S.; Sarrafi, A. A novel energy management method based on Deep Q Network algorithm for low operating cost of an integrated hybrid system. Energy Rep. 2021, 7, 2647–2663.

- Luo, S. Dynamic scheduling for flexible job shop with new job insertions by deep reinforcement learning. Appl. Soft Comput. J. 2020, 91, 106208.

- Luo, S.; Zhang, L.; Fan, Y. Real-Time Scheduling for Dynamic Partial-No-Wait Multiobjective Flexible Job Shop by Deep Reinforcement Learning. IEEE Trans. Autom. Sci. Eng. 2022, 19, 3020–3038.

- Hu, J.; Wang, H.; Tang, H.-K.; Kanazawa, T.; Gupta, C.; Farahat, A. Knowledge-enhanced reinforcement learning for multi-machine integrated production and maintenance scheduling. Comput. Ind. Eng. 2023, 185, 109631.

- Du, Y.; Li, J.Q.; Chen, X.L.; Duan, P.Y.; Pan, Q.K. Knowledge-Based Reinforcement Learning and Estimation of Distribution Algorithm for Flexible Job Shop Scheduling Problem. IEEE Trans. Emerg. Top. Comput. Intell. 2023, 7, 1036–1050.

- Li, Y.; Gu, W.; Yuan, M.; Tang, Y. Real-time data-driven dynamic scheduling for flexible job shop with insufficient transportation resources using hybrid deep Q network. Robot. Comput. Integr. Manuf. 2022, 74, 102283.

- Zhou, T.; Zhu, H.; Tang, D.; Liu, C.; Cai, Q.; Shi, W.; Gui, Y. Reinforcement learning for online optimization of job-shop scheduling in a smart manufacturing factory. Adv. Mech. Eng. 2022, 14, 16878132221086120.

- Wang, L.; Hu, X.; Wang, Y.; Xu, S.; Ma, S.; Yang, K.; Wang, W. Dynamic job-shop scheduling in smart manufacturing using deep reinforcement learning. Comput. Netw. 2021, 190, 107969.

- Wang, Y.; Usher, J.M. Application of reinforcement learning for agent-based production scheduling. Eng. Appl. Artif. Intell. 2005, 18, 73–82.

- Cancino, C.A.; Merigó, J.M.; Coronado, F.C. A bibliometric analysis of leading universities in innovation research. J. Innov. Knowl. 2017, 2, 106–124.

- Varin, C.; Cattelan, M.; Firth, D. Statistical modelling of citation exchange between statistics journals. J. R. Stat. Soc. Ser. A Stat. Soc. 2016, 179, 1–63.

- Moral-Muñoz, J.A.; Herrera-Viedma, E.; Santisteban-Espejo, A.; Cobo, M.J. Software tools for conducting bibliometric analysis in science: An up-to-date review. Prof. De La Inf./Inf. Prof. 2020, 29, e290103.

- Curry, S. Let’s move beyond the rhetoric: It’s time to change how we judge research. Nature 2018, 554, 147–148.

- Al-Hoorie, A.; Vitta, J.P. The seven sins of L2 research: A review of 30 journals’ statistical quality and their CiteScore, SJR, SNIP, JCR Impact Factors. Lang. Teach. Res. 2019, 23, 727–744.

- Waltman, L.; Van Eck, N.J.; Noyons, E.C.M. A Unified Approach to Mapping and Clustering of Bibliometric Networks. J. Informetr. 2010, 4, 629–635.

- Cheng, F.; Huang, Y.; Tanpure, B.; Sawalani, P.; Cheng, L.; Liu, C. Cost-aware job scheduling for cloud instances using deep reinforcement learning. Clust. Comput. 2022, 25, 619–631.

- Thaipisutikul, T.; Chen, Y.-C.; Hui, L.; Chen, S.-C.; Mongkolwat, P.; Shih, T.K. The matter of deep reinforcement learning towards practical AI applications. In Proceedings of the 12th International Conference on Ubi-Media Computing, Bali, Indonesia, 5–8 August 2019; pp. 24–29.

- Yan, J.; Huang, Y.; Gupta, A.; Gupta, A.; Liu, C.; Li, J.; Cheng, L. Energy-aware systems for real-time job scheduling in cloud data centers: A deep reinforcement learning approach. Comput. Electr. Eng. 2022, 99, 107688.

- Piller, F.T. Mass customization: Reflections on the state of the concept. Int. J. Flex. Manuf. Syst. 2004, 16, 313–334.

- Suzić, N.; Forza, C.; Trentin, A.; Anišić, Z. Implementation guidelines for mass customization: Current characteristics and suggestions for improvement. Prod. Plan. Control 2018, 29, 856–871.

- Altenmüller, T.; Stüker, T.; Waschneck, B.; Kuhnle, A.; Lanza, G. Reinforcement learning for an intelligent and autonomous production control of complex job-shops under time constraints. Prod. Eng. 2020, 14, 319–328.

- Zhao, Y.; Zhang, H. Application of machine learning and rule scheduling in a job-shop production control system. Int. J. Simul. Model. 2021, 20, 410–421.

- Kuhnle, A.; Kaiser, J.P.; Theiß, F.; Stricker, N.; Lanza, G. Designing an adaptive production control system using reinforcement learning. J. Intell. Manuf. 2021, 32, 855–876.

- Panzer, M.; Bender, B.; Gronau, N. Deep reinforcement learning in production planning and control: A systematic literature review. In Proceedings of the Conference on Production Systems and Logistics, Online, 10–11 August 2021.

- Wojakowski, P.; Warżołek, D. The classification of scheduling problems under production uncertainty. Res. Logist. Prod. 2014, 4, 245–256.

- Blackstone, J.H.; Phillips, D.T.; Hogg, G.L. A state-of-the-art survey of dispatching rules for manufacturing job shop operations. Int. J. Prod. Res. 1982, 20, 27–45.

- Blazewicz, J.; Ecker, K.H.; Pesch, E.; Schmidt, G.; Weglarz, J. Handbook on Scheduling: From Theory to Applications; Springer: Berlin/Heidelberg, Germany, 2007.

- Ivanov, D.; Dolgui, A.; Sokolov, B. A dynamic approach to multi-stage job shop scheduling in an industry 4.0-based flexible assembly system. In Advances in Production Management Systems. The Path to Intelligent, Collaborative and Sustainable Manufacturing: IFIP WG 5.7 International Conference, APMS 2017, Hamburg, Germany, 3–7 September 2017, Proceedings, Part I; Springer International Publishing: Cham, Switzerland, 2017; pp. 475–482.

- Modrak, V. (Ed.) Mass Customized Manufacturing: Theoretical Concepts and Practical Approaches; CRC Press: Boca Raton, FL, USA, 2017.

- Komaki, G.M.; Sheikh, S.; Malakooti, B. Flow shop scheduling problems with assembly operations: A review and new trends. Int. J. Prod. Res. 2019, 57, 2926–2955.

- Yang, Y.; Li, X. A knowledge-driven constructive heuristic algorithm for the distributed assembly blocking flow shop scheduling problem. Expert Syst. Appl. 2022, 202, 117269.

- Nasiri, M.M.; Yazdanparast, R.; Jolai, F. A simulation optimisation approach for real-time scheduling in an open shop environment using a composite dispatching rule. Int. J. Comput. Integr. Manuf. 2017, 30, 1239–1252.

- Abdelmaguid, T.F. Bi-objective dynamic multiprocessor open shop scheduling for maintenance and healthcare diagnostics. Expert Syst. Appl. 2021, 186, 115777.

- Tremblet, D.; Thevenin, S.; Dolgui, A. Makespan estimation in a flexible job-shop scheduling environment using machine learning. Int. J. Prod. Res. 2024, 62, 3654–3670.

- Fu, Y.; Hou, Y.; Wang, Z.; Wu, X.; Gao, K.; Wang, L. Distributed scheduling problems in intelligent manufacturing systems. Tsinghua Sci. Technol. 2021, 26, 625–645.

- Zhang, Y.; Zhang, X.; Ding, Y. A Reinforcement Learning-Based Approach to Stochastic Job Shop Scheduling. IEEE Trans. Autom. Sci. Eng. 2020, 17, 72–83.

- Rinciog, A.; Meyer, A. Towards standardizing reinforcement learning approaches for stochastic production scheduling. arXiv 2021, arXiv:2104.08196.

- Zeng, B.; Yang, Y. A Hybrid Reinforcement Learning and Fuzzy Logic Approach for Job Shop Scheduling. J. Intell. Manuf. 2017, 28, 1189–1201.

- Zhang, G.; Huang, Y. Fuzzy reinforcement learning for multi-objective dynamic scheduling of a flexible manufacturing system. J. Intell. Manuf. 2005, 16, 293–304.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).