Abstract

Traffic prediction is crucial for transportation management and user convenience. With the rapid development of deep learning techniques, numerous models have emerged for traffic prediction. Recurrent Neural Networks (RNNs) are extensively utilized as representative predictive models in this domain. This paper comprehensively reviews RNN applications in traffic prediction, focusing on their significance and challenges. The review begins by discussing the evolution of traffic prediction methods and summarizing state-of-the-art techniques. It then delves into the unique characteristics of traffic data, outlines common forms of input representations in traffic prediction, and generalizes an abstract description of traffic prediction problems. Then, the paper systematically categorizes models based on RNN structures designed for traffic prediction. Moreover, it provides a comprehensive overview of seven sub-categories of applications of deep learning models based on RNN in traffic prediction. Finally, the review compares RNNs with other state-of-the-art methods and highlights the challenges RNNs face in traffic prediction. This review is expected to offer significant reference value for comprehensively understanding the various applications of RNNs and common state-of-the-art models in traffic prediction. By discussing the strengths and weaknesses of these models and proposing strategies to address the challenges faced by RNNs, it aims to provide scholars with insights for designing better traffic prediction models.

1. Introduction

Traffic prediction plays a crucial role in intelligent urban management and planning. Research on traffic prediction, encompassing various aspects such as traffic flow on road networks, passenger flow on public transit networks, Origin–Destination (OD) demand prediction, traffic speed prediction, travel time prediction, and traffic congestion prediction, holds significant importance for both users and managers of transportation systems. Accurate traffic prediction enables users to plan their routes more effectively, whether driving on roads or using public transit. By avoiding congested areas and selecting the most efficient paths, users can reduce travel time and improve their overall commuting experience. Additionally, predicting traffic conditions allows users to anticipate potential hazards, accidents, or delays along their route, enabling them to make safer decisions while navigating roads or utilizing public transportation services. Moreover, real-time access to traffic predictions empowers users to make informed decisions about when to travel, which routes to take, or whether to switch to alternative modes of transportation. This leads to increased convenience and flexibility in managing their daily commutes. For urban planners and traffic managers, traffic prediction research helps optimize the performance of road and transit networks by identifying congestion-prone areas and implementing appropriate traffic management strategies. This includes adjusting traffic signal timings, optimizing public transit schedules, and coordinating infrastructure improvements to enhance network efficiency. Additionally, accurate traffic predictions enable managers to allocate resources more effectively, such as deploying additional transit services, adjusting route schedules, or prioritizing maintenance activities. This ensures that resources are utilized efficiently to meet the demands of travelers and minimize disruptions.
Moreover, insights derived from traffic prediction research inform strategic decision-making processes related to urban planning, infrastructure development, and transportation policy formulation. By understanding future traffic patterns and demands, decision makers can make informed choices to support sustainable and resilient transportation systems. In summary, research on traffic prediction plays a critical role in optimizing travel efficiency, safety, and convenience for users while assisting transportation managers in effectively managing and improving the performance of transportation networks.

Traditional traffic prediction methods, such as those based on Historical Averages (HAs), time series analysis, and autoregressive models, while providing initial traffic prediction to some extent, often fail to fully capture traffic data’s complexity and dynamic changes. These methods typically assume that traffic variations are linear or follow simple patterns, making it challenging to handle non-linear relationships, the impact of sudden events, and seasonal or periodic changes in traffic data. Additionally, they exhibit low efficiency when dealing with large-scale, high-dimensional data, making it challenging to respond to real-time traffic changes. In recent years, the rapid development of deep learning models has dramatically promoted research and applications in traffic prediction. Deep learning models such as Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), Graph Neural Networks (GNNs), and transformers have provided new solutions for traffic prediction with their outstanding data processing capabilities and ability to model complex temporal and spatial relationships. These models can automatically learn traffic flow patterns, passenger flow, speed, and congestion changes from massive historical traffic data, achieving high-accuracy predictions of future traffic conditions.

Given the impact of deep learning on traffic prediction, the significance of RNNs and their variants, such as long short-term memory (LSTM) networks and Gated Recurrent Unit (GRU) networks, stands out prominently. The design philosophy of RNNs enables them to naturally handle time series data, which is crucial for traffic prediction because traffic features, like flow and speed, vary over time. By capturing long-term dependencies in time series, RNNs can accurately forecast future changes in traffic conditions. In particular, variants of RNNs, like LSTMs and GRUs, use specialized gating mechanisms to address the vanishing or exploding gradients that traditional RNNs face when dealing with long sequences. This further enhances prediction accuracy and stability.

As neural network models for traffic prediction become increasingly popular, the diversification of deep learning models complicates the assessment of the current state and future directions of this research field. Despite the emergence of advanced models, like transformers, which have demonstrated superior performance in traffic prediction, reviewing the applications of RNNs in this field remains essential. Recent developments, such as the introduction of Test-Time Training (TTT) [1] and the Extended LSTM (xLSTM) model by one of the original authors of LSTM in 2024 [2], have reinvigorated interest in RNNs, showcasing their exceptional predictive capabilities and highlighting a resurgence in their relevance. RNNs offer a critical baseline for evaluating newer architectures, and understanding their capabilities and limitations provides valuable insights into model design and optimization, since RNNs can still be advantageous in specific contexts.

The scarcity of specialized studies on RNN models further complicates understanding their application in traffic prediction. This review addresses these challenges by providing a comprehensive overview, targeting professionals interested in applying RNNs for traffic prediction. Starting with the definition of the problem and a brief history of traffic prediction, it then delves into RNNs within traffic prediction research. It discusses the comparison between RNNs and other state-of-the-art methods and the challenges RNNs face in traffic prediction.

The insights that can be extracted from this paper are as follows:

- The unique characteristics of traffic data and common input data representations in traffic prediction are summarized.

- The statement of traffic prediction problems is generalized.

- A comprehensive overview of seven sub-categories of applications of current deep learning models based on the structures of RNNs in traffic prediction is conducted.

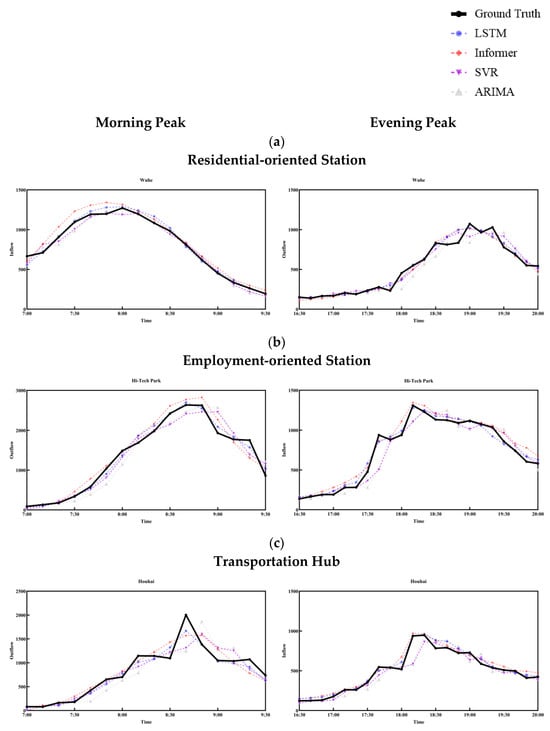

- A detailed comparison between RNNs and other state-of-the-art deep learning models is conducted. In addition to the comparison of RNNs with other state-of-the-art models on public datasets, such as the Performance Measurement System (PeMS, http://pems.dot.ca.gov/ (accessed on 2 March 2024)), we design a comparative study focused on short-term passenger flow prediction using a real-world metro smart card dataset. This study allows us to directly compare the predictive performance of RNNs with other models in a practical, real-world context.

- Transformers excel with long sequences and complex patterns, but RNNs can outperform them on shorter sequences and smaller datasets. The metro data used in our comparative study favored LSTM, showing that simpler models can sometimes provide more accurate and efficient predictions. Choosing the right model based on the dataset and available resources is crucial.

- The future challenges facing RNNs in traffic prediction and how to deal with these challenges are discussed.

The paper is structured as follows.

In Section 2, we review the development history of the traffic prediction field over the past six decades and describe various data-driven prediction methods. We categorize prediction methods into three major classes: statistical methods, traditional machine learning, and deep learning. With the enhancement of computational resources and advancement in data acquisition techniques, deep learning has gradually become mainstream due to its outstanding performance in prediction accuracy.

In Section 3, we introduce traffic data and its unique characteristics, summarize the abstract description of traffic prediction problems, and then discuss the impact of input data representation on RNNs and their variants for traffic prediction. This includes the form of input sequences (including time series, matrix/grid-based sequences, and graph-based sequences) and sliding window techniques. This section emphasizes the importance of accurately representing input data to improve the prediction accuracy of RNN models. It discusses the performance differences when handling different data representations, ranging from simple RNNs to complex models combining CNNs and GNNs.

In Section 4, we delve into RNNs and their variants, sequentially introducing classical methods and hybrid models (such as RNNs combined with machine learning techniques, CNNs, GNNs, and attention mechanisms). This section showcases RNNs’ advantages in capturing temporal dependencies and methods for overcoming limitations and enhancing prediction performance through integration with other technologies.

In Section 5, we delve into the application of RNNs and their variants in traffic prediction, covering seven aspects as follows: traffic flow prediction, passenger flow prediction, OD (Origin–Destination) demand prediction, traffic speed prediction, travel time prediction, traffic accidents and congestion prediction, and occupancy prediction. This section provides a detailed exposition of the performance of relevant models in various domains.

In Section 6, we discuss the comparison between RNNs and the most popular models, i.e., transformer series models, as well as other classical prediction models, such as convolutional-based time series prediction models. This section also explores the challenges faced, such as model interpretability, accuracy in long-term prediction, the lack of standardized benchmark datasets, issues with small sample sizes and missing data, and the challenge of integrating heterogeneous data from multiple sources.

2. Development History of Traffic Prediction

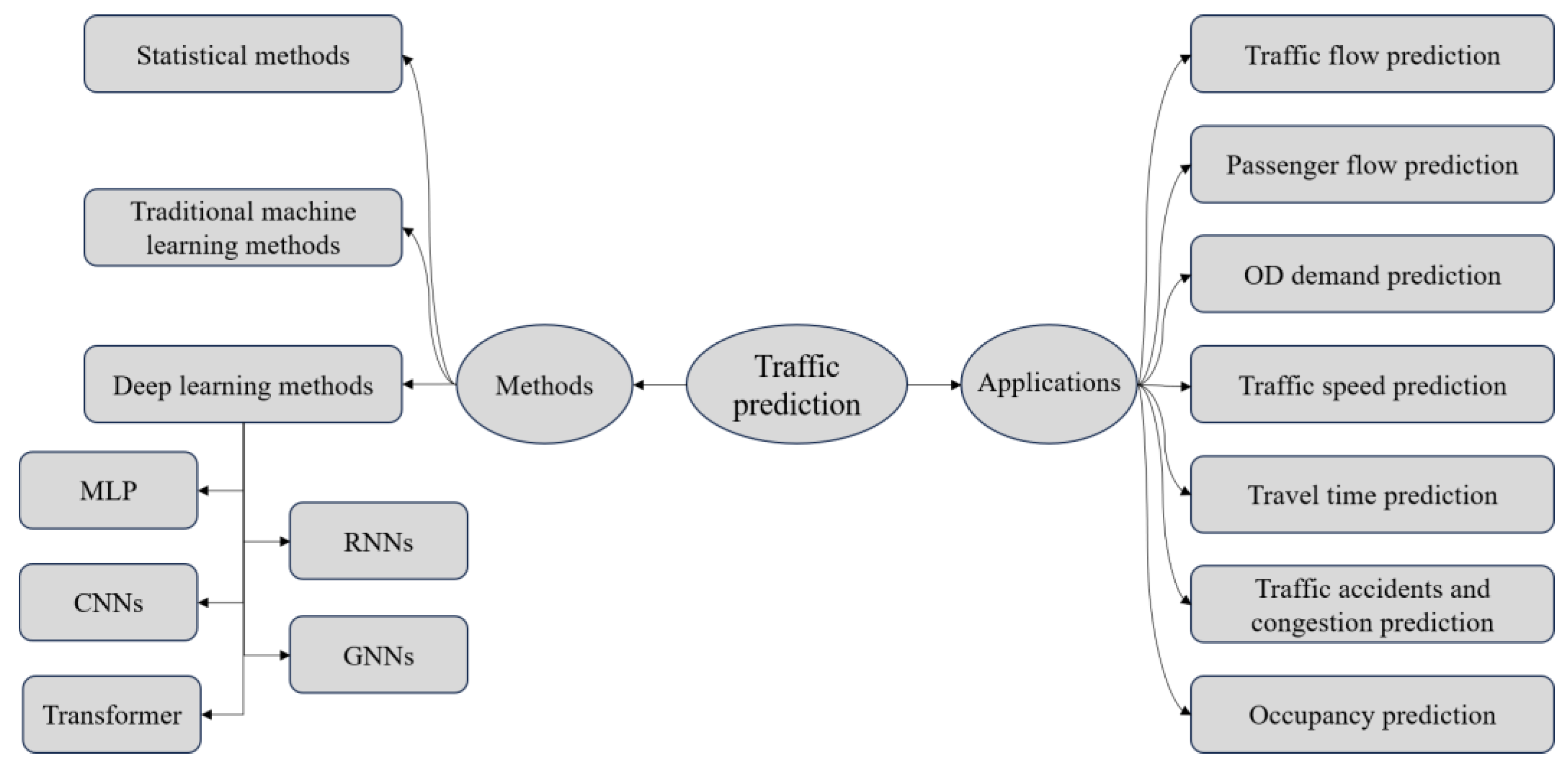

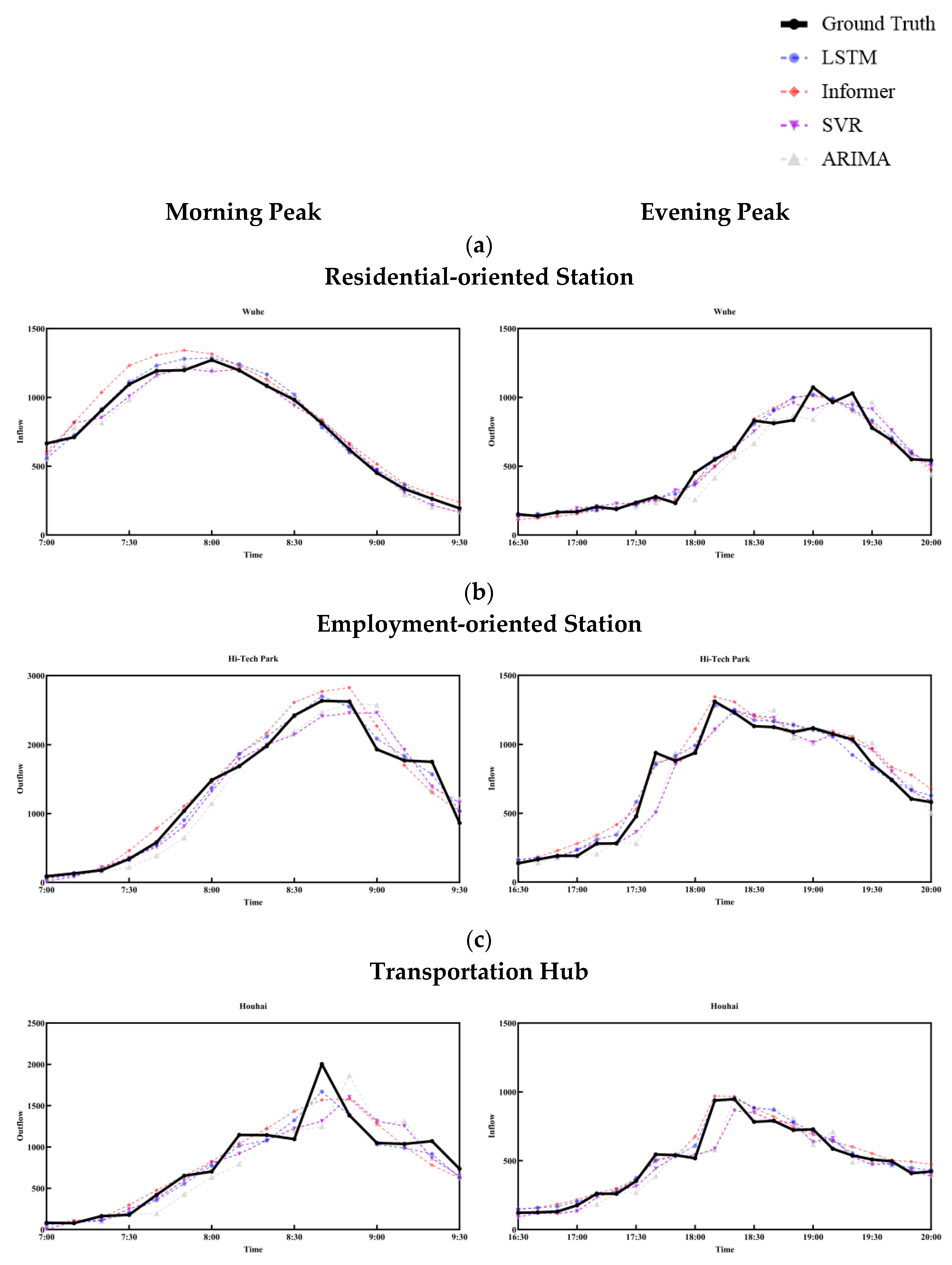

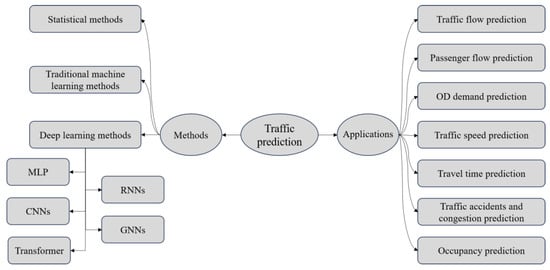

In this section, we conduct a thorough review and survey of significant research on traffic prediction throughout its development history. The field of traffic prediction has evolved over nearly six decades, during which various prediction methods have emerged. Traffic prediction is a multidimensional research field encompassing a diverse classification of methods and applications (Figure 1). Methodologically, traffic prediction is divided into three major classes: statistical methods, traditional machine learning methods, and deep learning methods. Moreover, traffic prediction involves multiple sub-areas of applications, such as traffic flow prediction, passenger flow prediction, traffic speed prediction, and travel time prediction. These sub-areas will be explored in detail in Section 5. This section will systematically review traffic prediction’s historical development and technological progress, following the aforementioned methodological classification. Statistical methods are particularly suitable for handling smaller datasets due to their clear and simplified computational frameworks compared to more advanced machine learning techniques. Meanwhile, traditional machine learning methods excel in capturing complex non-linear relationships in traffic data and processing high-dimensional data. With the enhancement of computational power and improvement in data acquisition methods, deep learning methods are increasingly popular due to their complex structures and ability to outperform many traditional methods given sufficient data.

Figure 1. A taxonomy of traffic prediction methods and applications.

2.1. Statistical Methods

Statistical methods play pivotal roles in traffic prediction, representing a major paradigm of data-driven methodologies. Statistical methods, exemplified by time series analysis encompassing HA models [3], Exponential Smoothing models (ES) [4], ARIMA models [5], Vector Auto Regression (VAR) models [6], etc., rely on the statistical attributes of historical data to anticipate forthcoming traffic. By capturing the temporal dependencies and seasonal patterns inherent in traffic data, these methodologies furnish a robust groundwork for short-term and mid-term traffic forecasting.
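To make the two simplest statistical baselines mentioned above concrete, the following is a minimal sketch of a Historical Average (HA) profile and simple Exponential Smoothing (ES) in plain Python. The function names, the toy flow values, and the smoothing factor `alpha` are illustrative assumptions, not taken from the cited works [3,4].

```python
from collections import defaultdict


def historical_average(observations, slots_per_day):
    """Average past values that share the same time-of-day slot.

    Assumes the series starts at slot 0 of the first day and covers
    at least one full day. Returns one mean per slot.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for index, value in enumerate(observations):
        slot = index % slots_per_day
        totals[slot] += value
        counts[slot] += 1
    return [totals[s] / counts[s] for s in range(slots_per_day)]


def exponential_smoothing(observations, alpha):
    """Simple exponential smoothing; returns the one-step-ahead forecast."""
    level = observations[0]
    for value in observations[1:]:
        # Blend the newest observation with the running level.
        level = alpha * value + (1.0 - alpha) * level
    return level


# Two toy "days" with four slots each (hypothetical vehicle counts).
flows = [10, 50, 30, 20, 14, 54, 34, 24]
ha_profile = historical_average(flows, slots_per_day=4)
es_forecast = exponential_smoothing(flows, alpha=0.5)
```

Both baselines use only the history of the series itself, which is why they are cheap to compute but cannot react to non-linear effects or external factors.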

In time series analysis, the ARIMA model and its variants represent mature methodologies rooted in classical statistics and have been widely applied in traffic prediction tasks [7,8,9,10,11]. Ahmed et al. [12] were the first to use the ARIMA model for traffic prediction problems. Since then, many scholars have made improvements in this area. For example, Williams et al. [13] proposed seasonal ARIMA to predict traffic flow, considering the periodicity of traffic data. The success of these models lies in their ability to capture trends and seasonal patterns in time series data, thereby providing accurate predictions for quantities such as traffic volume and accident rates. However, despite the efficacy of the ARIMA model and its variants in addressing linear time series forecasting problems, they exhibit certain limitations, particularly when dealing with complex problems like traffic prediction. Firstly, ARIMA models assume linear relationships in the data and fail to capture non-linear patterns in traffic flow. Secondly, ARIMA models are limited to a small set of features (lags of the time series itself) and struggle to integrate external factors affecting traffic, such as weather. Additionally, these models scale poorly to large or high-dimensional datasets and require manual tuning of parameters. ARIMA models are also sensitive to missing data and noise, necessitating preprocessing and imputation to address gaps in the time series. Finally, their capability for real-time prediction is limited, especially when the models need frequent retraining.

2.2. Traditional Machine Learning Methods

Traditional machine learning methods in traffic prediction practice mainly fall into three categories: feature-based models, Gaussian process models, and state space models. Feature-based models, particularly suitable for traffic prediction [14], rely on regression models constructed from statistical and traffic features. The challenge with such methods lies in the manual construction of regression models, with their effectiveness largely dependent on the accuracy of regression analysis. Zheng et al. [15] proposed a feature selection-based method to explore the performance of machine learning models, such as Support Vector Regression (SVR) and K-Nearest Neighbor (K-NN), in predicting traffic speed across various feature selections. Gaussian process models, on the other hand, involve complex manipulation of the spatiotemporal attributes of traffic data. While accurate, they are difficult to scale to large datasets because of their high computational resource requirements [16,17]. Sun et al. [18] proposed a mixture of variational approximate Gaussian processes, which extends the single Gaussian process regression model. Zhao et al. [19] established a fourth-order Gaussian process dynamical model for traffic flow prediction based on K-NN, achieving significant improvements. State space models operate by simulating the uncertainty of the system through Markovian hidden states. While they demonstrate certain capabilities in simulating complex systems and dynamic traffic flows, their application is limited when dealing with more intricate traffic simulations and flow dynamics [20]. Shin et al. [21] utilized a Markov chain with velocity constraints to stochastically generate velocity trajectories for traffic speed prediction. Zhu et al. [22] chose the hidden Markov model to represent the dynamic transition process of traffic states and used it to estimate traffic states.

2.3. Deep Learning Methods

While traditional machine learning models can effectively learn non-linear patterns in data, they still have limitations, such as requiring extensive feature engineering, struggling with high-dimensional data, and not capturing complex temporal dependencies as effectively. With their multi-layer neural network structures, deep learning models provide a powerful capability for handling such data. Due to their numerous layers and large number of parameters, these models are particularly suitable for extracting features from large and complex datasets, thereby achieving excellent predictive performance. This leads to the success of deep learning in multiple fields, such as stock prediction [23], text mining [24], and traffic prediction.

Deep learning provides a powerful tool for handling high-dimensional data and learning complex patterns, with Multi-Layer Perceptron (MLP) [25] being one of the most basic deep learning networks that has shown significant potential in traffic prediction. MLP is a feedforward neural network consisting of an input layer, several hidden layers, and an output layer. It is suitable for performing classification and regression tasks, which are crucial in traffic prediction. The goals of traffic prediction include but are not limited to predicting traffic flow, vehicle speed, travel time, and congestion levels. MLPs use backpropagation as a training technique, a supervised learning algorithm that learns the desired output from diverse inputs. Early research applying MLPs to traffic prediction was mainly performed around 1995. For example, Taylor et al. [26] applied MLPs to predict highway traffic volume and occupancy, while Ledoux [27] summarized the potential applications of MLPs in traffic flow modeling. Despite MLP’s early widespread application in traffic prediction due to its ability to handle non-linear relationships in traffic data, more powerful and specifically optimized deep learning models are gradually replacing MLPs over time.

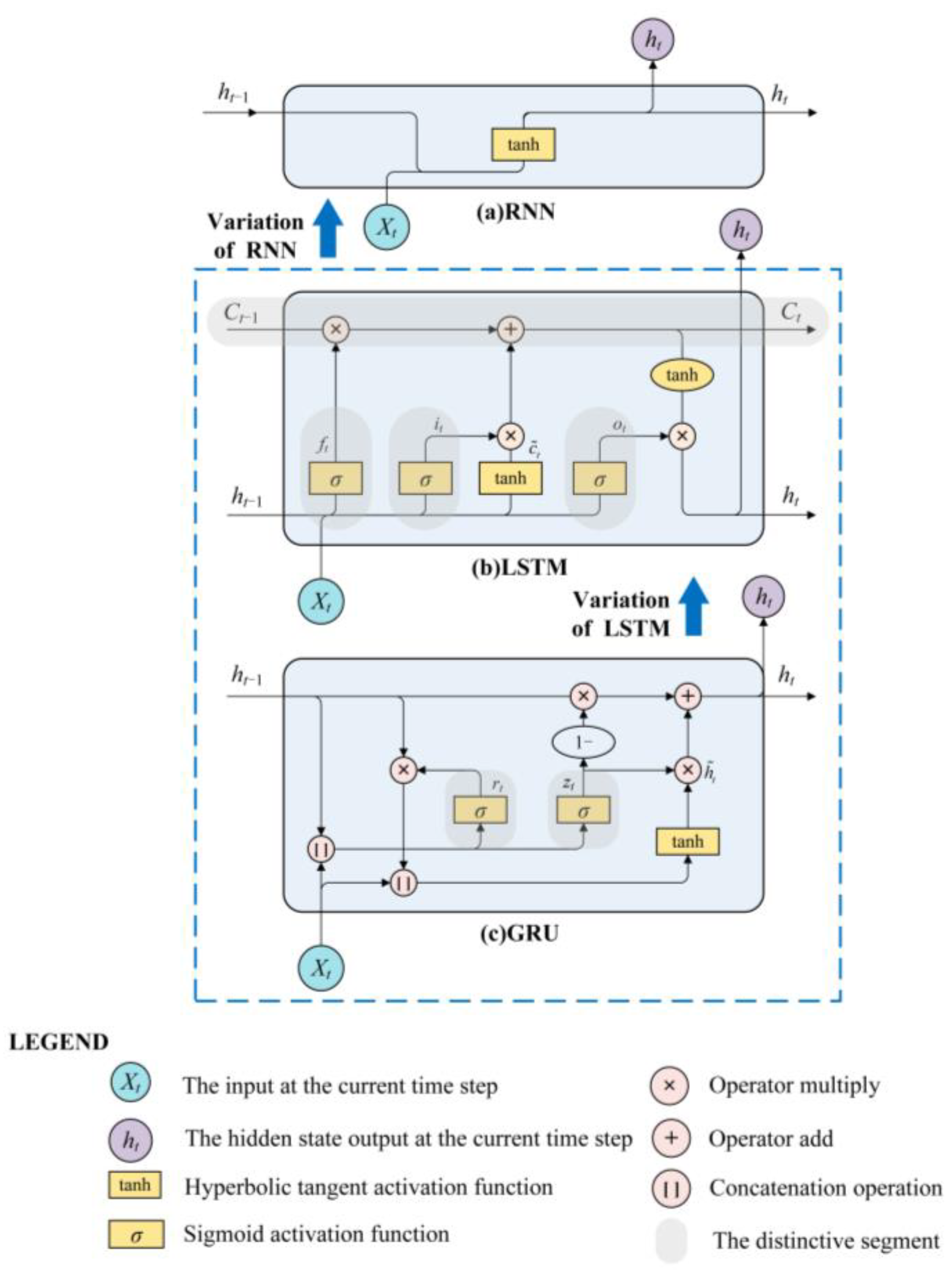

The subsequent development of RNNs represents a significant evolution of traditional feedforward neural network models, mainly aimed at improving performance on time series data. Traditional feedforward neural networks, such as MLPs, often perform poorly in modeling sequences and time series data, mainly because they lack a component for sustained memory, preventing the network from maintaining the flow of information over time between its neurons. In contrast, RNNs improve the handling of sequential data by introducing internal states or memory units in the network to store past information. The first proposed RNN model consisted of a basic two-layer structure, with a notable feature in the hidden layer: a feedback loop. This feedback loop was the key innovation of RNNs, enabling the network to retain information from previous states to some extent and thus handle sequential data. Although the original RNNs showed promise in dealing with sequential data, their simple design faces significant challenges in practical applications. When larger RNNs are trained using backpropagation, vanishing or exploding gradients often arise. The problem becomes particularly pronounced when attempting to capture long-term dependencies, as the error gradients can decay exponentially during backpropagation, making it difficult for the network to learn these dependencies. To address this problem, a new class of RNN structures, known as gated RNNs, was proposed; these manage long-term dependency information by introducing gate mechanisms, which substantially mitigate the vanishing gradient problem. Among these, the two most well-known variants are LSTMs and GRUs. These models incorporate gate mechanisms, such as the forget gate, input gate, and output gate in LSTMs or the update gate and reset gate in GRUs, which enable the network to selectively retain or discard information as needed, significantly enhancing its ability to capture long-term dependencies in time series data.
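The gating idea can be illustrated with a single LSTM time step. The sketch below is a minimal plain-Python version of the standard LSTM cell (forget, input, and output gates plus a candidate state); the parameter layout, the random initialization, and the toy speed values are assumptions made for illustration, and a practical model would use a tensor library and learned weights.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(weights, bias, vector):
    """Return weights @ vector + bias for list-based matrices."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step. params maps gate name -> (weights, bias)."""
    z = x + h_prev  # concatenate input and previous hidden state
    f = [sigmoid(a) for a in affine(*params["forget"], z)]    # what to keep
    i = [sigmoid(a) for a in affine(*params["input"], z)]     # what to write
    o = [sigmoid(a) for a in affine(*params["output"], z)]    # what to expose
    g = [math.tanh(a) for a in affine(*params["candidate"], z)]
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


def init_params(input_size, hidden_size, seed=0):
    """Small random weights and zero biases (hypothetical initialization)."""
    rng = random.Random(seed)

    def block():
        w = [[rng.uniform(-0.1, 0.1) for _ in range(input_size + hidden_size)]
             for _ in range(hidden_size)]
        return w, [0.0] * hidden_size

    return {name: block()
            for name in ("forget", "input", "output", "candidate")}


params = init_params(input_size=1, hidden_size=3)
h, c = [0.0] * 3, [0.0] * 3
for speed in [0.62, 0.58, 0.40, 0.35]:  # normalized speeds (toy values)
    h, c = lstm_step([speed], h, c, params)
```

The cell state `c` is updated additively (`f * c_prev + i * g`), which is the path that lets gradients survive over many time steps when the forget gate stays close to one.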

To the best of our knowledge, in 2015, two pioneering studies applied LSTM to the field of traffic prediction for the first time, specifically for traffic speed prediction and traffic flow prediction. Ma et al. [28] were the first to apply the LSTM model to traffic speed prediction. Tian et al. [29] were the first to propose using the LSTM model for traffic flow prediction. In 2016, Fu et al. [30] were the first to apply the GRU model to traffic flow prediction and compared it with models such as LSTM and ARIMA. Many subsequent works have proposed improved RNN variants for traffic prediction, such as Bidirectional LSTM (Bi-LSTM) [31] and two-dimensional LSTM [32]. In recent years, RNNs have often served as essential components of hybrid deep neural network models for traffic prediction, playing a vital role in capturing temporal patterns in traffic data. This reflects the ability of RNNs to understand and predict how traffic flow changes over time, particularly in handling dynamic variations and periodic events.

Furthermore, many scholars have researched applying CNNs in traffic prediction. CNNs are a commonly used class of deep learning models, typically trained in a supervised manner. They have achieved great success in image and video analysis, especially in handling data with high spatial correlation. This characteristic makes CNNs an indispensable tool in traffic prediction, especially when dealing with visual data from traffic cameras. Unlike MLPs and RNNs, which mainly deal with numerical time series data, CNNs can directly identify and learn valuable features from raw images without manual feature engineering. Specifically, CNNs automatically learn spatial features from input data through convolution and pooling operations, without manually designed feature extractors. These features are extracted directly from the raw data, enabling the model to identify previously unknown spatial dependencies. In 2017, Ma et al. [33] were the first to apply CNNs in traffic speed prediction, marking the first instance of treating traffic data in the form of images. Additionally, the introduction of the Conv-LSTM [31] combined the CNN with LSTM to extract spatiotemporal features of traffic flow. Given that the traffic time series data from adjacent nodes on the same link are often correlated, multiple studies have confirmed the effectiveness of convolution-based deep learning models in extracting local spatial dependencies from multivariate time series data [34]. In addition to two-dimensional (2D) CNNs for extracting spatial dependencies, one-dimensional (1D) CNNs are commonly utilized for capturing temporal dependencies. It is worth noting that in 2018, Lea et al. [35] proposed the Temporal Convolutional Network (TCN). The TCN is a kind of 1D-CNN specifically designed for time series data, aiming to overcome the limitations of traditional CNNs in handling specific problems related to time series. It combines the ability of RNNs to handle temporal dependencies with the efficient feature extraction capabilities of CNNs.
In traffic prediction tasks, TCNs effectively handle sequential data, such as traffic flow and speed, predicting the traffic state for future time intervals. As traffic data often exhibit clear temporal periodicity and trends, TCNs excel at learning these patterns and making accurate predictions with their unique network structure. Some researchers have improved and applied the TCN architecture to traffic prediction tasks, demonstrating good predictive effectiveness [36,37,38].
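As an illustration of how a 1D convolution can respect temporal order, the sketch below implements a causal, dilated 1D convolution in plain Python. Causal dilated convolutions are one common TCN formulation; treating them as the building block here is an assumption, since the text above does not specify the architecture of [35]. The kernel weights and speed values are toy numbers.

```python
def causal_dilated_conv(series, kernel, dilation=1):
    """1D causal convolution: output[t] uses series[t], series[t - d], ...

    Positions before the start of the series are treated as zeros
    (left zero-padding), so the output has the same length as the input
    and never depends on future values.
    """
    output = []
    for t in range(len(series)):
        total = 0.0
        for k, weight in enumerate(kernel):
            index = t - k * dilation
            if index >= 0:
                total += weight * series[index]
        output.append(total)
    return output


def receptive_field(kernel_size, dilations):
    """Number of time steps visible after stacking the given layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


speeds = [60.0, 58.0, 41.0, 35.0, 37.0, 52.0]   # toy speed readings
layer1 = causal_dilated_conv(speeds, kernel=[0.5, 0.5], dilation=1)
layer2 = causal_dilated_conv(layer1, kernel=[0.5, 0.5], dilation=2)
```

Stacking layers with growing dilation widens the window of history each output can see: with a kernel of size 2 and dilations 1, 2, and 4, `receptive_field(2, [1, 2, 4])` gives 8 time steps, while all positions are still computed in parallel rather than sequentially as in an RNN.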

However, in non-Euclidean structures, such as road networks and transportation systems, CNNs struggle to handle the complex relationships between data entities effectively. These relationships are not only based on physical spatial distances but also involve topology or network connectivity, which is challenging for CNNs. The introduction of Graph Neural Networks (GNNs) has provided better solutions in this respect. The core advantage of GNNs lies in their ability to capture the complex relationships between nodes and the structural properties of networks. The basic idea of GNNs is to aggregate information from neighboring nodes through an iterative process to learn node representations. Each node's representation is computed by an aggregation function over its own features and its neighbors' features. This aggregation mechanism allows information to flow through the graph, enabling each node to indirectly access and learn information about distant dependencies. Graph Convolutional Networks (GCNs) have evolved from the GNN framework and are specifically designed to leverage the local connectivity patterns of graph-structured data to learn node features efficiently. GCNs provide a powerful way to process nodes and their relationships by applying convolutional operations on graph data. In the realm of traffic prediction utilizing graph convolutions, the Diffusion Convolutional RNN (DCRNN) [39] and the Spatiotemporal GCN (STGCN) [40], both proposed in 2018, represent early attempts to incorporate the structural graph of road networks. These models combine graph convolutions with temporal modules (recurrent units in DCRNN and gated temporal convolutions in STGCN) to capture the spatiotemporal correlations inherent in traffic network structures. It is worth noting that in 2019, Guo et al. [41] proposed the Attention-Based STGCN (ASTGCN), which integrates attention mechanisms into the STGCN framework. This enhances the model's ability to capture relationships between different nodes in the traffic network, thereby improving feature extraction accuracy.
Another typical example is the Temporal Graph Convolutional Network (T-GCN) [42], which integrates the GRU and GCN in a unified manner, simultaneously optimizing spatial and temporal features during training. It demonstrates excellent performance in spatiotemporal traffic prediction tasks. Most studies model spatial dependencies based on fixed graph structures, assuming that the fundamental relationships between entities are predetermined, i.e., static graphs. However, static graphs cannot fully reflect the true dependency relationships, so some research has begun to explore adaptive graphs. A typical example is Graph WaveNet [43], which utilizes a novel adaptive dependency matrix learned through node embeddings. The model framework consists of GCNs and TCNs. Considering the multi-modal and compound spatial dependencies in traffic road networks, much subsequent work has focused on multi-graph approaches. For example, the Temporal Multi-GCN (T-MGCN) [44] encodes spatial correlations between roads as multiple graphs, which are then processed by GCNs and combined with RNNs to capture dynamic traffic flow patterns over time. In recent years, many research methods have begun to explore the complex relationships between spatial and temporal data in more detail. For example, the Dynamic Spatial–Temporal Aware GNN (DSTAGNN) [45] proposed a method to measure the spatiotemporal distances between different nodes, effectively integrating multi-head attention mechanisms.
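The neighbor-aggregation step that underlies these graph-based models can be sketched as a single graph convolution layer. The example below uses the widely used symmetric normalization with self-loops, ReLU(D^{-1/2}(A + I)D^{-1/2} X W), in plain Python; the three-sensor road graph, the feature values, and the weight matrix are hypothetical, and the specific layers used in the cited models [39,40,42] differ in detail.

```python
import math


def normalized_adjacency(adjacency):
    """Return D^{-1/2} (A + I) D^{-1/2} for a list-of-lists adjacency."""
    n = len(adjacency)
    # Add self-loops so each node also keeps its own features.
    a_hat = [[adjacency[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    degree = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def gcn_layer(adjacency, features, weights):
    """One graph convolution: ReLU(A_norm @ X @ W)."""
    propagated = matmul(normalized_adjacency(adjacency), features)
    transformed = matmul(propagated, weights)
    return [[max(0.0, v) for v in row] for row in transformed]


# Three sensors on a line: 0 - 1 - 2 (hypothetical road segment).
adjacency = [[0, 1, 0],
             [1, 0, 1],
             [0, 1, 0]]
features = [[60.0], [40.0], [20.0]]   # one speed reading per sensor
weights = [[1.0, -1.0]]               # 1 input feature -> 2 output features
hidden = gcn_layer(adjacency, features, weights)
```

After one layer, each sensor's representation mixes its own reading with those of its direct neighbors; stacking layers extends this to neighbors of neighbors. In hybrid models such as T-GCN, an output like `hidden` would be computed at every time step and fed to a recurrent unit.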

In the evolution of deep learning for traffic prediction, RNNs, CNNs, and GNNs have played significant roles. However, these models still have limitations in handling long-term dependencies and global information. The transformer [46] model provides a new and powerful tool for traffic prediction. Unlike RNNs, the transformer relies entirely on the attention mechanism to process sequence data. This mechanism lets the model focus on different parts of the input sequence, effectively capturing long-range dependencies. Many studies have applied the transformer to traffic prediction. For example, in 2020, Cai et al. [47] introduced a novel time-based positional encoding strategy for traffic flow and proposed the traffic transformer, which uses a transformer and GCN to model spatiotemporal correlations. Subsequently, many studies have extended the transformer model, resulting in the so-called transformer family. The informer [48], introduced in 2021, has found wide application in traffic prediction. The informer is an advanced deep learning model for long-sequence time series prediction tasks. It enhances the traditional transformer architecture and is particularly suited for traffic prediction applications. The critical innovation of the informer lies in its Probabilistic Sparse (ProbSparse) self-attention mechanism, which selectively focuses on the most relevant time points for prediction, significantly reducing computational complexity and enabling more efficient handling of extremely long sequences. Additionally, the informer employs a generative decoder design that supports one-shot prediction of long-term sequences, improving prediction efficiency and accuracy. With adaptive embedding layers, the informer can flexibly manage datasets of varying lengths while maintaining high prediction accuracy. In addition, the Propagation Delay-aware dynamic long-range transformer (PDFormer), proposed by Jiang et al. [49] in 2023, is a transformer variant designed for traffic flow prediction. PDFormer captures dynamic spatial dependencies by employing dynamic spatial self-attention modules and graph masking techniques based on geographical and semantic proximity, and it integrates short-range and long-range spatial relationships. The model includes a feature transformation module to explicitly perceive delays and address time delay issues in the propagation of spatial information in traffic conditions. PDFormer also integrates temporal self-attention modules to capture long-term temporal dependencies.
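The attention mechanism at the core of these transformer models can be sketched as scaled dot-product self-attention. The plain-Python example below is a simplification: it omits the learned query, key, and value projections and the multi-head structure of the full transformer, and the four two-dimensional "time step embeddings" are toy values chosen for illustration.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of vectors."""
    scale = math.sqrt(len(keys[0]))
    weights = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights.append(softmax(scores))
    # Each output is a weighted average of all value vectors.
    outputs = [[sum(w * v[d] for w, v in zip(row, values))
                for d in range(len(values[0]))] for row in weights]
    return outputs, weights


# Four time steps, each embedded as a 2-dimensional vector (toy values).
sequence = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
outputs, weights = self_attention(sequence, sequence, sequence)
```

Every time step attends to every other step directly, regardless of how far apart they are, which is what lets attention capture long-range dependencies without passing information through a recurrent state. The cost is that the weight matrix grows quadratically with sequence length, the issue that sparse variants such as ProbSparse attention aim to reduce.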

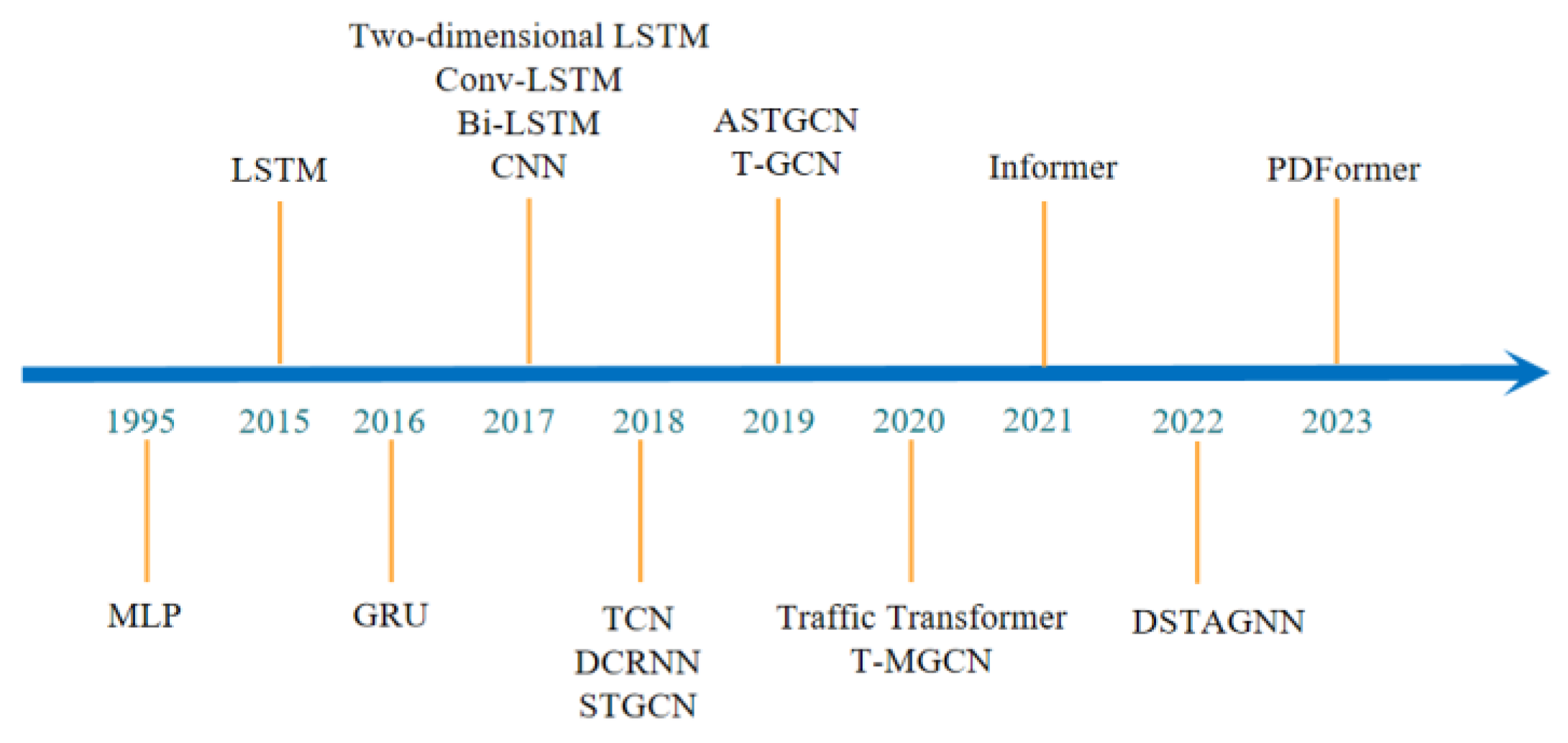

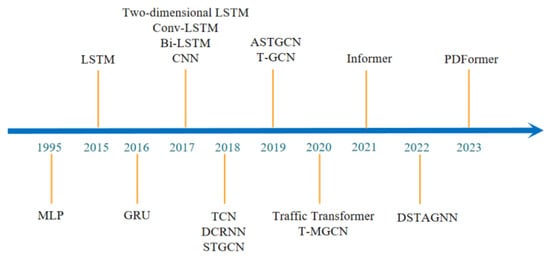

The development trajectory of time series forecasting algorithms based on deep learning is illustrated in Figure 2.

Figure 2. Timeline of deep learning-based prediction algorithms.

3. Problem Statement and Input Data Representation Methods

3.1. Traffic Data and Their Unique Characteristics

The first step in ensuring accurate traffic prediction is acquiring high-quality data from various sources. These sources mainly include fixed sensors (such as traffic cameras, induction loops, and radar sensors), mobile sensors (such as GPS devices in vehicles and smartphone apps), public transit systems, traffic management centers, social media and online platforms, satellite and aerial imagery, and third-party data service providers. Fixed sensors collect information about vehicle flow, speed, and road occupancy. Mobile sensors provide data on vehicle location and moving speeds. Public transit system data include the positions of buses and subways, departure intervals, and passenger counts. Traffic management centers integrate real-time and historical traffic data. Social media and online platforms offer updates on traffic incidents and road conditions. Satellite and aerial imagery monitor large-scale traffic flow and vehicle density. Third-party data service providers supply comprehensive traffic data that have been preprocessed and analyzed. After obtaining these data, preprocessing steps such as data cleaning, handling outliers, and data integration are typically performed to improve data quality and relevance, providing reliable inputs for traffic prediction models. These high-quality data are the foundation for achieving accurate traffic predictions. To summarize, the unique characteristics of traffic data are as follows:

- Strong periodicity. Traffic data exhibit significant daily and weekly cycles. Typical patterns include morning and evening rush hours during weekdays and different traffic patterns on weekends. Seasonal variations, such as holiday traffic spikes, also exhibit periodicity.

- Spatial dependencies. While many time series involve only time, traffic data are inherently spatiotemporal. Due to the interconnected nature of road networks, the traffic conditions at one location can highly depend on the traffic conditions at nearby or distant locations.

- Non-stationarity. Traffic patterns change over time and are influenced by factors like urban development, changes in traffic regulations, or the introduction of new infrastructure. This non-stationarity means that the statistical properties of the traffic data (such as mean and variance) can vary, making modeling more challenging.

- Volatility. Traffic data can be highly volatile due to unexpected events such as accidents, roadwork, or weather conditions. These events can cause sudden spikes or drops in traffic flow that are not easily predictable with standard models.

- Heteroscedasticity. Traffic volume variability is not constant over time. It can vary significantly across different times of the day or days of the week, particularly increasing during rush hours and decreasing at night.

- Multivariate influences. Traffic conditions are influenced by a wide range of factors beyond just the number of vehicles on the road. Weather conditions, special events, economic conditions, and social media trends can affect traffic flow and congestion levels.

These unique characteristics make traffic data challenging to model, and general-purpose prediction models cannot be directly applied to traffic prediction. Moreover, traffic data encompass both spatial and temporal dimensions: they capture the geographical locations (spatial) of traffic events and the corresponding timeframes (temporal). Integrating spatial coordinates and time stamps enables the analysis of traffic patterns, flow dynamics, and congestion over time and across different locations. Effective traffic prediction must therefore incorporate both the spatial and temporal dependencies within the dataset. By considering these interdependencies, predictive models can more accurately capture the dynamic nature of traffic patterns, which are influenced by location-specific factors and temporal variations. Ignoring either dimension would undermine the predictive accuracy and reliability of the model.

3.1.1. Spatial Dependencies

To describe the spatial dependencies within traffic data, the representation can be primarily categorized into the following three ways.

- Stacked Vector

We can use domain knowledge to stack data from multiple related spatial units according to predefined rules to capture spatial dependency, forming a multi-dimensional vector. The data for each unit can include traffic flow, speed, density, etc. Through stacking, these vectors can simultaneously represent the traffic conditions of multiple geographic locations.

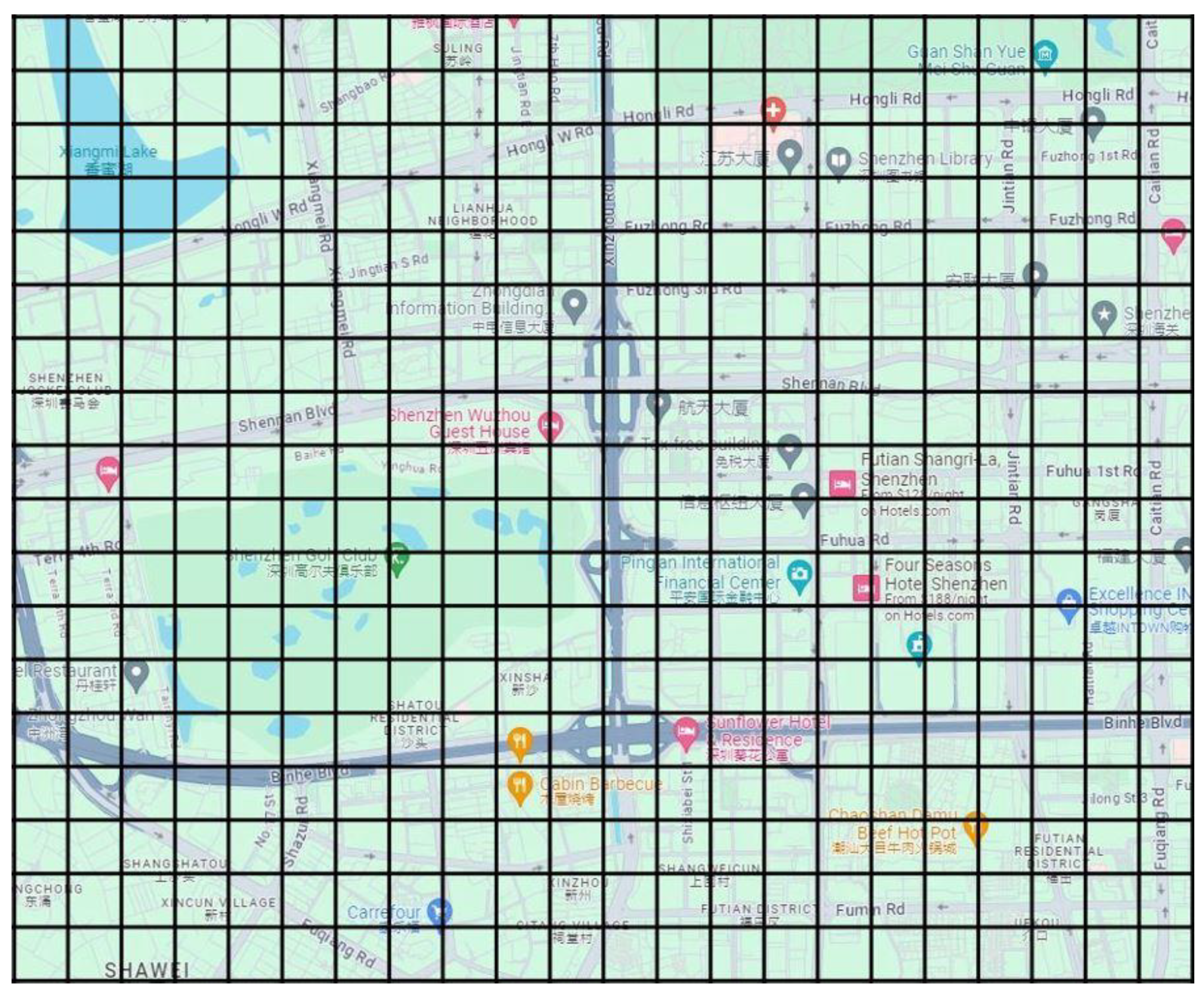

- Matrix/Grid Representation

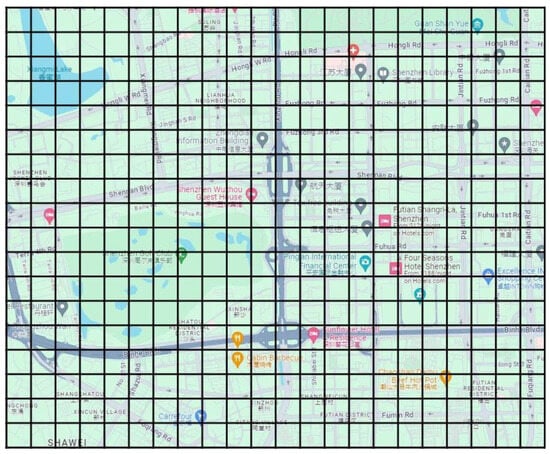

To capture spatial dependencies, spatial data, such as points or areas within a traffic network, are mapped onto a two-dimensional matrix or grid (a traffic matrix is a two-dimensional matrix whose $(i, j)$-th element denotes the amount of traffic originating at node $i$ and exiting at node $j$). In this matrix or grid, each row and column represents a specific geographic location, and each cell in the matrix contains the traffic data for that location. This treats spatial information as two-dimensional Euclidean data composed of geographic information, representing it like an image. This method can be called matrix-based representation or grid-based map segmentation. As shown in Figure 3, the city map is divided into a grid-based map format.

Figure 3.

Grid-based map segmentation in Shenzhen.

- Graph Representation

Compared to image data, traffic network data exhibit more complex spatial dependencies, primarily because these dependencies cannot be explained by Euclidean geometry alone. Traffic networks are inherently graph-like rather than grid-like. While image data naturally align into a regular grid where each pixel relates primarily to its immediate neighbors, traffic nodes (such as intersections and bus stops) connect in more complex patterns that often do not correspond to physical proximity.

A graph consists of multiple nodes and edges that connect these nodes. Each node represents a specific spatial unit. In the representation of a graph, a matrix of size $N \times N$ is commonly used to describe the complex relationships between nodes, where $N$ is the total number of nodes. This matrix is called an adjacency matrix $A$, and its element $a_{ij}$ represents the connectivity status between the $i$-th and $j$-th spatial units. These connections may involve distance, connectivity properties, and other more complex spatial relationships, often beyond simple Euclidean distance definitions.
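The three spatial representations can be compared side by side on a toy example. The following is a minimal sketch, not taken from any of the cited studies: the sensor positions, flow values, cell size, choice of related units, and road links are all hypothetical.

```python
import numpy as np

# Hypothetical toy data: 4 sensors with (x, y) positions in km and one flow reading each.
positions = np.array([[0.2, 0.3], [0.8, 0.4], [1.6, 1.7], [0.3, 1.2]])
flow = np.array([120.0, 95.0, 60.0, 80.0])

# 1) Stacked vector: concatenate the readings of related units in a fixed order.
related_units = [0, 1, 3]          # assumed to be chosen by domain knowledge
stacked = flow[related_units]      # shape (3,)

# 2) Grid: split a 2 km x 2 km area into 2 x 2 cells and sum the flow per cell.
cell_size = 1.0
grid = np.zeros((2, 2))
rows = (positions[:, 1] // cell_size).astype(int)   # row index from the y coordinate
cols = (positions[:, 0] // cell_size).astype(int)   # column index from the x coordinate
np.add.at(grid, (rows, cols), flow)                 # accumulates repeated cells correctly

# 3) Graph: adjacency matrix A with a_ij = 1 if units i and j are connected.
edges = [(0, 1), (1, 2), (0, 3)]   # assumed road links, not derived from distance
A = np.zeros((4, 4), dtype=int)
for i, j in edges:
    A[i, j] = A[j, i] = 1          # undirected links give a symmetric matrix
```

Note that sensors 0 and 3 are linked in the graph even though they fall into different grid cells, which is the kind of non-Euclidean relationship a grid cannot express.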

3.1.2. Temporal Dependencies

Temporal dependencies are typically categorized into two main types in data representation: sequentiality and periodicity. Sequentiality represents the natural order of data over time, which in traffic prediction means that data are collected in chronological order, with each data point timestamped later than the previous one. It is handled similarly to the stacked vector method for representing spatial dependencies: consecutive temporal units are stacked into a single vector to indicate that temporally closer data are more related. Periodicity involves the recurring patterns displayed by data, such as daily cycles (peak and off-peak periods within a day) or weekly cycles (differences in traffic patterns between weekdays and weekends). Representing data with periodicity allows models to capture these regularly occurring patterns, enabling accurate predictions for similar future periods.
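As an illustration of the two kinds of temporal dependency, the sketch below gathers the most recent observations (sequentiality) together with observations taken at the same time of day on previous days and weeks (periodicity). The function name, the default of 288 steps per day (5 min sampling), and the number of lags are assumptions made for this example.

```python
import numpy as np

def temporal_inputs(series, t, n_recent=3, steps_per_day=288, n_days=2, n_weeks=1):
    """Collect recent, daily-periodic, and weekly-periodic values for predicting series[t]."""
    steps_per_week = 7 * steps_per_day
    needed = max(n_recent, n_days * steps_per_day, n_weeks * steps_per_week)
    if t < needed:
        raise ValueError("not enough history before index t")
    # Sequentiality: the n_recent values immediately before t, in chronological order.
    recent = series[t - n_recent:t]
    # Periodicity: the same time slot on previous days and previous weeks.
    daily = series[[t - d * steps_per_day for d in range(n_days, 0, -1)]]
    weekly = series[[t - w * steps_per_week for w in range(n_weeks, 0, -1)]]
    return recent, daily, weekly

series = np.arange(3000, dtype=float)          # placeholder data: value equals index
recent, daily, weekly = temporal_inputs(series, t=2500)
```

The three pieces can then be concatenated into one input vector or fed to separate model branches.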

3.2. Common Forms of Input Representations

With the rapid development of intelligent transportation systems, the use of advanced deep learning techniques, particularly RNNs and their variants, to analyze and predict time series data has become a hot research area. RNNs, due to their unique feedback structure, demonstrate significant advantages in capturing long-term dependencies within time series data. However, the performance of RNNs in traffic prediction depends not only on their algorithms but also heavily on the methods used to represent input data.

Data representation methods play a pivotal role in model design and predictive performance. Different data representation formats, such as time series, grid sequences, or graph sequences, each possess distinct characteristics and advantages suitable for handling various types of traffic data. For example, time series methods emphasize the changes in traffic data over time, while grid sequences and graph sequences also concern spatial dependencies. Furthermore, applying sliding window techniques is particularly crucial for capturing time dependencies, as it allows for the inclusion of continuous sequences of historical data in the model, thereby enhancing prediction accuracy.

With the advancements in big data and computing capabilities, an increasing number of studies are now exploring the impact of different data representation methods on the performance of RNNs in traffic prediction. From essential time series forecasting to complex spatiotemporal data analysis, researchers are striving to identify the most effective data representation methods to improve the accuracy and efficiency of traffic prediction. This section concentrates explicitly on the traffic prediction task’s common input data representation methods. We aim to provide a comprehensive perspective to better utilize RNNs for traffic prediction by analyzing the latest research findings. Below, we will explore the relevant literature regarding three forms of input sequences: time series, matrix/grid-based sequences, and graph sequences. Table 1 summarizes these three types of forms of input representations.

3.2.1. Time Series

The data representation of a time series encompasses the organization and structure of the data points that describe the evolution of a quantity over time. It consists of two fundamental components: the time stamp or index and the corresponding data values. The time stamp indicates when each observation was recorded and can take various formats, such as dates, timestamps, or numerical indices representing time intervals. The data values represent the actual measurements or observations of the quantity of interest at each time stamp. Together, these components form a sequential series of data points that can be analyzed to identify patterns, trends, and relationships over time. A time series, $X$, can be represented as $X = \{x_1, x_2, \dots, x_T\}$, where $x_t$ represents the traffic state at time $t$. Time series are a common way to represent traffic data, emphasizing how traffic patterns change over time. For example, Rawat et al. [50] provided a comprehensive overview of time series forecasting techniques, including their applications in various fields. Furthermore, in traditional studies, RNNs only utilize the time series of traffic volume as input and do not incorporate any attribute information from the time series data, such as timestamps and days of the week. However, traffic volume varies depending on the time and day of the week. Therefore, in addition to the time series of traffic volume, attribute information can be used as input to further enhance the prediction accuracy of RNN methods. Tokuyama et al. [51] investigated the impact of incorporating attribute information from the time series of traffic volume on prediction accuracy in network traffic prediction. They proposed two RNN methods: the RNN–Volume and Timestamp method (RNN-VT), which uses timestamp information, and the RNN–Volume, Timestamp, Day of the week method (RNN-VTD), which utilizes both timestamp and day of the week information as inputs, in addition to the time series of traffic volume. 
Some studies have also considered the potential connections between traffic states and their contexts by integrating time series data with contextual factors. For instance, Lv et al. [52] used time series to represent the scenario of short-term traffic speed prediction, where these time series data represent the average speed of all vehicles during specific time intervals, aiming to accurately reflect the movement status of vehicles. They introduce Feature-Injected RNNs (FI-RNNs), a network that combines time series data with contextual factors to uncover the potential relationship between traffic states and their backgrounds. Some studies decompose time series data, such as Wang et al. [53], who used time series to represent traffic flow data in urban road networks. Specifically, the study addresses short-term traffic flow prediction in urban road networks and emphasizes the periodicity and randomness of traffic data. To handle these traffic data, the research is divided into two modules. The first module consists of a set of algorithms to process traffic flow data, providing a complete dataset without outliers through analysis and repair and offering a dataset of the most similar road segment pairs. The second module focuses on multi-time step short-term forecasting. Awan et al. [54] demonstrated that in addition to parameters related to traffic, other features associated with road traffic, such as air and noise pollution, can also be integrated into the input. This study uses time series to represent road traffic data in the city, specifically focusing on the correlation between noise pollution and traffic flow to predict traffic conditions. The noise pollution data provide additional indicators of traffic density and traffic flow. These time series integrate changes in road traffic flow and the associated levels of noise pollution, revealing the interaction between traffic flow and noise pollution. Then, an LSTM model is used to predict traffic trends. 
Regarding temporal dependencies, all roadways undergo seasonal variations characterized by long-term temporal dependencies and missing data. Traditional time series forecasting models perform poorly when encountering missing data in the dataset.
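The idea of feeding attribute information alongside traffic volume can be sketched as follows. This is only an illustration: the text does not specify how [51] encodes timestamps or days of the week, so the cyclic (sine/cosine) hour encoding, the one-hot weekday encoding, and the function name are assumptions.

```python
import numpy as np

def add_time_attributes(volume, start_hour=0, start_weekday=0, steps_per_hour=1):
    """Return an array of shape (T, 10): volume, hour-of-day (sin, cos), one-hot weekday."""
    volume = np.asarray(volume, dtype=float)
    hours_elapsed = start_hour + np.arange(len(volume)) / steps_per_hour
    hour = hours_elapsed % 24                                  # hour of day in [0, 24)
    weekday = (start_weekday + (hours_elapsed // 24).astype(int)) % 7
    angle = 2 * np.pi * hour / 24                              # cyclic encoding keeps 23:00 near 00:00
    one_hot = np.eye(7)[weekday]                               # shape (T, 7)
    return np.column_stack([volume, np.sin(angle), np.cos(angle), one_hot])

volume = np.arange(48.0)                  # two days of hourly placeholder volumes
features = add_time_attributes(volume)    # each row is one RNN input vector
```

Dropping the last seven columns would correspond to using volume and timestamp only, while keeping them adds the day-of-week information.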

Table 1.

Summary of different input representations.

| Input Representations | Reference | Techniques |
|---|---|---|
| Time Series | [51] | RNN |
| | [52] | RNN |
| | [53] | LSTM |
| | [54] | LSTM |
| Matrix/Grid-based Sequence | [55] | ResNet |
| | [56] | LSTM + CNN |
| | [57] | LSTM + CNN |
| | [58] | LSTM + CNN |
| Graph-based Sequence | [59] | RNN + GNN |
| | [60] | LSTM + GNN |
| | [61] | GRU + GCN |
| | [62] | GAT + Attention |
| | [63] | GCN + Attention |

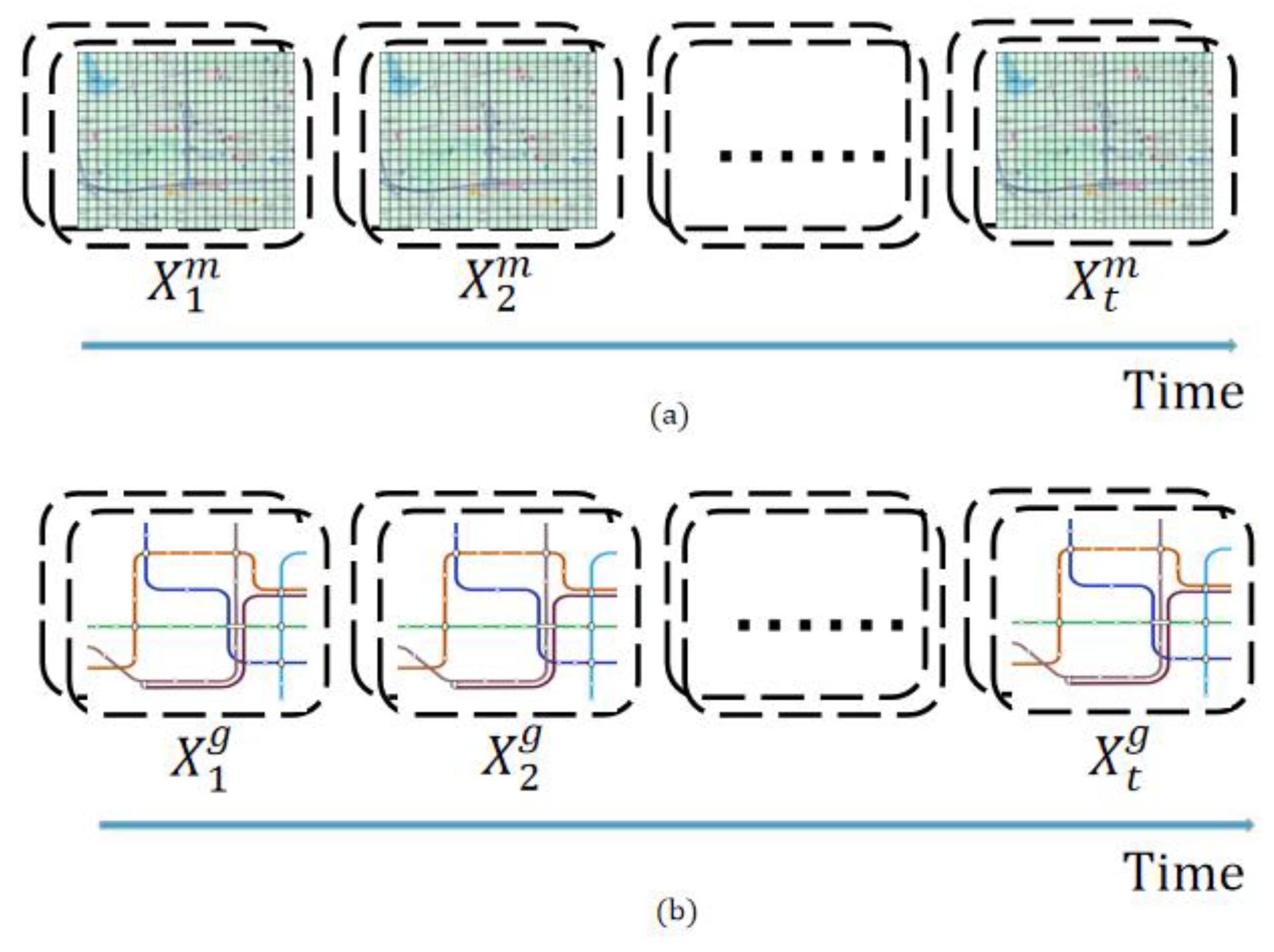

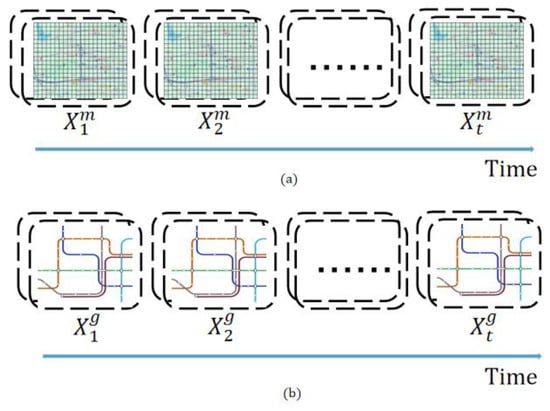

3.2.2. Matrix/Grid-Based Sequence

We previously discussed the spatial dependencies of traffic data, which revealed the mutual influence of traffic conditions between different locations. To effectively capture this spatial relationship, the method of dividing a map into grids in Euclidean space can be used. Specifically, the map is divided into many uniform small areas, with each grid representing a specific area on the map. This way, the map’s location information is transformed into a two-dimensional matrix or grid structure. In this structure, each cell of the matrix or grid contains traffic information for a particular area and simulates the spatial proximity of the real world between cells. Additionally, the traffic matrix (TM) refers to a specific application scenario, namely, the representation of traffic flow between different nodes (such as intersections, city areas, etc.) in a network. Data processed in this manner are referred to as a grid-based sequence, as shown in Figure 4a. The grid-based sequence, $X$, consisting of $I$ rows and $J$ columns, is spread over $T$ time steps, and it can be represented as $X = \{X_1, X_2, \dots, X_T\}$, where $X_t \in \mathbb{R}^{I \times J}$ represents a two-dimensional matrix indicating the traffic state of all grids at time $t$. Zhang et al. [55] divided the city into equally sized grid areas, with each grid cell capturing the inflow and outflow traffic volume of pedestrian flows. Inflow is defined as the total traffic volume entering an area within a given time interval. In contrast, outflow is defined as the total traffic volume leaving the area within the same time interval. Matrices at each time step are stacked to form a three-dimensional tensor, representing the temporal sequence of pedestrian flow volumes. Yao et al. [56] represented city roads in a grid format, where each grid cell captures taxi demand. The constructed matrix is in a single tensor form. They built a matrix sequence where each matrix represents the city’s taxi demand at different time steps. Bao et al. 
[57] constructed a grid-based matrix sequence for short-term bike sharing demand. Specifically, they partitioned the surveyed areas of Shanghai into a grid of 5 × 5 cells, aggregating the trip data of bicycles in Shanghai (including start timestamps, start geolocations, end timestamps, end geolocations, etc.), as well as collecting weather and air quality data, based on grid cells. The data for each time step are integrated into a matrix, with each matrix element containing comprehensive data for the corresponding grid cell; over time, these matrices are arranged in chronological order to form a matrix sequence. Zhou et al. [58] divided the city into several grids and proposed a parameter to control the grid width. This study suggests that the pickup demands from certain locations at the previous time step will affect the dropoff demands at the next time step. Subsequently, all data are mapped to the grids, and each grid’s pickup and dropoff demands are aggregated for each time interval. Demand matrices are generated for each time interval, quantifying the number of pickup and dropoff demands occurring within each grid.
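A grid-based sequence of pickup and dropoff demand can be assembled along the following lines. This is a minimal sketch under assumed inputs: trips are taken to be already mapped to a time step and a grid cell, and the tuple layout and function name are hypothetical rather than the procedure of any cited study.

```python
import numpy as np

def demand_tensor(trips, n_steps, n_rows, n_cols):
    """Build a grid-based sequence of shape (n_steps, 2, n_rows, n_cols).

    trips: iterable of (t_pick, row_pick, col_pick, t_drop, row_drop, col_drop).
    Channel 0 counts pickups and channel 1 counts dropoffs per cell and time step.
    """
    X = np.zeros((n_steps, 2, n_rows, n_cols))
    for t_pick, r_pick, c_pick, t_drop, r_drop, c_drop in trips:
        if 0 <= t_pick < n_steps:
            X[t_pick, 0, r_pick, c_pick] += 1
        if 0 <= t_drop < n_steps:          # events outside the horizon are ignored
            X[t_drop, 1, r_drop, c_drop] += 1
    return X

# Three hypothetical trips on a 2 x 2 grid observed for two time steps.
trips = [(0, 0, 0, 1, 1, 1), (0, 0, 0, 2, 0, 1), (1, 1, 0, 1, 0, 0)]
X = demand_tensor(trips, n_steps=2, n_rows=2, n_cols=2)
```

Each slice `X[t]` plays the role of one matrix in the sequence, with the two channels analogous to image channels for a CNN-based encoder.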

Figure 4.

Two representations of spatiotemporal traffic data: (a) a simple schematic diagram of a grid-based sequence data representation, representing a sequence of traffic data changing over time; (b) a simple schematic diagram of a graph-based sequence data representation, representing a sequence of traffic data changing over time.

3.2.3. Graph-Based Sequence

After discussing the grid-based sequence data representation, it is worth noting that much traffic data are collected on complex traffic networks, and their spatial dependencies closely rely on the topology of these networks. Therefore, to accurately describe and capture the spatiotemporal characteristics of traffic networks, we need a data representation method that reflects the network properties at each time step, namely, graph-based sequence data representation, as shown in Figure 4b. In this representation, each sequence element is treated as a graph at each time step, and the relationships or dependencies among elements are represented by time series. The graph-based sequence is $G = \{G_1, G_2, \dots, G_T\}$, where each $G_t$ is a graph representing the state of the entire traffic network at time $t$. Each graph can be further defined as $G_t = (V_t, E_t, A_t)$, where $V_t$ is the set of nodes in the graph, with each node representing a specific location in the traffic network, $E_t$ is the set of edges in the graph, representing the connections between nodes, and $A_t$ is the adjacency matrix. This approach is particularly suitable for capturing complex graph-based spatial and temporal data dependencies. It is widely used in multiple tasks in machine learning and data mining, such as sequence classification, clustering, and prediction.

In recent years, significant progress in traffic prediction research has been made using graph sequences as input sequences. Roudbari et al. [59] used graph-based sequence data to characterize road network traffic speed over time. These data encompass recorded journey information for road segments, considering the road network as a unified graph where nodes represent road segments and edges denote connections between these segments. Variable features are integrated into node information through an adjacency matrix, while static features are regarded as edge information. Their study categorized travel times for road segments, creating temporal lists on adjacent nodes sharing the same road segment, thus establishing a speed matrix that records speed variations for all road segments. Finally, the adjacency and speed matrices are combined to form graph-based sequential data. Additionally, Lu et al. [60] used graph-based sequence data to characterize vehicle speeds over a period in urban road networks. Specifically, the road network structure is constructed as a graph based on map data, with special consideration for the accessibility between different road segments. This not only reflects the physical structure of the roads but also accounts for actual traffic flow conditions, like when a road segment is temporarily closed due to maintenance or a traffic accident. Their study computes the traffic speeds of different road segments at various time points using taxi trajectory data, which are then matched with the road network. Graph-based sequence data are formed by continuously constructing these road traffic speed graphs. Wang et al. [61] used graph-based sequence data to predict traffic flow in urban road networks. Specifically, the road network structure is constructed as a graph based on spatial–temporal data, with each node representing a traffic sensor and edges representing the correlations between sensors at different time steps. 
The study computes traffic flow data at various time points using real-time sensor readings, forming a sequence of graphs where each graph represents the traffic conditions at a specific time step. Jams et al. [62] utilized a data-driven graph construction scheme for traffic prediction. In their approach, nodes represent traffic sensors, and edges represent the correlations between sensor readings at different time points. They dynamically construct graphs by embedding sensor correlations in a latent attention space and generate them at each time step to form a sequence. Gu et al. [63] used graph-based sequence data to describe traffic flow over a period in urban traffic networks. They define the topological road network as a directed graph, where nodes represent detectors on the roads, and features, such as traffic flow and speed, are added to the nodes. Their study distinguishes between two types of graphs: static adjacency graphs and dynamic adjacency graphs. The static adjacency graph is constructed without relying on prior assumptions, simulating typical long-term spatial dependencies in traffic patterns, while the dynamic adjacency graph addresses short-term local dynamics, allowing relationships between nodes to adjust over time based on observed data. Timestamp information is effectively concentrated on historical time information using a multi-head self-attention mechanism. The graphs of consecutive time steps (static and dynamic adjacency graphs) are concatenated to form a graph sequence encompassing both spatial and temporal dimensions. This way, each graph at a time node not only contains the traffic state at that moment but also reflects changes in the relationships between nodes from one time step to the next through the variations in the edges of the dynamic adjacency graph. Importantly, they embed learnable time and space matrices into the input graph-based sequence, ultimately becoming the model’s input.
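To make the notion of a graph sequence with static and dynamic adjacency concrete, the sketch below pairs each time step's node features with a fixed adjacency matrix and a data-driven one. The dynamic graph here is built by thresholding correlations over a trailing window, which is a deliberately simple stand-in chosen for illustration and is not the attention-based construction used in [62] or [63]; the window length and threshold are assumed values.

```python
import numpy as np

def graph_sequence(X, A_static, window=12, threshold=0.8):
    """Build a list of (node_features, A_static, A_dynamic), one entry per time step.

    X: array of shape (T, N) with one reading per sensor and time step.
    A_dynamic[i, j] = 1 when sensors i and j correlate above `threshold`
    over the trailing `window` steps (an illustrative rule, not a cited method).
    """
    T, N = X.shape
    sequence = []
    for t in range(window, T + 1):
        recent = X[t - window:t]                          # shape (window, N)
        corr = np.corrcoef(recent, rowvar=False)          # shape (N, N)
        A_dyn = (np.nan_to_num(corr) > threshold).astype(int)
        np.fill_diagonal(A_dyn, 0)                        # no self-loops
        sequence.append((X[t - 1], A_static, A_dyn))
    return sequence

steps = np.arange(20, dtype=float)
X = np.column_stack([steps, 2 * steps + 1, (-1.0) ** steps])   # sensors 0 and 1 move together
A_static = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])
seq = graph_sequence(X, A_static)
```

In this toy case the dynamic graph links sensors 0 and 1 at every step, while sensor 2, whose readings oscillate, stays disconnected from both.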

3.2.4. Sliding Window

The size of the sliding window is often a critical parameter that needs to be adjusted in forecasting research. Using RNNs for the prediction process allows for recognizing and learning data patterns within time series data. However, the presence of fluctuations in the data can make it challenging to understand these data patterns. The dataset utilized in the study by Sugiartawan et al. [64] records the visitor volume at each time point over ten years, showcasing the changes in visitor visitation to attractions over time, characterized by linear trends and periodic fluctuations. The study employs 120 data vectors for prediction processing, representing tourism visitation data for 120 months. Based on these 120 data vectors, the study conducts multiple experiments and determines a window size setting of 3, meaning each window contains data from three time steps. In the study, the window moves forward one time step at a time, and ultimately, the model predicts the visitor volume at the next time point based on the visitor count data from the past three time points. Like other deep learning models, GRUs require careful adjustment of the sliding window size to optimize predictive performance. To explore this tedious process, in the study conducted by Basharat Hussain et al. [65], they continuously adjust the input window size of the GRU model to enhance its predictive capabilities. The original data are derived from average traffic flow data collected at 5 min intervals. Through experimentation, the researchers tested various window sizes (3, 6, 12, 18, 24) to determine which size most effectively improves the performance of the GRU model. In a GRU model configured with 256 neurons in the first layer and two hidden layers containing 64 and 32 neurons, respectively, they find that a window size of 12 yielded the best predictive performance. Lu et al. [66] combined the ARIMA model and LSTM for traffic flow prediction. 
In the ARIMA model, regression with a sliding time window is introduced, allowing the model to fit new input data for more accurate predictions continuously. This combined approach merges the projections of the two models through dynamic weighting, where the weights of each model in the final prediction are adjusted based on the standard deviation between the predicted results and the actual traffic flow at different time windows.
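The sliding window construction described in this subsection can be sketched as follows. The function name and the placeholder series are assumptions; the window size of 3 and the 120 monthly values mirror the setting reported for [64].

```python
import numpy as np

def sliding_windows(series, window, horizon=1):
    """Split a 1-D series into (inputs, targets) pairs, moving one step at a time."""
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - window - horizon + 1
    if n_samples <= 0:
        raise ValueError("series is too short for this window and horizon")
    X = np.stack([series[i:i + window] for i in range(n_samples)])
    y = np.stack([series[i + window:i + window + horizon] for i in range(n_samples)])
    return X, y

series = np.arange(120)                  # placeholder for 120 monthly observations
X, y = sliding_windows(series, window=3) # each sample: three past values -> next value
```

Tuning the window size then amounts to repeating this construction for each candidate size, such as 3, 6, 12, 18, and 24, and comparing validation error.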

3.3. Problem Statement

Traffic prediction involves using historical traffic data to forecast future traffic conditions accurately. In the context of traffic data analysis, predictions are typically categorized into short-term, mid-term, and long-term predictions based on the forecast horizon. Short-term prediction involves forecasting traffic conditions over a horizon ranging from a few minutes to a few hours into the future. This type of prediction is crucial for real-time traffic management and control systems. Mid-term prediction refers to forecasting traffic conditions over a horizon extending from a few hours to several days. This type of prediction is used for planning and operational strategies that require an understanding of traffic patterns beyond immediate real-time needs. Long-term prediction involves forecasting traffic conditions over a horizon extending from several days to several months or even years. This type of prediction is important for strategic planning, infrastructure development, and policymaking [67]. In this review, short-term traffic prediction problems are our focus. Due to the diversity and complexity of traffic data, the traffic prediction tasks also vary. Therefore, a sufficiently generalized problem definition is needed to represent the short-term traffic prediction problem clearly.

Assume a spatial network $G$ with a set of $N$ sensors deployed, where each sensor records $F$ attributes (such as traffic flow, traffic speed, traffic occupancy, etc.) over $T$ timestamps. Thus, the observations of all sensors across all time points can be represented as a three-dimensional tensor, $\mathcal{X} \in \mathbb{R}^{T \times N \times F}$, and it can be represented as $\mathcal{X} = \{X_1, X_2, \dots, X_T\}$, where $X_t \in \mathbb{R}^{N \times F}$ represents the attribute data of all sensors at timestamp $t$. $E$ represents the exogenous factors that influence future traffic conditions, such as information on weather, holidays, big events, etc. Assume that the traffic prediction task requires predicting the traffic attributes for the next $Q$ time steps based on the traffic attributes from the past $P$ time steps and exogenous factors $E$; the traffic prediction task can be defined as follows:

$$\left[\hat{X}_{t+1}, \dots, \hat{X}_{t+Q}\right] = f_{\theta}\left(X_{t-P+1}, \dots, X_{t};\, E\right)$$

where $f$ is the method used for prediction, $\theta$ represents the model’s parameters, including all weights and biases in the network, and $\hat{X}_{t+q}$ is the prediction at time $t+q$ for $q = 1, \dots, Q$. This problem definition provides a clear and structured approach to utilizing various methods for traffic prediction, ensuring coverage of all relevant variables.
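The input and output shapes of this problem definition can be checked with a trivial predictor. In the sketch below, the names `P` (past steps), `Q` (future steps), `N` (sensors), and `F` (attributes) are assumed labels, the data are random placeholders, and the persistence rule (repeat the last observation) merely stands in for a learned prediction method; exogenous factors are omitted.

```python
import numpy as np

def persistence_forecast(history, Q):
    """Trivial stand-in for the prediction method: repeat the last observation Q times.

    history: array of shape (P, N, F); returns an array of shape (Q, N, F).
    """
    return np.repeat(history[-1:], Q, axis=0)

T, N, F, P, Q = 100, 5, 2, 12, 3
rng = np.random.default_rng(0)
X = rng.random((T, N, F))            # placeholder observations for all sensors

t = 50                               # index of the last observed time step
history = X[t - P + 1:t + 1]         # the past P steps, ending at step t
target = X[t + 1:t + Q + 1]          # the next Q steps to be predicted
pred = persistence_forecast(history, Q)
mae = np.abs(pred - target).mean()   # mean absolute error of the baseline
```

Any RNN-based model discussed later fits the same interface: it maps an array of shape `(P, N, F)` to one of shape `(Q, N, F)`.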

4. RNN Structures Used for Traffic Prediction

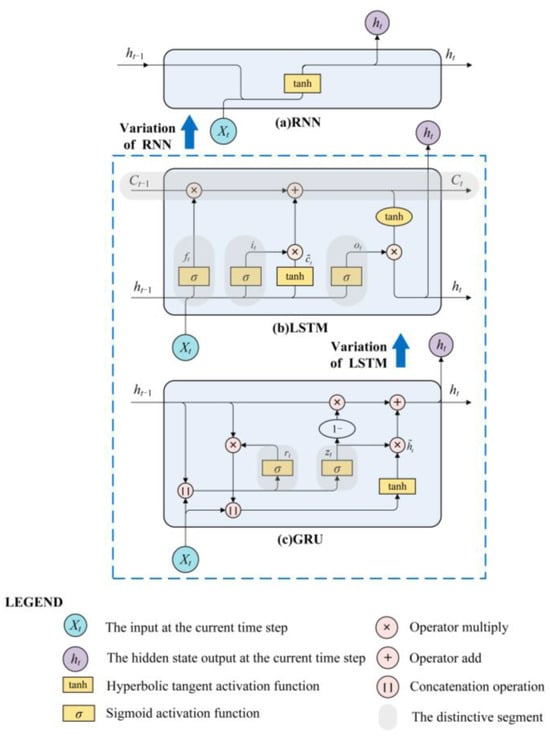

4.1. RNNs

RNNs are a class of iterative learning machines that process sequence data by cyclically reusing the same weights. This cyclical structure enables them to maintain a memory of previous data points, using this accumulated information to process current inputs and thereby capturing temporal dependencies. Specifically, an RNN applies a transfer function to update its internal state at each time step. The general formula for this update is as follows:

$$h_t = \phi\left(W_{xh} x_t + W_{hh} h_{t-1} + b_{xh} + b_{hh}\right)$$

Here, $x_t$ is the input vector at time step $t$ and $h_{t-1}$ is the previous hidden state. $W_{xh}$ is the weight matrix for the connections between the input at the current time step and the current hidden state. $W_{hh}$ is the weight matrix for the connections between the previous hidden state and the current hidden state. $b_{xh}$ and $b_{hh}$ represent bias vectors. The function $\phi$ acts as the activation function.

The hidden state $h_t$ at each time step is updated using a combination of the current input $x_t$ and the previous hidden state $h_{t-1}$. Weight matrix $W_{xh}$ facilitates the incorporation of new information and weight matrix $W_{hh}$ helps in transferring past learned information. $b_{xh}$ and $b_{hh}$ adjust the inputs and the state transition. The function $\phi$, typically a non-linear activation function, is applied to introduce non-linearity into the system, enabling the network to capture complex patterns in sequential data.
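The recurrent update can be written in a few lines of NumPy. This is a minimal sketch assuming the standard formulation with an input-to-hidden matrix, a hidden-to-hidden matrix, two bias vectors, and tanh as the activation; the variable names and sizes are chosen for this example.

```python
import numpy as np

def rnn_step(x_t, h_prev, W_xh, W_hh, b_xh, b_hh):
    """One recurrent update: combine the current input with the previous hidden state."""
    return np.tanh(W_xh @ x_t + W_hh @ h_prev + b_xh + b_hh)

def rnn_forward(xs, h0, params):
    """Apply the same weights at every time step and collect all hidden states."""
    h = h0
    states = []
    for x_t in xs:
        h = rnn_step(x_t, h, *params)
        states.append(h)
    return np.stack(states)

D, H, T = 3, 4, 5                                   # input size, hidden size, sequence length
rng = np.random.default_rng(0)
params = (rng.normal(size=(H, D)) * 0.1,            # W_xh
          rng.normal(size=(H, H)) * 0.1,            # W_hh
          np.zeros(H), np.zeros(H))                 # b_xh, b_hh
xs = rng.normal(size=(T, D))
states = rnn_forward(xs, np.zeros(H), params)       # shape (T, H)
```

Because the same `params` tuple is reused at every step, the number of parameters does not grow with the sequence length.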

4.2. LSTMs

Although RNNs are, in theory, simple and powerful models, training them effectively poses many challenges in practical applications. One major issue is the problem of vanishing and exploding gradients. Gradient explosion occurs during training when the norm of the gradients grows exponentially as they are propagated through long-term dependencies. Conversely, the vanishing gradient problem describes the opposite phenomenon, where gradients for long-term dependencies rapidly diminish to near-zero levels, making it difficult for the model to learn long-range dependencies. LSTM addresses these issues by employing gate mechanisms to maintain long-term dependencies while mitigating gradient problems. These gate control systems include input gates, forget gates, and output gates, which work together to regulate information flow, retention, and output precisely.

The basic formulas for LSTM are as follows:

$$f_t = \sigma\left(W_f [h_{t-1}, x_t] + b_f\right)$$

$$i_t = \sigma\left(W_i [h_{t-1}, x_t] + b_i\right)$$

$$o_t = \sigma\left(W_o [h_{t-1}, x_t] + b_o\right)$$

$$\tilde{C}_t = \tanh\left(W_C [h_{t-1}, x_t] + b_C\right)$$

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t$$

$$h_t = o_t \odot \tanh\left(C_t\right)$$

where $x_t$ represents the input vector at time step $t$. $h_t$ represents the hidden state vector at time step $t$ and will serve as part of the input for the next time step. $f_t$, $i_t$, and $o_t$ are the outputs of the forget gate, input gate, and output gate. $\tilde{C}_t$ is the candidate layer at time step $t$, representing potential new information that might be added to the current cell state. $C_t$ is the LSTM’s internal state, containing the network’s long-term memory. $W_f$, $W_i$, $W_o$, and $W_C$ are the weight matrices for the forget gate, input gate, output gate, and candidate layer. $b_f$, $b_i$, $b_o$, and $b_C$ are the bias vectors for the forget gate, input gate, output gate, and candidate layer, respectively. $\sigma$ is the sigmoid activation function used for gating mechanisms, $\tanh$ is the hyperbolic tangent activation function used for non-linear transformations, $[h_{t-1}, x_t]$ denotes concatenation, and $\odot$ denotes element-wise multiplication.

The forget gate decides which information to discard by using a sigmoid function that outputs values between 0 and 1 for each component in the cell state $C_{t-1}$, where 1 means “retain this completely” and 0 means “discard completely”. Simultaneously, the input gate and a candidate layer decide which new information is stored by creating a vector of new candidate values. The cell state is then updated by combining the old state, multiplied by the output of the forget gate, with the new candidate values scaled by the output of the input gate. Lastly, the output gate $o_t$, together with the cell state passed through a $\tanh$ function, determines the next hidden state $h_t$, filtering the information to the output. This mechanism enables LSTMs to mitigate vanishing gradients and learn over extended sequences effectively.
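The gate interactions can be traced in a single-step NumPy sketch. It assumes the common formulation in which each gate applies one weight matrix to the concatenation of the previous hidden state and the current input; the dictionary layout and names are choices made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM update. W and b are dicts keyed by 'f', 'i', 'o', 'c';
    each W[k] has shape (H, H + D) and each b[k] has shape (H,)."""
    z = np.concatenate([h_prev, x_t])
    f = sigmoid(W["f"] @ z + b["f"])          # forget gate: how much old memory to keep
    i = sigmoid(W["i"] @ z + b["i"])          # input gate: how much new content to write
    o = sigmoid(W["o"] @ z + b["o"])          # output gate: how much memory to expose
    c_tilde = np.tanh(W["c"] @ z + b["c"])    # candidate values
    c = f * c_prev + i * c_tilde              # updated cell state (long-term memory)
    h = o * np.tanh(c)                        # updated hidden state
    return h, c

D, H = 2, 3
rng = np.random.default_rng(0)
W = {k: rng.normal(size=(H, H + D)) * 0.1 for k in "fioc"}
b = {k: np.zeros(H) for k in "fioc"}
h, c = lstm_step(rng.normal(size=D), np.zeros(H), np.zeros(H), W, b)
```

Setting the forget-gate bias to a large positive value and the input-gate bias to a large negative value makes the cell state pass through almost unchanged, which is the path that lets gradients survive over many steps.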

4.3. GRUs

The GRU, as another prominent gated structure, was initially proposed by Cho et al. [68]. The GRU was proposed primarily to optimize the complexity and computational cost of LSTMs. While LSTMs are powerful, their structure includes three gates and a cell state, making the model complex and parameter heavy. The GRU simplifies the model structure by merging the forget gate and input gate into a single update gate, reducing the number of parameters and improving computational efficiency. Additionally, the GRU does not have a separate cell state; it operates directly on the hidden state, simplifying the flow of information and memory management. These improvements enable the GRU to efficiently handle tasks requiring capturing long-term dependencies while remaining a powerful model choice. The propagation formulas of the GRU are as follows:

$$z_t = \sigma\left(W_z [h_{t-1}, x_t] + b_z\right)$$

$$r_t = \sigma\left(W_r [h_{t-1}, x_t] + b_r\right)$$

$$\tilde{h}_t = \tanh\left(W_h [r_t \odot h_{t-1}, x_t] + b_h\right)$$

$$h_t = z_t \odot h_{t-1} + \left(1 - z_t\right) \odot \tilde{h}_t$$

Here, $z_t$ is the output of the update gate and $r_t$ is the output of the reset gate. $W_z$, $W_r$, and $W_h$ are the weight matrices for the update gate, reset gate, and candidate layer. $b_z$, $b_r$, and $b_h$ are the bias vectors for the update gate, reset gate, and candidate layer.

The GRU operates by effectively balancing the retention and introduction of new information in time series data. The update gate output $z_t$ controls the extent to which the previous hidden state $h_{t-1}$ is retained. The reset gate output $r_t$ dictates the influence of $h_{t-1}$ on the candidate hidden state $\tilde{h}_t$. The candidate hidden state is obtained by applying the $\tanh$ activation function to the linear combination of the reset gate-adjusted previous hidden state and the current input. The final hidden state $h_t$ results from a linear combination of the previous hidden state, controlled by the output of the update gate, and the new candidate hidden state. The weight matrix $W_h$ is used to generate the candidate state, while the bias $b_h$ shifts it. Thus, the GRU can effectively update its state, capturing long-term dependencies in time series data.
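A single GRU update can be sketched in NumPy as follows. The sketch assumes the convention in which the update gate scales the previous hidden state and its complement scales the candidate; some references swap these two roles, which is equivalent up to relabeling the gate. The names and dictionary layout are choices made for this example.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_step(x_t, h_prev, W, b):
    """One GRU update. W and b are dicts keyed by 'z', 'r', 'h';
    each W[k] has shape (H, H + D) and each b[k] has shape (H,)."""
    hx = np.concatenate([h_prev, x_t])
    z = sigmoid(W["z"] @ hx + b["z"])                   # update gate
    r = sigmoid(W["r"] @ hx + b["r"])                   # reset gate
    # The reset gate scales the previous state before it enters the candidate.
    h_tilde = np.tanh(W["h"] @ np.concatenate([r * h_prev, x_t]) + b["h"])
    return z * h_prev + (1.0 - z) * h_tilde             # blend old state and candidate

D, H = 2, 3
rng = np.random.default_rng(0)
W = {k: rng.normal(size=(H, H + D)) * 0.1 for k in "zrh"}
b = {k: np.zeros(H) for k in "zrh"}
h = gru_step(rng.normal(size=D), np.zeros(H), W, b)
```

Unlike the LSTM, there is no separate cell state: the hidden state itself carries the memory from step to step.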

Figure 5 compares the structures of the RNN and its variants. The RNN is the simplest form, with a single recurrent layer. The LSTM is distinguished by three gates (input, forget, and output), which allow the network to selectively remember or forget information, and by a cell state that helps maintain long-term dependencies; together, these aim to overcome the vanishing gradient problem common in simple RNNs. The GRU merges the LSTM’s input and forget gates into a single update gate and combines the cell state and hidden state into a single hidden state. Overall, the diagram shows how the RNN and its variants process information to handle sequential data.

Figure 5.

Comparison of RNN, LSTM, and GRU neural network structures.

4.4. Hybrid Models Including RNN Techniques

In the field of traffic prediction, RNNs and their variants, such as LSTMs and GRUs, are widely adopted due to their strong performance in handling time series data. However, as the complexity of traffic data continues to increase, models relying solely on RNNs can no longer fully meet prediction requirements. To further enhance prediction accuracy and address the complexities of traffic data, researchers have begun to combine RNNs with other mechanisms and models. These include, but are not limited to, traditional machine learning (ML) techniques, CNNs, GNNs, and attention mechanisms, as summarized in Table 2.

4.4.1. RNNs + Traditional ML Techniques

As mentioned earlier, classical traffic flow prediction methods based on traditional ML techniques include K-NN and SVR. Some scholars have combined these methods with the RNN family for traffic prediction. For example, Luo et al. [69] proposed a spatiotemporal traffic flow prediction method combining K-NN and LSTM. K-NN is used to select the neighboring stations most relevant to the test site, capturing the spatial characteristics of traffic flow, while LSTM is employed to model the temporal variability of traffic flow. The experimental results show that this model outperforms some traditional models in predictive performance, with an average accuracy improvement of 12.59%. Using a GRU in place of the LSTM, Zhou et al. [70] proposed a traffic flow prediction method based on K-NN and the GRU. This method calculates spatial correlations between traffic networks using Euclidean distance and captures the time dependency of traffic volume through the GRU. The experimental results demonstrate a significant improvement in prediction accuracy compared to traditional methods. The K-NN method exhibits good predictive performance in simple scenarios. However, due to its non-sparse nature, it may struggle to handle traffic scenarios with significant variations. Tong et al. [71] utilized an optimized version of SVR as a traffic flow prediction method, applying Particle Swarm Optimization (PSO) to tune the parameters of SVR and thereby enhance the prediction system’s performance. Given the complex non-linear patterns of traffic flow data, Cai et al. [72] proposed a hybrid traffic flow prediction model combining the Gravitational Search Algorithm (GSA) with SVR. The GSA is employed to search for the optimal SVR parameters, and this model demonstrates good performance in practice.
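The K-NN selection step described above can be illustrated with a small sketch. It assumes, for illustration only, that each station is represented by its recent flow series and that relevance is measured by the Euclidean distance between series; the station names, the values, and the function names are hypothetical, and the cited works may define neighbors and features differently.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equally long flow series."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def k_nearest_stations(flows, target, k):
    """Return the k stations whose flow series are closest to the target's.

    flows maps a station id to a list of flow observations.
    """
    distances = sorted(
        (euclidean(flows[target], series), station)
        for station, series in flows.items()
        if station != target
    )
    return [station for _, station in distances[:k]]


# Made-up flow counts for four stations over four time steps.
flows = {
    "A": [10, 20, 30, 20],
    "B": [11, 21, 29, 19],
    "C": [50, 60, 70, 60],
    "D": [12, 18, 33, 22],
}
neighbors = k_nearest_stations(flows, "A", k=2)

# One feature vector per time step: the target station followed by its
# selected neighbors. A recurrent model would consume this sequence.
stations = ["A"] + neighbors
lstm_input = [[flows[s][t] for s in stations] for t in range(len(flows["A"]))]
```

Here the spatial step only narrows the input to the most similar stations; the temporal modeling is left entirely to the recurrent network that consumes `lstm_input`.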

Table 2.

Summary of different hybrid models.

| Hybrid Models | Reference | Techniques |
|---|---|---|
| RNNs + Traditional ML Techniques | [69] | LSTM + K-NN |
| RNNs + Traditional ML Techniques | [70] | GRU + K-NN |
| RNNs + CNNs | [73] | LSTM + CNN |
| RNNs + CNNs | [74] | LSTM + CNN |
| RNNs + GNNs | [42] | GRU + GCN |
| RNNs + GNNs | [75] | LSTM + GNN |
| RNNs + GNNs | [76] | LSTM + GNN |
| RNNs + Attention | [77] | LSTM + Attention |
| RNNs + Attention | [78] | LSTM + Attention |
| RNNs + Attention | [79] | LSTM + Attention |
| RNNs + Attention | [80] | RNN + GNN + Attention |
| RNNs + Attention | [81] | GRU + GCN + Attention |

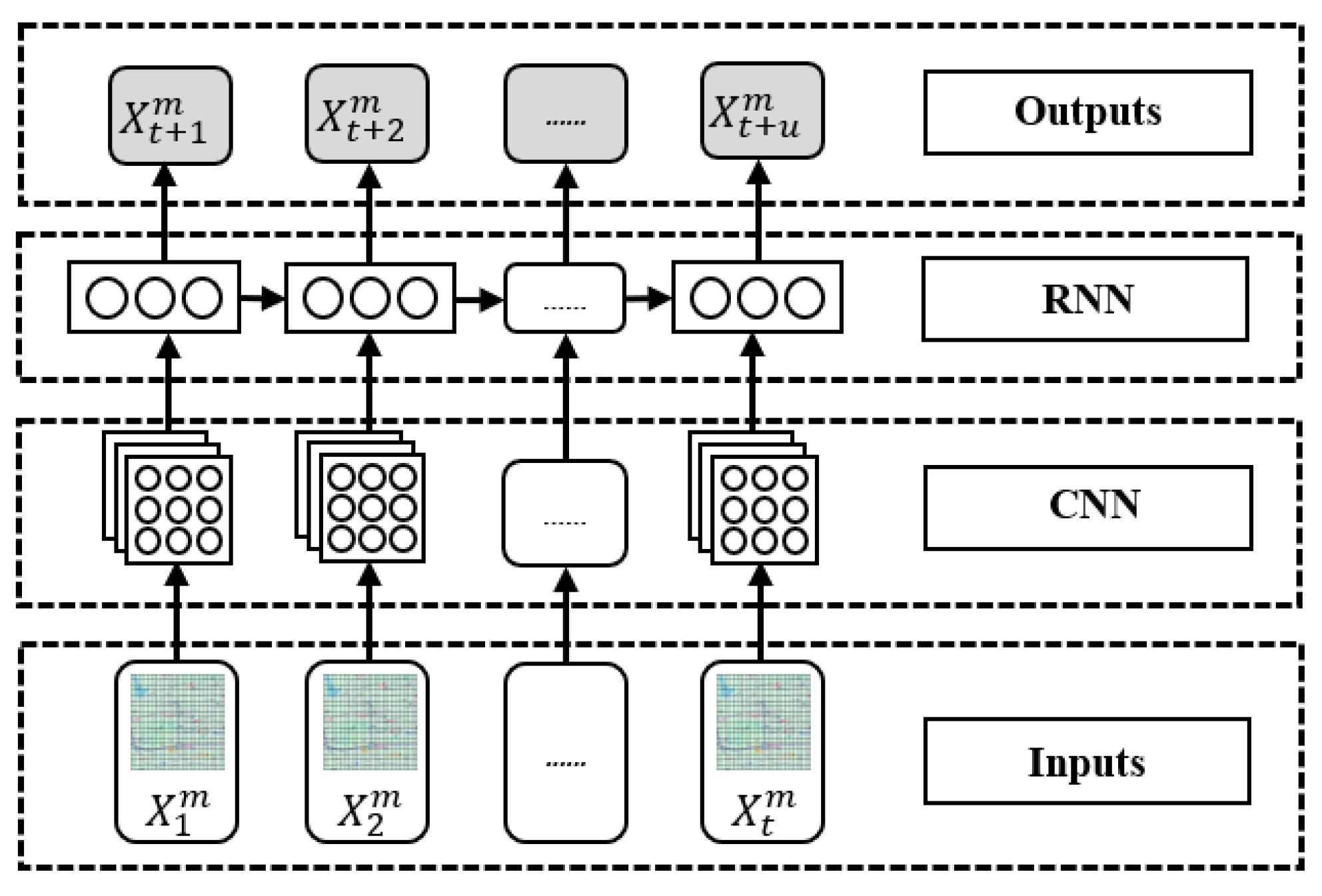

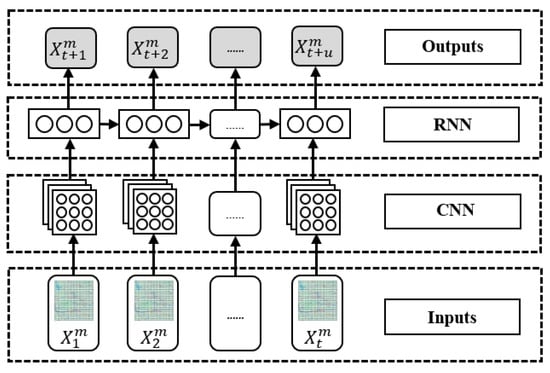

4.4.2. RNNs + CNNs

As researchers have increasingly recognized the importance of spatial dependency, combining RNNs and CNNs to address spatiotemporal data problems, particularly traffic flow prediction in Euclidean space, has become an important research direction. This fusion leverages the strength of RNNs in handling time series data and the efficiency of CNNs in processing spatial features. RNNs are particularly suitable for time series data because they can capture temporal dynamics and long-term dependencies. However, RNNs have limited capability in dealing with high-dimensional spatial data. CNNs effectively identify and extract local features from high-dimensional spatial data, such as images and videos, but they do not directly handle the dynamic information in time series data. Therefore, combining RNNs and CNNs can achieve more comprehensive and accurate modeling and prediction of spatiotemporal data. Figure 6 shows an example of a hybrid RNN and CNN model.

Figure 6.

Example of a hybrid RNN and CNN model.

Ma et al. [33] transferred the application of CNNs from images to traffic prediction, forecasting traffic in large-scale transportation networks from spatiotemporal traffic dynamics transformed into images. This method integrates the spatial and temporal dimensions of traffic data into a unified framework by converting traffic dynamics into a two-dimensional spatiotemporal matrix, fundamentally treating traffic flow as an image. This matrix captures the intricate relationships between time and space in traffic flow, allowing for a more nuanced analysis of traffic patterns. Yu et al. [73] proposed an approach for predicting traffic flow in large-scale transportation networks using Spatiotemporal Recurrent Convolutional Networks (SRCNs). The distinguishing feature of this method is that it transforms network-wide traffic speeds into a series of static images, which are then used as inputs to the deep learning architecture. This image-based representation allows for a more intuitive and effective capture of the complex spatial relationships inherent in traffic flow across the transportation network. Empirical testing on a Beijing transportation network comprising 278 links further demonstrated the effectiveness of this method. Because spatial dependencies between locations in the road network may vary over time and temporal dynamics are not strictly periodic, Yao et al. [74] proposed a novel Spatial–Temporal Dynamic Network (STDN) for traffic prediction. This approach uses a flow gating mechanism to track the dynamic spatial similarity between regions, enabling the model to understand how traffic flows between areas change over time, which is crucial for accurately predicting future traffic volumes. Additionally, it employs a periodically shifted attention mechanism to handle long-term periodic information and time offsets. This allows the model to account for subtle variations in daily and weekly patterns, such as shifts in peak traffic times, thereby enhancing prediction accuracy.
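The conversion of traffic dynamics into a two-dimensional spatiotemporal matrix, as described above, can be sketched as follows. The layout (rows as time steps, columns as road links), the normalization by a maximum speed, and the function name are assumptions made for this illustration; they are not taken from the cited works.

```python
def to_time_space_image(records, n_steps, n_links, max_speed):
    """Build a time-by-link matrix of speeds scaled into [0, 1].

    records is an iterable of (time_index, link_index, speed) tuples.
    Cells without an observation stay at 0.0, which is a simplification:
    a real pipeline would need to distinguish missing data from zero speed.
    """
    image = [[0.0] * n_links for _ in range(n_steps)]
    for t, link, speed in records:
        # Clip at max_speed so every pixel value stays within [0, 1].
        image[t][link] = min(speed, max_speed) / max_speed
    return image


# Made-up speed observations (km/h) for two links over two time steps.
records = [(0, 0, 60), (0, 1, 30), (1, 0, 120), (1, 1, 90)]
image = to_time_space_image(records, n_steps=2, n_links=2, max_speed=120)
```

Each row of `image` is one time step and each column one link, so a convolutional filter sliding over the matrix sees neighboring links and adjacent time steps together.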

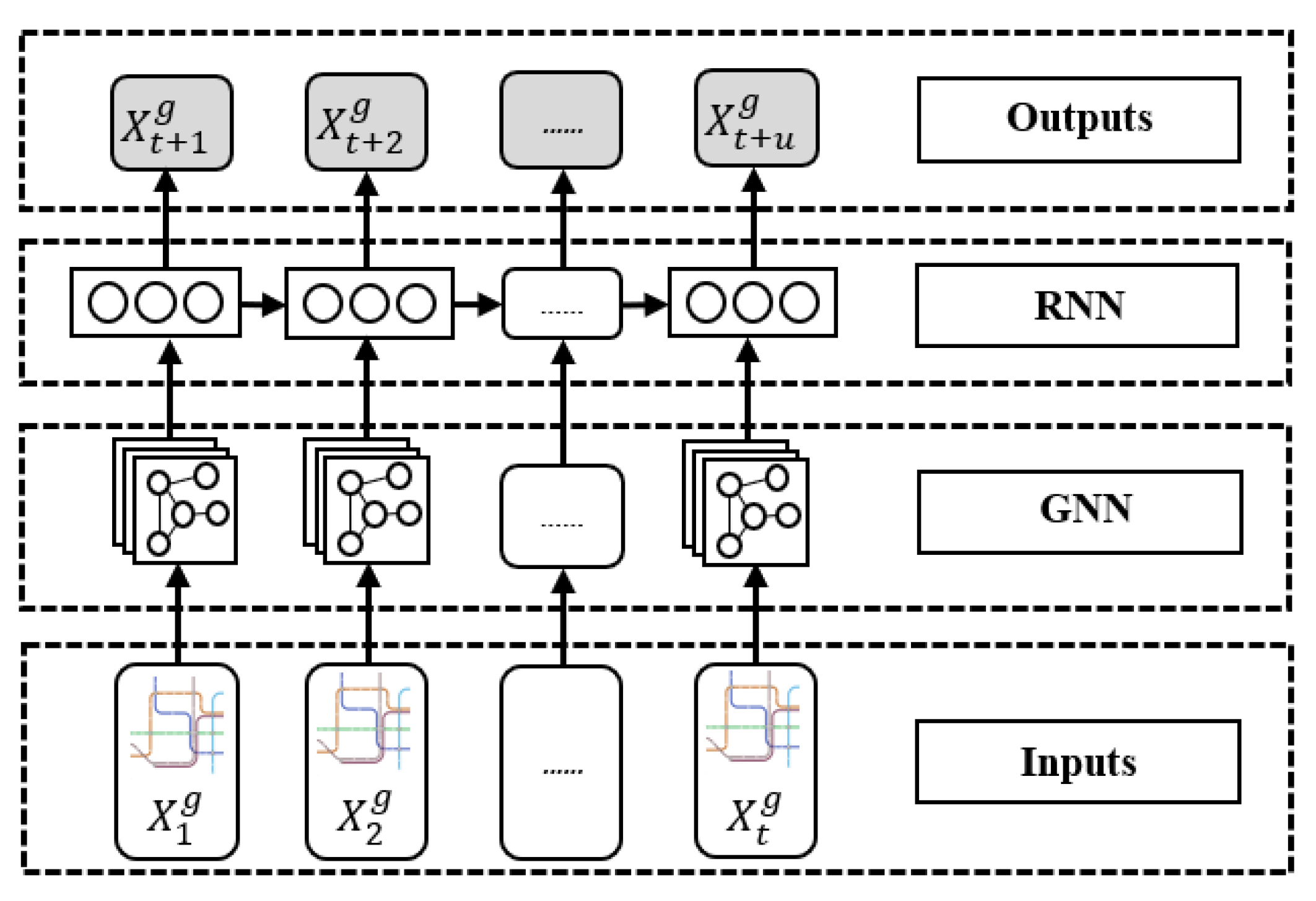

4.4.3. RNNs + GNNs

When constructing deep learning models for spatiotemporal traffic prediction, it is necessary to consider the graph structure characteristic of many transportation networks. Generally, passenger flow activities occur on specific transportation networks rather than in simple Euclidean spaces, so modeling with non-graph structures may lose useful spatial information. CNNs are typically used to handle data with Euclidean spatial structures, such as regular grids. For non-Euclidean spaces, such as graph-structured data, the utility of CNNs is less evident. To explore the spatial properties of non-Euclidean transportation networks, some scholars have adopted approaches from mathematical graph theory, modeling transportation networks as graphs. This approach effectively depicts the connectivity between traffic nodes and opens new avenues for introducing convolutional operations. In recent years, GNNs have been widely employed to capture spatial correlations in transportation networks. Figure 7 shows an example of a hybrid RNN and GNN model.

Figure 7.

Example of a hybrid RNN and GNN model.

Zhao et al. [42] proposed the T-GCN model, which integrates the advantages of GCNs and GRUs. This model can simultaneously capture the spatial and temporal dependencies of traffic data. Furthermore, it incorporates an encoder–decoder architecture and temporal sampling techniques to enhance long-term prediction performance. Wang et al. [75] proposed a novel spatiotemporal GNN for traffic flow prediction, which comprehensively captures spatial and temporal patterns. This framework provides a learnable positional attention mechanism, enabling effective information aggregation from adjacent roads. Additionally, it models traffic flow dynamics to leverage both local and global temporal dependencies, and it demonstrates strong predictive performance on real datasets. Related studies also aim to reduce the data volume processed by neural networks while improving accuracy. For example, Bogaerts et al. [76] proposed a hybrid deep neural network based on a GNN and LSTM. Additionally, they introduced a time-correlation-based data dimensionality reduction technique to select the most relevant sets of road links as inputs. This approach effectively reduces the data volume, enhancing prediction accuracy and efficiency. The model performs well in short-term traffic flow prediction and also exhibits good predictive capability in long-term forecasting up to four hours ahead. Moreover, the proposed dimensionality reduction technique effectively addresses prediction problems in large-scale traffic networks.
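To make the spatial half of such GCN and RNN hybrids concrete, the sketch below applies one graph convolution to node features on a toy road graph. It assumes the commonly used symmetric normalization of the adjacency matrix with self-loops followed by a ReLU; the function names and the toy graph are hypothetical, and the cited models may differ in detail. In a hybrid model, the output at each time step would then be passed to a recurrent unit.

```python
import math


def normalized_adjacency(adj):
    """Return D^(-1/2) (A + I) D^(-1/2) for an adjacency matrix A."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)]
             for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)]
            for i in range(n)]


def matmul(a, b):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)]
            for row in a]


def gcn_layer(adj, features, weight):
    """One graph convolution: ReLU(A_norm @ X @ W)."""
    propagated = matmul(normalized_adjacency(adj), features)
    return [[max(0.0, v) for v in row] for row in matmul(propagated, weight)]


# Toy road graph: three sensors in a line (0 - 1 - 2), one feature each.
adj = [[0, 1, 0],
       [1, 0, 1],
       [0, 1, 0]]
features = [[1.0], [0.0], [0.0]]  # only sensor 0 observes traffic
weight = [[1.0]]                  # untrained, identity-like weight
out = gcn_layer(adj, features, weight)
```

After one layer, the signal at sensor 0 has reached its direct neighbor, sensor 1, but not sensor 2, which shows that a single graph convolution aggregates information from one-hop neighbors only.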

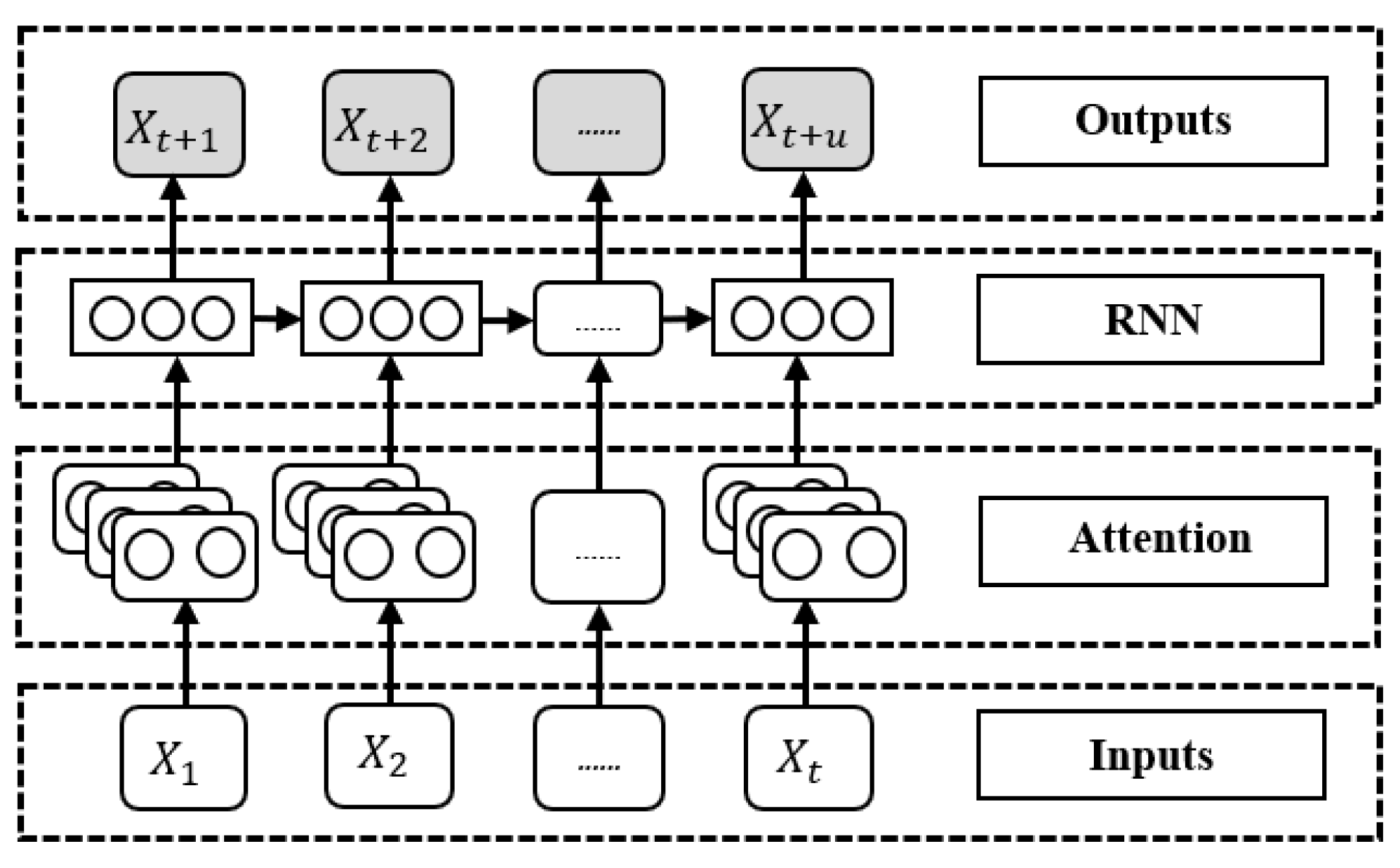

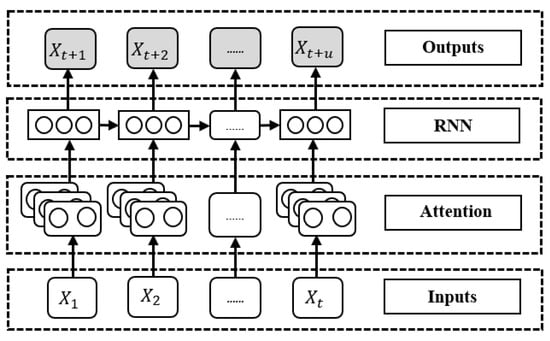

4.4.4. RNNs + Attention

Accurate traffic prediction helps optimize road network operational efficiency, enhance traffic safety, and reduce environmental pollution. In recent years, with the development of deep learning methods, RNNs have been widely applied in traffic prediction due to their ability to handle temporal data. Introducing attention mechanisms further improves a model’s capability to capture temporal and spatial dependencies. Figure 8 shows an example of a hybrid RNN and attention model.

Figure 8.

Example of hybrid RNN and attention models.

The STAGCN model proposed by Gu et al. [63] combines static and dynamic graphs to accurately capture spatial dependencies in traffic networks. Additionally, the model introduces gated temporal attention modules to effectively handle long-term dependencies in time series data, thereby improving traffic prediction accuracy. Some scholars have improved training efficiency by introducing attention mechanisms on top of LSTM. For example, Qin et al. [77] integrated attention mechanisms that simplify the structure of LSTM networks while focusing on the features most influential for the current prediction. This approach has shown superior performance and speed over traditional LSTM and RNN models on multiple public datasets. Similarly, Hu et al. [78] improved the LSTM-RNN by introducing attention mechanisms and developed a short-term traffic flow prediction model. This model demonstrates efficiency and accuracy in practical applications with real traffic flow data, confirming the effectiveness of attention mechanisms in enhancing traffic prediction performance. The SA-LSTM model proposed by Yu et al. [79] uses self-attention mechanisms, effectively addressing the vanishing gradient problem and accurately capturing the spatiotemporal characteristics of traffic information. The superiority of this model is demonstrated in experiments on Shenzhen road network data and floating car data, showcasing the potential of self-attention mechanisms in improving traffic prediction accuracy. Additionally, some scholars have combined dynamic spatiotemporal graph recurrent networks with attention mechanisms. For instance, the Dynamic Spatiotemporal Graph Recurrent Network (DSTGRN) model proposed by Zhao et al. [80] integrates spatial attention mechanisms and multi-head temporal attention mechanisms by encoding road nodes, providing a fine-grained perspective for modeling the temporal dependencies of traffic flow data. This model surpasses its baseline models in prediction accuracy, demonstrating the potential of dynamic graph networks in traffic prediction. Tian et al. [81] captured the spatiotemporal characteristics of road conditions and achieved precise traffic speed prediction by combining the GCN and GRU and introducing multi-head attention mechanisms. Testing results on two real datasets demonstrate the superior performance of this model, further validating the value of multi-head attention mechanisms in traffic prediction.
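The general idea shared by these models, weighting the hidden states of a recurrent network by their relevance to the current prediction, can be sketched as follows. This minimal example assumes dot-product scoring against a query vector followed by a softmax; the function names and the toy hidden states are hypothetical, and the cited models use their own scoring functions and multi-head variants.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(hidden_states, query):
    """Weight each time step's hidden state by its similarity to the query.

    Returns the context vector and the attention weights.
    """
    scores = [sum(h * q for h, q in zip(h_t, query)) for h_t in hidden_states]
    weights = softmax(scores)
    context = [sum(w * h_t[d] for w, h_t in zip(weights, hidden_states))
               for d in range(len(query))]
    return context, weights


# Made-up hidden states from three time steps of a recurrent network;
# the most recent state serves as the query.
hidden_states = [[0.1, 0.0], [0.9, 0.2], [1.0, 0.1]]
context, weights = temporal_attention(hidden_states, query=hidden_states[-1])
```

The weights sum to one, and time steps whose hidden states align more closely with the query contribute more to the context vector, which is then used in place of, or alongside, the last hidden state when making the prediction.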

5. Sub-Areas of Traffic Prediction Applications Using RNNs