Diversity Monitoring of Coexisting Birds in Urban Forests by Integrating Spectrograms and Object-Based Image Analysis

Abstract

1. Introduction

2. Materials and Methods

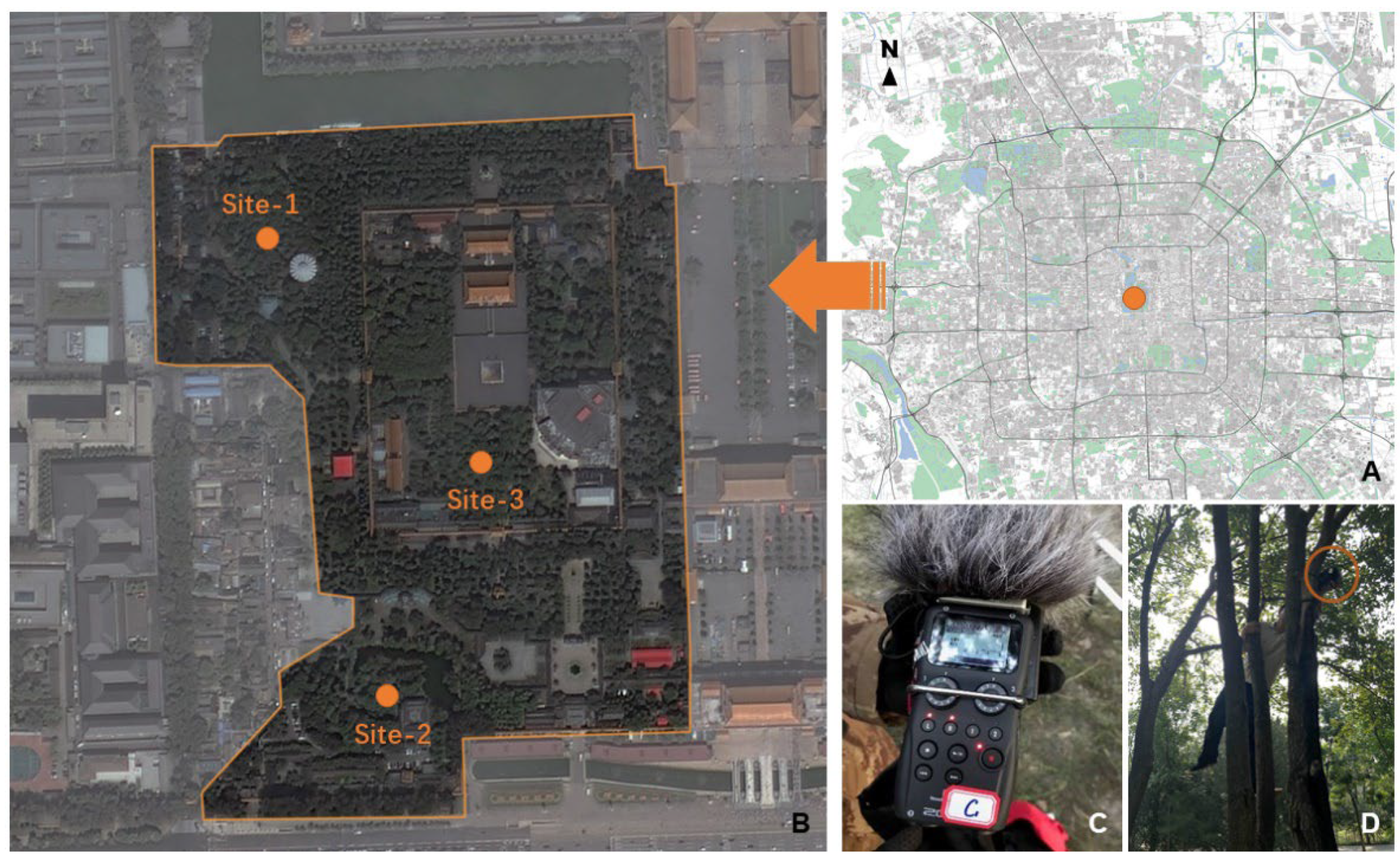

2.1. Study Area and Data Sets

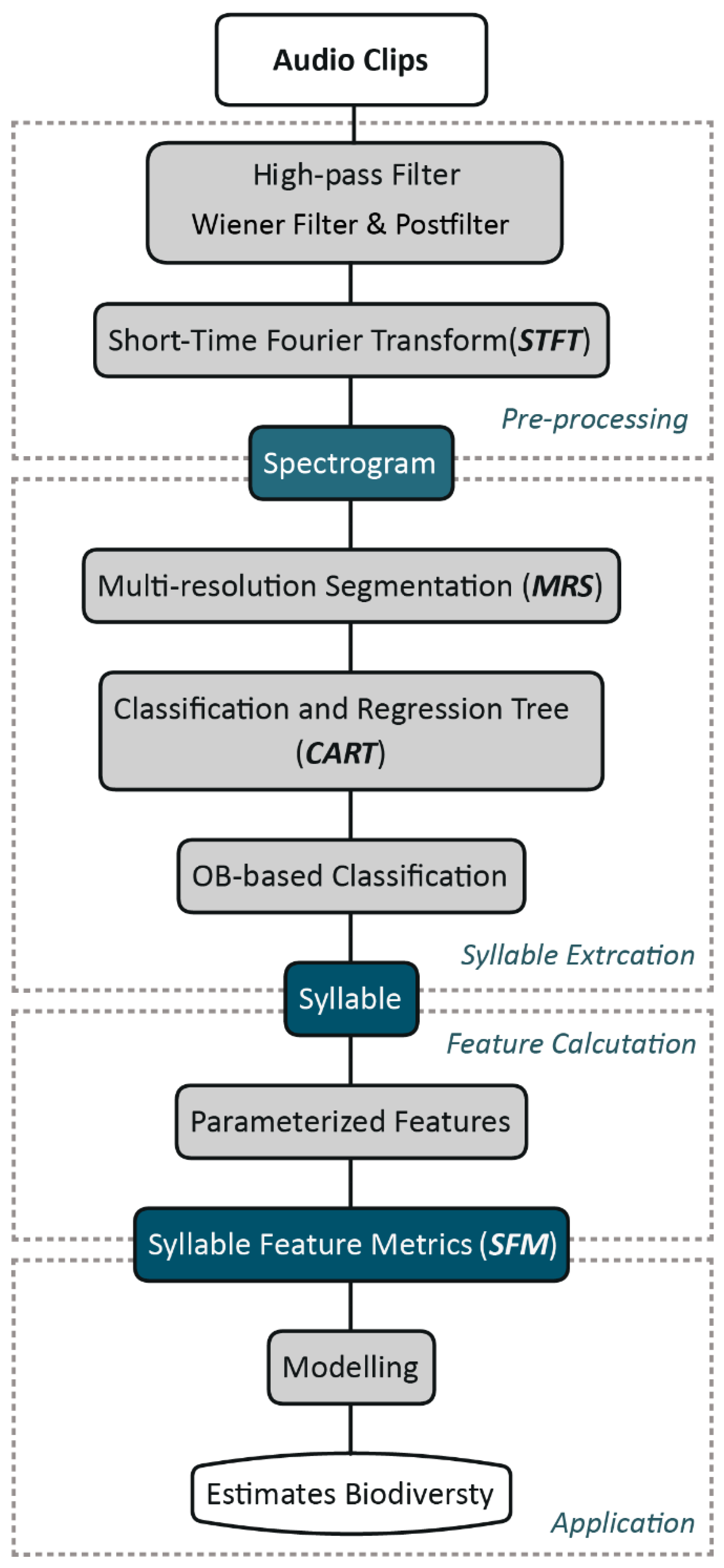

2.2. Methods

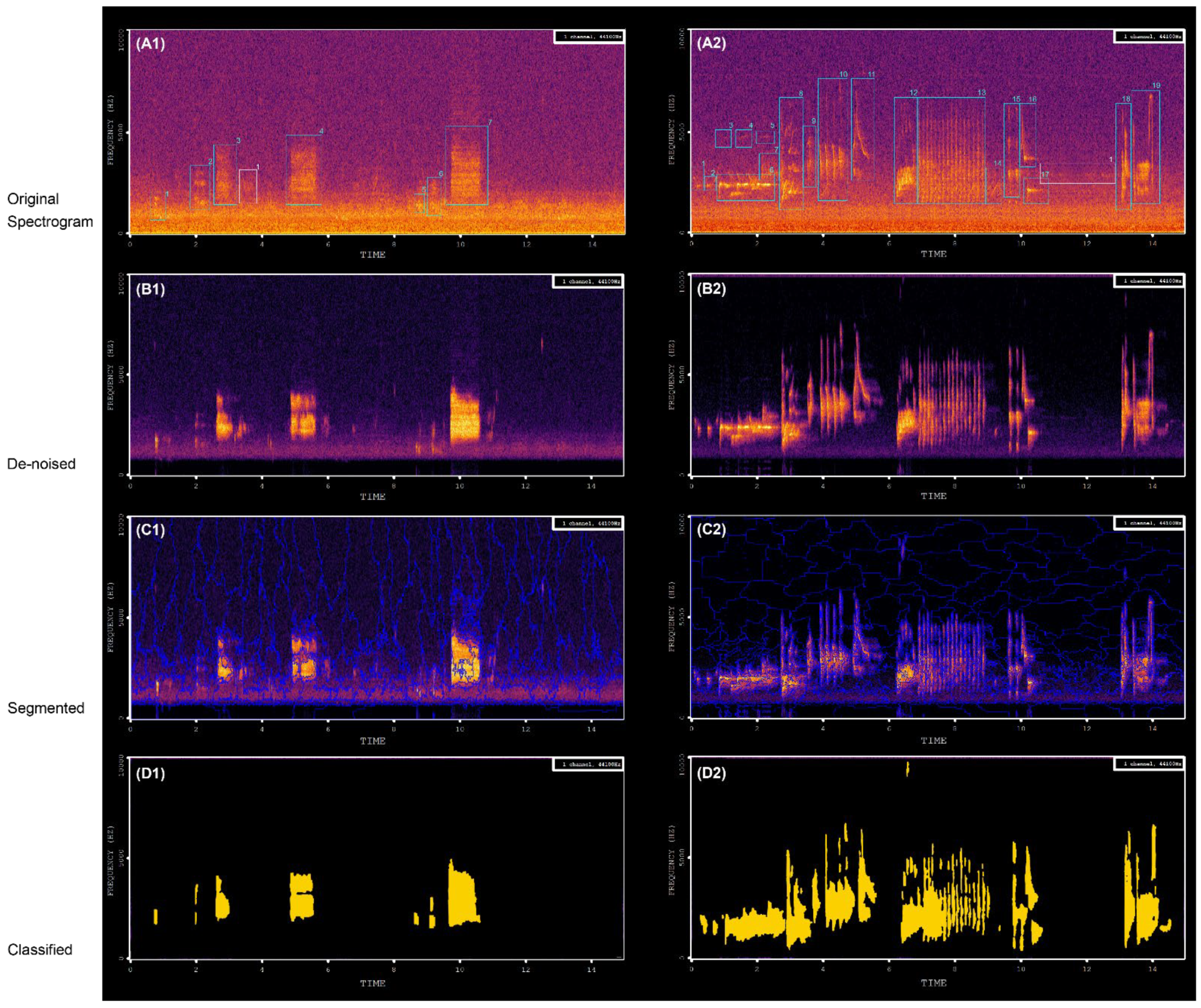

2.2.1. Pre-Processing

- Audio recordings denoising

- Short-time Fourier transformation (STFT)-Spectrogram

2.2.2. Bird Vocalization Extraction

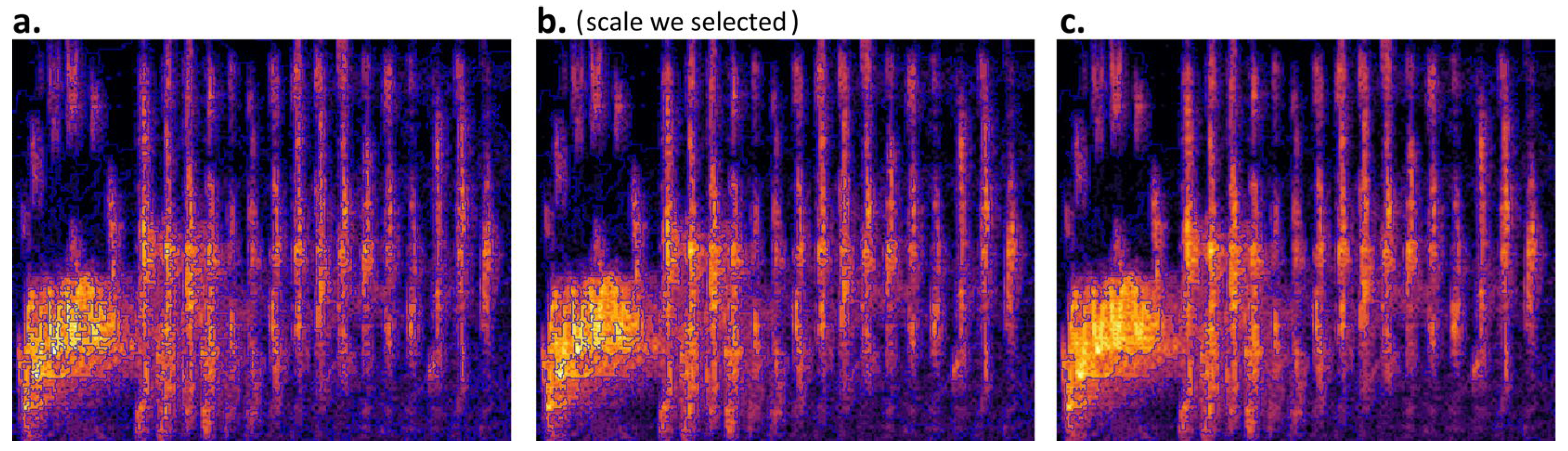

- Segmentation

- Classification

2.2.3. Feature Representation of Extracted Syllables

2.3. Statistical Analysis

2.3.1. Accuracy Assessment

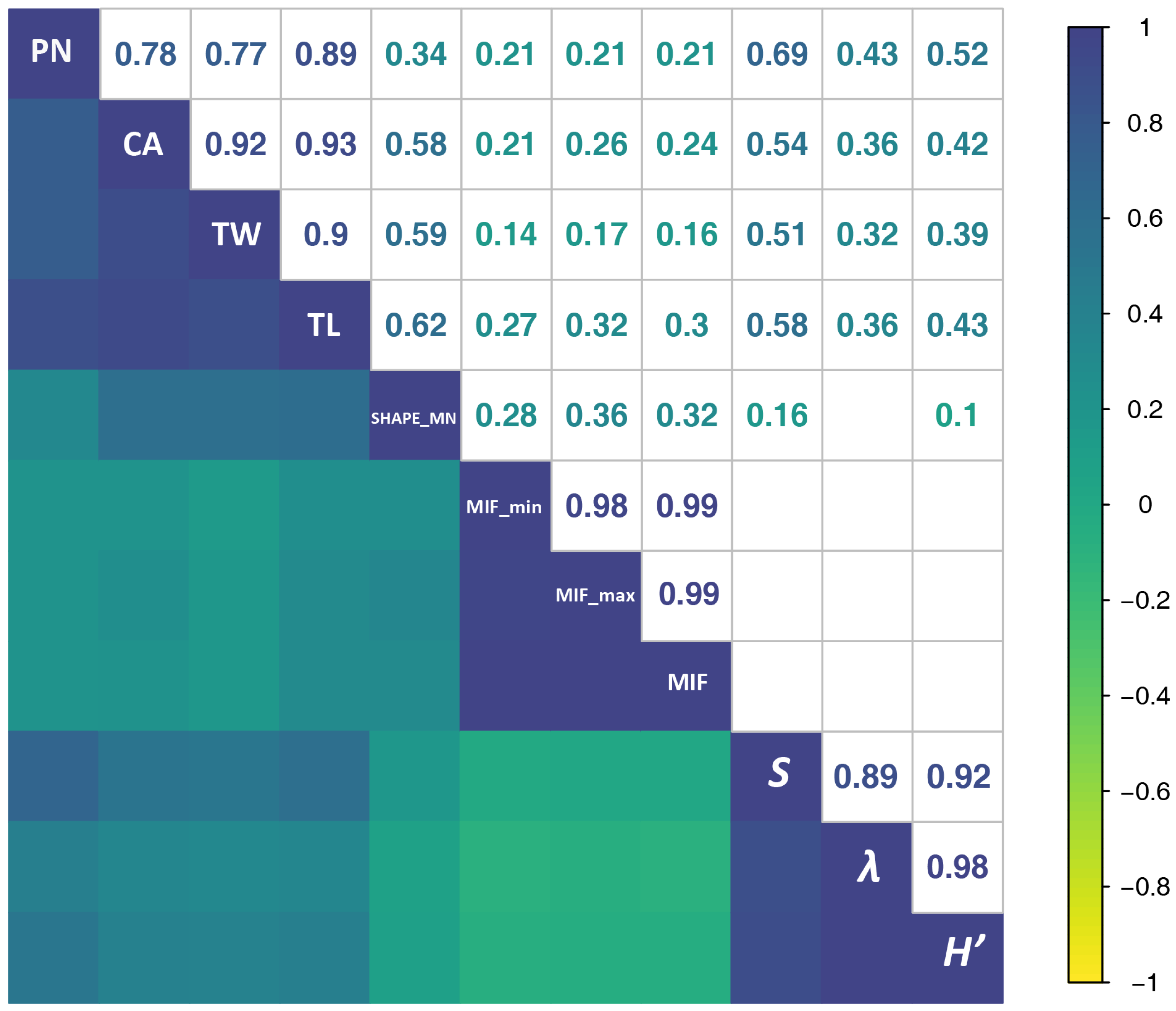

2.3.2. Correlation Analysis

2.3.3. Modelling

3. Results

3.1. Approach Reliability

3.2. SFMs’ Correlation with Biodiversity

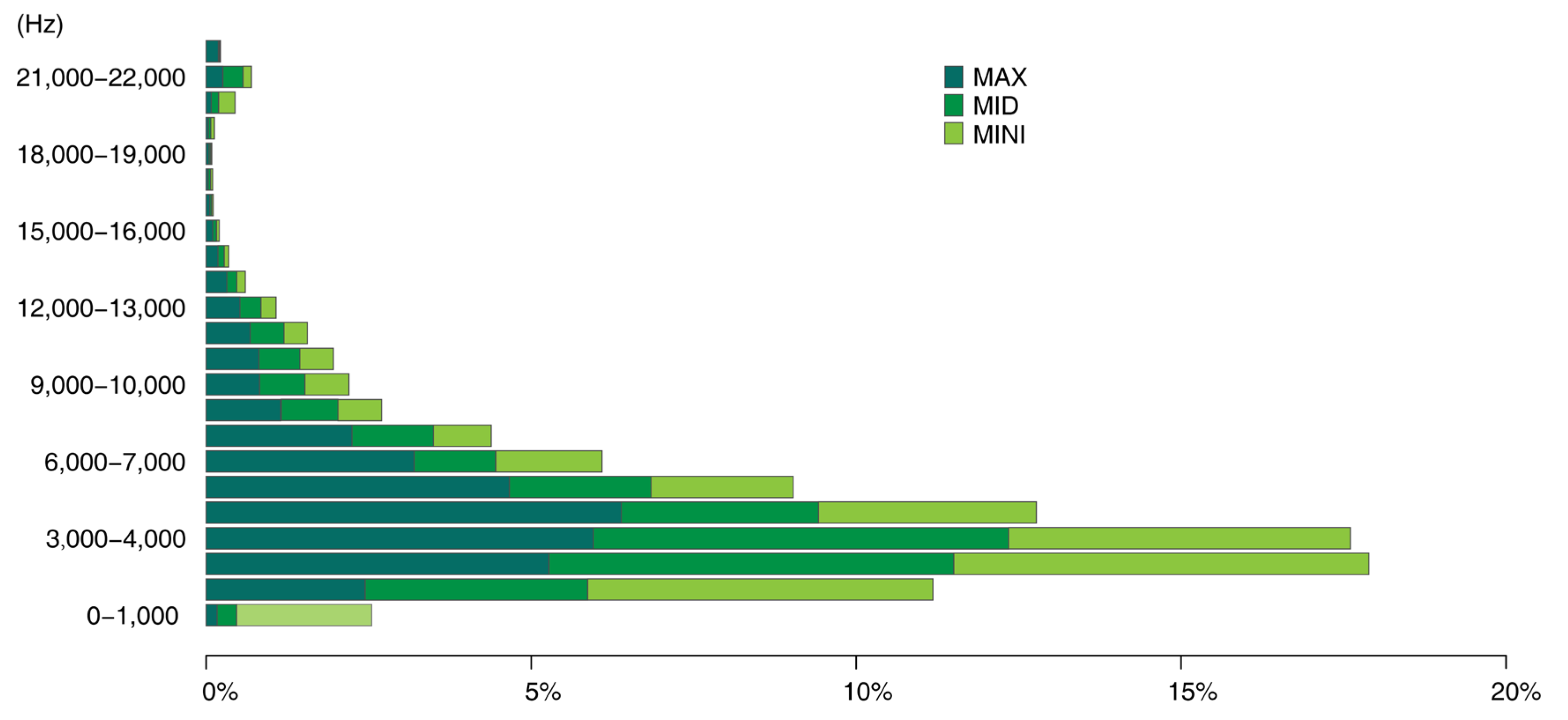

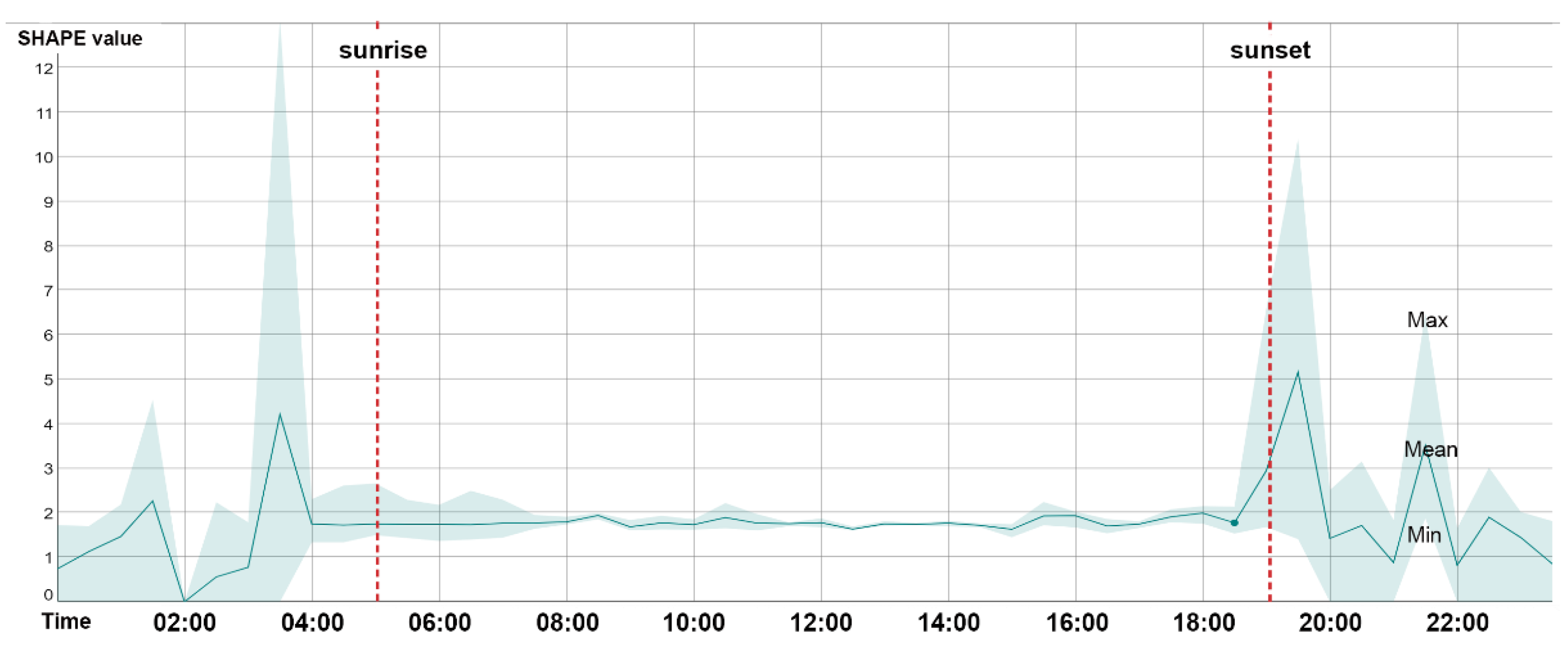

3.3. Prediction of Biodiversity

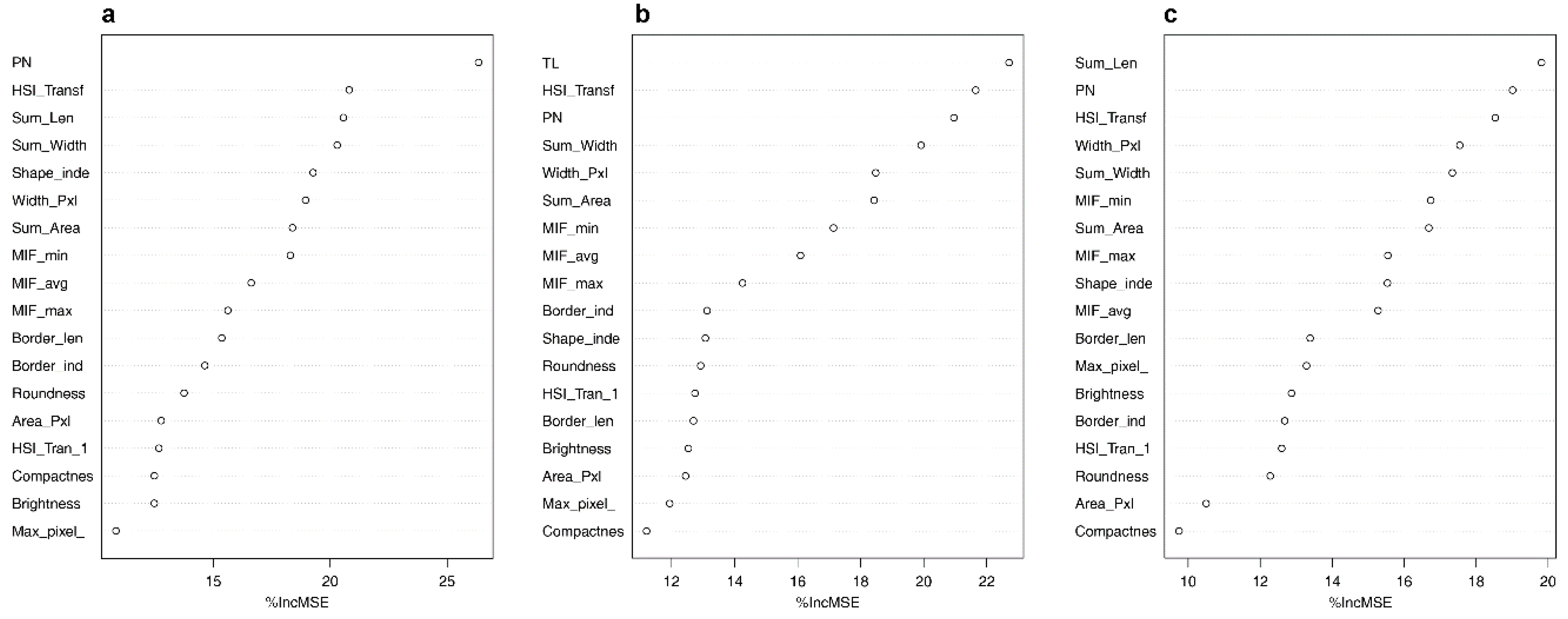

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| No. | Common Name | Binomial Name | Number of Syllable Patches | % | Predominant Frequency Intervals |
|---|---|---|---|---|---|
| 1 | Common Blackbird | Turdus merula | 227 | 23.67049 | / |
| 2 | Eurasian tree sparrow | Passer montanus | 158 | 16.4755 | 2.3–5 kHz or |
| 3 | Azure-winged magpie | Cyanopica cyanus | 120 | 12.51303 | 2–10 kHz |
| 4 | Large-billed crow | Corvus macrorhynchos | 116 | 12.09593 | 1–2 kHz |
| 5 | Spotted dove | Spilopelia chinensis | 109 | 11.36601 | 1–2 kHz |
| 6 | Light-vented bulbul | Pycnonotus sinensis | 55 | 5.735141 | 1.5–4 kHz |
| 7 | Eurasian magpie | Pica pica | 46 | 4.796663 | 0–4 kHz |
| 8 | Common swift | Apus apus | 42 | 4.379562 | 20–16,000 Hz |
| 9 | Yellow-browed warbler | Phylloscopus inornatus | 17 | 1.77268 | 4–8 kHz |
| 10 | Arctic warbler | Phylloscopus borealis | 12 | 1.251303 | / |
| 11 | Crested myna | Acridotheres cristatellus | 7 | 0.729927 | / |
| 12 | Two-barred warbler | Phylloscopus plumbeitarsus | 7 | 0.729927 | / |
| 13 | Dusky warbler | Phylloscopus fuscatus | 6 | 0.625652 | / |
| 14 | Marsh tit | Poecile palustris | 5 | 0.521376 | 6–10 kHz |
| 15 | Grey starling | Spodiopsar cineraceus | 4 | 0.417101 | above 4 kHz |
| 16 | Great spotted woodpecker | Dendrocopos major | 4 | 0.417101 | 0–2.6 kHz |
| 17 | Chinese grosbeak | Eophona migratoria | 4 | 0.417101 | / |
| 18 | Barn swallow | Hirundo rustica | 3 | 0.312826 | / |
| 19 | Chicken | Gallus gallus domesticus | 2 | 0.208551 | 5–10 kHz |
| 20 | Grey-capped greenfinch | Chloris sinica | 2 | 0.208551 | 3–5.5 kHz |
| 21 | Yellow-rumped Flycatcher | Ficedula zanthopygia | 1 | 0.104275 | / |
| 22 | Oriental reed warbler | Acrocephalus orientalis | 1 | 0.104275 | / |
| 23 | Yellow-throated Bunting | Emberiza elegans | 1 | 0.104275 | / |
| 24 | Red-breasted Flycatcher | Ficedula parva | 1 | 0.104275 | / |
| 25 | Carrion crow | Corvus corone | 1 | 0.104275 | 0–8 kHz |
| 26 | Dusky thrush | Turdus eunomus | 1 | 0.104275 | / |
| 27 | Black-browed Reed Warbler | Acrocephalus bistrigiceps | 1 | 0.104275 | / |
| 28 | Grey-capped pygmy woodpecker | Dendrocopos canicapillus | 1 | 0.104275 | 4.5–5 kHz |
| 29 | Naumann’s Thrush | Turdus naumanni | 1 | 0.104275 | / |
| 30 | U1 | / | 1 | 0.104275 | / |
| 31 | U2 | / | 1 | 0.104275 | / |
| 32 | U3 | / | 1 | 0.104275 | / |
| 33 | U4 | / | 1 | 0.104275 | / |

| No. | Metric | Description |
|---|---|---|
| 1 | Border_len (Border Length) | The sum of the edges of the patch. |
| 2 | Width_Pxl (Width) | The number of pixels occupied by the length of the patch. |
| 3 | HSI_Transf | HSI transformation feature of patch hue. |
| 4 | HSI_Tran_1 | HSI transformation feature of patch intensity. |
| 5 | Compactnes | The Compactness feature describes how compact a patch is. It is similar to Border Index but is based on area. However, the more compact a patch is, the smaller its border appears. The compactness of a patch is the product of the length and the width, divided by the number of pixels. |
| 6 | Roundness | The Roundness feature describes how similar a patch is to an ellipse. It is calculated by the difference between the enclosing ellipse and the enclosed ellipse. The radius of the largest enclosed ellipse is subtracted from the radius of the smallest enclosing ellipse. |
| 7 | Area_Pxl (Area of the patch) | The number of pixels forming a patch. If unit information is available, the number of pixels can be converted into a measurement. In scenes that provide no unit information, the area of a single pixel is 1 and the patch area is simply the number of pixels that form it. If the image data provides unit information, the area can be multiplied using the appropriate factor. |
| 8 | Border_ind (Border index) | The Border Index feature describes how jagged a patch is; the more jagged, the higher its border index. This feature is similar to the Shape Index feature, but the Border Index feature uses a rectangular approximation instead of a square. The smallest rectangle enclosing the patch is created and the border index is calculated as the ratio between the border lengths of the patch and the smallest enclosing rectangle. |
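As a rough illustration of how the pixel-based metrics in this table could be derived for a single extracted patch, the following Python sketch computes area, border length, compactness, and border index from a binary mask. It is not the software used in the study. The function name `patch_shape_metrics` is hypothetical, the enclosing rectangle is assumed to be axis-aligned, and "length" is assumed to run along the rows of the mask and "width" along its columns.

```python
def patch_shape_metrics(mask):
    """Compute simple shape metrics for one patch given as a 2D grid of 0/1 values.

    Assumptions (not taken from the source): the enclosing rectangle is the
    axis-aligned bounding box, length = row extent, width = column extent.
    """
    cells = {(r, c) for r, row in enumerate(mask) for c, v in enumerate(row) if v}
    if not cells:
        raise ValueError("mask contains no patch pixels")

    # Area_Pxl: number of pixels forming the patch.
    area = len(cells)

    # Extent of the axis-aligned bounding box, in pixels.
    rows = [r for r, _ in cells]
    cols = [c for _, c in cells]
    length = max(rows) - min(rows) + 1
    width = max(cols) - min(cols) + 1

    # Border_len: count every pixel edge that faces a non-patch neighbour.
    border = sum(
        (r + dr, c + dc) not in cells
        for r, c in cells
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )

    return {
        "area_pxl": area,
        "border_len": border,
        # Compactness: length times width, divided by the number of pixels.
        "compactness": length * width / area,
        # Border index: patch border over the enclosing rectangle's border.
        "border_index": border / (2 * (length + width)),
    }


if __name__ == "__main__":
    # A U-shaped patch: more jagged than its bounding box.
    print(patch_shape_metrics([[1, 0, 1],
                               [1, 1, 1]]))
```

Under these assumptions a filled rectangle scores 1.0 on both compactness and border index, and notches or gaps in the patch push both values above 1.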

| Feature Name | Description | Rel. Imp |
|---|---|---|
| Brightness | Mean value of all image bands | 81.24 |
| Shape index | The smoothness of the boundary of an image object | 10.85 |
| Area | The area of objects in number of pixels | 18.01 |
| Length/width | The ratio of length to width | 7.58 |
| Elliptic fit | How well an image object fits into an ellipse | 1.36 |
| Hue | Mean of hue, one of three color components | 4.97 |
| Saturation | Mean of saturation, one of three color components | 23.12 |
| Intensity | Mean of intensity, one of three color components | 54.33 |
| GLCM-M | Mean value of GLCM (Gray-level Co-occurrence Matrix) | 19.54 |
| GLCM-H | Homogeneity of GLCM (Gray-level Co-occurrence Matrix) | 60.49 |

| SFMs | Description in Landscape Ecology | Transformation | Meaning in Acoustics |
|---|---|---|---|
| NP (Patch Number) | NP is a count of all the patches across the entire landscape. | none | Number of acoustic events. |
| CA (Class Area) | CA is the sum of the areas of all patches belonging to a given class. | none | Proportion of spectrogram covered by acoustic-event patches. |
| SHAPE_MN | SHAPE_MN equals the average shape index of patches across the entire landscape. | none | Average shape index (complexity of patch shape) of the extracted vocalization syllables. |
| TL (Total Length) | The sum of the lengths of all patches belonging to a given spectrogram. | ×15 | Total bandwidth occupancy of acoustic events (Hz). |
| TW (Total Width) | The sum of the widths of all patches belonging to a given spectrogram. | ÷200 | The total duration of the acoustic events (s). |
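The transformations listed for TL and TW can be read as unit conversions from pixels to hertz and seconds. The Python sketch below shows that reading for a list of patch dimensions. It assumes that ×15 means each pixel of patch length spans 15 Hz and that ÷200 means 200 pixels of patch width span one second. The function name `spectrogram_sfms` and the input layout are hypothetical and are not taken from the study.

```python
HZ_PER_LENGTH_PIXEL = 15        # assumed reading of the "×15" transformation
WIDTH_PIXELS_PER_SECOND = 200   # assumed reading of the "÷200" transformation


def spectrogram_sfms(patches):
    """Summarise one spectrogram from its patches.

    `patches` is a list of (length_px, width_px) tuples, one per extracted
    acoustic-event patch (a hypothetical input layout for illustration).
    """
    total_length_px = sum(length for length, _ in patches)
    total_width_px = sum(width for _, width in patches)
    return {
        # NP: number of patches, i.e. number of acoustic events.
        "NP": len(patches),
        # TL: total patch length converted to bandwidth occupancy in Hz.
        "TL_hz": total_length_px * HZ_PER_LENGTH_PIXEL,
        # TW: total patch width converted to duration in seconds.
        "TW_s": total_width_px / WIDTH_PIXELS_PER_SECOND,
    }


if __name__ == "__main__":
    # Two patches: 100 x 40 pixels and 60 x 160 pixels.
    print(spectrogram_sfms([(100, 40), (60, 160)]))
```

With these two example patches the summed length of 160 pixels corresponds to 2400 Hz and the summed width of 200 pixels to 1.0 s.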

| | NP | CA | TL | TW |
|---|---|---|---|---|
| BE | 0.71 | 0.60 | 0.56 | 0.63 |
| AE | 0.89 | 0.74 | 0.71 | 0.79 |

| Response Variable | Model Type a | SFM-Covariates | MSE | R2 |
|---|---|---|---|---|
| Richness | 3 | PN + CA + TW + TL + Border_len + Width_Pxl + HSI_Transf + HSI_Tran_1 + Max_pixel_ + Shape_MN + Compactnes + Brightness + Roundness + Area_Pxl + Border_ind + MIF_min + MIF_max + MIF | 1.47 | 0.57 |
| Simpson diversity | 1 | | 0.30 | 0.38 |
| Shannon diversity | 2 | | 0.27 | 0.41 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhao, Y.; Yan, J.; Jin, J.; Sun, Z.; Yin, L.; Bai, Z.; Wang, C. Diversity Monitoring of Coexisting Birds in Urban Forests by Integrating Spectrograms and Object-Based Image Analysis. Forests 2022, 13, 264. https://doi.org/10.3390/f13020264
