1. Introduction

In the context of extreme weather events and climate change, reducing carbon emissions has become a pressing global issue [1]. To limit corporate carbon emissions, China has established pilot carbon trading markets in major cities such as Beijing, Shanghai, and Guangzhou [2]. China’s carbon market is now transitioning from regional pilot projects to national unification [3]. Moreover, enterprises need to purchase corresponding allowances from forest carbon sequestration products through the Emissions Trading System (ETS) for their carbon emissions from production activities [4,5]. This means that the urban carbon price reflects the expenses related to human activities that produce carbon emissions [6]. In addition, the main reason for offsetting carbon emissions is to enhance forest carbon sequestration capacity [7]. This framework makes the urban carbon price, together with forest carbon sequestration products, the foundation of the ETS [8]. As a result, the urban carbon price serves as a regulatory tool that not only encourages companies to reduce carbon emissions but also guides governments in formulating policies, such as those for forest protection [9,10,11,12]. For instance, a high carbon price can encourage companies to develop more efficient carbon reduction technologies and solutions [10]. Meanwhile, adjusting the carbon price can enhance the forest environment and promote ecological balance by using economic incentives to govern forest management [11,12]. In summary, urban carbon prices are closely linked to forest carbon sequestration [7]. Therefore, accurate carbon price prediction is not only useful for reducing business risk but also serves as a reference for governments in formulating carbon policies, including forest carbon sink management [13]. Consequently, accurate carbon price forecasting is a popular research topic in forest science [8,11,12].

Currently, carbon price prediction methods can be categorized into statistical methods and artificial intelligence (AI) methods [14]. Statistical time-series prediction models, such as Generalized Autoregressive Conditional Heteroskedasticity (GARCH) [15] and Autoregressive Integrated Moving Average (ARIMA) [16], are supported by mature statistical theory and perform well on simple, stable, and strongly periodic time-series prediction problems. However, these methods struggle to model the nonlinear characteristics of time series and cannot effectively handle non-periodic carbon price sequences [17]. AI methods include machine learning models and deep learning models. Machine learning models, such as Support Vector Regression (SVR) [18], Least-Squares Support Vector Regression (LSSVR) [19], eXtreme Gradient Boosting (XGBoost) [20], and Extreme Learning Machine (ELM) [21], have been widely applied in carbon price time-series prediction. Machine learning models can capture nonlinear features by introducing nonlinear functions, resulting in a better fit to nonlinear relationships. However, as machine learning models generally predict the following moment based on the input of the current moment, they are not proficient in handling long-term time series, such as carbon price time series. In addition, most machine learning models focus solely on price-based time-series prediction, ignoring the significant amount of data available from other sources. Similar to stock prices, urban carbon prices are affected by numerous factors, making it difficult to capture the complicated dynamics of the carbon market, particularly when attempting to accurately predict long-term urban carbon prices based solely on price fluctuation trends [22].
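The difference between predicting only the following moment and predicting a longer horizon can be illustrated with a minimal sketch. The function name `make_windows`, the parameters `lookback` and `horizon`, and the price values are hypothetical and chosen only for illustration; they are not taken from any of the cited models.

```python
def make_windows(series, lookback, horizon):
    """Split a 1-D series into (past window, future targets) pairs.

    lookback: number of past observations given to the model.
    horizon:  number of future observations the model must predict.
    """
    if lookback < 1 or horizon < 1:
        raise ValueError("lookback and horizon must be positive")
    pairs = []
    for start in range(len(series) - lookback - horizon + 1):
        past = series[start:start + lookback]
        future = series[start + lookback:start + lookback + horizon]
        pairs.append((past, future))
    return pairs


# Made-up daily prices, for illustration only.
prices = [50.0, 51.2, 49.8, 52.5, 53.1, 54.0, 53.6]

# One-step-ahead framing: each sample has a single target value.
one_step = make_windows(prices, lookback=3, horizon=1)

# Long-horizon framing: each sample has several future targets.
multi_step = make_windows(prices, lookback=3, horizon=3)
```

With a horizon of one, each sample asks the model only for the next value; a longer horizon requires the model to relate the input window to several future steps at once, which is the setting in which long-term dependencies matter.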

Consequently, carbon price prediction based on multi-source data fusion, which can introduce more information, has emerged as a novel solution [23]. Deep learning models are frequently employed in multi-source data scenarios because larger amounts of data necessitate more powerful models [24,25,26]. For instance, Zhang and Xia [24] applied online news data and Google Trends to predict urban carbon prices with a deep learning model. However, current deep learning studies on carbon price forecasting focus only on textual data. Although textual data can provide insights into trends in the development of the carbon market, their limitations should not be overlooked. Subjectivity and ambiguity in textual data lead to significant uncertainty in interpretation and analysis, which can affect the accuracy and reliability of predictive models [27].

By comparison, remote sensing image data are objective, comprehensive, and accurate, and can therefore provide more complete and accurate features related to the factors influencing carbon prices [28]. Moreover, urban remote sensing images not only cover urban areas but also extend to surrounding forest regions [29,30]. Therefore, remote sensing technology provides abundant image data that can capture various environmental factors, such as vegetation coverage, urban forest management, and land-use changes, as well as economic factors, including urban building density and industrialization [31]. This enables a more comprehensive analysis of the factors influencing the supply–demand relationship and price fluctuations in the carbon market. In addition, urban remote sensing image data contain abundant information reflecting the construction and development of cities over time [32]. Analyzing images of the same region from different time periods can better reflect the correlation between time and space, thereby improving the accuracy and reliability of carbon price prediction. Furthermore, remote sensing images are low-cost and easy to obtain. However, to the best of our knowledge, few researchers have focused on mining remote sensing data for accurate carbon price forecasting.

Compared to textual and historical price data, remote sensing images are sparse. It is difficult to directly combine remote sensing images with price data, and a proper fusion method needs to be designed. In computer vision research, artificial neural networks (also called deep learning models), such as Convolutional Neural Networks (CNNs) [33], are used to extract information from images, including remote sensing images [34]. Moreover, with the rapid development of AI, deep learning models, such as Recurrent Neural Networks (RNNs) [35], Temporal Convolutional Networks (TCNs) [36], Gated Recurrent Units (GRUs) [37], and Long Short-Term Memory (LSTM) [38], have also been applied to carbon price time-series prediction. These models have stronger nonlinear modeling capabilities and can handle multivariate time series. Thus, they are considered a promising approach to fusing remote sensing images with historical carbon prices. Unfortunately, artificial neural networks are underutilized in carbon price forecasting. Firstly, deep learning models are typically used only for price-based prediction, as is the case with machine learning methods [35,36,37,38]. Although their nonlinear capability helps them perform well, they also face challenges in capturing long-term dependencies. For example, recurrent networks are prone to the vanishing or exploding gradient problem, which hinders their ability to model long-term dependencies [39]. Secondly, the transformer outperforms RNNs, LSTMs, and similar models [40], and it has been successful in many multi-modal fusion applications, such as ChatGPT-4 [41]. However, to the best of our knowledge, state-of-the-art (SOTA) deep learning models, such as transformers, have not been introduced into carbon price prediction based on multi-modal data fusion, and the power of AI has yet to be fully utilized. Applying advanced transformer models to this problem is the motivation of this work.

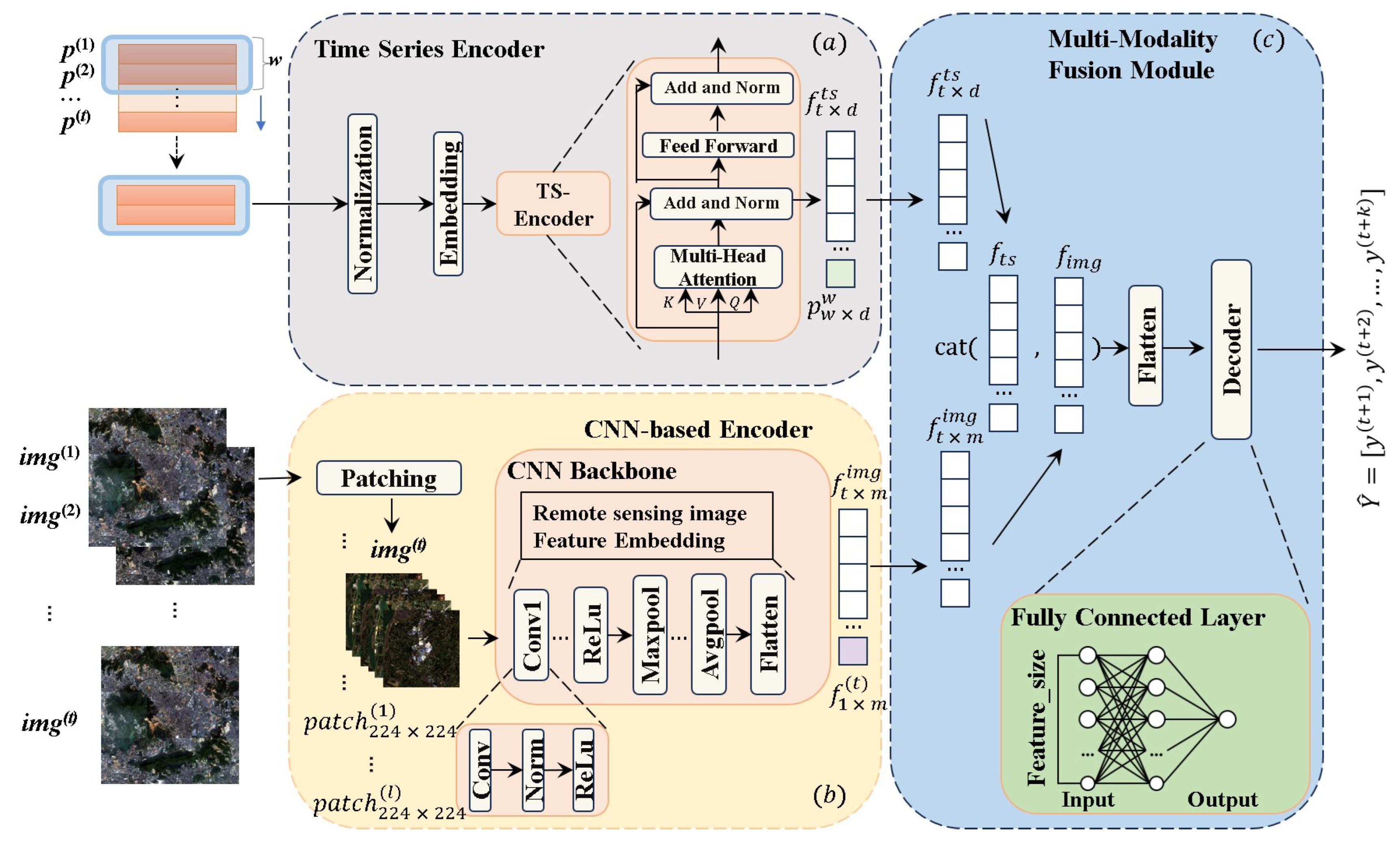

This study introduces remote sensing images into carbon price prediction and proposes a Multi-source Fusion Time Series Transformer (MFTSformer) prediction model. To exploit the useful information in remote sensing images, an encoder–decoder paradigm based on the transformer is proposed to fuse the image and historical price data. To overcome the limitations of traditional recurrent neural networks with regard to long-term prediction, we use the multi-head self-attention mechanism of transformer models to model the input temporal data. We conduct various experiments on the carbon trading markets of a major city in China to validate the proposed strategy and methods. Our proposed MFTSformer method reduces errors by up to 52.24%, 45.07%, 18.42%, and 19.94% in comparison with the four baseline models. The results demonstrate that the additional remote sensing information is useful for accurately forecasting long-term urban carbon prices and that the MFTSformer method is effective.
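The multi-head self-attention mechanism referred to above can be illustrated with a minimal sketch of the standard scaled dot-product formulation. This is not the MFTSformer implementation: the randomly initialized projection matrices, the helper names, and the toy input are assumptions made only for illustration, and the code uses only the Python standard library.

```python
import math
import random


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(q, k):
    """Scaled dot-product weights: softmax(Q K^T / sqrt(d_head)), row by row."""
    d_head = len(q[0])
    k_t = [list(col) for col in zip(*k)]
    scores = matmul(q, k_t)
    return [softmax([s / math.sqrt(d_head) for s in row]) for row in scores]


def random_matrix(rows, cols, rng):
    """Hypothetical stand-in for learned projection weights."""
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def multi_head_self_attention(x, num_heads, rng):
    """Apply multi-head self-attention to x (time steps x features)."""
    d_model = len(x[0])
    if d_model % num_heads != 0:
        raise ValueError("feature size must be divisible by num_heads")
    d_head = d_model // num_heads
    head_outputs, head_weights = [], []
    for _ in range(num_heads):
        wq, wk, wv = (random_matrix(d_model, d_head, rng) for _ in range(3))
        q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
        weights = attention_weights(q, k)  # every step attends to every step
        head_outputs.append(matmul(weights, v))
        head_weights.append(weights)
    # Concatenate the heads along the feature axis, then mix them linearly.
    concat = [sum((h[t] for h in head_outputs), []) for t in range(len(x))]
    wo = random_matrix(d_model, d_model, rng)
    return matmul(concat, wo), head_weights


rng = random.Random(0)
# Toy input: 6 time steps, 4 features per step (made-up values).
x = [[rng.random() for _ in range(4)] for _ in range(6)]
output, weights = multi_head_self_attention(x, num_heads=2, rng=rng)
```

Because every time step attends directly to every other time step, the path between distant observations has constant length, which is the usual argument for why self-attention handles long-term dependencies better than recurrent updates.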

The main contributions of this study are as follows:

We propose a multi-modal fusion carbon price prediction method called MFTSformer, which accurately predicts long-term urban carbon prices. Extensive experiments demonstrate that the proposed MFTSformer is capable of capturing the characteristics of long-term carbon price series and uncovering relevant information from remote sensing images. In practice, it can also help governments formulate carbon pricing policies and help companies mitigate risks.

Introducing urban remote sensing into carbon price forecasting helps to capture information that influences carbon prices. As remote sensing imaging is objective, low-cost, and comprehensive, the results offer new insights for carbon researchers. In particular, as most carbon allowances come from forests, our work also provides information to researchers interested in forest carbon sequestration.

To the best of our knowledge, we are the first to introduce SOTA AI techniques, such as an encoder–decoder framework, a self-attention mechanism, and multi-modal fusion, to uncover remote sensing information for carbon price forecasting.