Digital vs. Analog Learning—Two Content-Similar Interventions and Learning Outcomes

Abstract

1. Introduction

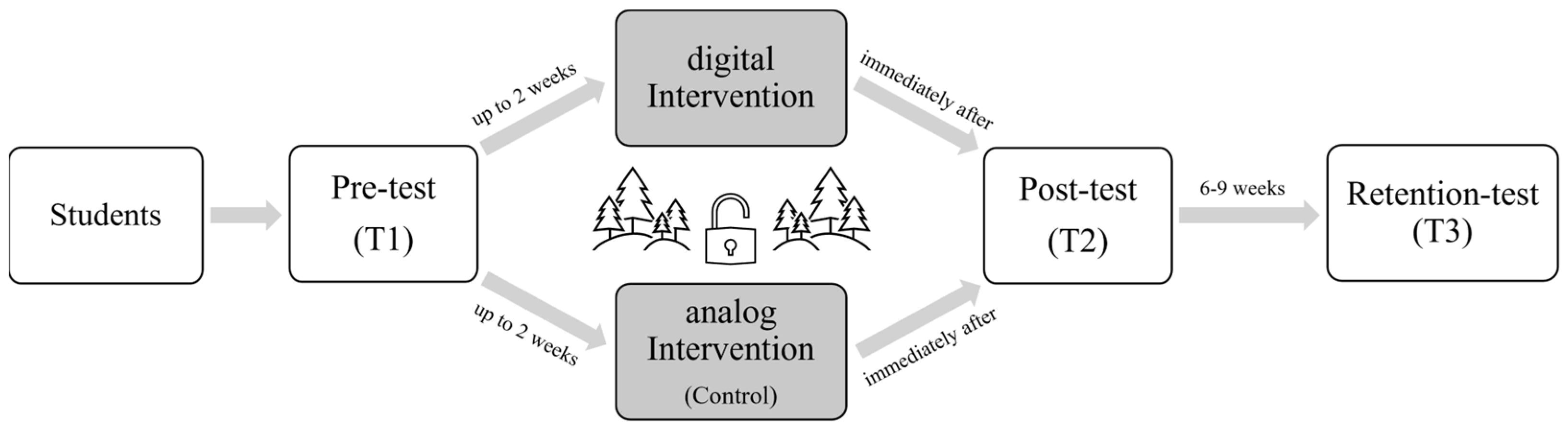

2. Materials and Methods

3. Results

3.1. Quality of the Instrument

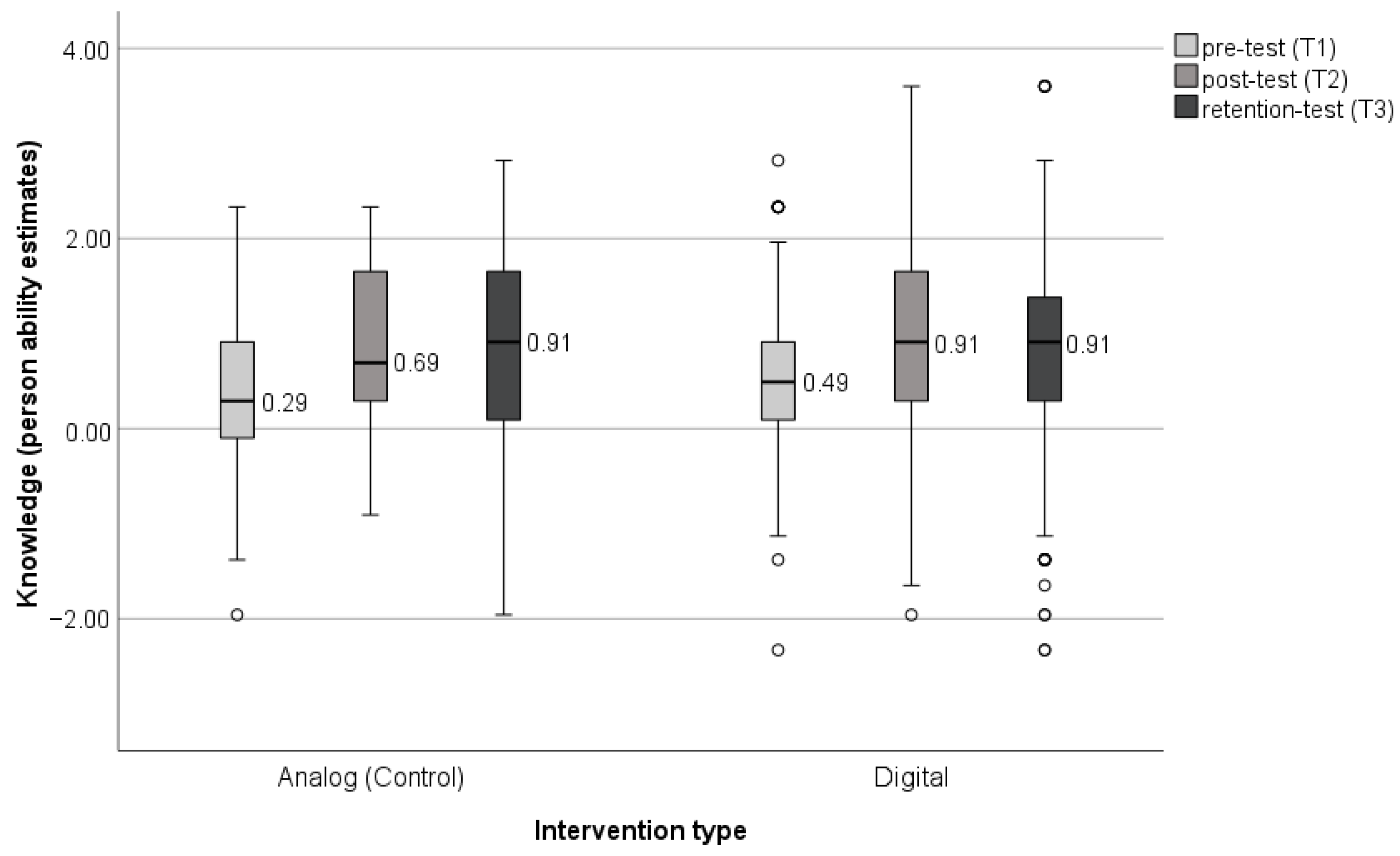

3.2. Knowledge Changes

4. Discussion

4.1. Sustainable Long-Term Knowledge through Both Student-Centered Learning Modules

4.2. Differences in Knowledge Acquisition between the Digital and the Analog Intervention

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| Subtopic | Learning Content |
|---|---|
| The forest as an ecosystem | Students learn about biotic and abiotic environmental factors using the well-known example of the lake ecosystem and transferring them to the forest. |
| The layers of the forest | Students get to know the layers of the forest and conduct and evaluate an experiment on the water storage of the moss layer, followed by deepening exercises. |
| Species knowledge of forest trees | Students identify common forest tree species using a simplified identification key. |
| Succession and deadwood | Students learn about the process of succession and analyze diagrams and texts to evaluate measures for dealing with deadwood in forests. |
| Paper production and recycling | Students get an insight into paper production and resource requirements, recycling exercises, and evaluate common eco-labels related to their gain for nature. |
| The ecological footprint | Students calculate their ecological footprints using an online footprint calculator and evaluate behaviors related to its reduction. |
| Sustainability | Students learn about the three pillars of sustainability and clarify the terms “sustainable”, “climate neutral”, and “carbon neutral”. |
| Protective functions of the forest | Students work out the direct and indirect protective functions of forests. |
| Pollution in the forest | Students evaluate different behaviors in the forests related to their harmfulness and learn about the degradation time of various trash items to reflect on their behavior. |

| | n | M | SD | t | df | p (Two-Sided) |
|---|---|---|---|---|---|---|
| Manalog (T2-T1) | 74 | 0.48 | 0.82 | 1.196 | 126.295 | 0.234 |
| Mdigital (T2-T1) | 231 | 0.35 | 0.84 | | | |
| Manalog (T3-T2) | 74 | −0.04 | 0.75 | 0.353 | 127.304 | 0.725 |
| Mdigital (T3-T2) | 231 | −0.08 | 0.78 | | | |
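
The fractional degrees of freedom in the table above suggest an unequal-variance (Welch) t-test, although this extract does not name the test, so that reading is an assumption. As a minimal sketch under that assumption, the following Python snippet recomputes t and the Welch-Satterthwaite degrees of freedom from the tabulated n, M, and SD alone. The function name `welch_t_from_summary` and the variable names are illustrative and do not come from the article.

```python
import math


def welch_t_from_summary(m1, sd1, n1, m2, sd2, n2):
    """Return (t, df) for Welch's unequal-variance t-test from group summaries.

    Assumption: the two groups are independent samples described only by
    their mean, standard deviation, and size.
    """
    v1 = sd1 ** 2 / n1  # squared standard error of the first group mean
    v2 = sd2 ** 2 / n2  # squared standard error of the second group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    # Welch-Satterthwaite approximation of the degrees of freedom
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Short-term change (T2-T1): analog group vs. digital group, values from the table
t_gain, df_gain = welch_t_from_summary(0.48, 0.82, 74, 0.35, 0.84, 231)

# Change between post-test and retention test (T3-T2), values from the table
t_ret, df_ret = welch_t_from_summary(-0.04, 0.75, 74, -0.08, 0.78, 231)

print(f"T2-T1: t = {t_gain:.3f}, df = {df_gain:.1f}")
print(f"T3-T2: t = {t_ret:.3f}, df = {df_ret:.1f}")
```

Because the tabulated means and standard deviations are rounded to two decimals, the recomputed values land close to, but not exactly on, the reported t and df. The p-values are not recomputed here, since that would require a t-distribution function outside the Python standard library.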

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Fleissner-Martin, J.; Bogner, F.X.; Paul, J. Digital vs. Analog Learning—Two Content-Similar Interventions and Learning Outcomes. Forests 2023, 14, 1807. https://doi.org/10.3390/f14091807
