1. Introduction

Technological advancement continues to create new opportunities for people across a variety of industries [1]. Technology helps to improve the efficiency, quality, and cost-effectiveness of the services provided by businesses. However, technological advancements can be disruptive when they make conventional technologies obsolete. Neha et al. [1] assert that cloud computing, blockchain, and AI are the current developments that may create new opportunities for entrepreneurs. Computer systems are also influencing and improving interactions between consumers and business organizations. Thus, the shift towards the improved use of technology has led to the creation of intelligent systems that can manage and monitor business models with reduced human involvement. AI systems that demonstrate an ability to meet consumers’ demands in different sectors are necessary for the current economy [2]. AI plays a critical role in monitoring the business environment, identifying customers’ needs, and implementing the necessary strategies with minimal or no human intervention. Thus, it bridges the gap between consumers’ needs and effective, high-quality services.

Therefore, AI is modifying the economic landscape and creating changes that can help consumers and entrepreneurs to reap maximum benefits. It is gaining popularity in businesses, especially in business administration, marketing, and financial management [3]. AI creates new opportunities that result in notable transformations in the overall economic system. For instance, it enables the rapid discovery of patterns in big data and improves product design to meet customers’ specifications and preferences [1]. E-commerce is the major beneficiary of the increased use of AI to improve the efficiency and quality of services.

AI helps in reducing complications that may result from human errors. Thus, although AI may reduce employment opportunities, its benefits to organizations are immense.

Notably, AI is a formidable driving force behind the development and success of e-commerce. In e-commerce, AI systems allow for network marketing, electronic payments, and management of the logistics involved in delivering products to customers. Di Vaio et al. [3] note that AI is becoming increasingly vital in e-commerce food companies because it maintains the production sites’ hygienic conditions and ensures safe food production. It also helps in maintaining high levels of cleanliness of the food-producing equipment.

Automated systems collect, evaluate, and assess data at a faster rate than human beings. AI helps e-commerce to capture business trends and the changing market needs of customers. Therefore, the customers’ increased convenience leads to increased satisfaction and a better balance between demand and supply.

Kumar and Trakru [4] report that AI allows e-commerce to develop new ideas on satisfying consumers’ needs and to keep up with their changing preferences and choices. Human intelligence may often be limited in carrying out some tasks in e-commerce, including predicting demand and supply chain mechanisms. AI simulates and extends human intelligence to address the rising challenges in e-commerce [5]. For instance, Soni [6] established that AI helps e-commerce platforms to manage and monitor their customers. Through AI, a business can gather a wide range of information and evaluate customers to ensure that quality services are offered to them. This helps e-commerce platforms understand the factors that influence their current and potential clients’ purchasing behaviors. It improves the interactions between e-commerce companies and their customers through chatbots and messengers. E-commerce companies use automation processes to eliminate redundancies in their operations. Kitchens et al. [7] state that AI allows for automated responses to questions raised by customers. However, Kumar and Trakru [4] warn of potential threats and challenges to customers and e-commerce that limit the efficiency and effectiveness of AI in meeting business expectations. Consequently, it is necessary to explore opportunities and challenges in light of changing consumer demands in e-commerce.

1.1. Statement of the Problem

Even though AI systems have revolutionized e-commerce, courtesy of a wide range of functionalities such as video, image and speech recognition, natural language, and autonomous objects, a range of ethical concerns have been raised over the design, development, and deployment of AI systems. Four critical aspects come into play: fairness, auditability, interpretability and explainability, and transparency. The estimation process in such systems is considered a ‘black box’ as it is less interpretable and comprehensible than other statistical models [8]. Bathaee [9] identifies that there are no profound ways of understanding the decision-making processes of AI systems. The black box concept implies that AI predictions and decisions are similar to those of humans. However, the systems fail to explain why such decisions have been made. While AI processes may be based on perceivable human patterns, understanding them can be likened to trying to understand another highly intelligent species. Because little can be inferred about the conduct and intent of such species (AI systems), especially regarding their behavior patterns, issues of the degree of liability and intent of harm also come into play. The bottom line revolves around how best to guarantee transparency in order to restore trust among the targeted users within e-commerce spaces [9].

1.2. Proposed Solution

The main aim of the current study is to lay the foundation for a universal definition of the term explainability. AI systems need to adopt post hoc machine learning methods that will make them more interpretable [10]. While there is a wide range of taxonomic proposals on the definition and classification of what should be considered ‘explainable’ with regard to AI systems [10], it is contended that there is a need for a uniform blueprint of the definition of the term and its components. To address the main objective, which is solving the black box problem, this research proposes the employment of XAI models. XAI models feature some level of explainability approached from various angles, including interpretability and transparency. Even though the current research recognizes the prevalence of studies on the topic and consults widely within the area, it goes a step further to offer a solution to the existing impasse, as seen in divided scientific contributions concerning what constitutes the concept of ‘explainability’. In this regard, the article will contribute to the state of the art by establishing a more uniform approach towards research that seeks to create evidence-based XAI models that will address ethical concerns and enhance business practices.

1.3. Overview of the Study

The current article provides a critical outline of key tenets of AI and its role in e-commerce. The article is structured into six main sections: the introduction, review of the literature, proposed method, results, discussion, and conclusion. The introduction provides an overview of the research topic, ‘role of AI in shaping consumer demand in e-commerce’, a statement of the problem, and the proposed solution.

Section 2 examines the available literature on artificial intelligence techniques, including sentiment analysis and opinion mining, deep learning, and machine learning. It also examines the AI perspective in the context of shaping the marketing strategies that have been adopted by businesses.

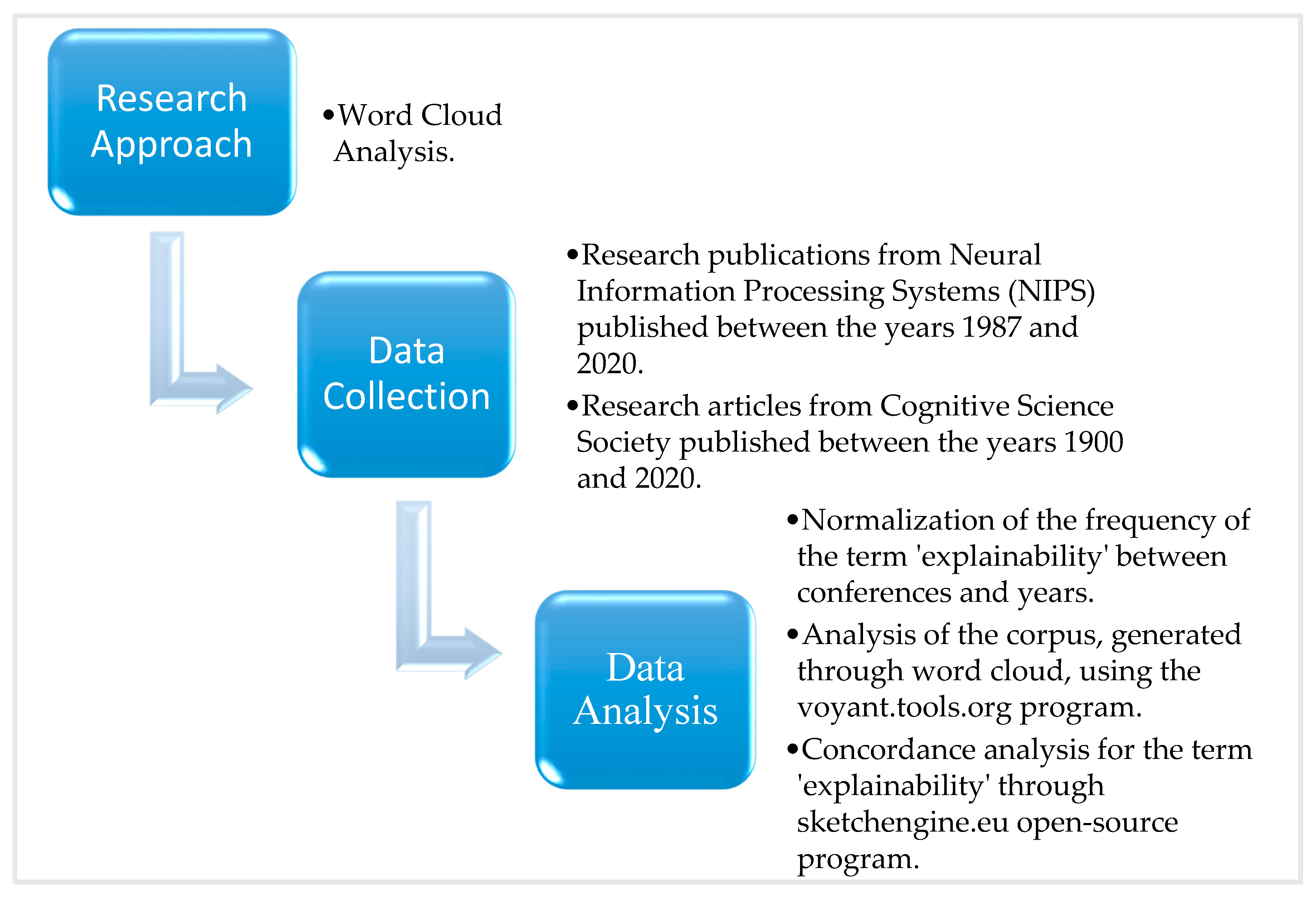

Section 3 discusses the methodology used to explore the research question. It identifies the research approach (word cloud analysis) and the sources of data (Neural Information Processing Systems (NIPS) and Cognitive Science Society (CSS)).

Section 3 also details data analysis techniques, which include corpus analysis and concordance analysis. The results section provides the outcomes of the analyzed data, particularly the corpus generated from the word cloud. Software such as Voyant Tools was used to reveal the most prominent words. A concordance analysis table was also generated in the results section for the term ‘explainability’.

Section 5 provides a detailed discussion of the outcome of the research as well as the key observations made regarding opaque systems, interpretable systems, and comprehensible systems. The overall outcome of the study and implications for future research are provided in the conclusion section.

4. Results

The word cloud plots (Figure 2a,b) are an easy way of understanding the composition of the ‘explainability’ concept and the related semantic meaning across the ML-driven databases chosen for this study. Here, essential words were perceived as those that first appeared in a 20-word window following a search of the term ‘explanation’; such words also had a frequency above the average level. In Figure 2a, the corpus reveals the prominent words as use, explanation, model, and emotion. Other notable words are learn and system. The corpus in Figure 2b shows the prominent words as model, learn, use, and data. Other prominent words are method, task, infer, and image. Essential words such as decision and prediction also appear within the 20-word window. Thus, the AI community in Figure 2a describes the term explainability as being related to words such as use, explanation, and model, among others, suggesting an emphasis on using system models that enhance learning, explanation, decision making, and prediction. The implication in Figure 2b is that the term explainability could be taken to mean using models that allow learning, ease of inference, and prediction, among others.
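
The selection rule described above (words that appear within a 20-word window after the search term and whose frequency exceeds the average) can be sketched in Python. This is a minimal illustration under stated assumptions, not the pipeline used in the study: the regular-expression tokenizer, the optional stop-word set, and the function name `prominent_words` are hypothetical, and no lemmatization is attempted.

```python
import re
from collections import Counter


def prominent_words(text, target="explanation", window=20, stopwords=frozenset()):
    """Return words that follow `target` within `window` tokens and whose
    count is above the mean count of all such words.

    Assumptions (not from the study): lowercase alphabetic tokens,
    an optional caller-supplied stop-word set, no lemmatization.
    """
    tokens = re.findall(r"[a-z]+", text.lower())
    counts = Counter()
    for i, tok in enumerate(tokens):
        if tok == target:
            # Take up to `window` tokens that follow this occurrence.
            following = tokens[i + 1 : i + 1 + window]
            counts.update(w for w in following if w not in stopwords)
    if not counts:
        return {}
    mean = sum(counts.values()) / len(counts)
    # Keep only words whose frequency is strictly above the average.
    return {w: c for w, c in counts.items() if c > mean}


if __name__ == "__main__":
    sample = ("An explanation of the model helps. "
              "The explanation of the model and data.")
    print(prominent_words(sample, window=4, stopwords={"of", "the", "and"}))
    # {'model': 2}
```

In this toy input, `model` follows the search term twice and `helps` once, so only `model` exceeds the mean count of 1.5. On a real corpus, the choice of tokenizer and stop-word list would noticeably change which words count as prominent.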

The corpus generated from the word cloud in Figure 2a reveals that the 20 most prominent words are as follows: system, explanation, model, emotion, learn, use, study, train, method, predict, data, estimate, control, result, show, decision, interpret, design, variance, and image. Analyzing this corpus to formulate a connection between the words and generate meaning using voyant-tools.org (an open-source corpus analysis program) revealed that the most prominent words, as seen in Figure 3, were as follows: control, data, decision, design, and emotion.

Many meaningful sentences in the context of responsible XAI can, therefore, be formed by combining these words. For example, the words could be brought together to imply ‘a design of data control that enhances decision making’ or ‘designing and controlling data in such a way that enhances emotion and decisions’. The corpus from word cloud 2 reveals 20 words as the most prominent (model, use, learn, data, method, predict, behavior, task, perform, base, show, infer, image, algorithm, propose, optimal, object, general, explain, network). Voyant analysis revealed the following as the most prominent words: action, algorithm, base, behavior, data, and explain, as seen in Figure 4 below.

Possible sentences from this word combination include ‘an algorithm that explains data behavior’ or ‘an algorithm behavior that is based on data explanation’. Additionally, a concordance search for the term ‘explainability’ in sketchengine.eu (a corpus analysis tool that shows how real users of a given language use certain words) surfaced several critical definitional terms: predictability, verifiability, user feedback, information management, data insights, analytics, determinism, understanding, and accuracy, as seen in Figure 5 below.
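
A concordance of this kind lists each occurrence of a term together with its surrounding context. The sketch below is a simplified key-word-in-context routine in Python, offered only to illustrate the idea; it is not the Sketch Engine implementation, and the function name `concordance`, the tokenizer, and the `width` parameter are assumptions.

```python
import re


def concordance(text, term, width=5):
    """Return (left context, keyword, right context) for every occurrence
    of `term`, with up to `width` tokens of context on each side.

    Assumptions (not from the study): case-insensitive matching on
    whole word tokens extracted with a simple regular expression.
    """
    tokens = re.findall(r"\w+", text.lower())
    term = term.lower()
    rows = []
    for i, tok in enumerate(tokens):
        if tok == term:
            left = " ".join(tokens[max(0, i - width) : i])
            right = " ".join(tokens[i + 1 : i + 1 + width])
            rows.append((left, tok, right))
    return rows


if __name__ == "__main__":
    sample = "Users value explainability because explainability supports trust."
    for left, key, right in concordance(sample, "explainability", width=2):
        # Right-align the left context so the keyword lines up in a column.
        print(f"{left:>30} | {key} | {right}")
```

Reading down the context columns of such a table is what allows recurring neighbours of the term to be identified as candidate definitional words.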

6. Conclusions

The study’s main purpose was to lay the foundation for a universal definition of the term ‘explainability’. The analyzed data from the word cloud plots revealed that the term ‘explainability’ is mainly associated with words such as model, explanation, and use. These were among the most prominent words in the corpora generated from the word clouds. In the corpus from word cloud 1, the prominent words include system, explanation, model, emotion, learn, use, study, train, method, predict, data, estimate, control, result, show, decision, interpret, design, variance, and image. In the corpus from word cloud 2, they include model, use, learn, data, method, predict, behavior, task, perform, base, show, infer, image, algorithm, propose, optimal, object, general, explain, and network. After the Voyant analysis was conducted, the most prominent words for word cloud 1 included control, data, decision, design, and emotion, while for word cloud 2 they included algorithm, base, behavior, data, and explain. When the words obtained from the Voyant analysis were combined, they provided specific meanings of the word ‘explainability’. The main definitions obtained from the combination of the most frequent words include ‘an algorithm that explains data behavior’, ‘an algorithm behavior that is based on data explanation’, ‘a design of data control that enhances decision making’, and ‘designing and controlling data in such a way that enhances emotion and decisions’.

The application of AI in e-commerce stands to expand in the future, as businesses increasingly appreciate its role in influencing consumer demands. The rapid development of research technology and increased access to the internet present e-commerce businesses with an opportunity to expand their various platforms. Notably, the influence of AI in e-commerce spills over to customer retention and satisfaction. Customers are at the center of the changes and adoption of AI in e-commerce. Hence, e-commerce businesses can further develop contact with customers and establish mature customer relationship management systems.

The researcher of this study has made an effort to provide a critical outline of the key tenets of AI and its role in e-commerce, as well as a comprehensive insight regarding the role of AI in addressing the needs of consumers in the e-commerce industry. Even though the study has attempted to provide a universal meaning of ‘explainability’, the actual impact of AI on consumers’ decisions is not yet clear, considering the notion of the ‘black box’: if the decisions arrived at cannot be explained and the reasons behind such actions given, it will be difficult for people to trust AI systems. Therefore, there is a need for future studies to further examine the need for explainable AI systems in e-commerce and to find solutions to the ‘black box’ issue.

For future research, this study may serve as a ‘template’ for the definition of an explainable system characterized by three aspects: opaque systems, where users have no access to insights that define the involved algorithmic mechanism; interpretable systems, where users have access to the mathematical algorithmic mechanism; and comprehensible systems, where users have access to symbols that enhance their decision making.