Journal Description

Future Internet

Future Internet is an international, peer-reviewed, open access journal on internet technologies and the information society, published monthly online by MDPI.

- Open Access: free for readers, with article processing charges (APC) paid by authors or their institutions.

- High Visibility: indexed within Scopus, ESCI (Web of Science), Ei Compendex, dblp, Inspec, and other databases.

- Journal Rank: JCR - Q2 (Computer Science, Information Systems) / CiteScore - Q1 (Computer Networks and Communications)

- Rapid Publication: manuscripts are peer-reviewed and a first decision is provided to authors approximately 17 days after submission; acceptance to publication is undertaken in 3.6 days (median values for papers published in this journal in the first half of 2025).

- Recognition of Reviewers: reviewers who provide timely, thorough peer-review reports receive vouchers entitling them to a discount on the APC of their next publication in any MDPI journal, in appreciation of the work done.

- Journal Clusters of Network and Communications Technology: Future Internet, IoT, Telecom, Journal of Sensor and Actuator Networks, Network, Signals.

Impact Factor: 3.6 (2024); 5-Year Impact Factor: 3.5 (2024)

Latest Articles

SFC-GS: A Multi-Objective Optimization Service Function Chain Scheduling Algorithm Based on Matching Game

Future Internet 2025, 17(11), 484; https://doi.org/10.3390/fi17110484 (registering DOI) - 22 Oct 2025

Abstract

Service Function Chain (SFC) is a framework that dynamically orchestrates Virtual Network Functions (VNFs) and is essential to enhancing resource scheduling efficiency. However, traditional scheduling methods face several limitations, such as low matching efficiency, suboptimal resource utilization, and limited global coordination capabilities. To this end, we propose a multi-objective scheduling algorithm for SFCs based on matching games (SFC-GS). First, a multi-objective cooperative optimization model is established that aims to reduce scheduling time, increase request acceptance rate, lower latency, and minimize resource consumption. Second, a matching model is developed through the construction of preference lists for service nodes and VNFs, followed by multi-round iterative matching. In each round, only the resource status of the current and neighboring nodes is evaluated, thereby reducing computational complexity and improving response speed. Finally, a hierarchical batch processing strategy is introduced, in which service requests are scheduled in priority-based batches, and subsequent allocations are dynamically adjusted based on feedback from previous batches. This establishes a low-overhead iterative optimization mechanism to achieve global resource optimization. Experimental results demonstrate that, compared to baseline methods, SFC-GS improves request acceptance rate and resource utilization by approximately 8%, reduces latency and resource consumption by around 10%, and offers clear advantages in scheduling time.
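The abstract describes matching driven by preference lists for service nodes and VNFs, but does not spell out the matching procedure. As a rough illustration only, the sketch below assumes a capacity-constrained deferred-acceptance scheme (VNFs propose, nodes keep their preferred VNFs). The function name, data layout, and capacities are hypothetical and are not taken from SFC-GS.

```python
from collections import deque


def match_vnfs_to_nodes(vnf_prefs, node_prefs, capacity):
    """Many-to-one deferred acceptance (assumed scheme, not the paper's).

    vnf_prefs:  {vnf: [node, ...]}, most preferred node first
    node_prefs: {node: [vnf, ...]}, most preferred VNF first
    capacity:   {node: int}, number of VNFs each node can host
    """
    # Lower rank value means the node prefers that VNF more.
    rank = {n: {v: i for i, v in enumerate(p)} for n, p in node_prefs.items()}
    next_choice = {v: 0 for v in vnf_prefs}
    hosted = {n: [] for n in node_prefs}
    free = deque(vnf_prefs)

    while free:
        vnf = free.popleft()
        if next_choice[vnf] >= len(vnf_prefs[vnf]):
            continue  # preference list exhausted: VNF stays unplaced
        node = vnf_prefs[vnf][next_choice[vnf]]
        next_choice[vnf] += 1
        if vnf not in rank[node]:
            free.append(vnf)  # node does not list this VNF; try the next one
            continue
        hosted[node].append(vnf)
        if len(hosted[node]) > capacity[node]:
            # Over capacity: evict the VNF this node likes least.
            worst = max(hosted[node], key=rank[node].__getitem__)
            hosted[node].remove(worst)
            free.append(worst)
    return hosted
```

With three VNFs competing for a single-slot node, the node keeps its top-ranked VNF and the other two fall through to their second choice.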

Open Access Article

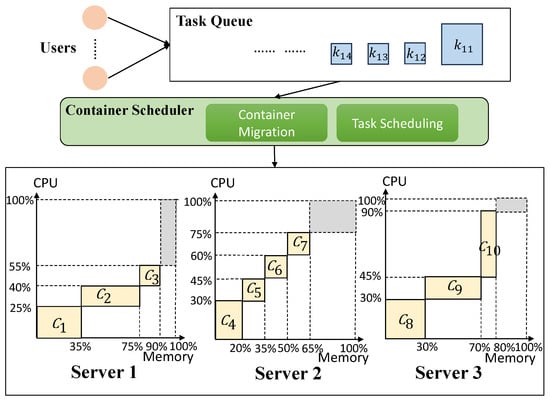

Joint Optimization of Container Resource Defragmentation and Task Scheduling in Queueing Cloud Computing: A DRL-Based Approach

by

Yan Guo, Lan Wei, Cunqun Fan, You Ma, Xiangang Zhao and Henghong He

Future Internet 2025, 17(11), 483; https://doi.org/10.3390/fi17110483 (registering DOI) - 22 Oct 2025

Abstract

Container-based virtualization has become pivotal in cloud computing, and resource fragmentation is inevitable due to the frequency of container deployment/termination and the heterogeneous nature of IoT tasks. In queuing cloud systems, resource defragmentation and task scheduling are interdependent yet rarely co-optimized in existing research. This paper addresses this gap by investigating the joint optimization of resource defragmentation and task scheduling in a queuing cloud computing system. We first formulate the problem to minimize task completion time and maximize resource utilization, then transform it into an online decision problem. We propose a Deep Reinforcement Learning (DRL)-based two-layer iterative approach called DRL-RDG, which uses a Resource Defragmentation approach based on a Greedy strategy (RDG) to find the optimal container migration solution and a DRL algorithm to learn the optimal task-scheduling solution. Simulation results show that DRL-RDG achieves a low average task completion time and high resource utilization, demonstrating its effectiveness in queuing cloud environments.

(This article belongs to the Special Issue Convergence of IoT, Edge and Cloud Systems)

Open Access Article

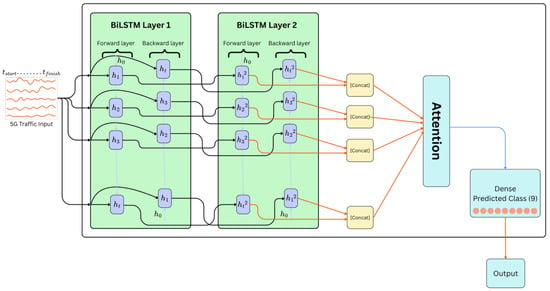

BiTAD: An Interpretable Temporal Anomaly Detector for 5G Networks with TwinLens Explainability

by

Justin Li Ting Lau, Ying Han Pang, Charilaos Zarakovitis, Heng Siong Lim, Dionysis Skordoulis, Shih Yin Ooi, Kah Yoong Chan and Wai Leong Pang

Future Internet 2025, 17(11), 482; https://doi.org/10.3390/fi17110482 (registering DOI) - 22 Oct 2025

Abstract

The transition to 5G networks brings unprecedented speed, ultra-low latency, and massive connectivity. Nevertheless, it introduces complex traffic patterns and broader attack surfaces that render traditional intrusion detection systems (IDSs) ineffective. Existing rule-based methods and classical machine learning approaches struggle to capture the temporal and dynamic characteristics of 5G traffic, while many deep learning models lack interpretability, making them unsuitable for high-stakes security environments. To address these challenges, we propose the Bidirectional Temporal Anomaly Detector (BiTAD), a deep temporal learning architecture for anomaly detection in 5G networks. BiTAD leverages dual-direction temporal sequence modelling with attention to encode both past and future dependencies while focusing on critical segments within network sequences. Like many deep models, BiTAD faces interpretability challenges. To resolve its “black-box” nature, a dual-perspective explainability module, coined TwinLens, is proposed. This module integrates SHAP and TimeSHAP to provide global feature attribution and temporal relevance, delivering dual-perspective interpretability. Evaluated on the public 5G-NIDD dataset, BiTAD demonstrates superior detection performance compared to existing models. TwinLens enables transparent insights by identifying which features were most influential to anomaly predictions and when. By jointly addressing the limitations in temporal modelling and interpretability, our work contributes a practical IDS framework tailored to the demands of next-generation mobile networks.

(This article belongs to the Special Issue Intrusion Detection and Resiliency in Cyber-Physical Systems and Networks—2nd Edition)

Open Access Review

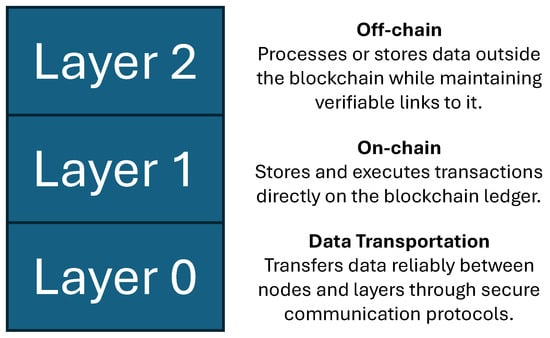

A Review on Blockchain Sharding for Improving Scalability

by

Mahran Morsidi, Sharul Tajuddin, S. H. Shah Newaz, Ravi Kumar Patchmuthu and Gyu Myoung Lee

Future Internet 2025, 17(10), 481; https://doi.org/10.3390/fi17100481 - 21 Oct 2025

Abstract

Blockchain technology, originally designed as a secure and immutable ledger, has expanded its applications across various domains. However, its scalability remains a fundamental bottleneck, limiting throughput, specifically Transactions Per Second (TPS), and increasing confirmation latency. Among the many proposed solutions, sharding has emerged as a promising Layer 1 approach by partitioning blockchain networks into smaller, parallelized components, significantly enhancing processing efficiency while maintaining decentralization and security. In this paper, we conduct a systematic literature review that yields a comprehensive survey of sharding. We provide a detailed comparative analysis of various sharding approaches and emerging AI-assisted sharding approaches, assessing their effectiveness in improving TPS and reducing latency. Notably, our review is the first to incorporate and examine the standardization efforts of the ITU-T and ETSI, with a particular focus on activities related to blockchain sharding. Integrating these standardization activities allows us to bridge the gap between academic research and practical standardization in blockchain sharding, thereby enhancing the relevance and applicability of our review. Additionally, we highlight the existing research gaps, discuss critical challenges such as security risks and inter-shard communication inefficiencies, and provide insightful future research directions. Our work serves as a foundational reference for researchers and practitioners aiming to optimize blockchain scalability through sharding, contributing to the development of more efficient, secure, and high-performance decentralized networks.
Our comparative synthesis further highlights that while Bitcoin and Ethereum remain limited to 7–15 TPS with long confirmation delays, sharding-based systems such as Elastico and OmniLedger have reported significant throughput improvements, demonstrating sharding’s clear advantage over traditional Layer 1 enhancements. In contrast to other state-of-the-art scalability techniques such as block size modification, consensus optimization, and DAG-based architectures, sharding consistently achieves higher transaction throughput and lower latency, indicating its position as one of the most effective Layer 1 solutions for improving blockchain scalability.

(This article belongs to the Special Issue AI and Blockchain: Synergies, Challenges, and Innovations)

Open Access Article

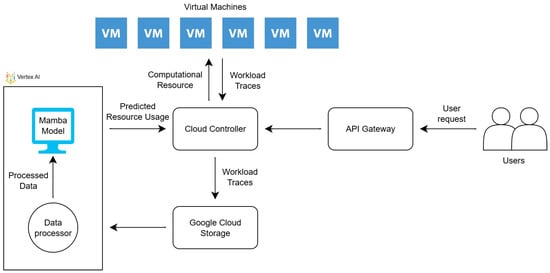

MambaNet0: Mamba-Based Sustainable Cloud Resource Prediction Framework Towards Net Zero Goals

by

Thananont Chevaphatrakul, Han Wang and Sukhpal Singh Gill

Future Internet 2025, 17(10), 480; https://doi.org/10.3390/fi17100480 (registering DOI) - 21 Oct 2025

Abstract

With the ever-growing reliance on cloud computing, efficient resource allocation is crucial for maximising the effective use of provisioned resources from cloud service providers. Proactive resource management is therefore critical for minimising costs and striving for net zero emission goals. One of the most promising methods involves the use of Artificial Intelligence (AI) techniques to analyse and predict resource demand, such as cloud CPU utilisation. This paper presents MambaNet0, a Mamba-based cloud resource prediction framework. The model is implemented on Google’s Vertex AI workbench and uses the real-world Bitbrains Grid Workload Archive-T-12 dataset, which contains the resource usage metrics of 1750 virtual machines. The Mamba model’s performance is then evaluated against established baseline models, including Autoregressive Integrated Moving Average (ARIMA), Long Short-Term Memory (LSTM), and Amazon Chronos, to demonstrate its potential for accurate prediction of CPU utilisation. The MambaNet0 model achieved a 29% improvement in Symmetric Mean Absolute Percentage Error (SMAPE) compared to the best-performing baseline, Amazon Chronos. These findings reinforce the Mamba model’s ability to accurately forecast CPU utilisation, highlighting its potential for optimising cloud resource allocation in contribution to net zero goals.
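For readers unfamiliar with the metric, the sketch below computes SMAPE in one common form, the mean of |F - A| / ((|A| + |F|) / 2) expressed as a percentage. Several SMAPE variants exist and the abstract does not say which one the authors use, so this definition, the function name, and the zero-denominator handling are assumptions.

```python
def smape(actual, forecast):
    """Symmetric Mean Absolute Percentage Error, in percent (0 to 200).

    Uses the variant with (|A| + |F|) / 2 in the denominator. A pair where
    both values are zero contributes zero error (an assumed convention).
    """
    if len(actual) != len(forecast) or not actual:
        raise ValueError("inputs must be non-empty and of equal length")
    total = 0.0
    for a, f in zip(actual, forecast):
        denom = (abs(a) + abs(f)) / 2
        total += 0.0 if denom == 0 else abs(f - a) / denom
    return 100.0 * total / len(actual)
```

A lower SMAPE indicates a closer forecast; a perfect forecast scores 0.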

Open Access Article

The Paradox of AI Knowledge: A Blockchain-Based Approach to Decentralized Governance in Chinese New Media Industry

by

Jing Wu and Yaoyi Cai

Future Internet 2025, 17(10), 479; https://doi.org/10.3390/fi17100479 - 20 Oct 2025

Abstract

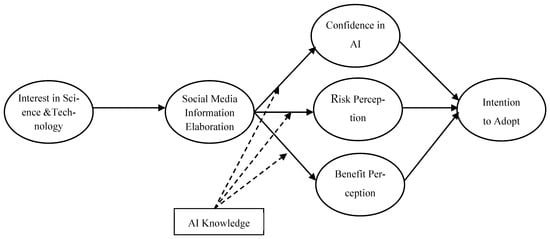

AI text-to-video systems, such as OpenAI’s Sora, promise substantial efficiency gains in media production but also pose risks of biased outputs, opaque optimization, and deceptive content. Using the Orientation–Stimulus–Orientation–Response (O-S-O-R) model, we conduct an empirical study with 209 Chinese new media professionals and employ structural equation modeling to examine how information elaboration relates to AI knowledge, perceptions, and adoption intentions. Our findings reveal a knowledge paradox: higher objective AI knowledge negatively moderates elaboration, suggesting that centralized information ecosystems can misguide even well-informed practitioners. Building on these behavioral insights, we propose a blockchain-based governance framework that operationalizes five mechanisms to enhance oversight and trust while maintaining efficiency: Expert Assessment DAOs, Community Validation DAOs, real-time algorithm monitoring, professional integrity protection, and cross-border coordination. While our study focuses on China’s substantial new media market, the observed patterns and design principles generalize to global contexts. This work contributes empirical grounding for Web3-enabled AI governance, specifies implementable smart-contract patterns for multi-stakeholder validation and incentives, and outlines a research agenda spanning longitudinal, cross-cultural, and implementation studies.

(This article belongs to the Special Issue Blockchain and Web3: Applications, Challenges and Future Trends—2nd Edition)

Open Access Article

Towards Proactive Domain Name Security: An Adaptive System for .ro domains Reputation Analysis

by

Carmen Ionela Rotună, Ioan Ștefan Sacală and Adriana Alexandru

Future Internet 2025, 17(10), 478; https://doi.org/10.3390/fi17100478 - 18 Oct 2025

Abstract

In a digital landscape marked by the exponential growth of cyber threats, the development of automated domain reputation systems is extremely important. Emerging technologies such as artificial intelligence and machine learning now enable proactive and scalable approaches to early identification of malicious or suspicious domains. This paper presents an adaptive domain name reputation system that integrates advanced machine learning to enhance cybersecurity resilience. The proposed framework uses domain data from the .ro domain registry and several other sources (blacklists, whitelists, DNS, SSL certificates), detects anomalies using machine learning techniques, and scores domain security risk levels. A supervised XGBoost model is trained and assessed through five-fold stratified cross-validation and a held-out 80/20 split. On an example dataset of 25,000 domains, the system attains an accuracy of 0.993 and an F1 score of 0.993 and is exposed through a lightweight Flask service that performs asynchronous feature collection for near real-time scoring. The contribution is a blueprint that links list supervision with registry/DNS/TLS features and deployable inference to support proactive domain abuse mitigation in ccTLD environments.

(This article belongs to the Special Issue Adversarial Attacks and Cyber Security)

Open Access Article

Uncensored AI in the Wild: Tracking Publicly Available and Locally Deployable LLMs

by

Bahrad A. Sokhansanj

Future Internet 2025, 17(10), 477; https://doi.org/10.3390/fi17100477 - 18 Oct 2025

Abstract

Open-weight generative large language models (LLMs) can be freely downloaded and modified. Yet, little empirical evidence exists on how these models are systematically altered and redistributed. This study provides a large-scale empirical analysis of safety-modified open-weight LLMs, drawing on 8608 model repositories and evaluating 20 representative modified models on unsafe prompts designed to elicit, for example, election disinformation, criminal instruction, and regulatory evasion. This study demonstrates that modified models exhibit substantially higher compliance: while unmodified models complied with only 19.2% of unsafe requests on average, modified variants complied at an average rate of 80.0%. Modification effectiveness was independent of model size, with smaller, 14-billion-parameter variants sometimes matching or exceeding the compliance levels of 70-billion-parameter versions. The ecosystem is highly concentrated yet structurally decentralized; for example, the top 5% of providers account for over 60% of downloads and the top 20 for nearly 86%. Moreover, more than half of the identified models use GGUF packaging, optimized for consumer hardware, and 4-bit quantization methods proliferate widely, though full-precision and lossless 16-bit models remain the most downloaded. These findings demonstrate how locally deployable, modified LLMs represent a paradigm shift for Internet safety governance, calling for new regulatory approaches suited to decentralized AI.

(This article belongs to the Special Issue Artificial Intelligence (AI) and Natural Language Processing (NLP))

Open Access Article

Integrating Large Language Models into Automated Software Testing

by

Yanet Sáez Iznaga, Luís Rato, Pedro Salgueiro and Javier Lamar León

Future Internet 2025, 17(10), 476; https://doi.org/10.3390/fi17100476 - 18 Oct 2025

Abstract

►▼

Show Figures

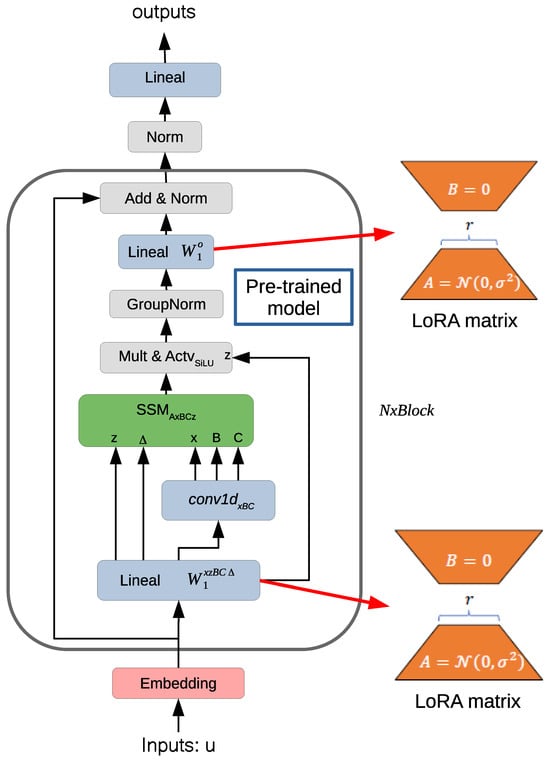

This work investigates the use of LLMs to enhance automation in software testing, with a particular focus on generating high-quality, context-aware test scripts from natural language descriptions, while addressing both text-to-code and text+code-to-code generation tasks. The Codestral Mamba model was fine-tuned by proposing

[...] Read more.

This work investigates the use of LLMs to enhance automation in software testing, with a particular focus on generating high-quality, context-aware test scripts from natural language descriptions, while addressing both text-to-code and text+code-to-code generation tasks. The Codestral Mamba model was fine-tuned by proposing a way to integrate LoRA matrices into its architecture, enabling efficient domain-specific adaptation and positioning Mamba as a viable alternative to Transformer-based models. The model was trained and evaluated on two benchmark datasets: CONCODE/CodeXGLUE and the proprietary TestCase2Code dataset. Through structured prompt engineering, the system was optimized to generate syntactically valid and semantically meaningful code for test cases. Experimental results demonstrate that the proposed methodology successfully enables the automatic generation of code-based test cases using large language models. In addition, this work reports secondary benefits, including improvements in test coverage, automation efficiency, and defect detection when compared to traditional manual approaches. The integration of LLMs into the software testing pipeline also showed potential for reducing time and cost while enhancing developer productivity and software quality. The findings suggest that LLM-driven approaches can be effectively aligned with continuous integration and deployment workflows. This work contributes to the growing body of research on AI-assisted software engineering and offers practical insights into the capabilities and limitations of current LLM technologies for testing automation.

Open Access Article

Decentralized Federated Learning for IoT Malware Detection at the Multi-Access Edge: A Two-Tier, Privacy-Preserving Design

by

Mohammed Asiri, Maher A. Khemakhem, Reemah M. Alhebshi, Bassma S. Alsulami and Fathy E. Eassa

Future Internet 2025, 17(10), 475; https://doi.org/10.3390/fi17100475 - 17 Oct 2025

Abstract

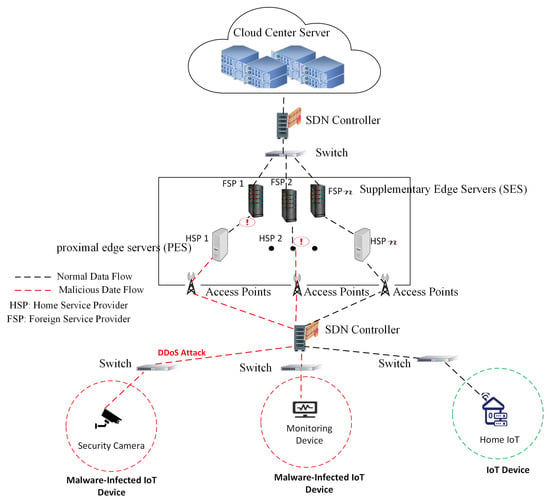

Botnet attacks on Internet of Things (IoT) devices are escalating at the 5G/6G multi-access edge, yet most federated learning frameworks for IoT malware detection (FL-IMD) still hinge on a central aggregator, enlarging the attack surface, weakening privacy, and creating a single point of failure. We propose a two-tier, fully decentralized FL architecture aligned with MEC’s Proximal Edge Server (PES)/Supplementary Edge Server (SES) hierarchy. PES nodes train locally and encrypt updates with the Cheon–Kim–Kim–Song (CKKS) scheme; SES nodes verify ECDSA-signed provenance, homomorphically aggregate ciphertexts, and finalize each round via an Algorand-style committee that writes a compact, tamper-evident record (update digests/URIs and a global-model hash) to an append-only ledger. Using the N-BaIoT benchmark with an unsupervised autoencoder, we evaluate known-device and leave-one-device-out regimes against a classical centralized baseline and a cryptographically hardened but server-centric variant. With the heavier CKKS profile, attack sensitivity is preserved (TPR

(This article belongs to the Special Issue Edge-Cloud Computing and Federated-Split Learning in Internet of Things—Second Edition)

Open Access Article

Bi-Scale Mahalanobis Detection for Reactive Jamming in UAV OFDM Links

by

Nassim Aich, Zakarya Oubrahim, Hachem Ait Talount and Ahmed Abbou

Future Internet 2025, 17(10), 474; https://doi.org/10.3390/fi17100474 - 17 Oct 2025

Abstract

Reactive jamming remains a critical threat to low-latency telemetry of Unmanned Aerial Vehicles (UAVs) using Orthogonal Frequency Division Multiplexing (OFDM). In this paper, a Bi-scale Mahalanobis approach is proposed to detect and classify reactive jamming attacks on UAVs; it jointly exploits window-level energy and the Sevcik fractal dimension and employs self-adapting thresholds to detect any drift in additive white Gaussian noise (AWGN), fading effects, or Radio Frequency (RF) gain. The simulations were conducted on 5000 frames of OFDM signals, which were distorted by Rayleigh fading, a ±10 kHz frequency drift, and log-normal power shadowing. The proposed method achieved a precision of 99.4%, a recall of 100%, an F1 score of 99.7%, an area under the receiver operating characteristic curve (AUC) of 0.9997, and a mean alarm latency of 80 μs. It reinforces jamming resilience in low-power commercial UAVs while requiring no extra RF hardware and avoiding heavy deep learning computation.
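To make the core statistic concrete, the sketch below computes a Mahalanobis distance for a two-feature vector, assumed here to be (window energy, fractal dimension), against a baseline fitted on jam-free windows. It covers only the distance step; the bi-scale windowing, the Sevcik fractal dimension, and the self-adapting thresholds from the abstract are not reproduced, and all names are hypothetical.

```python
import math


def fit_baseline(samples):
    """Return the mean and inverse covariance of 2-D feature vectors.

    samples: list of (feature_1, feature_2) pairs from jam-free windows.
    """
    n = len(samples)
    mx = sum(x for x, _ in samples) / n
    my = sum(y for _, y in samples) / n
    # Unbiased sample covariance entries.
    sxx = sum((x - mx) ** 2 for x, _ in samples) / (n - 1)
    syy = sum((y - my) ** 2 for _, y in samples) / (n - 1)
    sxy = sum((x - mx) * (y - my) for x, y in samples) / (n - 1)
    det = sxx * syy - sxy ** 2
    if det <= 0:
        raise ValueError("covariance matrix is singular")
    # Closed-form inverse of a 2x2 symmetric matrix.
    inv = ((syy / det, -sxy / det), (-sxy / det, sxx / det))
    return (mx, my), inv


def mahalanobis(point, mean, inv):
    """Mahalanobis distance of a 2-D point from the fitted baseline."""
    dx, dy = point[0] - mean[0], point[1] - mean[1]
    q = (dx * (inv[0][0] * dx + inv[0][1] * dy)
         + dy * (inv[1][0] * dx + inv[1][1] * dy))
    return math.sqrt(max(q, 0.0))
```

A detector built on this would flag a window when its distance exceeds a threshold; how that threshold adapts over time is the part the paper contributes and is left out here.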

(This article belongs to the Special Issue Intelligent IoT and Wireless Communication)

Open Access Article

QL-AODV: Q-Learning-Enhanced Multi-Path Routing Protocol for 6G-Enabled Autonomous Aerial Vehicle Networks

by

Abdelhamied A. Ateya, Nguyen Duc Tu, Ammar Muthanna, Andrey Koucheryavy, Dmitry Kozyrev and János Sztrik

Future Internet 2025, 17(10), 473; https://doi.org/10.3390/fi17100473 - 16 Oct 2025

Abstract

With the arrival of sixth-generation (6G) wireless systems comes radical potential for the deployment of autonomous aerial vehicle (AAV) swarms in mission-critical applications, ranging from disaster rescue to intelligent transportation. However, 6G-supporting AAV environments present challenges such as dynamic three-dimensional topologies, highly restrictive energy constraints, and extremely low latency demands, which substantially degrade the efficiency of conventional routing protocols. To this end, this work presents a Q-learning-enhanced ad hoc on-demand distance vector (QL-AODV) protocol. This intelligent routing protocol uses reinforcement learning within the AODV protocol to support adaptive, data-driven route selection in highly dynamic aerial networks. QL-AODV offers four novelties: (i) a multipath route set collection methodology that retains up to ten candidate routes for each destination using an extended route reply (RREP) waiting mechanism; (ii) a more detailed RREP message format with cumulative node buffer usage, enabling informed decision-making; (iii) a normalized 3D state space model recording hop count, average buffer occupancy, and peak buffer saturation, optimized to adhere to aerial network dynamics; and (iv) a lightweight distributed Q-learning approach at the source node that uses an ε-greedy policy to balance exploration and exploitation. Large-scale simulations conducted with NS-3.34 for various node densities and mobility conditions confirm the better performance of QL-AODV compared to conventional AODV. In high-mobility environments, QL-AODV offers up to 9.8% improvement in packet delivery ratio and up to 12.1% increase in throughput, while remaining persistently scalable for various network sizes. The results indicate that QL-AODV is a reliable, scalable, and intelligent routing method for next-generation AAV networks operating in the demanding environments expected for 6G.
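The ε-greedy trade-off mentioned above can be illustrated with a deliberately simplified sketch: a source node keeps one Q-value per candidate route and updates it from an observed reward. This is a single-state form with no next-state term, so it omits the paper's 3D state space and RREP handling. The class name, reward signal, and parameter values are assumptions for illustration only.

```python
import random


class RouteSelector:
    """Epsilon-greedy choice over candidate routes (simplified, single state)."""

    def __init__(self, routes, epsilon=0.1, alpha=0.5, rng=None):
        self.q = {route: 0.0 for route in routes}  # one Q-value per route
        self.epsilon = epsilon  # probability of exploring a random route
        self.alpha = alpha      # learning rate
        self.rng = rng or random.Random()

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.q))  # explore
        return max(self.q, key=self.q.get)        # exploit best-known route

    def update(self, route, reward):
        # Move the estimate towards the observed reward.
        self.q[route] += self.alpha * (reward - self.q[route])
```

In use, the source would call `choose()` before sending, observe a reward (for example, derived from delivery success), and then call `update()` for the route it used.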

(This article belongs to the Special Issue Moving Towards 6G Wireless Technologies—2nd Edition)

Open Access Article

Security Analysis and Designing Advanced Two-Party Lattice-Based Authenticated Key Establishment and Key Transport Protocols for Mobile Communication

by

Mani Rajendran, Dharminder Chaudhary, S. A. Lakshmanan and Cheng-Chi Lee

Future Internet 2025, 17(10), 472; https://doi.org/10.3390/fi17100472 - 16 Oct 2025

Abstract

►▼

Show Figures

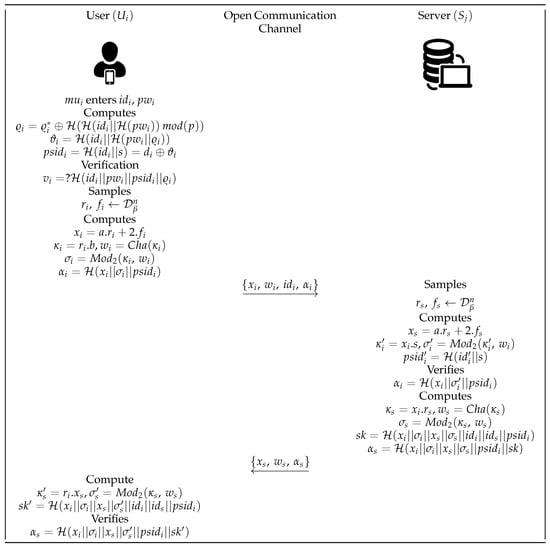

In this paper, we have proposed a two-party authenticated key establishment (AKE), and authenticated key transport protocols based on lattice-based cryptography, aiming to provide security against quantum attacks for secure communication. This protocol enables two parties, who may share long-term public keys, to

[...] Read more.

In this paper, we propose two-party authenticated key establishment (AKE) and authenticated key transport protocols based on lattice-based cryptography, aiming to provide security against quantum attacks for secure communication. The construction enables two parties, who may share long-term public keys, to securely establish a shared session key, and supports transport of the session key from the server, while achieving mutual authentication. Our construction leverages the hardness of the Ring Learning With Errors (Ring-LWE) lattice problem, ensuring robustness against quantum and classical adversaries. Unlike traditional schemes, whose security depends on number-theoretic assumptions that are vulnerable to quantum attacks, our protocol ensures security in the post-quantum era. The proposed protocol ensures forward secrecy, providing security even if the long-term key is compromised. It also provides the essential property of key freshness and resistance against man-in-the-middle attacks, impersonation attacks, replay attacks, and key mismatch attacks. The proposed key transport protocol, in turn, provides key freshness, anonymity, and resistance against man-in-the-middle attacks, impersonation attacks, replay attacks, and key mismatch attacks. A two-party key transport protocol is a cryptographic protocol in which one party (typically a trusted key distribution center or sender) securely generates and sends a session key to another party. Unlike key exchange protocols (where both parties contribute to key generation), key transport protocols rely on one party to generate the key and deliver it securely. The protocol requires a minimal number of exchanged messages and can reduce the number of communication rounds, which helps minimize communication overhead.

Open Access Article

Explainable AI-Based Semantic Retrieval from an Expert-Curated Oncology Knowledge Graph for Clinical Decision Support

by

Sameer Mushtaq, Marcello Trovati and Nik Bessis

Future Internet 2025, 17(10), 471; https://doi.org/10.3390/fi17100471 - 16 Oct 2025

Abstract

The modern oncology landscape is characterised by a deluge of high-dimensional data from genomic sequencing, medical imaging, and electronic health records, negatively impacting the analytical capacity of clinicians and health practitioners. This field is not new and it has drawn significant attention from the research community. However, one of the main limiting issues is the data itself. Despite the vast amount of available data, most of it lacks scalability, quality, and semantic information. This work is motivated by the data platform provided by OncoProAI, an AI-driven clinical decision support platform designed to address this challenge by enabling highly personalised, precision cancer care. The platform is built on a comprehensive knowledge graph, formally modelled as a directed acyclic graph, which has been manually populated, assessed and maintained to provide a unique data ecosystem. This enables targeted and bespoke information extraction and assessment.

(This article belongs to the Special Issue Artificial Intelligence for Smart Healthcare: Methods, Applications, and Challenges)

Open Access Article

Multifractality and Its Sources in the Digital Currency Market

by

Stanisław Drożdż, Robert Kluszczyński, Jarosław Kwapień and Marcin Wątorek

Future Internet 2025, 17(10), 470; https://doi.org/10.3390/fi17100470 - 13 Oct 2025

Abstract

Multifractality in time series analysis characterizes the presence of multiple scaling exponents, indicating heterogeneous temporal structures and complex dynamical behaviors beyond simple monofractal models. In the context of digital currency markets, multifractal properties arise due to the interplay of long-range temporal correlations and heavy-tailed distributions of returns, reflecting intricate market microstructure and trader interactions. Incorporating multifractal analysis into the modeling of cryptocurrency price dynamics enhances the understanding of market inefficiencies. It may also improve volatility forecasting and facilitate the detection of critical transitions or regime shifts. Based on the multifractal cross-correlation analysis (MFCCA), whose special case is the multifractal detrended fluctuation analysis (MFDFA), the most commonly used practical tools for quantifying multifractality, we applied a recently proposed method of disentangling sources of multifractality in time series to the most representative instruments from the digital market. They include Bitcoin (BTC), Ethereum (ETH), decentralized exchanges (DEX) and non-fungible tokens (NFT). The results indicate the significant role of heavy tails in generating a broad multifractal spectrum. However, they also clearly demonstrate that the primary source of multifractality lies in the temporal correlations in the series, and without them, multifractality fades out. Characteristically, these temporal correlations do not, to a large extent, depend on the thickness of the tails of the fluctuation distribution. These observations, made here in the context of the digital currency market, provide a further strong argument for the validity of the proposed methodology of disentangling sources of multifractality in time series.

Open Access Article

Modular Microservices Architecture for Generative Music Integration in Digital Audio Workstations via VST Plugin

by

Adriano N. Raposo and Vasco N. G. J. Soares

Future Internet 2025, 17(10), 469; https://doi.org/10.3390/fi17100469 - 12 Oct 2025

Abstract

This paper presents the design and implementation of a modular cloud-based architecture that enables generative music capabilities in Digital Audio Workstations through a MIDI microservices backend and a user-friendly VST plugin frontend. The system comprises a generative harmony engine deployed as a standalone service, a microservice layer that orchestrates communication and exposes an API, and a VST plugin that interacts with the backend to retrieve harmonic sequences and MIDI data. Among the microservices is a dedicated component that converts textual chord sequences into MIDI files. The VST plugin allows the user to drag and drop the generated chord progressions directly into a DAW’s MIDI track timeline. This architecture prioritizes modularity, cloud scalability, and seamless integration into existing music production workflows, while abstracting away technical complexity from end users. The proposed system demonstrates how microservice-based design and cross-platform plugin development can be effectively combined to support generative music workflows, offering both researchers and practitioners a replicable and extensible framework.

(This article belongs to the Special Issue Scalable and Distributed Cloud Continuum Orchestration for Next-Generation IoT Applications: Latest Advances and Prospects—2nd Edition)

Open Access Article

Intelligent Control Approaches for Warehouse Performance Optimisation in Industry 4.0 Using Machine Learning

by

Ádám Francuz and Tamás Bányai

Future Internet 2025, 17(10), 468; https://doi.org/10.3390/fi17100468 - 11 Oct 2025

Abstract

In conventional logistics optimization problems, an objective function describes the relationship between parameters. However, in many industrial practices, such a relationship is unknown, and only observational data is available. The objective of the research is to use machine learning-based regression models to uncover patterns in the warehousing dataset and use them to generate an accurate objective function. The models are not only suitable for prediction, but also for interpreting the effect of input variables. This data-driven approach is consistent with the automated, intelligent systems of Industry 4.0, while Industry 5.0 provides opportunities for sustainable, flexible, and collaborative development. In this research, machine learning (ML) models were tested on a fictional dataset using Automated Machine Learning (AutoML), through which Light Gradient Boosting Machine (LightGBM) was selected as the best method (R² = 0.994). Feature Importance and Partial Dependence Plots revealed the key factors influencing storage performance and their functional relationships. Defining performance as a cost indicator allowed us to interpret optimization as cost minimization, demonstrating that ML-based methods can uncover hidden patterns and support efficiency improvements in warehousing. The proposed approach not only achieves outstanding predictive accuracy, but also transforms model outputs into actionable, interpretable insights for warehouse optimization. By combining automation, interpretability, and optimization, this research advances the practical realization of intelligent warehouse systems in the era of Industry 4.0.

(This article belongs to the Special Issue Artificial Intelligence and Control Systems for Industry 4.0 and 5.0)

Open Access Article

Beyond Accuracy: Benchmarking Machine Learning Models for Efficient and Sustainable SaaS Decision Support

by

Efthimia Mavridou, Eleni Vrochidou, Michail Selvesakis and George A. Papakostas

Future Internet 2025, 17(10), 467; https://doi.org/10.3390/fi17100467 - 11 Oct 2025

Abstract

Machine learning (ML) methods have been successfully employed to support decision-making for Software as a Service (SaaS) providers. While most of the published research primarily emphasizes prediction accuracy, other important aspects, such as cloud deployment efficiency and environmental impact, have received comparatively less attention. It is also critical to account for factors such as training time, prediction time, and carbon footprint in production. SaaS decision support systems use the output of ML models to provide actionable recommendations, such as running reactivation campaigns for users who are likely to churn. To this end, in this paper, we present a benchmarking comparison of 17 different ML models for churn prediction in SaaS, which includes cloud deployment efficiency metrics (e.g., latency, prediction time, etc.) and sustainability metrics (e.g., CO₂ emissions, consumed energy, etc.) along with predictive performance metrics (e.g., AUC, Log Loss, etc.). Two public datasets are employed, and experiments are repeated on four different machines, locally and in the cloud. A new Green Efficiency Weighted Score (GEWS) is also introduced as a step towards choosing the simpler, greener, and more efficient ML model. Experimental results indicated XGBoost and LightGBM as the models offering a good balance of predictive performance, fast training and inference times, and limited emissions, while the importance of region selection for minimizing the carbon footprint of the ML models was confirmed.

(This article belongs to the Special Issue Distributed Machine Learning and Federated Edge Computing for IoT)

Open Access Review

Building Trust in Autonomous Aerial Systems: A Review of Hardware-Rooted Trust Mechanisms

by

Sagir Muhammad Ahmad, Mohammad Samie and Barmak Honarvar Shakibaei Asli

Future Internet 2025, 17(10), 466; https://doi.org/10.3390/fi17100466 - 10 Oct 2025

Abstract

Unmanned aerial vehicles (UAVs) are redefining both civilian and defense operations, with swarm-based architectures unlocking unprecedented scalability and autonomy. However, these advancements introduce critical security challenges, particularly in location verification and authentication. This review provides a comprehensive synthesis of hardware security primitives (HSPs)—including Physical Unclonable Functions (PUFs), Trusted Platform Modules (TPMs), and blockchain-integrated frameworks—as foundational enablers of trust in UAV ecosystems. We systematically analyze communication architectures, cybersecurity vulnerabilities, and deployment constraints, followed by a comparative evaluation of HSP-based techniques in terms of energy efficiency, scalability, and operational resilience. The review further identifies unresolved research gaps and highlights transformative trends such as AI-augmented environmental PUFs, post-quantum secure primitives, and RISC-V-based secure control systems. By bridging current limitations with emerging innovations, this work underscores the pivotal role of hardware-rooted security in shaping the next generation of autonomous aerial networks.

(This article belongs to the Special Issue Security and Privacy Issues in the Internet of Cloud—2nd Edition)

Open Access Article

OptoBrain: A Wireless Sensory Interface for Optogenetics

by

Rodrigo de Albuquerque Pacheco Andrade, Helder Eiki Oshiro, Gabriel Augusto Ginja, Eduardo Colombari, Maria Celeste Dias, José A. Afonso and João Paulo Pereira do Carmo

Future Internet 2025, 17(10), 465; https://doi.org/10.3390/fi17100465 (registering DOI) - 9 Oct 2025

Abstract

Optogenetics leverages light to control neural circuits, but traditional systems are often bulky and tethered, limiting their use. This work introduces OptoBrain, a novel, portable wireless system for optogenetics designed to overcome these challenges. The system integrates modules for multichannel data acquisition, smart neurostimulation, and continuous processing, with a focus on low-power and low-voltage operation. OptoBrain features up to eight neuronal acquisition channels with a low input-referred noise (e.g., 0.99 µV RMS at 250 sps with 1 V/V gain), and reliably streams data via a Bluetooth 5.0 link at a measured throughput of up to 400 kbps. Experimental results demonstrate robust performance, highlighting its potential as a simple, practical, and low-cost solution for emerging optogenetics research centers and enabling new avenues in neuroscience.

Topics

Topic in

Education Sciences, Future Internet, Information, Sustainability

Advances in Online and Distance Learning

Topic Editors: Neil Gordon, Han Reichgelt

Deadline: 31 December 2025

Topic in

Applied Sciences, Electronics, Future Internet, IoT, Technologies, Inventions, Sensors, Vehicles

Next-Generation IoT and Smart Systems for Communication and Sensing

Topic Editors: Dinh-Thuan Do, Vitor Fialho, Luis Pires, Francisco Rego, Ricardo Santos, Vasco Velez

Deadline: 31 January 2026

Topic in

Entropy, Future Internet, Healthcare, Sensors, Data

Communications Challenges in Health and Well-Being, 2nd Edition

Topic Editors: Dragana Bajic, Konstantinos Katzis, Gordana Gardasevic

Deadline: 28 February 2026

Topic in

AI, Computers, Education Sciences, Societies, Future Internet, Technologies

AI Trends in Teacher and Student Training

Topic Editors: José Fernández-Cerero, Marta Montenegro-Rueda

Deadline: 11 March 2026

Special Issues

Special Issue in

Future Internet

Digital Twins in Next-Generation IoT Networks

Guest Editors: Junhui Jiang, Yu Zhao, Mengmeng Yu, Dongwoo Kim

Deadline: 25 October 2025

Special Issue in

Future Internet

Internet of Things and Cyber-Physical Systems, 3rd Edition

Guest Editor: Iwona Grobelna

Deadline: 30 October 2025

Special Issue in

Future Internet

IoT–Edge–Cloud Computing and Decentralized Applications for Smart Cities

Guest Editors: Antonis Litke, Rodger Lea, Takuro Yonezawa

Deadline: 30 October 2025

Special Issue in

Future Internet

Intelligent Decision Support Systems and Prediction Models in IoT-Based Scenarios

Guest Editors: Mario Casillo, Marco Lombardi, Domenico Santaniello

Deadline: 31 October 2025

Topical Collections

Topical Collection in

Future Internet

Information Systems Security

Collection Editor: Luis Javier Garcia Villalba

Topical Collection in

Future Internet

Innovative People-Centered Solutions Applied to Industries, Cities and Societies

Collection Editors: Dino Giuli, Filipe Portela

Topical Collection in

Future Internet

Computer Vision, Deep Learning and Machine Learning with Applications

Collection Editors: Remus Brad, Arpad Gellert

Topical Collection in

Future Internet

5G/6G Networks for the Internet of Things: Communication Technologies and Challenges

Collection Editor: Sachin Sharma