FlockAI: A Testing Suite for ML-Driven Drone Applications

Abstract

1. Introduction

- A programming paradigm tailored to the characteristics of ML-driven drone applications. Its expressive model lets developers design, customize, and configure complex drone deployments, including the on-board sensors, compute modules, resource capabilities, flight path, and the emulated world along with its simulation settings. Most importantly, a declarative algorithm interface lets users deploy trained models and run ML inference tasks on the drone while it is in flight;

- A configurable energy profiler, based on an ensemble of state-of-the-art energy models for drones. The profiler lets users specify the parameters that govern the multi-grain energy consumption of the drone and of the application as a whole, and it reports energy measurements for drone flight, computational processing, and communication overhead. Users can start from “energy profiles” with pre-filled parameters, tweak the parameterization, or replace the model implementation entirely, as long as it adheres to the energy interface;

- A monitoring system with probing modules for measuring and evaluating the resource utilization of a drone application. Users can compose custom metric collectors to inject application-specific metrics and build more complex models on top of them, such as QoS and monetary cost;

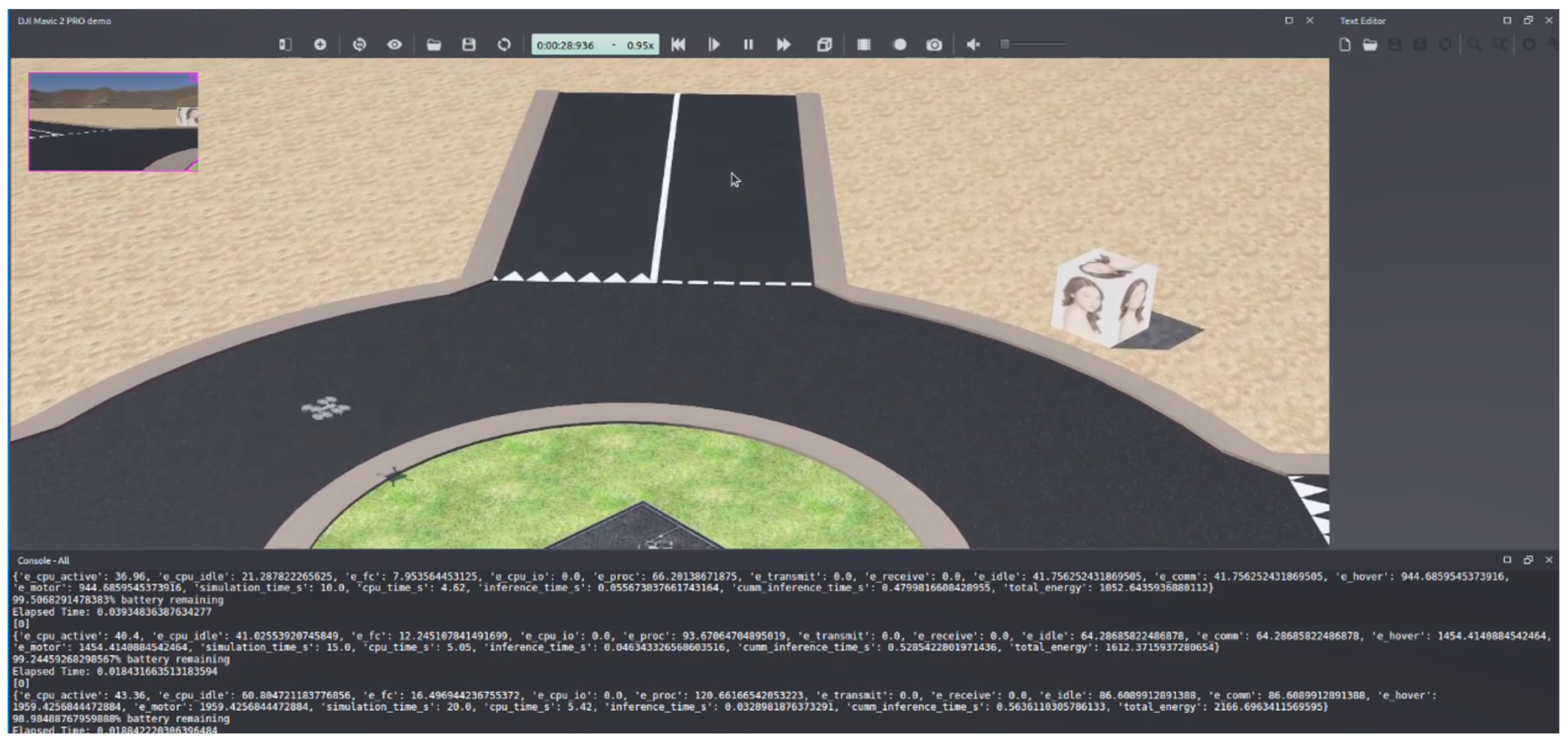

- The FlockAI framework (https://unic-ailab.github.io/flockai/), an open, modular-by-design Python framework that supports ML practitioners in the rapid deployment and repeatable testing of ML-driven drone applications over the Webots robotics simulator (https://cyberbotics.com/). A screenshot is provided in Figure 1.
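As a rough illustration of the custom metric collectors mentioned in the monitoring contribution above, the sketch below registers plain Python callables as metrics and derives a monetary-cost metric from an energy reading. `MetricRegistry`, `register`, `collect`, the price of 0.30 currency units per kWh, and the sample energy value are all hypothetical; none of them is taken from the FlockAI API.

```python
from typing import Callable, Dict


class MetricRegistry:
    """Hypothetical registry of named metric collectors (not FlockAI's API)."""

    def __init__(self) -> None:
        self._collectors: Dict[str, Callable[[Dict[str, float]], float]] = {}

    def register(self, name: str, fn: Callable[[Dict[str, float]], float]) -> None:
        # Each collector receives the metrics gathered so far, so later
        # collectors can be composed from earlier ones.
        self._collectors[name] = fn

    def collect(self) -> Dict[str, float]:
        values: Dict[str, float] = {}
        for name, fn in self._collectors.items():  # registration order
            values[name] = fn(values)
        return values


JOULES_PER_KWH = 3.6e6
PRICE_PER_KWH = 0.30  # assumed price, for illustration only

registry = MetricRegistry()
# Stand-in for a probe reading of total energy, in joules (made-up value).
registry.register("eTotal", lambda m: 120_000.0)
# Composite metric: energy cost derived from the eTotal collected above.
registry.register("energyCost", lambda m: m["eTotal"] / JOULES_PER_KWH * PRICE_PER_KWH)

metrics = registry.collect()
print(metrics)
```

Passing the already-collected values to each collector is one simple way to let a cost or QoS model build on lower-level metrics without coupling it to a specific probe.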

2. Machine Learning for Drones

- Q1. Can my ML algorithm run in time when deployed on the desired resource-constrained drone?

- Q2. How does the ML algorithm affect the drone’s energy consumption and overall battery autonomy?

- Q3. If the time and energy constraints permit, what is the trade-off between on-board ML inference and using a remote service through an open communication link?

- Q4. Could a different (less intensive) algorithm suffice (trade-off between algorithm accuracy and energy)?
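Questions Q1 and Q2 can be approximated with back-of-the-envelope arithmetic before any simulation is run. The sketch below assumes that an inference "runs in time" when it completes before the next one is due, and that inference energy is compute power multiplied by busy time. The function names and all input values are hypothetical and chosen only to keep the arithmetic easy to follow.

```python
def fits_period(inference_time_s: float, period_s: float) -> bool:
    """Q1: an inference must finish before the next one is due."""
    return inference_time_s <= period_s


def inference_energy_j(inference_time_s: float, period_s: float,
                       flight_time_s: float, compute_power_w: float) -> float:
    """Q2: energy (J) spent on inference over a flight, as power x busy time."""
    n_inferences = int(flight_time_s // period_s)
    return n_inferences * inference_time_s * compute_power_w


# Hypothetical inputs: 1.2 s per inference, one inference every 5 s,
# a 1500 s flight, and 10 W drawn by the compute module while busy.
t_inf, period, flight, power = 1.2, 5.0, 1500.0, 10.0

in_time = fits_period(t_inf, period)
energy = inference_energy_j(t_inf, period, flight, power)
print(in_time, energy)
```

A simulation-based profiler is still needed for Q3 and Q4, since communication overhead and the effect of energy draw on flight time are not captured by this estimate.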

3. The FlockAI Framework

3.1. Requirements

- Drones come in different flavors, with diverse resource configurations (e.g., compute, network, disk I/O) and different sensing modules (e.g., camera, GPS) depending on the task at hand. A framework supporting drone application experimentation must therefore support configurable drones and let users enable different sensing modules;

- With growing interest in adopting drone technology across domains, a wide range of realistic applications must be supported. Although a framework does not need to satisfy every type of application, limiting the scope too much inhibits adoption, since the effort of learning a new framework is only justified when that knowledge can be reused in the (near) future;

- Evaluating the performance of an ML application entails measuring a variety of key performance indicators (metrics), including the impact of the ML algorithm on the drone’s resources and the algorithm’s accuracy. The latter depends heavily on the data used during the training phase, while the former requires significant probing during application simulation to assess resource utilization, communication overhead, and energy consumption;

- An evaluation toolkit must facilitate repeatable measurements under various scenarios. For drones, the incorporation of multiple sensing modules and the dependence on the physical environment and external communication make this a challenging criterion to meet.

3.2. Framework Overview

4. Modeling and Implementation

4.1. Robotics Simulator

4.2. Drone Configuration

```python
from flockai.drones.controllers import FlockAIController
…

class DJIMavic2(FlockAIController):

    def __init__(self, name="DJIMavic2", …):
        self.drone = configDrone(name, …)
        …

def configDrone(name, …):
    # Motorized devices: three camera gimbal axes and four propellers,
    # each propeller identified by its relative 2D position.
    motors = [
        (MotorDevice.CAMERA, "cameraroll", AircraftAxis.ROLL),
        (MotorDevice.CAMERA, "camerapitch", AircraftAxis.PITCH),
        (MotorDevice.CAMERA, "camerayaw", AircraftAxis.YAW),
        (MotorDevice.PROPELLER, "FLpropeller", Relative2DPosition(1, -1)),
        (MotorDevice.PROPELLER, "FRpropeller", Relative2DPosition(1, 1)),
        (MotorDevice.PROPELLER, "RLpropeller", Relative2DPosition(-1, -1)),
        (MotorDevice.PROPELLER, "RRpropeller", Relative2DPosition(-1, 1)),
    ]
    # Sensing modules to enable on the drone.
    sensors = [
        (EnableableDevice.CAMERA, "camera"),
        (EnableableDevice.GPS, "gps"),
        (EnableableDevice.INERTIAL_UNIT, "inertial"),
        (EnableableDevice.COMPASS, "compass"),
        (EnableableDevice.GYRO, "gyro"),
        (EnableableDevice.BATTERY_SENSOR, "battery"),
        (EnableableDevice.WIFIRECEIVER, "receiver"),
        …
    ]
    mavic2 = FlockAIDrone(motors, sensors)
    mavic2.weight = 0.907   # in kg
    mavic2.battery = 3850   # in mAh
    mavic2.storage = 8000   # in MB
    …
    return mavic2
```

```python
from flockai.drones.controllers import DJIMavic2
from flockai.drones.pilots import BoundingBoxZigZagPilot
from flockai.drones.monitoring import FlightProbe, EnergyProbe
from flockai.drones.sensors import TempSensor
from Classifiers import CrowdDetectionClassifier
…

def MyDroneMLExperiment():
    autopilot = BoundingBoxZigZagPilot(…)
    probes = [FlightProbe(…), EnergyProbe(…)]

    mydrone = DJIMavic2(pilot=autopilot, probes=probes)
    mydrone.getBattery().setCapacity(4500)

    # Attach an additional simulated sensor.
    temp = TempSensor(trange=(20, 32), dist=gaussian)
    mydrone.addSensor(temp)

    # Load a trained model and enable periodic inference.
    crowddetect = CrowdDetectionClassifier()
    crowddetect.load_model('…/cnn_cd.bin')
    mydrone.enableML(algo=crowddetect, inf_period=5, …)

    mydrone.activate()  # start simulation
    mydrone.takeoff()

    while mydrone.flying():
        img = mydrone.getCameraFeed(…)
        loc = mydrone.getGPScoordinates(…)
        testdata = (img, loc)
        pred = mydrone.classifier.inference(data=testdata, …)
        # do something with pred… and output energy and battery level
        eTotal = mydrone.getProbe("EnergyProbe").values().get("total")
        battery = mydrone.battery.getLevel()
        print(eTotal, battery)
        …
    out = mydrone.simulation.exportLogs(format_type=pandas_df)
    # do stuff to output
```
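The experiment listing passes `trange=(20,32)` and `dist=gaussian` to `TempSensor` without showing how readings are produced. One plausible interpretation, sketched below, centers a Gaussian on the middle of the range and clamps samples to its bounds. `SimulatedTempSensor`, the choice of standard deviation (one sixth of the range), and the `seed` parameter are assumptions for illustration, not FlockAI's implementation.

```python
import random
from typing import Optional, Tuple


class SimulatedTempSensor:
    """Hypothetical stand-in for a simulated temperature sensor."""

    def __init__(self, trange: Tuple[float, float], seed: Optional[int] = None) -> None:
        self.low, self.high = trange
        self.mean = (self.low + self.high) / 2
        # Assumption: the range spans roughly +/- 3 standard deviations.
        self.sigma = (self.high - self.low) / 6
        # A private, seeded generator keeps repeated runs identical.
        self._rng = random.Random(seed)

    def read(self) -> float:
        value = self._rng.gauss(self.mean, self.sigma)
        # Clamp the rare out-of-range sample to the configured bounds.
        return min(self.high, max(self.low, value))


sensor = SimulatedTempSensor(trange=(20, 32), seed=42)
readings = [sensor.read() for _ in range(5)]
print(readings)
```

Seeding the generator per sensor is one way to support the repeatable-measurement requirement: two experiments created with the same seed observe the same sequence of readings.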

4.3. ML Algorithm Deployment

```python
from flockai.drones.ml import FlockAIClassifier
…

class CrowdDetectionClassifier(FlockAIClassifier):

    def __init__(self, inf_period=10):
        super().__init__()
        self.inf_period = inf_period
        …

    def load_model(self, model_path, params, …):
        …

    def inference(self, testdata):
        …
        return prediction

    …
```
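The classifier listing implies a small contract: a subclass supplies `load_model` and `inference`. The self-contained sketch below mimics that contract with Python's `abc` module and a toy threshold model in place of a trained network. `BaseClassifier` and `ThresholdClassifier` are hypothetical names, and the real `FlockAIClassifier` base class may define additional methods.

```python
from abc import ABC, abstractmethod
from typing import Any, Sequence


class BaseClassifier(ABC):
    """Hypothetical stand-in for the interface a classifier implements."""

    def __init__(self, inf_period: int = 10) -> None:
        self.inf_period = inf_period

    @abstractmethod
    def load_model(self, model_path: str) -> None:
        """Prepare the model for inference."""

    @abstractmethod
    def inference(self, testdata: Any) -> Any:
        """Return a prediction for one input sample."""


class ThresholdClassifier(BaseClassifier):
    """Toy model: predicts True when the mean input value exceeds a threshold."""

    def load_model(self, model_path: str) -> None:
        # A real implementation would deserialize trained weights here;
        # this toy version ignores the path and uses a fixed threshold.
        self.threshold = 0.5

    def inference(self, testdata: Sequence[float]) -> bool:
        return sum(testdata) / len(testdata) > self.threshold


clf = ThresholdClassifier(inf_period=5)
clf.load_model("unused-path")
high = clf.inference([0.9, 0.8, 0.7])
low = clf.inference([0.1, 0.2, 0.3])
print(high, low)
```

Declaring the two methods abstract means an incomplete subclass fails at instantiation time rather than in the middle of a simulated flight.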

4.4. Energy Profiling

```python
from flockai.models.energy import Eproc

# Start from the processing energy model and customize its components.
cusEproc = Eproc(name="custom-eproc")
cusEproc.removeComponents(["sensors", "io"])
efc = cusEproc.getComponent("fc")
efc.setdesc("DJI A3 flight controller")
efc.setpower(8.3)
# Replace the drone's processing model with the customized one.
mydrone.energy.updComponent("proc", cusEproc)
…
```
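To make the idea of a composable processing energy model concrete, the sketch below treats the model as a set of named components, each with a constant power draw, and computes energy as total power multiplied by time. `Component`, `ProcessingEnergyModel`, and their methods are hypothetical and only loosely mirror the listing; the 8.3 figure comes from the listing and is assumed here to be in watts, while the other power values are made up.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterable


@dataclass
class Component:
    desc: str
    power_w: float  # assumed to be in watts


@dataclass
class ProcessingEnergyModel:
    """Hypothetical composable model: energy = sum(component power) * time."""
    name: str
    components: Dict[str, Component] = field(default_factory=dict)

    def remove_components(self, keys: Iterable[str]) -> None:
        for key in keys:
            self.components.pop(key, None)  # ignore unknown keys

    def energy_j(self, seconds: float) -> float:
        return sum(c.power_w for c in self.components.values()) * seconds


model = ProcessingEnergyModel("custom-eproc", {
    "fc": Component("generic flight controller", 5.0),  # made-up value
    "sensors": Component("sensor board", 1.5),          # made-up value
    "io": Component("storage I/O", 0.5),                # made-up value
})
model.remove_components(["sensors", "io"])
model.components["fc"] = Component("DJI A3 flight controller", 8.3)

one_minute = model.energy_j(60)
print(one_minute)
```

With only the flight controller left, one minute of operation costs about 8.3 W × 60 s = 498 J under these assumptions.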

4.5. Monitoring

5. Evaluation

5.1. Use-Case

5.2. Scenario 1: On-Board Inference

5.3. Scenario 2: Remote Inference

5.4. Scenario 3: Testing Different ML Algorithms

6. Related Work

7. Discussion

8. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

| Probe | Metric | Unit | Description |
|---|---|---|---|
| ComputeProbe | cpuUsage | % | Current drone CPU utilization averaged across all cores |
| ComputeProbe | cpuTime | ms | Total time the CPU spent in processing state during the current flight |
| ComputeProbe | ioTime | ms | Total time the CPU was blocked waiting for IO to complete |
| ComputeProbe | memUsage | % | Current drone memory utilization |
| CommProbe | kbytesIN | KB | Total incoming traffic across selected network interfaces (e.g., wifi) |
| CommProbe | kbytesOut | KB | Total outgoing traffic across selected network interfaces |
| CommProbe | pctsIN | # | Total number of incoming message packets |
| CommProbe | pctOut | # | Total number of outgoing message packets |
| FlightProbe | flightTime | s | Timespan of the current flight |
| FlightProbe | hoveringTime | s | Timespan the drone spent in hovering state |
| FlightProbe | takeoffTime | s | Timespan the drone spent in takeoff state |
| FlightProbe | landingTime | s | Timespan the drone spent in landing state |
| FlightProbe | inMoveTime | s | Timespan the drone spent in moving state |
| FlightProbe | inAccTime | s | Timespan the drone spent in moving state with fluctuating velocity |
| EnergyProbe | eProc | J | Energy consumed for compute tasks (continuously updated while the drone is in flight) |
| EnergyProbe | eComm | J | Energy consumed for communication tasks |
| EnergyProbe | eMotor | J | Energy consumed for powering the drone motors |
| EnergyProbe | eTotal | J | Total energy consumed by the drone during the current flight |
| EnergyProbe | batteryRem | % | Percentage of drone battery capacity remaining |
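The `eTotal` and `batteryRem` metrics are linked through the battery capacity. As a worked example, the sketch below converts a capacity in mAh to joules and derives the remaining percentage from the consumed energy. The 3850 mAh figure comes from the drone configuration listing; the 15.4 V nominal voltage and the sample `eTotal` of 50,000 J are assumptions for illustration, and the function names are hypothetical.

```python
def capacity_joules(capacity_mah: float, nominal_voltage_v: float) -> float:
    """Convert a battery rating to energy: (mAh / 1000) Ah * V * 3600 s/h."""
    return capacity_mah / 1000.0 * nominal_voltage_v * 3600.0


def battery_remaining_pct(e_total_j: float, capacity_j: float) -> float:
    """Percentage of capacity left after consuming e_total_j, floored at 0."""
    return max(0.0, 100.0 * (1.0 - e_total_j / capacity_j))


# 3850 mAh is the capacity from the drone configuration listing;
# 15.4 V is an assumed nominal voltage, not a value given in the text.
cap = capacity_joules(3850, 15.4)
remaining = battery_remaining_pct(50_000, cap)
print(round(cap), round(remaining, 1))
```

Under these assumptions the battery holds roughly 213 kJ, so a flight that has consumed 50 kJ leaves about 77% of the capacity.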

| Scenario No. | Drone Controller | Autopilot | Monitoring | ML Algorithm | ML Execution |
|---|---|---|---|---|---|
| 1-A | DJIMavic2 | Bounding Box ZigZag Pilot | Flight, Compute, Comm and Energy Probes | - | - |
| 1-B | DJIMavic2 | Bounding Box ZigZag Pilot | Flight, Compute, Comm and Energy Probes | CNN Crowd Detection Classifier | In Place |
| 2 | DJIMavic2 | Bounding Box ZigZag Pilot | Flight, Compute, Comm and Energy Probes | CNN Crowd Detection Classifier | Remote |
| 3-A | DJIMavic2 | Bounding Box ZigZag Pilot | Flight, Compute, Comm and Energy Probes | CNN Crowd Detection Classifier | In Place |
| 3-B | DJIMavic2 | Bounding Box ZigZag Pilot | Flight, Compute, Comm and Energy Probes | FIGs Crowd Detection Classifier | In Place |

| Algorithm | Accuracy (%) | Median Inference Time (ms) | Flight Time (s) |
|---|---|---|---|
| CNN | 95 | 1361 | 1488 |
| FIGs | 60 | 274 | 1642 |
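The trade-off captured by the algorithm comparison table can be made explicit with a few lines of arithmetic. The sketch below uses only the values from that table; the dictionary layout and variable names are illustrative.

```python
# Values copied from the algorithm comparison table.
results = {
    "CNN":  {"accuracy_pct": 95, "median_inference_ms": 1361, "flight_time_s": 1488},
    "FIGs": {"accuracy_pct": 60, "median_inference_ms": 274,  "flight_time_s": 1642},
}

cnn, figs = results["CNN"], results["FIGs"]

accuracy_drop = cnn["accuracy_pct"] - figs["accuracy_pct"]          # percentage points
speedup = cnn["median_inference_ms"] / figs["median_inference_ms"]  # times faster
extra_flight_s = figs["flight_time_s"] - cnn["flight_time_s"]       # seconds gained
extra_flight_pct = 100 * extra_flight_s / cnn["flight_time_s"]

print(f"accuracy drop: {accuracy_drop} points")
print(f"inference speedup: {speedup:.2f}x")
print(f"extra flight time: {extra_flight_s} s ({extra_flight_pct:.1f}%)")
```

In other words, switching from CNN to FIGs gives up 35 accuracy points in exchange for inference that is about five times faster and roughly 10% more flight time.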

| Sequence | CNN Inf. Delay (img count) | FIGs Inf. Delay (img count) |
|---|---|---|
| seq1 | 23 | - |
| seq2 | 26 | 29 |
| seq3 | 29 | 29 |
| seq4 | 29 | 29 |
| seq5 | 23 | 28 |
| seq6 | 24 | 23 |
| seq7 | 26 | - |
| seq8 | 27 | - |
| seq9 | 26 | - |
| seq10 | 26 | 29 |
| seq11 | 29 | 29 |
| seq12 | 28 | 29 |
| seq13 | 25 | - |
| seq14 | 26 | 28 |
| seq15 | 28 | 29 |
| seq16 | 28 | - |
| seq17 | 26 | 29 |
| seq18 | 27 | 29 |
| seq19 | 28 | - |
| seq20 | - | - |
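The per-sequence delays can be summarized with a short script. The sketch below encodes the table with `None` for each "-" entry and reports how many sequences have a value and the mean delay over those. Reading "-" as a sequence with no reported detection is an assumption, although the resulting fractions (19/20 and 12/20) do coincide with the 95% and 60% accuracy figures in the algorithm comparison table.

```python
from statistics import mean

# Inference delay (image count) for seq1..seq20; None marks a "-" entry.
cnn = [23, 26, 29, 29, 23, 24, 26, 27, 26, 26,
       29, 28, 25, 26, 28, 28, 26, 27, 28, None]
figs = [None, 29, 29, 29, 28, 23, None, None, None, 29,
        29, 29, None, 28, 29, None, 29, 29, None, None]


def summarize(delays):
    """Return (sequences with a value, total sequences, mean delay)."""
    observed = [d for d in delays if d is not None]
    return len(observed), len(delays), mean(observed)


for name, delays in (("CNN", cnn), ("FIGs", figs)):
    n, total, avg = summarize(delays)
    print(f"{name}: {n}/{total} sequences with a value, mean delay {avg:.1f} images")
```

Over the sequences where both algorithms report a value, FIGs tends to need slightly more images than CNN before producing a result, even though each individual inference is faster.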

| Framework | SDK | Drone Configuration | Algorithm Testing | Algorithm Execution | Monitoring | Energy Profiling (M) | Energy Profiling (P) | Energy Profiling (C) | Last Updated |
|---|---|---|---|---|---|---|---|---|---|
| DronesBench | - | real drone | - | - | motorized components | X | - | - | NA |
| jMavSim | Java | quad-copter and hexa-copter imitations; localization and camera sensors | custom flight control with PX4 autopilot modules | remote | flight control | X | - | - | 2021 |
| RotorS | C++ | quad-copter imitation; localization and camera sensors | interface for custom flight control and state estimation | on drone | flight control | - | - | - | 2020 |
| MRS | C++ | drone templates (DJI, Tarot) | interface for custom flight control and state estimation | on drone | flight control | - | - | - | 2021 |
| UTSim | C# | quad-copter imitation | interface for path planning | remote | flight control; comm overhead | - | - | - | NA |
| AirSim | C++, Python, Java | quad-copter imitation; localization and camera sensors; environmental conditions | interface for computer vision | remote | flight control; comm overhead | X | - | - | 2021 |
| FANETSim | Java | quad-copter imitation; comm protocol | - | - | flight control; comm overhead | X | X | - | NA |
| Deepbots | Python | quad-copter imitation | reinforcement learning | on drone | inference accuracy | - | - | - | 2020 |
| FlockAI | Python | customizable drone templates; localization and camera sensors | autopilot interface for custom flight control and state estimation, regression, clustering and classification incl. face and object detection | on drone and remote | flight control; processing overhead; comm overhead; inference accuracy and delay | X | X | X | 2021 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Trihinas, D.; Agathocleous, M.; Avogian, K.; Katakis, I. FlockAI: A Testing Suite for ML-Driven Drone Applications. Future Internet 2021, 13, 317. https://doi.org/10.3390/fi13120317
