Internet Video Delivery Improved by Super-Resolution with GAN

Abstract

1. Introduction

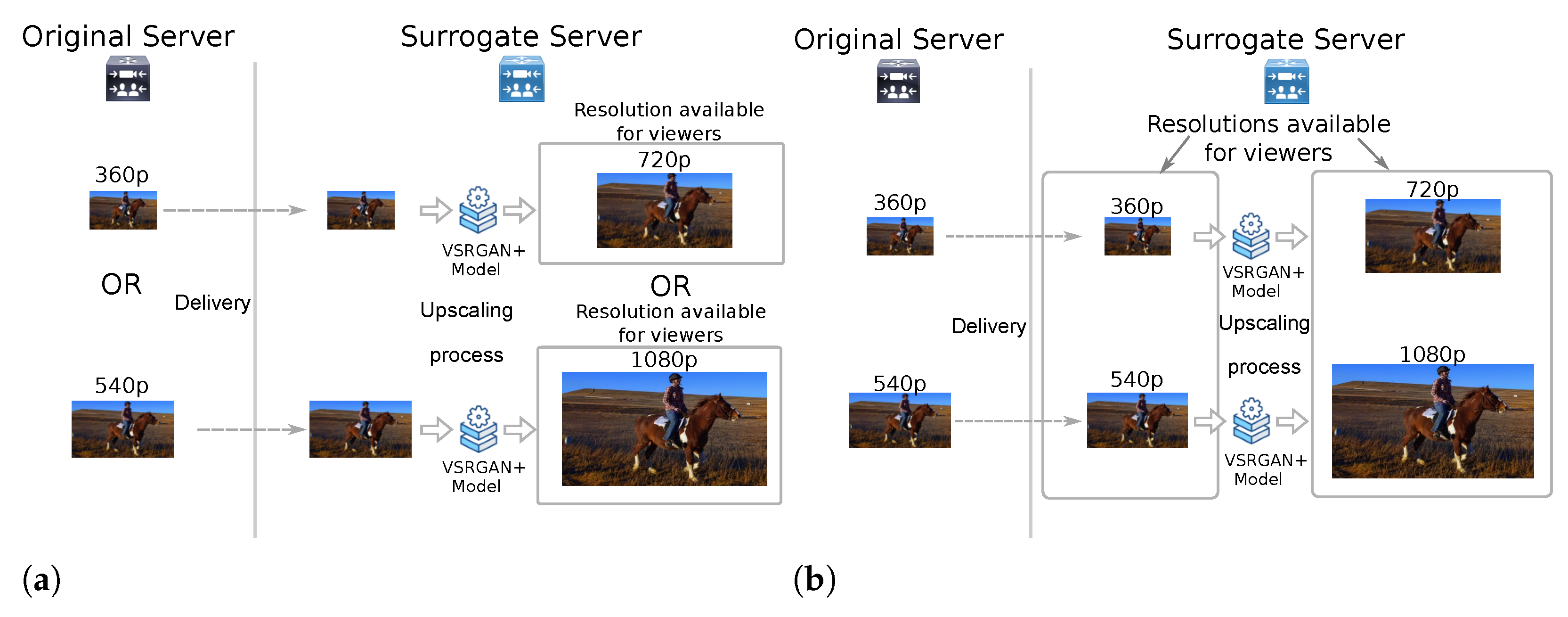

- We propose a cloud-based content-placement framework that substantially reduces video traffic on long-distance infrastructures. In this framework, low-resolution videos travel between servers in the cloud and the surrogate server deployed on the server side. An efficient GAN-based super-resolution (SR) model then reconstructs the videos in high resolution.

- We design a video SR model, VSRGAN+, as a practical solution for video-on-demand (VoD) delivery systems. It upscales videos by a factor of 2 with perceptual quality indistinguishable from the ground truth.

- We present a method for mapping the perceptual quality of SR-reconstructed videos to the quantization parameter (QP) level of an encoded representation of the same video. This mapping is needed to compare the quality of a video reconstructed by SR with representations of the same video at different compression levels.

- Finally, we evaluate how much SR reduces the transferred data and compare it with the reduction obtained by compression. We also analyze the benefits of combining the two approaches. Our experiments show that traffic in the cloud infrastructure can be reduced by up to 98.42% compared with video distribution using lossless compression.
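The intuition behind the savings of 2× SR is simple pixel arithmetic: halving the width and the height leaves one quarter of the pixels to transmit. The sketch below computes that raw-pixel reduction, assuming standard 16:9 frame sizes for 720p and 1080p (an assumption, not a statement of the paper's exact frame dimensions). Encoded bitrates do not scale exactly with pixel count, so this is only a first-order estimate of the data reduction.

```python
def pixel_count(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height


def raw_pixel_reduction(hr_width: int, hr_height: int, scale: int = 2) -> float:
    """Fraction of pixels saved by sending a frame downscaled by `scale`
    in each dimension instead of the full-resolution frame."""
    lr = pixel_count(hr_width // scale, hr_height // scale)
    hr = pixel_count(hr_width, hr_height)
    return 1.0 - lr / hr


# Assumed 16:9 frame sizes for the two target resolutions.
for name, (w, h) in {"720p": (1280, 720), "1080p": (1920, 1080)}.items():
    print(f"{name}: {raw_pixel_reduction(w, h):.2%} fewer pixels with 2x SR")
```

For a factor of 2 the reduction is 1 − 1/4 = 75% regardless of resolution. Encoder overhead and content complexity shift the measured savings away from this value.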

2. Background and Related Work

2.1. Super-Resolution Using Convolutional Networks

2.2. DNN Super-Resolution for Internet Video Delivery

2.3. JND-Based Video Quality Assessment

3. Cloud-Based Content Placement Framework

The Video-Size Optimization Problem

4. Video Super-Resolution with GAN

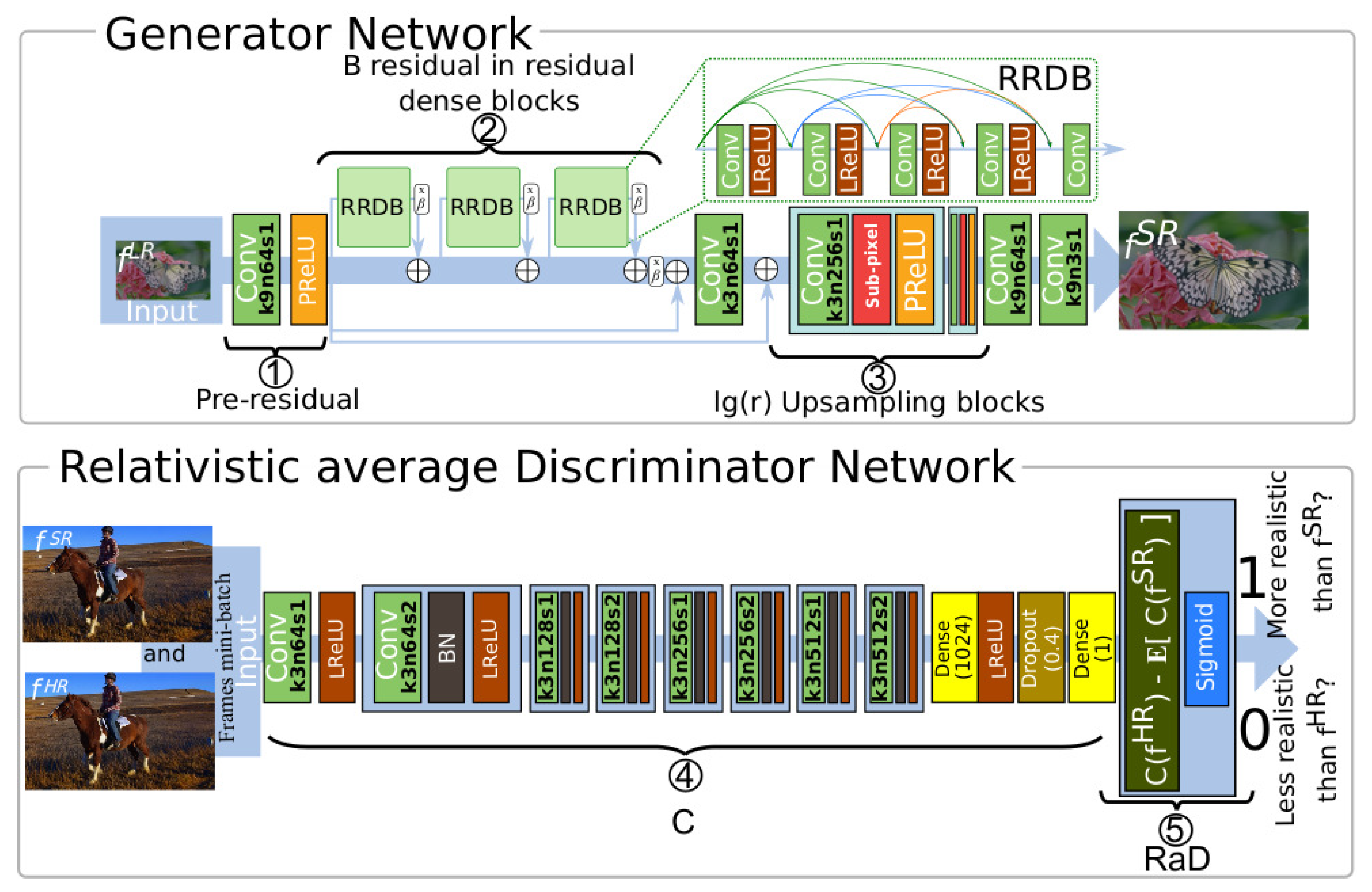

4.1. VSRGAN+ Architecture

4.2. Perceptual Loss Function

5. Datasets

6. Video Quality Assessment Metrics

6.1. Pixel-Wise Quality Assessment

6.2. Perceptual Quality Assessment

6.2.1. Learned Perceptual Image Patch Similarity—LPIPS

6.2.2. Video Multimethod Assessment Fusion—VMAF
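VMAF is distributed as Netflix's libvmaf library and is also exposed in FFmpeg as the libvmaf filter. In that filter, the first input is the distorted ("main") video, here the SR-reconstructed one, and the second input is the reference. Both inputs must have the same resolution. The sketch below only builds such a command. The file names are hypothetical, FFmpeg must be built with `--enable-libvmaf`, and it is not stated here which VMAF tool or model version the authors used.

```python
import shlex


def vmaf_command(distorted: str, reference: str, log_path: str = "vmaf.json") -> list[str]:
    """Build an FFmpeg command that scores `distorted` against `reference` with libvmaf.

    The first input is treated as the distorted video and the second as the
    reference; both must share the same resolution and be frame-aligned.
    Per-frame and pooled scores are written to `log_path` as JSON.
    """
    return [
        "ffmpeg", "-i", distorted, "-i", reference,
        "-lavfi", f"libvmaf=log_path={log_path}:log_fmt=json",
        "-f", "null", "-",
    ]


if __name__ == "__main__":
    # Hypothetical file names: SR output upscaled to 1080p vs. the original 1080p clip.
    cmd = vmaf_command("sr_1080p.mp4", "ref_1080p.mp4")
    print(shlex.join(cmd))  # execute with subprocess.run(cmd, check=True)
```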

7. Experimental Results

7.1. Model Parameters and Training

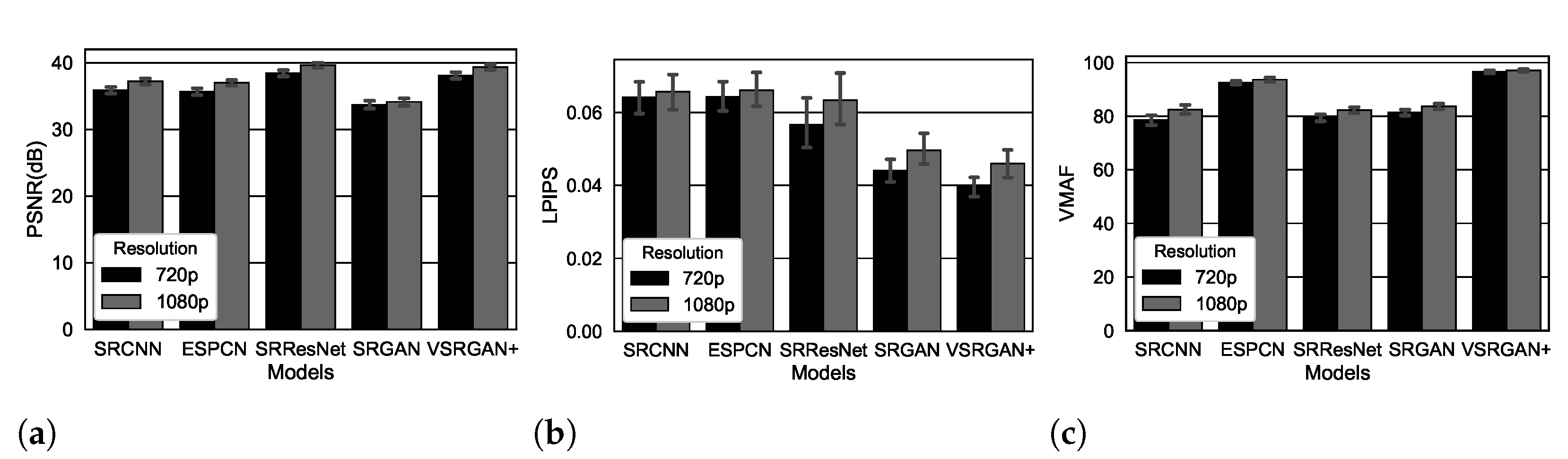

7.2. Results of Video Quality Assessment

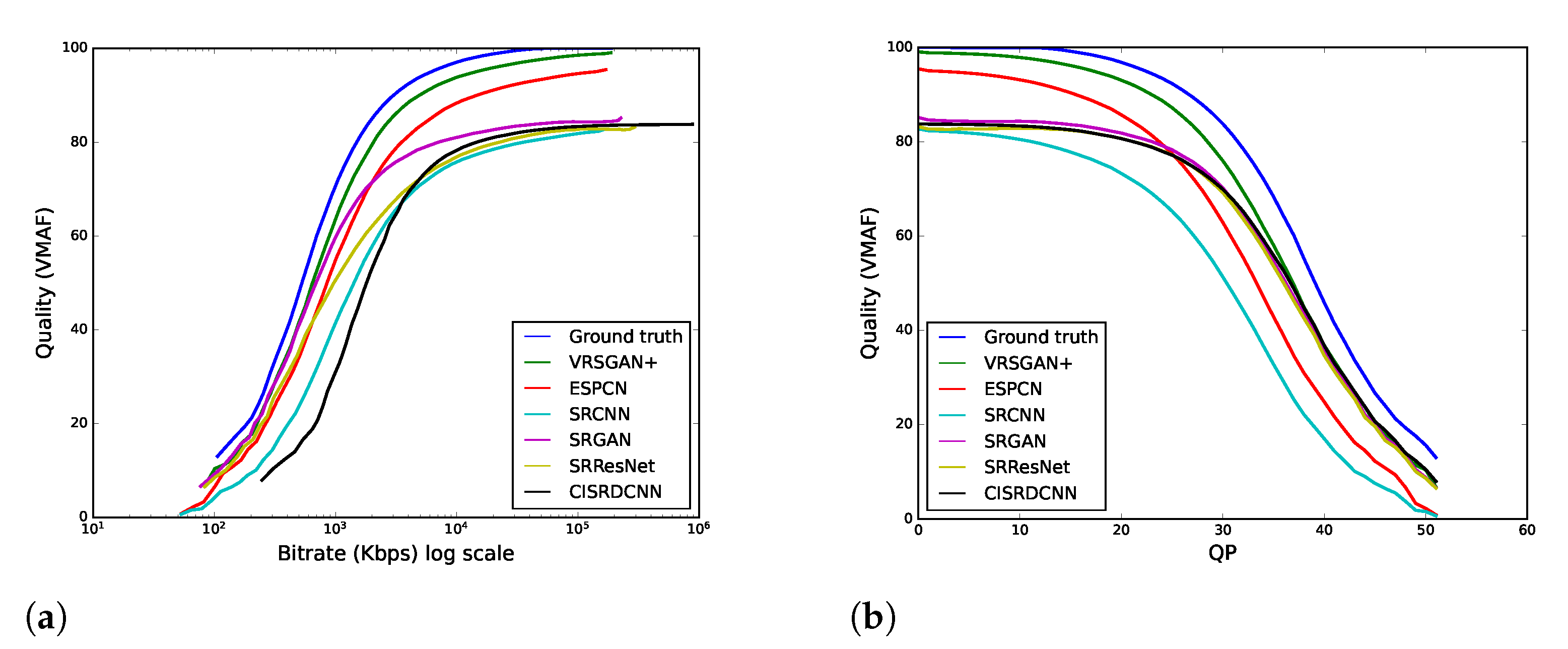

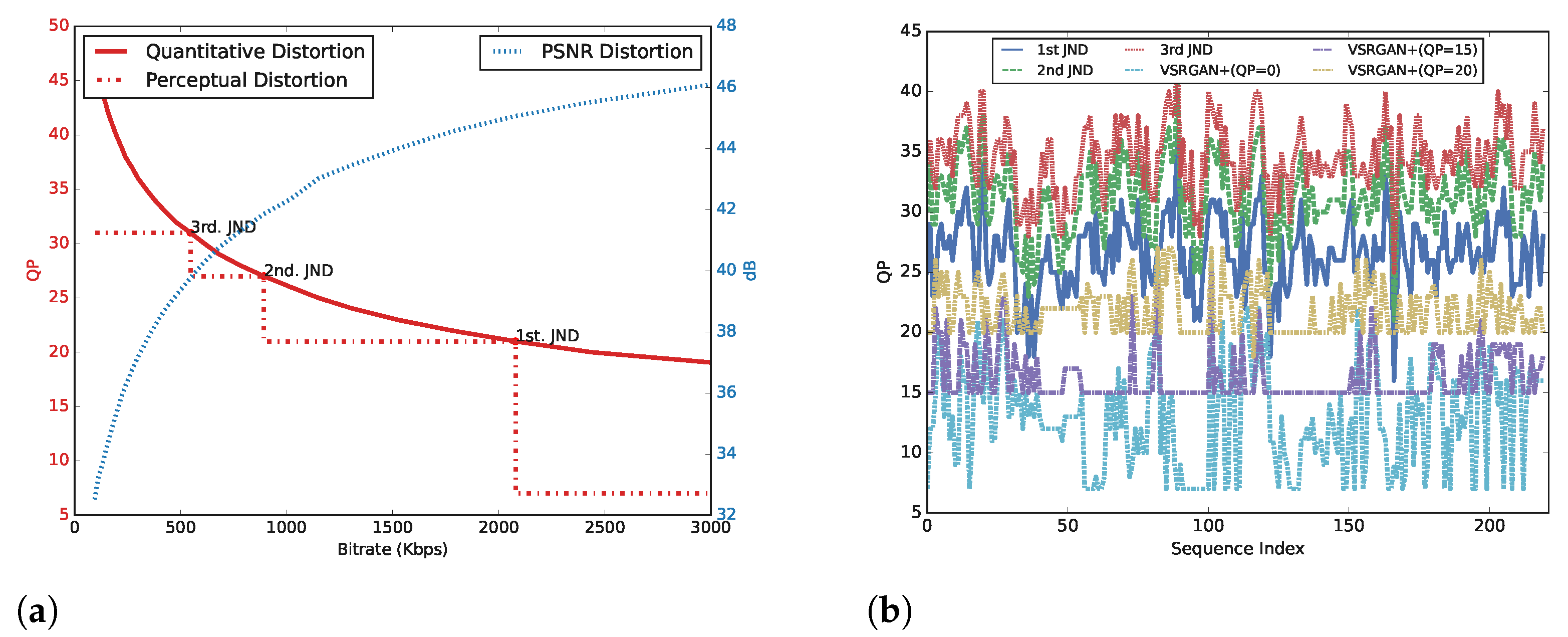

7.3. Perceptual Quality and JND

7.4. Runtime Analysis

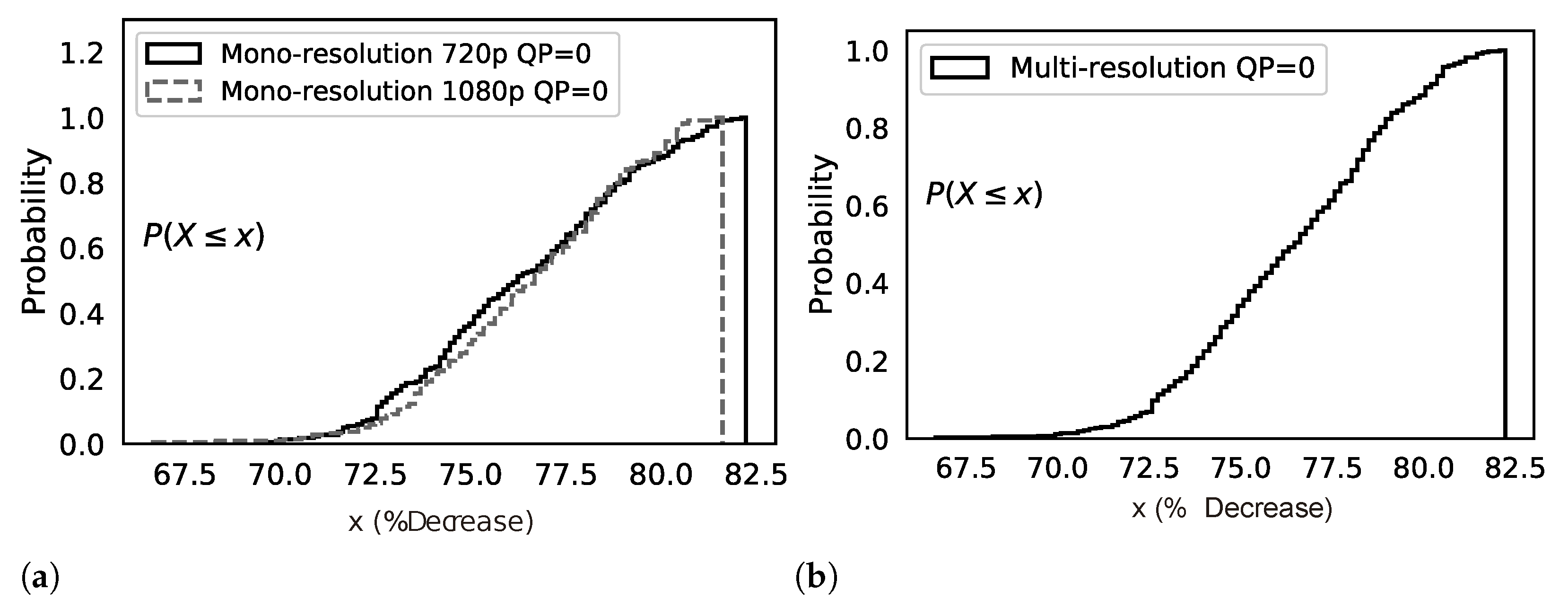

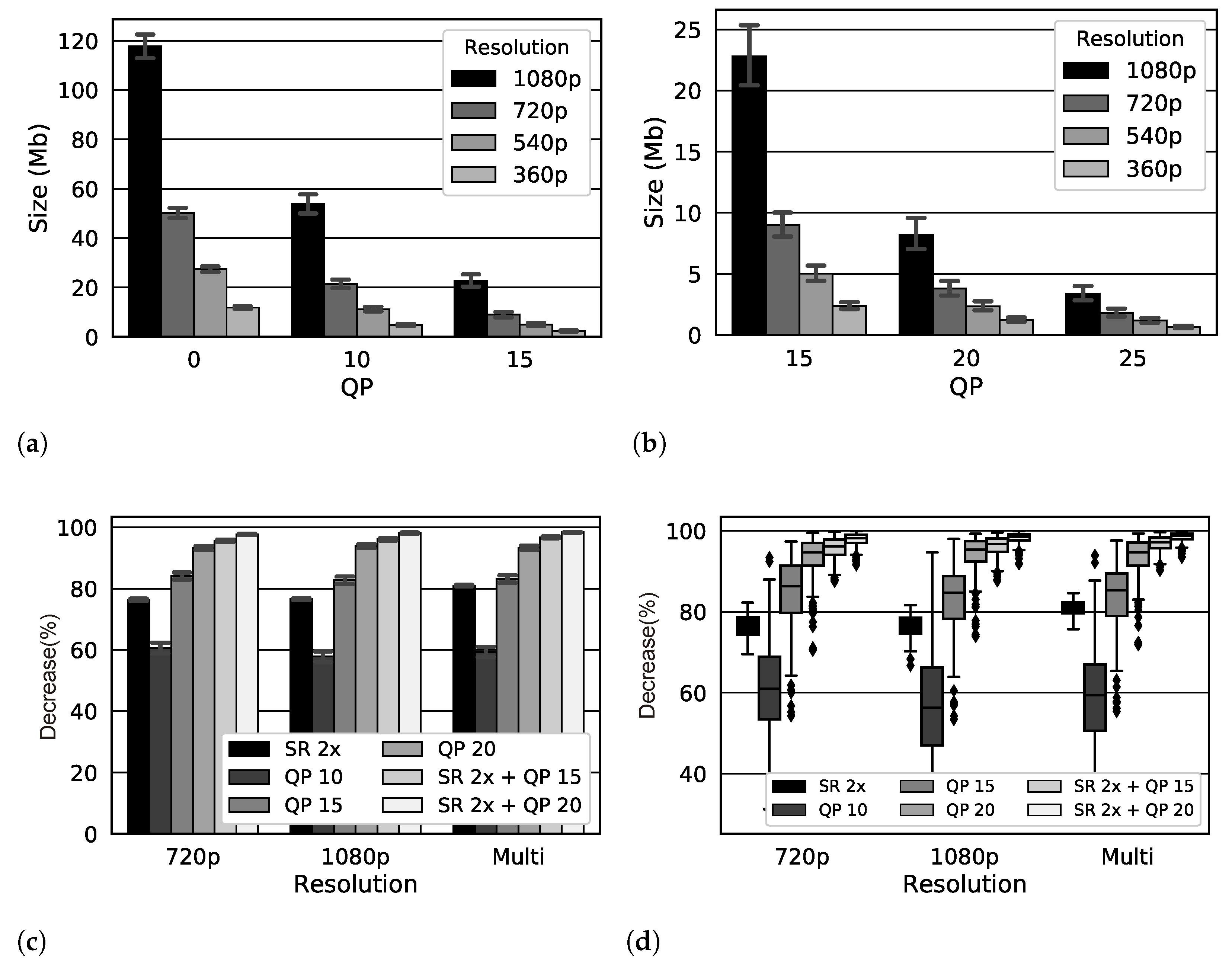

7.5. Data Transfer Decrease

7.6. Data Reduction Using Super-Resolution vs. Compression

8. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | application programming interface |
| BN | batch normalization |
| CDF | cumulative distribution function |
| CDN | content delivery network |
| CISRDCNN | super-resolution of compressed images using deep convolutional neural networks |
| CNN | convolutional neural network |
| dB | decibels |
| DNN | deep neural network |
| ESPCN | efficient sub-pixel convolutional neural networks |
| ESRGAN | enhanced super-resolution generative adversarial networks |
| FHD | full high definition |
| FPS | frames per second |
| GAN | generative adversarial network |
| GPU | graphics processing unit |
| HAS | HTTP-based adaptive streaming |
| HD | high definition |
| IaaS | infrastructure as a service |
| ISP | internet service provider |
| JND | just-noticeable difference |
| LeakyReLU | leaky rectified linear unit |
| LPIPS | learned perceptual image patch similarity |
| ML | machine learning |
| MSE | mean squared error |
| P2P | peer-to-peer |
| PoP | point of presence |
| PReLU | parametric rectified linear unit |
| PSNR | peak signal-to-noise ratio |
| QoE | quality of experience |
| QP | quantization parameter |
| RaD | relativistic average discriminator |
| RB | residual block |
| RGB | red, green, and blue |
| RRDB | residual-in-residual dense block |
| SGD | stochastic gradient descent |
| SISR | single image super-resolution |
| SR | super-resolution |
| SRCNN | super-resolution convolutional neural networks |
| SRGAN | super-resolution generative adversarial networks |
| SRResNet | super-resolution residual network |
| SSIM | structural similarity |
| SVM | support vector machine |
| UHD | ultra high definition |
| VMAF | video multi-method assessment fusion |
| VoD | video on demand |
| VSRGAN+ | improved video super-resolution with GAN |
| YCbCr | Y: luminance; Cb: chrominance-blue; Cr: chrominance-red |

References

1. Cisco VNI. Cisco Visual Networking Index: Forecast and Trends, 2017–2022 White Paper; Technical Report; Cisco, 2019. Available online: https://twiki.cern.ch/twiki/pub/HEPIX/TechwatchNetwork/HtwNetworkDocuments/white-paper-c11-741490.pdf (accessed on 5 September 2022).
2. Zolfaghari, B.; Srivastava, G.; Roy, S.; Nemati, H.R.; Afghah, F.; Koshiba, T.; Razi, A.; Bibak, K.; Mitra, P.; Rai, B.K. Content Delivery Networks: State of the Art, Trends, and Future Roadmap. ACM Comput. Surv. 2020, 53, 34.
3. Li, Z.; Wu, Q.; Salamatian, K.; Xie, G. Video Delivery Performance of a Large-Scale VoD System and the Implications on Content Delivery. IEEE Trans. Multimed. 2015, 17, 880–892.
4. BITMOVIN INC. Per-Title Encoding. 2020. Available online: https://bitmovin.com/demos/per-title-encoding (accessed on 5 September 2022).
5. Yan, B.; Shi, S.; Liu, Y.; Yuan, W.; He, H.; Jana, R.; Xu, Y.; Chao, H.J. LiveJack: Integrating CDNs and Edge Clouds for Live Content Broadcasting. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 73–81.
6. Yeo, H.; Jung, Y.; Kim, J.; Shin, J.; Han, D. Neural Adaptive Content-aware Internet Video Delivery. In Proceedings of the 13th USENIX Symposium on Operating Systems Design and Implementation (OSDI 18), Carlsbad, CA, USA, 8–10 October 2018; USENIX Association: Berkeley, CA, USA, 2018; pp. 645–661.
7. Wang, F.; Zhang, C.; Wang, F.; Liu, J.; Zhu, Y.; Pang, H.; Sun, L. DeepCast: Towards Personalized QoE for Edge-Assisted Crowdcast With Deep Reinforcement Learning. IEEE/ACM Trans. Netw. 2020, 28, 1255–1268.
8. Liborio, J.M.; Souza, C.M.; Melo, C.A.V. Super-resolution on Edge Computing for Improved Adaptive HTTP Live Streaming Delivery. In Proceedings of the 2021 IEEE 10th International Conference on Cloud Networking (CloudNet), Cookeville, TN, USA, 8–10 November 2021; pp. 104–110.
9. Yeo, H.; Do, S.; Han, D. How Will Deep Learning Change Internet Video Delivery? In Proceedings of the 16th ACM Workshop on Hot Topics in Networks, Palo Alto, CA, USA, 30 November–1 December 2017; ACM: New York, NY, USA, 2017; pp. 57–64.
10. Hecht, J. The bandwidth bottleneck that is throttling the Internet. Nature 2016, 536, 139–142.
11. Christian, P. Int’l Bandwidth and Pricing Trends; Technical Report; TeleGeography, 2018. Available online: https://www.afpif.org/wp-content/uploads/2018/08/01-International-Internet-Bandwidth-and-Pricing-Trends-in-Africa-%E2%80%93-Patrick-Christian-Telegeography.pdf (accessed on 5 September 2022).
12. Wang, Z.; Sun, L.; Wu, C.; Zhu, W.; Yang, S. Joint online transcoding and geo-distributed delivery for dynamic adaptive streaming. In Proceedings of the IEEE INFOCOM 2014—IEEE Conference on Computer Communications, Toronto, ON, Canada, 27 April–2 May 2014; pp. 91–99.
13. AI IMPACTS. 2019 Recent Trends in GPU Price per FLOPS; Technical Report; AI IMPACTS, 2019. Available online: https://aiimpacts.org/2019-recent-trends-in-gpu-price-per-flops (accessed on 16 August 2022).
14. NVIDIA Corporation. Accelerated Computing and the Democratization of Supercomputing; Technical Report; NVIDIA Corporation: California, USA, 2018. Available online: https://www.nvidia.com/content/dam/en-zz/Solutions/Data-Center/tesla-product-literature/sc18-tesla-democratization-tech-overview-r4-web.pdf (accessed on 16 August 2022).
15. Google Cloud. Cheaper Cloud AI Deployments with NVIDIA T4 GPU Price Cut; Technical Report; Google: California, USA, 2020. Available online: https://cloud.google.com/blog/products/ai-machine-learning/cheaper-cloud-ai-deployments-with-nvidia-t4-gpu-price-cut (accessed on 16 August 2022).
16. Papidas, A.G.; Polyzos, G.C. Self-Organizing Networks for 5G and Beyond: A View from the Top. Future Internet 2022, 14, 95.
17. Dong, J.; Qian, Q. A Density-Based Random Forest for Imbalanced Data Classification. Future Internet 2022, 14, 90.
18. Kappeler, A.; Yoo, S.; Dai, Q.; Katsaggelos, A.K. Video Super-Resolution With Convolutional Neural Networks. IEEE Trans. Comput. Imaging 2016, 2, 109–122.
19. Pérez-Pellitero, E.; Sajjadi, M.S.M.; Hirsch, M.; Schölkopf, B. Photorealistic Video Super Resolution. arXiv 2018, arXiv:1807.07930.
20. Karras, T.; Aila, T.; Laine, S.; Lehtinen, J. Progressive Growing of GANs for Improved Quality, Stability, and Variation. arXiv 2018, arXiv:1710.10196.
21. Ledig, C.; Theis, L.; Huszar, F.; Caballero, J.; Aitken, A.P.; Tejani, A.; Totz, J.; Wang, Z.; Shi, W. Photo-Realistic Single Image Super-Resolution Using a Generative Adversarial Network. arXiv 2018, arXiv:1609.04802.
22. Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Loy, C.C. ESRGAN: Enhanced super-resolution generative adversarial networks. In Computer Vision—ECCV 2018 Workshops; Leal-Taixé, L., Roth, S., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 63–79.
23. Lucas, A.; Tapia, S.L.; Molina, R.; Katsaggelos, A.K. Generative Adversarial Networks and Perceptual Losses for Video Super-Resolution. arXiv 2018, arXiv:1806.05764.
24. He, Z.; Cao, Y.; Du, L.; Xu, B.; Yang, J.; Cao, Y.; Tang, S.; Zhuang, Y. MRFN: Multi-Receptive-Field Network for Fast and Accurate Single Image Super-Resolution. IEEE Trans. Multimed. 2020, 22, 1042–1054.
25. Wang, J.; Teng, G.; An, P. Video Super-Resolution Based on Generative Adversarial Network and Edge Enhancement. Electronics 2021, 10, 459.
26. Yang, W.; Zhang, X.; Tian, Y.; Wang, W.; Xue, J.; Liao, Q. Deep Learning for Single Image Super-Resolution: A Brief Review. IEEE Trans. Multimed. 2019, 21, 3106–3121.
27. Anwar, S.; Khan, S.; Barnes, N. A Deep Journey into Super-Resolution: A Survey. ACM Comput. Surv. 2020, 53, 60.
28. Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Computer Vision—ECCV 2014; Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 184–199.
29. Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 38, 295–307.
30. Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. arXiv 2016, arXiv:1608.00367.
31. Chen, H.; He, X.; Ren, C.; Qing, L.; Teng, Q. CISRDCNN: Super-resolution of compressed images using deep convolutional neural networks. Neurocomputing 2018, 285, 204–219.
32. Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-Time Single Image and Video Super-Resolution Using an Efficient Sub-Pixel Convolutional Neural Network. arXiv 2016, arXiv:1609.05158.
33. Johnson, J.; Alahi, A.; Li, F. Perceptual Losses for Real-Time Style Transfer and Super-Resolution. arXiv 2016, arXiv:1603.08155.
34. Haris, M.; Shakhnarovich, G.; Ukita, N. Recurrent Back-Projection Network for Video Super-Resolution. arXiv 2019, arXiv:1903.10128.
35. Tian, Y.; Zhang, Y.; Fu, Y.; Xu, C. TDAN: Temporally Deformable Alignment Network for Video Super-Resolution. arXiv 2018, arXiv:1812.02898.
36. Wang, X.; Chan, K.C.K.; Yu, K.; Dong, C.; Loy, C.C. EDVR: Video Restoration with Enhanced Deformable Convolutional Networks. arXiv 2019, arXiv:1905.02716.
37. Jo, Y.; Oh, S.W.; Kang, J.; Kim, S.J. Deep Video Super-Resolution Network Using Dynamic Upsampling Filters Without Explicit Motion Compensation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018.
38. Isobe, T.; Zhu, F.; Jia, X.; Wang, S. Revisiting Temporal Modeling for Video Super-resolution. arXiv 2020, arXiv:2008.05765.
39. Chadha, A.; Britto, J.; Roja, M.M. iSeeBetter: Spatio-temporal video super-resolution using recurrent generative back-projection networks. Comput. Vis. Media 2020, 6, 307–317.
40. Nah, S.; Baik, S.; Hong, S.; Moon, G.; Son, S.; Timofte, R.; Lee, K.M. NTIRE 2019 Challenge on Video Deblurring and Super-Resolution: Dataset and Study. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 1996–2005.
41. Liu, C.; Sun, D. A Bayesian approach to adaptive video super resolution. In Proceedings of the CVPR 2011, Colorado Springs, CO, USA, 20–25 June 2011; pp. 209–216.
42. Xue, T.; Chen, B.; Wu, J.; Wei, D.; Freeman, W.T. Video Enhancement with Task-Oriented Flow. arXiv 2017, arXiv:1711.09078.
43. Tao, X.; Gao, H.; Liao, R.; Wang, J.; Jia, J. Detail-Revealing Deep Video Super-Resolution. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 4482–4490.
44. Liborio, J.M.; Melo, C.A.V. A GAN to Fight Video-related Traffic Flooding: Super-resolution. In Proceedings of the 2019 IEEE Latin-American Conference on Communications (LATINCOM), Salvador, Brazil, 11–13 November 2019; pp. 1–6.
45. Lubin, J. A human vision system model for objective image fidelity and target detectability measurements. In Proceedings of the Ninth European Signal Processing Conference (EUSIPCO 1998), Rhodes, Greece, 8–11 September 1998; pp. 1–4.
46. Watson, A.B. Proposal: Measurement of a JND scale for video quality. IEEE G-2.1.6 Subcommittee on Video Compression Measurements; Citeseer, 2000. Available online: https://citeseerx.ist.psu.edu/document?repid=rep1&type=pdf&doi=2c57d52b6fcdd4e967f9a39e6e7509d948e57a07 (accessed on 21 July 2021).
47. Lin, J.Y.c.; Jin, L.; Hu, S.; Katsavounidis, I.; Li, Z.; Aaron, A.; Kuo, C.C.J. Experimental design and analysis of JND test on coded image/video. In Proceedings of the Applications of Digital Image Processing XXXVIII, San Diego, CA, USA, 10–13 August 2015; p. 95990Z.
48. Wang, H.; Katsavounidis, I.; Zhou, J.; Park, J.; Lei, S.; Zhou, X.; Pun, M.; Jin, X.; Wang, R.; Wang, X.; et al. VideoSet: A Large-Scale Compressed Video Quality Dataset Based on JND Measurement. arXiv 2017, arXiv:1701.01500.
49. Zhang, R.; Isola, P.; Efros, A.A.; Shechtman, E.; Wang, O. The Unreasonable Effectiveness of Deep Features as a Perceptual Metric. arXiv 2018, arXiv:1801.03924.
50. Huang, C.; Wang, A.; Li, J.; Ross, K.W. Measuring and evaluating large-scale CDNs. In Proceedings of the ACM IMC, Vouliagmeni, Greece, 20–22 October 2008.
51. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks. arXiv 2014, arXiv:1406.2661.
52. He, K.; Zhang, X.; Ren, S.; Sun, J. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. arXiv 2015, arXiv:1502.01852.
53. Maas, A.L. Rectifier Nonlinearities Improve Neural Network Acoustic Models. In Proceedings of the ICML, Atlanta, GA, USA, 16–21 June 2013.
54. Szegedy, C.; Ioffe, S.; Vanhoucke, V. Inception-v4, Inception-ResNet and the Impact of Residual Connections on Learning. arXiv 2016, arXiv:1602.07261.
55. Jolicoeur-Martineau, A. The relativistic discriminator: A key element missing from standard GAN. arXiv 2018, arXiv:1807.00734.
56. Zhao, H.; Gallo, O.; Frosio, I.; Kautz, J. Loss Functions for Image Restoration With Neural Networks. IEEE Trans. Comput. Imaging 2017, 3, 47–57.
57. Mathieu, M.; Couprie, C.; LeCun, Y. Deep multi-scale video prediction beyond mean square error. arXiv 2015, arXiv:1511.05440.
58. Bruna, J.; Sprechmann, P.; LeCun, Y. Super-Resolution with Deep Convolutional Sufficient Statistics. arXiv 2015, arXiv:1511.05666.
59. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.
60. Zhou, B.; Lapedriza, A.; Khosla, A.; Oliva, A.; Torralba, A. Places: A 10 million Image Database for Scene Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017.
61. Zhang, K.; Gu, S.; Timofte, R. NTIRE 2020 Challenge on Perceptual Extreme Super-Resolution: Methods and Results. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Seattle, WA, USA, 14–19 June 2020; pp. 2045–2057.
62. Iandola, F.N.; Moskewicz, M.W.; Ashraf, K.; Han, S.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <1MB model size. arXiv 2016, arXiv:1602.07360.
63. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Proceedings of the 25th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; Curran Associates Inc.: Red Hook, NY, USA, 2012; Volume 1, pp. 1097–1105.
64. Li, Z.; Bampis, C.; Novak, J.; Aaron, A.; Swanson, K.; Moorthy, A.; Cock, J.D. VMAF: The Journey Continues. Netflix Technology Blog, 2018. Available online: https://netflixtechblog.com/vmaf-the-journey-continues-44b51ee9ed12 (accessed on 15 July 2021).
65. Aaron, A.; Li, Z.; Manohara, M.; Lin, J.Y.; Wu, E.C.H.; Kuo, C.C.J. Challenges in cloud based ingest and encoding for high quality streaming media. In Proceedings of the 2015 IEEE International Conference on Image Processing (ICIP), Quebec City, QC, Canada, 27–30 September 2015; pp. 1732–1736.

| Models | CNN | Sub-Pixel | RB | RRDB | Skip Connection | Perceptual Loss | GAN | Dense Skip Connections | RaD | Residual Scaling | Video SR |
|---|---|---|---|---|---|---|---|---|---|---|---|
| SRCNN [28] | √ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |
| ESPCN [32] | √ | √ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ | √ |
| CISRDCNN [31] | √ | ✕ | ✕ | ✕ | √ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |
| SRResNet [21] | √ | √ | 16 | ✕ | √ | ✕ | ✕ | ✕ | ✕ | ✕ | ✕ |
| SRGAN [21] | √ | √ | 16 | ✕ | √ | √ | √ | ✕ | ✕ | ✕ | ✕ |
| ESRGAN [22] | √ | √ | ✕ | 23 | √ | √ | √ | √ | √ | √ | ✕ |
| VSRGAN [44] | √ | √ | 3 | ✕ | √ | √ | √ | √ | ✕ | ✕ | √ |
| VSRGAN+ (ours) | √ | √ | ✕ | 3 | √ | √ | √ | √ | √ | √ | √ |
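The Sub-Pixel column refers to the efficient sub-pixel convolution introduced with ESPCN [32]: the last convolution produces C·r² feature maps at low resolution, which are then rearranged into an image r times larger in each dimension. Below is a minimal NumPy sketch of that rearrangement (often called pixel shuffle or depth-to-space), assuming a channels-first (C·r², H, W) layout. It is illustrative only and is not taken from the VSRGAN+ code.

```python
import numpy as np


def pixel_shuffle(x: np.ndarray, r: int) -> np.ndarray:
    """Rearrange a (C*r*r, H, W) array into (C, H*r, W*r).

    Output pixel (c, h*r + i, w*r + j) comes from input channel
    c*r*r + i*r + j at position (h, w), the same ordering that
    PyTorch's PixelShuffle uses.
    """
    channels, height, width = x.shape
    if channels % (r * r) != 0:
        raise ValueError("channel count must be divisible by r*r")
    c = channels // (r * r)
    # Split the channel axis into (c, i, j), where i and j are sub-pixel offsets.
    x = x.reshape(c, r, r, height, width)
    # Interleave the offsets with the spatial axes: (c, h, i, w, j).
    x = x.transpose(0, 3, 1, 4, 2)
    return x.reshape(c, height * r, width * r)


# Example: 12 low-resolution feature maps -> one 3-channel frame, 2x larger.
features = np.random.rand(12, 270, 480).astype(np.float32)
frame = pixel_shuffle(features, r=2)
print(frame.shape)  # (3, 540, 960)
```

Because the expensive convolutions run at low resolution and only this cheap reshuffle produces the high-resolution output, sub-pixel upsampling is well suited to the runtime constraints of video delivery.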

| Title | No. of 5 s Samples | Frame Rate (FPS) |
|---|---|---|
| El Fuente | 31 | 30 |
| Chimera | 59 | 30 |
| Ancient Thought | 11 | 24 |
| Eldorado | 14 | 24 |
| Indoor Soccer | 5 | 24 |
| Life Untouched | 15 | 30 |
| Lifting Off | 13 | 24 |
| Moment of Intensity | 10 | 30 |
| Skateboarding | 9 | 24 |
| Unspoken Friend | 13 | 24 |
| Tears of Steel | 40 | 24 |

| Models | Setup |
|---|---|
| SRCNN | Filters: 64, 32, 3 for each layer; filter sizes: 9, 1, 5 for each layer, respectively; optimizer: SGD with a learning rate of; batch size: 128; HR crop size: 33 × 33; loss function:; number of iterations = |
| ESPCN | Filters: 64, 32, for each layer, respectively; filter sizes: 5, 3, 3 for each layer, respectively; optimizer: Adam with a learning rate of; batch size: 128; HR subimage size:; loss function:; number of iterations = |
| CISRDCNN | Block DBCNN: CNN layers use 64 filters of size +BN+ReLU, -th layer uses three filters of size , and uses residual learning; Block USCNN: CNN layers use 64 filters of size +BN+ReLU, -th is a deconvolutional layer that uses three filters of size; Block QECNN is similar to DBCNN; loss function: , , , and |
| SRResNet | Residual blocks: 16; optimizer: Adam with a learning rate of; batch size: 16; HR crop size:; loss function:; number of iterations = |
| SRGAN | Residual blocks: 16; optimizer: Adam with a learning rate of /learning rate of; batch size: 16; HR crop size:; loss function: perceptual loss + adversarial loss; number of iterations = / |
| VSRGAN+ | B = 3; optimizer: Adam with a learning rate of /learning rate of; batch size: 16; HR subimage size:; loss function: /; number of iterations = / |
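The RaD column in the model comparison and the adversarial training of SRGAN and VSRGAN+ in the setup table refer to the relativistic average discriminator [55], as used in ESRGAN [22]. Instead of estimating whether an input is real, RaD estimates whether a real image looks more realistic than the average generated one: D_Ra(x_r, x_f) = σ(C(x_r) − E[C(x_f)]), where C is the raw (pre-sigmoid) discriminator output. The NumPy sketch below computes the discriminator and generator losses from batches of raw scores, following the ESRGAN formulation. How VSRGAN+ weights this term against its other losses cannot be recovered from this excerpt, so only the adversarial part is shown.

```python
import numpy as np


def _neg_log_sigmoid(z: np.ndarray) -> np.ndarray:
    """Numerically stable -log(sigmoid(z)) = log(1 + exp(-z))."""
    return np.logaddexp(0.0, -z)


def rad_losses(c_real: np.ndarray, c_fake: np.ndarray) -> tuple[float, float]:
    """Relativistic average GAN losses from raw critic scores.

    c_real: scores C(x_r) for a batch of ground-truth HR frames.
    c_fake: scores C(x_f) for a batch of SR-generated frames.
    Returns (discriminator_loss, generator_loss).
    """
    # Each real score relative to the mean fake score, and vice versa.
    real_vs_fake = c_real - c_fake.mean()
    fake_vs_real = c_fake - c_real.mean()

    # Discriminator: -log D_Ra(x_r, x_f) - log(1 - D_Ra(x_f, x_r)),
    # using 1 - sigmoid(z) = sigmoid(-z).
    loss_d = _neg_log_sigmoid(real_vs_fake).mean() + _neg_log_sigmoid(-fake_vs_real).mean()

    # Generator: the symmetric objective, so it also receives gradients
    # through the real-data term.
    loss_g = _neg_log_sigmoid(-real_vs_fake).mean() + _neg_log_sigmoid(fake_vs_real).mean()
    return float(loss_d), float(loss_g)


# Example with made-up scores: the critic already prefers real frames.
d_loss, g_loss = rad_losses(np.array([2.0, 1.5, 3.0]), np.array([-1.0, 0.0, -0.5]))
print(d_loss, g_loss)
```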

| Metric | Resolution | SRCNN | ESPCN | SRResNet | SRGAN | VSRGAN+ |
|---|---|---|---|---|---|---|
| PSNR (dB) | 720p | 38.44 | 38.09 | | | |
| | 1080p | 39.65 | 39.34 | | | |
| LPIPS | 720p | 0.044 | 0.039 | | | |
| | 1080p | 0.050 | 0.046 | | | |
| VMAF | 720p | 96.62 | | | | |
| | 1080p | 97.08 | | | | |
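PSNR, the pixel-wise metric in the table above, is 10·log10(MAX²/MSE) computed between the reference frame and the reconstructed frame, with MAX = 255 for 8-bit content. Below is a minimal NumPy sketch. This excerpt does not state whether the authors compute PSNR on RGB or on the Y channel of YCbCr, or how they pool it over frames. The channel choice is therefore left to the caller, and per-frame averaging is shown only as one common convention.

```python
import numpy as np


def psnr(reference: np.ndarray, reconstructed: np.ndarray, max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB between two same-shaped frames.

    Inputs are cast to float64 first so that uint8 subtraction cannot wrap around.
    """
    if reference.shape != reconstructed.shape:
        raise ValueError("frames must have the same shape")
    diff = reference.astype(np.float64) - reconstructed.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")  # identical frames
    return float(10.0 * np.log10(max_value ** 2 / mse))


def video_psnr(ref_frames, sr_frames, max_value: float = 255.0) -> float:
    """Average of per-frame PSNR over a clip (one common pooling convention)."""
    scores = [psnr(r, s, max_value) for r, s in zip(ref_frames, sr_frames)]
    return float(np.mean(scores))
```

Because PSNR only measures pixel-wise error, GAN-based models that synthesize plausible texture can score lower on it than MSE-trained models while looking sharper. This is why the table also reports the perceptual metrics LPIPS and VMAF.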

| QP | 360p (Mb) | 540p (Mb) | 720p (Mb) | 1080p (Mb) |
|---|---|---|---|---|
| 0 | 11.80 | 27.43 | 50.18 | 117.71 |
| 10 | 4.74 | 11.20 | 21.42 | 53.76 |
| 15 | 2.38 | 5.01 | 9.00 | 22.81 |
| 20 | 1.24 | 2.35 | 3.80 | 8.18 |
| 25 | 0.65 | 1.18 | 1.80 | 3.38 |
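A QP ladder like the one in the table above can be produced with FFmpeg's libx264 encoder, whose `-qp` option fixes the quantization parameter (in x264, QP 0 selects lossless coding). The sketch below only builds the command lines for each QP and resolution pair. The source file name, the bicubic scaler, dropping audio with `-an`, and the choice of libx264 itself are all assumptions, because this excerpt does not say which encoder produced the sizes.

```python
from itertools import product

QPS = (0, 10, 15, 20, 25)
HEIGHTS = (360, 540, 720, 1080)


def x264_qp_command(src: str, dst: str, qp: int, height: int) -> list[str]:
    """Build an FFmpeg command that scales `src` to `height` lines (width chosen
    automatically and kept even) and encodes it with libx264 at a fixed QP."""
    return [
        "ffmpeg", "-y", "-i", src,
        "-vf", f"scale=-2:{height}:flags=bicubic",
        "-c:v", "libx264", "-qp", str(qp),
        "-an",  # video only: audio would distort the size comparison
        dst,
    ]


def qp_ladder(src: str) -> list[list[str]]:
    """One command per (QP, resolution) cell of the table."""
    return [x264_qp_command(src, f"out_{h}p_qp{qp}.mp4", qp, h)
            for qp, h in product(QPS, HEIGHTS)]


if __name__ == "__main__":
    # Hypothetical source clip; run each command with subprocess.run(cmd, check=True).
    for cmd in qp_ladder("source.y4m"):
        print(" ".join(cmd))
```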

| Data Reduction | Mono-Resolution 720p | Mono-Resolution 1080p | Multi-Resolution |
|---|---|---|---|
| SR 2× | 76.35% | 76.52% | 80.99% |
| QP10 | 60.67% | 57.83% | 59.28% |
| QP15 | 84.13% | 82.80% | 83.12% |
| QP20 | 93.34% | 93.91% | 93.37% |
| SR 2× + QP15 | 95.62% | 96.07% | 96.74% |
| SR 2× + QP20 | 97.74% | 98.14% | 98.42% |
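The percentages above express a data reduction of the form 1 − S_sent / S_baseline. As a rough cross-check, the sketch below applies that formula to the sizes in the preceding QP table. It assumes that the baseline is the QP 0 (lossless) video at the target resolution and that "SR 2×" means sending the half-resolution video (360p for 720p, 540p for 1080p). Both are assumptions. The results come out close to, but not identical with, the reported values, which were presumably aggregated over the dataset in a way this excerpt does not show.

```python
# Encoded sizes in Mb, copied from the QP/resolution table (QP -> resolution -> size).
SIZES_MB = {
    0:  {"360p": 11.80, "540p": 27.43, "720p": 50.18, "1080p": 117.71},
    10: {"360p": 4.74, "540p": 11.20, "720p": 21.42, "1080p": 53.76},
    15: {"360p": 2.38, "540p": 5.01, "720p": 9.00, "1080p": 22.81},
    20: {"360p": 1.24, "540p": 2.35, "720p": 3.80, "1080p": 8.18},
    25: {"360p": 0.65, "540p": 1.18, "720p": 1.80, "1080p": 3.38},
}

# Assumed resolution actually transmitted when 2x SR reconstructs the target.
SR_SOURCE = {"720p": "360p", "1080p": "540p"}


def data_reduction(sent_mb: float, baseline_mb: float) -> float:
    """Fraction of data saved relative to the baseline size."""
    return 1.0 - sent_mb / baseline_mb


def reduction_for(target: str, qp: int, use_sr: bool) -> float:
    """Reduction vs. the QP 0 (assumed lossless) video at `target` resolution."""
    sent_res = SR_SOURCE[target] if use_sr else target
    return data_reduction(SIZES_MB[qp][sent_res], SIZES_MB[0][target])


for target in ("720p", "1080p"):
    for label, qp, sr in (("SR 2x", 0, True), ("QP20", 20, False), ("SR 2x + QP20", 20, True)):
        print(f"{target:>5} {label:<13} {reduction_for(target, qp, sr):.2%}")
```

Combining the two techniques compounds their savings: SR removes about three quarters of the pixels, and a higher QP then shrinks what remains.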

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Liborio, J.d.M.; Melo, C.; Silva, M. Internet Video Delivery Improved by Super-Resolution with GAN. Future Internet 2022, 14, 364. https://doi.org/10.3390/fi14120364

Liborio JdM, Melo C, Silva M. Internet Video Delivery Improved by Super-Resolution with GAN. Future Internet. 2022; 14(12):364. https://doi.org/10.3390/fi14120364

Chicago/Turabian Style: Liborio, Joao da Mata, Cesar Melo, and Marcos Silva. 2022. "Internet Video Delivery Improved by Super-Resolution with GAN" Future Internet 14, no. 12: 364. https://doi.org/10.3390/fi14120364

APA Style: Liborio, J. d. M., Melo, C., & Silva, M. (2022). Internet Video Delivery Improved by Super-Resolution with GAN. Future Internet, 14(12), 364. https://doi.org/10.3390/fi14120364