1. Introduction

1.1. Background

Mixed Reality (MR), a term first introduced in 1994, combines the physical and digital worlds, with Augmented Reality (AR) being the most well-known example. Unlike Virtual Reality (VR), which creates a virtual environment that completely isolates users from the real world, MR blends virtual and physical realms, blurring the boundaries between them [1,2,3]. Today, MR has evolved beyond its original definition, encompassing environmental understanding, human understanding, spatial sound, location and positioning in both physical and virtual spaces, and collaborative work on 3D assets in mixed reality environments [1]. Furthermore, users can interact with digital content simply by tapping their fingers, opening up even more possibilities for interaction design.

In recent years, the smart home has become a popular concept. Smart homes consist of networks and sensors and can be controlled from smartphones through apps. Major international IT companies such as Google, Amazon, and Apple have entered the smart home field [4]. The goal of the smart home is to provide a better quality of life for users in terms of energy efficiency, security, convenience, and entertainment [5,6]. However, smart homes are not well accepted in the market, for reasons such as distrust and technology anxiety [6,7]. In this paper, we focus on technology anxiety and try to alleviate it from a human–computer interaction (HCI) perspective.

The resistance mentioned above can stem from the smart device itself, as its reliability, performance, and controllability can affect users’ adoption intentions. Older and less-educated participants tend to be the most distrustful of IoT and exhibit strong resistance. Additionally, complex services may create negative impressions and emotions in users’ minds [6]. With regard to technology anxiety, Pal et al. argue that some users, particularly older adults, prefer using familiar technology instead of embracing innovations due to their decreased cognitive and physical abilities [8]. Furthermore, technology anxiety can also exist among the younger population, although limited research has explored this aspect. In recent years, the rapid growth of technology has required individuals to quickly upgrade their knowledge and skills in using new technological products. This constant need for adaptation can lead to feelings of fear and anxiety, particularly among new and untrained users [9]. Even among the digital-native generation, digital inequality may still arise from digital limitations [10]. These studies all suggest that the cost of learning new technologies and the fear of new technologies contribute to technology anxiety. Addressing technology anxiety therefore requires a focus on reducing the learning barriers associated with using new technologies.

Natural interaction is a design paradigm that aims to replicate human behavior in technological applications. It provides an intuitive, entertaining, and non-instructive interaction experience [11]. Natural interaction system design focuses on recognizing innate and spontaneous human expressions in relation to some object [11,12]. Applying natural interaction can reduce the user’s cognitive load by mimicking familiar user interactions for virtual objects [12]. Natural interaction can include using speech, gestures, and facial expressions to control and interact with technology rather than traditional methods such as buttons and touchscreens [11,13,14,15]. In general, natural interaction allows for more user-friendly interactions with technology.

Nowadays, the most common solution for smart home control is a mobile application, such as the Home app on the iOS platform. In this case, HomeKit-compatible smart home accessories are centrally managed by the Home app [16,17]. In a 2D user interface (UI), users interact with home appliances by touching icons and navigating menus with their fingers. This style of interaction, known as WIMP (windows, icons, menus, and pointers), is proven and has been applied widely in smartphones, personal computers, and other platforms with a 2D UI.

However, when transitioning into a 3D MR environment, many interactions change. Since the UI moves from 2D to 3D and users can use hand tracking to interact with virtual objects directly, the interaction becomes tangible and joyful. We conducted a case study that applied natural interaction to a smart lock to explore the natural interaction experience in an MR smart home control system. We believe that natural interaction can reduce user resistance to accepting new technology.

1.2. Research Goal

This research aims to provide a way for users to interact with smart home systems through a 3D UI. Unlike conventional 2D UI elements, such as the buttons used to control smart homes, we want to reproduce the user’s interaction habits based on their real-world experiences. By taking advantage of MR head-mounted devices (HMDs) with hand-tracking and spatial-mapping features, we reproduced the process of opening a physical lock in the MR application and performed a user study to collect user feedback.

Our primary objective was to explore whether natural interaction can reduce the learning barrier of new technology and to validate the advantages and limitations of natural interaction compared to traditional WIMP interaction. At the end of our investigation, we discovered a significant difference in user experience between the two designs. Both advantages and limitations exist for natural interaction. Furthermore, through qualitative study, we found that natural interaction reduces the overall learning barrier, which aligns with our expectations. These findings help us to understand scenarios in which natural interaction can be applied and could serve as a foundation to support the application of natural interaction beyond the smart home field.

1.3. Paper Structure

The remaining sections of the paper start by presenting the related work previously conducted by other scholars and discussing the limitations of current studies. Following that, we introduce our experiment design in detail, explain how it achieved our research goals, and provide an explanation of how we implemented the lock system. We then present our experiment procedures. Lastly, we present the data collected from the experiment and refine the results through analysis.

2. Related Works

2.1. Natural Interaction

The concept of natural interaction has been developed over a long period. Ishii et al. presented the idea called “Tangible Bits”, whose main purpose is to allow users to access digital content tangibly through the physical environment [18]. Another work by Rekimoto et al. presents an early concept of interaction with the real world [19]. In modern research, natural interaction has been applied widely in various fields and has been shown to improve the user experience and make interaction joyful. Kyriakou et al. applied natural interaction to a museum AR system. Users can see virtual artifact replicas through the AR application, which is deployed on a low-cost HMD such as Google Cardboard. Additionally, they used Leap Motion to track users’ hands in real time. The experiment results showed that the application was accepted positively among all age groups, and most users felt enjoyment [20]. Another application scenario is video games. Leibe et al. conducted a study that applied natural interaction to the video game Elder Scrolls V. They used Kinect as a pose recognizer to perform tasks such as “Raise shield” and “Cast spell”. By measuring the “fun value” of natural and native interaction through a questionnaire, they found that natural interaction provides a more enjoyable and intuitive user experience [21]. As the adoption of AR technology has risen, Aliprantis et al. conducted an evaluation of gesture-based natural interaction within an AR context. Despite improvements in their designs, these have not yet received high levels of acceptance due to ongoing challenges with accuracy and complexity [22]. Although the accuracy of gesture recognition has seen significant advancements in recent years, as evidenced by Gloumeau et al.’s development of a six-degrees-of-freedom manipulation technique for virtual objects [23], the steep learning curve associated with this technology remains a formidable challenge.

2.2. Technology Anxiety

Many studies have been conducted to explore the factors that could influence technology anxiety. Researchers have found that factors such as gender and computer experience have significant correlations with technology anxiety [24,25]. Although numerous papers have mentioned the correlation between age and technology anxiety [7,8], Fernández-Ardèvol et al. hold the opposite opinion that age does not have a strong correlation with technology anxiety [26]. However, other research, such as that by Ivan et al., found that technology anxiety increases when older individuals face younger adults who demonstrate better information and communication technology (ICT) skills [27]. This suggests that multiple factors may influence technology anxiety, and understanding these factors can help facilitate the adoption of new technologies across various user groups.

2.3. Applying AR/MR Technology for Empowering Learning

AR/MR technology has been extensively adopted in educational and professional training scenarios, demonstrably reducing cognitive load and overcoming learning obstacles. Take, for instance, the work by Cheng et al., who created an AR book. This tool supplements standard educational content with virtual elements accessible via AR devices, not only diminishing cognitive load but also amplifying reading motivation [28]. Language learning is another arena where AR/MR technology is making strides. Dalim et al. combined speech recognition with AR technology to facilitate children’s language acquisition. Their approach noticeably increased engagement and the learning rate [29]. In the realm of professional training, particularly in medicine, Kobayashi et al. integrated MR into medical training protocols. Their research demonstrated that MR positively impacts acute care, surgical care, and medical science [30]. These examples collectively attest to MR’s potential in alleviating the complexities of both study and professional training. However, current research lacks studies focusing on daily-use scenarios, even though technology anxiety can be caused by the use of technology in daily life. Looking forward, as MR HMDs become commonplace in daily life, we can harness this technology to lessen the cognitive load associated with everyday tasks.

2.4. Smart Home Controller in AR Environment

Although there are many studies related to smart home control, few existing works explore the smart home in an AR environment. Mahroo et al. created an AR smart home framework called HoloHome. The goal of this framework is to allow AR applications on the HoloLens platform to interact with real objects in the physical world. They use Vuforia image processing to locate the home appliance and overlay a virtual version of the appliance on the real one. Each time the user looks at a specific smart device, the virtual replica is activated and the necessary information appears [31]. The architecture of our project is similar to this study. However, rather than using Vuforia image processing to detect the object, we chose to use spatial mapping to locate the real object. Another study, conducted by Inomata et al., uses gestures as input commands to control smart devices. Users can use corresponding gestures to operate home appliances. If different home appliances have similar operations, the same gestures are applied. For example, the brightness of the lights and the volume level are both adjusted by waving a hand to the left or right [32]. This design takes advantage of MR HMDs and offers convenient operation. However, memorizing various gestures can raise the learning threshold, which is the issue we are trying to address.

3. Materials and Methods

3.1. Overview

Our objective was to explore the advantages of natural interaction in 3D UIs and compare them with traditional WIMP interactions. We accomplished this by creating two demos. One demo utilized natural interaction (case_NI), while the other employed WIMP interaction (case_WIMP) as a contrast to the natural interaction scenario. Both demos present a lock-opening application scenario for users. To collect the necessary data, we conducted a user study using these demos, which included the NASA Task Load Index (NASA TLX) and a Google Forms-based survey.

NASA TLX is a subjective workload assessment tool that enables users to evaluate a human–machine interface system from a multi-dimensional perspective. It encompasses mental demand, physical demand, temporal demand, performance, effort, and frustration [33,34].
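As an illustration only, one participant's ratings on the six sub-scales could be held in a small record such as the one below. This is a sketch, not the scoring procedure used in this study: the class name `TlxRating`, the 0 to 100 range check, and the unweighted average (often called Raw TLX) are assumptions introduced for the example, whereas the analysis in this paper looks at each sub-scale separately.

```python
from dataclasses import dataclass, asdict
from statistics import mean


@dataclass(frozen=True)
class TlxRating:
    """One participant's NASA TLX ratings (hypothetical container, 0..100 per sub-scale)."""
    mental: float
    physical: float
    temporal: float
    performance: float
    effort: float
    frustration: float

    def __post_init__(self):
        # Assumed rating range; reject values outside 0..100.
        for name, value in asdict(self).items():
            if not 0 <= value <= 100:
                raise ValueError(f"{name} must be within 0..100, got {value}")

    def raw_score(self) -> float:
        # Unweighted mean of the six sub-scales (Raw TLX); shown only as an example.
        return mean(asdict(self).values())


# Made-up ratings for demonstration, not data from the user study.
example = TlxRating(mental=30, physical=30, temporal=30,
                    performance=10, effort=20, frustration=0)
print(example.raw_score())
```

Keeping the sub-scales as named fields makes it straightforward to compare a single dimension, such as physical demand, across the two cases.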

We employed the NASA TLX tool to validate our interaction design and identify significant differences between the two groups. Furthermore, we supplemented our study with a Google Forms-based survey that included questions related to our research objective, such as participants’ personal information, a ten-point rating scale to measure their general preference for the experimental and control groups, and questionnaires to gather participants’ opinions about the two design groups [35]. The survey aimed to collect additional feedback that could not be observed through the NASA TLX tool. The survey comprised both qualitative and quantitative studies, and we hoped to obtain more comprehensive results from it.

The demo comprises two components: hardware and software. The software component includes two HoloLens apps developed using the Unity engine. Both apps share the same task goal but employ different interaction methods. One uses natural interaction and serves as the treatment group, while the other uses traditional WIMP interaction and serves as the control group. The hardware component is a smart lock that communicates with the HoloLens app via Bluetooth Classic.

3.2. Implementation

3.2.1. Software

We developed two HoloLens apps using the Unity engine.

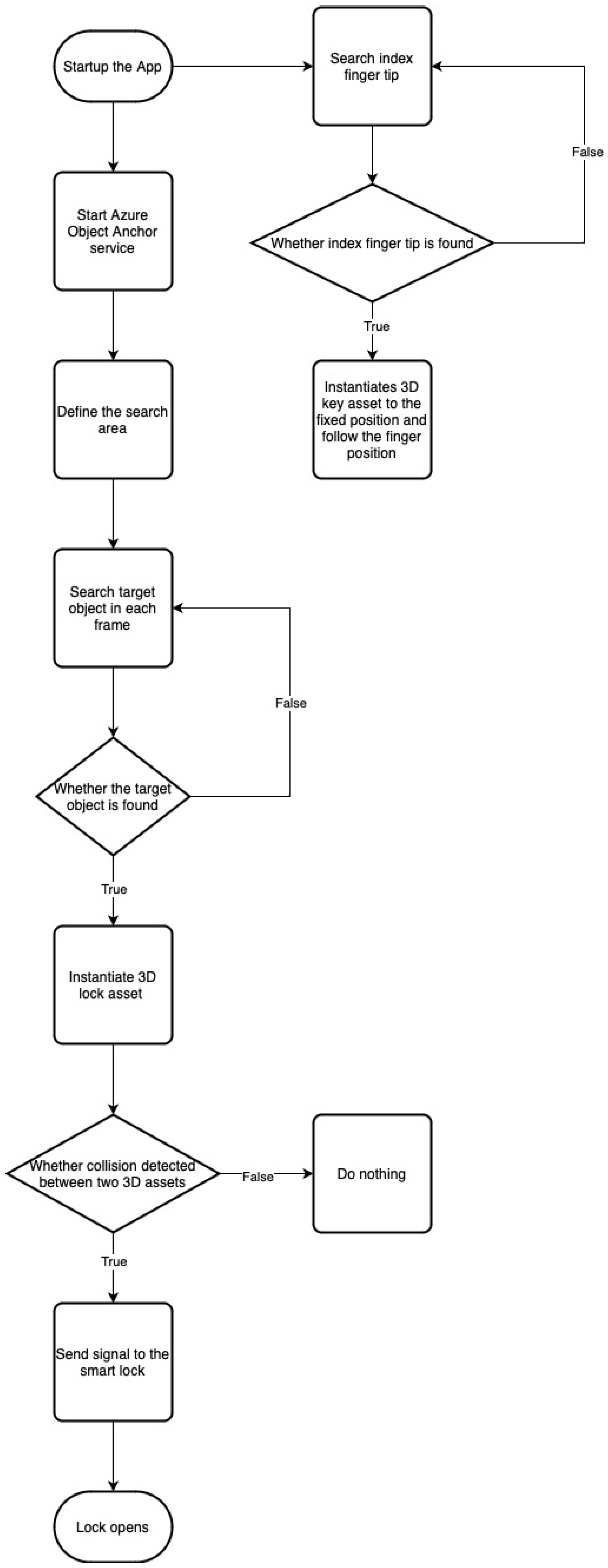

Figure 1 illustrates the overall workflow of case_NI. Case_NI utilizes Azure Object Anchors (AOA) to identify the target in the real world and align the 3D content with it [36]. It requires the upload of a 3D asset of the target object for object-detection purposes. AOA employs a spatial camera to scan the surrounding area and construct a 3D model of the environment. It recognizes the target by matching the 3D asset of the target with the pre-scanned 3D environment. AOA then returns the target’s position in 3D coordinates, which are compatible with Unity coordinates. We can use this position as an anchor with which to align the 3D object. In our case, the target is a rectangular box, and once we obtain its position, we spawn a virtual lock in front of the box.

To implement the interaction feature, we employed the second version of the Mixed Reality Toolkit (MRTK2). MRTK2 is a Unity project containing multiple components that can be integrated into cross-platform MR or VR apps [37]. Our aim was to emulate the interaction that people commonly use in daily life to open a lock, which required a suitable method to manipulate the virtual key. A common approach to object manipulation in MR is the object manipulator, a component from MRTK2 that enables users to move and rotate objects using their hands. However, after applying this approach to our project, we found that the manipulation experience was not sufficiently smooth and accurate, particularly for rotation, as it was difficult to rotate the object to the desired angle. Consequently, we sought an alternative solution. We ultimately utilized the hand-tracking feature from MRTK2, which tracks the user’s finger joints in real time, enabling us to monitor the position of the user’s index fingertip. We found this tracking to be smooth and accurate and thus well-suited to our needs. A 3D model of a key follows the finger’s position and orientation. Once the key is inserted into the 3D model of the lock, the app sends a signal to the hardware component and the box opens.
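The insertion check described above can be illustrated with a minimal sketch. The apps themselves were built in Unity with MRTK2, so the Python below is not the project code; the function names, the distance and angle thresholds, and the idea of comparing the key direction with a keyhole axis are all assumptions made for illustration.

```python
import math
from typing import Sequence

Vec3 = Sequence[float]


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle in degrees between two non-zero 3D vectors."""
    norm_a = math.hypot(*a)
    norm_b = math.hypot(*b)
    if norm_a == 0 or norm_b == 0:
        raise ValueError("direction vectors must be non-zero")
    cos_theta = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    # Clamp to guard against tiny floating-point overshoot before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def is_key_inserted(key_tip: Vec3, key_dir: Vec3,
                    keyhole_pos: Vec3, keyhole_axis: Vec3,
                    max_distance_m: float = 0.02,    # hypothetical threshold
                    max_angle_deg: float = 15.0) -> bool:  # hypothetical threshold
    """True when the key tip is near the keyhole and roughly aligned with it."""
    close_enough = math.dist(key_tip, keyhole_pos) <= max_distance_m
    aligned = angle_between_deg(key_dir, keyhole_axis) <= max_angle_deg
    return close_enough and aligned


# The key tip is 1 cm in front of the keyhole and points straight along its axis.
print(is_key_inserted((0, 0, 0.01), (0, 0, 1), (0, 0, 0), (0, 0, 1)))
```

In an app of this kind, such a check would run every frame on the tracked fingertip pose, and the unlock signal would be sent the first time it returns true.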

Figure 2 presents the overall workflow of the case_WIMP. The case_WIMP employs the conventional button-press interaction representative of WIMP interaction. Upon starting the app, an unlock button materializes, hovering near the user’s hand. Users can click the button using their fingers, as they typically would on a smartphone, prompting the box to open. The control group serves as a comparison to the treatment group, allowing users to discern the differences between natural interaction and traditional WIMP interaction.

Figure 3 was captured with the HoloLens screenshot function and shows the scene from the user’s perspective.

3.2.2. Hardware

We developed a smart lock system using an Arduino board; the circuit diagram of the system is shown in Figure 4. The parts we used for the system are listed in Table 1. The system incorporates a solenoid lock, which is controlled by the flow of electric current. This current is regulated by a relay module, allowing us to determine whether the current should pass through the solenoid lock or not. When the current is enabled, the lock opens; otherwise, it remains closed.

Additionally, we integrated a Bluetooth HC-05 module to facilitate communication with a mobile app. This module receives data from the app to decide whether the relay module should allow the current to pass through. We attached the lock to a portable box for experimental purposes, as shown in Figure 5. While the primary use case for the lock would typically be doors, the box serves as a more practical, affordable, and portable option for testing.
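The control logic described above (received data decides whether the relay lets current through, and current through the solenoid means the lock is open) can be modeled in a few lines. This is a plain Python model for illustration, not the Arduino firmware; the class name and the command bytes `b"1"` and `b"0"` are hypothetical, since the actual message format is not specified here.

```python
from dataclasses import dataclass

# Hypothetical command bytes; the real protocol is not described in the text.
OPEN_CMD = b"1"
CLOSE_CMD = b"0"


@dataclass
class SolenoidLockModel:
    """Toy model: relay energized -> current flows -> solenoid lock is open."""
    relay_energized: bool = False

    @property
    def is_open(self) -> bool:
        return self.relay_energized

    def handle(self, payload: bytes) -> bool:
        """Apply one received message and return the resulting lock state."""
        if payload == OPEN_CMD:
            self.relay_energized = True
        elif payload == CLOSE_CMD:
            self.relay_energized = False
        # Any other payload is ignored, so the lock keeps its current state.
        return self.is_open


lock = SolenoidLockModel()
print(lock.handle(OPEN_CMD))   # lock state after an "open" message
print(lock.handle(CLOSE_CMD))  # lock state after a "close" message
```

Ignoring unknown payloads is a design choice of this sketch: it keeps stray bytes on the serial link from changing the lock state.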

3.3. Experiment Procedure

We carried out an experiment in which we asked 10 participants to first engage with the case_WIMP, followed by the case_NI. Upon completion of the case_WIMP, participants were required to fill out the NASA TLX form and a survey. They followed the same procedure after concluding the tasks associated with case_NI. Before initiating the experiment, we provided a brief overview of our research to each participant. They were informed that our study aims to explore MR interaction in the future. Additionally, they were made aware that the current MR HMDs possess several limitations, which were not being considered within the scope of this particular experiment.

Table 2 lists the questions we included in the survey.

To gain an understanding of the overall trend of user preference, we proposed Questions 3 and 4 to ask users to rate their score. Additionally, we included qualitative questions (Question 5 through Question 7) to ask participants to share their opinions. The qualitative questions were aligned with our research goal to ensure that we gathered relevant information from the participants. Questions 5 and 6 are for exploring both the advantages and limitations of natural interaction, while Question 7 is to validate if natural interaction could reduce technology anxiety, with the learning barrier being one of the key factors that contributes to it. After collecting data from the participants, we employed boxplots to visualize the distribution of NASA TLX scores and rating scales. This enabled us to compare the outcomes of the two cases (natural interaction and WIMP interaction) and draw conclusions. We included Questions 6 and 7 in the survey to gain insight into the participants’ feelings when using natural interaction, which could serve as a reference for answering our research questions. By analyzing these responses, we sought to understand the users’ opinions about the advantages and limitations of using natural interaction in comparison to traditional WIMP interaction, ultimately providing valuable insights for the development of MR smart home controllers and other MR applications.

We recruited 10 participants from Waseda University, including 7 males and 3 females ranging in age from 22 to 33 years old, with an average age of 25.7. Forty percent of the participants had no prior experience with MR HMD devices. All participants were given a description of the research and a full explanation of how to use the NASA TLX as well as an overview of the user study procedure.

4. Results

4.1. NASA TLX

Figure 6 presents the boxplot for the NASA TLX results. The X-axis represents each sub-scale, and the Y-axis denotes the overall score for each sub-scale. Outliers, indicated by bubbles above the boxes, were excluded as they differ significantly from all other values and could skew the results. We conducted t-tests to measure the significance for each pair of sub-scales and found significance in mental (p = 0.042), physical (p = 0.0218), and temporal demand (p = 0.0271). Pairs with p-values smaller than 0.05 are labeled with asterisks.

Mental demand evaluates the level of task complexity. When comparing the mental demand between case_NI and case_WIMP, it becomes evident that the median of case_WIMP (22.5) is lower than that of case_NI (27.5). This suggests that although both tasks present a low degree of difficulty, case_WIMP is marginally less complex than case_NI. Additionally, the interquartile range (IQR) of case_NI is broader than that of case_WIMP, indicating a greater dispersion of user scores for case_NI. The quartiles disclose that the middle 50% of data for case_NI are situated between 20 and 50, while for case_WIMP, they are between 10 and 30, which is a lower range compared to case_NI. Based on this analysis, it can be concluded that users generally perceive the task in case_WIMP to be less complicated than in case_NI.

Another crucial category is physical demand, which assesses the level of physical effort needed to accomplish a task. When comparing the data for both cases, case_NI exhibits a considerably higher median (30) than case_WIMP (10), suggesting that users generally regard case_NI as more physically demanding. Furthermore, the middle 50% of case_NI data ranges between 10 and 55, which is both broader and higher than that of case_WIMP (5 to 20). The whiskers of case_NI (5 to 70) also extend further than those of case_WIMP (5 to 20), signifying that the full spectrum of user ratings for case_WIMP is much more constrained than that of case_NI.

The last set of data pertains to temporal demand, which evaluates whether the pace required for task completion is leisurely or swift. In line with the other two subcategories, the median of case_NI (30) surpasses that of case_WIMP (17.5), implying that users perceive the task in case_NI as involving greater time pressure compared to case_WIMP. The IQR of case_NI (10 to 40) is more expansive than that of case_WIMP (10 to 25), and the whisker of case_NI (10 to 70) also extends further than that of case_WIMP (0 to 30). This demonstrates a higher degree of variability in user ratings for case_NI.
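For readers less familiar with boxplots, the quantities discussed above (median, quartiles, IQR, whiskers, and outliers) can be computed as in the sketch below. It assumes the common 1.5 × IQR rule for flagging outliers and the "inclusive" quartile method of Python's `statistics` module; the rule and quartile convention behind Figure 6 are not stated, so results from a plotting tool may differ slightly. The scores in the example are made up and are not data from the study.

```python
from statistics import quantiles


def boxplot_summary(scores, k=1.5):
    """Quartiles, whiskers, and outliers using the k * IQR fence rule (assumed)."""
    data = sorted(scores)
    q1, med, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - k * iqr, q3 + k * iqr
    inliers = [v for v in data if low_fence <= v <= high_fence]
    outliers = [v for v in data if v < low_fence or v > high_fence]
    return {
        "q1": q1,
        "median": med,
        "q3": q3,
        "iqr": iqr,
        # Whiskers reach the most extreme values that are still inside the fences.
        "whiskers": (inliers[0], inliers[-1]),
        "outliers": outliers,
    }


# Made-up sub-scale scores for ten participants (illustration only).
summary = boxplot_summary([10, 20, 20, 25, 30, 30, 35, 40, 50, 95])
print(summary)
```

With these example values the single score of 95 lies above the upper fence and is reported as an outlier, while the whiskers span 10 to 50.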

Overall, the NASA TLX results reveal that case_NI demands greater mental, physical, and temporal effort. These findings correspond with our expectations, as natural interaction involves supplementary tasks, such as inserting a virtual key into a virtual lock. This process necessitates increased time and physical exertion compared to simply pressing a virtual button. Moreover, people are more accustomed to WIMP interaction, as it is frequently employed in everyday life, making the elevated mental demand for case_NI justifiable.

4.2. Survey

This section presents the participants’ ratings of the two cases and the feedback we gathered from the survey.

4.2.1. Rating Score

After completing the NASA TLX form, participants rated their preferences. The results are shown in Figure 7. We used the Wilcoxon Signed Rank Test [38] to calculate the significance of the paired data (p = 0.0078). Examining the results from the user rating scale, the median of case_NI is significantly higher than that of case_WIMP. The two groups have similar ranges; however, case_WIMP has a slightly wider range (4 to 10) than case_NI (5 to 10), indicating greater variability in user ratings for case_WIMP. The IQR of case_NI (6 to 8) is located higher than that of case_WIMP (5 to 7). Thus, overall, participants prefer natural interaction, and the ratings for case_NI are more consistent.
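The following sketch shows how a Wilcoxon signed-rank test on paired ratings works for a small sample. It is an illustrative standard-library implementation, not the tool used for the analysis above: it drops zero differences, assigns average ranks to tied absolute differences, and obtains an exact two-sided p-value by enumerating all sign assignments, which is only practical for small samples. The function name and the example ratings are made up for demonstration.

```python
from itertools import product


def wilcoxon_signed_rank_exact(x, y):
    """Return (W+, exact two-sided p) for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    center = sum(ranks) / 2          # expected W+ under the null hypothesis
    observed = abs(w_plus - center)

    # Under the null, every sign pattern is equally likely: enumerate all 2**n.
    extreme = 0
    for signs in product((0, 1), repeat=n):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - center) >= observed - 1e-12:
            extreme += 1
    return w_plus, extreme / 2 ** n


# Hypothetical ten-point ratings from five participants (not study data).
ni_ratings = [8, 7, 9, 6, 5]
wimp_ratings = [6, 6, 7, 6, 7]
print(wilcoxon_signed_rank_exact(ni_ratings, wimp_ratings))
```

Because the p-value comes from enumerating sign patterns, it can only take a limited set of values when few non-zero differences remain, which is worth keeping in mind when interpreting results from small samples.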

4.2.2. User Feedback

We collected feedback from participants based on the two questions we presented. On the positive side of natural interaction, participants found it easy to understand. As natural interaction closely mirrors real-world operations, it offers superior feedback compared to WIMP interaction. One participant remarked, “the natural interaction can simulate real-world interaction, which makes me feel more smooth and seamless.” This observation supports our approach in providing an immersive experience and user-friendly operation. Another participant mentioned, “You can experience the practicality of the process of unlocking the door and strengthen your perceptions and know your mission objectives”. This suggests that our design enables a clear understanding of the task goal. Additional comments were also positive about the user experience, leading us to conclude that natural interaction is intuitive and immersive and provides rich feedback.

On the negative side, participants felt that natural interaction required too much time to complete the task. As the NASA TLX measured, it demands more physical effort than WIMP interaction. One participant observed, “implementing natural interaction might need much more physical effort than the WIMP interaction. The scale of the virtual object needs to be appropriate. Also, the texture of virtual objects needs to be detailed”. We conclude that to capitalize on natural interaction, which reduces the user’s cognitive load, the 3D model of virtual objects must be easily recognizable. This necessitates proper art design and exceptional 3D modeling skills, which can be time-consuming and costly. Moreover, it involves more physical activity and time, which may be less convenient for users already familiar with the task. Another participant who preferred WIMP interaction commented, “There isn’t a particular reason for my preference for WIMP interaction; I am simply more familiar with it.” This response also highlights the fact that people have been using WIMP interaction for decades, making it challenging to persuade them to transition to a new form of interaction unless they clearly see the necessity.

Finally, we asked participants whether they believed natural interaction could reduce the learning curve of new technology, and 70% of them agreed. Participants who shared this opinion commonly thought that utilizing real-world interactions would require less effort to learn complex tasks, allowing users to apply their real-life habits to the task flow. One participant commented, “The natural interaction provides a more natural interface, like in real-world interaction. I think the natural interface could reduce the time for me to learn how to interact with the buttons and remember how to use them.” This observation highlights a typical usage scenario. A WIMP UI can become complicated, for instance, in the many apps that feature multi-level menus. In addition, operation on an MR HMD (3D UI) cannot be as precise as operation on a 2D UI, such as a touch screen or a mouse-driven interface. This suggests that natural interaction is better suited for 3D UIs than WIMP interaction. Participants also noted that natural interaction could be applied to scenarios that lack guidance.

5. Discussion

The user study has provided valuable insights into the natural interaction experience. The distribution of rating scores clearly demonstrates that the participants highly accepted the natural interaction. Our survey findings reveal that six participants found the natural interaction intuitive, contributing to an easy understanding of the task, a result that aligns with our expectations. As we indicated in the introduction, a substantial learning barrier is a key factor in provoking technology anxiety. Consequently, this positive feedback from participants supports our conclusion that the design mitigates such concerns. However, as some participants pointed out, our design does require more time and effort than WIMP interaction. This was also reflected in the NASA TLX results. This shortfall should not be overlooked. It suggests that when a task is relatively uncomplicated, the WIMP interface operation process may be simpler and more time-efficient. Therefore, it is crucial to balance the intuitiveness of natural interaction with the efficiency of traditional interfaces based on the complexity of the task at hand. The above findings suggest that natural interaction could significantly enhance user experience across various technology applications, despite the existing limitations that we previously noted and should continue to address. This approach could simplify the learning process and expedite the adoption rate. As users find these interaction methods intuitive, the barriers to embracing new technologies are reduced, potentially facilitating broader and faster uptake.

The overall results from the NASA TLX align with the user feedback, particularly in terms of physical and temporal demand. Since users need to pick up the key to open the lock, additional operations are required, which naturally takes more time and physical energy compared to simply pressing a button. However, we observed a discrepancy between the mental demand results from the NASA TLX and the user feedback in the survey. To recap, a high mental demand score signifies a more complex task. The distribution of NASA TLX scores revealed that more participants rated natural interaction with higher mental demand than that of WIMP interaction, indicating that natural interaction is more complex. Conversely, user feedback suggested that natural interaction is easy to learn and straightforward.

We believe there are several reasons for this deviation. Firstly, compared to WIMP interaction, people are less familiar with natural interaction. Even though natural interaction is easy to learn, participants still need some time to understand what it entails. Moreover, according to the survey, 40% of participants had no prior experience using MR HMD devices. This means that a significant portion of participants did not know how to use HoloLens. Even though natural interaction is simple to use, we noticed that some participants had little confidence in using the hand-tracking feature. Despite having a correct understanding of the gesture without any introduction, they still doubted if they were performing it correctly, which could also increase the mental demand of natural interaction. Lastly, the UI of the WIMP interaction demo was overly simplistic. Since we only conducted a single task, we placed just one button on the menu; thus, the shortcomings of WIMP interaction were not fully exposed within the 3D environment. In real-world scenarios, the UI could become more complicated as the features of a smart home controller increase, which could affect the results.

Beyond the limitations discussed above, we identified a few additional constraints through our study. Firstly, due to the small number of participants, we were only able to collect a limited amount of data, which may affect the accuracy of our results. Furthermore, since all participants were university students, they were well-educated and relatively young, meaning the results may not be applicable to the larger population. Lastly, our study is designed based on the existing technology of MR devices, and we do not know what new technological features future MR HMDs will offer, so our design may need to be revisited in the future.

6. Conclusions

This paper introduced a design that integrates natural interaction into an MR smart home control system with the aim of mitigating technology anxiety. Our primary objective was to foster user acceptance of this new technology by reducing the learning barrier. To evaluate the user experience, we compared it with traditional WIMP interaction in a 3D space and investigated the potential and limitations of natural interaction in reducing the learning curve.

Through our user study, we found that natural interaction not only clarified task objectives but also let users rely on their familiar methods of accomplishing tasks. This significantly reduced the learning curve, meeting our initial expectations. Additionally, our observations suggested that for simple tasks, natural interaction may not be necessary. In such contexts, WIMP interaction could potentially offer a more efficient approach.

For future work, we should extend our experiment with additional cases to create more comprehensive test scenarios. We also need to improve our experimental procedure, for example by incorporating a session that helps participants familiarize themselves with the essential operations of HoloLens. This will reduce external influencing factors and improve the accuracy of the test data. Furthermore, we can apply natural interaction to other fields to explore its application scenarios beyond smart homes.

Author Contributions

Conceptualization, Y.S.; methodology, Y.S.; software, Y.S.; validation, Y.S.; formal analysis, Y.S.; investigation, Y.S.; resources, T.N.; data curation, Y.S.; writing—original draft preparation, Y.S.; writing—review and editing, T.N.; visualization, Y.S.; supervision, T.N.; project administration, Y.S.; funding acquisition, T.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

All data collected during this research are presented in full in this manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Microsoft. What Is Mixed Reality?—Mixed Reality. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/discover/mixed-reality (accessed on 11 April 2023).

- Milgram, P.; Kishino, F. A Taxonomy of Mixed Reality Visual Displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Speicher, M.; Hall, B.D.; Nebeling, M. What Is Mixed Reality? Association for Computing Machinery: New York, NY, USA, 2019; CHI ’19; pp. 1–15.

- Dean, S. Amazon, Samsung, Google, Apple: Big 4 Driving Smart Home Device Sales | Tech Times. Available online: https://www.techtimes.com/articles/192188/20170113/amazon-samsung-google-apple-big-4-driving-smart-home-device-sales.htm (accessed on 11 April 2023).

- Wilson, C.; Hargreaves, T.; Hauxwell-Baldwin, R. Benefits and risks of smart home technologies. Energy Policy 2017, 103, 72–83.

- Li, W.; Yigitcanlar, T.; Erol, I.; Liu, A. Motivations, barriers and risks of smart home adoption: From systematic literature review to conceptual framework. Energy Res. Soc. Sci. 2021, 80, 102211.

- Tsai, T.H.; Lin, W.Y.; Chang, Y.S.; Chang, P.C.; Lee, M.Y. Technology anxiety and resistance to change behavioral study of a wearable cardiac warming system using an extended TAM for older adults. PLoS ONE 2020, 15, e0227270.

- Pal, D.; Funilkul, S.; Charoenkitkarn, N.; Kanthamanon, P. Internet-of-Things and Smart Homes for Elderly Healthcare: An End User Perspective. IEEE Access 2018, 6, 10483–10496.

- Achim, N.; Kassim, A.A. Computer Usage: The Impact of Computer Anxiety and Computer Self-efficacy. Procedia-Soc. Behav. Sci. 2015, 172, 701–708.

- Bellini, C.G.P.; Isoni Filho, M.M.; de Moura Junior, P.J.; Pereira, R.d.C.d.F. Self-efficacy and anxiety of digital natives in face of compulsory computer-mediated tasks: A study about digital capabilities and limitations. Comput. Hum. Behav. 2016, 59, 49–57.

- Baraldi, S.; Bimbo, A.D.; Landucci, L.; Torpei, N. Natural Interaction. In Encyclopedia of Database Systems; Liu, L., Özsu, M.T., Eds.; Springer: Boston, MA, USA, 2009; pp. 1880–1885.

- Valli, A. The design of natural interaction. Multimed. Tools Appl. 2008, 38, 295–305.

- Lee, M.; Billinghurst, M.; Baek, W.; Green, R.; Woo, W. A usability study of multimodal input in an augmented reality environment. Virtual Real. 2013, 17, 293–305.

- Hilliges, O.; Kim, D.; Izadi, S.; Weiss, M.; Wilson, A. HoloDesk: Direct 3d interactions with a situated see-through display. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- Benko, H.; Jota, R.; Wilson, A. MirageTable: Freehand interaction on a projected augmented reality tabletop. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- Apple. Home App. Available online: https://www.apple.com/home-app/ (accessed on 11 April 2023).

- Apple. HomeKit. Available online: https://developer.apple.com/documentation/homekit/ (accessed on 11 April 2023).

- Ishii, H.; Ullmer, B. Tangible bits: Towards seamless interfaces between people, bits and atoms. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Atlanta, GA, USA, 22–27 March 1997.

- Rekimoto, J.; Nagao, K. The world through the computer: Computer augmented interaction with real world environments. In Proceedings of the 8th Annual ACM Symposium on User Interface and Software Technology, Pittsburgh, PA, USA, 15–17 November 1995.

- Kyriakou, P.; Hermon, S. Can I touch this? Using Natural Interaction in a Museum Augmented Reality System. Digit. Appl. Archaeol. Cult. Herit. 2019, 12, e00088.

- Nogueira, P.A.; Teófilo, L.F.; Silva, P.B. Multi-modal natural interaction in game design: A comparative analysis of player experience in a large scale role-playing game. J. Multimodal User Interfaces 2015, 9, 105–119.

- Aliprantis, J.; Konstantakis, M.; Nikopoulou, R.; Mylonas, P.; Caridakis, G. Natural Interaction in Augmented Reality Context. In Proceedings of the VIPERC@ IRCDL, Pisa, Italy, 30 January 2019; pp. 50–61.

- Gloumeau, P.C.; Stuerzlinger, W.; Han, J. PinNPivot: Object Manipulation Using Pins in Immersive Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2021, 27, 2488–2494.

- Chua, S.; Chen, D.T.; Wong, A. Computer anxiety and its correlates: A meta-analysis. Comput. Hum. Behav. 1999, 15, 609–623.

- Glass, C.T.; Knight, L.A. Cognitive factors in computer anxiety. Cogn. Ther. Res. 1988, 12, 351–366.

- Fernández-Ardèvol, M.; Ivan, L. Why Age Is Not that Important? An Ageing Perspective on Computer Anxiety. In Human Aspects of IT for the Aged Population. Design for Aging; Springer International Publishing: Cham, Switzerland, 2015; pp. 189–200.

- Ivan, L.; Schiau, I. Experiencing Computer Anxiety Later in Life: The Role of Stereotype Threat. In Human Aspects of IT for the Aged Population. Design for Aging; Springer International Publishing: Cham, Switzerland, 2016; pp. 339–349.

- Cheng, K.H. Reading an augmented reality book: An exploration of learners’ cognitive load, motivation, and attitudes. Australas. J. Educ. Technol. 2017, 33.

- Che Dalim, C.S.; Sunar, M.S.; Dey, A.; Billinghurst, M. Using augmented reality with speech input for non-native children’s language learning. Int. J. Hum.-Comput. Stud. 2020, 134, 44–64.

- Kobayashi, L.; Zhang, X.C.; Collins, S.A.; Karim, N.; Merck, D.L. Exploratory Application of Augmented Reality/Mixed Reality Devices for Acute Care Procedure Training. West. J. Emerg. Med. 2018, 19, 158–164.

- Mahroo, A.; Greci, L.; Sacco, M. HoloHome: An Augmented Reality Framework to Manage the Smart Home. In Proceedings of the Augmented Reality, Virtual Reality, and Computer Graphics, Santa Maria al Bagno, Italy, 24–27 June 2019; De Paolis, L.T., Bourdot, P., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 137–145.

- Inomata, S.; Komiya, K.; Iwase, K.; Nakajima, T. AR Smart Home: A Smart Appliance Controller Using Augmented Reality Technology and a Gesture Recognizer. In Proceedings of the Twelfth International Conference on Advances in Multimedia 2020, Lisbon, Portugal, 23–27 February 2020.

- TLX @ NASA Ames—Home. Available online: https://humansystems.arc.nasa.gov/groups/TLX/ (accessed on 12 April 2023).

- Hart, S.G.; Staveland, L.E. Development of NASA-TLX (Task Load Index): Results of Empirical and Theoretical Research. In Advances in Psychology; Hancock, P.A., Meshkati, N., Eds.; North-Holland: Amsterdam, The Netherlands, 1988; Volume 52, pp. 139–183.

- Schrepp, M. User Experience Questionnaire Handbook; Team UEQ: Hockenheim, Germany, 2015.

- Microsoft. Azure Object Anchors Overview—Azure Object Anchors. Available online: https://learn.microsoft.com/en-us/azure/object-anchors/overview (accessed on 12 April 2023).

- polar kev. MRTK2-Unity Developer Documentation—MRTK 2. Available online: https://learn.microsoft.com/en-us/windows/mixed-reality/mrtk-unity/mrtk2/ (accessed on 14 April 2023).

- Wilcoxon, F. Individual Comparisons by Ranking Methods. Biom. Bull. 1945, 1, 80–83.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).