HP-LSTM: Hawkes Process–LSTM-Based Detection of DDoS Attack for In-Vehicle Network

Abstract

1. Introduction

- We are the first to apply the Hawkes process to modeling and analysis for in-vehicle DDoS intrusion detection. The Hawkes process parameters capture the dynamic, self-exciting properties inherent in sequences of attack events.

- We propose a novel network structure, the HP-LSTM block, which simulates the Hawkes process by introducing Hawkes parameters into the LSTM, allowing it to capture more temporal features than the original LSTM structure. Our model further combines this block with residual self-attention blocks to detect DDoS attacks on in-vehicle Ethernet SOME/IP traffic more efficiently.

- Through extensive experiments comparing several HP-LSTM structures, we identified the optimal HP-LSTM design, which achieves an average F1-score of 99.3% in detecting DDoS attacks. Furthermore, HP-LSTM meets the stringent traffic and real-time constraints outlined in IEEE 802.1DG [14] (<1 ms), demonstrating that it can perform real-time detection in real-world vehicular environments.

2. Related Work

2.1. Review of Existing Research on DDoS Detection for In-Vehicle Network

2.2. Hawkes Process for Modeling Network Attack

2.3. Critical Review

3. Preliminary

3.1. SOME/IP Protocol

3.1.1. Specification of Message Format

- Message ID (Service ID/Method ID) [32 Bits];

- Length [32 Bits];

- Request ID (Client ID/Session ID) [32 Bits];

- Protocol Version [8 Bits];

- Interface Version [8 Bits];

- Message Type [8 Bits];

- Return Code [8 Bits].
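To make the field layout above concrete, the following sketch packs and parses the 16-byte SOME/IP header with Python's standard `struct` module. It assumes big-endian (network) byte order, that Message ID and Request ID split into 16-bit halves (Service ID/Method ID and Client ID/Session ID), and that Length counts the bytes from the Request ID to the end of the message, following the AUTOSAR specification [28]. The function and type names are illustrative and do not belong to any SOME/IP library.

```python
import struct
from typing import NamedTuple

# Big-endian header layout: Service ID (16) | Method ID (16) | Length (32) |
# Client ID (16) | Session ID (16) | Protocol Version (8) |
# Interface Version (8) | Message Type (8) | Return Code (8) -> 16 bytes.
HEADER_FMT = ">HHIHHBBBB"
HEADER_SIZE = struct.calcsize(HEADER_FMT)  # 16


class SomeIpHeader(NamedTuple):
    service_id: int
    method_id: int
    length: int  # bytes from Request ID up to the end of the message
    client_id: int
    session_id: int
    protocol_version: int
    interface_version: int
    message_type: int
    return_code: int


def pack_message(service_id, method_id, client_id, session_id,
                 interface_version, message_type, return_code,
                 payload=b"", protocol_version=1):
    """Build a SOME/IP message (16-byte header followed by the payload)."""
    # Length covers the 8 bytes from Request ID to Return Code plus the payload.
    length = 8 + len(payload)
    header = struct.pack(HEADER_FMT, service_id, method_id, length,
                         client_id, session_id, protocol_version,
                         interface_version, message_type, return_code)
    return header + payload


def parse_message(data: bytes):
    """Split raw bytes into a parsed header and its payload."""
    if len(data) < HEADER_SIZE:
        raise ValueError("message shorter than the 16-byte SOME/IP header")
    header = SomeIpHeader(*struct.unpack_from(HEADER_FMT, data))
    # Message ID (4 bytes) and Length (4 bytes) precede the counted bytes.
    end = 8 + header.length
    if end > len(data):
        raise ValueError("Length field exceeds the available bytes")
    return header, data[HEADER_SIZE:end]
```

For example, `pack_message(0x1234, 0x0001, 8, 1, 1, 0x00, 0x00, b"\x01\x02")` builds an 18-byte REQUEST (message type 0x00) whose Length field is 10.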

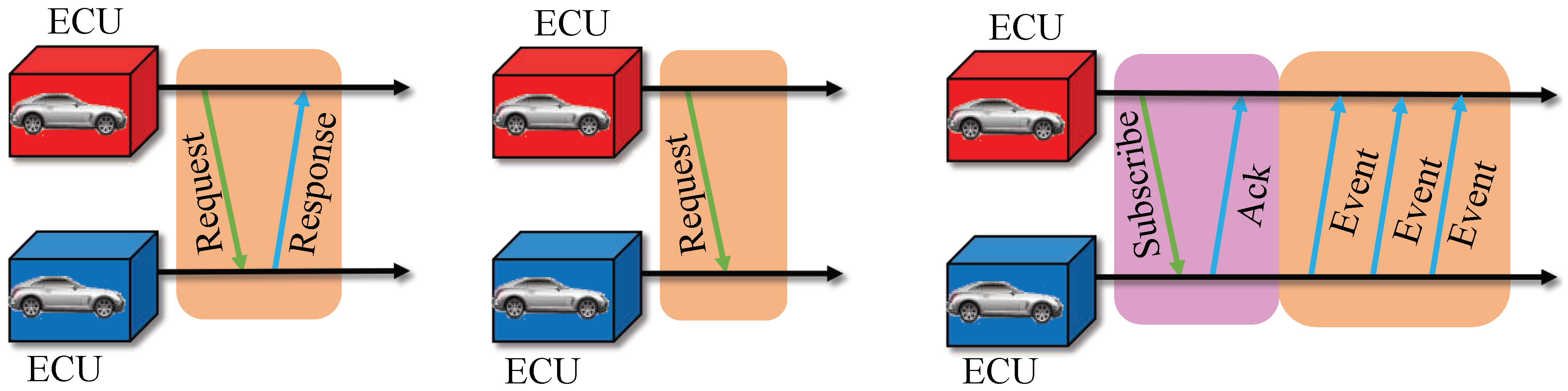

3.1.2. Communication Pattern

- Request/Response: Request/Response is one of the most important communication patterns: one ECU sends a request and another ECU answers with a response. Request/Response communication is a remote procedure call (RPC) consisting of a request and a response [28]. The response should be sent after the request, and the server must not send response messages to clients that have not sent requests.

- Fire&Forget: Requests without a response message are called Fire&Forget [28]: the client sends a request and the server does not reply. This type of request, with message type REQUEST_NO_RETURN, differs from the request used in Request/Response communication.

- Notification Events: In this pattern, the client first sends a subscription request to the server, which responds with a confirmation message; these mechanisms are implemented in SOME/IP-SD [28]. In certain scenarios, the server then publishes events to the clients, typically containing updated values or events that have already occurred. The message type used throughout the publication process is NOTIFICATION.
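The Request/Response rule above (the server must not respond to clients that have not sent requests) lends itself to a simple stateful check. The sketch below tracks outstanding requests keyed by client address, server address, method ID, Client ID, and Session ID, mirroring the src, dst, met, cl, and se columns of the sample SOME/IP records table. The key choice, the `Record` type, and the function name are assumptions for illustration; this is not the paper's detector.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    src: str
    dst: str
    method: int
    client_id: int
    session_id: int
    message_type: str  # "REQUEST", "REQUEST_NO_RETURN", "RESPONSE", "ERROR", "NOTIFICATION"


def unsolicited_responses(records):
    """Return indices of RESPONSE/ERROR messages with no matching earlier REQUEST."""
    pending = {}  # (client, server, method, client_id, session_id) -> open requests
    flagged = []
    for idx, r in enumerate(records):
        if r.message_type == "REQUEST":
            key = (r.src, r.dst, r.method, r.client_id, r.session_id)
            pending[key] = pending.get(key, 0) + 1
        elif r.message_type in ("RESPONSE", "ERROR"):
            # ERROR is a response carrying an error; both travel server -> client,
            # so swap src and dst to find the originating request.
            key = (r.dst, r.src, r.method, r.client_id, r.session_id)
            if pending.get(key, 0) > 0:
                pending[key] -= 1
            else:
                flagged.append(idx)
        # REQUEST_NO_RETURN (Fire&Forget) and NOTIFICATION expect no response.
    return flagged
```

Applied to the sample records, each RESPONSE or ERROR from 10.0.0.1 or 10.0.0.2 pairs with an earlier REQUEST, while the final RESPONSE from 10.1.0.7 has no matching request inside that short excerpt (its request may simply predate the excerpt).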

3.2. Hawkes Process

- Counting process: A counting process, typically denoted as $N(t)$, is a type of stochastic process. It is given by
$$N(t) = \sum_{i \ge 1} \mathbb{1}(t_i \le t),$$
where $t_i$ represents the time points at which events occur and $\mathbb{1}(\cdot)$ is an indicator function that equals 1 if its condition holds and 0 otherwise. Therefore, $N(t)$ represents the number of events occurring within the time interval $[0, t]$. Additionally, the counting process satisfies the following properties [32]:
$$N(0) = 0, \qquad N(t) \in \{0, 1, 2, \dots\}, \qquad N(s) \le N(t) \ \text{for } s \le t,$$
$$P\{N(t + \Delta t) - N(t) \ge 2\} = o(\Delta t),$$
where $P\{\cdot\}$ denotes the probability of the stated number of events occurring within the time interval $(t, t + \Delta t]$.

- Intensity function: The intensity function is commonly denoted as $\lambda(t)$. For instance, in the case of a homogeneous Poisson process, its intensity function is a constant [33]:
$$\lambda(t) = \lambda,$$
where $\lambda$ belongs to $\mathbb{R}^{+}$.
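As a small numerical illustration of these definitions, the sketch below evaluates $N(t)$ from a sorted list of event times and simulates a homogeneous Poisson process with constant intensity $\lambda$ by drawing exponential inter-arrival times. Over a long horizon, $N(t)/t$ should be close to $\lambda$. The function names are illustrative.

```python
import bisect
import random


def counting_process(event_times, t):
    """N(t): number of events with t_i <= t (event_times must be sorted)."""
    return bisect.bisect_right(event_times, t)


def simulate_poisson(rate, horizon, seed=0):
    """Event times of a homogeneous Poisson process on [0, horizon]."""
    rng = random.Random(seed)
    times, t = [], 0.0
    while True:
        t += rng.expovariate(rate)  # inter-arrival times are Exp(rate)
        if t > horizon:
            return times
        times.append(t)
```

For example, with `events = simulate_poisson(5.0, 2000.0)`, the ratio `counting_process(events, 2000.0) / 2000.0` comes out close to the constant intensity 5.0.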

4. HP-LSTM Detection Model

4.1. Overview of Our Model

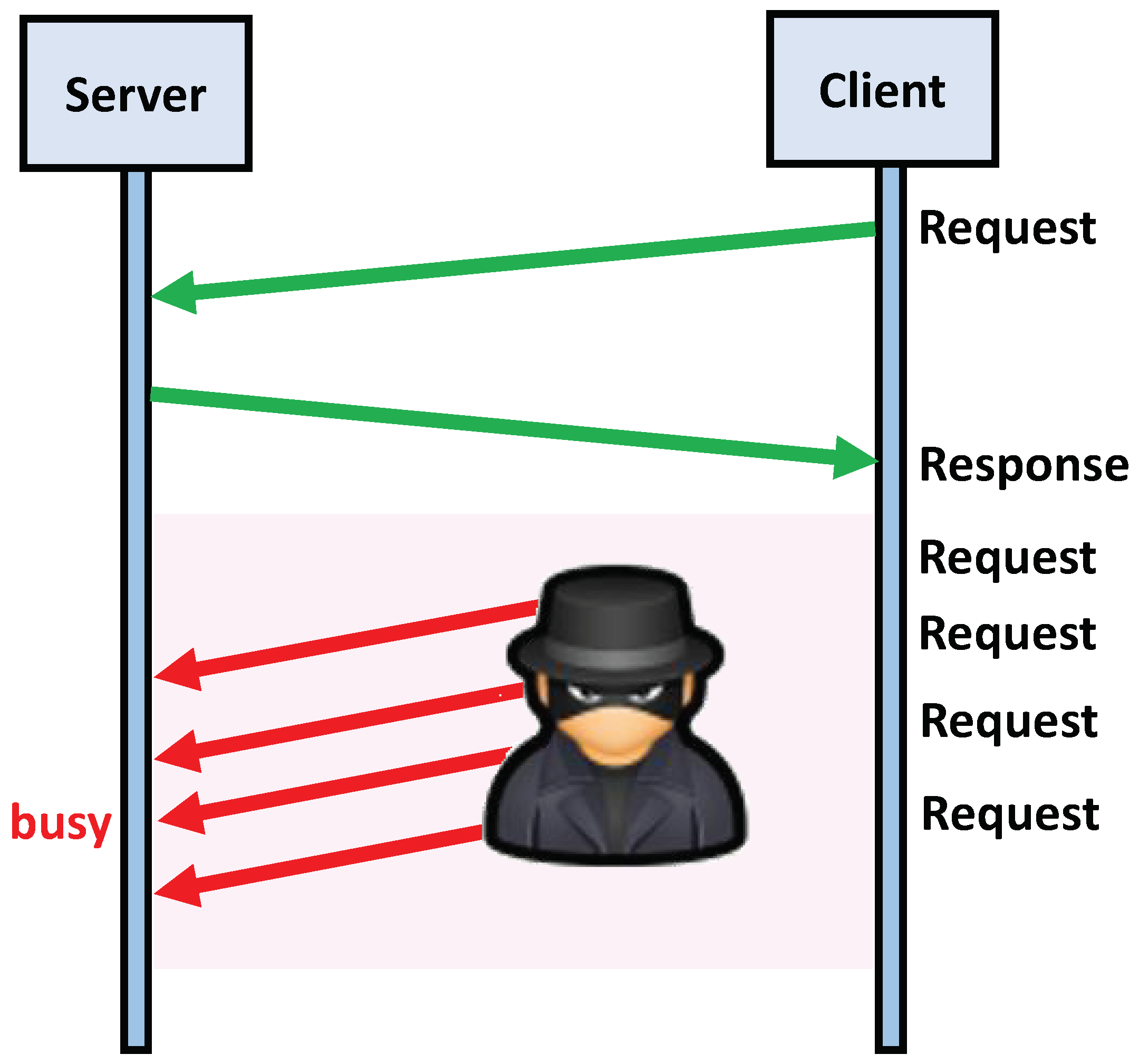

4.2. Cyberattack Modeling Module

4.3. Hawkes Process Formulation Module

- Baseline intensity: represents the baseline rate of event occurrences, indicating the initial trend of packet-forwarding events.

- Amplitude parameter: reflects the abrupt increase in the intensity function at each event occurrence. It signifies the positive influence of each event occurrence on subsequent events.

- Decay parameter: controls the rate at which this influence decays, indicating that the positive influence of past events diminishes over time.
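For reference, these three parameters usually appear in the standard exponential-kernel Hawkes intensity $\lambda(t) = \mu + \sum_{t_i < t} \alpha e^{-\beta (t - t_i)}$. The sketch below uses $\mu$ (baseline), $\alpha$ (amplitude), and $\beta$ (decay) as assumed symbol names and evaluates $\lambda$ at each event both directly and with the common O(n) recursion $A_i = e^{-\beta (t_i - t_{i-1})}(1 + A_{i-1})$. It is a generic illustration of the Hawkes process, not the HP-LSTM module's own formulation.

```python
import math


def hawkes_intensity(t, history, mu, alpha, beta):
    """Direct evaluation of lambda(t) = mu + sum_{t_i < t} alpha * exp(-beta (t - t_i))."""
    return mu + sum(alpha * math.exp(-beta * (t - ti)) for ti in history if ti < t)


def intensities_at_events(times, mu, alpha, beta):
    """lambda just before each event, for sorted, distinct event times.

    Uses A_i = exp(-beta (t_i - t_{i-1})) * (1 + A_{i-1}) with A_1 = 0,
    so that lambda(t_i^-) = mu + alpha * A_i.
    """
    out, a, prev = [], 0.0, None
    for ti in times:
        if prev is not None:
            a = math.exp(-beta * (ti - prev)) * (1.0 + a)
        out.append(mu + alpha * a)
        prev = ti
    return out
```

Each event raises the intensity by $\alpha$, after which the excitation decays back toward $\mu$ at rate $\beta$, so a burst of packets produces a sharp, self-reinforcing rise in $\lambda$. For the exponential kernel, $\alpha/\beta < 1$ is the usual condition for the process to remain stationary rather than explode.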

4.4. Training Module

- HP-LSTM (our best):

- HP-LSTM hted-chm:

- HP-LSTM ht-chm:

- HP-LSTM hte-chp:

5. Experiments

5.1. Experimental Preparation

5.1.1. Dataset

5.1.2. Experimental Environment

5.1.3. Evaluation Metrics

- Accuracy: Accuracy is the proportion of correctly predicted samples among all samples. It is one of the most intuitive performance metrics and is calculated as follows:
$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$
where TP represents true positives (the number of samples correctly predicted as positive), TN represents true negatives (the number of samples correctly predicted as negative), FP represents false positives (the number of samples incorrectly predicted as positive), and FN represents false negatives (the number of samples incorrectly predicted as negative).

- Precision: Precision is the proportion of true positive samples among the samples predicted as positive. It measures how accurately the model predicts positive samples and is calculated as follows:
$$\text{Precision} = \frac{TP}{TP + FP}.$$

- Recall: Recall is the proportion of true positive samples among the actual positive samples. It measures the model's ability to identify positive samples and is calculated as follows:
$$\text{Recall} = \frac{TP}{TP + FN}.$$

- F1 Score: The F1 score is the harmonic mean of precision and recall, combining both into a single measure. It is calculated as follows:
$$F1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}.$$
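The four metrics can be computed directly from confusion-matrix counts, as in the sketch below. The function name is illustrative, and returning 0.0 when a denominator is zero is an assumed convention that this section does not specify.

```python
def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall, and F1 from binary confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

For example, `classification_metrics(90, 80, 10, 20)` gives an accuracy of 0.85, a precision of 0.9, a recall of about 0.818, and an F1 score of about 0.857, which also equals $2TP/(2TP + FP + FN) = 180/210$.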

5.2. Experimental Results

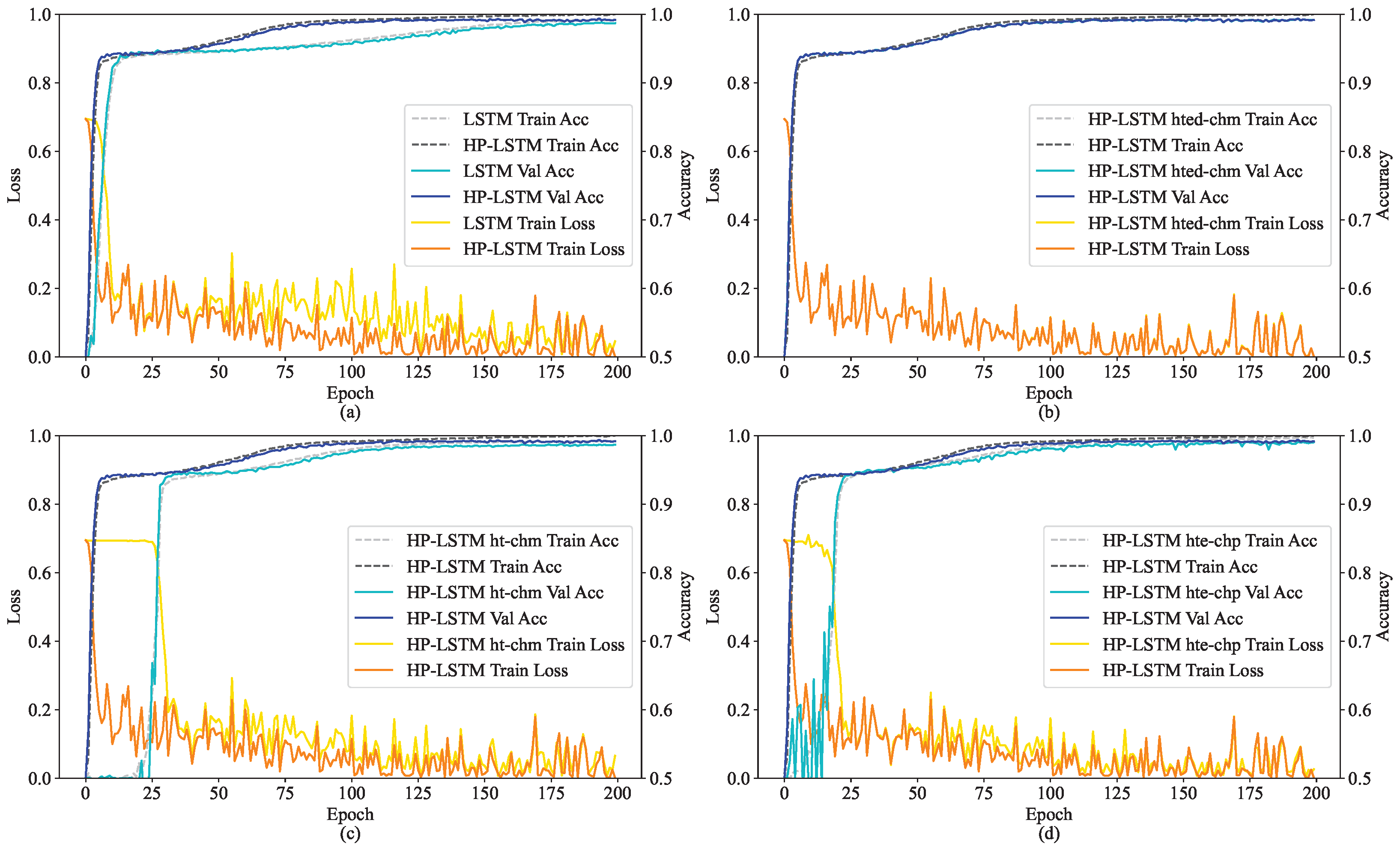

5.2.1. Performance in Training

- In the original Hawkes process, the decay parameter describes how the stimulus from past events weakens over time, and it enters the intensity through an exponential function. When this process is simulated within a deep learning model, keeping the exponential operation reproduces the dynamics described by the Hawkes process and thus benefits learning; removing it disrupts the stimulus pattern that the Hawkes process describes.

- In our proposed computation of the next time step's cell state, applying the Hawkes coefficients by multiplication constrains the outputs of both the forget gate and the input gate, which aligns more closely with the original design purpose of the Hawkes coefficients. Addition constrains the gates more weakly than multiplication and actually increases the learning burden on the model.
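To make the multiplication-versus-addition distinction concrete, the sketch below implements a toy LSTM cell in plain Python whose cell state is modulated by a Hawkes-style coefficient $\kappa = \mu + \alpha e^{-\beta \Delta t}$, where $\Delta t$ is assumed to be the time gap since the previous packet. The symbols, the class name `HawkesLSTMCell`, the scalar $\kappa$, and where $\kappa$ is applied are all hypothetical choices for illustration; this section does not give the HP-LSTM equations, so the code is one possible reading of the text rather than the authors' implementation.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(w, v):
    """Dense matrix-vector product for lists of lists."""
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


class HawkesLSTMCell:
    """Toy LSTM cell with a Hawkes-style modulation of the cell state (hypothetical)."""

    def __init__(self, input_size, hidden_size, mu=1.0, alpha=0.5, beta=1.0,
                 mode="mul", seed=0):
        if mode not in ("mul", "add"):
            raise ValueError("mode must be 'mul' or 'add'")
        rng = random.Random(seed)
        n = input_size + hidden_size
        # One weight matrix per gate: input (i), forget (f), candidate (g), output (o).
        self.w = {k: [[rng.uniform(-0.1, 0.1) for _ in range(n)]
                      for _ in range(hidden_size)] for k in "ifgo"}
        self.b = {k: [0.0] * hidden_size for k in "ifgo"}
        self.mu, self.alpha, self.beta, self.mode = mu, alpha, beta, mode

    def step(self, x, h, c, dt):
        """One time step; dt is the assumed gap since the previous event."""
        z = list(x) + list(h)
        pre = {k: [s + b for s, b in zip(matvec(self.w[k], z), self.b[k])]
               for k in "ifgo"}
        i = [sigmoid(v) for v in pre["i"]]
        f = [sigmoid(v) for v in pre["f"]]
        g = [math.tanh(v) for v in pre["g"]]
        o = [sigmoid(v) for v in pre["o"]]
        # Hawkes-style coefficient: baseline plus exponentially decaying excitation.
        kappa = self.mu + self.alpha * math.exp(-self.beta * dt)
        c_lstm = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, g)]
        if self.mode == "mul":
            # Rescales the forget-gate and input-gate contributions together.
            c_new = [kappa * v for v in c_lstm]
        else:
            # Shifts the cell state without constraining either gate's output.
            c_new = [v + kappa for v in c_lstm]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new
```

With $\alpha = 0$, setting $\mu = 1$ in the multiplicative mode or $\mu = 0$ in the additive mode reduces the cell to a standard LSTM step. Otherwise, the multiplicative mode scales the forget-gate and input-gate terms jointly, whereas the additive mode only offsets their sum.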

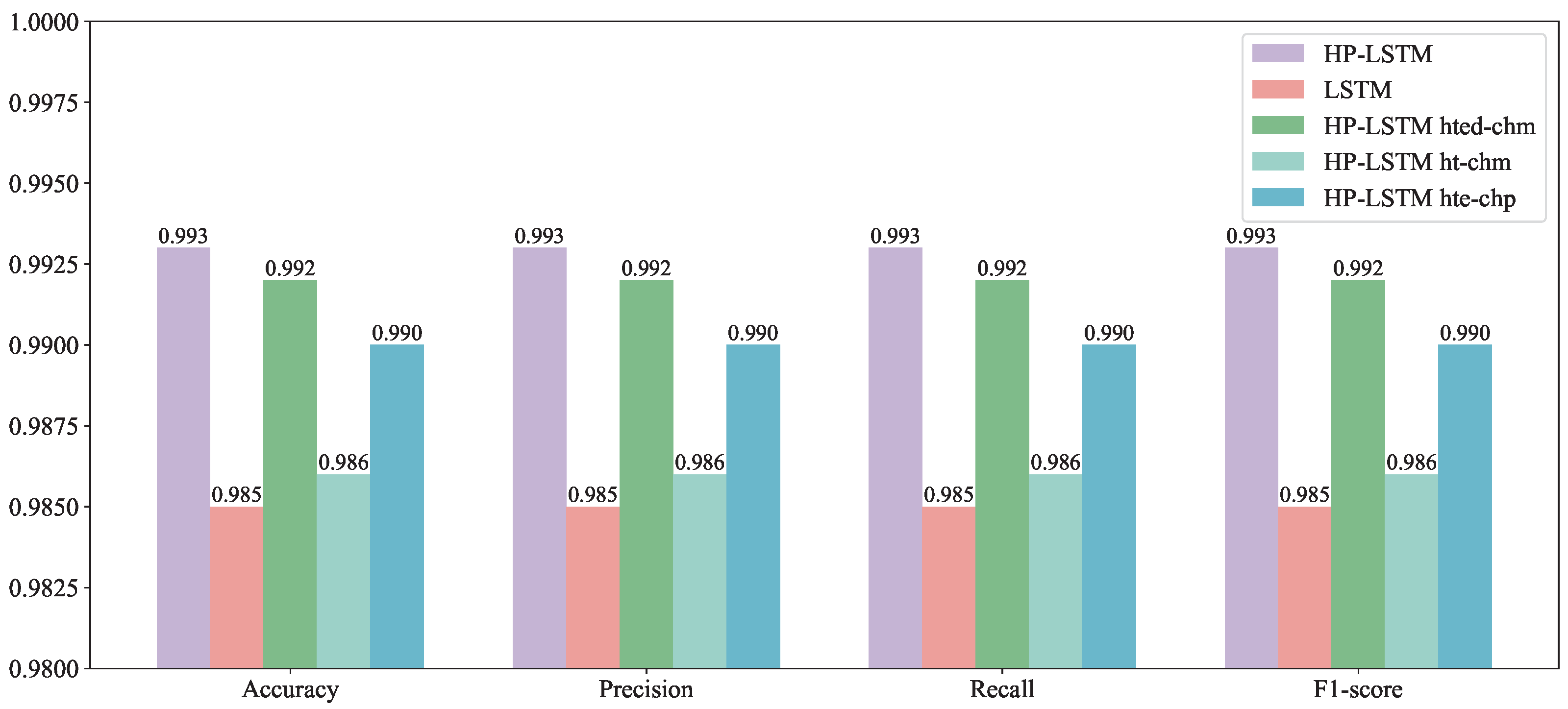

5.2.2. Model Evaluation

6. Discussion

6.1. Application

6.2. Limitations

- Dataset generation: We used a mature SOME/IP generator to produce simulated datasets for our experiments. Because SOME/IP was proposed by BMW and is not yet widely deployed, research on SOME/IP relies mostly on simulated data. Open-source datasets are currently scarce, and most resources provide only generators or simulators. As a result, we could not compare our results on benchmark datasets.

- Single attack: In real-world scenarios, attackers often employ more complex methods to achieve their objectives. Because our detection model targets a single type of attack (DDoS), its coverage is limited. We intend to extend our model to a broader range of attacks in future research.

- Single communication protocol: To implement their various functions, connected and autonomous vehicles often employ several communication protocols, such as CAN, MOST, FlexRay, LIN, and Ethernet. Attackers typically execute attacks that span multiple in-vehicle communication protocols within a comprehensive attack chain. Because we focus on a single protocol, SOME/IP, our model may fail to capture anomalies that are reflected only in data from other communication protocols.

- Potential pitfalls: Owing to the limited availability of datasets, obtaining actual communication data from in-vehicle networks proved challenging, so we used a generator to produce simulated data for all of our experiments. We employed a mature generator to simulate real attack scenarios as faithfully as possible. Moreover, the datasets used by researchers, including ours, are generated with open-source tools such as the SOME/IP generator, which may introduce sampling bias [46].

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, Z.; Wei, H.; Wang, J.; Zeng, X.; Chang, Y. Security issues and solutions for connected and autonomous vehicles in a sustainable city: A survey. Sustainability 2022, 14, 12409.

- Sun, X.; Yu, F.R.; Zhang, P. A survey on cyber-security of connected and autonomous vehicles (CAVs). IEEE Trans. Intell. Transp. Syst. 2021, 23, 6240–6259.

- Liu, Q.; Li, X.; Sun, K.; Li, Y.; Liu, Y. SISSA: Real-time Monitoring of Hardware Functional Safety and Cybersecurity with In-vehicle SOME/IP Ethernet Traffic. arXiv 2024, arXiv:2402.14862.

- Bi, R.; Xiong, J.; Tian, Y.; Li, Q.; Liu, X. Edge-cooperative privacy-preserving object detection over random point cloud shares for connected autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 24979–24990.

- Anbalagan, S.; Raja, G.; Gurumoorthy, S.; Suresh, R.D.; Dev, K. IIDS: Intelligent intrusion detection system for sustainable development in autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2023, 24, 15866–15875.

- He, Q.; Meng, X.; Qu, R.; Xi, R. Machine learning-based detection for cyber security attacks on connected and autonomous vehicles. Mathematics 2020, 8, 1311.

- Parkinson, S.; Ward, P.; Wilson, K.; Miller, J. Cyber threats facing autonomous and connected vehicles: Future challenges. IEEE Trans. Intell. Transp. Syst. 2017, 18, 2898–2915.

- Nie, L.; Ning, Z.; Wang, X.; Hu, X.; Cheng, J.; Li, Y. Data-driven intrusion detection for intelligent internet of vehicles: A deep convolutional neural network-based method. IEEE Trans. Netw. Sci. Eng. 2020, 7, 2219–2230.

- Kim, J.H.; Seo, S.H.; Hai, N.T.; Cheon, B.M.; Lee, Y.S.; Jeon, J.W. Gateway framework for in-vehicle networks based on CAN, FlexRay, and Ethernet. IEEE Trans. Veh. Technol. 2014, 64, 4472–4486.

- Wang, W.; Guo, K.; Cao, W.; Zhu, H.; Nan, J.; Yu, L. Review of Electrical and Electronic Architectures for Autonomous Vehicles: Topologies, Networking and Simulators. Automot. Innov. 2024, 7, 82–101.

- Fraccaroli, E.; Joshi, P.; Xu, S.; Shazzad, K.; Jochim, M.; Chakraborty, S. Timing predictability for SOME/IP-based service-oriented automotive in-vehicle networks. In Proceedings of the 2023 Design, Automation & Test in Europe Conference & Exhibition (DATE), Antwerp, Belgium, 17–19 April 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Alkhatib, N.; Ghauch, H.; Danger, J.L. SOME/IP intrusion detection using deep learning-based sequential models in automotive ethernet networks. In Proceedings of the 2021 IEEE 12th Annual Information Technology, Electronics and Mobile Communication Conference (IEMCON), Vancouver, BC, Canada, 27–30 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 0954–0962.

- Iorio, M.; Buttiglieri, A.; Reineri, M.; Risso, F.; Sisto, R.; Valenza, F. Protecting in-vehicle services: Security-enabled SOME/IP middleware. IEEE Veh. Technol. Mag. 2020, 15, 77–85.

- IEEE 802.1 Working Group. IEEE 802.1 Time-Sensitive Networking Task Group. 2019. Available online: https://1.ieee802.org/tsn/802-1dg/ (accessed on 20 May 2024).

- Adhikary, K.; Bhushan, S.; Kumar, S.; Dutta, K. Hybrid algorithm to detect DDoS attacks in VANETs. Wirel. Pers. Commun. 2020, 114, 3613–3634.

- Kadam, N.; Krovi, R.S. Machine learning approach of hybrid KSVN algorithm to detect DDoS attack in VANET. Int. J. Adv. Comput. Sci. Appl. 2021, 12.

- Dong, C.; Wu, H.; Li, Q. Multiple observation HMM-based CAN bus intrusion detection system for in-vehicle network. IEEE Access 2023, 11, 35639–35648.

- Duan, Y.; Cui, J.; Jia, Y.; Liu, M. Intrusion Detection Method for Networked Vehicles Based on Data-Enhanced DBN. In Proceedings of the International Conference on Algorithms and Architectures for Parallel Processing, Tianjin, China, 20–22 October 2023; Springer: Singapore, 2023; pp. 40–52.

- Jaton, N.; Gyawali, S.; Qian, Y. Distributed neural network-based ddos detection in vehicular communication systems. In Proceedings of the 2023 16th International Conference on Signal Processing and Communication System (ICSPCS), Bydgoszcz, Poland, 6–8 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–9.

- Ullah, S.; Khan, M.A.; Ahmad, J.; Jamal, S.S.; e Huma, Z.; Hassan, M.T.; Pitropakis, N.; Arshad; Buchanan, W.J. HDL-IDS: A hybrid deep learning architecture for intrusion detection in the Internet of Vehicles. Sensors 2022, 22, 1340.

- Ashraf, J.; Bakhshi, A.D.; Moustafa, N.; Khurshid, H.; Javed, A.; Beheshti, A. Novel deep learning-enabled LSTM autoencoder architecture for discovering anomalous events from intelligent transportation systems. IEEE Trans. Intell. Transp. Syst. 2020, 22, 4507–4518.

- Li, Z.; Kong, Y.; Wang, C.; Jiang, C. DDoS mitigation based on space-time flow regularities in IoV: A feature adaption reinforcement learning approach. IEEE Trans. Intell. Transp. Syst. 2021, 23, 2262–2278.

- Dutta, H.S.; Dutta, V.R.; Adhikary, A.; Chakraborty, T. HawkesEye: Detecting fake retweeters using Hawkes process and topic modeling. IEEE Trans. Inf. Forensics Secur. 2020, 15, 2667–2678.

- Qu, Z.; Lyu, C.; Chi, C.H. Mush: Multi-Stimuli Hawkes Process Based Sybil Attacker Detector for User-Review Social Networks. IEEE Trans. Netw. Serv. Manag. 2022, 19, 4600–4614.

- Sun, P.; Li, J.; Bhuiyan, M.Z.A.; Wang, L.; Li, B. Modeling and clustering attacker activities in IoT through machine learning techniques. Inf. Sci. 2019, 479, 456–471.

- Pan, F.; Zhang, Y.; Head, L.; Liu, J.; Elli, M.; Alvarez, I. Quantifying Error Propagation in Multi-Stage Perception System of Autonomous Vehicles via Physics-Based Simulation. In Proceedings of the 2022 Winter Simulation Conference (WSC), Singapore, 11–14 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2511–2522.

- Scalable Service-Oriented MiddlewarE over IP (SOME/IP). Available online: https://some-ip.com/ (accessed on 20 May 2024).

- AUTOSAR. (2022) SOME/IP Protocol Specification. Available online: https://www.autosar.org/fileadmin/standards/R22-11/FO/AUTOSAR_PRS_SOMEIPProtocol.pdf (accessed on 20 May 2024).

- AUTOSAR. (2022) SOME/IP Service Discovery Protocol Specification. Available online: https://www.autosar.org/fileadmin/standards/R22-11/FO/AUTOSAR_PRS_SOMEIPServiceDiscoveryProtocol.pdf (accessed on 20 May 2024).

- Hawkes, A.G. Spectra of some self-exciting and mutually exciting point processes. Biometrika 1971, 58, 83–90.

- Hawkes, A.G. Point spectra of some mutually exciting point processes. J. R. Stat. Soc. Ser. Stat. Methodol. 1971, 33, 438–443.

- Freud, T.; Rodriguez, P.M. The Bell–Touchard counting process. Appl. Math. Comput. 2023, 444, 127741.

- Lima, R. Hawkes processes modeling, inference, and control: An overview. SIAM Rev. 2023, 65, 331–374.

- Wang, P.; Liu, K.; Zhou, Y.; Fu, Y. Unifying human mobility forecasting and trajectory semantics augmentation via hawkes process based lstm. In Proceedings of the 2022 SIAM International Conference on Data Mining (SDM), Alexandria, VA, USA, 28–30 April 2022; SIAM: Philadelphia, PA, USA, 2022; pp. 711–719.

- Cavaliere, G.; Lu, Y.; Rahbek, A.; Stærk-Østergaard, J. Bootstrap inference for Hawkes and general point processes. J. Econom. 2023, 235, 133–165.

- Protter, P.E.; Wu, Q.; Yang, S. Order Book Queue Hawkes Markovian Modeling. SIAM J. Financ. Math. 2024, 15, 1–25.

- Ozaki, T. Maximum likelihood estimation of Hawkes’ self-exciting point processes. Ann. Inst. Stat. Math. 1979, 31, 145–155.

- Zelle, D.; Lauser, T.; Kern, D.; Krauß, C. Analyzing and securing SOME/IP automotive services with formal and practical methods. In Proceedings of the 16th International Conference on Availability, Reliability and Security, Vienna, Austria, 17–20 August 2021; pp. 1–20.

- Casparsen, A.; Sørensen, D.G.; Andersen, J.N.; Christensen, J.I.; Antoniou, P.; Krøyer, R.; Madsen, T.; Gjoerup, K. Closing the Security Gaps in SOME/IP through Implementation of a Host-Based Intrusion Detection System. In Proceedings of the 2022 25th International Symposium on Wireless Personal Multimedia Communications (WPMC), Herning, Denmark, 30 October–2 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 436–441.

- Checkoway, S.; McCoy, D.; Kantor, B.; Anderson, D.; Shacham, H.; Savage, S.; Koscher, K.; Czeskis, A.; Roesner, F.; Kohno, T. Comprehensive experimental analyses of automotive attack surfaces. In Proceedings of the 20th USENIX Security Symposium (USENIX Security 11), San Francisco, CA, USA, 8–12 August 2011.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Gao, J.; Sun, C.; Zhao, H.; Shen, Y.; Anguelov, D.; Li, C.; Schmid, C. Vectornet: Encoding hd maps and agent dynamics from vectorized representation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11525–11533.

- Egomania. (2016) Some-ip Generator. Available online: https://github.com/Egomania/SOME-IP_Generator (accessed on 20 May 2024).

- Alkhatib, N.; Mushtaq, M.; Ghauch, H.; Danger, J.L. Here comes SAID: A SOME/IP Attention-based mechanism for Intrusion Detection. In Proceedings of the 2023 Fourteenth International Conference on Ubiquitous and Future Networks (ICUFN), Paris, France, 4–7 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 462–467.

- Yao, S.; Yang, S.; Li, Y. A Study of Machine Learning Classification Evaluation Metrics Based on Confusion Matrix and Python Implementation. Hans J. Data Min. 2022, 12, 351. Available online: https://www.hanspub.org/journal/PaperInformation?PaperID=56819& (accessed on 20 May 2024).

- Arp, D.; Quiring, E.; Pendlebury, F.; Warnecke, A.; Pierazzi, F.; Wressnegger, C.; Cavallaro, L.; Rieck, K. Dos and Don’ts of Machine Learning in Computer Security. In Proceedings of the 31st USENIX Security Symposium (USENIX Security 22), Boston, MA, USA, 10–12 August 2022; pp. 3971–3988.

| Literature | Type | ML | Che | Ea | Wb | Oa |
|---|---|---|---|---|---|---|
| Adhikary, Kaushik, et al. [15] | AnovaDot and RBFDot SVM | ✓ | × | × | × | × |
| Kadam, Nivedita, and Raja Sekhar Krovi [16] | KNN and SVM | ✓ | × | × | × | × |
| Li, Zhong, et al. [22] | Reinforcement learning-based | ✓ | ✓ | × | × | ✓ |
| Dong, C., et al. [17] | MOHIDS | × | ✓ | × | ✓ | × |
| Jaton, Nicholas, et al. [19] | MLPC | ✓ | × | × | × | × |
| Duan, Yali, et al. [18] | GANs and DBN | ✓ | × | × | × | ✓ |
| Ashraf, Javed, et al. [21] | LSTM | ✓ | ✓ | × | ✓ | ✓ |
| Dutta, H. S., et al. [23] | Hawkes Process | ✓ | ✓ | × | × | × |
| Qu, Z., et al. [24] | Multi-Stimuli Hawkes Process | ✓ | ✓ | × | × | × |
| Sun et al. [25] | Multivariate Hawkes process | ✓ | ✓ | × | × | × |
| Pan, F., et al. [26] | Multi-Stage Hawkes Process | ✓ | ✓ | × | ✓ | × |
| HP-LSTM | Hawkes Process + LSTM | ✓ | ✓ | ✓ | ✓ | ✓ |

| Field | Description |
|---|---|
| Message ID | Message ID is a 32-bit identifier used to identify either an RPC call to a method of an application or an event. |
| Length | The Length field specifies the length in bytes from the Request ID/Client ID to the end of the SOME/IP message. |
| Request ID | The Request ID allows a server and client to differentiate between multiple concurrent uses of the same method, getter, or setter. |
| Protocol Version | The Protocol Version field, occupying eight bits, identifies the SOME/IP header format in use. |
| Interface Version | The Interface Version is an eight-bit field containing the Major Version of the Service Interface. |
| Message Type | The Message Type field distinguishes between different types of messages. According to AUTOSAR, there are approximately ten commonly used message types. |
| Return Code | The Return Code indicates whether a request has been successfully processed. Each message should transmit the Return Code field. |
| Payload | The Payload field carries the actual information content to be transmitted. |

| Time | src | dst | met | len | cl | se | pro | in | Message_Type | Err_Code |
|---|---|---|---|---|---|---|---|---|---|---|
| | 10.1.0.8 | 10.0.0.2 | 1 | 8 | 8 | 1 | 1 | 1 | REQUEST | E_OK |
| | 10.1.0.5 | 10.0.0.1 | 273 | 14 | 5 | 1 | 1 | 1 | REQUEST | E_OK |
| | 10.1.0.2 | 10.0.0.2 | 1 | 13 | 2 | 1 | 1 | 1 | REQUEST | E_OK |
| | 10.1.0.1 | 10.0.0.1 | 273 | 25 | 1 | 1 | 1 | 1 | REQUEST | E_OK |
| | 10.0.0.1 | 10.1.0.5 | 273 | 26 | 5 | 1 | 1 | 1 | RESPONSE | E_OK |
| | 10.1.0.3 | 10.0.0.2 | 1 | 24 | 3 | 1 | 1 | 1 | REQUEST | E_OK |
| | 10.1.0.8 | 10.0.0.2 | 1 | 22 | 8 | 2 | 1 | 1 | REQUEST | E_OK |
| | 10.0.0.2 | 10.1.0.2 | 1 | 25 | 2 | 1 | 1 | 1 | ERROR | E_NOT_READY |
| | 10.1.0.5 | 10.0.0.1 | 273 | 18 | 5 | 2 | 1 | 1 | REQUEST | E_OK |
| | 10.1.0.5 | 10.0.0.2 | 2 | 25 | 5 | 3 | 1 | 1 | NOTIFICATION | E_OK |
| | 10.0.0.2 | 10.1.0.3 | 1 | 8 | 3 | 1 | 1 | 1 | RESPONSE | E_OK |
| | 10.1.0.7 | 10.0.0.7 | 2 | 16 | 7 | 2 | 1 | 1 | RESPONSE | E_OK |

| Class | Training Dataset | Test Dataset |
|---|---|---|
| Normal | 7832 × 128 | 1958 × 128 |
| DoS attack | 7832 × 128 | 1958 × 128 |
| Total | 15,664 × 128 | 3916 × 128 |
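The dataset table can be checked with a few lines of arithmetic: the two classes are balanced, and the training/test split is 80/20. This section does not state whether the common factor 128 denotes the batch size (which is also 128 in the training parameters) or a per-sample window length, so the sketch keeps it as a generic `FACTOR`; the split ratio follows from the leading counts alone.

```python
FACTOR = 128  # common multiplier in every table cell; its meaning is assumed
train = {"Normal": 7832, "DoS attack": 7832}
test = {"Normal": 1958, "DoS attack": 1958}

train_total = sum(train.values())
test_total = sum(test.values())
train_fraction = train_total / (train_total + test_total)

print(train_total, test_total)                    # 15664 3916, matching the Total row
print(train_total * FACTOR, test_total * FACTOR)  # 2004992 501248
print(round(train_fraction, 3))                   # 0.8, i.e. an 80/20 split
```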

| Component | Item | Value |
|---|---|---|
| CPU | System | Ubuntu 22.04 |
| | Architecture | x86_64 |
| | CPU op-mode(s) | 32-bit, 64-bit |
| | Address sizes | 48 bits physical, 48 bits virtual |
| | Byte Order | Little Endian |
| | CPU(s) | 12 |
| | Online CPU(s) list | 0–11 |
| | Vendor ID | AuthenticAMD |
| | Model name | AMD Ryzen 5 4600H with Radeon Graphics |
| | CPU family | 23 |
| | Model | 96 |
| | Thread(s) per core | 2 |
| | Core(s) per socket | 6 |
| | Socket(s) | 1 |
| | Stepping | 1 |
| | CPU max MHz | 3000 |
| | CPU min MHz | 1400 |
| GPU | Model | GeForce RTX 3090 |
| | Memory | 24,576 MiB |

| Training Parameters | Value |
|---|---|
| batch size | 128 |
| epochs | 200 |
| learning rate | |
| weight decay | |
| random seed of torch | 0 |
| random seed of numpy | 0 |

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| HP-LSTM | 0.993 | 0.993 | 0.993 | 0.993 |
| LSTM | 0.985 | 0.985 | 0.985 | 0.985 |
| HP-LSTM hted-chm | 0.992 | 0.992 | 0.992 | 0.992 |
| HP-LSTM ht-chm | 0.986 | 0.986 | 0.986 | 0.986 |
| HP-LSTM hte-chp | 0.990 | 0.990 | 0.990 | 0.990 |

| Model | Params | Params Size (MB) | Input Size (MB) | Time Cost (s) |
|---|---|---|---|---|
| HP-LSTM | 2441351 | 9.75 | 0.01 | 0.000022 |
| LSTM | 2415239 | 9.66 | 0.01 | 0.000019 |
| HP-LSTM hted-chm | 2441863 | 9.76 | 0.01 | 0.000023 |
| HP-LSTM ht-chm | 2441351 | 9.75 | 0.01 | 0.000020 |
| HP-LSTM hte-chp | 2441351 | 9.75 | 0.01 | 0.000021 |
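The Time Cost column can be compared directly with the <1 ms real-time bound from IEEE 802.1DG [14] cited in the introduction. The sketch below assumes that each value is the time per inference in seconds; the unit comes from the table header, but the measurement procedure is not described in this section. It converts the values to microseconds and reports the headroom against the bound.

```python
BUDGET_S = 1e-3  # < 1 ms real-time bound cited from IEEE 802.1DG [14]

time_cost_s = {  # values copied from the Time Cost (s) column
    "HP-LSTM": 0.000022,
    "LSTM": 0.000019,
    "HP-LSTM hted-chm": 0.000023,
    "HP-LSTM ht-chm": 0.000020,
    "HP-LSTM hte-chp": 0.000021,
}

for model, t in time_cost_s.items():
    status = "OK" if t < BUDGET_S else "over budget"
    print(f"{model:<17} {t * 1e6:5.1f} us  {BUDGET_S / t:5.0f}x headroom  {status}")

overhead = time_cost_s["HP-LSTM"] - time_cost_s["LSTM"]
print(f"HP-LSTM vs. LSTM: +{overhead * 1e6:.1f} us per inference")
```

Under that assumption, HP-LSTM uses about 22 µs of the 1000 µs budget (roughly 2.2%), and the Hawkes components add about 3 µs over the plain LSTM.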

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, X.; Li, R.; Liu, Y. HP-LSTM: Hawkes Process–LSTM-Based Detection of DDoS Attack for In-Vehicle Network. Future Internet 2024, 16, 185. https://doi.org/10.3390/fi16060185