Real-Time Farm Surveillance Using IoT and YOLOv8 for Animal Intrusion Detection

Abstract

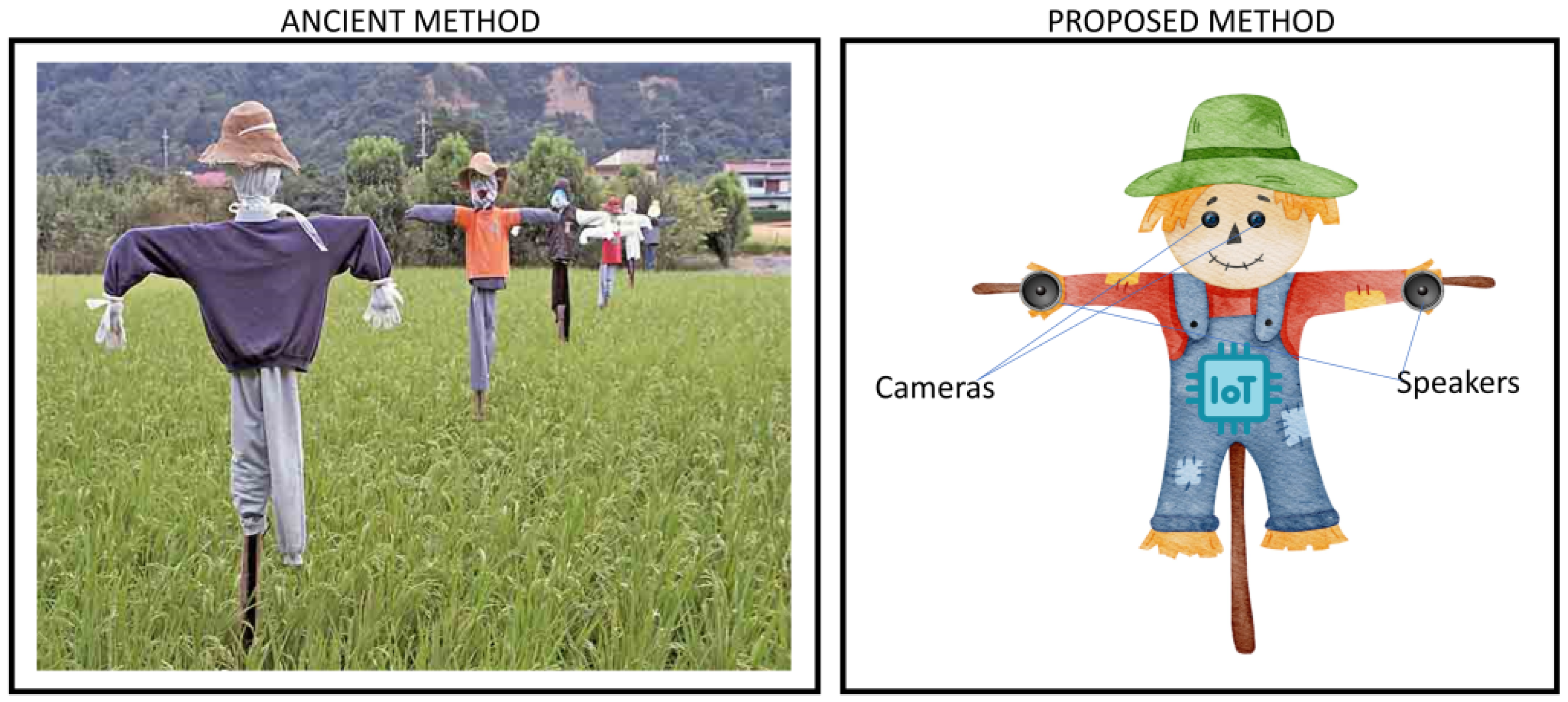

1. Introduction

1.1. Literature Review

1.2. Contributions of This Work

- Advanced Object Identification Algorithms: YOLOv8, a state-of-the-art object detection algorithm, is utilized to enhance the accuracy and speed of identifying both animals and humans in real time. Its advanced identification mechanism is attributed to its ability to process high-resolution images with minimal latency, even in challenging conditions such as low light or occlusion. The model’s multi-scale detection capability ensures reliable performance across varying sizes and distances of detected objects.

- Cloud-Based Real-Time Monitoring: The cloud-based architecture of this system supports real-time monitoring by processing data from multiple IoT sensors (cameras) simultaneously. The system’s advanced scalability ensures that, as additional sensors are integrated, the cloud infrastructure automatically adjusts to accommodate increased data loads without compromising performance. Real-time alerts are generated and delivered through cloud-based AI models that assess the likelihood of intrusion, continuously learning from new data inputs.

- Efficient Data Processing: Cloud connectivity enables seamless data processing, so farmers receive timely notifications and can manage their crops more effectively. Problems are therefore handled as soon as they are detected.

- Adaptability Beyond Farms: The system’s adaptability extends its utility to a wide range of industries, including railway track monitoring, border security, wildlife protection, forest fire detection, disaster response, industrial safety, environmental monitoring, pest control in agriculture, and oil and gas pipeline inspection. This versatility could change how monitoring is approached across these sectors.

- Speaker Activation for Intruder Deterrence: Once an intrusion is detected, the system activates a speaker to deter the intruder. This feature adds an extra layer of security.

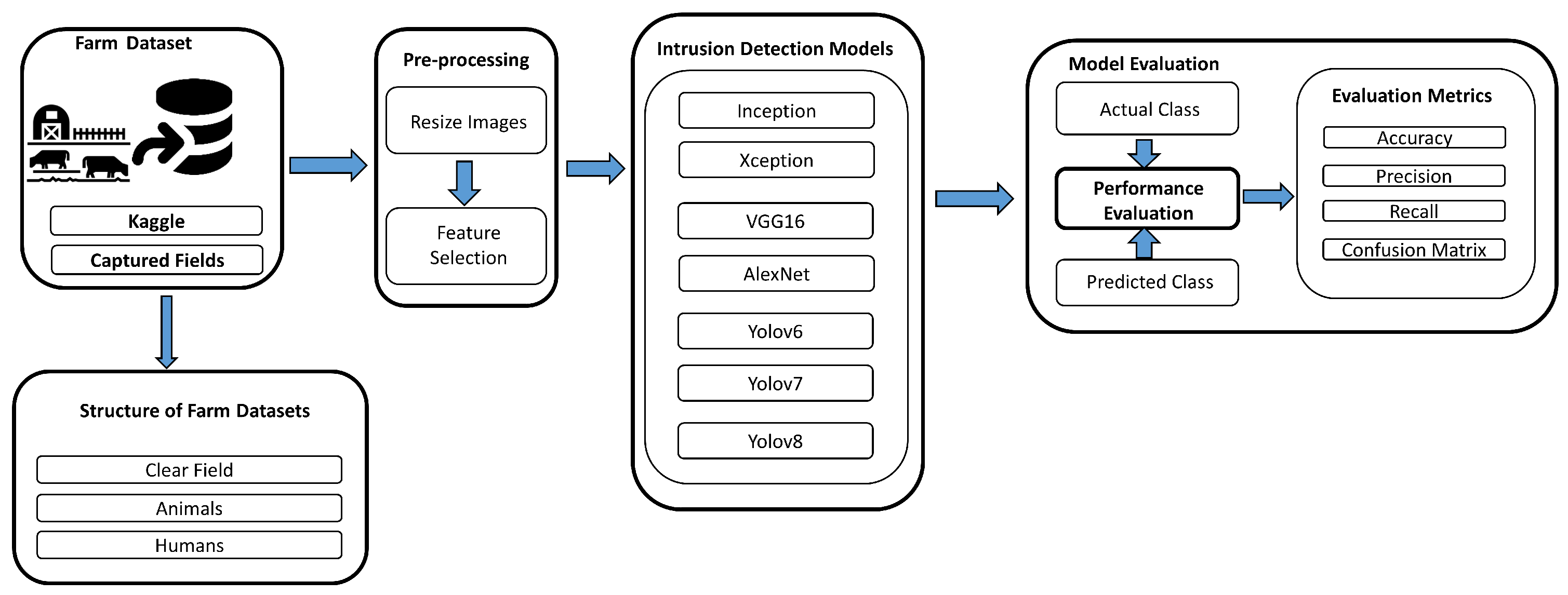

2. Methodology and Implementation

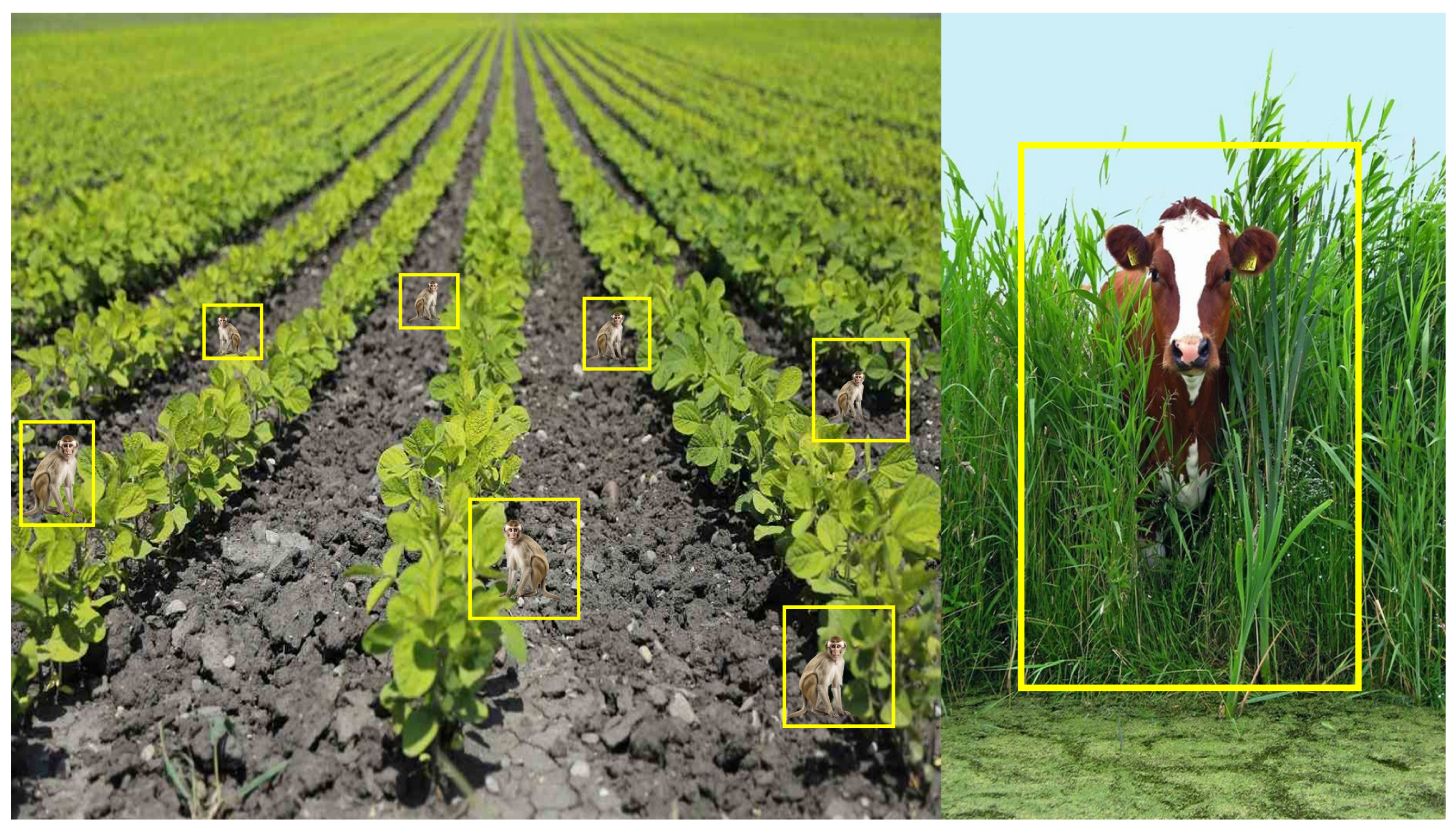

2.1. Intrusion Detection

2.1.1. Data Collection

2.1.2. Model Selection

2.1.3. Inception

2.1.4. Xception

2.1.5. VGG16

2.1.6. Alex Net

2.1.7. Versions of YOLO Used

- YOLOv6: A result of the development of the YOLO (You Only Look Once) family, YOLOv6 is renowned for its ability to accurately and quickly detect objects in real time. With an emphasis on more effective feature extraction and computational efficiency, YOLOv6 introduces network architectural advancements that yield a strong performance across various object identification applications. Its streamlined design makes it a valuable asset in the realm of efficient computer vision applications, enabling rapid deployment in systems requiring real-time analysis, such as autonomous vehicles or surveillance.

- YOLOv7: You Only Look Once (YOLO) is a prominent real-time object detection system that has transformed the field of computer vision. The original YOLO was introduced by Joseph Redmon and his colleagues; YOLOv7 was developed by Chien-Yao Wang, Alexey Bochkovskiy, and Hong-Yuan Mark Liao, and is distinguished by its speed and accuracy in recognizing multiple objects within images or video streams. The evolution toward YOLOv7 has been characterized by a series of incremental improvements, with each iteration enhancing the capabilities of the previous version. This model emerged from collaborative efforts within the computer vision community to address the challenges of object detection. From YOLOv1 to YOLOv7, the models underwent rigorous optimization to achieve better accuracy and speed. Advances in deep learning algorithms and hardware acceleration have contributed significantly to the development of YOLOv7. The architecture of YOLOv7 is notable for its simplicity and efficacy. The model is built on a single deep neural network capable of real-time object recognition. YOLOv7 employs a CNN architecture comprising a backbone built from convolutional and ELAN blocks and a detection head with multiple detection layers. These layers predict bounding boxes, class probabilities, and objectness scores for the objects present in the input image, resulting in a comprehensive list of identified items, complete with confidence ratings and class labels.

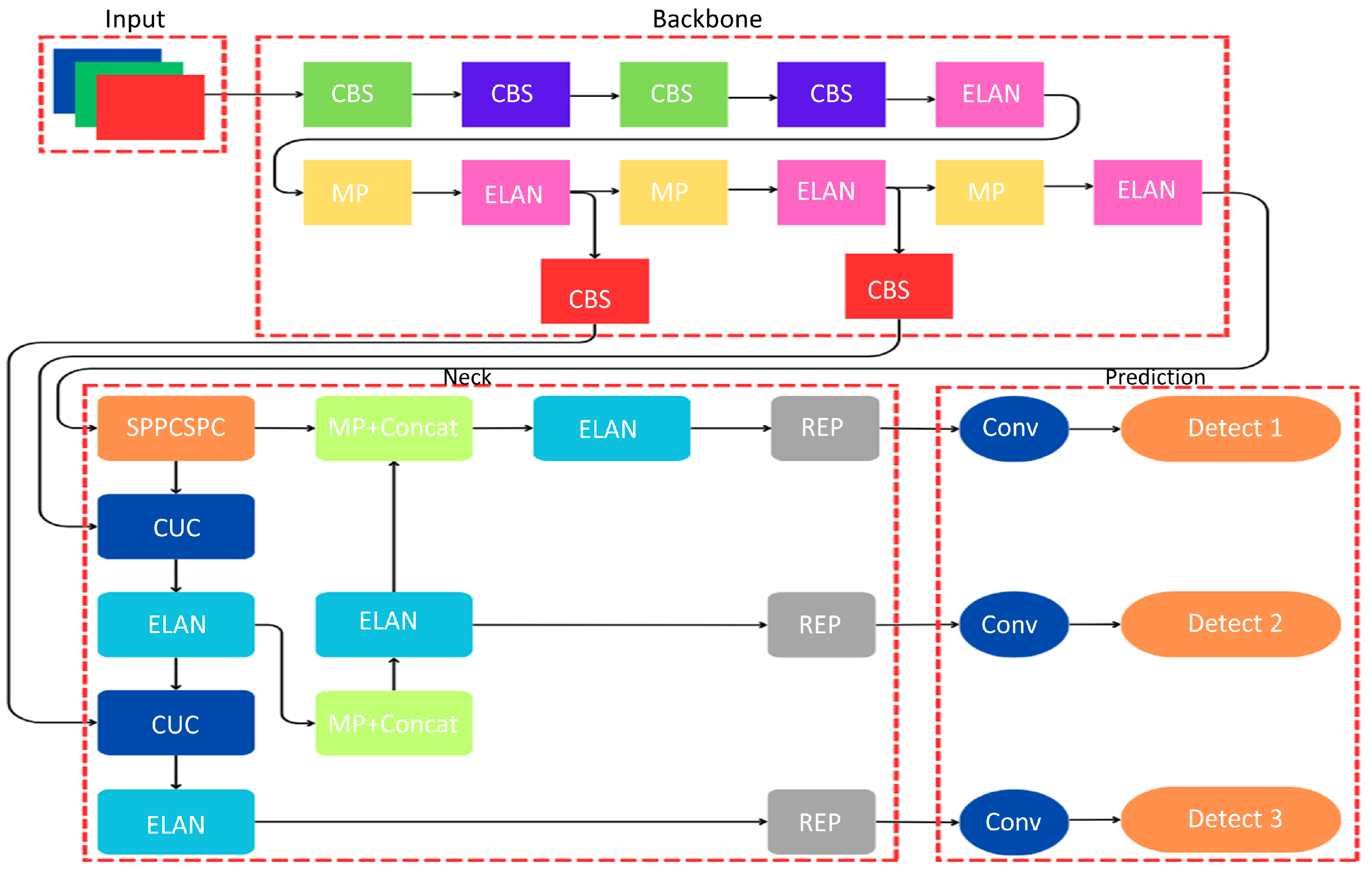

- YOLOv8: The latest iteration of the YOLO series, YOLOv8, further advances real-time object detection. Building on the strengths of its predecessors, YOLOv8 is designed for higher accuracy and efficiency in diverse application contexts. The model incorporates improved feature extraction techniques, resulting in gains in both detection speed and precision.

  The architecture of YOLOv8 features an optimized deep neural network that facilitates rapid object recognition. It includes a refined CNN backbone, which improves the model’s ability to extract relevant features while maintaining computational efficiency. Its detection head predicts bounding boxes and class probabilities; unlike earlier anchor-based versions, it is anchor-free and has no separate objectness branch, and it benefits from improved training methodologies.

  Notably, YOLOv8 excels in real-time applications, making it suitable for environments such as autonomous driving and smart surveillance systems. Its robust performance and adaptability keep it at the forefront of object detection technology.

Figure 8 shows the architecture of the YOLOv7 deep learning model for object recognition. The design has three fundamental components: the backbone, the neck, and the prediction heads.

- Backbone: This section extracts features from the input image by processing it through a series of layers. The layers contain CBS (Convolution-BatchNorm-SiLU/ReLU) blocks, in which each convolution is followed by batch normalization and a nonlinear activation function for stable and efficient training. To reduce spatial dimensions, Max Pooling (MP) layers are interspersed with ELAN blocks, which improve the network’s learning and representational capability.

- Neck: This section further refines the features extracted by the backbone. It integrates features of various sizes using modules such as CUC (possibly a cross-stage feature fusion block), ELAN, and REP (most likely re-parameterized convolution, RepConv). The SPPCSPC block is likely a spatial pyramid pooling module that pools features at different scales and concatenates them to capture contextual information at several levels.

- Prediction: The last stage makes object detection predictions from these refined features. It consists of a sequence of convolutional layers and detection blocks (Detect 1, Detect 2, and Detect 3) that most likely correspond to the different scales at which objects are detected, which allows the network to recognize objects of different sizes. The ‘Detect’ blocks produce predictions for their respective scales, while the ‘Conv’ layers adjust the dimensionality of the features for the final prediction.

YOLOv7 is widely used for real-time object detection. Its speed and accuracy make it well suited to situations that require fast decision-making based on visual input. It has found applications in a variety of sectors, including autonomous cars, surveillance systems, and, in this work, the SFSS.

YOLOv7’s key strengths are its ability to perform object detection in real time, its accuracy in recognizing objects across many classes, and its adaptability to various hardware configurations. Within the SFSS, YOLOv7 allows for the fast and precise detection of intruders in agricultural areas, whether they are animals or people. Its ability to scan live video feeds and issue warnings immediately contributes considerably to protecting crops and reducing damage or theft. The SFSS’s use of YOLOv7 reflects the model’s suitability for real-time object detection in complex, dynamic contexts.

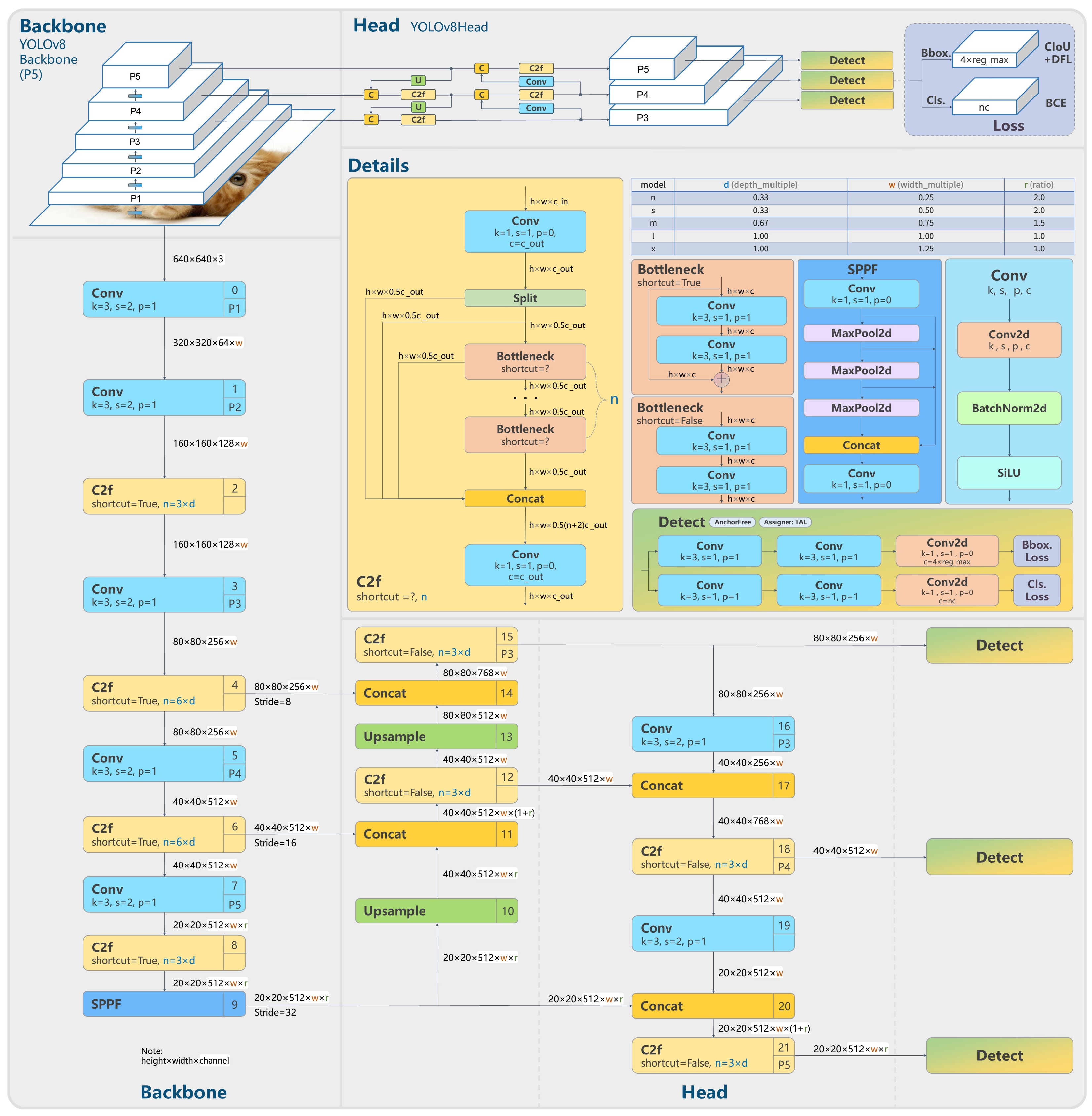

- YOLOv8: YOLOv8 is a major advancement in the YOLO series. Its architecture and training methods provide high accuracy in object recognition and localization. It was designed for high-fidelity detection, meaning it can identify fine details in challenging visual settings, and it is used in applications such as public safety and traffic management. A detailed architectural diagram of YOLOv8 is shown in Figure 9. The following observations are drawn from that diagram:

- Backbone: To recognize objects of varying sizes, the model uses a multi-scale approach, analyzing the input image at several resolutions (P1 to P5). To capture complex information, the backbone employs a sequence of convolutional (Conv) layers with different kernel sizes, strides, and padding. These layers gradually reduce the spatial dimensions of the feature maps while increasing their depth (channels). The C2F blocks most likely perform cross-stage feature fusion, combining low-level and high-level features into a more comprehensive representation of the input data.

- Head (YOLOv8Head): The head is made up of several detection blocks that predict object classes and bounding boxes at various scales (P3, P4, P5). The architecture processes these scales in parallel, which lets it handle large objects effectively while retaining good resolution for small objects. Figure 9 indicates that CIoU and BCE loss functions are used during training to improve localization and classification accuracy.

- Details: Figure 9 also details operations such as Bottleneck, SPPF, and Conv, which are crucial parts of the model that keep computation efficient and refine feature maps. Skip connections and upsampling fuse information across multiple levels of the feature hierarchy, which improves the model’s capacity to retain spatial information. The use of BatchNorm2d and SiLU (Sigmoid Linear Unit) shows that normalization and a nonlinear activation function are applied to enable faster and more stable training.

- Detect (Detection Layers): Each detection block applies several convolutions before generating its final predictions, and the detection layers are tailored to the scale of the features they receive. These layers output class probability predictions and bounding-box regression values (YOLOv8 is anchor-free, so boxes are predicted directly rather than as adjustments to anchor boxes), which determine the location and class of objects in the image.

The YOLOv8 architecture highlights the importance of multi-scale processing, feature fusion, and scale-specific detection layers. It is an effective network built for fast, precise object recognition, and this design is what allows YOLOv8 to perform at the cutting edge of object detection tasks.
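To make the two loss terms named for Figure 9 more concrete, the following is a minimal sketch of how CIoU (for one pair of boxes) and binary cross-entropy (for one class probability) can be computed. It uses only the standard definitions of these quantities; it is not the training code of any particular YOLOv8 implementation, and the function names `iou_and_ciou` and `bce` are hypothetical.

```python
import math


def iou_and_ciou(box_a, box_b, eps=1e-9):
    """Return (IoU, CIoU) for two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Intersection and union areas.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    wa, ha = ax2 - ax1, ay2 - ay1
    wb, hb = bx2 - bx1, by2 - by1
    union = wa * ha + wb * hb - inter + eps
    iou = inter / union

    # Squared distance between the two box centres.
    rho2 = ((ax1 + ax2) / 2 - (bx1 + bx2) / 2) ** 2 \
        + ((ay1 + ay2) / 2 - (by1 + by2) / 2) ** 2

    # Squared diagonal of the smallest box enclosing both boxes.
    enc_w = max(ax2, bx2) - min(ax1, bx1)
    enc_h = max(ay2, by2) - min(ay1, by1)
    c2 = enc_w ** 2 + enc_h ** 2 + eps

    # Aspect-ratio consistency term and its weight.
    v = (4 / math.pi ** 2) * (
        math.atan(wb / (hb + eps)) - math.atan(wa / (ha + eps))
    ) ** 2
    alpha = v / (1 - iou + v + eps)

    ciou = iou - rho2 / c2 - alpha * v
    return iou, ciou


def bce(p, y, eps=1e-7):
    """Binary cross-entropy for predicted probability p and label y (0 or 1)."""
    p = min(max(p, eps), 1 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))
```

The box loss is then typically taken as `1 - ciou`. Unlike plain IoU, CIoU stays informative for boxes that do not overlap, because the centre-distance term still changes as the predicted box moves toward the target.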

2.1.8. Comparison of the Models

2.1.9. Model Deployment

2.2. Cloud Integration

- Data Storage and Retrieval: Cloud servers act as repositories for the continuous video feed acquired from agricultural regions. They store large amounts of video data efficiently, providing an archive that can be accessed as required. This role is important not only for record-keeping but also for post-incident analysis and investigation.

- Intrusion Detection in the Cloud: The video stream coming from the agricultural field undergoes intrusion detection inside the cloud environment. To evaluate this stream in real time, the system employs AI models deployed in the cloud. This method detects unwanted intrusions with greater precision and speed, ensuring that potential risks are discovered and addressed as soon as possible.

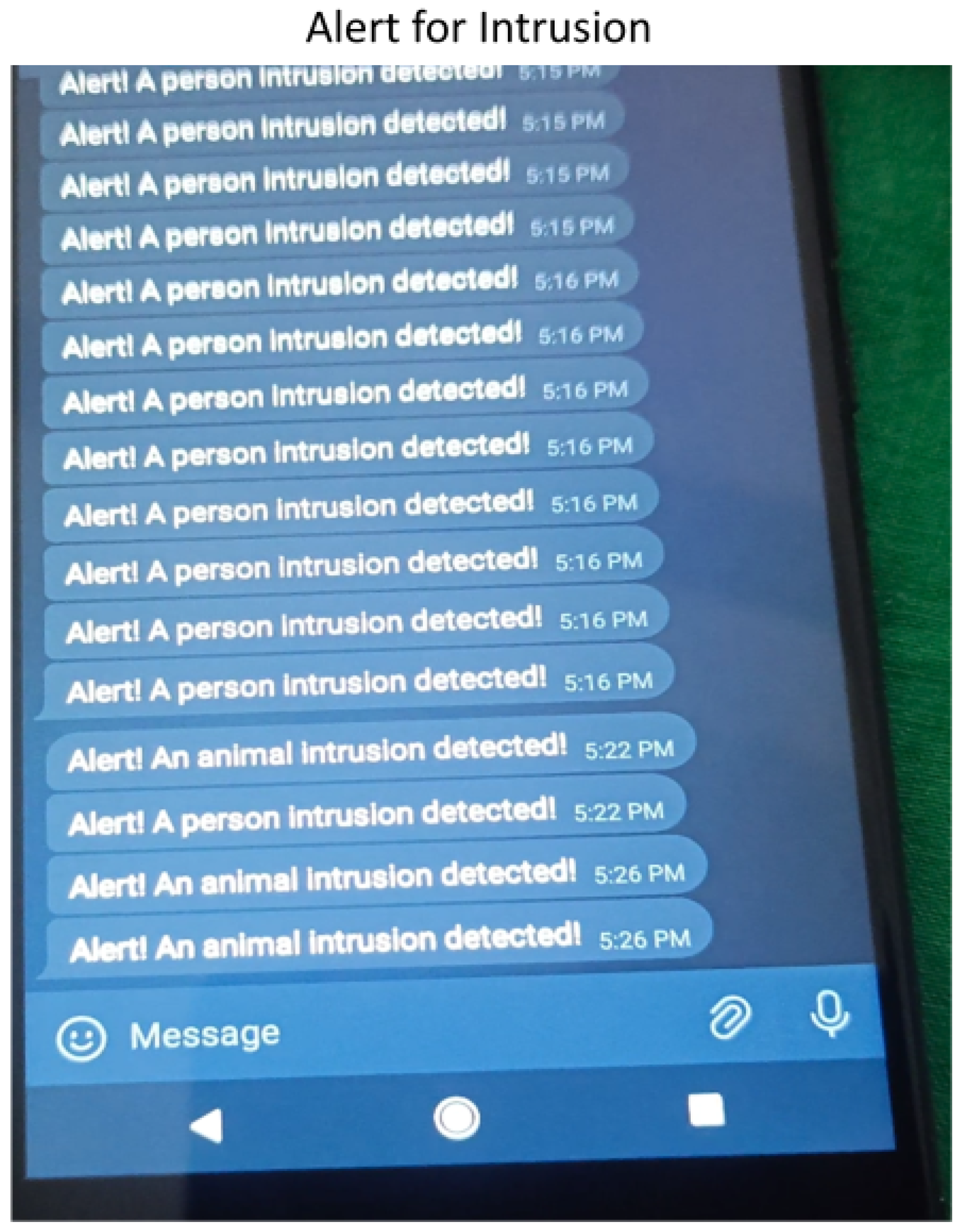

- Real-Time Alarm Mechanisms: Cloud integration extends to the alarm system, which is central to the proactive defensive approach of the SFSS. When an intrusion is detected, the cloud server sends out real-time signals that serve two functions. First, they activate on-field measures through the ESP32, which warn intruders and deter further unauthorized activity. Second, and perhaps more importantly, SMS notifications are sent to the landowner. This real-time communication allows rapid and informed responses to detected threats.
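The two-way fan-out described above (an on-field trigger plus an SMS) can be sketched as follows. This is an illustration under stated assumptions, not the system's actual cloud code: the `Detection` fields, the `dispatch_alerts` function, the 0.5 confidence threshold, and the two callables standing in for the ESP32 link and the SMS gateway are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class Detection:
    label: str         # e.g. "animal" or "person" (hypothetical class names)
    confidence: float  # model score in [0, 1]
    camera_id: str     # which camera produced the frame


def dispatch_alerts(
    detections: Iterable[Detection],
    trigger_device: Callable[[str], None],
    send_sms: Callable[[str], None],
    min_confidence: float = 0.5,  # assumed threshold, not from the paper
) -> int:
    """Send both alerts for every confident detection; return how many fired."""
    alerted = 0
    for det in detections:
        if det.confidence < min_confidence:
            continue  # ignore weak detections to limit false alarms
        trigger_device(det.camera_id)  # first function: on-field deterrent
        send_sms(                      # second function: notify the landowner
            f"Intrusion: {det.label} at {det.camera_id} "
            f"({det.confidence:.0%})"
        )
        alerted += 1
    return alerted
```

Passing the two actions in as callables keeps the decision logic independent of the transport, so the same function can be exercised in a test with plain list-appending stubs.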

2.3. Alarm System

- Speaker Deployment for Auditory Deterrence: Speakers positioned strategically throughout the agricultural field are central to this auditory deterrence method. They are ready to react as soon as the system triggers them, and they play sounds meant to frighten and repel intruders, keeping unwelcome visitors out of the area.

- Integration with ESP32 for an Instant Response: An ESP32, a powerful microcontroller, coordinates the auditory deterrent. As soon as the system detects an intruder, whether animal or human, the cloud server sends a signal to the ESP32, which triggers the speaker. Instant activation is critical because it provides a quick and forceful response to possible threats.
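The trigger path from cloud signal to speaker can be illustrated with a small simulation of the device-side logic. Real ESP32 firmware would be written against the board's own SDK; the sketch below is plain Python, and the JSON command format (`action`, `duration_s`), the class name `SpeakerController`, and the default duration are assumptions made for illustration only.

```python
import json


class SpeakerController:
    """Simulates turning a speaker on for a fixed time after an alarm command."""

    def __init__(self, default_duration_s=5.0):
        self.default_duration_s = default_duration_s
        self.off_at = None  # time at which the speaker should go silent

    def on_message(self, payload, now):
        """Handle one command string; return True if the speaker was started."""
        try:
            cmd = json.loads(payload)
        except json.JSONDecodeError:
            return False  # malformed message: ignore it
        if not isinstance(cmd, dict) or cmd.get("action") != "alarm":
            return False  # not an alarm command
        try:
            duration = float(cmd.get("duration_s", self.default_duration_s))
        except (TypeError, ValueError):
            return False  # unusable duration value
        self.off_at = now + duration
        return True

    def is_on(self, now):
        """True while the alarm sound should still be playing."""
        return self.off_at is not None and now < self.off_at
```

Taking the current time as an argument, rather than reading a clock inside the class, makes the behaviour easy to check without waiting in real time.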

2.4. SMS Alert

- Ensure Prompt Intervention: Timely notifications are crucial in contemporary farming, where remote monitoring is required because a single farmer may cover enormous expanses of farmland. The SMS-based alert system meets this need directly, keeping the farm owner up to date in real time regardless of their location. The benefit of this fast alert system is that it allows for quick responses and mitigates possible crop damage.

- Integration of AI Model with Cloud System: SMS-based alerts are launched by the AI model deployed in the cloud, which performs the intrusion detection. When the AI model detects an irregularity or encroachment on agricultural land, it immediately initiates the SMS alert system, which connects to the cloud system to begin message transmission.

- Farmer Empowerment: Beyond the technological elements, the real value of the SMS-based alert system lies in the farmer empowerment it provides. The system acts as a watchful and proactive guardian, ensuring that farm owners have the information they need to take action to safeguard their agricultural interests. By bridging physical distance and providing real-time information, it instills a feeling of security and trust in the agricultural community.

- Security and Prevention: The SMS-based alert system serves a dual purpose in terms of prevention. First, it is an important instrument for countering risks from intruders, both animal and human, thus safeguarding crops. Second, it creates a climate of heightened security on the farmland, since trespassers may be discouraged by the knowledge that their presence is being observed and instantly reported.
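As a sketch of the SMS step, the code below composes a short alert text and limits how many messages are sent within a time window, so that one animal lingering in view does not flood the owner's phone. The rate limit is an assumption added for illustration and is not described in the source; `SmsThrottle`, `format_sms`, and the message wording are hypothetical, and actual delivery would go through whichever SMS gateway the deployment uses.

```python
from collections import deque


class SmsThrottle:
    """Allow at most max_messages within any sliding window of window_s seconds."""

    def __init__(self, max_messages=3, window_s=60.0):
        self.max_messages = max_messages
        self.window_s = window_s
        self._sent = deque()  # timestamps of messages already allowed

    def allow(self, now):
        # Drop timestamps that have fallen out of the window.
        while self._sent and now - self._sent[0] >= self.window_s:
            self._sent.popleft()
        if len(self._sent) >= self.max_messages:
            return False
        self._sent.append(now)
        return True


def format_sms(label, camera_id, timestamp):
    """Build a short, single-segment alert text."""
    return f"SFSS alert: {label} detected by {camera_id} at {timestamp}"
```

A caller would check `throttle.allow(now)` before handing the formatted text to the gateway, and skip the send when it returns `False`.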

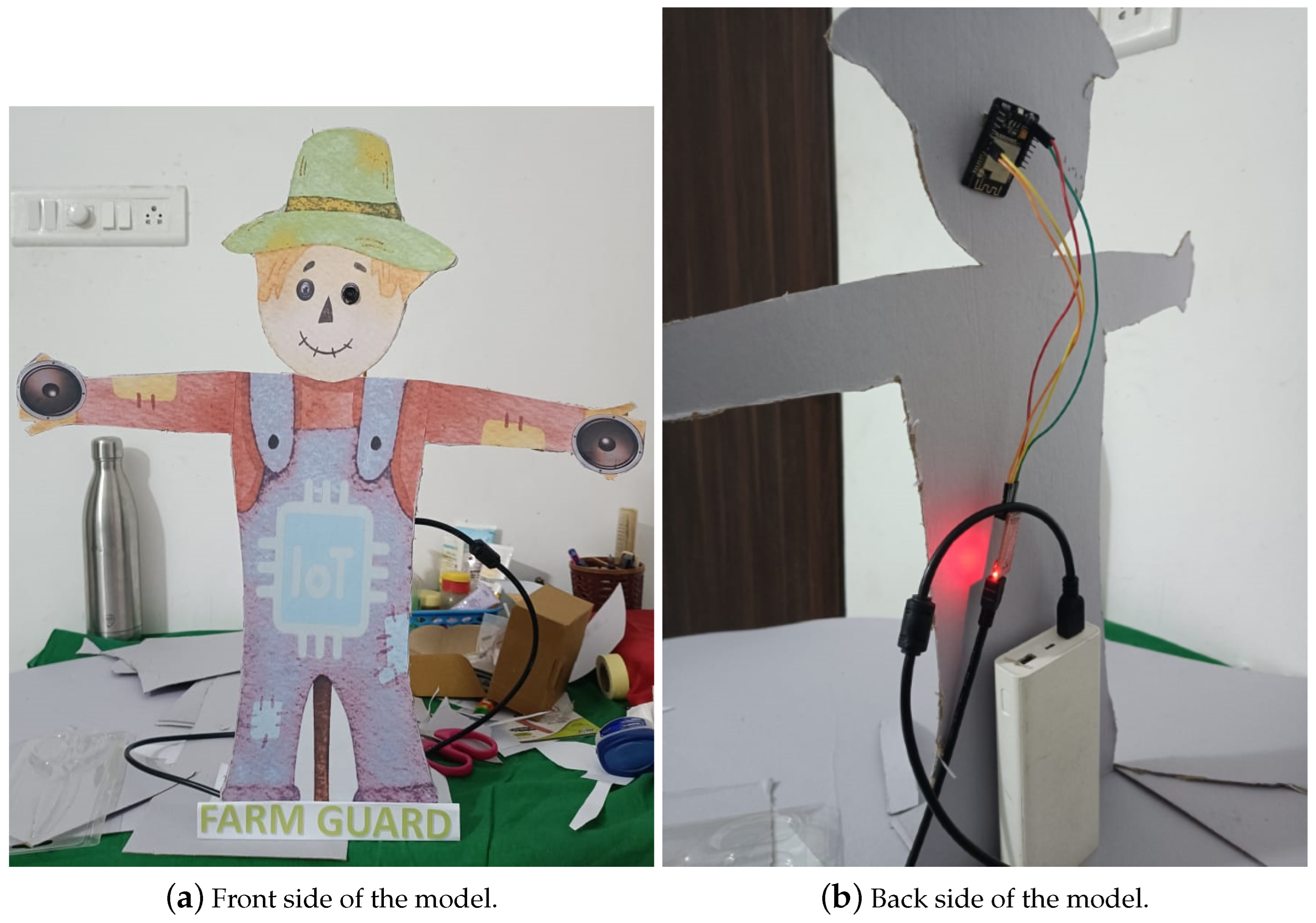

2.5. Hardware Architecture

- Microcontroller ESP32: The ESP32 microcontroller serves as the central control unit at the core of the hardware design. It acts as the system’s brain, directing the operation of all other physical components. The microcontroller serves as the major data transmission interface, guaranteeing a smooth connection between the camera module, speaker, motor, and cloud servers.

- Camera Module: The camera module is directly connected to the ESP32 microcontroller and enables real-time video transmission from the farm to the cloud servers. This continuous video stream is the basis for AI-driven intrusion detection, allowing a quick response when an anomaly occurs.

- Speaker: Another essential component of the hardware design, the speaker is directly connected to the ESP32 microcontroller. The ESP32 activates the speaker when it receives intrusion detection commands from the cloud server. This activation repels intruders, whether they are animals or people, and so improves farm security.

- Motor: The motor is a specialized component that extends the camera module’s coverage. It is integrated with the camera and allows for 360-degree camera rotation. This provides thorough coverage of the agricultural land, so that no blind spots go unmonitored. The controlled rotation of the motor adds a further degree of protection to the farm.

- Power Supply: The power supply provides the energy that all other hardware components need to operate. It ensures that the ESP32 microcontroller, camera module, speaker, and motor function continuously and without interruption.
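To illustrate how a rotating camera can avoid blind spots, the sketch below computes evenly spaced pan angles such that neighbouring views always overlap. It assumes the camera's horizontal field of view is known; the function name `pan_stops`, the overlap parameter, and the example values are hypothetical and do not come from the hardware described above.

```python
import math


def pan_stops(fov_deg, overlap_deg=0.0):
    """Return pan angles (degrees) whose views together cover a full 360 degrees.

    fov_deg:     horizontal field of view of the camera
    overlap_deg: minimum overlap wanted between neighbouring views
    """
    step = fov_deg - overlap_deg
    if fov_deg <= 0 or step <= 0:
        raise ValueError("field of view must exceed the requested overlap")
    # Number of stops needed so that the spacing never exceeds `step`.
    n = math.ceil(360 / step)
    # Spread the stops evenly; spacing 360 / n is at most `step`.
    return [round(i * 360 / n, 2) for i in range(n)]
```

For example, a camera with a 60-degree field of view and a 10-degree overlap needs eight stops spaced 45 degrees apart, since a spacing of at most 50 degrees is required and seven stops would leave gaps in the overlap.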

3. Results and Experiments

- True Positives (TP): Cases that were correctly identified as positive.

- True Negatives (TN): Cases that were correctly identified as negative.

- False Positives (FP): Cases that were incorrectly classified as positive (Type I errors).

- False Negatives (FN): Cases that were incorrectly classified as negative (Type II errors).

- Accuracy: Equation (1) gives accuracy as the ratio of correctly predicted instances to the total number of instances, (TP + TN) / (TP + TN + FP + FN). It provides an overall evaluation of the model’s performance.

- Precision: The fraction of true positives among all positive predictions, TP / (TP + FP). Precision assesses how reliable the model’s positive predictions are.

- Recall: The proportion of true positives among all actual positives, TP / (TP + FN). Recall evaluates how effectively the model detects positive cases.

- F1_score: The harmonic mean of precision and recall. It summarizes the overall performance of the model in a single value.
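The four metrics defined above can be computed directly from the confusion-matrix counts. The following is a minimal sketch; the function name `classification_metrics` is hypothetical, and the counts in the usage note are made-up illustrative values, not results from this study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    # Each ratio falls back to 0.0 when its denominator is zero.
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    # F1 is the harmonic mean of precision and recall.
    if precision + recall:
        f1 = 2 * precision * recall / (precision + recall)
    else:
        f1 = 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

With the illustrative counts TP = 8, TN = 5, FP = 2, FN = 1, this gives an accuracy of 13/16, a precision of 8/10, a recall of 8/9, and an F1 score of 16/19.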

3.1. Training and Validation of the Models

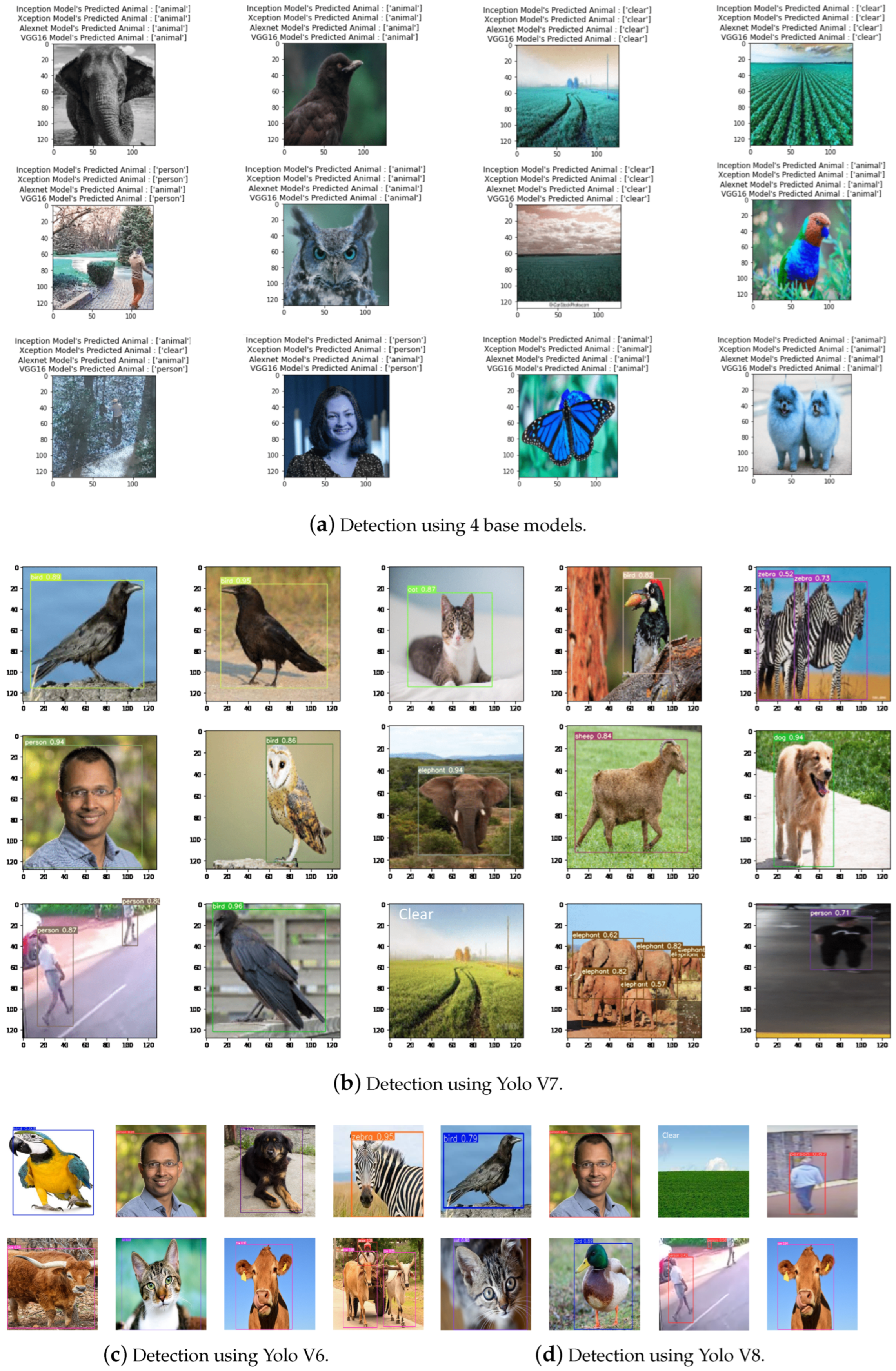

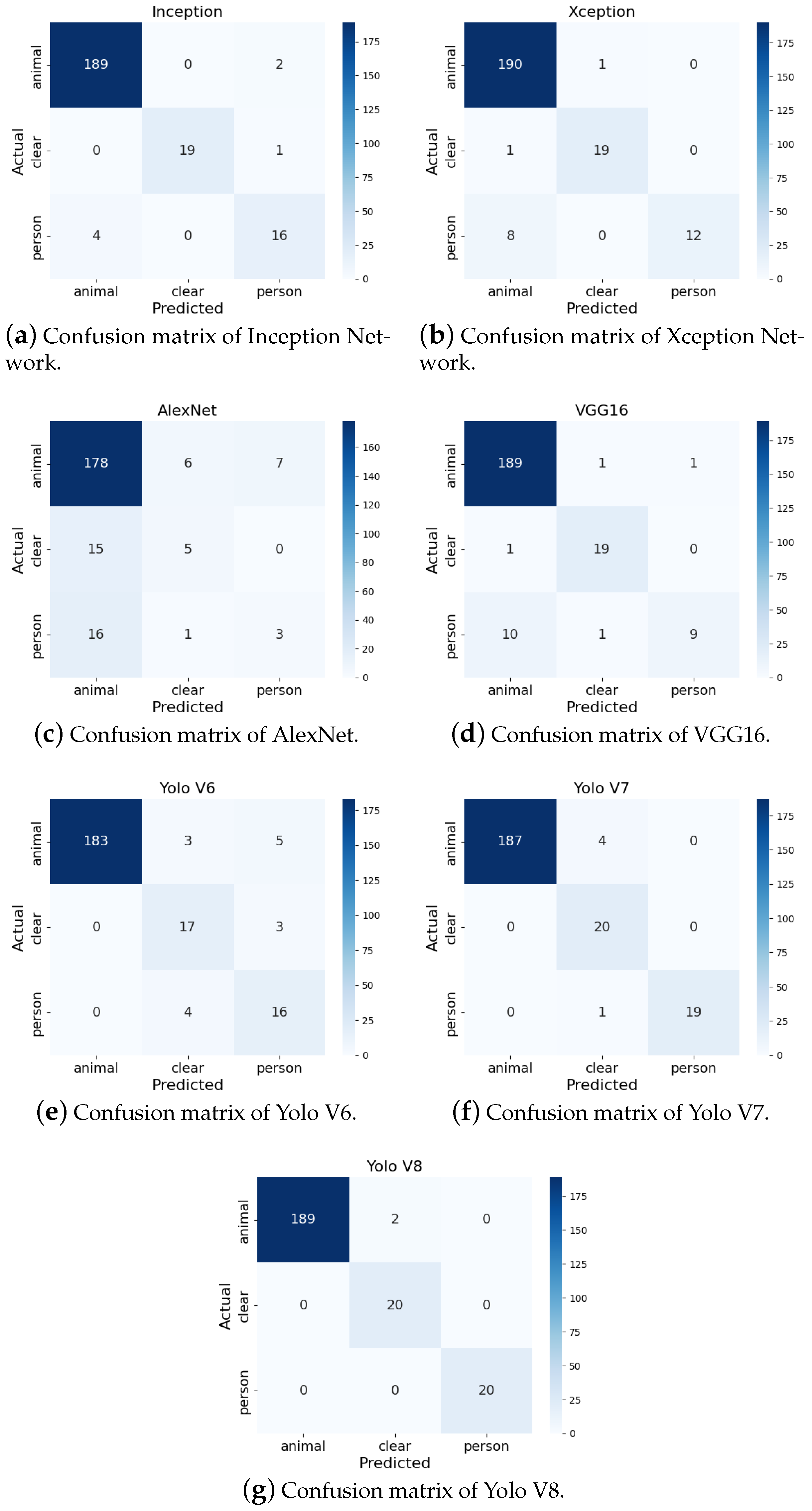

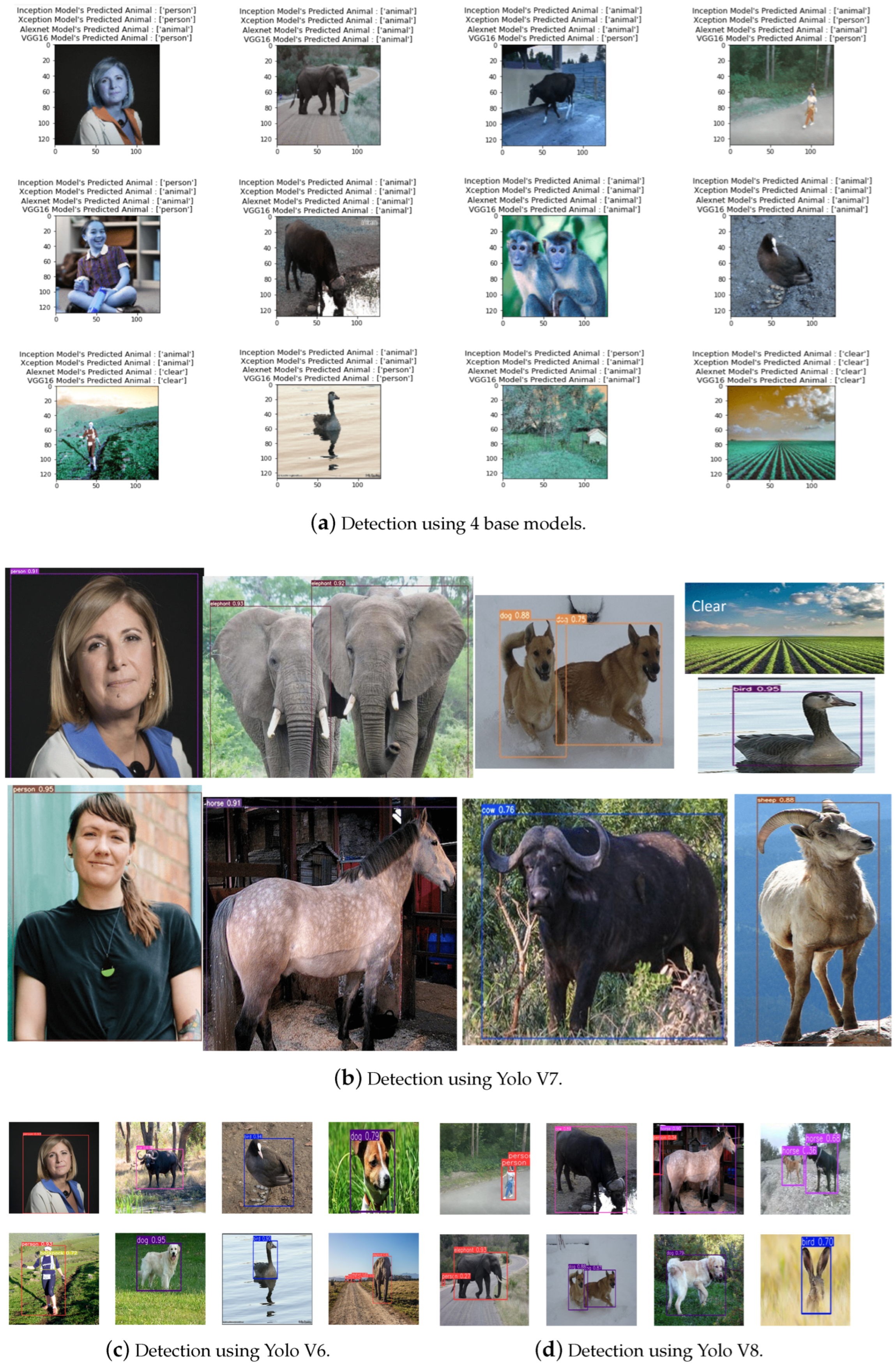

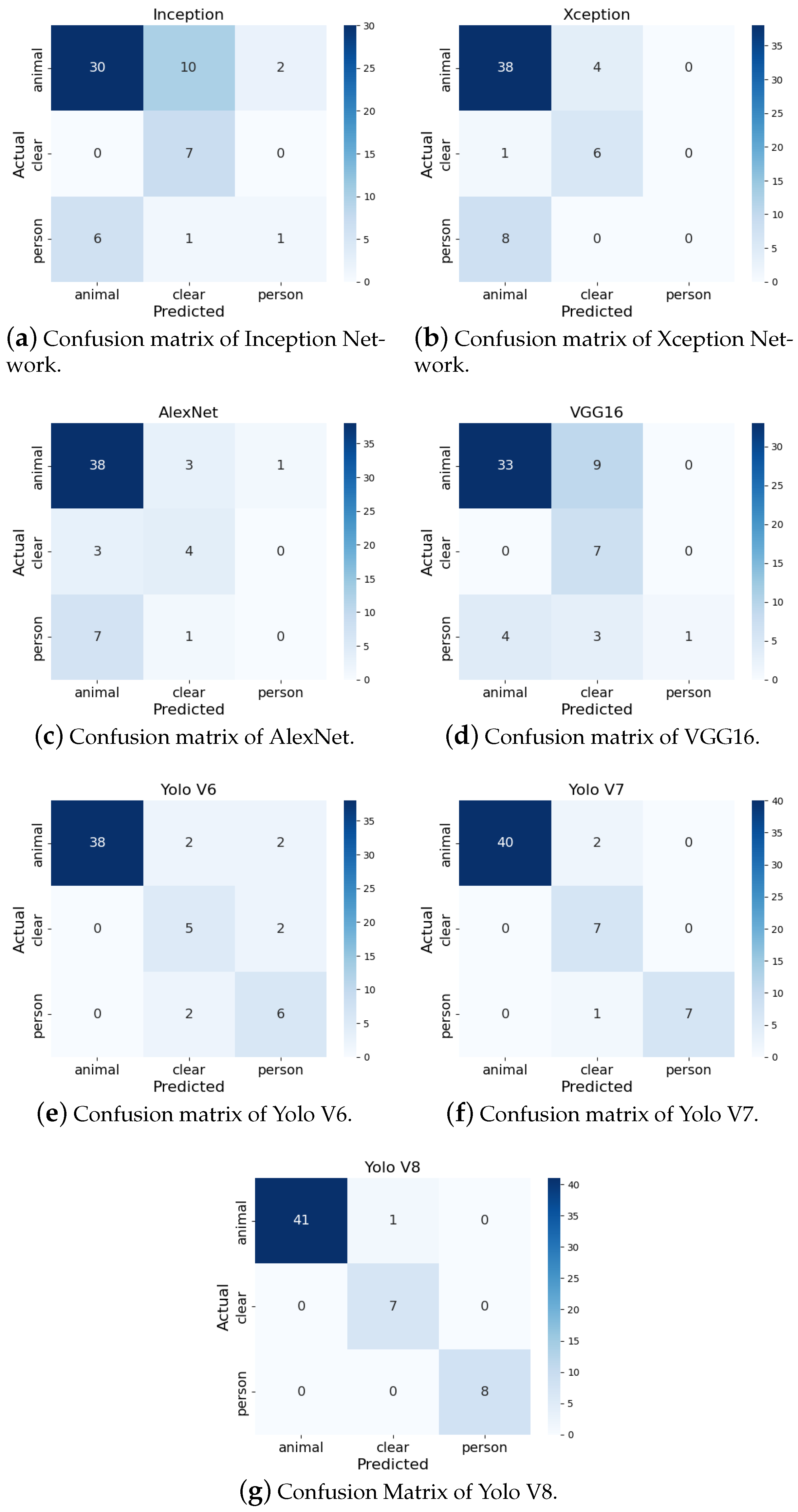

3.2. Evaluation of the Models on the Test Set of the Primary Dataset

3.3. Evaluation of the Models Using the Secondary Dataset

3.4. Evaluation of the Models on the Farm Dataset

3.5. Comparison with Previous Research

3.6. Result of Detection from Real Demo Camera

3.7. Achieving Real-Time Intrusion Detection

- Optimized Machine Learning Model: The YOLOv8 model is designed specifically for real-time object detection. Its design enables high-speed image processing at low computational cost, which matters because the ESP32 edge devices are resource-constrained and inference therefore runs on the cloud server. The model’s inference time, typically under 30 milliseconds per frame, ensures that detection occurs almost immediately once data reach the cloud server.

- Efficient Data Transmission Protocols: To minimize transmission delays, the system uses the MQTT (Message Queuing Telemetry Transport) protocol, which is optimized for low-latency, lightweight data transport. MQTT is particularly suitable for IoT applications [28,29,30,31], providing a fast and reliable connection between the ESP32 sensors and the cloud, so that data are delivered and analyzed in near real time.

- Data Compression and Prioritization: The system applies image compression to reduce the size of the data sent from the ESP32 to the cloud, further lowering transmission time. Additionally, only crucial information, specifically frames indicating possible intrusions, is sent to the cloud for processing, which limits the volume of data that requires real-time handling.

- Cloud Processing Power: The cloud infrastructure deployed in this system scales automatically with the incoming data load, eliminating processing bottlenecks. The elastic nature of the cloud means that the model can handle large data volumes without its real-time performance being affected.

- Latency Management: The full round-trip time, from data collection to alert generation, is closely managed to keep it within real-time constraints. Empirical testing suggests that the total delay, from data acquisition at the sensor to alert delivery, typically ranges from 100 to 200 milliseconds, which is well within acceptable limits for real-time monitoring systems.
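One simple way to forward only frames that may contain an intrusion is to compare each frame with the previous one and skip frames that have barely changed. The source does not state which criterion the system uses, so the frame differencing below is a stand-in chosen for illustration; the function names, the flat grayscale frame representation, and the threshold of 10 are all assumptions.

```python
def mean_abs_diff(prev, curr):
    """Mean absolute pixel difference between two equal-sized grayscale frames.

    Frames are flat sequences of pixel intensities (0 to 255).
    """
    if not prev or len(prev) != len(curr):
        raise ValueError("frames must be non-empty and the same size")
    return sum(abs(a - b) for a, b in zip(prev, curr)) / len(prev)


def select_frames(frames, threshold=10.0):
    """Return indices of frames that changed enough to be worth uploading."""
    selected = []
    for i in range(1, len(frames)):
        # Compare each frame with its immediate predecessor.
        if mean_abs_diff(frames[i - 1], frames[i]) > threshold:
            selected.append(i)
    return selected
```

A static scene then produces no uploads at all, and only the frame in which something enters or leaves the view is sent on for detection.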

3.8. Reasons for YOLOv8 to Outperform the Other Models

- Advanced Architecture: YOLOv8 incorporates more sophisticated architectural changes, building upon the strengths of its predecessors. These improvements probably include better feature extraction layers and better handling of multi-scale object detection, which lead to a more robust understanding of complex visual inputs.

- Enhanced Generalization: The model’s ability to generalize across different datasets and conditions has improved noticeably. Because agricultural surveillance frequently deals with changing conditions and unknown elements, this robustness is essential. YOLOv8 consistently achieves strong performance despite changes in the environment.

- Streamlined Efficiency: Efficiency, a defining feature of the YOLO series, is still emphasized in YOLOv8, but in a more streamlined form that cuts down on redundancy and concentrates on the critical computations. This probably leads to substantially faster inference without sacrificing detection granularity or model fidelity.

- Precision in Localization: It is anticipated that YOLOv8 has even more accurate object localization, which is crucial for real-time monitoring applications like agricultural surveillance. A proactive approach to managing agricultural resources can be substantially improved by a model having the capacity to precisely identify and categorize possible risks or incursions.

- State-of-the-art Training Techniques: It is likely that YOLOv8 has upgraded its training methods to take advantage of the most recent developments in deep learning. These include innovative regularization techniques, loss functions, and data augmentation tactics that work together to enhance the model’s remarkable learning ability.

- Hardware Optimization: It is possible that YOLOv8 keeps optimizing for GPU acceleration, making the most of contemporary technology. This optimization guarantees that the model may be deployed in field applications with strict real-time requirements and that it functions well in research settings as well.

3.9. Performance Evaluation Under Challenging Environmental Conditions

4. Discussion

5. Future Scope

- Low-Cost 360-Degree Camera Integration: The system may be modified in the future to include low-cost 360-degree cameras. This upgrade would give an even more complete perspective of the farm, ensuring that every area is always monitored. This development has the potential to dramatically improve the system’s security capabilities.

- Owner Recognition and Exemption: The inclusion of owner recognition capabilities is a potential avenue for future growth. By storing the farm owner’s information in the system’s database, the technology could differentiate between the owner and intruders, significantly decreasing false alerts. When the owner is there, the system could stay dormant, reducing needless alarms.

- Ultrasonic Sound Integration: Future studies might concentrate on adding ultrasonic sounds to the capabilities of this system. Ultrasonic sounds are well known for their ability to deter animals. When intruders are detected, the device could provide an additional degree of safety by frightening away unwelcome animals.

- Animal Type Identification and Adaptive Sound Generation: Modifying the system to recognize the different types of animals visiting the farm is a promising path for future research. The system might then generate sounds known to deter specific animals. If a pest animal is identified, the system may emit sounds that imitate the presence of bigger predators such as lions or tigers. This adaptive strategy enables a tailored response to different types of intrusion.

- Live Video Access for Farmers: Future advancements might include giving farmers direct access to live video of their farm when an intrusion is detected. Farmers would be able to watch the farm in real time, enabling them to evaluate the situation and take appropriate action.

- Application Beyond Agriculture: This SFSS study may be applied to a variety of other sectors. Incorporating drones into the architecture opens up further possibilities, including railway track monitoring, border security, animal protection, forest fire detection, disaster response, industrial safety, and environmental monitoring. This technology has the potential to benefit a wide range of industries by delivering efficient and cost-effective solutions.
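The owner-exemption and adaptive-sound ideas above amount to a small decision rule, sketched below. Everything here is hypothetical and shown only to make the proposal concrete: the class labels, the sound names, the lookup table, and the function `choose_response` are illustrative, and no claim is made about which sounds deter which animals.

```python
# Hypothetical mapping from a detected class label to a deterrent sound.
DETERRENT_SOUNDS = {
    "boar": "predator_roar",
    "monkey": "predator_roar",
    "bird": "distress_call",
}


def choose_response(label, is_owner=False):
    """Pick a sound for a detection, or None when no alarm should play."""
    if is_owner:
        return None  # recognized owner: stay silent to avoid a false alarm
    # Fall back to a generic alarm for classes without a specific sound.
    return DETERRENT_SOUNDS.get(label, "generic_alarm")
```

Keeping the mapping in a plain table means that new animal classes or sounds could be added without touching the decision logic.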

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Anoop, N.R.; Krishnan, S.; Ganesh, T. Elephants in the farm–changing temporal and seasonal patterns of human-elephant interactions in a forest-agriculture matrix in the Western Ghats, India. Front. Conserv. Sci. 2023, 4, 1142325.

- Sinclair, M.; Fryer, C.; Phillips, C.J.C. The Benefits of Improving Animal Welfare from the Perspective of Livestock Stakeholders across Asia. Animals 2019, 9, 123.

- Dhanaraju, M.; Chenniappan, P.; Ramalingam, K.; Pazhanivelan, S.; Kaliaperumal, R. Smart Farming: Internet of Things (IoT)-Based Sustainable Agriculture. Agriculture 2022, 12, 1745.

- Mane, A.; Mane, A.; Dhake, P.; Kale, S. Smart Intrusion Detection System for Crop Protection. Int. Res. J. Eng. Technol. IRJET 2022, 9, 2921–2925.

- Kommineni, M.; Lavanya, M.; Vardhan, V.H. Agricultural farms utilizing computer vision (ai) and machine learning techniques for animal detection and alarm systems. J. Pharm. Negat. Results 2022, 13, 3292–3300.

- Yadahalli, S.; Parmar, A.; Deshpande, A. Smart intrusion detection system for crop protection by using Arduino. In Proceedings of the 2020 Second International Conference on Inventive Research in Computing Applications (ICIRCA), Coimbatore, India, 15–17 July 2020; pp. 405–408.

- Geetha, D.; Monisha, S.P.; Oviya, J.; Sonia, G. Human and Animal Movement Detection in Agricultural Fields. SSRG Int. J. Comput. Sci. Eng. 2019, 6, 15–18.

- Andavarapu, N.; Vatsavayi, V.K. Wild-animal recognition in agriculture farms using W-COHOG for agro-security. Int. J. Comput. Intell. Res. 2017, 13, 2247–2257.

- Gogoi, M. Protection of Crops From Animals Using Intelligent Surveillance System. J. Appl. Fundam. Sci. 2015, 1, 200.

- Christiansen, P.; Nielsen, L.N.; Steen, K.A.; Jørgensen, R.N.; Karstoft, H. DeepAnomaly: Combining background subtraction and deep learning for detecting obstacles and anomalies in an agricultural field. Sensors 2016, 16, 1904.

- Kragh, M.; Jørgensen, R.N.; Pedersen, H. Object detection and terrain classification in agricultural fields using 3D lidar data. In International Conference on Computer Vision Systems; Springer International Publishing: Cham, Switzerland, 2015; pp. 188–197.

- Quy, V.K.; Hau, N.V.; Anh, D.V.; Quy, N.M.; Ban, N.T.; Lanza, S.; Randazzo, G.; Muzirafuti, A. IoT-enabled smart agriculture: Architecture, applications, and challenges. Appl. Sci. 2022, 12, 3396.

- AlZubi, A.A.; Galyna, K. Artificial Intelligence and Internet of Things for Sustainable Farming and Smart Agriculture. IEEE Access 2023, 11, 78686–78692.

- Lee, S.; Ahn, H.; Seo, J.; Chung, Y.; Park, D.; Pan, S. Practical monitoring of undergrown pigs for IoT-based large-scale smart farm. IEEE Access 2019, 7, 173796–173810.

- Vangala, A.; Das, A.K.; Chamola, V.; Korotaev, V.; Rodrigues, J.J. Security in IoT-enabled smart agriculture: Architecture, security solutions and challenges. Clust. Comput. 2023, 26, 879–902.

- Demestichas, K.; Peppes, N.; Alexakis, T. Survey on security threats in agricultural IoT and smart farming. Sensors 2020, 20, 6458.

- Elbeheiry, N.; Balog, R.S. Technologies driving the shift to smart farming: A review. IEEE Sens. J. 2022, 23, 1752–1769.

- Ayaz, M.; Ammad-Uddin, M.; Sharif, Z.; Mansour, A.; Aggoune, E.H.M. Internet-of-Things (IoT)-based smart agriculture: Toward making the fields talk. IEEE Access 2019, 7, 129551–129583.

- Hafeez, A.; Husain, M.A.; Singh, S.P.; Chauhan, A.; Khan, M.T.; Kumar, N.; Chauhan, A.; Soni, S.K. Implementation of drone technology for farm monitoring & pesticide spraying: A review. Inf. Process. Agric. 2022, 10, 192–203.

- de Araujo Zanella, A.R.; da Silva, E.; Albini, L.C.P. Security challenges to smart agriculture: Current state, key issues, and future directions. Array 2020, 8, 100048.

- Shukla, A.; Jain, A. Smart Automated Farming System using IOT and Solar Panel. Sci. Technol. J. 2019, 7, 22–32.

- Panjaitan, S.D.; Dewi, Y.S.K.; Hendri, M.I.; Wicaksono, R.A.; Priyatman, H. A Drone Technology Implementation Approach to Conventional Paddy Fields Application. IEEE Access 2022, 10, 120650–120658.

- Pagano, A.; Croce, D.; Tinnirello, I.; Vitale, G. A Survey on LoRa for Smart Agriculture: Current Trends and Future Perspectives. IEEE Internet Things J. 2022, 10, 3664–3679.

- Hong, S.J.; Park, S.; Lee, C.H.; Kim, S.; Roh, S.W.; Nurhisna, N.I.; Kim, G. Application of X-ray imaging and convolutional neural networks in the prediction of tomato seed viability. IEEE Access 2023, 11, 38061–38071.

- Deshpande, A.V. Design and implementation of an intelligent security system for farm protection from wild animals. Int. J. Sci. Res. ISSN Online 2016, 5, 2319–7064.

- Pandey, S.; Bajracharya, S.B. Crop protection and its effectiveness against wildlife: A case study of two villages of Shivapuri National Park, Nepal. Nepal J. Sci. Technol. 2015, 16, 1–10.

- Chang, B.R.; Tsai, H.F.; Hsieh, C.W. Accelerating the Response of Self-Driving Control by Using Rapid Object Detection and Steering Angle Prediction. Electronics 2023, 12, 2161.

- Available online: https://github.com/ultralytics/ultralytics/issues/189 (accessed on 2 November 2023).

- Rao, K.; Maikhuri, R.; Nautiyal, S.; Saxena, K.G. Crop damage and livestock depredation by wildlife: A case study from Nanda Devi biosphere reserve, India. J. Environ. Manag. 2002, 66, 317–327.

- Bavane, V.; Raut, A.; Sonune, S.; Bawane, A.; Jawandhiya, P. Protection of crops from wild animals using Intelligent Surveillance System. Int. J. Res. Advent Technol. IJRAT 2018, 10, 2321–9637.

- Vigneshwar, R.; Maheswari, R. Development of embedded based system to monitor elephant intrusion in forest border areas using internet of things. Int. J. Eng. Res. 2016, 5, 594–598.

- Raghuvanshi, A.; Singh, U.K.; Sajja, G.S.; Pallathadka, H.; Asenso, E.; Kamal, M.; Singh, A.; Phasinam, K. Intrusion detection using machine learning for risk mitigation in IoT-enabled smart irrigation in smart farming. J. Food Qual. 2022, 2022, 3955514.

- Roy, B.; Cheung, H. A deep learning approach for intrusion detection in internet of things using bi-directional long short-term memory recurrent neural network. In Proceedings of the 28th International Telecommunication Networks and Applications Conference, Sydney, NSW, Australia, 21–23 November 2018; pp. 1–6.

- Le, H.V.; Ngo, Q.-D.; Le, V.-H. Iot botnet detection using system call graphs and one-class CNN classification. Int. J. Innov. Technol. Explor. Eng. 2019, 8, 937–942.

| Authors | Approach | Features | Contribution |
|---|---|---|---|
| Dhake et al., 2022 [4] | Sensor technology (PIR and ultrasonic) | Motion detection, intruder classification | Automated agricultural security system with real-time alerts and intrusion categorization. |
| Vardhan et al., 2022 [5] | Computer vision and AI | Animal detection, auditory alarm, notifications | AI-powered wildlife intrusion detection system with real-time alerts and animal identification. |
| Yadahalli et al., 2020 [6] | Multi-sensor system (PIR, ultrasonic, camera) | Intrusion detection, visual and auditory alerts | Comprehensive agricultural security framework with intruder categorization and real-time notifications. |
| Geetha et al., 2019 [7] | Sensor technology (PIR, LDR, flame sensors) and IoT | Animal detection, auditory and visual alerts, forest fire detection | Smart farm protection system with diverse sensor capabilities, real-time alerts, and additional features. |
| Andacarapu et al., 2017 [8] | Computer vision (W-CoHOG) | Animal detection, feature extraction, classification | Computer vision-based wildlife intrusion detection system with improved feature extraction and accuracy. |
| Gogoi et al., 2015 [9] | Image processing (SIFT) | Animal detection, object identification | Hybrid approach for animal identification in agricultural fields using image processing and SIFT. |
| Nielsen et al., 2016 [10] | Deep learning and anomaly detection (DeepAnomaly) | Anomaly detection, human recognition | Deep learning-based system for accurate anomaly detection in agricultural areas, surpassing RCNN. |
| Kragh et al., 2015 [11] | Support vector machine | Object recognition and terrain classification | High overall classification accuracy for identifying various objects and vegetation in agricultural settings. |
| Lanza et al., 2022 [12] | Content analysis (2017–2022) | IoT in smart agriculture and architecture applications | Guidelines for IoT use, IoT’s importance, and the categorization of its applications, benefits, challenges, and future research directions. |
| Galyna et al., 2023 [13] | IoT and AI framework | IoT building blocks for the creation of smart sustainable agriculture platforms | Smart sensors enable real-time weather data collection. |
| Lee et al., 2019 [14] | Deep learning-based computer vision | Undergrown pig detection | Early warning system for undergrown pigs, combining image processing, deep learning, and real-time detection. |
| Vangala et al., 2023 [15] | Analysis of authentication and access control protocols | Security in smart agriculture | Proposed independent architecture, security requirements, threat model, attacks, and protocol performance. |
| Peppes et al., 2020 [16] | Analysis of ICT’s function in agriculture, focusing on new threats and weaknesses | IoT in smart agriculture and architecture applications | ICT breakthroughs, approaches, and mitigation in agriculture, with emphasis on their benefits and potential concerns. |
| Balog et al., 2023 [17] | Content analysis (2015–2021) | IoT in smart agriculture and architecture applications | Describes common smart farming (SF) practices, problems, and solutions while classifying contributions into key SF technologies and research areas. |
| Ayaz et al., 2019 [18] | Analysis of wireless sensors and IoT in agriculture | IoT applications, wireless sensors, UAVs | Examines IoT use in agriculture, sensors, and UAVs and the potential benefits for precision agriculture. |
| Hafeez et al., 2022 [19] | Drone technology | Aerial monitoring, data collection, precision agriculture | Drones offer solutions to outdated farming methods, enable crop assessments, and improve crop management. Discusses advancements in drone technology for precision agriculture. |
| Araujo Zanella et al., 2020 [20] | Smart agriculture | IoT, big data, precision agriculture | Explores security challenges in Agriculture 4.0 and proposes solutions. Highlights the importance of security, computational capabilities, and the role of edge computing. |
| Shukla et al., 2019 [21] | Eco-friendly farming solution | IoT, real-time monitoring | Proposes an electronically operated system for Indian farming, addressing water problems, humidity, temperature control, electricity supplies, and lighting. |
| Panjaitan et al., 2022 [22] | Drones for spraying | UAVs, precision agriculture | Discusses drone implementation in paddy fields, assesses drone performance, and highlights the impact of drone-based spraying. |
| Pagano et al., 2022 [23] | LoRa/LoRaWAN in agriculture | IoT, LoRaWAN, wireless connectivity | Focuses on LoRa/LoRaWAN technology in agriculture, addressing challenges and suggesting solutions. Highlights the role of machine learning, AI, and edge computing. |
| Hong et al., 2023 [24] | Seed viability prediction | X-ray imaging, deep learning | Develops viability prediction technologies for tomato seeds using X-ray imaging. Achieves high accuracy in distinguishing between viable and non-viable seeds. |

| Model | Architecture | Detection Speed | Detection Accuracy | Training Dataset Size | Hardware Compatibility |
|---|---|---|---|---|---|
| Inception | Traditional CNN | Moderate | High | Large | GPU |
| Xception | Depthwise Separable Convolution | High | High | Large | GPU |
| AlexNet | Standard CNN | Moderate | Moderate | Moderate | CPU/GPU |
| VGG16 | Standard CNN | Moderate | High | Large | GPU |
| YOLO Models | Single-Shot Detection | High | High | Moderate | GPU |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Inception | 96.96 | 96.89 | 97.00 | 96.77 |
| Xception | 96.10 | 96.42 | 96.10 | 95.87 |
| VGG16 | 95.67 | 95.87 | 95.67 | 95.29 |
| AlexNet | 80.51 | 76.66 | 80.51 | 78.02 |
| YOLOv6 | 94.41 | 95.37 | 94.34 | 94.85 |
| YOLOv7 | 98.15 | 98.38 | 98.07 | 98.22 |
| YOLOv8 | 99.10 | 99.21 | 99.05 | 99.12 |
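The four columns reported in these comparison tables can all be derived from a confusion matrix. The following is a minimal sketch of that calculation, not the authors' evaluation code. It assumes macro averaging over classes, which this section does not state, and it uses a hypothetical two-class confusion matrix whose counts are illustrative only. The function names `per_class_metrics` and `summarize` are likewise made up for this example.

```python
from typing import Dict, List


def per_class_metrics(confusion: List[List[int]]) -> List[Dict[str, float]]:
    """Per-class precision, recall, and F1.

    confusion[i][j] is the number of samples whose true class is i
    and whose predicted class is j.
    """
    n = len(confusion)
    results = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # predicted k, true class differs
        fn = sum(confusion[k]) - tp                       # true k, predicted as another class
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        results.append({"precision": precision, "recall": recall, "f1": f1})
    return results


def summarize(confusion: List[List[int]]) -> Dict[str, float]:
    """Overall accuracy plus macro-averaged precision, recall, and F1."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[k][k] for k in range(n))
    per_class = per_class_metrics(confusion)
    summary = {"accuracy": correct / total}
    for name in ("precision", "recall", "f1"):
        summary[name] = sum(c[name] for c in per_class) / n  # assumed macro average
    return summary


# Hypothetical counts (rows: true class, columns: predicted class).
example = [[8, 2],
           [1, 9]]
summary = summarize(example)
for name, value in summary.items():
    print(f"{name}: {100 * value:.2f}")
```

With class-averaged metrics, the reported F1 score is the average of the per-class F1 values, so it need not equal the harmonic mean of the reported precision and recall. This may be why some rows above show an F1 score below both precision and recall.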

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Inception | 96 | 91 | 90 | 90 |
| Xception | 89 | 90 | 89 | 88 |
| VGG16 | 89 | 89 | 89 | 88 |
| AlexNet | 68 | 63 | 68 | 62 |
| YOLOv6 | 89 | 93 | 89 | 90 |
| YOLOv7 | 92 | 93 | 92 | 92 |
| YOLOv8 | 98 | 99 | 98 | 98 |

| Model | Accuracy (%) | Precision (%) | Recall (%) | F1 Score (%) |
|---|---|---|---|---|
| Inception | 78 | 77 | 77 | 76 |
| Xception | 77 | 67 | 77 | 72 |
| VGG16 | 74 | 64 | 74 | 68 |
| AlexNet | 72 | 64 | 72 | 67 |
| YOLOv6 | 86 | 89 | 86 | 87 |
| YOLOv7 | 95 | 96 | 95 | 95 |
| YOLOv8 | 99 | 98 | 98 | 98 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Delwar, T.S.; Mukhopadhyay, S.; Kumar, A.; Singh, M.; Lee, Y.-w.; Ryu, J.-Y.; Hosen, A.S.M.S. Real-Time Farm Surveillance Using IoT and YOLOv8 for Animal Intrusion Detection. Future Internet 2025, 17, 70. https://doi.org/10.3390/fi17020070
