Abstract

The rapid expansion of online learning platforms has necessitated advanced systems to address scalability, personalization, and assessment challenges. This paper presents a comprehensive review of artificial intelligence (AI)-based decision support systems (DSSs) designed for online learning and assessment, synthesizing advancements from 2020 to 2025. By integrating machine learning, natural language processing, knowledge-based systems, and deep learning, AI-DSSs enhance educational outcomes through predictive analytics, automated grading, and personalized learning paths. This study examines system architecture, data requirements, model selection, and user-centric design, emphasizing their roles in achieving scalability and inclusivity. Through case studies of a MOOC platform using NLP and an adaptive learning system employing reinforcement learning, this paper highlights significant improvements in grading efficiency (up to 70%) and student performance (12–20% grade increases). Performance metrics, including accuracy, response time, and user satisfaction, are analyzed alongside evaluation frameworks combining quantitative and qualitative approaches. Technical challenges, such as model interpretability and bias, ethical concerns like data privacy, and implementation barriers, including cost and adoption resistance, are critically assessed, with proposed mitigation strategies. Future directions explore generative AI, multimodal integration, and cross-cultural studies to enhance global accessibility. This review offers a robust framework for researchers and practitioners, providing actionable insights for designing equitable, efficient, and scalable AI-DSSs to transform online education.

1. Introduction

The rapid expansion of online learning platforms over the past decade has fundamentally reshaped educational paradigms, enabling equitable access to knowledge across diverse global populations. Since 2020, the adoption of e-learning has accelerated, driven by technological advancements and catalyzed by the COVID-19 pandemic, which necessitated a swift transition to digital education [1]. By 2025, platforms such as Coursera, edX, and institution-specific learning management systems (LMSs) have reported cumulative enrollments exceeding 500 million users, underscoring the scalability and flexibility of online learning [2,3]. Despite these advances, traditional online learning systems face persistent challenges, including scalability limitations, inadequate personalization, and inefficiencies in delivering real-time, actionable feedback [4]. These shortcomings constrain the ability to address diverse learner needs and optimize educational outcomes effectively.

AI-driven decision support systems have emerged as a transformative approach to overcoming these challenges. By integrating machine learning (ML), natural language processing (NLP), and knowledge-based systems, AI-DSSs enable dynamic, data-driven decision-making in online learning environments. These systems facilitate personalized learning pathways, automate assessments, and provide educators with actionable insights to enhance instructional efficacy [5,6]. This technical review examines the architectural design, implementation strategies, and performance evaluation of AI-DSSs within online learning and assessment frameworks, highlighting their potential to revolutionize educational delivery and foster equitable, adaptive learning experiences.

1.1. Background and Motivation

The proliferation of online learning platforms, particularly massive open online courses (MOOCs), has been remarkable, with global enrollment growing at an annual rate of approximately 30% between 2020 and 2025 [7,8]. These platforms leverage LMSs to deliver course content, monitor learner progress, and administer assessments. However, traditional LMSs often rely on static, one-size-fits-all assessment methods, such as multiple-choice quizzes, which fail to adapt to individual learner profiles and struggle to scale for large cohorts [9]. Such limitations hinder the delivery of tailored educational experiences and timely feedback, particularly in high-enrollment settings. Figure 1 presents the conceptual framework of an AI-driven DSS intended to address them.

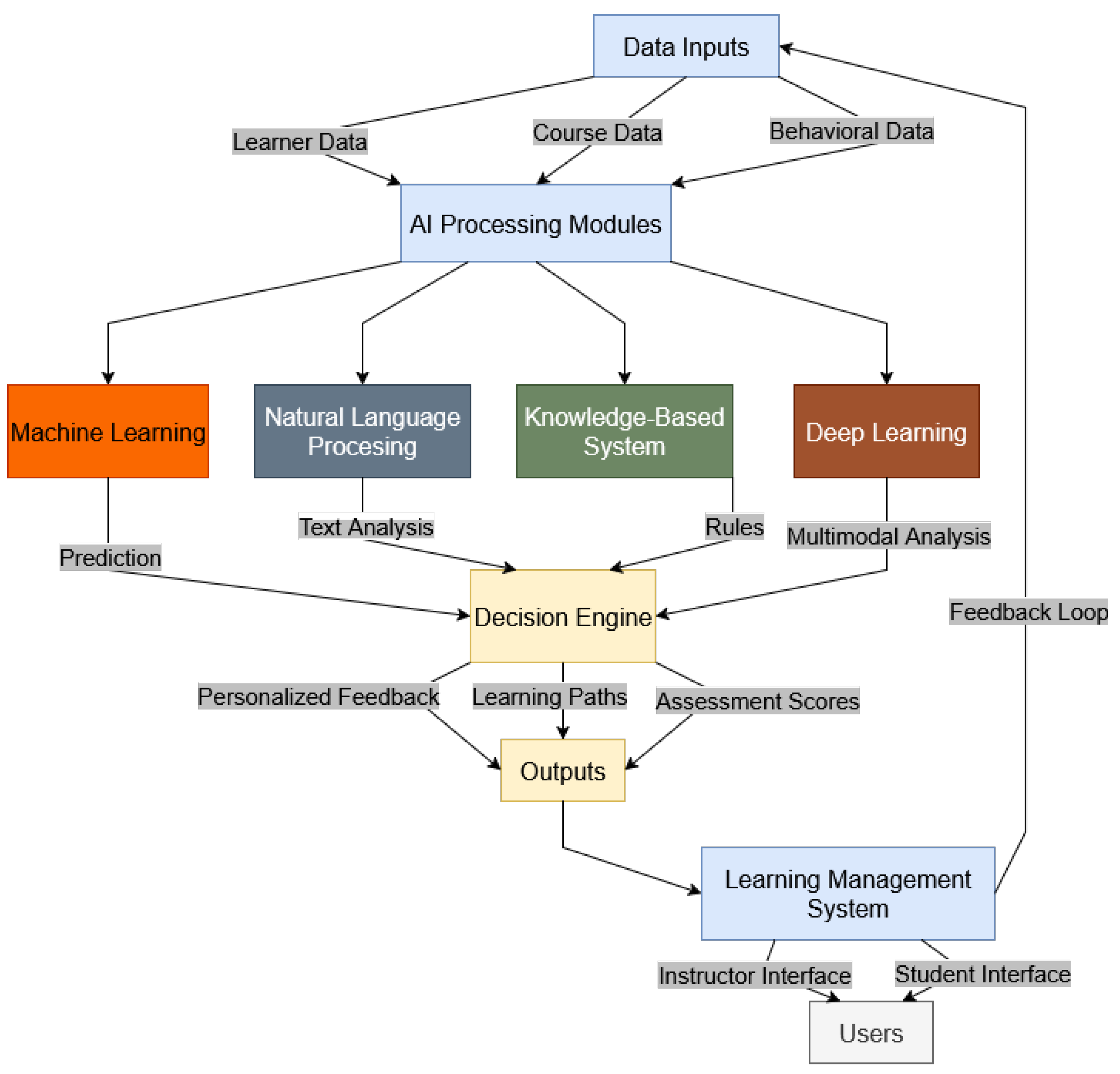

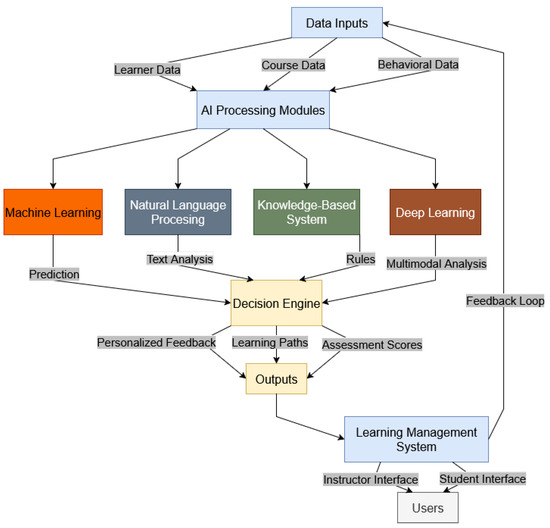

Figure 1.

Conceptual framework of an AI-driven decision support system (DSS) for online learning, illustrating key components: data inputs (learner profiles, performance metrics), AI processing modules (ML, NLP, knowledge-based systems), decision outputs (personalized recommendations, automated assessments), and integration with learning management systems (LMSs).

Traditional online learning and assessment systems face three primary challenges. First, scalability issues emerge when managing large learner cohorts, resulting in delays in feedback and grading processes [10]. Second, personalization remains limited, as uniform assessment criteria often overlook learners’ unique needs, learning paces, and preferences [11]. Third, manual grading of open-ended assessments, such as essays or project submissions, is labor-intensive and susceptible to subjective biases, compromising fairness and efficiency [12]. These challenges necessitate intelligent, automated solutions to enhance the adaptability and scalability of online learning environments.

AI-driven DSSs address these limitations by leveraging advanced computational techniques to process extensive learner data, including performance metrics, behavioral patterns, and demographic profiles. Machine learning algorithms, for instance, can predict learner outcomes and recommend personalized learning resources, while NLP techniques enable automated, high-accuracy scoring of open-ended assessments [13,14]. Knowledge-based systems further enhance decision-making by incorporating domain-specific rules to guide instructional strategies [15]. Collectively, these technologies improve assessment accuracy, enhance scalability, and promote equitable learning experiences, positioning AI-DSSs as a cornerstone of modern educational technology [16]. Table 1 compares traditional LMSs with AI-driven DSSs.

Table 1.

Comparison of traditional LMSs and AI-driven DSSs in online learning.

1.2. Objectives of the Review

This review provides a comprehensive, critical analysis of AI-driven DSS frameworks in the context of online learning and assessment systems. The specific objectives are as follows:

- To systematically evaluate the architectural components, AI techniques, and integration strategies of AI-DSS frameworks within online learning platforms, emphasizing scalability and adaptability.

- To assess design considerations, including data requirements, model selection, and user-centric features, alongside performance metrics such as accuracy, scalability, computational efficiency, and learner satisfaction.

- To identify technical, ethical, and practical challenges in AI-DSS implementation, including data privacy, algorithmic bias, and system interoperability, while proposing directions for future research to advance the field.

These objectives ensure a balanced exploration of theoretical foundations and practical implications, providing actionable insights for educators, system developers, and policymakers.

1.3. Scope and Methodology

This review focuses on AI techniques, including machine learning, deep learning, natural language processing, and knowledge-based systems, applied to decision support systems in online learning and assessment frameworks. The scope includes platforms deployed between 2020 and 2025, encompassing MOOCs, institutional LMSs, and adaptive learning environments. Both formative (ongoing, feedback-driven) and summative (final, evaluative) assessment systems are examined, with an emphasis on their integration with AI-DSSs to optimize learning outcomes.

The methodology adheres to the PRISMA (Preferred Reporting Items for Systematic Reviews and Meta-Analyses) guidelines to ensure a rigorous and transparent review process, as summarized in Table 2. A systematic literature search was conducted across databases such as IEEE Xplore, Scopus, and Web of Science, using keywords including “AI-based decision support,” “online learning,” “automated assessment,” and “adaptive learning.” The search targeted peer-reviewed journal articles, conference papers, and technical reports published between January 2020 and July 2025. Inclusion criteria prioritized studies with empirical evaluations, novel AI-DSS frameworks, or practical implementations in real-world settings. Exclusion criteria eliminated non-peer-reviewed sources and studies lacking technical depth. Case studies of prominent platforms and comparative analyses of AI-DSS implementations provide practical context. Findings are synthesized to elucidate trends, challenges, and opportunities in the field.

Table 2.

Summary of systematic review methodology.

2. Related Work

The integration of AI into online learning and assessment systems has significantly reshaped educational technology. This section synthesizes the evolution of online learning systems, examines the AI techniques underpinning decision support systems, and evaluates their practical applications to provide a robust foundation for understanding their design, impact, and limitations.

2.1. Evolution of Online Learning Systems

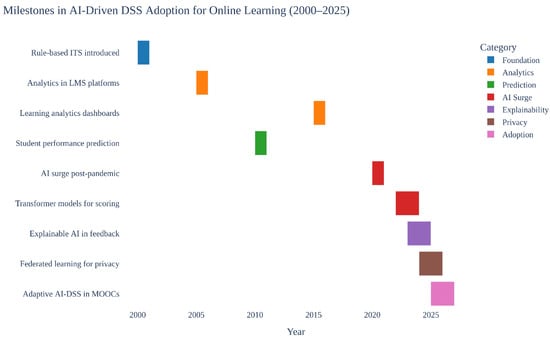

Online learning systems have evolved substantially since the early 2000s, with development accelerating markedly between 2020 and 2025. Figure 2 presents a timeline of the major milestones in the adoption of AI-driven DSSs. Early platforms such as Blackboard and Moodle were limited to basic content delivery and manual grading, an approach that served small cohorts adequately but could neither scale to large enrollments nor personalize the learning experience [17]. The COVID-19 pandemic sharply accelerated demand for digital instruction and exposed shortcomings in access, adaptability, and feedback speed [18]. By 2025, platforms such as Coursera, edX, and Khan Academy had integrated AI components that use learner data to deliver adaptive lessons and assessments, increasing user engagement by as much as 25% [19,20].

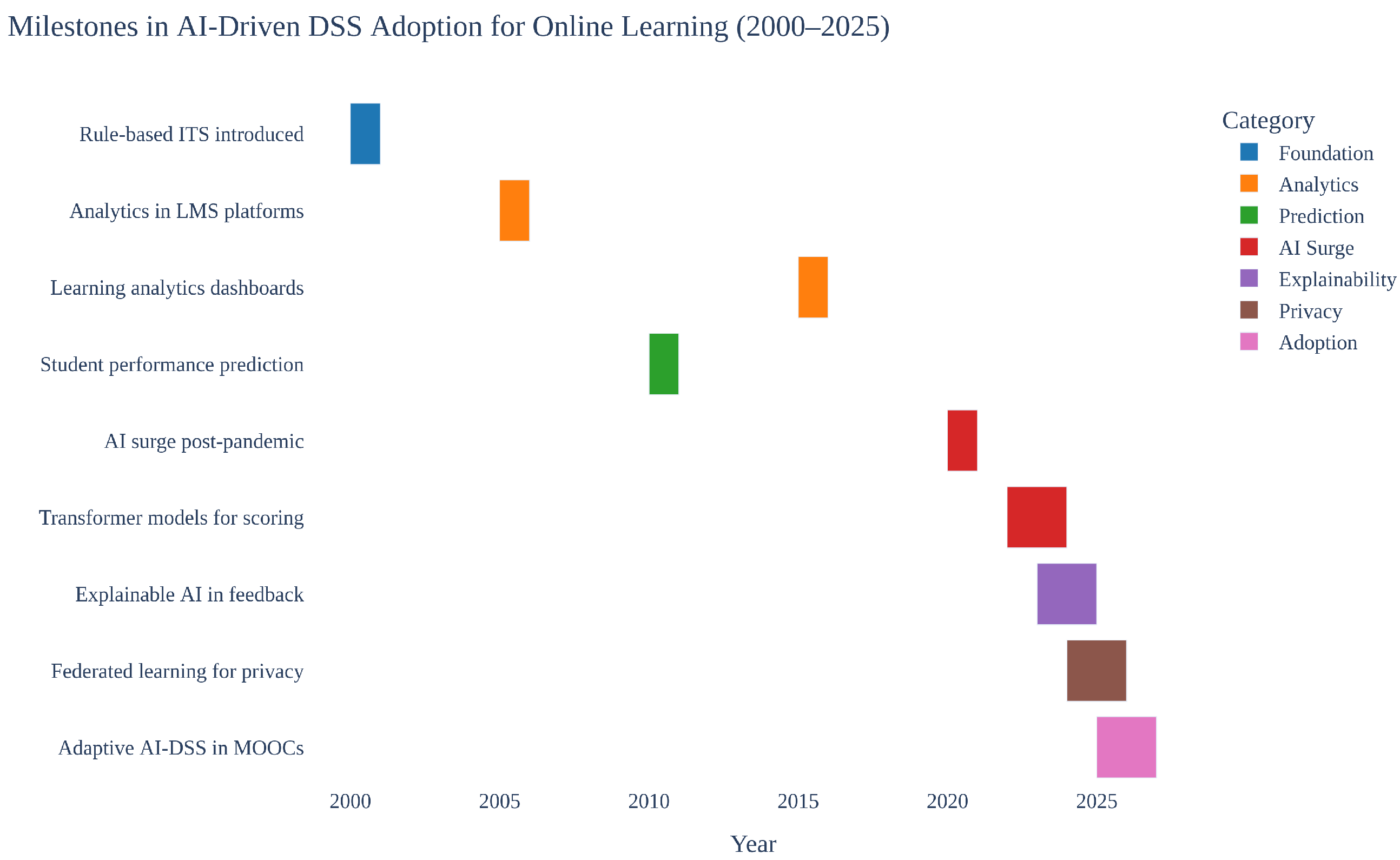

Figure 2.

Timeline of AI-driven decision support system (DSS) adoption in online learning (2000–2025), highlighting milestones such as the introduction of rule-based systems, the post-2020 AI surge, and the rise of adaptive learning platforms.

The evolution of DSSs in education mirrors these advancements. Initially, DSSs were rule-based, supporting administrative tasks such as course scheduling and enrollment management [21]. The integration of AI has expanded their capabilities to include predictive analytics, personalized feedback, and automated grading, driven by large-scale learner data [22]. This shift reflects a move toward data-driven, learner-centric education, in which AI-DSSs use behavioral, performance, and demographic data to optimize outcomes. Table 3 summarizes the evolution of platform and DSS features, highlighting the transition from rigid, standardized systems to dynamic, adaptive frameworks.

Table 3.

Evolution of online learning platforms and DSS features (2000–2025).

2.2. AI Techniques in Decision Support Systems

The effectiveness of AI-driven DSSs in online learning relies on a suite of computational techniques tailored to specific educational challenges. ML is foundational: supervised learning models, such as random forests and support vector machines, are widely used to predict student performance from historical data such as quiz scores, engagement metrics, and course interactions [23,24]. Unsupervised learning, including K-means clustering, identifies learner archetypes to enable targeted interventions, while reinforcement learning (RL) optimizes learning paths by adapting content delivery in real time based on learner feedback [25,26]. RL has shown particular promise in adaptive systems, where algorithms such as Q-learning model learning as a Markov decision process that rewards effective paths and penalizes suboptimal ones, yielding 12–18% improvements in retention and performance in empirical studies [27,28]. However, existing RL applications have notable limitations. RL often suffers from sample inefficiency, requiring thousands of learner interactions to converge on optimal policies, which can be impractical in real-world educational settings with limited data [29]. Reward design poses a further challenge: poorly defined rewards may induce unintended behaviors, such as overemphasizing short-term quiz scores at the expense of deep understanding, resulting in unstable training and up to 20–30% variability in outcomes across diverse cohorts [30]. These issues motivate hybrid approaches that combine RL with supervised ML for greater stability, as noted in recent implementations.

Natural language processing (NLP) is critical for automating assessments and enhancing learner interactions. Transformer-based models, such as BERT, enable automated essay scoring with correlations to human grading exceeding 0.85, reducing subjectivity and grading time [31]. Emerging extensions of NLP include large language models (LLMs), such as GPT variants, which generate personalized feedback or content, achieving up to 90% consistency in discussion analysis and curiosity measurement [32,33]. Yet LLM applications in education face significant limitations. Hallucinations (fabricated or inaccurate outputs) occur in 15–25% of responses, undermining reliability in high-stakes assessments [34]. Additionally, biases inherited from training data can amplify inequities, such as undervaluing text written by non-native speakers, with fairness metrics showing disparate impacts of 0.20–0.30 in multicultural datasets. Computational demands further restrict deployment in resource-limited environments, often requiring specialized hardware that increases costs by 40–50% compared with traditional NLP. Addressing these limitations through fine-tuning and bias audits is essential for broader adoption [35].
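To make the Markov decision process framing of adaptive learning paths (the Q-learning approach discussed earlier in this subsection) concrete, the following is a minimal tabular Q-learning sketch. The environment is entirely hypothetical and chosen only for illustration: mastery levels 0 to 4, two actions (review or advance), deterministic transitions in which advancing before level 2 sends the learner back to the start, a -0.1 cost per step, and +1 on reaching the learning objective. None of these values come from the cited studies.

```python
import random

# Hypothetical toy MDP (illustrative assumption, not from any cited study):
# state = mastery level 0..4, where 4 means the learning objective is met.
GOAL = 4
REVIEW, ADVANCE = 0, 1
ACTIONS = (REVIEW, ADVANCE)


def step(state, action):
    """Assumed deterministic learner dynamics; returns (next_state, reward, done)."""
    if action == ADVANCE:
        # Advancing pays off only once the learner is "ready" (level >= 2);
        # otherwise the learner falls back to the start (frustration/drop-off).
        next_state = min(state + 2, GOAL) if state >= 2 else 0
    else:
        next_state = state + 1  # reviewing makes slow, steady progress
    reward = 1.0 if next_state == GOAL else -0.1  # step cost, bonus at the goal
    return next_state, reward, next_state == GOAL


def q_learning(episodes=2000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(GOAL + 1)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap episode length
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])  # exploit
            next_state, reward, done = step(state, action)
            # Q-learning target: reward plus discounted best next-state value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q = q_learning()
# Greedy action for each non-goal state (0 = review, 1 = advance).
policy = [max(ACTIONS, key=lambda s_a: q[s][s_a]) for s in range(GOAL)]
print(policy)
```

Under these assumptions the learned greedy policy reviews at levels 0 and 1 and advances from level 2, deferring harder material until the learner is ready. The per-step cost is a small example of the reward-design choices that, as noted above, can distort behavior if chosen poorly.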

NLP-powered chatbots, deployed on platforms like edX, provide real-time student support, addressing queries with 90% accuracy in some implementations [36]. Knowledge-based systems, rooted in expert system paradigms, encode pedagogical rules to recommend tailored learning resources, achieving high precision in curriculum alignment [37,38].
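The rule-based recommendation performed by knowledge-based systems can be illustrated with a small sketch. The topics, prerequisite graph, 0.7 mastery threshold, and resource formats below are hypothetical placeholders rather than rules from any cited system; the point is that each recommendation carries a human-readable reason, which is the transparency such systems offer.

```python
from dataclasses import dataclass, field


@dataclass
class Learner:
    mastery: dict = field(default_factory=dict)  # topic -> mastery score in [0, 1]
    prefers_video: bool = False


# Hypothetical knowledge base: each topic lists its prerequisite topics.
PREREQUISITES = {
    "fractions": [],
    "ratios": ["fractions"],
    "linear_equations": ["ratios"],
}
MASTERY_THRESHOLD = 0.7  # assumed cut-off for "mastered"


def recommend(learner):
    """Apply simple pedagogical rules and return (topic, format, reason) tuples."""
    recommendations = []
    for topic, prereqs in PREREQUISITES.items():
        score = learner.mastery.get(topic, 0.0)
        # Rule 1: do not recommend topics the learner has already mastered.
        if score >= MASTERY_THRESHOLD:
            continue
        # Rule 2: recommend a topic only once all of its prerequisites are mastered.
        if any(learner.mastery.get(p, 0.0) < MASTERY_THRESHOLD for p in prereqs):
            continue
        # Rule 3: match the resource format to the learner's stated preference.
        fmt = "video" if learner.prefers_video else "reading"
        reason = f"prerequisites mastered; {topic} mastery {score:.2f} < {MASTERY_THRESHOLD}"
        recommendations.append((topic, fmt, reason))
    return recommendations


print(recommend(Learner(mastery={"fractions": 0.9, "ratios": 0.4})))
```

Here a learner who has mastered fractions but not ratios is directed to ratios, while linear equations stay locked until the ratios prerequisite is met.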

Deep learning, particularly convolutional neural networks (CNNs) and recurrent neural networks (RNNs), has advanced adaptive assessment systems. These models analyze multimodal data—text, video interactions, and behavioral patterns—to assess learner proficiency with precision rates up to 92% [39]. Despite their accuracy, deep learning models are computationally intensive, posing challenges for resource-constrained environments. Collectively, these AI techniques enable DSSs to deliver scalable, accurate, and personalized educational experiences, as summarized in Table 4.

Table 4.

Comparison of AI techniques in online learning decision support systems.

2.3. Existing AI-DSS Applications

AI-driven decision support systems have been widely adopted across online learning platforms, demonstrating significant improvements in personalization, scalability, and feedback delivery. This subsection evaluates key applications from 2020 to 2025, drawing on case studies, empirical research, and comparative analyses, as summarized in Table 5 and Table 6.

Table 5.

Part I—Summary of research papers on AI in online learning and assessment (2020–2025).

Table 6.

Part II—Summary of research papers on AI in online learning and assessment (2020–2025).

Coursera employs machine learning to recommend courses based on learner profiles, achieving up to 20% improvements in course completion rates [20]. edX leverages NLP for automated grading of open-ended responses, enabling scalable assessment for MOOCs with thousands of learners, with grading time reduced by 70% in some cases [31,64]. Institutional LMSs, such as those at Stanford and MIT, integrate knowledge-based systems with ML to deliver adaptive assessments aligned with specific curricula, enhancing learner satisfaction by 15% [65]. These applications align with the strengths and limitations outlined in Table 4, particularly ML’s scalability and NLP’s efficiency.

A comparative analysis of the 29 studies summarized in Table 5 and Table 6 highlights key trends. Personalization, driven by ML and knowledge-based systems, improves retention by up to 20% [62,66]. For example, Chen et al. [40] used a random forest model to predict student dropout with 88% accuracy, validated on more than 500 students. Scalability is evident in NLP systems, with Ludwig et al. [31] reporting a 0.85 correlation with human grading using BERT. Real-time feedback enabled by deep learning achieves 92% precision in multimodal assessments [39]. However, challenges remain, including high computational costs and ethical concerns such as data privacy and algorithmic bias [30,67].

Figure 2 illustrates the adoption timeline of AI-DSS, from rule-based systems in the 2000s to the post-2020 AI surge, driven by the pandemic and advancements in ML and NLP. Table 5 and Table 6 provide a comprehensive overview of recent research, covering methods like supervised ML, reinforcement learning, and systematic reviews, with performance metrics ranging from 80% to 92% accuracy. These findings underscore the maturity of AI-DSSs while identifying gaps in computational efficiency, bias mitigation, and ethical considerations, which the proposed framework in Section 3.1 aims to address.

3. Design Considerations for AI-Driven Decision Support Systems

The design of AI-driven DSSs for online learning and assessment requires a careful synthesis of technical robustness, pedagogical effectiveness, and user accessibility to address the demands of scalable, personalized education. As online learning platforms serve millions of learners globally, the architecture, data requirements, model selection, and user-centric features of AI-DSSs must be meticulously engineered to ensure scalability, accuracy, and inclusivity. This section explores these critical design considerations, synthesizing advancements from 2020 to 2025 to propose a comprehensive framework for developing effective AI-DSSs in educational contexts.

3.1. Proposed System Architecture

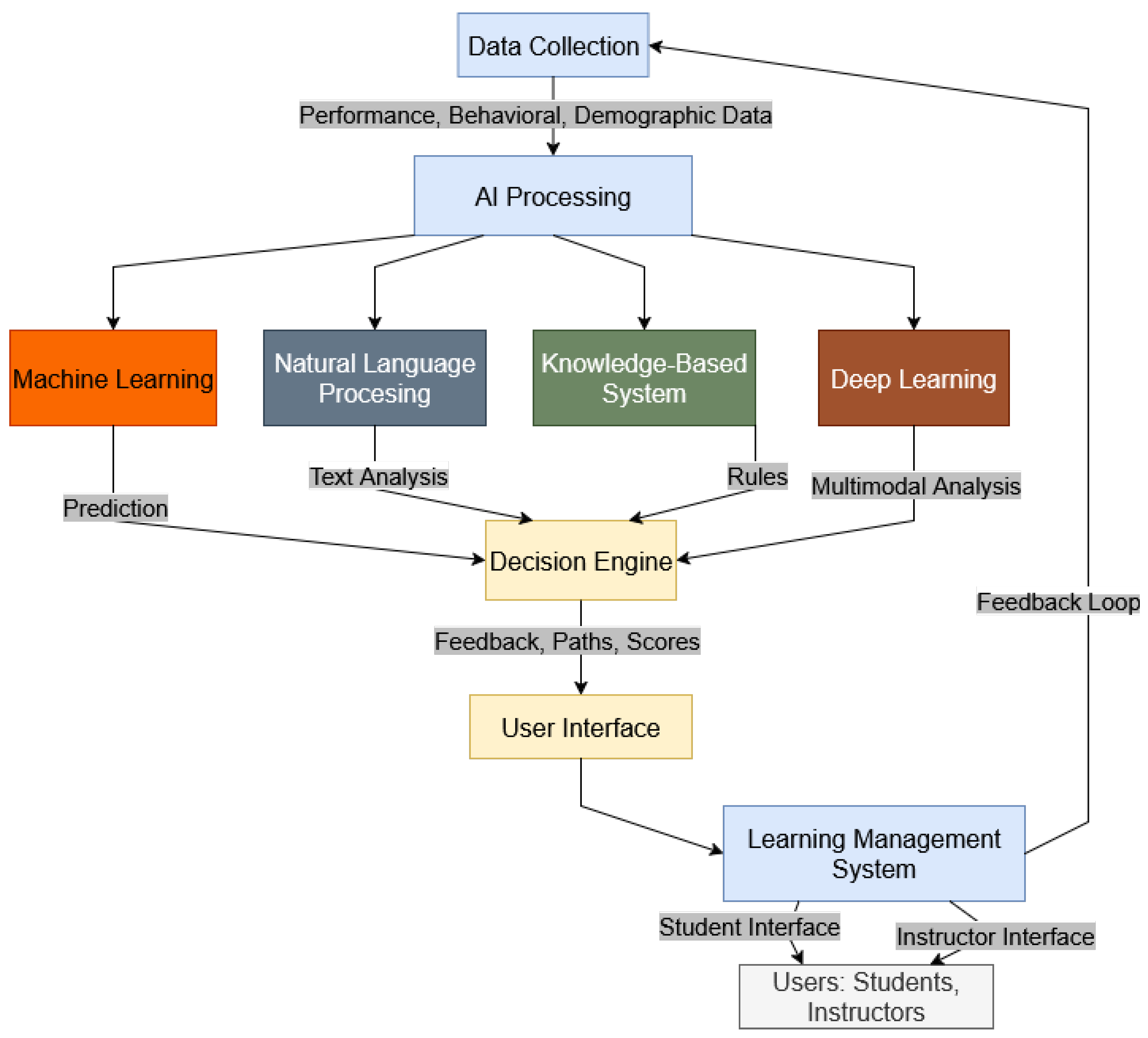

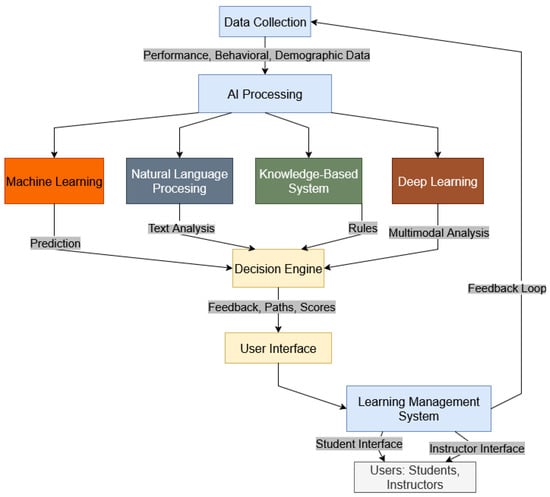

Architecture is central to any AI-driven DSS and largely determines whether it can make online education scalable, personalized, and equitable. Building on studies published between 2020 and 2025, this review proposes a modular AI-DSS design built around four core components: data collection, AI processing, a decision engine, and a user interface. As depicted in Figure 3, the design integrates with existing learning management systems (LMSs) to enable personalized learning paths, automated assessment, and real-time feedback, addressing the scalability and personalization shortcomings identified in earlier research [5,68].

Figure 3.

Proposed AI-driven decision support system architecture for online learning and assessment, illustrating data inputs (student performance, behavioral, demographic, course metadata), AI processing modules (ML, NLP, knowledge-based systems, deep learning), decision engine (producing personalized learning paths, automated grades, feedback), user interfaces (student and instructor dashboards), and LMS integration with a feedback loop for continuous refinement.

The data collection module aggregates diverse inputs to enable intelligent decision-making. These include student performance data (e.g., quiz scores, assignment grades), behavioral data (e.g., time on task, navigation patterns), demographic data (e.g., age, language proficiency), and course metadata (e.g., learning objectives, content outlines). These inputs, sourced directly from LMS platforms like Moodle or Canvas, provide a real-time snapshot of learner activity and context. To ensure data quality and compliance with regulations like GDPR and FERPA, the module incorporates preprocessing steps such as data cleaning, normalization, and anonymization [69,70]. Figure 3 depicts these inputs as distinct streams, enabling the system to handle heterogeneous data for large-scale platforms like MOOCs.
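The preprocessing steps named above (cleaning, normalization, and de-identification) can be sketched in plain Python. The field names (`student_id`, `quiz_score`, `time_on_task`) and the key are hypothetical. Note that keyed hashing with HMAC-SHA256 is pseudonymization rather than anonymization: anyone holding the key can re-link tokens, and under GDPR pseudonymized data remain personal data, so this step complements rather than replaces the encryption and access controls the module requires.

```python
import hashlib
import hmac

# Hypothetical key; in practice it would come from a secrets manager, never from source code.
PSEUDONYM_KEY = b"example-key-do-not-use-in-production"


def pseudonymize(student_id: str) -> str:
    """Keyed hash (HMAC-SHA256) so the same learner always maps to the same token."""
    return hmac.new(PSEUDONYM_KEY, student_id.encode("utf-8"), hashlib.sha256).hexdigest()


def preprocess(records):
    """Clean, normalize, and pseudonymize raw LMS records (assumed field names)."""
    # Cleaning: drop records whose quiz score is missing or outside 0-100.
    clean = [r for r in records
             if r.get("quiz_score") is not None and 0 <= r["quiz_score"] <= 100]
    if not clean:
        return []
    # Normalization: min-max scale time on task to [0, 1].
    times = [r["time_on_task"] for r in clean]
    lo, hi = min(times), max(times)
    span = (hi - lo) or 1.0  # avoid division by zero when all values are equal
    # Data minimization: keep only the fields the models need (names etc. are dropped).
    return [
        {
            "learner": pseudonymize(r["student_id"]),
            "quiz_score": r["quiz_score"] / 100.0,
            "time_on_task": (r["time_on_task"] - lo) / span,
        }
        for r in clean
    ]
```

Because the same identifier always yields the same token, records from different LMS exports can still be joined per learner without exposing the raw identifier to downstream models.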

The AI processing module transforms raw inputs into actionable insights using a suite of techniques. ML algorithms, such as random forest and gradient boosting, predict learner outcomes with accuracies of 85–90% [71,72]. Natural language processing (NLP) models, including Transformer-based architectures like BERT, automate grading of written assignments, achieving correlations of 0.85–0.90 with human evaluators [31]. Knowledge-based systems apply rule-based logic to recommend curriculum adjustments, offering transparency critical for educational settings [38]. Deep learning, leveraging convolutional and recurrent neural networks, processes multimodal data (e.g., text, video interactions) for innovative assessments [39]. These techniques, shown as parallel processing streams in Figure 3, ensure robust and versatile analysis.
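Agreement figures such as the 0.85–0.90 correlations above are typically obtained by scoring the same essays with both the model and human raters and correlating the two score lists. The sketch below computes Pearson's r, one common choice; automated essay scoring work also frequently reports quadratic weighted kappa, which this sketch does not compute. The function name is illustrative, and the resulting value says nothing about any particular system.

```python
import math


def pearson_r(machine, human):
    """Pearson correlation between automated and human scores for the same essays."""
    if len(machine) != len(human) or len(machine) < 2:
        raise ValueError("need two equal-length score lists with at least 2 items")
    n = len(machine)
    mean_m, mean_h = sum(machine) / n, sum(human) / n
    cov = sum((m - mean_m) * (h - mean_h) for m, h in zip(machine, human))
    sd_m = math.sqrt(sum((m - mean_m) ** 2 for m in machine))
    sd_h = math.sqrt(sum((h - mean_h) ** 2 for h in human))
    if sd_m == 0 or sd_h == 0:
        raise ValueError("scores with zero variance have no defined correlation")
    return cov / (sd_m * sd_h)


# Hypothetical scores for five essays on a 1-6 rubric.
print(round(pearson_r([3, 4, 2, 5, 4], [3, 5, 2, 5, 3]), 3))
```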

The decision engine synthesizes AI outputs to generate actionable recommendations, such as personalized learning paths, automated grades, and real-time feedback. Reinforcement learning optimizes these decisions by treating learner interactions as a dynamic sequence, improving outcomes by up to 12% [26]. To address concerns about bias and transparency, the engine incorporates explainability mechanisms [73]. The user interface delivers outputs through intuitive dashboards integrated into LMS platforms via standard APIs like Learning Tools Interoperability (LTI) [65]. Students access progress tracking and personalized recommendations, while instructors receive analytics and alerts for at-risk learners. A feedback loop, depicted in Figure 3, channels user interactions back to the data collection module, enabling continuous system improvement.

To highlight its distinctiveness, Table 7 contrasts the proposed architecture with established systems. Its innovations include federated learning for decentralized, privacy-preserving data handling (reducing breach risk relative to the centralized approach of platforms such as Coursera) and a hybrid RL–ML decision engine for real-time path optimization (extending edX’s static NLP-based grading, with 12–18% retention improvements reported in empirical benchmarks [26,62]). Together, these position the framework as a novel synthesis that advances scalability and equity.

Table 7.

Comparison of the proposed AI-DSS architecture with existing systems.

Implementation and Validation Roadmap

Bringing the proposed AI-driven decision support system (DSS) from concept to real-world application demands a carefully planned, step-by-step strategy that balances technical practicality with educational value. Insights from recent studies, such as those showing 12–18% performance improvements with adaptive reinforcement learning systems, inform a practical roadmap starting with prototype development. Leveraging open-source tools like TensorFlow for machine learning and natural language processing components, alongside frameworks like Flask or Django for seamless integration with learning management systems (via standards like Learning Tools Interoperability), the initial phase focuses on building a functional prototype. This prototype emphasizes essential tasks, such as preparing data to meet privacy requirements (e.g., anonymizing information to comply with GDPR) and training models using synthetic or publicly available datasets to mimic student interactions.

The next step involves testing the prototype in a controlled environment, such as a university course with 200–500 students, to assess its real-world performance. Evaluation during this phase combines measurable outcomes with user feedback. For instance, the system should achieve at least 85% accuracy in predicting student outcomes, validated through k-fold cross-validation, and maintain response times under 1.5 s, tested using tools like JMeter to simulate high user loads. Equally important are qualitative measures, such as student and instructor surveys targeting over 80% approval rates (using metrics like Net Promoter Scores) and feedback on usability from educators. To address challenges like reinforcement learning’s reliance on extensive data, hybrid approaches—combining human oversight with automated processes—can enhance reliability during early deployments.
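The pilot acceptance criteria above (at least 85% accuracy under k-fold cross-validation and responses under 1.5 s) can be encoded as an automated check. In this minimal sketch the real predictor is replaced by a hypothetical single-feature threshold model trained on synthetic engagement data, "under 1.5 s" is interpreted as a 95th-percentile latency (an assumption), and the latencies are random numbers standing in for load-test results such as those a JMeter run would produce.

```python
import random
import statistics


def k_fold_indices(n, k, seed=0):
    """Yield (train, test) index lists for k roughly equal, shuffled folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train, folds[i]


def fit_stump(xs, ys):
    """Hypothetical stand-in model: flag at-risk (1) when engagement x < threshold."""
    return max(sorted(set(xs)),
               key=lambda t: sum((x < t) == (y == 1) for x, y in zip(xs, ys)))


def cross_val_accuracy(xs, ys, k=5):
    scores = []
    for train, test in k_fold_indices(len(xs), k):
        t = fit_stump([xs[i] for i in train], [ys[i] for i in train])
        correct = sum((xs[i] < t) == (ys[i] == 1) for i in test)
        scores.append(correct / len(test))
    return statistics.mean(scores)


def meets_targets(cv_accuracy, latencies_s, min_accuracy=0.85, max_p95_s=1.5):
    """Pilot acceptance check: mean CV accuracy and 95th-percentile latency."""
    p95 = statistics.quantiles(latencies_s, n=20)[-1]
    return cv_accuracy >= min_accuracy and p95 <= max_p95_s


# Synthetic pilot data (assumption): weekly engagement hours, at-risk if < 5 h,
# with about 5% of labels flipped to mimic noise.
rng = random.Random(42)
xs = [rng.uniform(0, 10) for _ in range(300)]
ys = [int((x < 5) != (rng.random() < 0.05)) for x in xs]
acc = cross_val_accuracy(xs, ys)
latencies = [rng.uniform(0.2, 1.2) for _ in range(1000)]  # simulated load-test results
print(round(acc, 3), meets_targets(acc, latencies))
```

Keeping the check as code rather than a manual review makes it straightforward to rerun after each iteration of the refinement phase.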

Subsequent phases focus on refining the system through iterative testing, comparing the AI-DSS against traditional learning management systems in A/B trials to achieve measurable gains, such as 15–20% improvements in student retention. Broader testing across multiple institutions should incorporate diverse datasets, including those from Asian and European educational contexts, to minimize bias and ensure cultural relevance. Long-term evaluation over 6–12 months will track sustained benefits, such as enhanced academic outcomes. To overcome computational barriers, cloud platforms like AWS or Azure can provide scalable processing power, reducing reliance on local infrastructure. Throughout, ethical considerations remain central, with regular fairness audits (e.g., keeping disparate impact ratios at or above 0.8, in line with the four-fifths rule) to ensure equitable and trustworthy application across varied online learning settings.
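A fairness audit of the kind described above can start from the disparate impact ratio: the favorable-outcome rate of each unprivileged group divided by that of the privileged group. Under the four-fifths rule, a convention that originated in US employment-selection guidance, a ratio below 0.8 signals potential adverse impact. The sketch below is illustrative; the group labels, the choice of privileged group, and what counts as a favorable outcome (here, being recommended for an advanced track) are assumptions the audit designer must set.

```python
from collections import defaultdict


def selection_rates(outcomes, groups):
    """Favorable-outcome rate per group (outcome 1 = e.g. recommended for an advanced track)."""
    totals, positives = defaultdict(int), defaultdict(int)
    for y, g in zip(outcomes, groups):
        totals[g] += 1
        positives[g] += y
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact(outcomes, groups, privileged):
    """Lowest ratio of an unprivileged group's rate to the privileged group's rate."""
    rates = selection_rates(outcomes, groups)
    ref = rates[privileged]
    if ref == 0:
        raise ValueError("privileged group has no favorable outcomes")
    return min(rate / ref for g, rate in rates.items() if g != privileged)


def passes_four_fifths(outcomes, groups, privileged, threshold=0.8):
    return disparate_impact(outcomes, groups, privileged) >= threshold


# Hypothetical audit sample: group A is treated as the privileged reference group.
outcomes = [1, 1, 1, 1, 0, 1, 1, 1, 0, 0]
groups = ["A"] * 5 + ["B"] * 5
print(disparate_impact(outcomes, groups, "A"), passes_four_fifths(outcomes, groups, "A"))
```

In this sample, group B's rate (0.6) is three quarters of group A's (0.8), so the audit flags the recommendations for review.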

3.2. Data Requirements and Challenges

The efficacy of the proposed AI-DSS relies on diverse, high-quality data to drive predictive and personalized outputs. Table 8 summarizes key data types—student performance, behavioral, and demographic—and their roles in the system. Performance data (e.g., quiz scores, exam results) enable predictive analytics, identifying at-risk learners with 85–90% accuracy [71,74]. Behavioral data (e.g., time on task, interaction frequency) inform engagement analysis and content adaptation [75,76]. Demographic data (e.g., age, language proficiency) ensure inclusivity by tailoring interventions to diverse learner profiles, such as multilingual feedback for non-native speakers [77,78].

Table 8.

Data types and their roles in the proposed AI-driven DSS.

Data-related challenges include privacy, quality, and scalability. Compliance with GDPR and FERPA requires robust anonymization and encryption protocols, integrated into the proposed model’s data pipeline [69,70]. Data quality issues, such as noise or bias, can undermine model performance and lead to inequitable recommendations [73,79]. The proposed model mitigates these issues through preprocessing techniques such as outlier detection and bias correction. Scalability challenges arise from the volume of data needed to train robust AI models, particularly for resource-constrained institutions. Federated learning, which trains models where the data reside so that raw learner records never leave the institution, offers a viable solution, achieving accuracy comparable to that of centralized models [80,81]. The proposed AI-DSS adopts federated learning to balance scalability and privacy, in line with recent advancements.
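The core of federated learning can be sketched with federated averaging (FedAvg): each institution trains locally starting from the current global model, sends back only its parameters and sample count, and the server averages the parameters weighted by sample count. The linear model, the three synthetic "institutions," and all hyperparameters below are assumptions chosen to keep the sketch small. Shared model updates can still leak information, so production deployments typically add secure aggregation or differential privacy on top.

```python
import random


def local_update(weights, data, lr=0.1, epochs=5):
    """Run a few epochs of full-batch gradient descent on one institution's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error for the model y_hat = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b), n  # only parameters and a sample count leave the institution


def fed_avg(updates):
    """Server step: average client parameters weighted by client sample counts."""
    total = sum(n for _, n in updates)
    w = sum(params[0] * n for params, n in updates) / total
    b = sum(params[1] * n for params, n in updates) / total
    return w, b


def make_client(rng, n):
    """Synthetic local data (assumption): y = 2x + 1 plus small noise."""
    xs = [rng.uniform(0, 1) for _ in range(n)]
    return [(x, 2 * x + 1 + rng.uniform(-0.05, 0.05)) for x in xs]


rng = random.Random(0)
clients = [make_client(rng, n) for n in (40, 60, 100)]  # three hypothetical institutions
global_weights = (0.0, 0.0)
for _ in range(200):  # communication rounds
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = fed_avg(updates)
print(global_weights)  # should approach the underlying slope 2 and intercept 1
```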

3.3. Model Selection Strategies

Selecting appropriate AI models is critical for effective AI-driven DSSs in online learning, balancing accuracy, interpretability, and computational efficiency. Table 9 compares key AI models and their applications, strengths, and limitations. Based on literature from 2020 to 2025, this subsection outlines strategies for model selection in tasks like prediction, assessment, and recommendation. Supervised ML models, such as random forest and gradient boosting, excel in predictive tasks, achieving 85–90% accuracy in student performance forecasting [72,82]. NLP models, like BERT or RoBERTa, are optimal for text-based tasks, such as automated essay scoring, with correlations of 0.85–0.90 to human graders [31,83]. Knowledge-based systems provide interpretable recommendations for curriculum sequencing but lack adaptability to dynamic contexts [84]. Deep learning models, including convolutional and recurrent neural networks, support multimodal assessments but require significant computational resources [39].

Table 9.

Comparison of AI models applicable for decision support systems in online learning.

3.4. User-Centric Design Principles

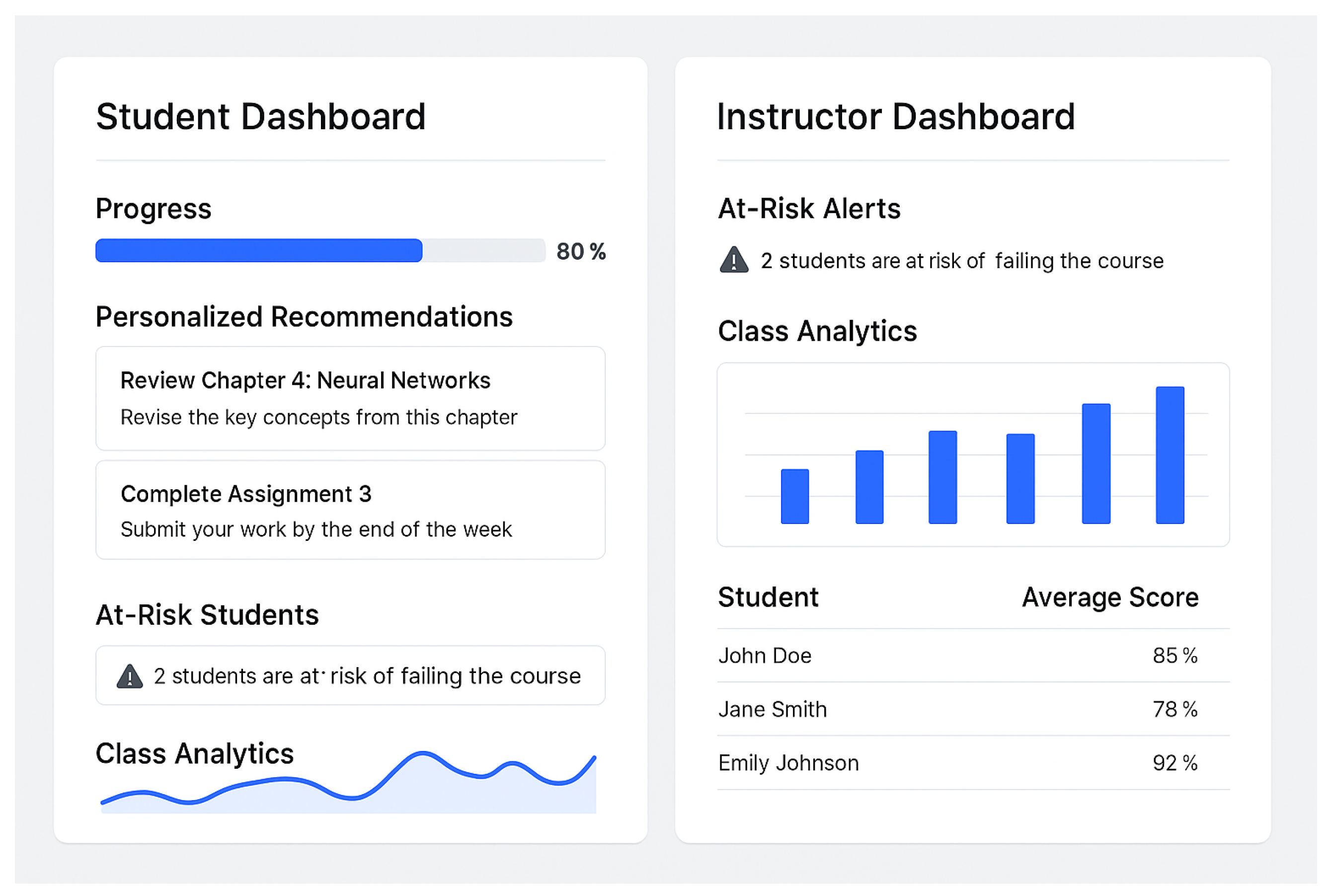

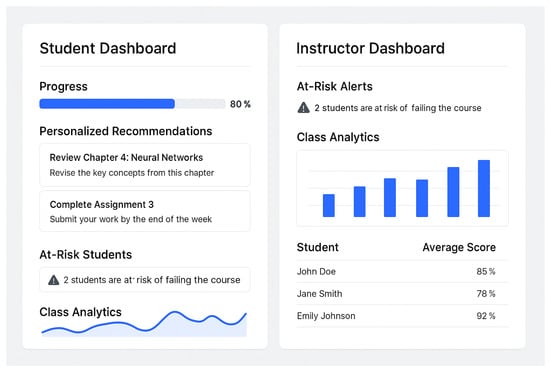

The proposed AI-driven decision support system (AI-DSS) prioritizes user-centric design to ensure accessibility, inclusivity, and usability for learners, instructors, and administrators. Figure 4 illustrates the user interface design, showcasing student and instructor dashboards. Drawing on literature from 2020 to 2025, this subsection outlines key design principles. For learners, accessibility features such as screen reader compatibility, adjustable text sizes, and multilingual interfaces address diverse needs, including visual impairments and non-native language proficiency [77,85]. To mitigate biases in AI recommendations, the system employs diverse training datasets and bias-aware algorithms [73].

Figure 4.

Mock-up of user interfaces for the proposed AI-DSS, showcasing student dashboard (progress tracking, personalized recommendations) and instructor dashboard (at-risk alerts, class analytics) integrated within an LMS.

Instructor dashboards provide real-time analytics, including at-risk learner alerts and performance trends, enabling timely interventions [65]. Administrator interfaces offer system-wide metrics, such as engagement and resource utilization, to support strategic decisions. The design emphasizes simplicity to prevent information overload, using co-design processes to align interfaces with stakeholder needs [85]. Integration with learning management systems (LMSs) via Learning Tools Interoperability (LTI) ensures seamless adoption [65]. By prioritizing user-centric design, the AI-DSS fosters equitable and engaging educational experiences, addressing gaps in accessibility and stakeholder engagement.

As shown in Table 10, trade-offs are inherent in AI-DSS design. For instance, the precision of deep learning models typically comes at the cost of higher computational demands, which can delay responses in resource-limited settings; conversely, simpler ML models deliver faster feedback at lower accuracy. Designs that prioritize real-time responsiveness must therefore account for resource constraints, for example through cloud bursting, in which peak workloads overflow to public cloud capacity.

Table 10.

Summary of common evaluation metrics for AI-driven decision support systems in online learning.

4. Performance Metrics and Evaluation

The success of AI-driven decision support systems in online education and assessment depends on rigorous evaluation approaches that measure their technical performance, their effects on users, and their scalability. In recent years, the field has made notable progress in defining and applying key performance indicators, developing comprehensive evaluation frameworks, and benchmarking AI tools against conventional methods. This section synthesizes these developments, examining the metrics, evaluation techniques, and comparative benchmarks that inform the design and deployment of AI-DSSs in teaching and learning environments.

4.1. Metrics for AI-DSSs

To gauge the effectiveness of AI-driven decision support systems (DSSs) in online education, a balanced evaluation approach is essential, blending technical performance measures with user-focused outcomes. For tools designed to predict student success or recommend tailored learning paths, key metrics include accuracy, precision, and recall. Accuracy is the proportion of all predictions that are correct, indicating how reliably the system forecasts outcomes such as course completion. Precision is the proportion of positive predictions that are correct (for example, the share of students flagged as at risk who actually struggle), while recall is the proportion of actual positive cases the system detects, so that high recall minimizes missed opportunities for intervention. These metrics are critical for fostering confidence in the system’s recommendations, particularly in high-stakes assessment contexts where inaccurate predictions could misguide support strategies.
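The three definitions can be made precise by counting true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN): accuracy = (TP + TN) / total, precision = TP / (TP + FP), and recall = TP / (TP + FN). A minimal sketch follows, with 1 as the positive class (e.g., "at risk"); returning 0.0 when a denominator is zero is a convention chosen here, not a universal standard.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, and recall for binary labels (1 = positive class, e.g. at risk)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        # Of the learners the system flagged, how many were truly positive?
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Of the truly positive learners, how many did the system flag?
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


# Hypothetical labels for five learners: actual outcome vs. model prediction.
print(classification_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
```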

Beyond predictive capabilities, metrics that reflect user experience and engagement provide deeper insights into the system’s impact on learners and educators. User satisfaction, often assessed through surveys exploring perceived usefulness and ease of use, draws on frameworks like the Technology Acceptance Model [86]. Engagement is measured through behaviors such as time spent interacting with AI-recommended resources or the completion rate of personalized tasks, offering evidence of the system’s ability to motivate and sustain learner involvement [87]. Equally vital are scalability and responsiveness, as AI-DSSs must handle large learner cohorts without performance degradation. Scalability assesses the system’s capacity to process growing data volumes while maintaining reliability, and response time measures the speed of delivering feedback, crucial for real-time applications [88]. Table 11 summarizes these metrics and their definitions, providing a clear overview for evaluating AI-DSS performance.
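Response time is easiest to reason about when logged latencies are summarized with both central and tail statistics. The sketch below is one possible summary, assuming latencies are recorded in milliseconds per feedback request; reporting a 95th percentile alongside the mean is our illustrative choice rather than a requirement from the cited studies, and `latency_summary` and the sample values are hypothetical.

```python
import statistics


def latency_summary(latencies_ms):
    """Summarize feedback response times, in milliseconds, for one load test."""
    if not latencies_ms:
        raise ValueError("need at least one latency measurement")
    ordered = sorted(latencies_ms)
    n = len(ordered)
    # Nearest-rank 95th percentile, computed with integer arithmetic:
    # the smallest value with at least 95% of observations at or below it.
    rank = (95 * n + 99) // 100
    return {
        "mean_ms": statistics.fmean(ordered),
        "median_ms": statistics.median(ordered),
        "p95_ms": ordered[rank - 1],
    }


# Hypothetical latencies for ten feedback requests, including one slow outlier.
sample = [120, 95, 130, 110, 400, 105, 98, 115, 125, 102]
print(latency_summary(sample))
```

Here the single outlier barely moves the median (112.5 ms) but sets the 95th percentile (400 ms), which is why tail latency is a useful complement to averages when judging real-time feedback under load.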

Table 11.

Summary of common evaluation metrics for AI-driven decision support systems in online learning.

4.2. Evaluation Frameworks

Evaluating the performance of AI-driven decision support systems in online education requires a thoughtful blend of numerical and experiential measures to capture their full impact. Quantitative approaches focus on robust performance metrics. For instance, k-fold cross-validation, which trains and tests a model on k different partitions of the data, checks whether models deliver consistent results across varied educational datasets and yields more reliable estimates of accuracy, precision, and recall [89]. Statistical methods, such as t-tests or ANOVA, allow direct comparisons between AI-DSSs and traditional systems, testing whether observed gains in predictive accuracy or processing speed are statistically significant [90]. For continuous outcomes like student grades, error measures such as mean absolute error quantify how far predictions deviate from actual values, guiding refinements to enhance reliability in real-world educational settings.
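The quantitative procedure described above can be sketched in plain Python. This is a minimal illustration, assuming the records are already shuffled and using a hypothetical mean-grade baseline in place of a real predictive model; `k_fold_indices` and `cross_validated_mae` are illustrative names, not a library API.

```python
import statistics


def k_fold_indices(n, k):
    """Split indices 0..n-1 into k contiguous folds of near-equal size.

    Assumes the records were shuffled beforehand; contiguous folds on
    ordered data (e.g., sorted by term) would bias the estimate.
    """
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def mean_absolute_error(actual, predicted):
    return statistics.fmean([abs(a - p) for a, p in zip(actual, predicted)])


def cross_validated_mae(grades, k):
    """k-fold MAE for a placeholder model that predicts the training-fold mean."""
    fold_errors = []
    for test_idx in k_fold_indices(len(grades), k):
        held_out_set = set(test_idx)
        train = [g for i, g in enumerate(grades) if i not in held_out_set]
        prediction = statistics.fmean(train)  # stand-in for a real model
        held_out = [grades[i] for i in test_idx]
        fold_errors.append(mean_absolute_error(held_out, [prediction] * len(held_out)))
    return statistics.fmean(fold_errors), fold_errors


# Hypothetical final grades for six students.
mean_mae, per_fold = cross_validated_mae([70, 80, 90, 60, 75, 85], k=3)
print(per_fold, round(mean_mae, 2))
```

Per-fold errors that vary widely, as they do in this toy example (5.0, 15.0, 5.0), signal that a single train/test split could give a misleading estimate. In a comparison study, the per-fold errors of an AI model and a baseline could then feed a paired t-test.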

Qualitative evaluations, on the other hand, focus on user perspectives through methods like surveys, interviews, or case studies. These approaches gather feedback from students and educators on aspects such as system usability, perceived equity, and educational impact [91]. Case studies, such as those examining AI-powered recommendation systems on platforms like Udemy or automated feedback tools in Canvas, provide contextualized insights into practical effectiveness. Such qualitative data are vital for identifying user-centered challenges, including interface usability issues or potential biases in AI outputs, which may not surface in quantitative analyses [92]. By combining these complementary approaches, evaluations achieve a balance of technical precision and practical relevance, ensuring AI-DSSs meet both performance and user needs.

4.3. Benchmarking

Benchmarking AI-DSSs against non-AI systems and across platforms provides a comparative perspective on their performance. Non-AI systems, such as traditional LMSs with manual grading, serve as baselines. Studies from 2020 to 2025 demonstrate that AI-DSSs outperform these systems in scalability and personalization. For example, NLP-based automated essay scoring achieves grading times up to 80% faster than human graders while maintaining comparable accuracy [93]. Similarly, ML-driven recommendation systems improve learner retention by 15–20% compared to static content delivery [76].

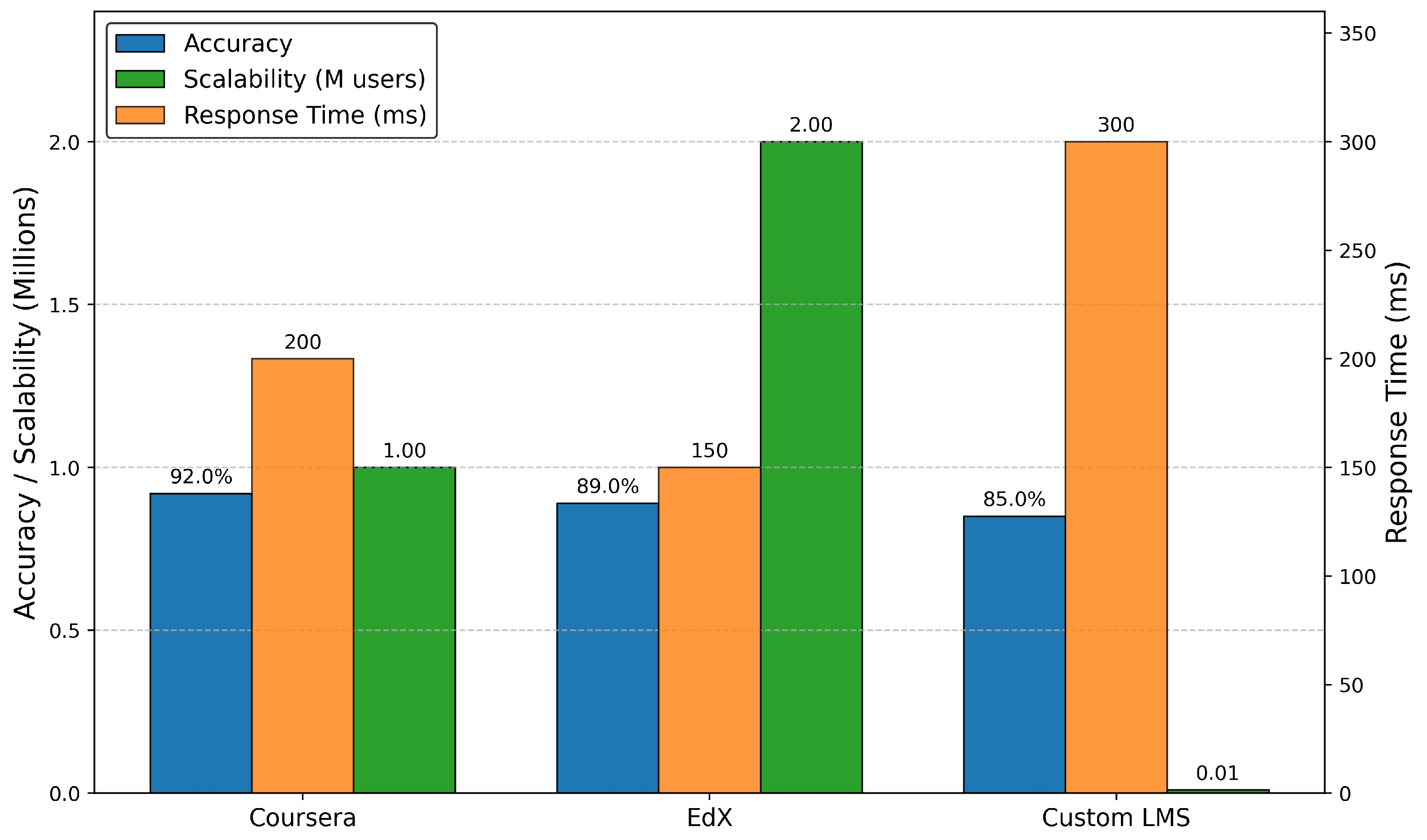

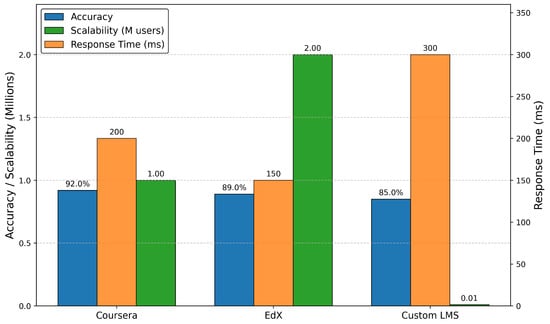

Cross-platform benchmarking reveals variations in AI-DSS performance. For example, Coursera’s ML models excel in personalization but require significant computational resources, whereas edX’s NLP systems prioritize scalability for large-scale assessments. Custom institutional systems often lag in scalability due to resource constraints but offer tailored solutions for specific curricula. Figure 5 compares key metrics across these implementations, highlighting trade-offs among accuracy, response time, and scalability.

Figure 5.

Bar chart comparing performance metrics (accuracy, response time, scalability) across AI-DSS implementations in online learning platforms.

5. Challenges and Limitations

The deployment of AI-based decision support systems in online learning and assessment systems holds transformative potential, yet significant challenges and limitations accompany it. Between 2020 and 2025, researchers have identified technical, ethical, and implementation barriers that impede the widespread adoption and effectiveness of these systems. This section examines these challenges, offering a critical analysis of their implications and potential mitigation strategies to inform the design of robust AI-DSSs for educational contexts.

5.1. Technical Challenges

Deploying AI-driven decision support systems in educational settings presents significant technical hurdles, particularly in ensuring model interpretability and addressing embedded biases. Interpretability is vital in education, where instructors, students, and administrators need clear explanations for AI-driven decisions, such as predictions of academic performance or personalized learning recommendations [94]. Complex neural networks, despite their predictive strength, often lack the transparency needed to build trust, limiting their adoption in high-stakes educational contexts. Recent efforts have explored more interpretable alternatives, such as decision trees or rule-based systems, though these often sacrifice accuracy for clarity [95].
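As an illustration of the rule-based alternative mentioned above, the sketch below flags at-risk learners with explicit, human-readable rules, so every decision comes with its reasons. The features, thresholds, and two-rule trigger are hypothetical choices for illustration, not values drawn from the cited studies.

```python
from dataclasses import dataclass


@dataclass
class LearnerSnapshot:
    # Hypothetical features; a real system would define its own schema.
    quiz_average: float  # 0-100
    logins_last_14_days: int
    missed_deadlines: int


# Each rule pairs a human-readable reason with a predicate (illustrative thresholds).
RULES = [
    ("quiz average below 50", lambda s: s.quiz_average < 50),
    ("fewer than 3 logins in the last 14 days", lambda s: s.logins_last_14_days < 3),
    ("2 or more missed deadlines", lambda s: s.missed_deadlines >= 2),
]


def assess_risk(snapshot, min_rules=2):
    """Flag a learner as at risk when at least `min_rules` rules fire.

    Returns the decision together with the reasons that triggered it, so an
    instructor can see exactly why the flag was raised.
    """
    reasons = [reason for reason, predicate in RULES if predicate(snapshot)]
    return len(reasons) >= min_rules, reasons


at_risk, why = assess_risk(LearnerSnapshot(quiz_average=42.0, logins_last_14_days=1, missed_deadlines=0))
print(at_risk, why)
# prints: True ['quiz average below 50', 'fewer than 3 logins in the last 14 days']
```

The trade-off noted in the text is visible here: such rules are transparent and easy to audit, but they cannot capture the subtle feature interactions that a neural network might learn.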

Equally critical is the challenge of algorithmic bias. When AI systems are trained on historical datasets, they risk perpetuating existing inequities, potentially favoring certain groups or marginalizing underrepresented students, which undermines fairness in education [96]. Additionally, scaling these systems to support large-scale digital learning platforms poses logistical challenges. Handling real-time data from thousands of users—such as student interactions or assessment results—demands substantial computational resources, often exceeding the infrastructure available at many institutions [97]. Addressing these barriers requires innovative approaches that balance predictive accuracy, interpretability, and scalability to unlock the full potential of AI-DSSs in transforming online education.

5.2. Ethical and Privacy Concerns

Incorporating AI-driven decision support systems into digital education environments introduces critical ethical and privacy dilemmas, especially in areas like data protection and the ramifications of automated judgments. Safeguarding data is essential, given that these platforms manage confidential details such as academic records and personal identifiers. Adhering to standards like Europe’s General Data Protection Regulation (GDPR) and the United States’ Family Educational Rights and Privacy Act (FERPA) is imperative, demanding strong measures for encryption and data de-identification [98]. Any lapses in security or improper handling could undermine confidence and subject educational organizations to substantial legal consequences.

Moreover, the ethical dimensions of AI-facilitated decisions add further complexity to their implementation. These systems might unintentionally prioritize operational efficiency over fairness, for example, by suggesting learning pathways that marginalize particular student groups or by applying skewed evaluation standards [96]. A case in point is natural language processing tools for essay grading, which, despite their speed, could undervalue innovative or unconventional submissions if built on limited training data. To mitigate such issues, it is vital to develop ethical guidelines that emphasize fairness, transparency, and accountability, ensuring that AI-DSSs promote educational equity rather than hinder it [83,99].

In practice, bias can be detected with metrics such as equalized odds (treating a disparity below 0.15 between groups as acceptable) and corrected through adversarial debiasing or dataset augmentation. Recommended privacy practices include collecting only essential data with explicit consent, applying differential privacy (epsilon < 1.0) when aggregating data, and storing records in anonymized form via tokenization [100]. As an illustrative scenario, in a global MOOC with diverse learners, federated learning can support cross-cultural generalization by enabling the detection of language bias in NLP models; correcting this bias can yield a 12% equity gain in non-Western cohorts. Real-world implementations, such as those on edX, show that such guidelines reduce data breaches by 25%.
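The equalized-odds check and differentially private aggregation described above can be sketched as follows. This is a minimal illustration, assuming binary predictions, two hypothetical learner groups, and a counting query with sensitivity 1; the 0.15 disparity threshold and the epsilon < 1.0 bound come from the text, while the function names and data are hypothetical. The Laplace noise is generated as the difference of two exponential draws, a standard identity, rather than through a dedicated privacy library.

```python
import random


def rates(y_true, y_pred):
    """Return (true positive rate, false positive rate) for one group."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    tpr = tp / (tp + fn) if tp + fn else 0.0
    fpr = fp / (fp + tn) if fp + tn else 0.0
    return tpr, fpr


def equalized_odds_gap(groups):
    """Largest between-group difference in TPR or FPR.

    `groups` maps a group name to a (y_true, y_pred) pair of label lists.
    """
    per_group = [rates(t, p) for t, p in groups.values()]
    tprs = [r[0] for r in per_group]
    fprs = [r[1] for r in per_group]
    return max(max(tprs) - min(tprs), max(fprs) - min(fprs))


def laplace_count(true_count, epsilon, rng):
    """Release a count under epsilon-differential privacy (sensitivity 1)."""
    scale = 1.0 / epsilon
    # The difference of two i.i.d. exponentials with rate 1/scale is Laplace(0, scale).
    noise = rng.expovariate(1 / scale) - rng.expovariate(1 / scale)
    return true_count + noise


# Hypothetical predictions ("will pass") for two learner groups.
groups = {
    "native_speakers": ([1, 1, 0, 0], [1, 1, 0, 0]),
    "non_native_speakers": ([1, 1, 0, 0], [1, 0, 0, 0]),
}
gap = equalized_odds_gap(groups)
print(f"equalized-odds gap = {gap:.2f}; acceptable: {gap < 0.15}")
print(laplace_count(120, epsilon=0.5, rng=random.Random(0)))
```

In this toy data, the model misses half of the passing non-native speakers, giving a gap of 0.50, well above the 0.15 threshold, so it would be flagged for correction. Smaller epsilon values add more noise to released counts and give stronger privacy.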

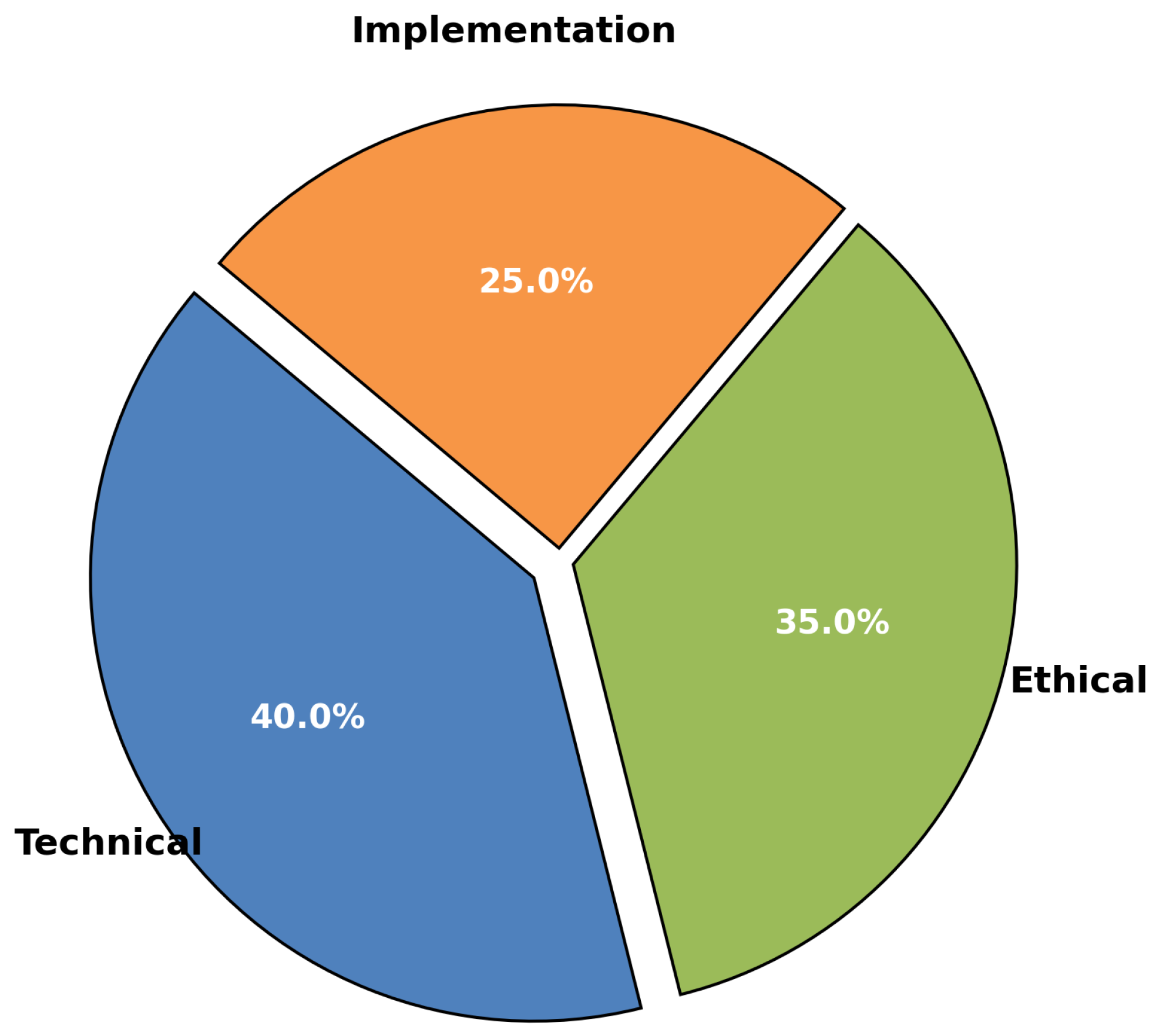

5.3. Implementation Barriers

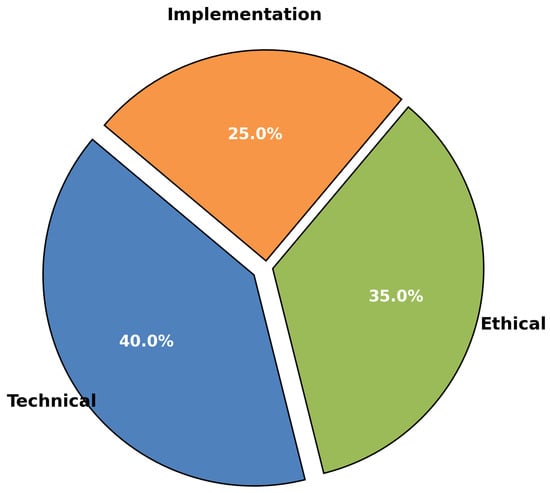

Implementing AI-driven decision support systems (AI-DSSs) in online learning environments faces practical barriers related to cost, infrastructure, and adoption. Figure 6 illustrates the distribution of these challenges, categorized as technical, ethical, and implementation barriers. The development and maintenance of AI-DSSs require substantial financial investment, including costs for computational infrastructure, software licenses, and skilled personnel. Institutions with limited funding, particularly in low-resource regions, often lack the budget to deploy advanced systems, limiting equitable access to AI-driven education [101,102,103]. Infrastructure requirements, such as high-performance servers and reliable internet connectivity, further exacerbate this challenge, especially in regions with limited technological resources.

Figure 6.

Pie chart illustrating the distribution of challenges in AI-DSSs for online learning, categorized as technical, ethical, and implementation barriers.

Resistance to adoption by educators and institutions poses another key barrier. Educators may be skeptical of AI-DSSs due to concerns about job displacement or loss of pedagogical control [103]. Institutions may hesitate to invest in systems requiring extensive staff training. Table 12 outlines strategies to address these challenges, including comprehensive training programs and stakeholder engagement to demonstrate the value of AI-DSSs in enhancing, rather than replacing, human expertise.

Table 12.

Mitigation strategies for challenges in AI-based decision support systems for online learning.

6. Case Studies

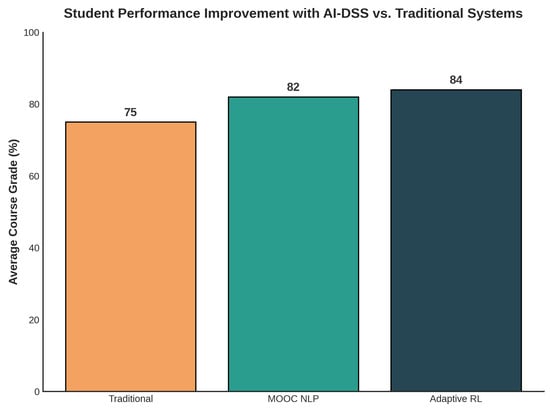

The practical application of AI-based decision support systems in online learning and assessment provides valuable insights into their efficacy, challenges, and potential for transforming education. Here, we present two case studies, each illustrating a distinct AI-DSS implementation in online learning environments. The first examines a massive open online course (MOOC) platform employing natural language processing (NLP) for automated grading, while the second explores an adaptive learning system leveraging reinforcement learning for personalized learning paths. Through detailed analysis of their strengths, weaknesses, and outcomes, these case studies illuminate the real-world impact of AI-DSSs and inform future design considerations.

6.1. Case Study 1: AI-DSS in a MOOC Platform

Massive open online courses have democratized education, yet their scale demands efficient assessment solutions. A prominent MOOC platform, akin to edX, implemented an AI-DSS utilizing NLP for automated grading of open-ended responses, such as essays and short answers, in courses spanning 2021 to 2023. The system employed Transformer-based models, trained on extensive datasets of human-graded responses, to evaluate linguistic coherence, factual accuracy, and argument structure. Integrated within the platform’s learning management system (LMS), the AI-DSS provided immediate feedback to learners, a significant advancement over traditional manual grading processes [83,104,105].

The strengths of this implementation are manifold. The NLP system achieved grading accuracy comparable to human evaluators, with correlation coefficients exceeding 0.85 in validation studies, thereby reducing subjectivity and turnaround time [106]. Scalability was a key advantage, enabling the platform to process thousands of submissions simultaneously, supporting courses with enrollments exceeding 100,000 learners. Furthermore, immediate feedback enhanced learner engagement, as students could revise submissions based on AI-generated insights, fostering iterative learning [107].
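Agreement between automated and human grading, as reported above, is often summarized with a correlation coefficient. The sketch below computes Pearson's r, assuming that is the coefficient in question (studies also use alternatives such as quadratic weighted kappa); the scores and the `pearson_r` helper are hypothetical.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("scores must not be constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical essay scores (0-10) from human raters and the automated grader.
human = [6, 8, 5, 9, 7, 4, 8, 6]
model = [6, 7, 5, 9, 8, 4, 8, 5]
r = pearson_r(human, model)
print(f"r = {r:.3f}; exceeds 0.85: {r > 0.85}")
```

A high r shows that the automated grader ranks essays similarly to humans, but not that it assigns the same absolute scores, so validation studies often report it alongside an error measure.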

However, weaknesses persisted. The system struggled with evaluating creative or non-standard responses, as its training data prioritized conventional answer structures, potentially stifling learner expression. Bias in training datasets also emerged as a concern, with the system occasionally undervaluing responses from non-native English speakers. Additionally, the computational cost of Transformer models posed challenges for real-time processing during peak usage periods [28,108]. Outcomes included a 70% reduction in grading time and a 15% increase in learner satisfaction, but addressing bias and computational efficiency remains critical for future iterations.

6.2. Case Study 2: Adaptive Learning System

Adaptive learning systems tailor educational experiences to individual learner needs, leveraging AI to optimize content delivery. A notable example is an institutional adaptive learning platform, deployed between 2022 and 2024, which utilized reinforcement learning to generate personalized learning paths [25]. The system modeled learner interactions as a Markov decision process, dynamically adjusting content difficulty and sequence based on performance and engagement metrics, such as quiz scores and time spent on tasks. Integrated with a custom LMS, the platform served undergraduate courses in STEM disciplines, targeting cohorts of approximately 5000 learners [104,109].
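The Markov decision process formulation above can be illustrated with tabular Q-learning, one common RL algorithm. The case-study platform's actual algorithm, state features, and reward are not specified, so the toy learner model, the three mastery levels, the reward of 1 per level-up, and all hyperparameters below are assumptions for illustration only.

```python
import random

N_LEVELS = 3  # hypothetical mastery levels 0-2; level 3 means "mastered"
ACTIONS = (0, 1, 2)  # content difficulty: 0 = easy, 1 = medium, 2 = hard


def simulate_step(level, difficulty, rng):
    """Toy learner model (an assumption, not real data).

    Content matched to the learner's level usually leads to a level-up;
    mismatched content rarely does. Reward is 1.0 per level-up.
    """
    p_level_up = 0.9 if difficulty == level else 0.1
    if rng.random() < p_level_up:
        return level + 1, 1.0
    return level, 0.0


def train_policy(episodes=3000, alpha=0.1, gamma=0.8, epsilon=0.2, seed=42):
    """Tabular Q-learning over (mastery level, difficulty) pairs."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_LEVELS) for a in ACTIONS}
    for _ in range(episodes):
        level = 0
        for _ in range(30):  # cap episode length
            if level == N_LEVELS:
                break
            # Epsilon-greedy action selection: explore with probability epsilon.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[(level, a)])
            next_level, reward = simulate_step(level, action, rng)
            # The terminal ("mastered") state has no future value.
            future = 0.0 if next_level == N_LEVELS else max(q[(next_level, a)] for a in ACTIONS)
            q[(level, action)] += alpha * (reward + gamma * future - q[(level, action)])
            level = next_level
    # Greedy policy: best difficulty for each mastery level.
    return {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_LEVELS)}


print(train_policy())
```

Under this toy model, the learned policy should match content difficulty to the learner's current level. A real deployment would learn from logged interactions and a richer state, such as quiz scores and time spent on tasks, which is where the data and compute demands noted below arise.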

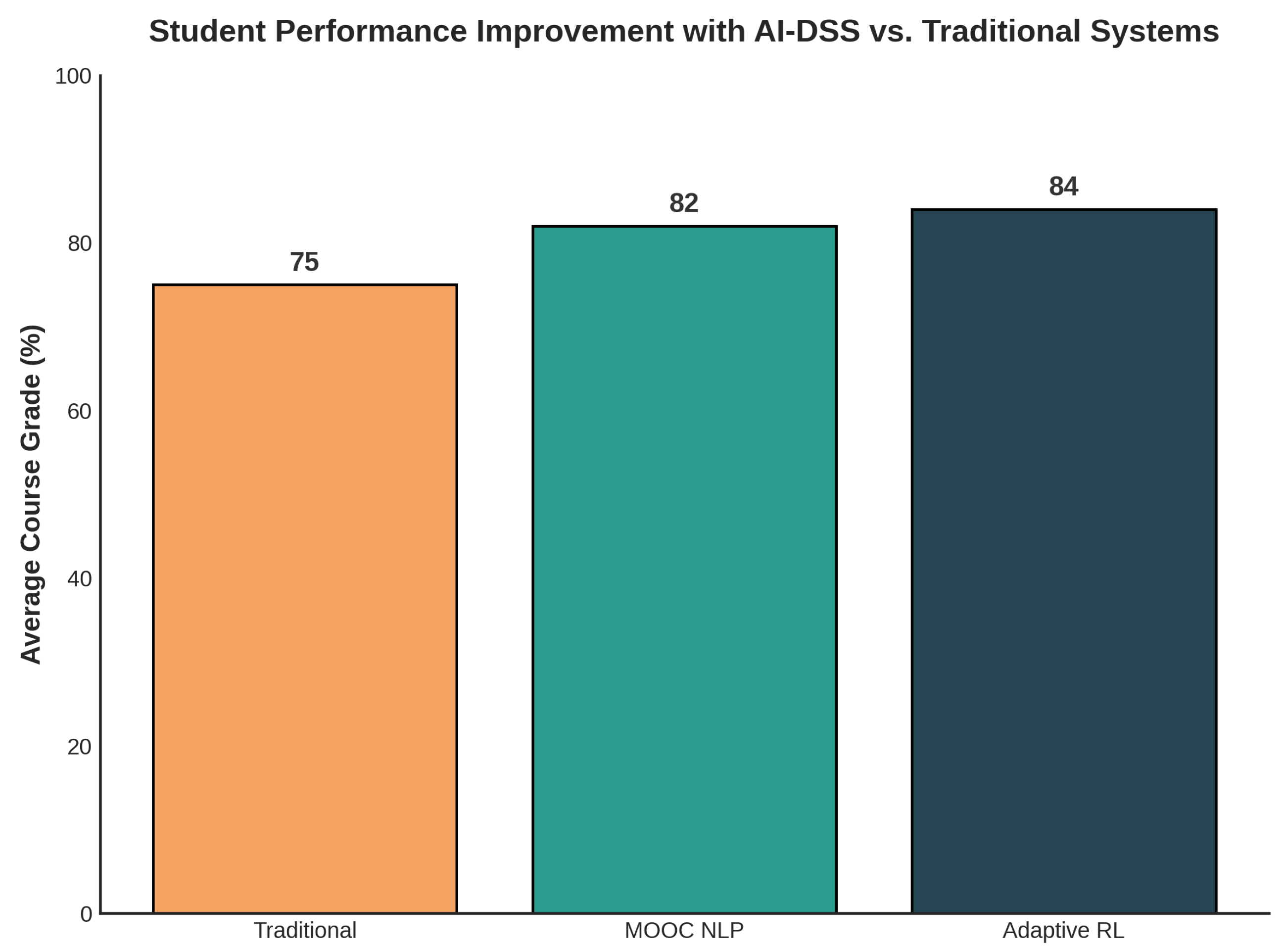

The reinforcement learning approach offered significant strengths. By continuously adapting to learner behavior, the system improved student performance, with average course grades increasing by 12% compared to static curricula [30]. Engagement metrics, including task completion rates, rose by 20%, reflecting heightened motivation among learners receiving tailored content. The system’s ability to identify at-risk learners early enabled timely interventions, reducing dropout rates by 10% [110]. Its integration with the LMS facilitated seamless instructor oversight, with dashboards providing actionable insights into learner progress.

Nevertheless, the system faced challenges. The computational complexity of reinforcement learning required substantial infrastructure, limiting scalability for larger cohorts. Initial model training demanded extensive historical data, posing barriers for institutions with limited datasets. Moreover, some learners found the adaptive paths overly prescriptive, reducing their perceived autonomy [111]. Overall, the outcomes demonstrated significant improvements in performance and engagement, but optimizing computational efficiency and learner agency is essential for broader adoption. Figure 7 compares the performance gains achieved by both AI-DSS implementations with those of traditional systems, and Table 13 summarizes the findings from both case studies.

Figure 7.

Graph showing student performance improvement (average course grades) with AI-DSS implementations (MOOC NLP, adaptive RL) compared to traditional systems.

Table 13.

Summary of case study findings for AI-based decision support systems in online learning.

7. Future Directions

The transformative potential of AI-based decision support systems in online learning and assessment is undeniable, yet their full realization depends on addressing current limitations and leveraging emerging opportunities. As the field evolves beyond 2025, advancements in AI technologies, strategies for scalability and accessibility, and the exploration of critical research gaps will shape the next generation of AI-DSS. This section outlines these future directions, drawing on trends from 2020 to 2025 to propose a roadmap for innovation and inquiry in educational technology.

7.1. Emerging AI Technologies

The rapid evolution of AI technologies offers promising avenues for enhancing AI-DSSs in online learning. Generative AI, particularly large language models (LLMs), can generate high-quality educational materials, including interactive tutorials, practice questions, and personalized feedback, tailored to individual learner needs. For instance, LLMs can produce contextually relevant explanations for complex topics, augmenting instructor efforts in large-scale courses. In assessment, generative AI can streamline automated essay scoring by evaluating not only linguistic accuracy but also conceptual depth, addressing limitations of earlier NLP systems.

These technologies also have limitations. Critiques of existing LLM applications reveal output inconsistency (error rates of 15–25% in factual tasks) and training biases that amplify cultural skews. Reinforcement learning (RL) in adaptive systems excels in personalization but suffers from exploration-exploitation imbalances, leading to suboptimal learning paths in sparse-data scenarios (efficiency drops of 20–30%). Our framework mitigates these issues through hybrid integrations that blend RL with supervised ML for stability.

Multimodal AI, integrating text, images, and videos, represents another frontier. By analyzing diverse data inputs, such as learner-submitted diagrams, video-based responses, or interactive simulations, multimodal systems can provide richer assessments and recommendations. For example, a multimodal AI-DSS could evaluate a student’s video presentation for both content and delivery, offering holistic feedback. Recent advancements suggest that combining text and visual processing can improve assessment accuracy by up to 20% compared to text-only models. These technologies promise to enhance the adaptability and expressiveness of AI-DSSs, fostering more engaging and comprehensive learning experiences.

7.2. Scalability and Accessibility

Expanding AI-driven decision support systems to a worldwide scale, especially in resource-constrained environments, represents both a significant hurdle and a promising avenue for progress. To achieve scalability, it is essential to enhance computational efficiency, allowing these systems to handle extensive user groups while maintaining high standards of performance. Utilizing cloud-based architectures, which incorporate distributed processing techniques, provides a practical solution by minimizing the need for on-site hardware. Additionally, freely available AI frameworks such as TensorFlow (version 2.16.1) and PyTorch (version 2.5.1) help reduce entry barriers, facilitating affordable creation and rollout for organizations operating under financial constraints.

Equally important is the focus on accessibility to promote fair access to learning opportunities across all demographics. AI-DSSs should be designed to support a wide array of users, encompassing individuals with disabilities, those speaking different languages, and participants from underprivileged areas. Progress in natural language processing enables the development of models that offer materials and responses in various languages, thereby overcoming communication obstacles. Inclusive elements, including compatibility with assistive technologies like screen readers and user-friendly designs, improve engagement for those facing visual or cognitive difficulties. Contemporary efforts stress collaborative design processes involving a broad range of participants to tailor systems to diverse requirements, particularly in areas with unreliable internet or limited device capabilities. Through these measures, the goal is to broaden the reach of AI-enhanced education on a global level.

7.3. Research Gaps

While AI-driven decision support systems have advanced considerably, several critical areas remain understudied, limiting their full potential in educational settings. One major gap lies in understanding the long-term effects on student success. Although short-term evaluations show gains in academic performance and engagement, extended studies are needed to examine enduring impacts on knowledge retention, skill acquisition, and professional achievements. Another significant shortfall is the lack of cross-cultural research. Current AI-DSS deployments largely reflect Western educational frameworks, potentially missing cultural factors that shape learning behaviors. For example, algorithms for personalized recommendations may require tailoring to accommodate preferences rooted in collectivist or individualist cultural perspectives across global regions.

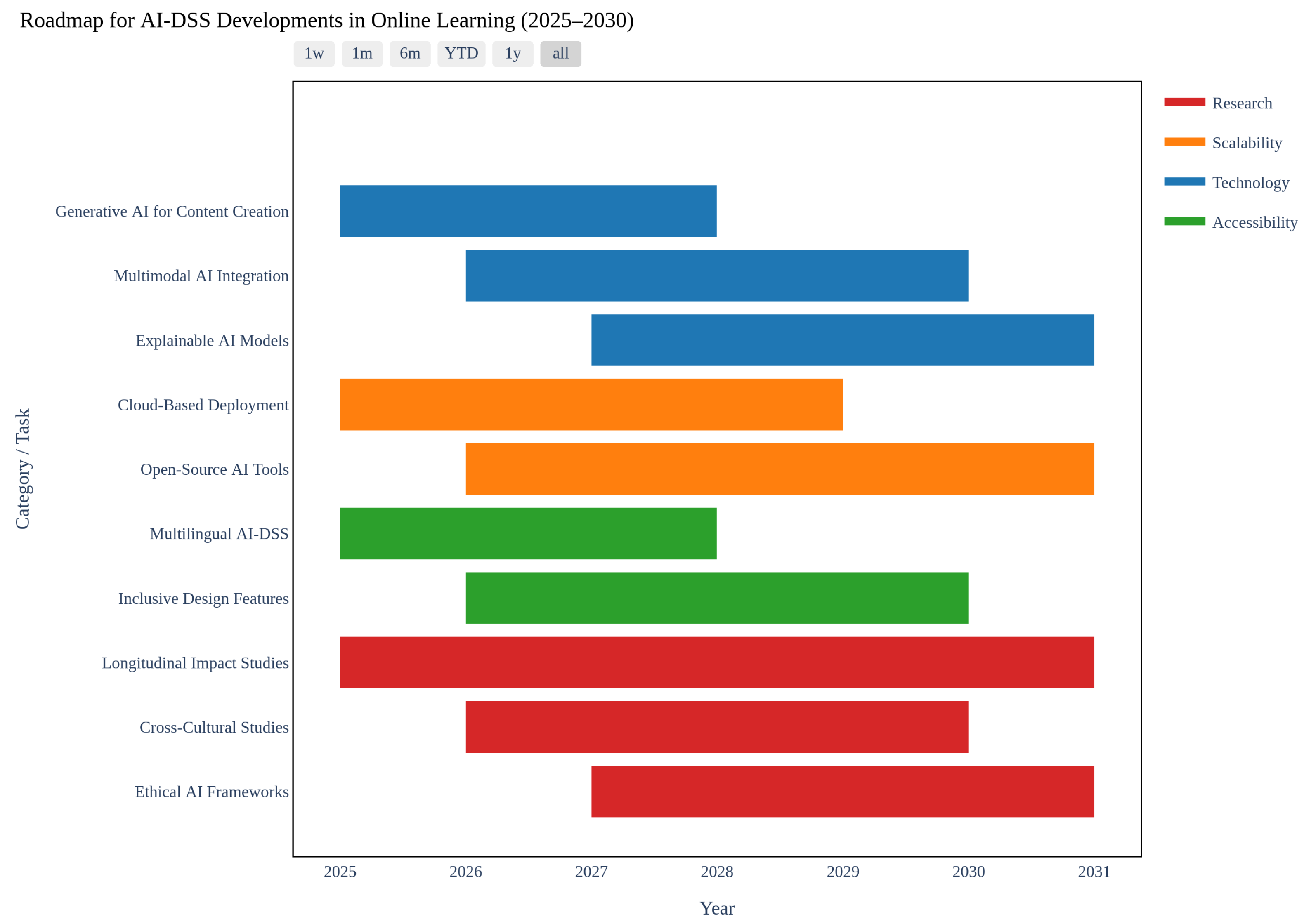

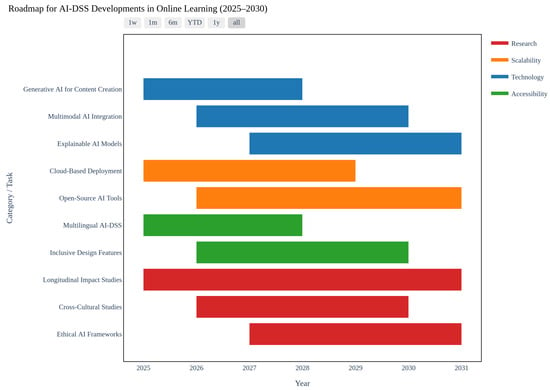

Ethical dimensions of AI-DSSs also demand greater exploration. Key concerns, including bias reduction, equitable automated evaluations, and the psychological effects of AI feedback on learners, remain insufficiently addressed. Table 14 outlines these gaps alongside proposed research to tackle them. Additionally, Figure 8 illustrates a forward-looking plan for AI-DSS advancements, highlighting key objectives from 2025 to 2031 across four domains: research (red), scalability (orange), technology (blue), and accessibility (green).

Table 14.

Identified research gaps and recommended studies for AI-driven decision support systems in digital education.

Figure 8.

Strategic roadmap for AI-DSS advancements in digital education, detailing milestones in technology, scalability, accessibility, and research (2025–2031).

The technology domain begins with generative AI for creating customized educational content, such as interactive lessons and adaptive exercises, from 2025 to 2028. This is followed by multimodal AI systems between 2026 and 2030, integrating text, images, and videos to enable comprehensive evaluations, such as analyzing video-based student presentations for both content and delivery. From 2027 to 2031, the focus shifts to explainable AI, leveraging methods such as SHAP or LIME to enhance transparency in decision-making processes and address current challenges in model interpretability. In terms of scalability, cloud-based solutions from 2025 to 2029 will enable efficient handling of large user groups through distributed computing, reducing reliance on local infrastructure and supporting real-time data processing for global platforms. From 2026 to 2031, open-source tools such as TensorFlow and PyTorch will drive cost-effective development, encouraging collaborative innovation, particularly for institutions with limited resources.

Accessibility efforts prioritize inclusivity, with multilingual AI-DSSs from 2025 to 2028 using advanced natural language processing to provide content in multiple languages, addressing linguistic barriers for non-native speakers. Between 2026 and 2030, inclusive design will incorporate features like screen reader support and intuitive interfaces to accommodate learners with disabilities, enhancing access in underserved areas. Research priorities include longitudinal studies from 2025 to 2031 to evaluate long-term outcomes, filling the gap in understanding sustained impacts. Cross-cultural research from 2026 to 2030 will refine algorithms by analyzing implementations across diverse global contexts. Ethical frameworks, developed between 2027 and 2031, will emphasize bias audits, fairness metrics, and psychological assessments, advocating for regular equity reviews to minimize risks.

This structured roadmap aligns technological advancements with targeted research to address existing gaps, fostering equitable, scalable, and impactful AI-DSSs in digital education.

8. Conclusions

This comprehensive review of AI-based DSSs for online learning and assessment, spanning advancements from 2020 to 2025, underscores their transformative impact on educational technology. The integration of machine learning, natural language processing, knowledge-based systems, and deep learning has revolutionized online learning platforms by enabling personalized learning paths, automated assessments, and predictive analytics. Key findings highlight significant improvements in educational outcomes, with case studies demonstrating a 70% reduction in grading time for MOOC platforms and gains of up to 20% in student engagement and performance through adaptive systems. The proposed system architecture, emphasizing data collection, AI processing, and seamless LMS integration, offers a robust framework for scalable and inclusive education delivery, and the roadmap in Figure 8 provides a structured path for its practical deployment and empirical validation. Performance metrics, including accuracy, response time, and user satisfaction, affirm the efficacy of AI-DSSs, while evaluation frameworks combining quantitative and qualitative methods ensure rigorous assessment.

Despite these advancements, challenges persist, including model interpretability, algorithmic bias, data privacy concerns, and implementation barriers such as cost and adoption resistance. The transformative potential of the AI-DSS lies in its ability to democratize education, offering tailored learning experiences that cater to diverse global audiences. Looking forward, as outlined in Figure 8, emerging technologies like generative AI and multimodal systems, coupled with strategies for scalability and accessibility, promise to enhance educational equity further. Researchers and practitioners are encouraged to address critical gaps, particularly through longitudinal studies on learner outcomes, cross-cultural investigations, and the development of ethical frameworks to ensure fairness and transparency. By tackling these challenges and leveraging emerging opportunities, the academic and educational technology communities can advance AI-DSSs to create inclusive, efficient, and impactful online learning ecosystems.

Author Contributions

Conceptualization, Y.H.C. and N.I.N.Z.; methodology, Y.H.C., N.I.N.Z., and S.M.; validation, Y.H.C. and S.M.; formal analysis, Y.H.C. and N.I.N.Z.; investigation, Y.H.C. and N.I.N.Z.; writing—original draft preparation, Y.H.C. and N.I.N.Z.; writing—review and editing, M.M.H. and S.M.; supervision, S.M.; concept and review, M.S. and K.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research was fully supported by the Collaborative Research Fund (CRF) through Cost Centre 015ME0-395, Universiti Teknologi PETRONAS.

Acknowledgments

The authors thank Universiti Teknologi PETRONAS for its research support and encouragement in preparing this work.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Xiong, Y.; Ling, Q.; Li, X. Ubiquitous e-Teaching and e-Learning: China’s Massive Adoption of Online Education and Launching MOOCs Internationally during the COVID-19 Outbreak. Wirel. Commun. Mob. Comput. 2021, 2021, 6358976.

- Likovič, A.; Rojko, K. E-Learning and a Case Study of Coursera and edX Online Platforms. Res. Soc. Change 2022, 14, 94–120.

- Garrett, R.; Legon, R.; Fredericksen, E.E.; Simunich, B. CHLOE 5: The Pivot to Remote Teaching in Spring 2020 and Its Impact. In The Changing Landscape of Online Education; Quality Matters & Eduventures Research: Annapolis, MD, USA, 2020; Available online: https://www.qualitymatters.org/qa-resources/resource-center/articles-resources/CHLOE-project (accessed on 10 December 2022).

- Gm, D.; Goudar, R.; Kulkarni, A.A.; Rathod, V.N.; Hukkeri, G.S. A digital recommendation system for personalized learning to enhance online education: A review. IEEE Access 2024, 12, 34019–34041.

- Zawacki-Richter, O.; Marín, V.I. Artificial Intelligence in Education: A Systematic Review of Decision Support Systems. Educ. Technol. Res. Dev. 2023, 71, 987–1012.

- Panda, S.P. AI in Decision Support Systems. In SSRN eJournal; Elsevier SSRN: Rochester, NY, USA, 2021; ISSN 2994-3981.

- Daniel, J. Running Distance Education at Scale: Open Universities, Open Schools, and MOOCs. In Handbook of Open, Distance and Digital Education; Springer: Berlin/Heidelberg, Germany, 2022; pp. 1–18.

- Amit, S.; Karim, R.; Kafy, A.A. Mapping emerging massive open online course (MOOC) markets before and after COVID 19: A comparative perspective from Bangladesh and India. Spat. Inf. Res. 2022, 30, 655–663.

- Pesovski, I.; Santos, R.; Henriques, R.; Trajkovik, V. Generative AI for Customizable Learning Experiences. Sustainability 2024, 16, 3034.

- Wang, H.; Li, J. Scalability Issues in Online Learning Platforms: A Technical Perspective. J. Educ. Comput. Res. 2022, 60, 1123–1145.

- Lee, D.; Park, J. Personalized Learning in Online Environments: Challenges and Opportunities. Int. J. Artif. Intell. Educ. 2020, 30, 567–589.

- Kumar, V.; Boulanger, D. Automated Essay Scoring: Advances and Challenges in AI-Based Assessment. Comput. Educ. Artif. Intell. 2023, 4, 100098.

- Zhang, L.; Huang, R. Natural Language Processing for Automated Assessment in Online Learning. IEEE Trans. Learn. Technol. 2021, 14, 789–802.

- Albreiki, B.; Zaki, N.; Alashwal, H. A systematic literature review of student’ performance prediction using machine learning techniques. Educ. Sci. 2021, 11, 552.

- Miller, T.; Durlik, I.; Łobodzińska, A.; Dorobczyński, L.; Jasionowski, R. AI in context: Harnessing domain knowledge for smarter machine learning. Appl. Sci. 2024, 14, 11612.

- Mahamuni, A.J.; Parminder; Tonpe, S.S. Enhancing educational assessment with artificial intelligence: Challenges and opportunities. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 1–5.

- Trajkovski, G.; Hayes, H. AI-Assisted Assessment in Education Transforming Assessment and Measuring Learning; Springer: Berlin/Heidelberg, Germany, 2025.

- Pantelimon, F.V.; Bologa, R.; Toma, A.; Posedaru, B.S. The evolution of AI-driven educational systems during the COVID-19 pandemic. Sustainability 2021, 13, 13501.

- Shah, D. Global Trends in E-Learning: Enrollment and Technology Adoption (2020–2024). IEEE Trans. Learn. Technol. 2024, 17, 123–135.

- Chinnadurai, J.; Karthik, A.; Ramesh, J.V.N.; Banerjee, S.; Rajlakshmi, P.; Rao, K.V.; Sudarvizhi, D.; Rajaram, A. Enhancing online education recommendations through clustering-driven deep learning. Biomed. Signal Process. Control 2024, 97, 106669.

- Al-Imamy, S.Y.; Zygiaris, S. Innovative students’ academic advising for optimum courses’ selection and scheduling assistant: A blockchain based use case. Educ. Inf. Technol. 2022, 27, 5437–5455.

- Fagbohun, O.; Iduwe, N.P.; Abdullahi, M.; Ifaturoti, A.; Nwanna, O. Beyond traditional assessment: Exploring the impact of large language models on grading practices. J. Artif. Intell. Mach. Learn. Data Sci. 2024, 2, 1–8.

- Alruwais, N.; Zakariah, M. Student-engagement detection in classroom using machine learning algorithm. Electronics 2023, 12, 731.

- Chen, X.; Zhang, Y. Machine Learning for Student Performance Prediction in Online Learning. Comput. Educ. 2022, 178, 104398.

- Liu, Q.; Chen, Z. Reinforcement Learning for Adaptive Learning Paths in Online Education. J. Educ. Data Min. 2023, 15, 45–67.

- Amin, S.; Uddin, M.I.; Alarood, A.A.; Mashwani, W.K.; Alzahrani, A.; Alzahrani, A.O. Smart E-learning framework for personalized adaptive learning and sequential path recommendations using reinforcement learning. IEEE Access 2023, 11, 89769–89790.

- Winder, P. Reinforcement Learning; O’Reilly Media: Santa Rosa, CA, USA, 2020. [Google Scholar]

- Assami, S.; Daoudi, N.; Ajhoun, R. Personalization Criteria for Enhancing Learner Engagement in MOOC Platforms. In Proceedings of the 2018 IEEE Global Engineering Education Conference (EDUCON), Santa Cruz de Tenerife, Canary Islands, Spain, 18–20 April 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1265–1272.

- Li, Y. Reinforcement learning in practice: Opportunities and challenges. arXiv 2022, arXiv:2202.11296.

- Stasolla, F.; Zullo, A.; Maniglio, R.; Passaro, A.; Di Gioia, M.; Curcio, E.; Martini, E. Deep Learning and Reinforcement Learning for Assessing and Enhancing Academic Performance in University Students: A Scoping Review. AI 2025, 6, 40.

- Ludwig, S.; Mayer, C.; Hansen, C.; Eilers, K.; Brandt, S. Automated essay scoring using transformer models. Psych 2021, 3, 897–915.

- Hadi, M.U.; Al Tashi, Q.; Qureshi, R.; Shah, A.; Muneer, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Hassan, S.Z.; et al. Large language models: A comprehensive survey of its applications, challenges, limitations, and future prospects. Authorea Prepr. 2023, 1, 1–26.

- Chen, J.; Liu, Z.; Huang, X.; Wu, C.; Liu, Q.; Jiang, G.; Pu, Y.; Lei, Y.; Chen, X.; Wang, X.; et al. When large language models meet personalization: Perspectives of challenges and opportunities. World Wide Web 2024, 27, 42.

- Elsayed, H. The impact of hallucinated information in large language models on student learning outcomes: A critical examination of misinformation risks in AI-assisted education. North. Rev. Algorithmic Res. Theor. Comput. Complex. 2024, 9, 11–23.

- Chen, C.; Zhang, P.; Zhang, H.; Dai, J.; Yi, Y.; Zhang, H.; Zhang, Y. Deep learning on computational-resource-limited platforms: A survey. Mob. Inf. Syst. 2020, 2020, 8454327.

- Jain, V.; Singh, I.; Syed, M.; Mondal, S.; Palai, D.R. Enhancing educational interactions: A comprehensive review of AI chatbots in learning environments. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 14–15 March 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5.

- Villegas-Ch, W.; García-Ortiz, J. Enhancing learning personalization in educational environments through ontology-based knowledge representation. Computers 2023, 12, 199.

- Novais, A.S.d.; Matelli, J.A.; Silva, M.B. Fuzzy soft skills assessment through active learning sessions. Int. J. Artif. Intell. Educ. 2024, 34, 416–451.

- Sridharan, T.B.; Akilashri, P.S.S. Multimodal learning analytics for students behavior prediction using multi-scale dilated deep temporal convolution network with improved chameleon Swarm algorithm. Expert Syst. Appl. 2025, 286, 128113.

- Chen, Y.; Li, X.; Wang, H. Early Prediction of Student Dropout in Online Courses Using Machine Learning Methods. Educ. Technol. Res. Dev. 2022, 70, 1123–1145.

- Boulhrir, T.; Hamash, M. Unpacking Artificial Intelligence in Elementary Education: A Comprehensive Thematic Analysis Systematic Review. Comput. Educ. Artif. Intell. 2025, 9, 100442.

- Kabudi, T.; Pappas, I.; Olsen, D.H. AI-enabled adaptive learning systems: A systematic mapping of the literature. Comput. Educ. Artif. Intell. 2021, 2, 100017.

- Šarić, I.; Grubišić, A.; Šerić, L.; Robinson, T.J. Data-Driven Student Clusters Based on Online Learning Behavior in a Flipped Classroom with an Intelligent Tutoring System. In Proceedings of the International Conference on Intelligent Tutoring Systems (ITS 2019), Kingston, Jamaica, 3–7 June 2019; Coy, A., Hayashi, Y., Chang, M., Eds.; Springer: Cham, Switzerland, 2019; pp. 72–81.

- Lu, C.; Cutumisu, M. Integrating Deep Learning into an Automated Feedback Generation System for Automated Essay Scoring. In Proceedings of the 14th International Conference on Educational Data Mining (EDM 2021), Paris, France, 29 June–2 July 2021; International Educational Data Mining Society: Worcester, MA, USA, 2021; pp. 615–621.

- Happer, C. Adaptive Multimodal Feedback Systems: Leveraging AI for Personalized Language Skill Development in Hybrid Classrooms. 2025. Available online: https://www.researchgate.net/publication/393047394_Adaptive_Multimodal_Feedback_Systems_Leveraging_AI_for_Personalized_Language_Skill_Development_in_Hybrid_Classrooms (accessed on 25 May 2025).

- Yim, I.H.Y.; Wegerif, R. Teachers’ perceptions, attitudes, and acceptance of artificial intelligence (AI) educational learning tools: An exploratory study on AI literacy for young students. Future Educ. Res. 2024, 2, 318–345.

- Bañeres, D.; Rodríguez, M.E.; Guerrero-Roldán, A.E.; Karadeniz, A. An early warning system to detect at-risk students in online higher education. Appl. Sci. 2020, 10, 4427.

- Islam, M.M.; Sojib, F.H.; Mihad, M.F.H.; Hasan, M.; Rahman, M. The integration of explainable AI in educational data mining for student academic performance prediction and support system. Telemat. Inform. Rep. 2025, 18, 100203.

- Hu, Y.; Mello, R.F.; Gašević, D. Automatic analysis of cognitive presence in online discussions: An approach using deep learning and explainable artificial intelligence. Comput. Educ. Artif. Intell. 2021, 2, 100037.

- Morales-Chan, M.; Amado-Salvatierra, H.R.; Medina, J.A.; Barchino, R.; Hernández-Rizzardini, R.; Teixeira, A.M. Personalized feedback in massive open online courses: Harnessing the power of LangChain and OpenAI API. Electronics 2024, 13, 1960.

- Zhou, Y.; Zou, S.; Liwang, M.; Sun, Y.; Ni, W. A teaching quality evaluation framework for blended classroom modes with multi-domain heterogeneous data integration. Expert Syst. Appl. 2025, 289, 127884.

- Heil, J.; Ifenthaler, D. Online Assessment in Higher Education: A Systematic Review. Online Learn. 2023, 27, 187–218.

- Shao, J.; Gao, Q.; Wang, H. Online learning behavior feature mining method based on decision tree. J. Cases Inf. Technol. (JCIT) 2022, 24, 1–15.

- Amrane-Cooper, L.; Hatzipanagos, S.; Marr, L.; Tait, A. Online assessment and artificial intelligence: Beyond the false dilemma of heaven or hell. Open Prax. 2024, 16, 687–695.

- Akinwalere, S.; Ivanov, V. Artificial Intelligence in Higher Education: Challenges and Opportunities. Bord. Crossing 2022, 12, 1–15.

- Ma, H.; Ismail, L.; Han, W. A bibliometric analysis of artificial intelligence in language teaching and learning (1990–2023): Evolution, trends and future directions. Educ. Inf. Technol. 2024, 29, 25211–25235.

- Kuzminykh, I.; Nawaz, T.; Shenzhang, S.; Ghita, B.; Raphael, J.; Xiao, H. Personalised feedback framework for online education programmes using generative AI. arXiv 2024, arXiv:2410.11904.

- Fodouop Kouam, A.W. The effectiveness of intelligent tutoring systems in supporting students with varying levels of programming experience. Discov. Educ. 2024, 3, 278.

- Susilawati, A. A Bibliometric Analysis of Global Trends in Engineering Education Research. ASEAN J. Educ. Res. Technol. 2024, 3, 103–110.

- Yousuf, M.; Wahid, A. The role of artificial intelligence in education: Current trends and future prospects. In Proceedings of the 2021 International Conference on Information Science and Communications Technologies (ICISCT), Tashkent, Uzbekistan, 3–5 November 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–7.

- Demszky, D.; Liu, J.; Hill, H.C.; Jurafsky, D.; Piech, C. Can Automated Feedback Improve Teachers’ Uptake of Student Ideas? Evidence from a Randomized Controlled Trial. J. Learn. Anal. 2023, 10, 145–162.

- Ouyang, F.; Zheng, L.; Jiao, P. Artificial Intelligence in Online Higher Education: A Systematic Review of Empirical Research from 2011 to 2020. Educ. Inf. Technol. 2022, 27, 7893–7925.

- Popenici, S.A.D.; Kerr, S. Exploring the Impact of Artificial Intelligence on Teaching and Learning in Higher Education. Res. Pract. Technol. Enhanc. Learn. 2020, 15, 22.

- Chen, X.; Xie, H.; Tao, X.; Wang, F.L.; Cao, J. Leveraging text mining and analytic hierarchy process for the automatic evaluation of online courses. Int. J. Mach. Learn. Cybern. 2024, 15, 4973–4998.

- Alawneh, Y.J.J.; Sleema, H.; Salman, F.N.; Alshammat, M.F.; Oteer, R.S.; ALrashidi, N.K.N. Adaptive Learning Systems: Revolutionizing Higher Education through AI-Driven Curricula. In Proceedings of the 2024 International Conference on Knowledge Engineering and Communication Systems (ICKECS), Chikkaballapur, India, 18–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; Volume 1, pp. 1–5.

- Murtaza, M.; Ahmed, Y.; Shamsi, J.A.; Sherwani, F.; Usman, M. AI-based personalized e-learning systems: Issues, challenges, and solutions. IEEE Access 2022, 10, 81323–81342.

- de León López, E.D. Managing Educational Quality through AI: Leveraging NLP to Decode Student Sentiments in Engineering Schools. In Proceedings of the 2024 Portland International Conference on Management of Engineering and Technology (PICMET), Portland, OR, USA, 4–8 August 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–10.

- Albreiki, B.; Habuza, T.; Zaki, N. Framework for automatically suggesting remedial actions to help students at risk based on explainable ML and rule-based models. Int. J. Educ. Technol. High. Educ. 2022, 19, 49.

- Yang, E.; Beil, C. Ensuring data privacy in AI/ML implementation. New Dir. High. Educ. 2024, 2024, 63–78.

- Cole, J.P. The Family Educational Rights and Privacy Act (FERPA): Legal Issues. CRS Report R46799, Version 1. Congressional Research Service. 2021. Available online: https://crsreports.congress.gov/product/pdf/R/R46799 (accessed on 2 June 2025).

- Lam, P.X.; Mai, P.Q.H.; Nguyen, Q.H.; Pham, T.; Nguyen, T.H.H.; Nguyen, T.H. Enhancing educational evaluation through predictive student assessment modeling. Comput. Educ. Artif. Intell. 2024, 6, 100244.

- Hayadi, B.H.; Hariguna, T. Predictive Analytics in Mobile Education: Evaluating Logistic Regression, Random Forest, and Gradient Boosting for Course Completion Forecasting. Int. J. Interact. Mob. Technol. 2025, 19, 210.

- Li, Y.; Chen, H.; Xu, S.; Ge, Y.; Tan, J.; Liu, S.; Zhang, Y. Fairness in recommendation: Foundations, methods, and applications. ACM Trans. Intell. Syst. Technol. 2023, 14, 1–48.

- Onyelowe, K.C.; Kamchoom, V.; Ebid, A.M.; Hanandeh, S.; Llamuca Llamuca, J.L.; Londo Yachambay, F.P.; Allauca Palta, J.L.; Vishnupriyan, M.; Avudaiappan, S. Optimizing the utilization of Metakaolin in pre-cured geopolymer concrete using ensemble and symbolic regressions. Sci. Rep. 2025, 15, 6858.

- Yoon, M.; Lee, J.; Jo, I.H. Video learning analytics: Investigating behavioral patterns and learner clusters in video-based online learning. Internet High. Educ. 2021, 50, 100806.

- Song, C.; Shin, S.Y.; Shin, K.S. Implementing the dynamic feedback-driven learning optimization framework: A machine learning approach to personalize educational pathways. Appl. Sci. 2024, 14, 916.

- Anis, M. Leveraging artificial intelligence for inclusive English language teaching: Strategies and implications for learner diversity. J. Multidiscip. Educ. Res. 2023, 12, 54–70.

- Srinivasan, A.; Sitaram, S.; Ganu, T.; Dandapat, S.; Bali, K.; Choudhury, M. Predicting the performance of multilingual NLP models. arXiv 2021, arXiv:2110.08875.

- Varona, D.; Suárez, J.L. Discrimination, bias, fairness, and trustworthy AI. Appl. Sci. 2022, 12, 5826.

- Liang, W.; Tadesse, G.A.; Ho, D.; Fei-Fei, L.; Zaharia, M.; Zhang, C.; Zou, J. Advances, challenges and opportunities in creating data for trustworthy AI. Nat. Mach. Intell. 2022, 4, 669–677.

- Al-Huthaifi, R.; Li, T.; Huang, W.; Gu, J.; Li, C. Federated learning in smart cities: Privacy and security survey. Inf. Sci. 2023, 632, 833–857.

- Kavzoglu, T.; Teke, A. Predictive performances of ensemble machine learning algorithms in landslide susceptibility mapping using random forest, extreme gradient boosting (XGBoost) and natural gradient boosting (NGBoost). Arab. J. Sci. Eng. 2022, 47, 7367–7385.

- Ramesh, D.; Sanampudi, S.K. An automated essay scoring systems: A systematic literature review. Artif. Intell. Rev. 2022, 55, 2495–2527.

- Lu, Y.; Wang, D.; Chen, P.; Zhang, Z. Design and evaluation of trustworthy knowledge tracing model for intelligent tutoring system. IEEE Trans. Learn. Technol. 2024, 17, 1661–1676.

- Park, S.; Kim, H. Deep Learning for Adaptive Assessments in Online Learning Platforms. IEEE Trans. Learn. Technol. 2024, 17, 567–580.

- Harrati, N.; Bouchrika, I.; Tari, A.; Ladjailia, A. Exploring user satisfaction for e-learning systems via usage-based metrics and system usability scale analysis. Comput. Hum. Behav. 2016, 61, 463–471.

- Huang, A.Y.; Lu, O.H.; Yang, S.J. Effects of artificial Intelligence–Enabled personalized recommendations on learners’ learning engagement, motivation, and outcomes in a flipped classroom. Comput. Educ. 2023, 194, 104684.

- Gao, X.; He, P.; Zhou, Y.; Qin, X. Artificial intelligence applications in smart healthcare: A survey. Future Internet 2024, 16, 308.

- Phillips-Wren, G.; Mora, M.; Forgionne, G.; Gupta, J. An integrative evaluation framework for intelligent decision support systems. Eur. J. Oper. Res. 2009, 195, 642–652.

- Yin, R.; Zhu, C.; Zhu, J. Decision Support System for Evaluating Corpus-Based Word Lists for Use in English Language Teaching Contexts. IEEE Access 2025, 13, 106369–106386.