1. Introduction

Device-to-device (D2D) communication is a nascent feature for the Long term evolution advanced (LTE-Advanced) systems. D2D communication can operate in centralized, i.e., Base station (BS) controlled mode, and decentralized mode, i.e., without a BS [

1]. Unlike the traditional cellular network where Cellular users (CU) communicate through the base station, D2D allows direct communication between users by reusing the available radio resources. Consequently, D2D communication can provide improved system throughput and reduced traffic load to the BS. However, D2D devices generate interferences while reusing the resources [

2,

3]. Efficient resource allocation play a vital role in reducing the interference level, which positively impacts the overall system throughput. Fine tuning of power allocation on Resource blocks (RB) has consequences on interference, i.e., a higher transmission power can increase D2D throughput; however, it increases the interference level as well. Therefore, choosing the proper level of transmission power for RBs is a key research issue in D2D communication, which calls for adaptive power allocation methods.

Resource allocators, i.e., D2D transmitters in our system model as described in

Section 3 need to perform a particular action at each time step based on the application demand. For example, actions can be selecting power level options for a particular RB [

4]. Random power allocation is not suitable in a D2D communication due to its dynamic nature in terms of signal quality, interferences and limited battery capacity [

5]. Scheduling of these actions associated with different levels of power helps to allocate the resources in such a way that the overall system throughput is increased and an acceptable level of interference is maintained. However, this is hard to maintain, and therefore, we need an algorithm for learning the scheduling of actions adaptively, which helps to improve the overall system throughput with fairness and the minimum level of interferences.

To illustrate the problem,

Figure 1 shows a basic single cell scenario with one Cellular user (CU), two D2D pairs and one base station having two resource blocks operating in an underlay mode. D2D devices contend for resource blocks for reusing. Here, RB1 is allocated to the cellular user. D2D pair Tx and D2D pair Rx are assigned RB2. Now, D2D candidate Tx and D2D candidate Rx will contend for the resources either for RB1 or for RB2 to access. If we allocate RB1 to a D2D pair closer to the BS, there will be high interference between the D2D pair and the cellular user. So, RB1 should be allocated to the D2D candidate Tx which is closer to the cell edge (d2 > d3). For reusing the RB1, there will be interferences. Our goal is to propose an adaptive learning algorithm for selecting the proper level of power for the RB to minimize the level of interferences and maximize the throughput of the system.

In contrast with existing works, our proposed algorithm helps to learn the proper action selection for resource allocation. We consider reinforcement learning with the cooperation between users by sharing the value function and incorporating a neighboring factor. In addition, we consider a set of states based on system variables which have an impact on the overall QoS of the system. Moreover, we consider both cross-tier interference (interference that the BS receives from D2D transmitter and that the D2D receivers receive from cellular users) and cotier interference (that the D2D receivers receive from D2D transmitters) [

6]. To the best of our knowledge, this is the first work that considers all the above aspects for adaptive resource allocation in D2D communications.

The main contributions of this work can be stated as follows:

We propose an adaptive and cooperative reinforcement learning algorithm to improve achievable system throughput as well as D2D throughput simultaneously. The cooperation is performed by sharing the value function between devices and imposing the neighboring factor in our learning algorithm. A set of actions is considered based on the level of transmission power for a particular Resource block (RB). Further, a set of states is defined considering the appropriate number of system-defined variables. In addition, the reward function is composed of Signal-to-noise-plus-interference ratio (SINR) and the channel gains (between the base station and user, and also between users). Moreover, our proposed reinforcement learning algorithm is an on-policy learning algorithm which considers both exploitation and exploration. This action selection strategy helps to learn the best action to execute, which has a positive impact on selecting the proper level of power allocation to resource blocks. Consequently, this method shows better performance regarding overall system throughput.

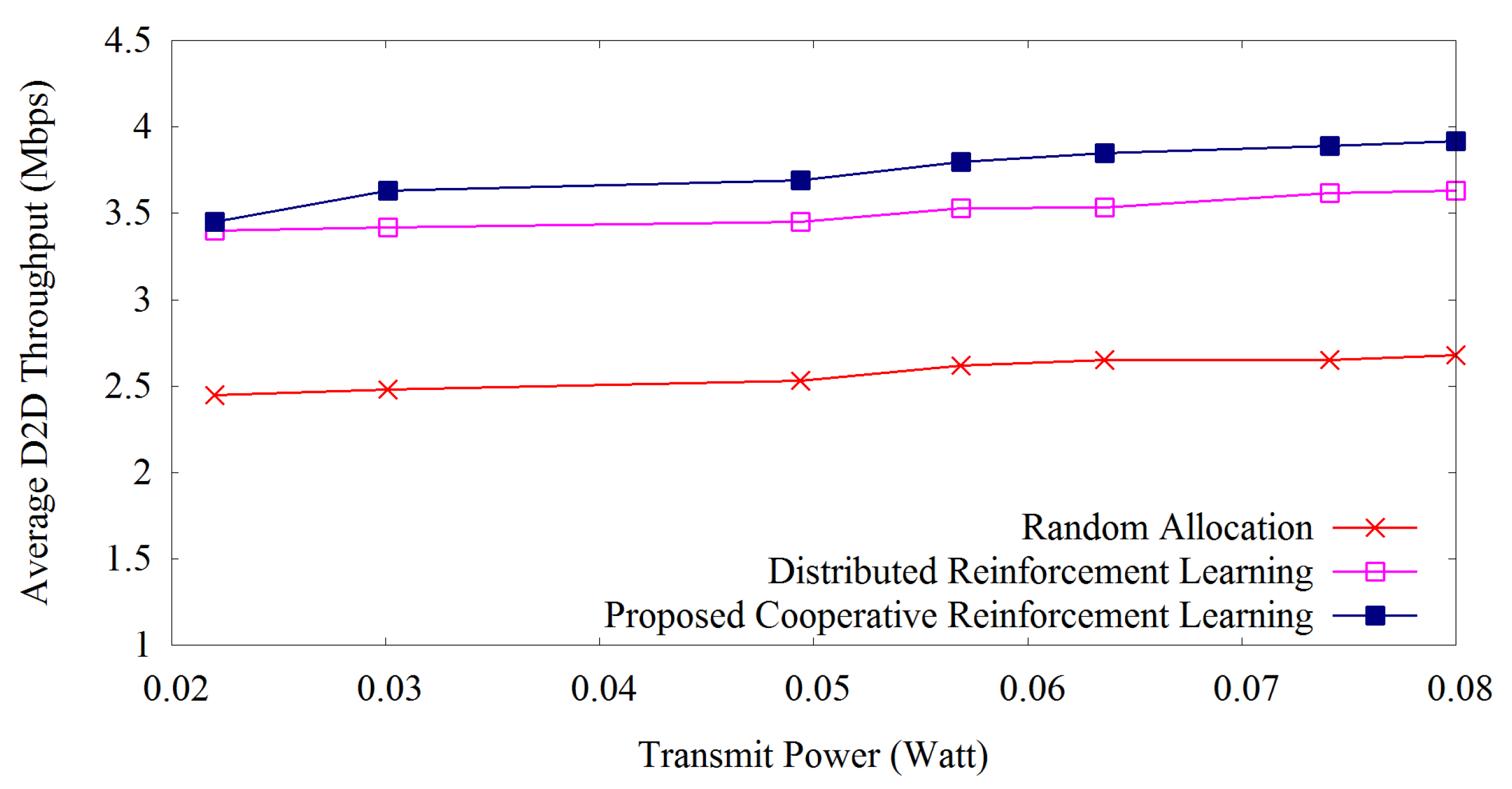

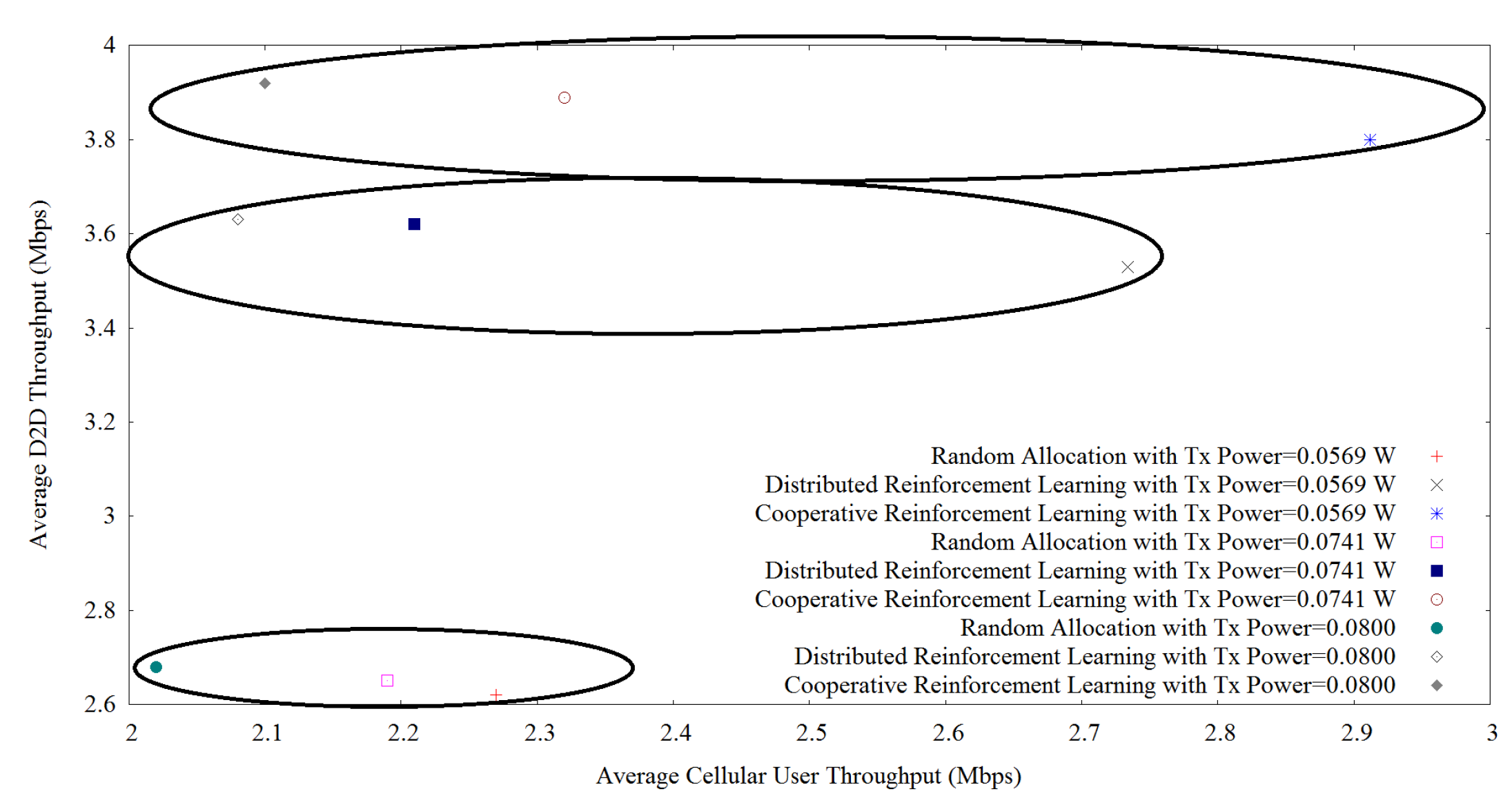

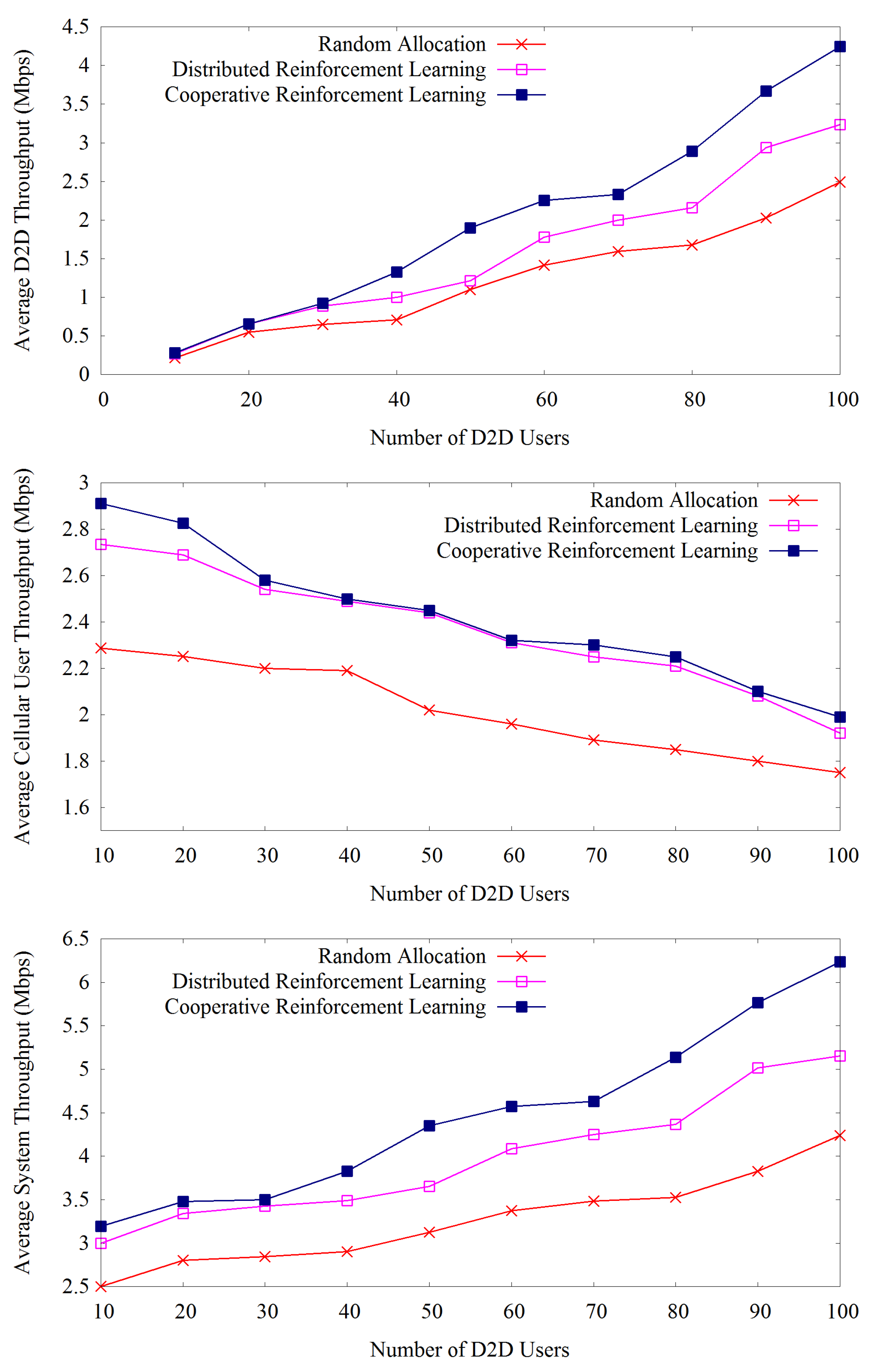

We perform realistic throughput evaluation of the proposed algorithm while varying the transmission power and the number of D2D users. We compare our method with existing distributed reinforcement learning and random allocation of resources in terms of D2D and system throughput considering the system model where Resource block (RB)-power level combination is used for resource allocation. Moreover, we consider fairness among D2D pairs by computing a fairness index which shows that our proposed algorithm achieves balance among D2D users throughput.

The rest of the paper is organized as follows.

Section 2 describes the related works. This is followed by the system model in

Section 3. The proposed cooperative reinforcement learning based resource allocation algorithm is described in

Section 4.

Section 5 presents the simulation results.

Section 6 concludes the paper with future works.

2. Related Works

Recent advances in Reinforcement learning (RL) create a broad scope of adaptive applications to apply. Resource allocation in D2D communication is such an application. Here, we describe at first some classical approaches [

7,

8,

9,

10,

11,

12,

13,

14,

15,

16] followed by existing RL-based resource allocation algorithms [

17,

18].

In [

7], an efficient resource allocation technique for multiple D2D pairs is proposed considering the maximization of system throughput. By exploring the relationship between the number of Resource blocks (RB) per D2D pair and the maximum power constraint for each D2D pair, a sub-optimal solution is proposed to achieve higher system throughput. However, the interference among D2D pairs is not considered. Local water filling algorithm (LWFA) is used for each D2D pair which is computationally expensive. Feng et al. [

8] introduce a resource allocation technique by maintaining the QoS of cellular users and D2D pairs simultaneously to enhance the system performance. A three-step scheme is proposed where the system performs admission control at first and then allocates the power to each D2D pair and its potential Cellular user (CU). A maximum weight bipartite Matching based scheme (MBS) is proposed to select a suitable CU partner for each D2D pair where the system throughput is maximized. However, this work basically focuses on suitable CU selection for the resource sharing where adaptive power allocation is not considered. In [

9], a centralized heuristic approach is proposed where the resources of cellular users and D2D pairs are synchronized considering the interference link gain from D2D transmitter to the BS. They formulate the problem of radio resource allocation to the D2D communication as a Mixed integer nonlinear programming (MINLP). However, MINLP is hard to solve and the adaptive power control mechanism is not considered. Zhao et al. [

10] propose a joint mode selection and resource allocation method for the D2D links to enhance the system sum-rate. They formulate the problem to maximize the throughput with SINR and power constraints for both D2D links and cellular users. They propose a Coalition formation game (CFG) with transferable utility to solve the problem. However, they do not consider the adaptive power allocation problem. In [

11], Min et al. propose a Restricted interference region (RIR) where cellular users and D2D users can not coexist. By adjusting the size of the restricted interference region, they propose the interference control mechanism in a way that the D2D throughput is increased over time. In [

12], the authors consider the target rate of cellular users for maximizing the system throughput. Their proposed method shows better results in terms of system interference. However, their work also focuses on the region control for the interference. They do not consider the adaptive resource allocation for maximizing the system throughput. A common limitation to the works as mentioned above is that they are fully centralized, which requires full knowledge of the link state information that produces redundant information over the network.

In addition to above-mentioned works, Hajiaghajani et al. [

13] propose a heuristic resource allocation method. They design an adaptive interference restricted region for the multiple D2D pairs. In their proposed region, multiple D2D pairs share the resources where the system throughput is increased. However, their proposed method is not adaptive regarding power allocation to the users. In [

14], the authors propose a two-phase optimization algorithm for the adaptive resource allocation which provides better results for system throughput. They propose Lagrangian dual decomposition (LDD) which is computationally complex.

Wang et al. [

15] propose a Joint scheduling (JS) and resource allocation for the D2D underlay communication where the average D2D throughput can be improved. Here, the channel assigned to the cellular users is reused by only one D2D pair and the cotier interference is not considered. In [

16], Yin et al. propose a distributed resource allocation method where minimum rates of cellular users and D2D pairs are maintained. A Game theoretic algorithm (GTA) is proposed for minimizing the interferences among D2D pairs. However, this approach provides low spectral efficiency.

With regards to machine learning for resource allocation in D2D communication, there are only few works, e.g., [

17,

18]. Luo et al. [

17] and Nie et al. [

18] exploit machine learning algorithms for D2D resource allocation. Luo et al. [

17] propose Distributed reinforcement learning (DIRL), Q-learning algorithm for resource allocation which improves the overall system performance in comparison to the random allocator. However, the model of Reinforcement learning (RL) is not well structured. For example, the set of states and a set of actions are not adequately designed. Their reward function is composed of only Signal to interference plus noise power ratio (SINR) metric. The channel gain between the base station and the user, and also the channel gain between users are not considered. This is a drawback since channel gains are important to consider as these help the D2D communication with better SINR level and transmission power, which is reflected in increased system throughput [

19].

Recently, Nie et al. [

18] propose Distributed reinforcement learning (DIRL), Q-learning to solve the power control problem in underlay mode. In addition, they explore the optimal power allocation which helps to maintain the overall system capacity. However, this preliminary study has limitations, for example, in their reward function, the channel gains are not considered. In addition, in their system model only the transmit power level is considered for maximizing the system throughput. To consider RB/subcarrier allocation in the optimization function is a very important issue for mitigating interference [

20]. Moreover, the cooperation between devices for resource allocation is not investigated in these existing works. A summary of the features and limitations of classical and RL-based allocation methods is given in

Table 1.

We propose adaptive resource allocation using Cooperative reinforcement learning (CRL) considering the neighboring factor, improved state space, and a reward function. Our proposed resource allocation method helps to provide mitigated interference level, D2D throughput and consequently an overall improved system throughput.

Table 1 shows the comparison of all the above mentioned works with our proposed cooperative reinforcement learning. Firstly, we categorize the related methods in two types: classical D2D resource allocation methods and Reinforcement learning (RL) based D2D resource allocation methods. We compare these works based on D2D throughput, system throughput, transmission alignment, online task scheduling for resource allocation, and cooperation. We can observe that almost all the methods consider the D2D and system throughput. None of the existing methods for resource allocation consider the transmission alignment, online action scheduling, and cooperation for the adaptive resource allocation.

3. System Model

We consider a network that consists of one Base station (BS) and a set of

Cellular users (CU), i.e.,

. There are also

D2D pairs,

coexist with the cellular users within the coverage of BS. In a particular D2D pair,

and

are the D2D transmitter and D2D receiver respectively. The set of User equipments (UE) in the network is given by UE =

. Each D2D transmitter

selects an available Resource block (RB)

r from the set

. In addition, underlay D2D transmitters select the transmit power from a finite set of power levels, i.e.,

. Each D2D transmitter should select resources, i.e., RB-power level combination refers to transmission alignment [

21].

For each RB , there is a predefined threshold for maximum aggregated interference. We consider that the value of is known to the transmitters using the feedback control channels. An underlay transmitter uses a particular transmission alignment in a way that the cross-tier interference should be within the threshold limit. According to our proposed system model, only one CU can be served by one RB where D2D users can reuse the same RB to improve the spectrum efficiency.

For each transmitter , the transmit power over the RBs is determined by the vector where denotes the transmit power level of transmitter over resource block r. If RB is not allocated to the transmitter then the power level . As we assume that each transmitter selects only one RB where only one entity in the power level .

Signal-to-interference-plus-noise-ratio (SINR) can be treated as an important factor to measure the link quality. The received SINR for any D2D receiver over

rth RB as follows:

where

and

denote the

uth D2D user and cellular user uplink transmission power on

rth RB, respectively.

,

where

is the upper bound of each D2D user’s transmit power.

is the noise variance [

9].

, and are the channel gains in the uth D2D link, the channel gain from D2D transmitter u to receiver v, and the channel gain from cellular transmitter c to receiver u, respectively. is a D2D pairs set sharing the rth RB.

The SINR of a cellular user

on the

rth RB is

where

and

indicate the channel gains on the

rth RB from BS to cellular user

c and

vth D2D transmitter, respectively.

The total path-loss which includes the antenna gain between BS and the user

u is:

where

is the pathloss between a BS and the user at a distance

d meter.

is the log-normal shadow path-loss of user

u.

is the radiation pattern [

22].

can be expressed as follows:

where

is the carrier frequency in GHz and

is the base station antenna height [

22].

The linear gain between the BS and a user is .

For D2D communication, the gain between two users

u and

v is

[

23]. Here,

is the distance between transmitter

u and receiver

v.

is a constant pathloss exponent and

is a normalization constant.

The objective of resource allocation problem (i.e., to allocate RB and transmit power) is to assign the resources in a way that maximizes system throughput. System throughput is the sum of D2D users and CU throughput, which is calculated by Equation (

6).

The resource allocation can be indicated by a binary decision variable,

where

The aggregated interference experienced by RB

r can be expressed as follows

Let

denote the resource e.g., RB and transmission power allocation. So, the allocation problem can be expressed as follows:

where

and

is the bandwidth corresponding to a RB. The objective function is to maximize the throughput of the system constrained by that the aggregated interference should be limited by a predefined threshold. The number of RB selected by the transmitter should be one where each can select one power level at each RB. Our goal is to investigate the optimal resource allocation in such a way that the system throughput is maximized by applying cooperative reinforcement learning.

4. Cooperative Reinforcement Learning Algorithm for Resource Allocation

In this section, we describe the basics of Reinforcement learning (RL), followed by our proposed cooperative reinforcement learning algorithm. After that, we describe the set of states, the set of actions and reward function for our proposed algorithm. Finally, Algorithm 1 shows the overall proposed resource allocation method and Algorithm 2 shows the execution steps of our proposed cooperative reinforcement learning.

We apply a Reinforcement learning (RL) algorithm named state action reward state action, SARSA(

), for adaptive resource in D2D communication for efficient resource allocation. This variant of standard SARSA(

) [

24] algorithm has some important features like cooperation by using a neighboring factor, a heuristic policy for exploration and exploitation, and a varying learning rate considering the visited state-action pair. Currently, we are applying the learning algorithm for the resource allocation of D2D users considering that the allocation of cellular users is performed prior to the allocation of D2D users. We consider the cooperative fashion of this learning algorithm which helps to improve the throughput as explained in

Section 1 by sharing the value function and incorporating weight factors for the neighbors of each agent.

In reinforcement learning, there is no need for prior knowledge about the environment. Agents learn how to behave with the environment based on the previous experience achieved, which is traced by a parameter, i.e., Q-value and controlled by a reward function. There should be some actions/tasks to perform at every time step. After performing every action, the agents shifts from one state to another and it gets a reward that reflects the impact of that action, which helps to decide about the next action to perform. The basic reinforcement learning is a form of Markov decision process (MDP).

Figure 2 depicts the overall model of a reinforcement learning algorithm.

Each agent in RL has the following components [

25]:

Policy: The policy acts as a decision making function for the agents. All other functions/components help to improve the policy for better decision making.

Reward function: The reward function defines the ultimate goal of an agent. This helps to assign a value/number to the performed action, which indicates the intrinsic desirability of the states. The main objective of the agent is to maximize the reward function in the long run.

Value function: The value function determines the suitability of action selection in the long run. The value of a state is accumulated reward over long run when starting from the current state.

Model: The model of the environment mimics the behavior of the environment which consists of a set of states and a set of actions.

In our model of the environment, we consider the components of the reinforcement learning algorithm as follows:

Agent: All the resource allocators: D2D Transmitters.

State: The state of D2D user

u on RB

r at time

t is defined as:

We consider three variables , and for defining the states for maintaining the overall quality of the network. is the SINR of a cellular user on the rth RB. is the channel gain between the BS and an user u. is the channel gain between two users u and v. The variables , and are important to consider for the resource allocation. The SINR is the indicator of the quality of service of the network. In addition, if the channel gains ( and ) quality is good then it is possible to achieve higher throughput without excessively increasing the transmit power, i.e., without causing too much interference to others. On the other hand, if the channel gain is too low, higher transmit power is required, which leads to increased interference.

Now, the state values of these variables can be either 0 or 1 based on following conditions. If the value of the variables are greater than or equal to a threshold value, then this denotes that their state value is ‘0’. On the contrary, if the values are less than the threshold value, then their state value is ‘1’. So, means state value ’1’ and means state value ’0’. Similarly, means state value ‘1’ and means state value ‘0’. Consequently, means state value ‘1’ and means the state value ‘0’. In this way, based on the combination of the value of these variables, the total number of possible states is eight where , and are the minimum SINR and channel gain guaranteeing the QoS performance of the system.

Action/Task: The action of each agent consists of a set of transmitting power levels. It is denoted by

where

r represents the

rth Resource Block (RB), and

means that every agent has

power levels. In this work, we consider the power levels to assign within the range of 1 to

in the interval of 1 dBm.

Reward Function: The reward function for the reinforcement learning is designed focusing on the throughput of each agent/user which is formulated as follows:

when

,

and

. Otherwise,

ℜ = − 1. SINR (

u) denotes the signal to interference plus noise power ratio of user

u (Step 7–10 in Algorithm 1).

SARSA(

) is an on-policy reinforcement learning algorithm that estimates the value of the policy being followed where

is a parameter such as learning rate [

26]. In SARSA learning algorithm, every agent needs to maintain a Q matrix which is initially assigned 0 and the agents may be in any state. Based on performing one particular action, it shifts from one state to another. The basic form of the learning algorithm is

, which means that the agent was in state

, did action

, received reward

ℜ, and ended up in state

, from which it decided to perform action

. This provides a new iteration to update

.

SARSA(

) helps to find out the appropriate sets of actions for some states. The considered state-action pair’s value function

as follows:

In Equation (

8),

is a

discount-factor which varies from 0 to 1. The higher the value, the more the agent relies on future rewards than on the immediate reward. The objective of applying reinforcement learning is to find the optimal policy

which maximizes the value function

. We consider the cooperative fashion of this algorithm where each agent shares the value function with each other.

At each time step,

for the iteration

,

is updated with the temporal difference error

and the immediate received reward. The

Q value has the following update rules:

for all

s,

a.

In Equation (

9),

is the learning rate which decreases with time.

is the temporal difference error which is calculated by following rule (Step 7 in Algorithm 2):

In Equation (

10),

is a discount-factor which varies from 0 to 1. The higher the value, the more the agent relies on future rewards than on the immediate reward.

represents the reward received for performing an action.

f is the neighboring weight factor of agent

i where this factor consists of the effect of neighbor’s Q-value, which helps to update the Q-value of agent

i that is calculated as follows [

27]:

where

is the number of neighbors of agent

i within the D2D radius. BS provides the information of number of neighbors for each agent [

28].

There is a trade-off between exploration and exploitation in reinforcement learning. Exploration chooses an action randomly in the system to find out the utility of that chosen action. Exploitation deals with the actions which have been chosen based on previously learned utility of the actions.

We use a heuristic for exploration probability at any given time such as:

where

and

denote upper and lower boundaries for the exploration factor, respectively.

represents the maximum number of states which is eight in our work and S represents the current number of states already known [

29]. At each time step, the system calculates

and generates a random number in the interval

. If the selected random number is less than or equal to

, the system chooses a uniformly random task (exploration), otherwise it chooses the best task using

Q values (exploitation).

k is a constant which controls the effect of unexplored states (Step 4 in Algorithm 2).

SARSA(

) helps to improve the learning technique by eligibility trace. In Equation (

9),

is the eligibility trace. The eligibility trace is updated by the following rule:

Here, is learning parameter for guaranteed convergence, whereas is the discount factor. In addition, the eligibility trace helps to provide higher impact on revisited states. For example, for a state-action pair , if and , the state-action pair is reinforced. Otherwise, the eligibility trace is removed (Step 8 in Algorithm 2).

The learning rate

is decreased in such a way that it reflects the degree to which a state-action pair has been chosen in the recent past. It is calculated as:

where

is a positive constant and

represents the visited state-action pairs so far [

30] (Step 6 in Algorithm 2).

| Algorithm 1: Proposed resource allocation method |

Input : = 23 dBm, Number of resource blocks = 30, Number of cellular users = 30,

Number of D2D user pairs = 12, D2D radius = 20 m, Pathloss parameter = 3.5, Cell

radius = 500 m, = 0.004, = 0.2512, = 0.2512 [9]

Output: RB-Power level, System Throughput

loop Pathloss calculation by Gain between the BS and a user, Gain between two users, SINR of the D2D users on the rth RB, SINR of the cellular users on the RB, if , and then else end Apply Algorithm 2 for the power allocation end loop

|

| Algorithm 2: Cooperative SARSA() reinforcement learning algorithm over number of iterations. |

Initialize = 0, = 0, = 0.3, = 0.1, k = 0.25, = 1, , , [ 17, 29] loop Determine the current s based on , and Select a particular action a based on the policy, Execute the selected action Update learning rate by Determine the temporal difference error by Update eligibility traces Update the Q-value, Update the value function and share with neighbors Shift to the next based on the executed action end loop

|

Algorithm 1 depicts the overall proposed resource allocation method. After setting the initial input parameters, the system oriented parameters, i.e., pathloss, channel gains, SINR of the D2D users and cellular users on the rth RB are calculated (Step 2–6 in Algorithm 1). Then the reward function is calculated (Step 8) and is assigned when the state values satisfy the constraint in step 7. After that Algorithm 2 is applied for the adaptive resource allocation. Algorithm 2 shows our proposed reinforcement learning algorithm execution steps for resource allocation over number of iterations.