Research on 3D Point Cloud Data Preprocessing and Clustering Algorithm of Obstacles for Intelligent Vehicle

Abstract

1. Introduction

2. 3D Point Cloud Data Filtering Algorithm Design

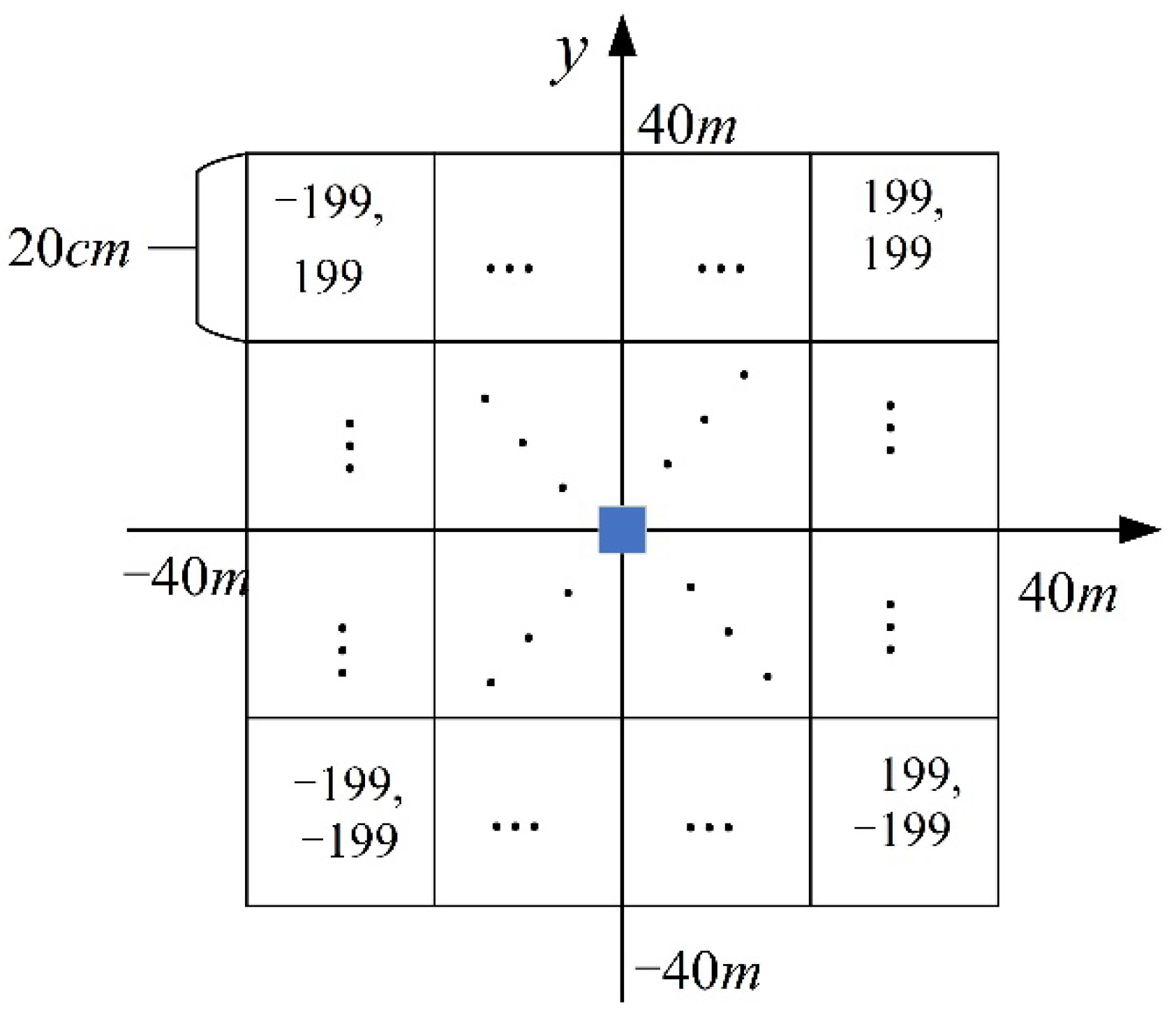

2.1. Vehicle Body Point Removal

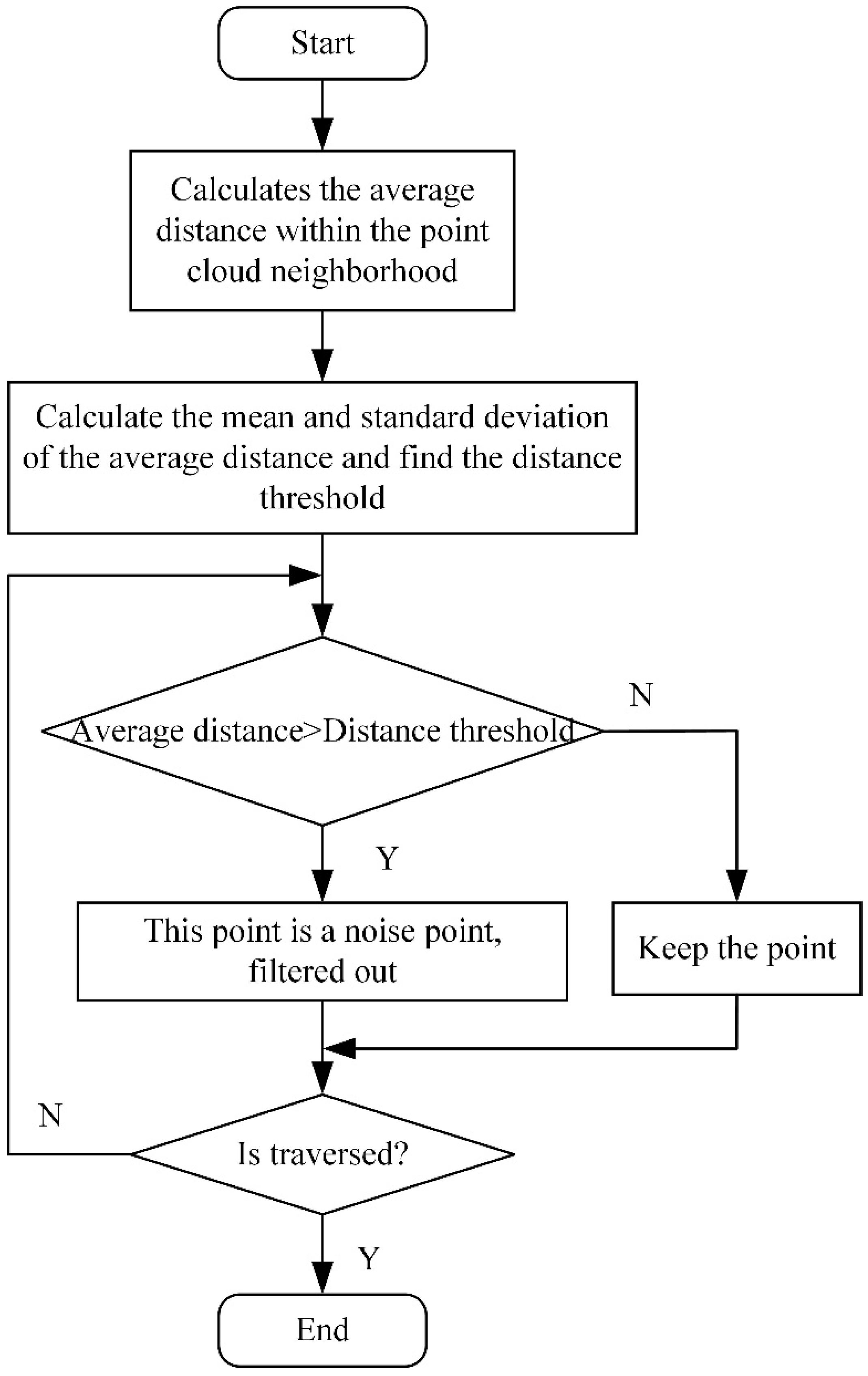

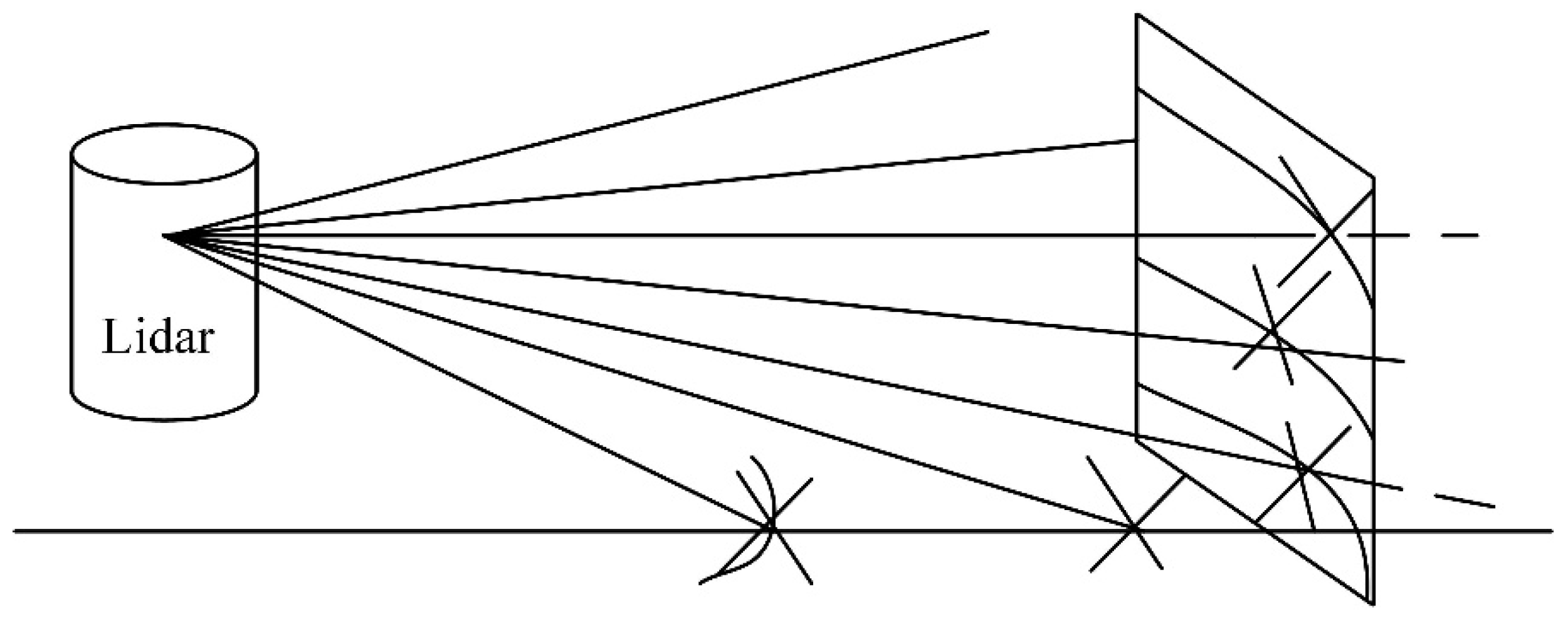

2.2. Noise Point Removal

2.3. Point Cloud Down-Sampling

3. Design of Ground Segmentation Algorithm for 3D Point Cloud Data

4. Design of a Point Cloud Clustering Algorithm Based on Improved DBSCAN

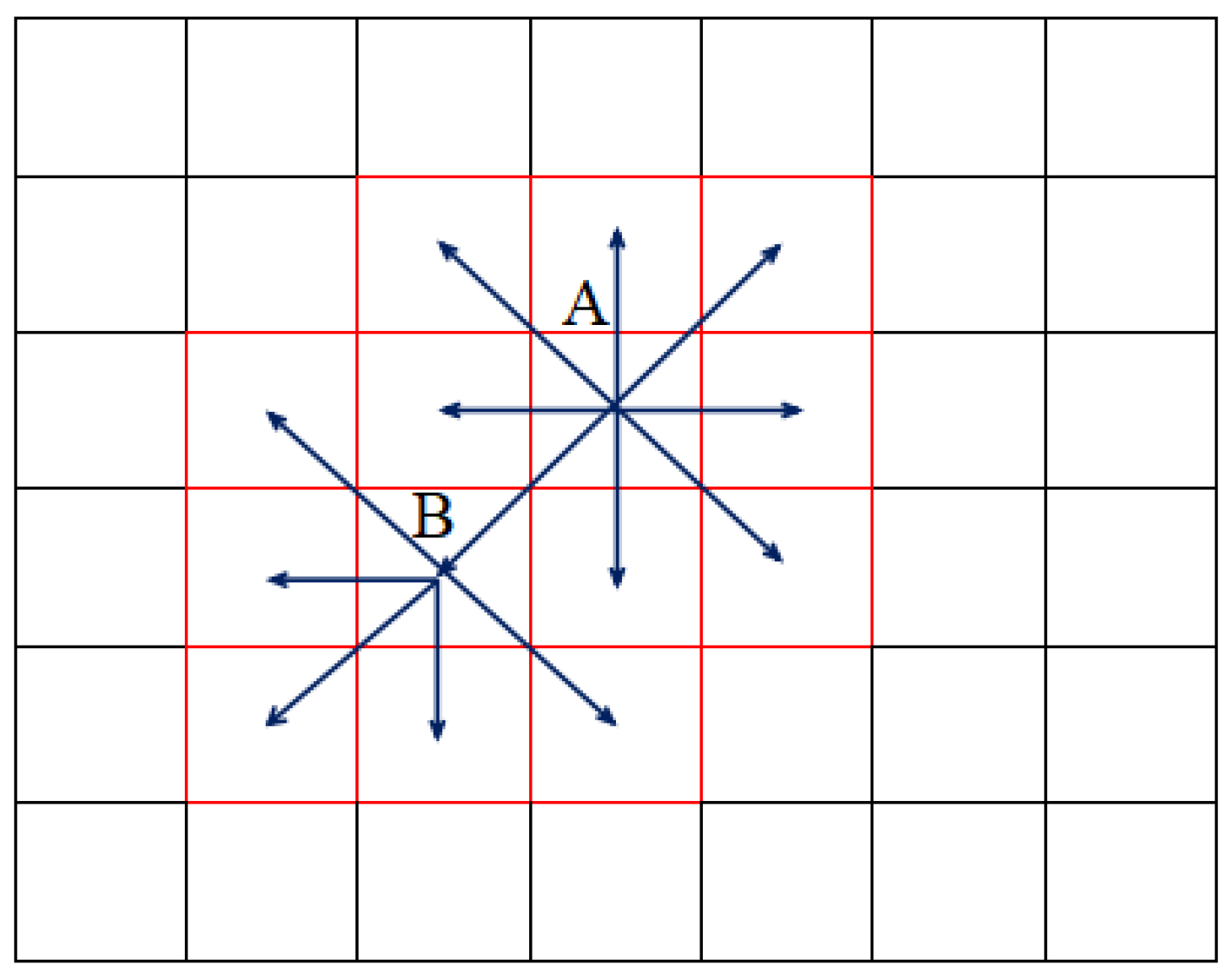

4.1. Design of Region Growing Algorithm

4.2. Design of Improved DBSCAN Fusion Region Growing Algorithm

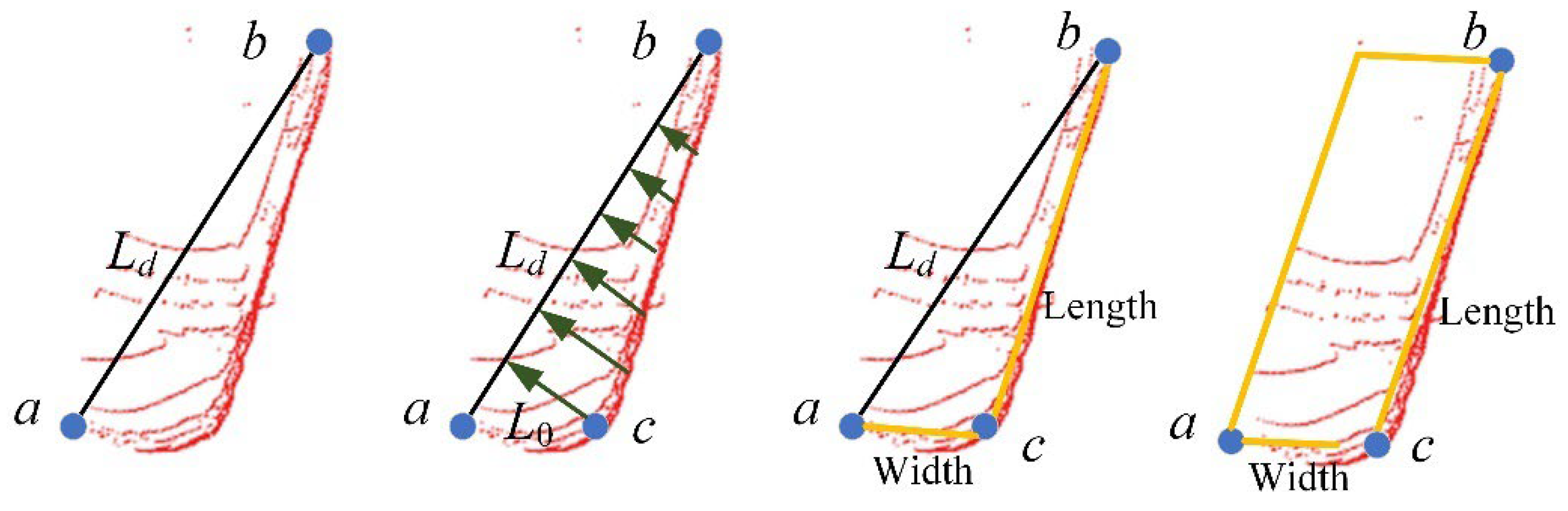

4.3. Bounding Box Fitting

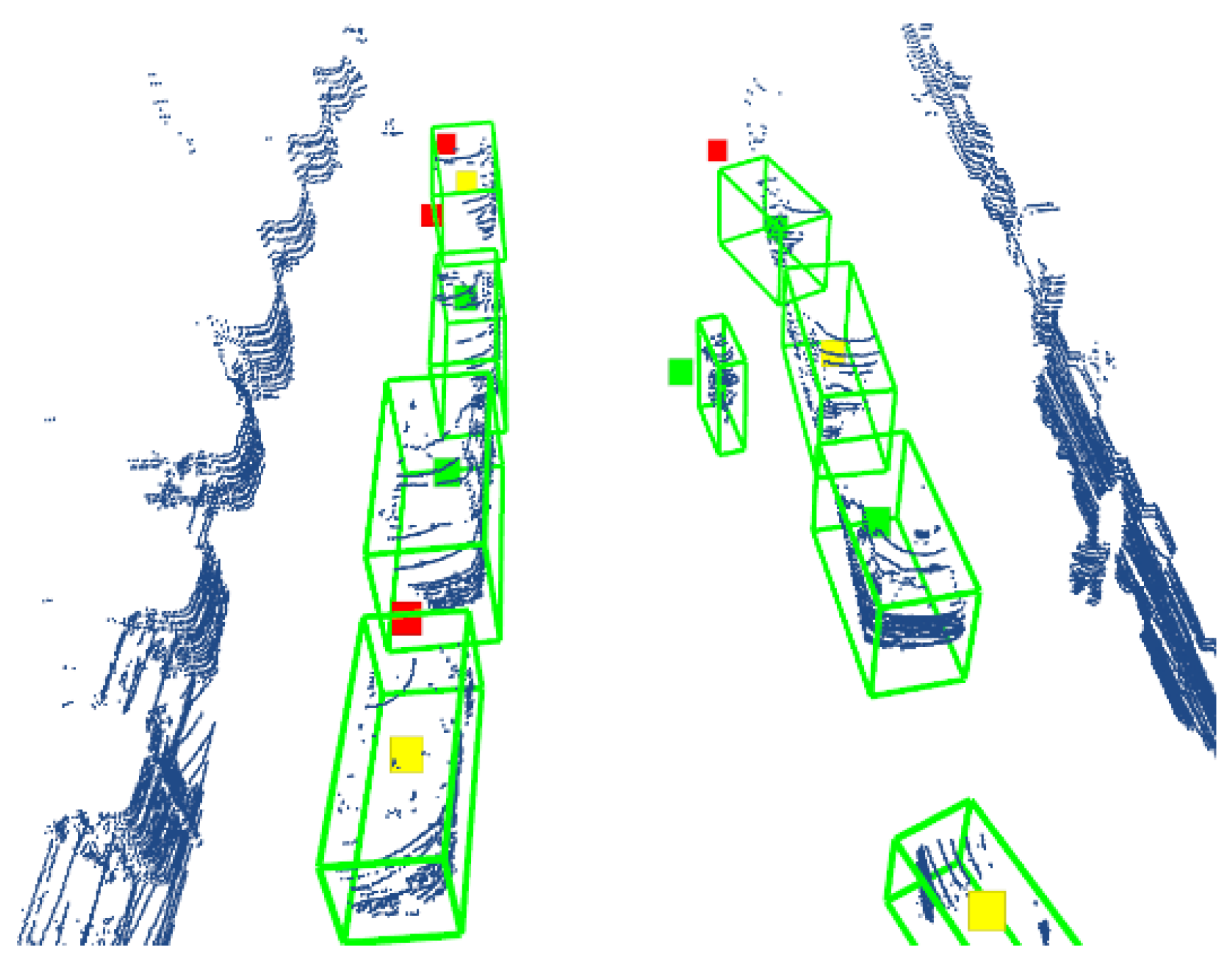

5. Experimental Verification

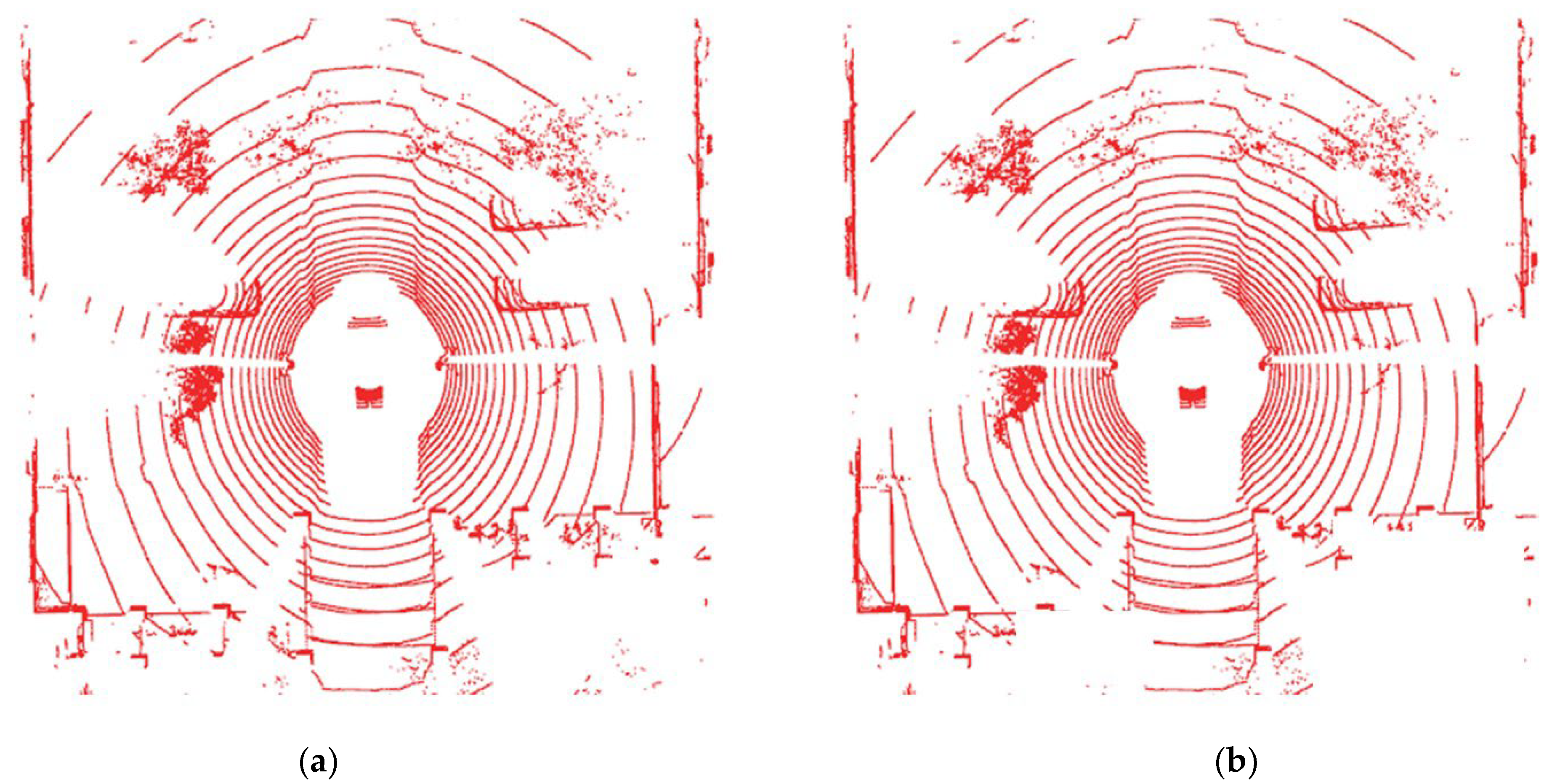

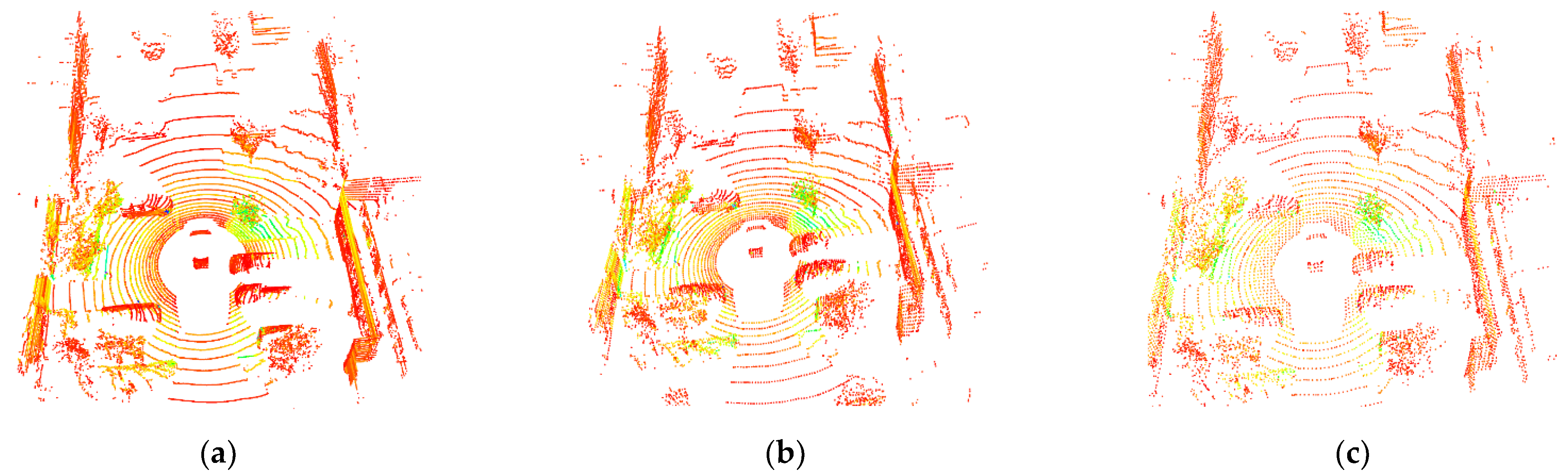

5.1. Analysis of Point Cloud Preprocessing Experiment

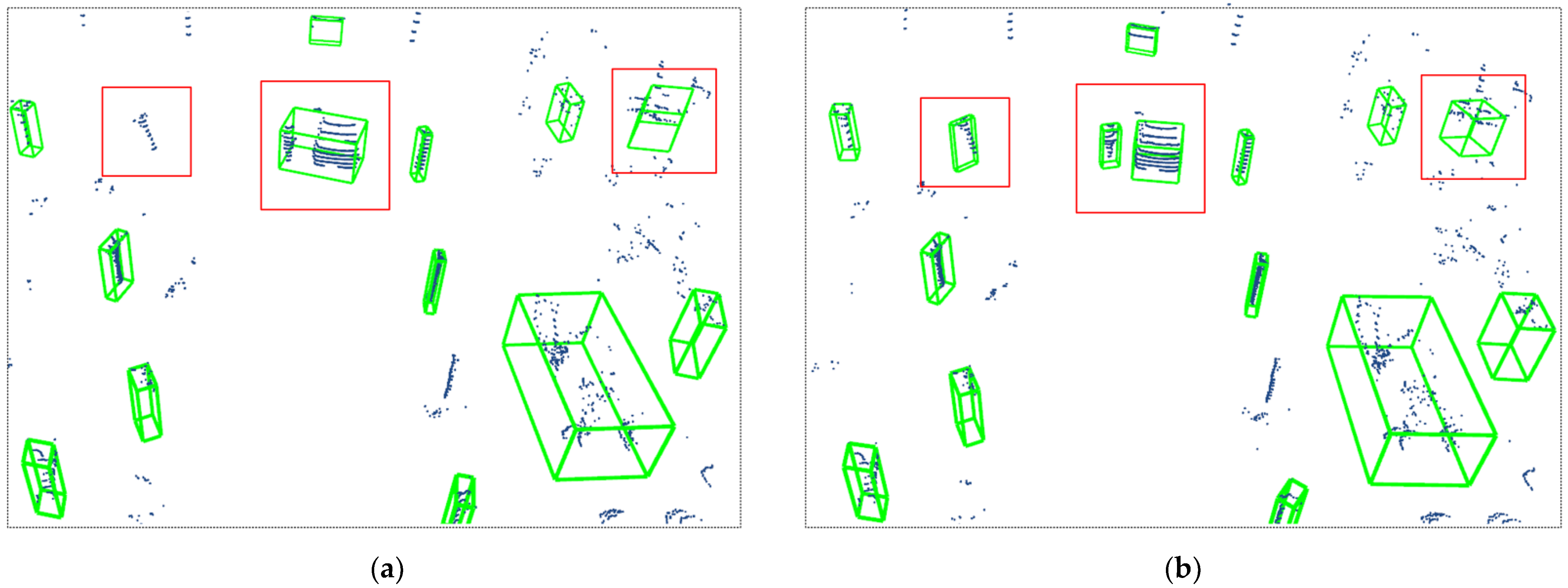

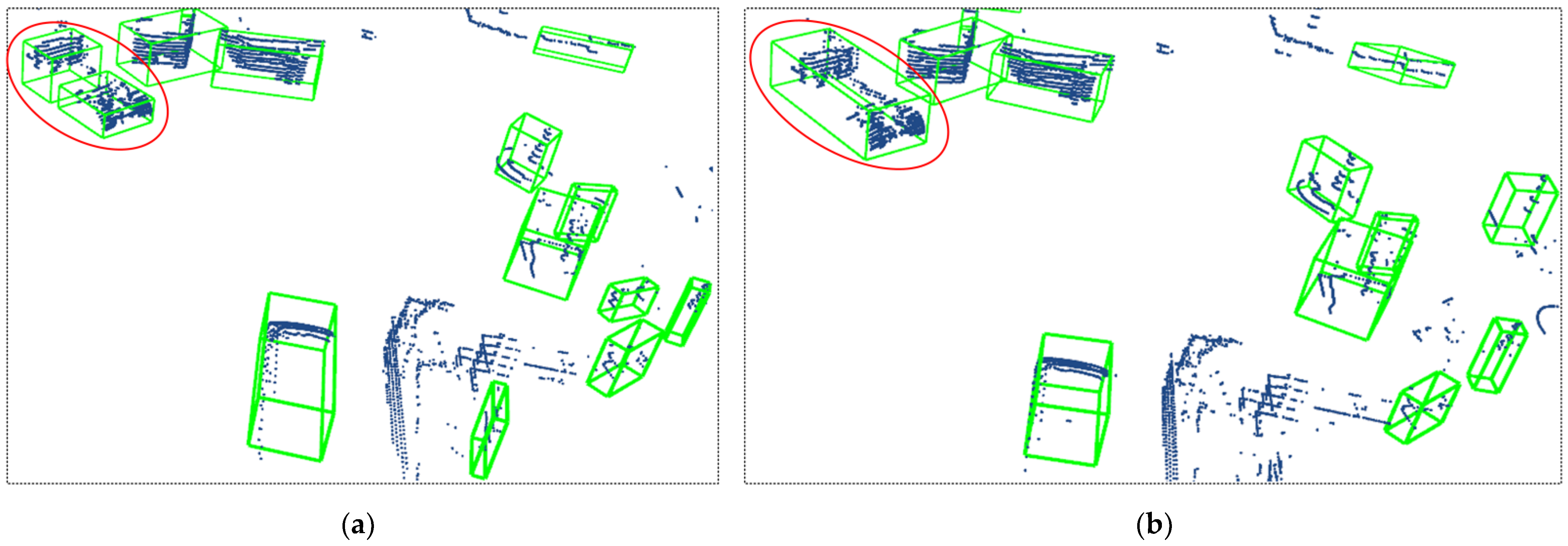

5.2. Analysis of Point Cloud Clustering Experiments

6. Conclusions and Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, P.; Gu, T.; Sun, B.; Huang, D.; Sun, K. Research on 3D Point Cloud Data Preprocessing and Clustering Algorithm of Obstacles for Intelligent Vehicle. World Electr. Veh. J. 2022, 13, 130. https://doi.org/10.3390/wevj13070130
