Proposal of a Cost-Effective and Adaptive Customized Driver Inattention Detection Model Using Time Series Analysis and Computer Vision

Abstract

1. Introduction

- Detailed eye movement tracking: Accurate measurement of gaze movement is crucial for assessing driver attention.

- Customized monitoring: The system combines eye tracking with empirical analysis of the driver's repeated interactions with key vehicle devices, such as the side mirrors, rear-view mirror, and center console.

- Hybrid model: Deep learning models for video analysis collect visual information about the driver, and this information feeds a machine learning-based time series classification model, which strengthens classification in an on-device environment.

- Performance verification: The evaluation algorithm follows Euro NCAP’s DMS criteria and is tested under actual driving conditions, so the reported performance accounts for the practical variables encountered on the road.

2. Related Work

3. Proposed System

3.1. Workflow

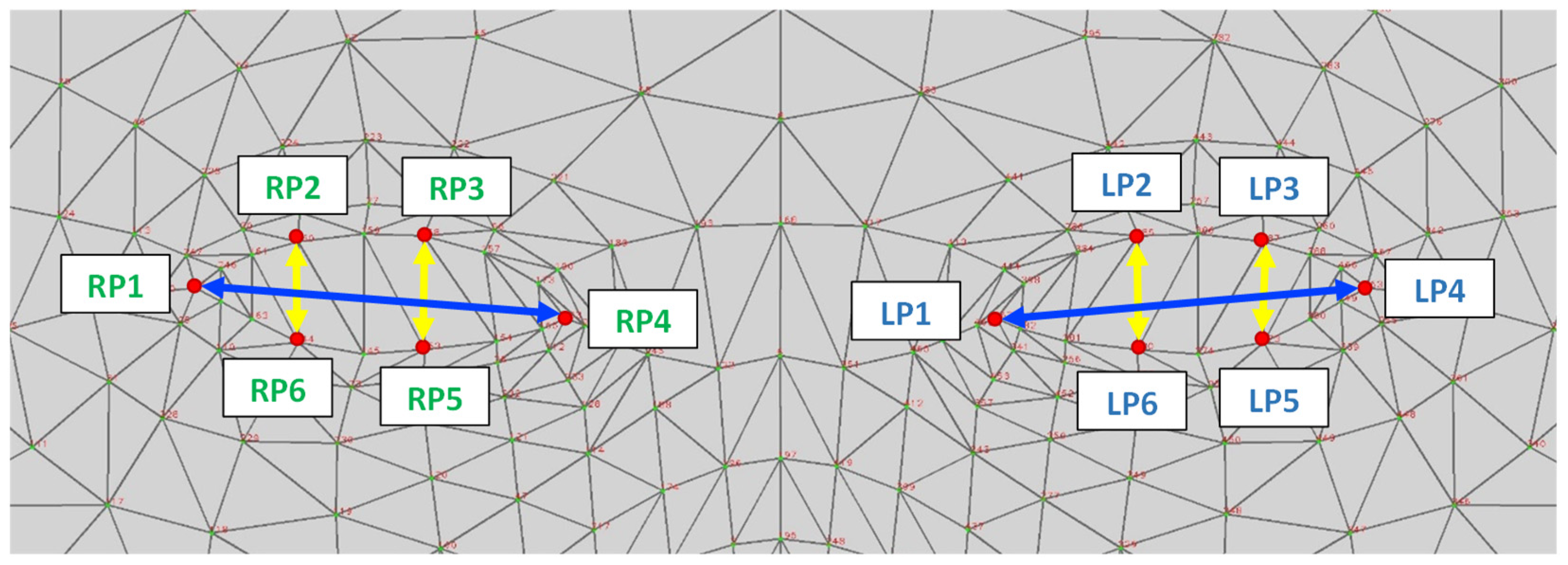

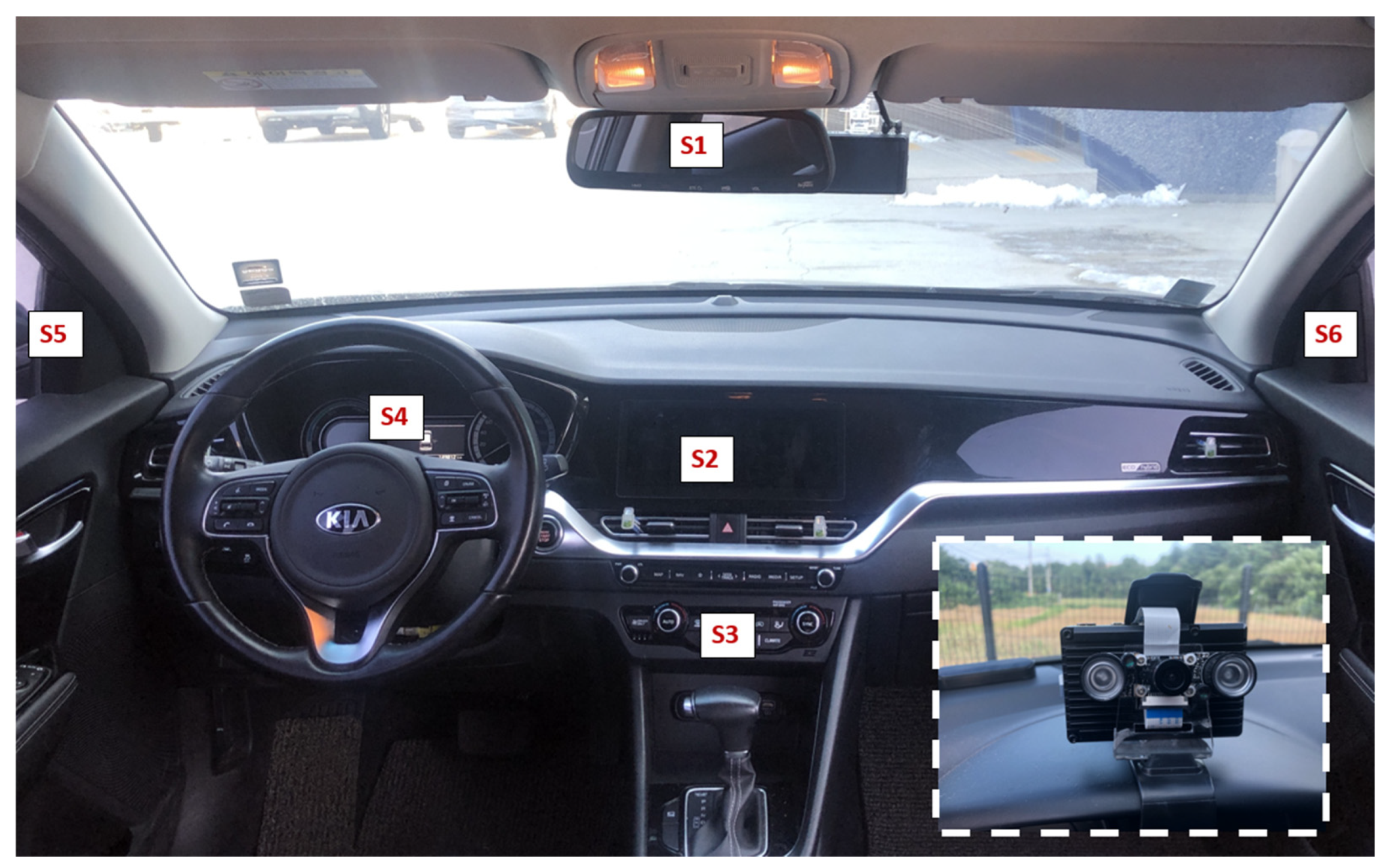

3.2. Data Collection

- I represents the specific coordinate values within the eye. It provides precise positions relative to the x and y coordinate axes.

- D is the measured distance from the geometric center of the eye to the pupil, indicating how far the pupil is displaced from the center of the eye.

- A is a constant representing the physical distance from the camera to the subject’s face. It is used to scale measurements within the image.

- C represents the length of one side of the Region of Interest (ROI) bounding box surrounding the face. It is important for normalizing eye positions to a standard size across various images and scenarios.

- H represents the angle of the head, where Hy denotes the cosine value of the yaw angle and Hp denotes the cosine value of the pitch angle.
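To make the roles of these variables concrete, the following minimal sketch computes an ROI-normalized pupil offset from I, C, and H. It is not the paper's formula: the function name `gaze_offset`, the division by the head-pose cosines, and the omission of the camera-distance constant A are all assumptions made only for illustration.

```python
import math


def gaze_offset(iris_xy, eye_center_xy, roi_side_px, cos_yaw=1.0, cos_pitch=1.0):
    """Return (dx, dy, d): pupil offset from the eye center and its magnitude.

    iris_xy, eye_center_xy: pixel coordinates (the role of I in the list above).
    roi_side_px: side length of the face ROI box (the role of C).
    cos_yaw, cos_pitch: head-pose cosines (the roles of Hy and Hp).
    """
    if roi_side_px <= 0:
        raise ValueError("roi_side_px must be positive")

    # Normalize by the ROI side so offsets are comparable across face sizes.
    dx = (iris_xy[0] - eye_center_xy[0]) / roi_side_px
    dy = (iris_xy[1] - eye_center_xy[1]) / roi_side_px

    # ASSUMPTION: compensate foreshortening by dividing by the head-pose
    # cosines; the source does not state how H enters the calculation.
    dx /= max(abs(cos_yaw), 1e-6)
    dy /= max(abs(cos_pitch), 1e-6)

    # d plays the role of D: distance from the eye center to the pupil.
    return dx, dy, math.hypot(dx, dy)
```

Normalizing by the ROI side keeps the offset roughly independent of how large the face appears in the frame, which is the purpose the list above attributes to C.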

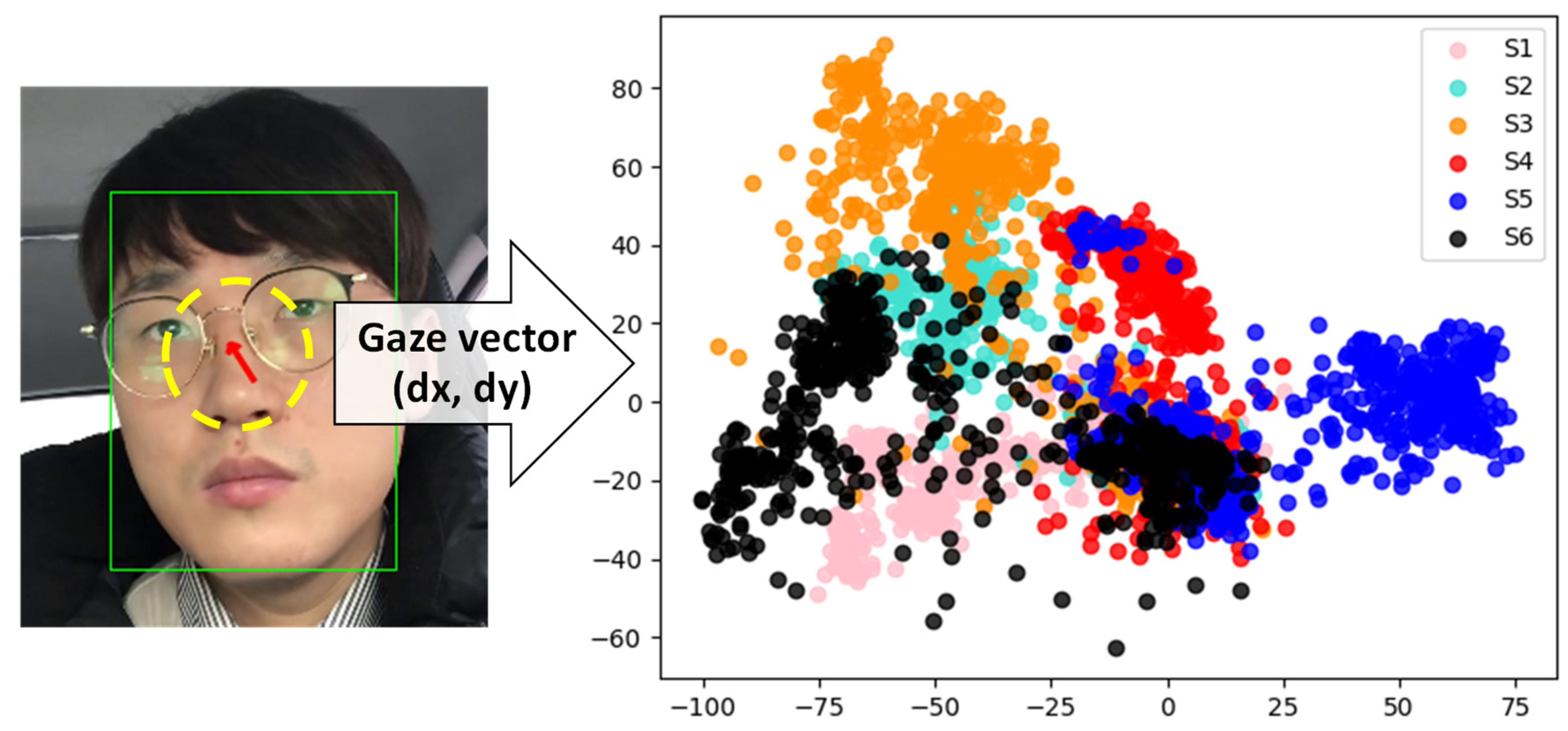

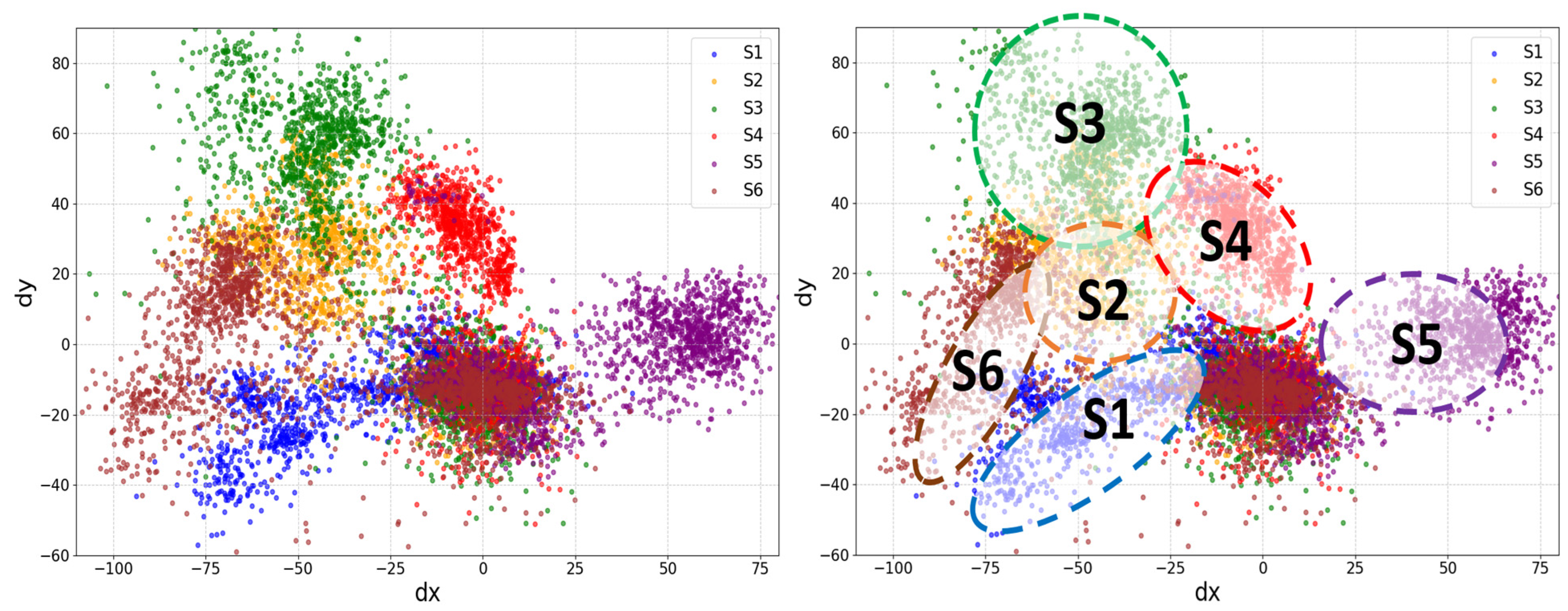

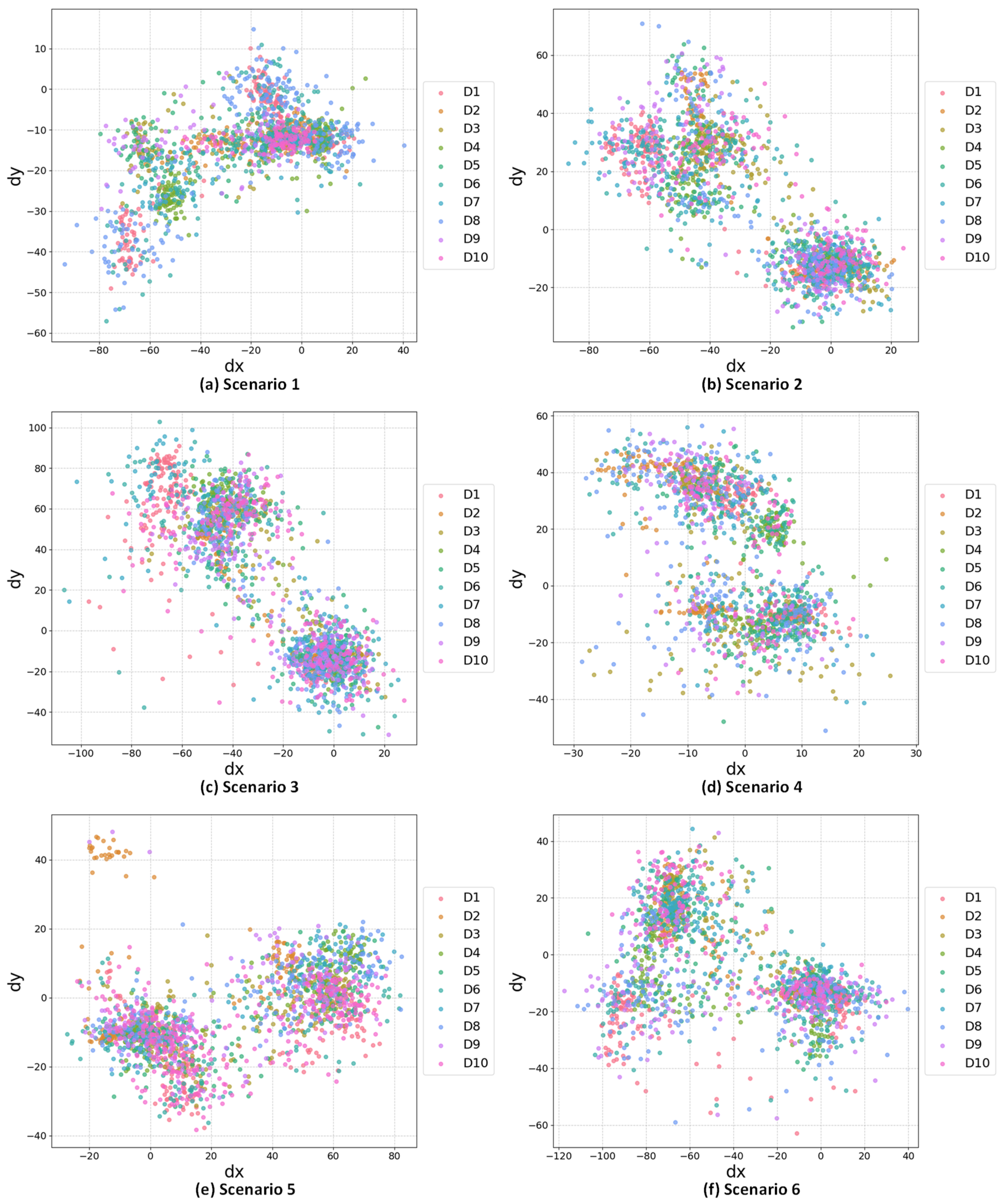

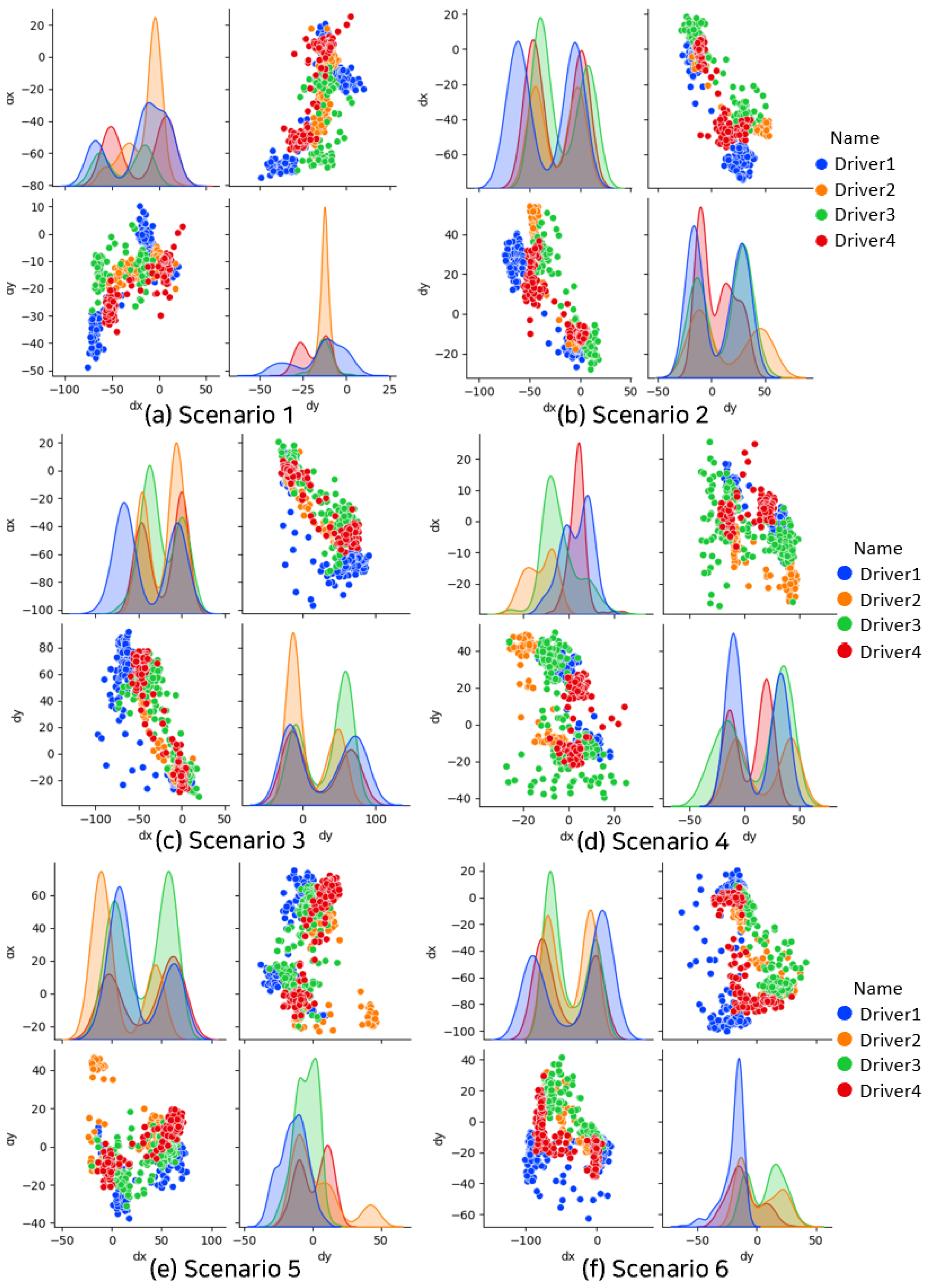

- Inter-driver variability: Within each scenario, the scatter plots reveal distinct clustering patterns for each driver, indicating persistent individual differences in gaze behavior even under similar driving conditions. This is further supported by the varied shapes and peaks of the KDE curves for each driver.

- Scenario-specific patterns: The overall distribution of gaze vectors shows marked differences across scenarios, suggesting that different driving tasks elicit distinct gaze behaviors. For instance, Scenario 3 exhibits a more diffuse pattern compared to the tighter clustering in Scenario 1.

- Bimodal distributions: In several scenarios (e.g., S3, S5), the KDE curves for dy exhibit bimodal distributions for some drivers. This could indicate alternating attention between two vertical points of interest, such as the road ahead and the dashboard.

- Density hotspots: Areas of high point density in the scatter plots, often corresponding to peaks in the KDE curves, represent frequently occurring gaze positions. These hotspots could be indicative of key areas of visual attention for specific driving tasks.
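The bimodality observation above can be checked numerically. The sketch below estimates a one-dimensional Gaussian KDE over vertical gaze offsets and counts its peaks. The bandwidth, the evaluation grid, and the `min_rel_height` filter are illustrative assumptions, not values taken from the study.

```python
import math


def gaussian_kde(samples, grid, bandwidth):
    """Evaluate a Gaussian kernel density estimate of `samples` on `grid`."""
    norm = 1.0 / (len(samples) * bandwidth * math.sqrt(2.0 * math.pi))
    return [
        norm * sum(math.exp(-0.5 * ((g - s) / bandwidth) ** 2) for s in samples)
        for g in grid
    ]


def count_modes(density, min_rel_height=0.1):
    """Count local maxima that reach at least min_rel_height of the top peak."""
    top = max(density)
    modes = 0
    for i in range(1, len(density) - 1):
        is_peak = density[i] > density[i - 1] and density[i] >= density[i + 1]
        if is_peak and density[i] >= min_rel_height * top:
            modes += 1
    return modes


# Synthetic dy samples alternating between two vertical points of interest.
dy_samples = [-32, -31, -30, -29, -28, 28, 29, 30, 31, 32]
grid = list(range(-60, 61))
density = gaussian_kde(dy_samples, grid, bandwidth=3.0)
n_modes = count_modes(density)
```

A count of two for a driver's dy samples would be consistent with alternating attention between two vertical targets, although the result depends on the chosen bandwidth.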

3.3. Data Preprocessing

3.4. Driver Inattention Estimation

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Autonomous Vehicles—California DMV. Available online: https://www.dmv.ca.gov/portal/vehicle-industry-services/autonomous-vehicles/ (accessed on 10 March 2023).

- AbuAli, N.; Abou-zeid, H. Driver Behavior Modeling: Developments and Future Directions. Int. J. Veh. Technol. 2016, 2016, 6952791.

- UN Regulation No. 157 Uniform Provisions Concerning the Approval of Vehicles with Regard to Automated Lane Keeping Systems, E/ECE/TRANS/505/Rev.3/Add.151; UNECE: Geneva, Switzerland, 2022; Available online: https://unece.org/ (accessed on 22 February 2024).

- Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time facial surface geometry from monocular video on mobile GPUs. arXiv 2019, arXiv:1907.06724.

- Petitjean, F.; Ketterlin, A.; Gançarski, P. A global averaging method for dynamic time warping, with applications to clustering. Pattern Recognit. 2011, 44, 678–693.

- Vogelpohl, T.; Kühn, M.; Hummel, T.; Vollrath, M. Asleep at the automated wheel—Sleepiness and fatigue during highly automated driving. Accid. Anal. Prev. 2019, 126, 70–84.

- Zhao, C.; Gao, Y.; He, J.; Lian, J. Recognition of driver distraction based on improved YOLOv5 target detection. Accid. Anal. Prev. 2022, 165, 106505.

- Deng, Y.; Wu, C.H. A Hybrid CNN-LSTM Approach for Real-Time Driver Fatigue Detection. IEEE Trans. Intell. Transp. Syst. 2023, 24, 5123–5133.

- ISO/AWI TR 5283-1; Road Vehicles-Driver Readiness and Intervention Management-Part 1: Partial Automation (Level 2) [Under Development]. ISO: Geneva, Switzerland, 2021.

- EuroNCAP. Euro NCAP Assessment Protocol-SA—Safe Driving, v10.2; Euro NCAP: Leuven, Belgium, 2023.

- Shahid, A.; Wilkinson, K.; Marcu, S.; Shapiro, C.M. (Eds.) Karolinska sleepiness scale (KSS). In STOP, THAT and One Hundred Other Sleep Scales; Springer: Berlin/Heidelberg, Germany, 2012; pp. 209–210.

- Subasi, A. Automatic recognition of alertness level from EEG by using neural network and wavelet coefficients. Expert Syst. Appl. 2005, 28, 701–711.

- Jiao, Y.; Deng, Y.; Luo, Y.; Lu, B.L. Driver sleepiness detection from EEG and EOG signals using GAN and LSTM networks. Neurocomputing 2020, 408, 100–111.

- Lin, C.T.; Chuang, C.H.; Huang, C.S.; Tsai, S.F.; Lu, S.W.; Chen, Y.H.; Ko, L.W. Wireless and wearable EEG system for evaluating driver vigilance. IEEE Trans. Biomed. Circuits Syst. 2014, 8, 165–176.

- Li, G.; Boon-Leng, L.; Wan-Young, C. Smartwatch-based wearable EEG system for driver drowsiness detection. IEEE Sens. J. 2015, 15, 7169–7180.

- Tango, F.; Botta, M. Real-time detection system of driver distraction using machine learning. IEEE Trans. Intell. Transp. Syst. 2013, 14, 894–905.

- Liang, Y.; Lee, J.D.; Reyes, M.L. Nonintrusive Detection of Driver Cognitive Distraction in Real Time Using Bayesian Networks. Transp. Res. Rec. 2007, 2018, 1–8.

- Hu, S.; Gangtie, Z. Driver drowsiness detection with eyelid related parameters by support vector machine. Expert Syst. Appl. 2009, 36, 7651–7658.

- Dasgupta, A.; George, A.; Happy, S.L.; Routray, A. A vision-based system for monitoring the loss of attention in automotive drivers. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1825–1838.

- Jo, J.; Lee, S.J.; Jung, H.G.; Park, K.R.; Kim, J. Vision-based method for detecting driver drowsiness and distraction in driver monitoring system. Opt. Eng. 2011, 50, 127202.

- Grace, R.; Byrne, V.E.; Bierman, D.M.; Legrand, J.M.; Gricourt, D.; Davis, B.K.; Staszewski, J.J.; Carnahan, B. A drowsy driver detection system for heavy vehicles. In Proceedings of the 17th DASC. AIAA/IEEE/SAE. Digital Avionics Systems Conference. Proceedings (Cat. No. 98CH36267), Bellevue, WA, USA, 31 October–7 November 1998; IEEE: New York, NY, USA, 1998; Volume 2.

- Wang, J.; Wu, Z. Driver distraction detection via multi-scale domain adaptation network. IET Intell. Transp. Syst. 2023, 17, 1742–1751.

- Wang, X.; Xu, R.; Zhang, S.; Zhuang, Y.; Wang, Y. Driver distraction detection based on vehicle dynamics using naturalistic driving data. Transp. Res. Part C Emerg. Technol. 2022, 136, 103561.

- Aljohani, A.A. Real-time driver distraction recognition: A hybrid genetic deep network based approach. Alex. Eng. J. 2023, 66, 377–389.

- Shen, H.Z.; Lin, H.Y. Driver distraction detection for daytime and nighttime with unpaired visible and infrared image translation. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; IEEE: New York, NY, USA, 2023.

- Zhang, X.; Sugano, Y.; Fritz, M.; Bulling, A. Mpiigaze: Real-world dataset and deep appearance-based gaze estimation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 162–175.

- Dubey, D.; Tomar, G.S. Image alignment in pose variations of human faces by using corner detection method and its application for PIFR system. Wirel. Pers. Commun. 2022, 124, 147–162.

- Al-Nuimi, A.M.; Mohammed, G.J. Face direction estimation based on mediapipe landmarks. In Proceedings of the 2021 7th International Conference on Contemporary Information Technology and Mathematics (ICCITM), Mosul, Iraq, 25–26 August 2021; IEEE: New York, NY, USA, 2021.

- Hayami, T.; Matsunaga, K.; Shidoji, K.; Matsuki, Y. Detecting drowsiness while driving by measuring eye movement-a pilot study. In Proceedings of the IEEE 5th International Conference on Intelligent Transportation Systems, Singapore, 6 September 2002; IEEE: New York, NY, USA, 2002.

- Sommer, D.; Golz, M. Evaluation of PERCLOS based current fatigue monitoring technologies. In Proceedings of the 2010 Annual International Conference of the IEEE Engineering in Medicine and Biology, Buenos Aires, Argentina, 31 August–4 September 2010; IEEE: New York, NY, USA, 2010.

- Esling, P.; Agon, C. Time-series data mining. ACM Comput. Surv. 2012, 45, 1–34.

- Bagnall, A.; Lines, J.; Bostrom, A.; Large, J.; Keogh, E. The great time series classification bake off: A review and experimental evaluation of recent algorithmic advances. Data Min. Knowl. Discov. 2017, 31, 606–660.

- Tekin, N.; Aris, A.; Acar, A.; Uluagac, S.; Gungor, V.C. A review of on-device machine learning for IoT: An energy perspective. Ad Hoc Netw. 2023, 153, 103348.

- Liao, Y.; Li, S.E.; Li, G.; Wang, W.; Cheng, B.; Chen, F. Detection of driver cognitive distraction: An SVM based real-time algorithm and its comparison study in typical driving scenarios. In Proceedings of the 2016 IEEE Intelligent Vehicles Symposium (IV), Gothenburg, Sweden, 19–22 June 2016; pp. 394–399.

- Li, Z.; Chen, L.; Nie, L.; Yang, S.X. A novel learning model of driver fatigue features representation for steering wheel angle. IEEE Trans. Veh. Technol. 2021, 71, 269–281.

| Inattention Type | Scenario | Criteria |
|---|---|---|
| Distraction | Long Distraction (away from the forward road / non-driving task) | Gaze consistently fixates on a location other than the forward direction for more than 3 s |
| Distraction | Short Distraction (VATS) (away from the forward road / non-driving task; away from road, multi-location) | Gaze diverges for a cumulative duration of 10 s within a 30 s timeframe |
| Fatigue | Drowsiness | KSS (Karolinska Sleepiness Scale) level > 7 |
| Fatigue | Microsleep | Blink duration of less than 3 s |
| Fatigue | Sleep | Sustained eye closure lasting more than 3 s |
| Unresponsive Driver | | Gaze averted from the forward direction, or eyes closed, for more than 6 s |
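The two distraction criteria in the table above translate directly into checks over a per-frame "gaze off the forward road" flag. The sketch below is a minimal illustration, assuming a fixed frame rate and a boolean sequence produced upstream. The function names and the `fps` parameter are hypothetical and do not come from the paper or from Euro NCAP.

```python
def long_distraction(off_road, fps, threshold_s=3.0):
    """True if gaze stays off the forward road for more than threshold_s in a row."""
    run = 0
    for flag in off_road:
        run = run + 1 if flag else 0  # length of the current off-road streak
        if run / fps > threshold_s:
            return True
    return False


def short_distraction(off_road, fps, budget_s=10.0, window_s=30.0):
    """True if cumulative off-road time reaches budget_s within any window_s span."""
    window = int(round(window_s * fps))
    total = 0  # number of off-road frames inside the sliding window
    for i, flag in enumerate(off_road):
        total += 1 if flag else 0
        if i >= window and off_road[i - window]:
            total -= 1  # drop the frame that just left the window
        if total / fps >= budget_s:
            return True
    return False


# Example at 10 frames per second: repeated 2 s glances away, 4 s back on the road.
frames = ([True] * 20 + [False] * 40) * 5
is_long = long_distraction(frames, fps=10)
is_short = short_distraction(frames, fps=10)
```

In this example no single glance exceeds 3 s, so only the cumulative (VATS) check fires, which is the case the short-distraction criterion is meant to catch.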

| Level | Description |

|---|---|

| 1 | Extremely alert |

| 2 | Very alert |

| 3 | Alert |

| 4 | Rather alert |

| 5 | Neither alert nor sleepy |

| 6 | Some signs of sleepiness |

| 7 | Sleepy, but no effort is required to stay awake |

| 8 | Sleepy, but some effort is required to stay awake |

| 9 | Very sleepy, great effort required to stay awake, fighting sleep |

| 10 | Extremely sleepy and cannot stay awake |

| Vehicle Type | Vehicle Segment | Manufacturer | Model | Driver Gender | Driver Age | Driver Height (cm) | Driver Weight (kg) |
|---|---|---|---|---|---|---|---|
| SUV | D | KIA | Sorento | Male | 43 | 174 | 68 |
| SUV | C | KIA | Niro | Male | 37 | 171 | 65 |
| Truck | 1 ton | KIA | Bongo3 | Male | 44 | 182 | 82 |
| Sedan | C | Hyundai | Elantra | Female | 30 | 162 | 53 |
| Sedan | C | KIA | K3 | Male | 44 | 168 | 62 |
| SUV | J | Hyundai | Tucson | Male | 26 | 176 | 84 |
| Sedan | F | BMW | 720 d | Male | 52 | 177 | 80 |
| SUV | J | Hyundai | Tucson | Female | 40 | 160 | 58 |
| Van | M | KIA | Carnival | Male | 41 | 175 | 83 |
| Compact | A | KIA | Morning | Female | 38 | 156 | 61 |

| Seq | Pitch | Yaw | Roll | Iris_Lx | Iris_Ly | Iris_Rx | Iris_Ry |

|---|---|---|---|---|---|---|---|

| 1 | 0.0761 | −0.0562 | −0.0248 | 575.2844 | 327.6482 | 654.3644 | 336.1082 |

| 2 | 0.0788 | −0.0484 | −0.0267 | 583.3813 | 324.1810 | 660.4034 | 333.9849 |

| 3 | 0.0769 | −0.0465 | −0.0343 | 589.4077 | 321.4966 | 664.8054 | 332.6090 |

| 4 | 0.0798 | −0.0394 | −0.0304 | 593.2281 | 319.6493 | 667.5866 | 331.8580 |

| 5 | 0.0604 | −0.0697 | −0.0244 | 595.3186 | 318.7085 | 669.1718 | 331.6109 |

| 6 | 0.0540 | −0.0439 | −0.0273 | 596.2318 | 318.4063 | 669.9681 | 331.7222 |

| 7 | 0.0722 | −0.0409 | −0.0347 | 596.6299 | 318.4297 | 670.4521 | 331.8593 |

| 8 | 0.0616 | −0.0389 | −0.0263 | 596.8526 | 318.5858 | 670.6852 | 331.9431 |

| 9 | 0.0614 | −0.0466 | −0.0285 | 596.7946 | 318.7040 | 670.8551 | 331.9021 |

| 10 | 0.0652 | −0.0356 | −0.0300 | 596.7021 | 318.6856 | 670.9465 | 331.7718 |

| Seq | Gaze_Lx | Gaze_Ly | Gaze_Rx | Gaze_Ry |

|---|---|---|---|---|

| 1 | 575.28 | 327.65 | 654.36 | 336.11 |

| 2 | 583.38 | 324.18 | 660.40 | 333.98 |

| 3 | 589.41 | 321.50 | 664.81 | 332.61 |

| 4 | 593.23 | 319.65 | 667.59 | 331.86 |

| 5 | 595.32 | 318.71 | 669.17 | 331.61 |

| 6 | 596.23 | 318.41 | 669.97 | 331.72 |

| 7 | 596.63 | 318.43 | 670.45 | 331.86 |

| 8 | 596.85 | 318.59 | 670.69 | 331.94 |

| 9 | 596.79 | 318.70 | 670.86 | 331.90 |

| 10 | 596.70 | 318.69 | 670.95 | 331.77 |

| Seq | Vector_Lx | Vector_Ly | Vector_Rx | Vector_Ry | SUM_x | SUM_y | GridValue | NORM |

|---|---|---|---|---|---|---|---|---|

| 1 | 49 | 1 | 66 | −21 | 115 | −20 | 226,935 | 0.812 |

| 2 | 65 | −8 | 82 | −35 | 147 | −43 | 209,766 | 0.738 |

| 3 | 76 | −14 | 82 | −45 | 158 | −59 | 197,822 | 0.686 |

| 4 | 79 | −22 | 80 | −53 | 159 | −75 | 185,878 | 0.634 |

| 5 | 82 | −28 | 78 | −56 | 160 | −84 | 179,160 | 0.605 |

| 6 | 82 | −30 | 82 | −55 | 164 | −85 | 178,413 | 0.602 |

| 7 | 83 | −30 | 85 | −53 | 168 | −83 | 179,906 | 0.608 |

| 8 | 83 | −26 | 93 | −49 | 176 | −75 | 185,878 | 0.634 |

| 9 | 84 | −22 | 97 | −43 | 181 | −65 | 193,343 | 0.667 |

| 10 | 82 | −21 | 102 | −45 | 184 | −66 | 192,597 | 0.663 |
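The SUM_x and SUM_y columns in the table above are consistent with adding the left-eye and right-eye vector components. The short sketch below reproduces them for the first three rows. The function name `combine_eye_vectors` is hypothetical, and the GridValue and NORM columns are left out because their derivation is not stated in this section.

```python
def combine_eye_vectors(lx, ly, rx, ry):
    """Sum the left- and right-eye gaze vectors component-wise."""
    return lx + rx, ly + ry


# (Vector_Lx, Vector_Ly, Vector_Rx, Vector_Ry) for Seq 1 to 3 of the table.
rows = [
    (49, 1, 66, -21),
    (65, -8, 82, -35),
    (76, -14, 82, -45),
]

sums = [combine_eye_vectors(*row) for row in rows]
# sums == [(115, -20), (147, -43), (158, -59)], matching SUM_x and SUM_y
```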

| Scenario | Eigenvector | Explained Variance | Explained Variance Ratio |
|---|---|---|---|
| S1 | [−0.97133103, −0.23773099] | 736.9242905 | 0.93258419 |
| S2 | [0.75592334, −0.65466014] | 1067.50632338 | 0.92779933 |
| S3 | [−0.55115528, 0.8344027] | 1954.73997671 | 0.94382174 |
| S4 | [0.21681727, −0.97621221] | 587.61989058 | 0.91274915 |
| S5 | [0.98644338, 0.16410198] | 883.008934 | 0.83827288 |
| S6 | [0.97293209, −0.23109122] | 1431.98554755 | 0.85216792 |
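Quantities like those in the table above (a leading eigenvector, its explained variance, and the explained variance ratio) can be obtained from a principal component analysis of two-dimensional gaze vectors. The sketch below uses the closed-form eigen decomposition of a 2×2 covariance matrix. The sample covariance with an n − 1 denominator and the function name `pca_2d` are assumptions, since the tooling used in the study is not stated in this section.

```python
import math


def pca_2d(points):
    """Return (unit eigenvector, explained variance, ratio) of the first component."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n

    # Sample covariance matrix entries (ASSUMPTION: n - 1 denominator).
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)

    # Eigenvalues of the symmetric matrix [[sxx, sxy], [sxy, syy]].
    mean = (sxx + syy) / 2.0
    spread = math.hypot((sxx - syy) / 2.0, sxy)
    lam1, lam2 = mean + spread, mean - spread

    # Eigenvector belonging to the larger eigenvalue lam1.
    if sxy != 0.0:
        vx, vy = lam1 - syy, sxy
    elif sxx >= syy:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    length = math.hypot(vx, vy)

    total = lam1 + lam2
    ratio = lam1 / total if total > 0 else 0.0
    return (vx / length, vy / length), lam1, ratio


# Synthetic gaze vectors lying on the line y = 2x.
vector, variance, ratio = pca_2d([(0, 0), (1, 2), (2, 4), (3, 6)])
```

The sign of an eigenvector is arbitrary, so a direction such as [−0.97, −0.24] describes the same axis as [0.97, 0.24]. A ratio close to 1 indicates that gaze movement in that scenario lies mostly along a single direction.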

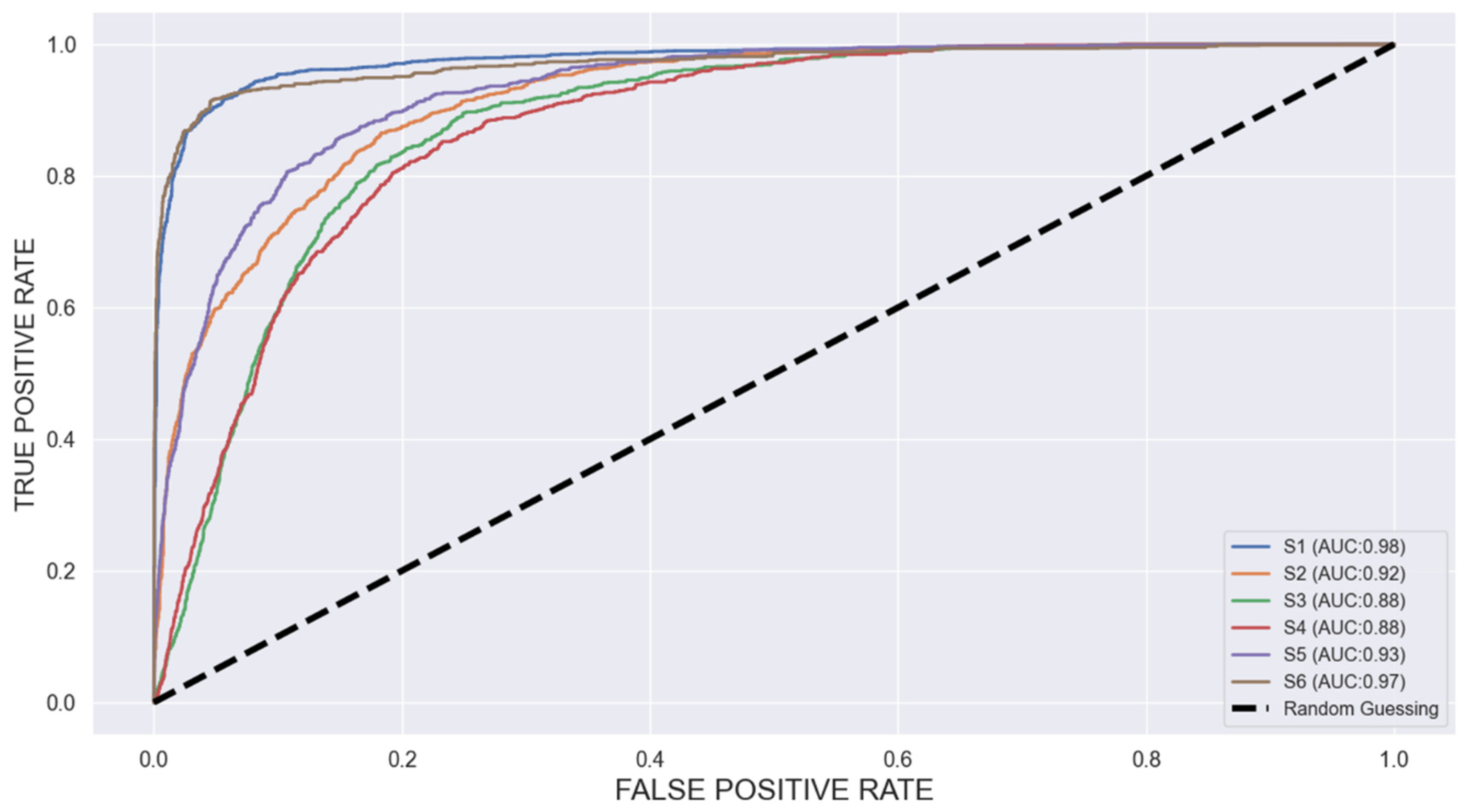

| Driver | Amount | S1 | S2 | S3 | S4 | S5 | S6 | AVG |

|---|---|---|---|---|---|---|---|---|

| Driver1 | 2610 | 99 | 98 | 94 | 95 | 97 | 99 | 97 |

| Driver2 | 1914 | 97 | 95 | 93 | 94 | 96 | 95 | 95 |

| Driver3 | 870 | 96 | 92 | 90 | 91 | 93 | 90 | 92 |

| Driver4 | 1130 | 97 | 93 | 91 | 92 | 94 | 94 | 93 |

| Driver5 | 1031 | 93 | 97 | 95 | 96 | 95 | 97 | 95 |

| Driver6 | 1016 | 94 | 96 | 98 | 97 | 95 | 96 | 95 |

| Driver7 | 965 | 93 | 94 | 96 | 95 | 93 | 92 | 93 |

| Driver8 | 1185 | 96 | 95 | 97 | 98 | 96 | 98 | 95 |

| Driver9 | 904 | 92 | 94 | 93 | 95 | 92 | 91 | 93 |

| Driver10 | 1023 | 93 | 96 | 94 | 95 | 94 | 93 | 95 |

| AVG | | 98 | 94 | 92 | 92 | 95 | 95 | 94 |

| Method | Accuracy |

|---|---|

| Customized Gaze Estimation Model (CDIDM) | 97% |

| EEG signal analysis [3] | 98% |

| EEG headband and smartwatch [10] | 84% |

| Gaze estimation (PERCLOS) [15] | 98% |

| Driving performance and eye movement [34] | 93% |

| Steering wheel sensor and speed [35] | 98% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sim, S.; Kim, C. Proposal of a Cost-Effective and Adaptive Customized Driver Inattention Detection Model Using Time Series Analysis and Computer Vision. World Electr. Veh. J. 2024, 15, 400. https://doi.org/10.3390/wevj15090400
