A Peer Review System for BIM Learning

Abstract

1. Introduction

1.1. BIM Education

1.2. Active Learning in Engineering Education

1.3. Peer Review Systems

2. Research Objectives

3. Peer Review System for BIM Tool Learning

3.1. Guidelines for the Peer Review-Based BIM Course

- provide both formative feedback and summative decision-making,

- have a developed process and instrumentation with attention to thoroughness and fairness,

- allow the peer reviewers to understand their tasks and be prepared to accomplish them,

- foster trust and confidence in the process among all parties,

- invest ongoing efforts in improving the peer review process itself,

- develop assignments in ways that are likely to result in helpful collaborations,

- be a valued process within the academic unit, and

- let all parties cooperate in accomplishing peer review tasks in a timely way.

- Design a real case project: The instructor should use a real case, such as a real building or a real bridge, as the learning project. Real construction projects vary widely, and it is difficult for the instructor to convey enough information to cover every kind of scenario. It is therefore important for the learner to study a real case, so that the learner finishes the course project with hands-on experience.

- Set teaching/learning milestones: After finding a real case project, the instructor should set the teaching/learning milestones by following the course schedule. The milestones should follow the actual process in the industry. For example, the construction of a building should start with the foundation work and build up to the basement, the main structure components, and the exterior and interior design. By doing so, the learner can become aware of how the industry uses the tool in practice. This method can also help the learner to connect what they learn with real industry work.

- Break down the project into sub-tasks: After setting the learning milestones, the instructor should break the entire project work down into several sub-tasks. Each sub-task should follow the milestones that the instructor has just set. When breaking down the project, it is important to set simpler goals for the learner in the early stage and gradually add complexity to the learning goals in later stages. For instance, in the early stage, the sub-task may be “Build a model of a wall” or “Build a model of different windows.” After that, more complexity can be added to the following sub-task, such as “Construct a wall with two windows.”

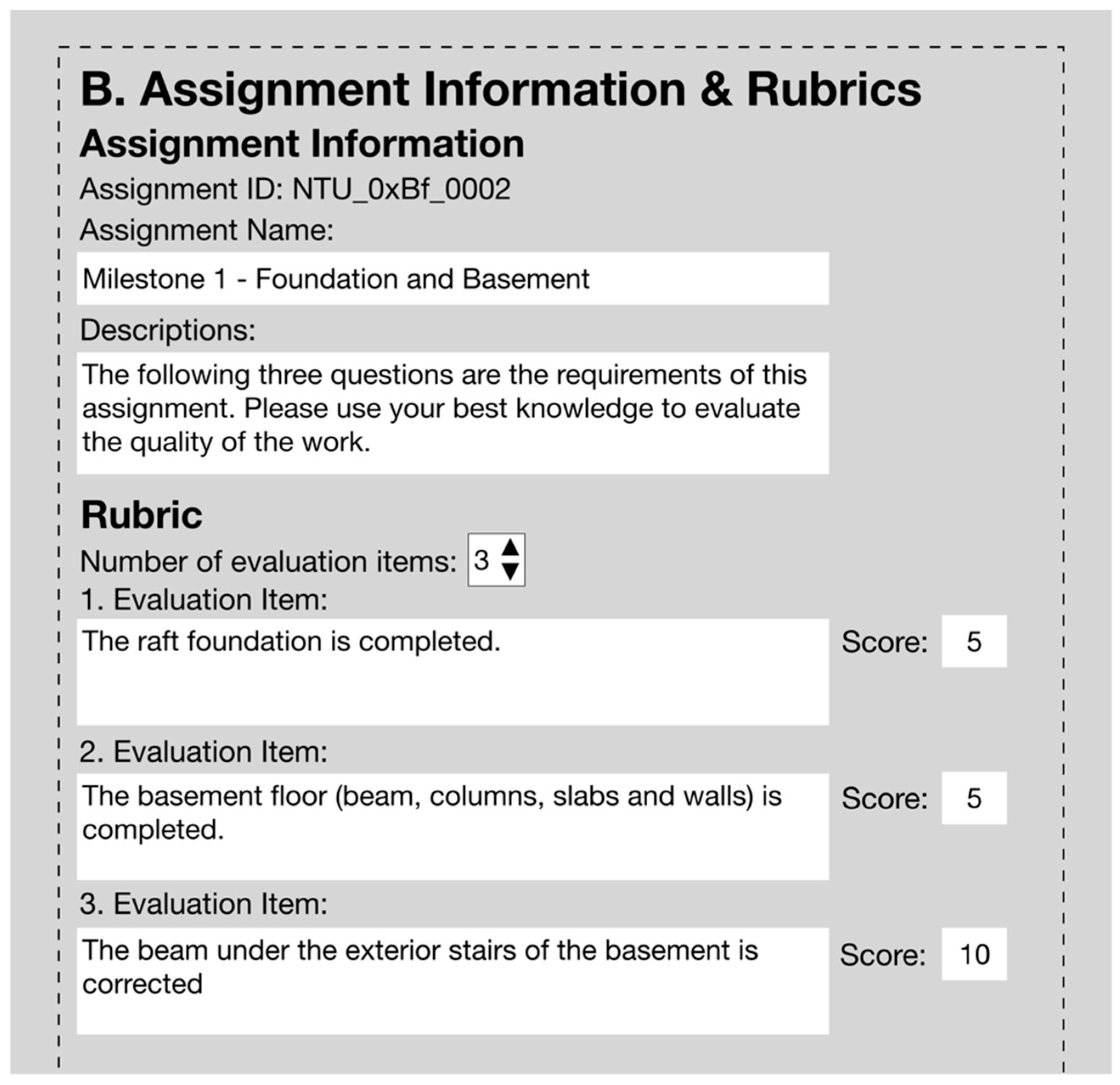

- Develop assessment rubrics: The instructor should develop rubrics for each sub-task for the learner to use as the assessment guidelines when reviewing others’ work. These rubrics should follow the set milestones for each sub-task. In BIM tool learning, it is important to make the learner aware of potential mistakes that they may make. Therefore, this research suggests that the rubrics can be developed using a binary checklist. The instructor should list the critical parts of each milestone and let the learner take a close look at others’ models. Additionally, unlike in architectural design or the composition of an article, evaluating the BIM tool learning performance of peers contains few subjective elements. A binary checklist could also make the peer review rubrics more objective, thus facilitating the learner’s implementation of the assessment. For instance, instead of the question, “Do you think the model of the wall is well built with the approximate information attached?” the question should be phrased as “Is the wall built to the right scale and in the right position?” or “Is the window installed in the right place according to the shop drawing?”

- Conduct the peer review: Before the peer review begins, the instructor needs to explain the peer review mechanism in detail. Learners who understand how the entire mechanism works are more likely to trust it and have confidence in it. The instructor also needs to provide a platform for reviewing the works. Such a platform should (a) be simple to use, because adding to the learner’s workload may create resistance to using the platform; (b) allow all reviewing to be conducted anonymously to ensure the fairness of the peer review process; and (c) randomly assign each learner more than one work to review, so that every learner obtains more than one set of feedback from their peers.

- Review the result per sub-task: The instructor should review the results of the peer review after learners complete each sub-task to determine whether the designed model achieved the expected results. By reviewing the results of each sub-task, the instructor can make ongoing improvements to the tasks.

- Collect feedback after the project: After finishing the project/course, the instructor should collect feedback from the learners. A subjective questionnaire could be utilized at this stage to acquire learners’ feedback about the peer review mechanism and establish what they have learned from reviewing others’ work.

- Review the feedback: At the end of the course/project, the instructor should review all feedback received from the learners and use it to make improvements to the entire system, including the process, the milestones, the sub-tasks, and even the reviewing platform, for the next course.
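The platform requirements in the "Conduct the peer review" step (anonymous reviewing, and random assignment of more than one work per learner) can be illustrated with a minimal sketch. This is not the platform described in this paper. The function names `assign_reviews` and `anonymous_tokens`, the default of three reviews per learner, and the circular assignment scheme are all assumptions made for illustration.

```python
import random
import secrets


def assign_reviews(learner_ids, reviews_per_learner=3, seed=None):
    """Map each reviewer to the authors whose work they will review.

    Hypothetical scheme: learners are shuffled, then each one reviews the
    next few learners in the shuffled circle. Nobody reviews their own
    work, and every work receives exactly `reviews_per_learner` reviews.
    """
    order = list(learner_ids)
    n = len(order)
    if len(set(order)) != n:
        raise ValueError("learner ids must be unique")
    if not 1 <= reviews_per_learner < n:
        raise ValueError("reviews_per_learner must be between 1 and n - 1")
    random.Random(seed).shuffle(order)
    return {
        order[i]: [order[(i + step) % n] for step in range(1, reviews_per_learner + 1)]
        for i in range(n)
    }


def anonymous_tokens(learner_ids):
    """Give each author an opaque token to show reviewers instead of a name."""
    return {learner: secrets.token_hex(8) for learner in learner_ids}


if __name__ == "__main__":
    learners = ["s01", "s02", "s03", "s04", "s05"]  # made-up ids
    plan = assign_reviews(learners, reviews_per_learner=3, seed=42)
    tokens = anonymous_tokens(learners)
    for reviewer, authors in plan.items():
        print(reviewer, "reviews", [tokens[a] for a in authors])
```

The circular scheme is only one simple way to meet the requirements. Its appeal is that the workload is balanced by construction: every learner gives and receives the same number of reviews.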

3.2. Web-Based Reviewing Platform

4. Implementation

4.1. Development of the BIM Learning Program

4.2. Prototype of the Reviewing Platform

5. Validation

5.1. Actual Course Implementation

5.2. Evaluation of Effectiveness

5.3. Qualitative Feedback Analysis

- The course allows students to learn from their peers’ works (9 of the 26 provided feedback): The majority of the positive feedback mentioned that it was a good experience to learn from others’ work. Some found the creativity of their peers valuable in addition to what they learned from the instructor. They also observed others’ mistakes and considered these to reflect on their own work. For example, one of the students mentioned, “It is a very good system. We can see other students’ work and learn the advantages of other students, thus correcting our own shortcomings.”

- The course allows students to learn from the rating process (7 of the 26 provided feedback): Students placed a high value on the feedback process because it offered a fresh experience that helped them understand the difficulty that the instructor and teaching assistants have when grading their work. For example, one of the students stated, “I think this gives us a great opportunity to learn how to check mistakes and where to pay attention when modeling; it also lets us know how the teaching assistant and professors give marks and what the criteria are.”

- The course is an interesting way of learning (9 of the 26 provided feedback): As the course is very different from the old ways of teaching/learning, the students could learn from a different perspective, which was interesting and motivating for them. For instance, one of the students replied that “Using the peer-to-peer evaluation method, I think it is quite new, and it is also a time for us to learn extra. After all, if only the traditional assignments are assigned by the assistants or teachers, only the mistakes made by themselves can be found and modified, but that is it. If you use peer-to-peer evaluation methods, you can not only find out that you are different from others but also learn how others do well. Therefore, I think the [peer review method] is unique and meaningful.”

- The course helps the students understand the goal of the learning subject more clearly (7 of the 26 provided feedback): By rating others’ works, the students could better understand the learning goal through the grading criteria and reflect on their own work to see if they achieved the goal. For instance, one of the students mentioned, “Through peer review, we can see the work of other students and find out where they can do better. We can also review what we need to improve by grading our classmates.”

- The platform for conducting the review work is easy to operate (5 of the 26 provided feedback): Some said that the platform was easy to operate, stating that the platform design was clear and required little effort to understand. For example, one of the students stated that “I personally feel that the peer review system is very useful, the user interface clear at a glance, and you can enter the system without logging in. The operation is simple and user-friendly. If you want me to score, I will give full marks.”

- The course scores may have low reliability (10 of the 26 provided feedback): The majority expressed concern about the reliability of the scores. Some worried that their peers might put little effort into the ratings, and others said that the scores that they received from their peers varied considerably. For instance, one of the students mentioned, “I think some of the students did not spend sufficient effort reviewing others’ work and might randomly mark it, which would lose the original meaning of the reviewing mechanism.”

- The system operation requires improvement (9 of the 26 provided feedback): Some students said that they received the link but were unable to open the file. One said that he did not receive the link when it was time to rate, another said that it was time-consuming to upload the file onto the system, and another said that he did not click the confirm button and accidentally gave someone a score of zero.

- The course requires improvements regarding the anonymity of the mechanism (4 of the 26 provided feedback): The reviewing mechanism was designed to be anonymous. However, some students put their names or student ID numbers on the file name, which revealed their identities. Some students noticed this and were worried that it might have affected the reliability of the scores (e.g., one might have given a higher score to a friend).

- The rating criteria need to be more specific (1 of the 26 provided feedback): One student suggested that the rating criteria should be more detailed because she received three very varied ratings and thought that it was because the standards were not uniform.

- The review should include comments as well as scores (4 of the 26 provided feedback): The students suggested adding comments to the rating system so that they could write comments alongside the rating. By reading any qualitative comments, they would also be able to understand why they did not receive full marks. For example, one student mentioned, “I ended up with one 0 points. However, I could not see the comments from others. In addition, I hope that the system can [add] the function of inputting short comments, thus we can receive the most sincere suggestions from colleagues.”
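Several of the concerns above (widely varying scores, careless ratings, an accidental zero) could be surfaced to the instructor automatically. The following is a minimal sketch of one such check, not a feature of the platform evaluated here. The function name `flag_inconsistent`, the data layout, and the threshold `max_range` are hypothetical.

```python
from statistics import mean


def flag_inconsistent(scores_by_submission, max_range=5):
    """Return submissions whose peer scores differ by more than `max_range`.

    `scores_by_submission` maps a submission id to the list of scores it
    received. The threshold is an assumed value that an instructor would
    tune to the point scale of the rubric.
    """
    flagged = {}
    for submission_id, scores in scores_by_submission.items():
        if len(scores) < 2:
            continue  # a spread needs at least two ratings
        spread = max(scores) - min(scores)
        if spread > max_range:
            flagged[submission_id] = {
                "scores": sorted(scores),
                "mean": mean(scores),
                "spread": spread,
            }
    return flagged


if __name__ == "__main__":
    # Made-up scores for illustration only.
    peer_scores = {"sub-01": [20, 20, 15], "sub-02": [0, 20, 20]}
    for submission_id, info in flag_inconsistent(peer_scores).items():
        print(submission_id, info)
```

A flagged submission is only a prompt for the instructor to look at the ratings. The check cannot tell a careless rating from a genuine disagreement.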

6. Benefits

- The learning goals are clearer. By taking the position of a rater, the students can have a clearer picture of the scope and goals of what they should learn from this course. Additionally, the instructors have a chance to polish their rubric and make it more effective.

- The system can expand the method of learning. The students can learn from each other rather than only from the instructors. For a subject as complicated as BIM, it is especially difficult for the instructor to cover every detail. Students can, therefore, learn from others’ good and bad work during the process of rating.

- The system can serve as a way to validate scores. With the instructor as the sole rater of students’ work, the scores awarded could be biased. By considering the students’ scores from the peer review system, the instructor has an additional reference by which to assign scores.

- The feedback can help to improve future courses. The instructor can understand the students’ learning outcomes from the peer review system and adjust future courses based on the students’ performance. In addition, the instructor can ask the students to write anonymous feedback about the system to obtain a better understanding of the students’ needs.

7. Conclusions

Funding

Acknowledgments

Conflicts of Interest

| Milestone | Learning Items |
|---|---|
| Milestone 1: Foundation and basement | Learning target: Learn how to lay out the construction site in BIM software |
| | Introduction of the Revit environment |
| | Grid and floor line functions |
| | Foundation beams and columns |
| | Raft foundation slab and basement slab |
| | Basement columns and exterior walls |
| | Basement interior walls |
| | Basement openings (windows and doors) |
| | Basement exterior stairs |
| | Basement interior stairs and section lines |
| | View range functions in Revit |
| Milestone 2: First floor and property lines | Learning target: Learn how to use the basic 3D modeling operations in BIM software |
| | 1st floor beams and slabs |
| | 1st floor columns and exterior walls |
| | 1st floor stairs and interior walls |
| | Create and edit family type – window example |
| | 1st floor windows and doors |
| | Cornered windows |
| | 1st floor to 2nd floor stairs |
| | Site and property lines |
| Milestone 3: Second floor and driveway | Learning target: Learn how to use the advanced operation skills in BIM software (e.g., parametric modeling for openings and furnishing) |
| | 2nd floor beams and slabs |
| | 2nd floor walls and openings |
| | 2nd floor balcony and ramps |
| | 2nd floor furnishing – bathroom and kitchen |
| | Import CAD file function in Revit |
| | Driveway model |
| | Edit driveway terrain |
| | 2nd floor to 3rd floor stairs |
| Milestone 4: Third floor to seventh floor and roof | Learning target: Learn how to combine basic and advanced operation skills for detailed modeling |
| | 3rd floor beams and slabs |
| | 3rd floor columns, walls, and openings |
| | 3rd floor to 4th floor stairs and elevator openings |
| | 4th floor to 7th floor models |
| | Roof beams and slabs |
| | Roof GFRC board |
| | Roof P2 and P3 floors |
| | Water tanks and roof plates |
| | Roof shafts and balcony handrails |
| | Textures and materials |
| Milestone 5: Finalization (rendering, quantity takeoff, and shop drawings) | Learning target: Learn how to use the advanced functions of BIM software including floor plan drawing, 3D model rendering, quantity takeoff, and section view |
| | Model review |
| | Camera setting and rendering |
| | Basic animations |
| | Model and site terrain integration |
| | Generate quantity takeoffs |
| | Shop drawing template setup |
| | Floor plan drawings export |
| | Section view drawings export |

| Milestone | Review Rubrics | Points |
|---|---|---|
| Milestone 1 | The raft foundation is completed. | 5 |
| | The basement floor (beams, columns, slabs, and walls) is completed. | 5 |
| | The beam under the exterior stairs of the basement is corrected. | 10 |
| Milestone 2 | The building elements (beams, columns, slabs, and walls) of the 1st floor are completed. | 5 |
| | Site and property lines are drawn. | 5 |
| | All the cornered windows are finished appropriately. | 5 |
| | The elevator openings are finished. | 5 |
| Milestone 3 | The overall model of the 2nd floor is completed (structure, driveway, and terrain). | 5 |
| | The furnishings of both the bath and the kitchen are completed. | 5 |
| | The balconies of the 1st floor are completed. | 5 |
| | The ramps of the balcony are completed. | 5 |
| Milestone 4 | The overall model from the 3rd floor to the roof level is completed. | 5 |
| | The water tank and the roof plates are completed. | 5 |
| | The GFRC boards are completed. | 5 |
| | Two roof shafts and the balcony handrails are completed. | 5 |
| Milestone 5 | The overall model of the entire building is rendered. | 5 |
| | The model file contains at least two section views. | 5 |
| | The model of the water tank with 20 cm plate is modified. | 5 |
| | The model of the stair platforms of each floor is modified. | 5 |
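To make the binary checklist concrete, the sketch below scores one review against the Milestone 1 rows of the rubric table above. It assumes that a review's score is the sum of the points of every item answered "yes". The source lists the points per item but does not state the aggregation rule, so that rule, the shortened item labels, and the name `score_checklist` are assumptions.

```python
# Milestone 1 rubric items (labels shortened) with their listed points.
MILESTONE_1 = [
    ("Raft foundation completed", 5),
    ("Basement floor (beams, columns, slabs, walls) completed", 5),
    ("Beam under the basement exterior stairs corrected", 10),
]


def score_checklist(rubric, answers):
    """Sum the points of every rubric item the reviewer answered 'yes' to.

    `answers` holds one boolean per rubric item, in the same order.
    Assumed rule: an item earns its full points or nothing.
    """
    if len(answers) != len(rubric):
        raise ValueError("one yes/no answer is required per rubric item")
    return sum(points for (_, points), passed in zip(rubric, answers) if passed)


if __name__ == "__main__":
    # A reviewer finds the foundation and basement complete, but the beam
    # under the exterior stairs has not been corrected.
    review = [True, True, False]
    max_points = sum(points for _, points in MILESTONE_1)
    print(score_checklist(MILESTONE_1, review), "of", max_points)
```

Because every item is a yes/no judgment, two reviewers who look at the same model should disagree only on individual items, which makes differences between their scores easy to trace.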

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tsai, M.-H. A Peer Review System for BIM Learning. Sustainability 2019, 11, 5747. https://doi.org/10.3390/su11205747
