Effects of the Performance-Based Research Fund and Other Factors on the Efficiency of New Zealand Universities: A Malmquist Productivity Approach

Abstract

1. Introduction

2. Literature Review

3. Materials and Methods

3.1. Malmquist Productivity Index

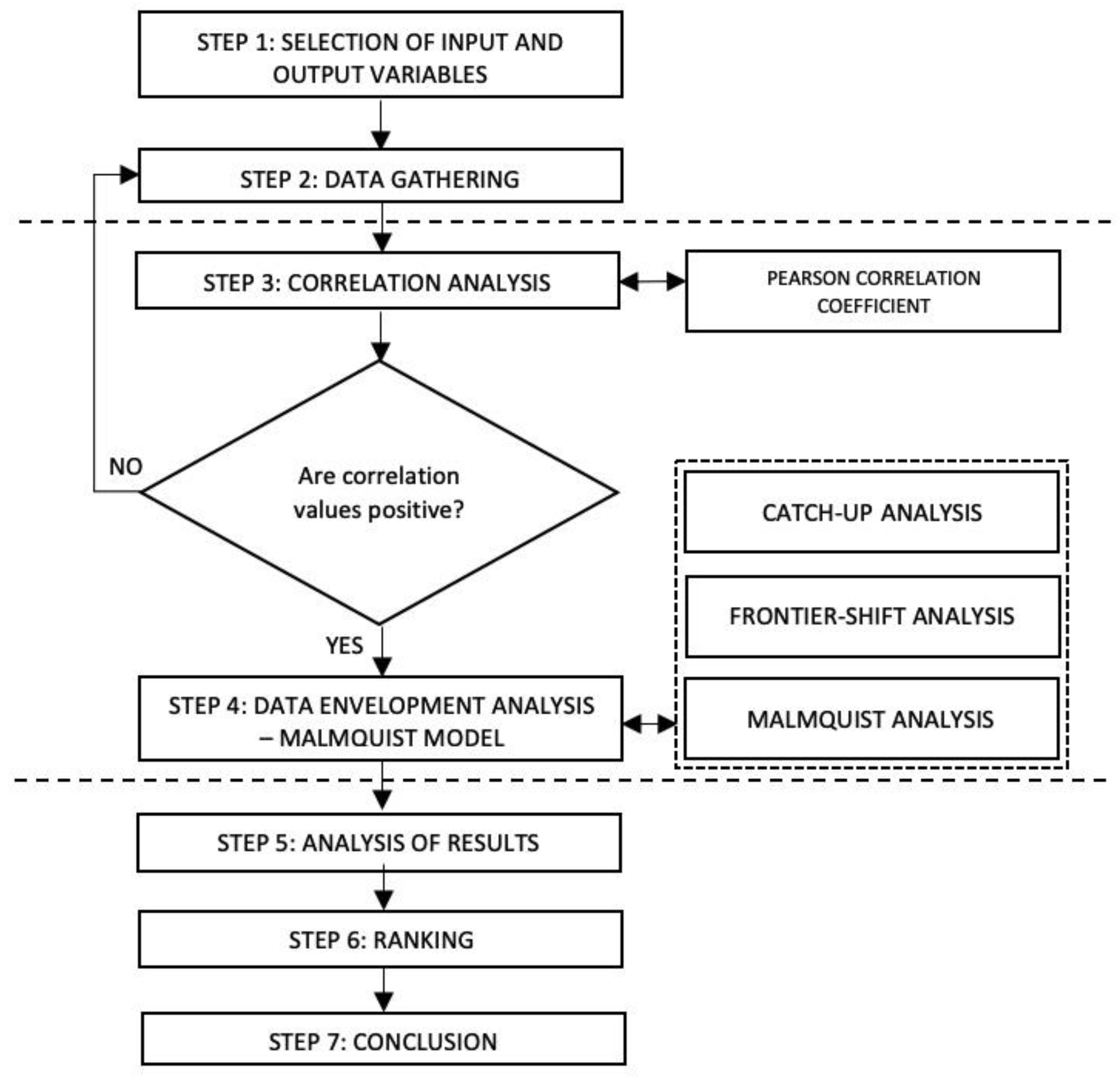
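
For reference, the Malmquist productivity index is commonly defined, following Caves, Christensen and Diewert (1982) and Färe et al. (1992) in the reference list, as the geometric mean of two ratios of distance functions between periods t and t+1. In the sketch below, D^s(x^r, y^r) stands for the efficiency score of a university's period-r inputs x and outputs y measured against the period-s frontier. This notation, and the convention that an index above 1 indicates productivity growth, are assumptions rather than definitions taken from this section. The second line splits the index into a catch-up term (change in technical efficiency) and a frontier-shift term (change in technology).

$$
\begin{aligned}
\mathrm{MPI}_{t \to t+1}
&= \left[ \frac{D^{t}(x^{t+1}, y^{t+1})}{D^{t}(x^{t}, y^{t})} \times \frac{D^{t+1}(x^{t+1}, y^{t+1})}{D^{t+1}(x^{t}, y^{t})} \right]^{1/2} \\
&= \underbrace{\frac{D^{t+1}(x^{t+1}, y^{t+1})}{D^{t}(x^{t}, y^{t})}}_{\text{catch-up}}
\times
\underbrace{\left[ \frac{D^{t}(x^{t}, y^{t})}{D^{t+1}(x^{t}, y^{t})} \times \frac{D^{t}(x^{t+1}, y^{t+1})}{D^{t+1}(x^{t+1}, y^{t+1})} \right]^{1/2}}_{\text{frontier shift}}
\end{aligned}
$$

Because the two factors multiply back to the index, a university's MPI for any period should equal its catch-up value times its frontier-shift value for that period.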

3.2. Research Process

4. Results

4.1. Data Selection for Input and Output Variables

4.2. Pearson Correlation

4.3. Result of MPI Analysis

5. Discussions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Smart, W. The impact of the performance-based research fund on the research productivity of New Zealand universities. Soc. Policy J. N. Z. 2009, 34, 136–151.

- Benade, L.; Devine, N.; Stewart, G. The 2019 PBRF Review: What’s to be Done? Springer: Berlin/Heidelberg, Germany, 2019.

- Curtis, B.; Matthewman, S. The managed university: The PBRF, its impacts and staff attitudes. N. Z. J. Employ. Relat. 2005, 30, 1.

- We Collate the Annual Audited Data on the Financial Performance of All Public Tertiary Education Institutions (TEIs) for Comparative Purposes. Available online: https://www.tec.govt.nz/funding/funding-and-performance/performance/financial/ (accessed on 10 November 2019).

- McLaughlin, M. Tertiary Education Policy in New Zealand; Ian Axford New Zealand Fellowship in Public Policy: Wellington, New Zealand, 2003.

- Buela-Casal, G.; Gutiérrez-Martínez, O.; Bermúdez-Sánchez, M.P.; Vadillo-Muñoz, O. Comparative study of international academic rankings of universities. Scientometrics 2007, 71, 349–365.

- NZ Funding Model Means Ongoing Rankings Fall Likely. Available online: https://www.auckland.ac.nz/en/news/2018/09/27/funding-model-makes-nz-universities-ratings-falls-likely.html/ (accessed on 3 November 2019).

- Hjalmarsson, L.; Kumbhakar, S.C.; Heshmati, A. DEA, DFA and SFA: A comparison. J. Product. Anal. 1996, 7, 303–327.

- Kipesha, E.F.; Msigwa, R. Efficiency of higher learning institutions: Evidences from public universities in Tanzania. J. Educ. Pract. 2013, 4, 63–73.

- Daghbashyan, Z. Do University Units Differ in the Efficiency of Resource Utilization? A Case Study of the Royal Institute of Technology (KTH), Sweden; KTH Royal Institute of Technology: Stockholm, Sweden, 2009.

- Abbott, M.; Doucouliagos, C. The efficiency of Australian universities: A data envelopment analysis. Econ. Educ. Rev. 2003, 22, 89–97.

- Flegg, A.-T.; Allen, D.; Field, K.; Thurlow, T. Measuring the efficiency of British universities: A multi-period data envelopment analysis. Educ. Econ. 2004, 12, 231–249.

- Katharaki, M.; Katharakis, G. A comparative assessment of Greek universities’ efficiency using quantitative analysis. Int. J. Educ. Res. 2010, 49, 115–128.

- Liu, W.-B.; Wongcha, A.; Peng, K.-C. Adopting super-efficiency and tobit model on analyzing the efficiency of teacher’s colleges in Thailand. Int. J. New Trends Educ. Their Implic. 2012, 3, 176–188.

- Taylor, B.; Harris, G. Relative efficiency among South African universities: A data envelopment analysis. High. Educ. 2004, 47, 73–89.

- Warning, S. Performance differences in German higher education: Empirical analysis of strategic groups. Rev. Ind. Organ. 2004, 24, 393–408.

- Leitner, K.-H.; Prikoszovits, J.; Schaffhauser-Linzatti, M.; Stowasser, R.; Wagner, K. The impact of size and specialisation on universities’ department performance: A DEA analysis applied to Austrian universities. High. Educ. 2007, 53, 517–538.

- Monfared, S.; Safi, M. Efficiency analysis of public universities in Iran using DEA approach: Importance of stakeholder’s perspective. J. Ind. Syst. Eng. 2012, 5, 185–197.

- Wolszczak-Derlacz, J.; Parteka, A. Efficiency of European public higher education institutions: A two-stage multicountry approach. Scientometrics 2011, 89, 887.

- Pietrzak, M.; Pietrzak, P.; Baran, J. Efficiency assessment of public higher education with the application of Data Envelopment Analysis: The evidence from Poland. Online J. Appl. Knowl. Manag. 2016, 4, 59–73.

- Jongbloed, B.W.; Vink, M. Assessing efficiency in British, Dutch and German universities. An application of data envelopment analysis. In Comparative Policy Studies in Higher Education; Goedegebuure, L.C.J., van Vught, F.A., Eds.; Lemma: Utrecht, The Netherlands, 1994; pp. 195–218.

- Johnes, J.; Johnes, G. Research funding and performance in UK university departments of economics: A frontier analysis. Econ. Educ. Rev. 1995, 14, 301–314.

- Worthington, A.C.; Lee, B.L. Efficiency, technology and productivity change in Australian universities, 1998–2003. Econ. Educ. Rev. 2008, 27, 285–298.

- Ismail, I.; Ramalingam, S.; Azahan, A.H.; Khezrimotlagh, D. Relative efficiency of public universities in Malaysia. Sch. J. Econ. Bus. Manag. 2014, 1, 606–612.

- Mahmudah, U.; Lola, M.S. The efficiency measurement of Indonesian universities based on a fuzzy data envelopment analysis. Open J. Stat. 2016, 6, 1050.

- Wang, C.-N.; Nguyen, T.-D.; Le, M.-D. Assessing performance efficiency of information and communication technology industry-forecasting and evaluating: The case in Vietnam. Appl. Sci. 2019, 9, 3996.

- Asmild, M.; Paradi, J.C.; Aggarwall, V.; Schaffnit, C. Combining DEA window analysis with the Malmquist index approach in a study of the Canadian banking industry. J. Product. Anal. 2004, 21, 67–89.

- Schaffnit, C.; Rosen, D.; Paradi, J.C. Best practice analysis of bank branches: An application of DEA in a large Canadian bank. Eur. J. Oper. Res. 1997, 98, 269–289.

- Biørn, E.; Hagen, T.P.; Iversen, T.; Magnussen, J. The effect of activity-based financing on hospital efficiency: A panel data analysis of DEA efficiency scores 1992–2000. Health Care Manag. Sci. 2003, 6, 271–283.

- Ouellette, P.; Vierstraete, V. Technological change and efficiency in the presence of quasi-fixed inputs: A DEA application to the hospital sector. Eur. J. Oper. Res. 2004, 154, 755–763.

- Ferrier, G.D.; Rosko, M.D.; Valdmanis, V.G. Analysis of uncompensated hospital care using a DEA model of output congestion. Health Care Manag. Sci. 2006, 9, 181–188.

- Wang, C.-N.; Huang, Y.-F.; Cheng, I.; Nguyen, V. A multi-criteria decision-making (MCDM) approach using hybrid SCOR metrics, AHP, and TOPSIS for supplier evaluation and selection in the gas and oil industry. Processes 2018, 6, 252.

- Kuo, R.; Lee, L.; Hu, T.-L. Developing a supplier selection system through integrating fuzzy AHP and fuzzy DEA: A case study on an auto lighting system company in Taiwan. Prod. Plan. Control 2010, 21, 468–484.

- Kumar, A.; Jain, V.; Kumar, S. A comprehensive environment friendly approach for supplier selection. Omega 2014, 42, 109–123.

- Shi, G.-M.; Bi, J.; Wang, J.-N. Chinese regional industrial energy efficiency evaluation based on a DEA model of fixing non-energy inputs. Energy Policy 2010, 38, 6172–6179.

- Wang, C.-N.; Nguyen, V.; Thai, H.; Duong, D. Multi-criteria decision making (MCDM) approaches for solar power plant location selection in Viet Nam. Energies 2018, 11, 1504.

- Wang, C.-N.; Le, T.-M.; Nguyen, H.-K.; Ngoc-Nguyen, H. Using the optimization algorithm to evaluate the energetic industry: A case study in Thailand. Processes 2019, 7, 87.

- Ramanathan, R. Evaluating the comparative performance of countries of the Middle East and North Africa: A DEA application. Socio-Econ. Plan. Sci. 2006, 40, 156–167.

- Sexton, T.R.; Lewis, H.F. Two-stage DEA: An application to major league baseball. J. Product. Anal. 2003, 19, 227–249.

- Cullinane, K.; Song, D.-W.; Ji, P.; Wang, T.-F. An application of DEA windows analysis to container port production efficiency. Rev. Netw. Econ. 2004, 3.

- Tarim, Ş.; Dener, H.I.; Tarim, Ş.A. Efficiency measurement in the hotel industry: Output factor constrained DEA application. Anatolia 2000, 11, 111–123.

- Martín, J.C.; Roman, C. An application of DEA to measure the efficiency of Spanish airports prior to privatization. J. Air Transp. Manag. 2001, 7, 149–157.

- Wang, C.-N.; Le, A. Measuring the macroeconomic performance among developed countries and Asian developing countries: Past, present, and future. Sustainability 2018, 10, 3664.

- Charnes, A.; Cooper, W.W.; Rhodes, E. Measuring the efficiency of decision making units. Eur. J. Oper. Res. 1978, 2, 429–444.

- Banker, R.D.; Charnes, A.; Cooper, W.W. Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manag. Sci. 1984, 30, 1078–1092.

- Ahn, T.; Charnes, A.; Cooper, W.W. Some statistical and DEA evaluations of relative efficiencies of public and private institutions of higher learning. Socio-Econ. Plan. Sci. 1988, 22, 259–269.

- Tone, K. A slacks-based measure of efficiency in data envelopment analysis. Eur. J. Oper. Res. 2001, 130, 498–509.

- Khezrimotlagh, D.; Salleh, S.; Mohsenpour, Z. A new method in data envelopment analysis to find efficient decision making units and rank both technical efficient and inefficient DMUs together. Appl. Math. Sci. 2012, 6, 4609–4615.

- Caves, D.W.; Christensen, L.R.; Diewert, W.E. The economic theory of index numbers and the measurement of input, output, and productivity. Econom. J. Econom. Soc. 1982, 1393–1414.

- Färe, R.; Grosskopf, S.; Lindgren, B.; Roos, P. Productivity changes in Swedish pharamacies 1980–1989: A non-parametric Malmquist approach. J. Product. Anal. 1992, 3, 85–101.

- Puertas, R.; Marti, L. Sustainability in universities: DEA-Greenmetric. Sustainability 2019, 11, 3766.

- Liu, N.C.; Cheng, Y. The academic ranking of world universities. High. Educ. Eur. 2005, 30, 127–136.

- Driscoll, A.; De Noriega, D.C. Taking Ownership of Accreditation: Assessment Processes that Promote Institutional Improvement and Faculty Engagement; Stylus Publishing, LLC.: Sterling, VA, USA, 2006.

- Schwarz, S.; Westerheijden, D.F. Accreditation and Evaluation in the European Higher Education Area; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2007; Volume 5.

| Authors, Year of Study | Inputs Used | Outputs Used | Method/Efficiency Model | Decision Making Units |
|---|---|---|---|---|
| Jongbloed and Vink, 1994 [21] | Labour expenditures; Material expenditures (in each of the three samples) | FTE undergraduates; FTE postgraduates; Income from grants and contracts; Full-time students; Part-time students; Auditing students; Dissertations; Other publications; Number of students; Non-core research funding (government, research enterprise, private foundations) | Cost efficiency model (in each of the three samples) | 60 universities in the UK, 1989–1991; 28 Dutch universities, 1989–1991; 48 universities in Germany, 1989–1991 |
| Johnes and Johnes, 1995 [22] | Teaching/research and research-only staff; Per capita research grants; Undergraduate student load | Papers and letters in academic journals; Journal articles; Authored and edited books; Published works; Edited works | DEA | Economics departments of 36 UK universities |
| Worthington and Lee, 2008 [23] | Academic staff; Non-academic staff; Non-labour expenditure; Undergraduate load; Postgraduate load | Undergraduate graduates; Postgraduate graduates; PhD graduates; National competitive grants; Industry grants; Publications | Malmquist Index | 35 Australian universities, 1998–2003 |
| Ismail et al., 2014 [24] | Postgraduate students; Undergraduate students; Academic staff | Postgraduate graduates; Undergraduate graduates; Graduates working; Graduates opting for further studies; Graduates opting to develop skills | DEA | 20 public universities in Malaysia |
| Mahmudah and Lola, 2016 [25] | Lecturer; Student; Department; Ratio | World rank; Presence rank; Impact rank; Openness rank; Excellence rank | Fuzzy DEA | 25 Indonesian universities |

| DMU No | Name of Universities | Abbreviation |
|---|---|---|
| DMU1 | Auckland University of Technology | AUT |
| DMU2 | Lincoln University | LU |
| DMU3 | Massey University | MU |
| DMU4 | University of Auckland | UA |
| DMU5 | University of Canterbury | UC |
| DMU6 | University of Otago | UO |
| DMU7 | University of Waikato | UW |
| DMU8 | Victoria University of Wellington | VUW |

| Year | Statistics | AS | NAS | TE | NDG | NPG | TG | TOR |
|---|---|---|---|---|---|---|---|---|
| 2013 | Max | 2131 | 2778 | 41,365 | 5490 | 4955 | 10,445 | 973,471 |
| | Min | 274 | 456 | 5850 | 430 | 230 | 660 | 114,194 |
| | Average | 1140 | 1342.50 | 21,881.88 | 3101.25 | 1908.75 | 5010.00 | 419,108.63 |
| | SD | 598.88 | 669.73 | 10,581.94 | 1400.26 | 1308.30 | 2613.56 | 253,096.63 |
| 2014 | Max | 2154 | 2789 | 41,955 | 5605 | 4740 | 10,345 | 1,012,839 |
| | Min | 236 | 422 | 4485 | 460 | 220 | 680 | 114,483 |
| | Average | 1142.38 | 1329.25 | 21,633.13 | 3051.25 | 1888.13 | 4939.38 | 433,258.75 |
| | SD | 615.56 | 679.84 | 10,930.70 | 1456.50 | 1243.88 | 2603.43 | 262,112.95 |
| 2015 | Max | 2183 | 2892 | 42,100 | 5285 | 4910 | 10,195 | 1,074,624 |
| | Min | 351 | 331 | 4605 | 440 | 210 | 650 | 110,786 |
| | Average | 1177.25 | 1356.00 | 21,731.25 | 2936.25 | 1944.38 | 4880.63 | 455,076.63 |
| | SD | 599.33 | 720.71 | 10,948.15 | 1404.72 | 1311.97 | 2605.82 | 277,202.28 |
| 2016 | Max | 2209 | 3126 | 41,865 | 5300 | 5350 | 10,650 | 1,092,586 |
| | Min | 319 | 331 | 4975 | 400 | 205 | 605 | 123,196 |
| | Average | 1158.13 | 1429.75 | 21,950.63 | 2883.13 | 2113.75 | 4996.88 | 472,512.63 |
| | SD | 584.88 | 793.72 | 10,796.35 | 1426.69 | 1389.11 | 2721.36 | 280,268.84 |
| 2017 | Max | 2232 | 3126 | 42,300 | 5390 | 5625 | 11,015 | 1,068,364 |
| | Min | 332 | 359 | 3795 | 410 | 265 | 675 | 116,274 |
| | Average | 1098.38 | 1659.00 | 21,935.63 | 2868.13 | 2111.88 | 4980.00 | 489,487.25 |
| | SD | 544.64 | 845.84 | 10,995.24 | 1415.67 | 1461.92 | 2788.31 | 274,563.43 |
| 2018 | Max | 2347 | 3209 | 42,760 | 5155 | 5755 | 10,910 | 1,143,864 |
| | Min | 324 | 340 | 2950 | 475 | 290 | 765 | 118,610 |
| | Average | 1117.38 | 1681.00 | 22,118.75 | 3000.63 | 2243.75 | 5244.38 | 506,085.75 |
| | SD | 573.40 | 892.68 | 11,141.50 | 1313.06 | 1486.35 | 2697.05 | 294,702.14 |
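
Because an average is linear, if TG were the sum of NDG and NPG for every university, each year's Average row would satisfy TG = NDG + NPG. Reading TG this way is an inference from the printed figures, not a definition given in this section. The sketch below checks the six Average rows under that assumption; the only nonzero residual, −0.01 in 2017, is consistent with rounding to two decimals.

```python
# Average rows (NDG, NPG, TG) of the descriptive-statistics table, as printed.
AVERAGES = {
    2013: (3101.25, 1908.75, 5010.00),
    2014: (3051.25, 1888.13, 4939.38),
    2015: (2936.25, 1944.38, 4880.63),
    2016: (2883.13, 2113.75, 4996.88),
    2017: (2868.13, 2111.88, 4980.00),
    2018: (3000.63, 2243.75, 5244.38),
}


def tg_residuals(averages):
    """Return {year: TG - (NDG + NPG)}, rounded to the table's two decimals."""
    return {year: round(tg - (ndg + npg), 2) for year, (ndg, npg, tg) in averages.items()}


def consistent_with_sum(averages, tol=0.011):
    """True if every residual is within rounding tolerance of zero."""
    return all(abs(r) <= tol for r in tg_residuals(averages).values())


if __name__ == "__main__":
    for year, residual in tg_residuals(AVERAGES).items():
        print(year, f"{residual:+.2f}")
    print("TG = NDG + NPG within rounding:", consistent_with_sum(AVERAGES))
```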

| | AS | NAS | TE | NDG | NPG | TG | TOR |
|---|---|---|---|---|---|---|---|
| AS | 1 | 0.920378 | 0.883679 | 0.842026 | 0.882053 | 0.898005 | 0.926228 |
| NAS | 0.920378 | 1 | 0.835755 | 0.839571 | 0.912896 | 0.91221 | 0.990539 |
| TE | 0.883679 | 0.835755 | 1 | 0.931933 | 0.945837 | 0.978585 | 0.856424 |
| NDG | 0.842026 | 0.839571 | 0.931933 | 1 | 0.839899 | 0.961941 | 0.839957 |
| NPG | 0.882053 | 0.912896 | 0.945837 | 0.839899 | 1 | 0.956242 | 0.936537 |
| TG | 0.898005 | 0.91221 | 0.978585 | 0.961941 | 0.956242 | 1 | 0.924321 |
| TOR | 0.926228 | 0.990539 | 0.856424 | 0.839957 | 0.936537 | 0.924321 | 1 |
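
The university-level data behind the correlation matrix are not reproduced in this section, so the sketch below does two separate things under that constraint: it defines Pearson's r from first principles (the function `pearson` is illustrative, not the authors' code), and it checks the printed matrix for symmetry and positivity. Positive correlations between inputs and outputs (isotonicity) are a common precondition in DEA studies; since which variables act as inputs and which as outputs is not restated here, the check covers all 21 pairs.

```python
import math

VARS = ["AS", "NAS", "TE", "NDG", "NPG", "TG", "TOR"]

# Correlation matrix as printed in the table above (rows and columns in VARS order).
R = [
    [1, 0.920378, 0.883679, 0.842026, 0.882053, 0.898005, 0.926228],
    [0.920378, 1, 0.835755, 0.839571, 0.912896, 0.91221, 0.990539],
    [0.883679, 0.835755, 1, 0.931933, 0.945837, 0.978585, 0.856424],
    [0.842026, 0.839571, 0.931933, 1, 0.839899, 0.961941, 0.839957],
    [0.882053, 0.912896, 0.945837, 0.839899, 1, 0.956242, 0.936537],
    [0.898005, 0.91221, 0.978585, 0.961941, 0.956242, 1, 0.924321],
    [0.926228, 0.990539, 0.856424, 0.839957, 0.936537, 0.924321, 1],
]


def pearson(x, y):
    """Pearson's r: co-deviation of x and y divided by the product of their spreads."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def off_diagonal_pairs(matrix, names):
    """Return (name_i, name_j, r) for each upper-triangle cell; raise if asymmetric."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if matrix[i][j] != matrix[j][i]:
                raise ValueError(f"asymmetric entry: {names[i]}-{names[j]}")
            pairs.append((names[i], names[j], matrix[i][j]))
    return pairs


if __name__ == "__main__":
    pairs = off_diagonal_pairs(R, VARS)
    print("all positive:", all(r > 0 for _, _, r in pairs))
    print("weakest:", min(pairs, key=lambda p: p[2]))
    print("strongest:", max(pairs, key=lambda p: p[2]))
```

Run as a script, it reports that every coefficient is positive, with the weakest pair NAS–TE (0.835755) and the strongest NAS–TOR (0.990539).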

| DMUs | 2013=>2014 | 2014=>2015 | 2015=>2016 | 2016=>2017 | 2017=>2018 | Average |
|---|---|---|---|---|---|---|
| DMU1 | 1 | 1 | 1 | 1 | 1 | 1 |
| DMU2 | 1.09609159 | 0.91628871 | 1.091359076 | 1 | 1 | 1.020747875 |
| DMU3 | 1 | 1 | 1 | 0.847807315 | 1.019116074 | 0.973384678 |
| DMU4 | 1 | 1 | 1 | 1 | 1 | 1 |
| DMU5 | 1 | 0.990254569 | 1.009369762 | 0.972188207 | 1.029087982 | 1.000180104 |
| DMU6 | 1 | 1 | 1 | 1 | 1 | 1 |
| DMU7 | 0.983631579 | 0.99324135 | 1.020818358 | 1.002684436 | 1 | 1.000075145 |
| DMU8 | 1 | 1 | 1 | 0.978323209 | 0.924370093 | 0.98053866 |
| Average | 1.009965396 | 0.987473079 | 1.0151934 | 0.975125396 | 0.996571769 | 0.996865808 |
| Max | 1.09609159 | 1 | 1.091359076 | 1.002684436 | 1.029087982 | 1.020747875 |
| Min | 0.983631579 | 0.91628871 | 1 | 0.847807315 | 0.924370093 | 0.973384678 |
| SD | 0.035268452 | 0.029014388 | 0.03167009 | 0.052730083 | 0.031253333 | 0.014337993 |
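
The Average, Max, Min and SD rows of the results tables can be recomputed from the eight DMU values in each column. The sketch below does this for the 2013=>2014 column of the table above. Recomputing it suggests that SD is the sample standard deviation (denominator n − 1, `statistics.stdev`), because the population version (`statistics.pstdev`) gives a smaller value that does not match the printed 0.035268452; this is an inference from the numbers, not a stated method.

```python
from statistics import mean, stdev

# 2013=>2014 column of the first results table, DMU1..DMU8, as printed.
COLUMN_2013_2014 = [1, 1.09609159, 1, 1, 1, 1, 0.983631579, 1]


def summary_rows(values):
    """Average, Max, Min and sample SD (n - 1 denominator) of one table column."""
    return {
        "Average": mean(values),
        "Max": max(values),
        "Min": min(values),
        "SD": stdev(values),
    }


if __name__ == "__main__":
    for label, value in summary_rows(COLUMN_2013_2014).items():
        print(f"{label}: {value:.9f}")
```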

| DMUs | 2013=>2014 | 2014=>2015 | 2015=>2016 | 2016=>2017 | 2017=>2018 | Average |
|---|---|---|---|---|---|---|
| DMU1 | 1.010693009 | 0.936430638 | 0.987085807 | 0.997883831 | 1.01878562 | 0.990175781 |
| DMU2 | 1.064501216 | 1.019402164 | 0.991793414 | 0.952002384 | 1.130851044 | 1.031710045 |
| DMU3 | 1.133918617 | 1.00620516 | 0.94066454 | 0.941744429 | 1.017912233 | 1.008088996 |
| DMU4 | 0.988649965 | 1.014844149 | 1.020876556 | 1.011278714 | 1.005319966 | 1.00819387 |
| DMU5 | 0.972915975 | 0.984178198 | 1.032456901 | 1.012979863 | 1.001884719 | 1.000883131 |
| DMU6 | 0.984252477 | 1.007133317 | 1.017783397 | 1.100542911 | 0.994019075 | 1.020746235 |
| DMU7 | 0.984472308 | 0.970724559 | 1.009067017 | 1.030182408 | 1.092457772 | 1.017380813 |
| DMU8 | 0.969990631 | 0.96769892 | 0.985320766 | 0.940434909 | 1.102286937 | 0.993146433 |
| Average | 1.013674275 | 0.988327138 | 0.99813105 | 0.998381181 | 1.045439671 | 1.008790663 |
| Max | 1.133918617 | 1.019402164 | 1.032456901 | 1.100542911 | 1.130851044 | 1.031710045 |
| Min | 0.969990631 | 0.936430638 | 0.94066454 | 0.940434909 | 0.994019075 | 0.990175781 |
| SD | 0.05727762 | 0.028754974 | 0.028859508 | 0.05417503 | 0.053926295 | 0.014125245 |

| DMUs | 2013=>2014 | 2014=>2015 | 2015=>2016 | 2016=>2017 | 2017=>2018 | Average |
|---|---|---|---|---|---|---|
| DMU1 | 1.010693009 | 0.936430638 | 0.987085807 | 0.997883831 | 1.01878562 | 0.990175781 |
| DMU2 | 1.166790830 | 0.934066694 | 1.082402744 | 0.952002384 | 1.130851044 | 1.053222739 |
| DMU3 | 1.133918617 | 1.006205160 | 0.940664540 | 0.798417815 | 1.037370719 | 0.983315370 |
| DMU4 | 0.988649965 | 1.014844149 | 1.020876556 | 1.011278714 | 1.005319966 | 1.008193870 |
| DMU5 | 0.972915975 | 0.974586957 | 1.042130776 | 0.984807077 | 1.031027524 | 1.001093662 |
| DMU6 | 0.984252477 | 1.007133317 | 1.017783397 | 1.100542911 | 0.994019075 | 1.020746235 |
| DMU7 | 0.968358051 | 0.964163771 | 1.030074136 | 1.032947866 | 1.092457772 | 1.017600319 |
| DMU8 | 0.969990631 | 0.967698920 | 0.985320766 | 0.920049298 | 1.018921079 | 0.972396139 |
| Average | 1.024446194 | 0.975641201 | 1.013292340 | 0.974741237 | 1.041094100 | 1.005843014 |
| Max | 1.166790830 | 1.014844149 | 1.082402744 | 1.100542911 | 1.130851044 | 1.053222739 |
| Min | 0.968358051 | 0.934066694 | 0.940664540 | 0.798417815 | 0.994019075 | 0.972396139 |
| SD | 0.079371562 | 0.031452028 | 0.042613228 | 0.089270195 | 0.046743820 | 0.025368462 |
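
The three period-by-period tables above are linked: each entry of the third table equals the product of the corresponding entries in the first two, which matches the decomposition MPI = catch-up × frontier shift. Reading the first table as catch-up and the second as frontier shift is an inference from this relationship, since the tables carry no captions here. The sketch below copies the printed values and reports the largest deviation from that product; the function name `max_decomposition_gap` is illustrative.

```python
# Per-DMU values for 2013=>2014 ... 2017=>2018, copied from the three results tables.
CATCH_UP = {
    "DMU1": [1, 1, 1, 1, 1],
    "DMU2": [1.09609159, 0.91628871, 1.091359076, 1, 1],
    "DMU3": [1, 1, 1, 0.847807315, 1.019116074],
    "DMU4": [1, 1, 1, 1, 1],
    "DMU5": [1, 0.990254569, 1.009369762, 0.972188207, 1.029087982],
    "DMU6": [1, 1, 1, 1, 1],
    "DMU7": [0.983631579, 0.99324135, 1.020818358, 1.002684436, 1],
    "DMU8": [1, 1, 1, 0.978323209, 0.924370093],
}
FRONTIER_SHIFT = {
    "DMU1": [1.010693009, 0.936430638, 0.987085807, 0.997883831, 1.01878562],
    "DMU2": [1.064501216, 1.019402164, 0.991793414, 0.952002384, 1.130851044],
    "DMU3": [1.133918617, 1.00620516, 0.94066454, 0.941744429, 1.017912233],
    "DMU4": [0.988649965, 1.014844149, 1.020876556, 1.011278714, 1.005319966],
    "DMU5": [0.972915975, 0.984178198, 1.032456901, 1.012979863, 1.001884719],
    "DMU6": [0.984252477, 1.007133317, 1.017783397, 1.100542911, 0.994019075],
    "DMU7": [0.984472308, 0.970724559, 1.009067017, 1.030182408, 1.092457772],
    "DMU8": [0.969990631, 0.96769892, 0.985320766, 0.940434909, 1.102286937],
}
MPI = {
    "DMU1": [1.010693009, 0.936430638, 0.987085807, 0.997883831, 1.01878562],
    "DMU2": [1.166790830, 0.934066694, 1.082402744, 0.952002384, 1.130851044],
    "DMU3": [1.133918617, 1.006205160, 0.940664540, 0.798417815, 1.037370719],
    "DMU4": [0.988649965, 1.014844149, 1.020876556, 1.011278714, 1.005319966],
    "DMU5": [0.972915975, 0.974586957, 1.042130776, 0.984807077, 1.031027524],
    "DMU6": [0.984252477, 1.007133317, 1.017783397, 1.100542911, 0.994019075],
    "DMU7": [0.968358051, 0.964163771, 1.030074136, 1.032947866, 1.092457772],
    "DMU8": [0.969990631, 0.967698920, 0.985320766, 0.920049298, 1.018921079],
}


def max_decomposition_gap(catch_up, frontier_shift, mpi):
    """Largest |catch-up x frontier shift - MPI| over all DMUs and periods."""
    return max(
        abs(c * f - m)
        for dmu in mpi
        for c, f, m in zip(catch_up[dmu], frontier_shift[dmu], mpi[dmu])
    )


if __name__ == "__main__":
    gap = max_decomposition_gap(CATCH_UP, FRONTIER_SHIFT, MPI)
    print(f"largest gap: {gap:.1e}")
```

All 40 products agree with the printed MPI values to within rounding in the ninth decimal place.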

| MPI Indicator | DMUs | University | Rank |
|---|---|---|---|
| >1 | DMU2 | Lincoln University | 1 |
| | DMU6 | University of Otago | 2 |
| | DMU7 | University of Waikato | 3 |
| | DMU4 | University of Auckland | 4 |
| | DMU5 | University of Canterbury | 5 |
| <1 | DMU1 | Auckland University of Technology | 6 |
| | DMU3 | Massey University | 7 |
| | DMU8 | Victoria University of Wellington | 8 |
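
A minimal sketch of the ranking step, assuming it uses the arithmetic mean of the five period-to-period MPI values (the Average column of the MPI table), is shown below; `rank_by_average` and `NAMES` are illustrative names. Under that assumption Massey University (DMU3, average 0.983315) ranks above Victoria University of Wellington (DMU8, average 0.972396), and the five universities with an average above 1 are exactly those in the ">1" group.

```python
from statistics import mean

# Five period-to-period MPI values per DMU (2013=>2014 ... 2017=>2018), as printed.
MPI = {
    "DMU1": [1.010693009, 0.936430638, 0.987085807, 0.997883831, 1.01878562],
    "DMU2": [1.166790830, 0.934066694, 1.082402744, 0.952002384, 1.130851044],
    "DMU3": [1.133918617, 1.006205160, 0.940664540, 0.798417815, 1.037370719],
    "DMU4": [0.988649965, 1.014844149, 1.020876556, 1.011278714, 1.005319966],
    "DMU5": [0.972915975, 0.974586957, 1.042130776, 0.984807077, 1.031027524],
    "DMU6": [0.984252477, 1.007133317, 1.017783397, 1.100542911, 0.994019075],
    "DMU7": [0.968358051, 0.964163771, 1.030074136, 1.032947866, 1.092457772],
    "DMU8": [0.969990631, 0.967698920, 0.985320766, 0.920049298, 1.018921079],
}

# University names from the DMU table, used only for readable output.
NAMES = {
    "DMU1": "Auckland University of Technology",
    "DMU2": "Lincoln University",
    "DMU3": "Massey University",
    "DMU4": "University of Auckland",
    "DMU5": "University of Canterbury",
    "DMU6": "University of Otago",
    "DMU7": "University of Waikato",
    "DMU8": "Victoria University of Wellington",
}


def rank_by_average(mpi):
    """Return [(dmu, average MPI)] sorted from highest to lowest average."""
    averages = {dmu: mean(values) for dmu, values in mpi.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for rank, (dmu, avg) in enumerate(rank_by_average(MPI), start=1):
        group = ">1" if avg > 1 else "<1"
        print(rank, dmu, NAMES[dmu], f"{avg:.6f}", group)
```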

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, C.-N.; Tibo, H.; Nguyen, V.T.; Duong, D.H. Effects of the Performance-Based Research Fund and Other Factors on the Efficiency of New Zealand Universities: A Malmquist Productivity Approach. Sustainability 2020, 12, 5939. https://doi.org/10.3390/su12155939


Chicago/Turabian style: Wang, Chia-Nan, Hector Tibo, Van Thanh Nguyen, and Duy Hung Duong. 2020. "Effects of the Performance-Based Research Fund and Other Factors on the Efficiency of New Zealand Universities: A Malmquist Productivity Approach" Sustainability 12, no. 15: 5939. https://doi.org/10.3390/su12155939