1. Introduction

As the ICT services industry booms, with such services requested in almost every domain or activity, Data Centers (DCs) are constructed and operated to meet the continuous demand for computing resources with high availability. However, this growth also has an environmental impact: the DC sector is estimated to consume 1.4% of global electricity [1]. Thus, it is no longer sufficient to address the DCs’ energy efficiency problems only from the perspective of decreasing their energy consumption; new research efforts aim to increase the share of renewable energy used for their operation and to manage them for optimal integration with local multi-energy grids [2,3].

Firstly, DCs are large generators of residual heat, which can be recovered and reused in nearby heat grids, offering the DCs a new revenue stream [4]. This is rather challenging due to the continuous hardware upgrades that increase the power density of the chips, leading to an even higher energy demand for the cooling system, which must remove the heat produced by the Information Technology (IT) servers when executing the workload [5]. In addition to the potential hot spots that may endanger safe equipment operation, other challenges are created by the relatively low temperature of the recovered heat compared with that needed to heat a building and by the difficulty of transporting heat over long distances [6,7]. Current studies show that DCs can offer a secure supply of heat accounting for more than 60 TWh in Europe [8]. If the heat is not constantly dissipated, the temperature in the DC can exceed the normal operating setpoints and the IT equipment can be damaged. Thus, the cooling system is a significant contributor to the DCs’ high energy consumption, and even in well-designed DCs it accounts for almost 37% of the total energy consumption [9]. Several aspects are of particular interest for DCs when providing waste heat to district grids [10]: improving the DCs’ energy efficiency, better integration of renewable energy and downstream waste heat monetization. The adoption of emerging technologies, such as machine learning or blockchain, forces DCs to investigate other possibilities for more efficient cooling and heat reuse, such as liquid cooling [11]. This trend was accelerated by the introduction of AI-friendly processors, which consume large amounts of energy and whose heat dissipation can no longer be managed using air cooling. Moreover, hybrid solutions featuring liquid cooling are adopted while at the same time re-using the heat in the smart energy grid [12]. These solutions require complex ICT-based modelling and optimization techniques to assess the DC’s potential thermal flexibility and the associated operation optimization [13].

Secondly, DCs are characterized by flexible energy loads that may be used to ensure better integration with local smart energy grids by participating in demand response (DR) programs and delivering ancillary services [14,15]. In this way, the DCs will contribute to grid resilience and to the continuity and security of energy supply at affordable costs [16]. Moreover, if the renewable energy is not self-consumed locally, problems such as overvoltage or electric equipment damage may appear at the local micro-grid level and could be escalated to higher management levels [17]. To be truly integrated in the grid supply, renewable energy sources (RES), being volatile, need flexibility options and DR programs to be put in place [18]. Few approaches address the exploitation of DCs’ electrical energy flexibility to achieve better integration into the local energy grids [19]. Modern ICT infrastructures allow the development of demand and energy management solutions that enable DCs to be involved in scenarios such as the reduction of peak power demand during DR periods [20]. DCs’ strategies for providing demand response usually involve shutting down IT equipment, using Dynamic Voltage Frequency Scaling (DVFS), load shifting or queuing IT workload, temperature set point adjustment, load migration and IT equipment load reduction [21,22,23]. To increase their flexibility potential, the DCs may rely on non-electrical cooling devices such as thermal storage for pre-cooling or post-cooling [6] and on IT workload migration in federated DCs [7]. Shifting flexibility to meet the demand by leveraging workload scheduling usually involves the live migration of virtual machines, even to other DCs [24], or postponing delay-tolerant workloads to future execution points [25]. The migration of load to distributed partners’ DCs as part of an optimization process is currently addressed through promising techniques such as blockchain-based workload scheduling, which try to solve the data transfer security issues [26]. This typically involves different Artificial Intelligence (AI) algorithms and techniques, with a prevalence of prediction heuristics [27].

Table 1 classifies the above-presented approaches in terms of proposed solutions for DC integration in smart grids and innovative techniques and technologies developed.

In this context, the innovative vision defined by the H2020 project CATALYST [28] is that the DCs’ energy efficiency should be addressed by managing their operation considering the optimal integration with electrical, thermal and data networks. On the one hand, the DCs have good, yet mostly unexploited, potential regarding their energy demand flexibility, which makes them strong potential contributors to the ongoing efforts for more efficient and integrated management of the smart grid. On the other hand, they are large producers of residual heat, which can be recovered and re-used in nearby district heating infrastructures. At the same time, they have excellent IT data network connections, which may provide a new source of flexibility and optimization: workload relocation to/from other DCs to meet green objectives such as following the renewable energy. As shown above, the state-of-the-art approaches to DC energy efficiency address these aspects only partially and lack a holistic approach. The work presented in this paper contributes to creating the necessary technological infrastructure for establishing active integrative links among DCs and electrical, heat and IT networks, which are currently missing.

In summary, the paper brings the following contributions:

Defines innovative scenarios for DCs, allowing them to exploit their electrical, thermal and data network connections for trading flexibility as a commodity, aiming to gain primary energy savings and contribute to the local grid sustainability.

Describes an architecture and innovative ICT technologies that act as a facilitator for the implementation of the defined scenarios.

Presents electrical energy, thermal energy and IT load migration flexibility results in two pilot data centers, showing the feasibility and improvements brought by the proposed ICT technologies in some of the new scenarios.

The rest of the paper is structured as follows: Section 2 presents the new scenarios and ICT technology, Section 3 describes the results obtained in two pilot DCs, and Section 4 concludes the paper.

2. Scenarios and Technology

Table 2 shows the defined scenarios for efficient management and operation of the DCs at the crossroads of data, electrical energy and thermal energy networks, trading electricity, energy flexibility, heat and IT load as commodities. The scenarios are defined incrementally: Scenarios 1, 2 and 3 consider the network connections in isolation, Scenarios 4, 5 and 6 consider combinations of two network connections, while Scenario 7 is the most complex one, in which all three network connections are considered at once. The scenarios highlight the importance of the various commodities in obtaining primary energy savings and decreasing the carbon footprint, and of the network connections that provide the necessary infrastructure for achieving this.
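The incremental construction of the seven scenarios can be illustrated as the enumeration of all non-empty combinations of the three network connections. The following is a minimal sketch; the labels `electrical`, `thermal` and `data` and the function name are illustrative assumptions, and the ordering of the two-network combinations (Scenarios 5 and 6) is assumed rather than taken from Table 2.

```python
from itertools import combinations

# Labels for the three network connections (illustrative names, not from Table 2).
NETWORKS = ("electrical", "thermal", "data")


def enumerate_scenarios(networks=NETWORKS):
    """Return every non-empty combination of networks, smallest combinations first."""
    scenarios = []
    for size in range(1, len(networks) + 1):
        scenarios.extend(combinations(networks, size))
    return scenarios


if __name__ == "__main__":
    for number, combo in enumerate(enumerate_scenarios(), start=1):
        print(f"Scenario {number}: {' + '.join(combo)}")
```

With three networks this yields exactly seven combinations: three single-network scenarios, three pairs and one scenario that uses all three connections.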

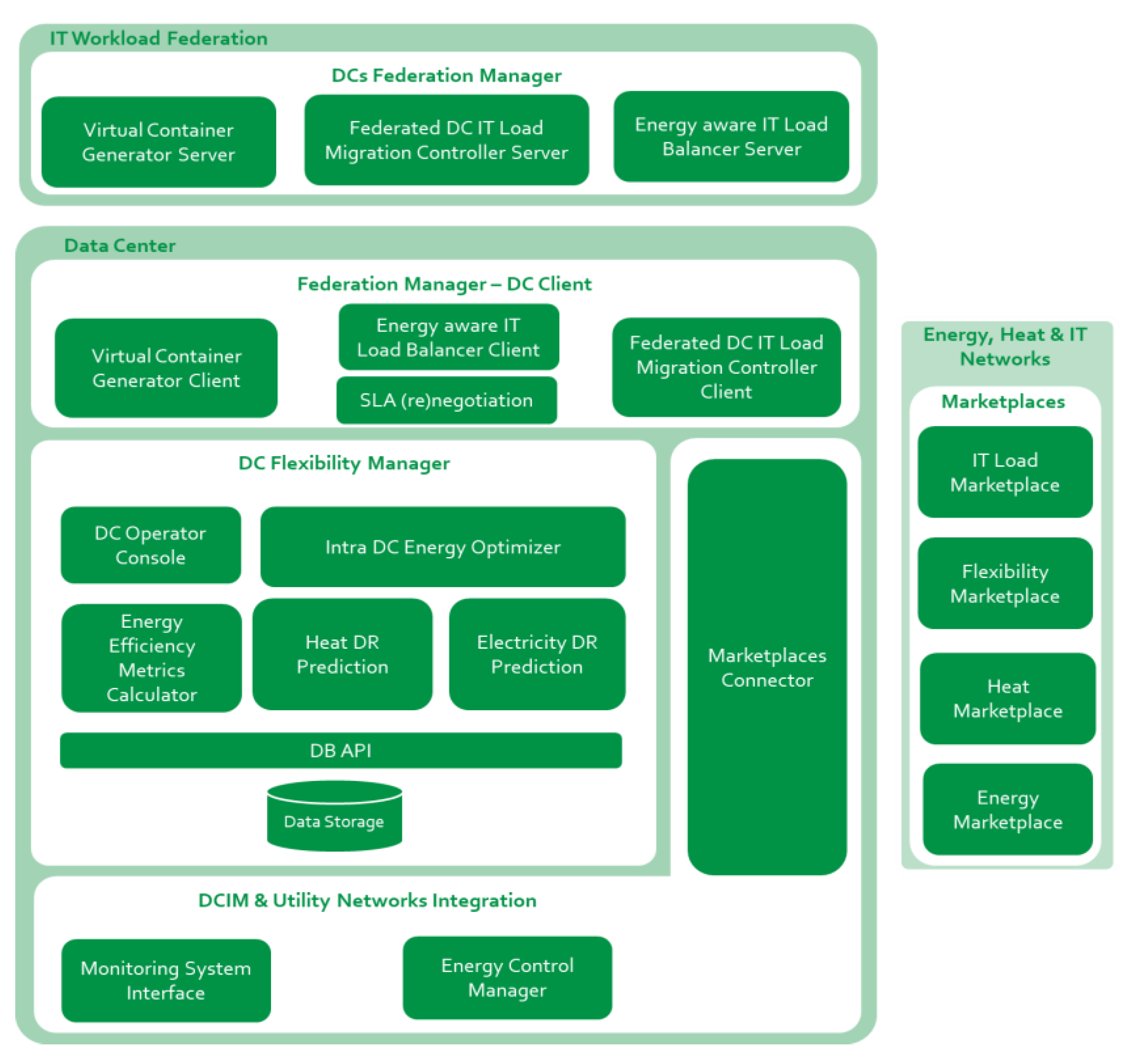

To address this innovative vision, several ICT technologies have been developed and coherently integrated in a framework architecture consisting of three interacting systems (see Figure 1): the DC Flexibility Manager, the DCs Federation Manager, and the Data Center Infrastructure Management (DCIM) and Utility Networks Integration system.

2.1. DC Flexibility Manager System

The DC Flexibility Manager sub-system is responsible for improving DC energy awareness and energy efficiency by exploiting the internal flexibility to provide energy services to power and heat grids. The main components of this sub-system are detailed in Table 3.

The interaction between the DC Flexibility Manager system components is presented in Figure 2. The Intra DC Energy Optimizer component takes as input the thermal and electrical energy predictions determined by the Electricity and Heat DR Prediction components, as well as the DC model describing the DC’s characteristics and operation. Its main output is the optimal flexibility shifting action plan, whereby the DC energy profile is adapted to provide various services in the Electrical and Heat Marketplaces. The Energy Efficiency Metrics Calculator estimates the values of the metrics, and the optimization action plans are displayed on the DC Operator Console for validation. If the plan is validated by the operator, its actions are executed.

2.2. DCs Federation Manager

DCs Federation Manager is responsible for orchestrating the workload relocation among federated DCs.

Table 4 describes the main components of this system.

The Federated DC Migration Controller (DCMC) [29], including Master and Lite Client and Server, enables the live migration of IT load among different administrative domains without affecting the end users’ accounting and without service interruption (see Figure 3). The communication channels between DCs are deployed by the DCMC through OpenVPN and secured by OAuth 2.0 to ensure secure and authorized transfers. A new VM in the form of a virtual compute node is created in the OpenStack environment of the destination DC to host the migrated load, while tokens for authenticating the DCMC components are retrieved via an integrated Keycloak server (part of the DCMC software). The DCMC software can attach the new virtual compute node to the source DC’s OpenStack installation, which means that the source DC remains the sole owner/manager of the load even though it runs in a different administrative domain.

In detail, the DC IT Load Migration Controller clients receive migration offers or bid requests from the Energy Aware IT Load Balancer, originally triggered by the Intra DC Energy Optimizer. The DC IT Load Migration Controller client also informs the Energy Aware IT Load Balancer of acceptance or rejection. Upon acceptance of the migration bid/offer, the DC IT Load Migration Controller clients inform the DC IT Load Migration Controller server that they are ready for migration; in the case of a migration bid, the DC IT Load Migration Controller client first sets up the virtual compute node to host the migrated load. Then, the DC IT Load Migration Controller server is responsible for setting up a secure communication channel between the two DCs: the source and the destination of the IT load. After communication is set up and the source DC is connected to the virtual compute node at the destination DC, the migration starts. Finally, the DC IT Load Migration Controller clients inform the Energy Aware IT Load Balancer about the success or failure of the migration.
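The message sequence described above can be summarized as an ordered list of steps. The sketch below is only an illustration of that ordering under simplifying assumptions: the function name, its parameters and the step labels are hypothetical and do not correspond to the actual DCMC interfaces.

```python
from typing import List


def migration_steps(accepted: bool, is_bid: bool, transfer_ok: bool = True) -> List[str]:
    """List the handshake steps for one migration offer or bid (illustrative only)."""
    steps = ["client receives offer/bid from load balancer"]
    if not accepted:
        # A rejected offer/bid ends the exchange immediately.
        steps.append("client reports rejection to load balancer")
        return steps
    steps.append("client reports acceptance to load balancer")
    if is_bid:
        # For a bid, the virtual compute node is prepared before signalling readiness.
        steps.append("client sets up virtual compute node")
    steps.append("client tells server it is ready")
    steps.append("server sets up secure channel between source and destination")
    steps.append("source DC connects to virtual compute node")
    steps.append("migration runs")
    outcome = "success" if transfer_ok else "failure"
    steps.append(f"clients report {outcome} to load balancer")
    return steps


if __name__ == "__main__":
    for step in migration_steps(accepted=True, is_bid=True):
        print(step)
```

Representing the exchange as a plain list keeps the ordering constraints visible: the node is set up before readiness is signalled, and the channel exists before the migration starts.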

From a federated DCs perspective, the Virtual Container Generator enables IT load traceability and allows for indisputable SLA monitoring. In short, the Virtual Container Generator client offers information related to the lifetime of the Virtual Containers (VCs), which is translated by the SLA (re)negotiation component first into service level objective (SLO) events and then into SLA compliance status. This information is fed to the Energy Aware IT Load Balancer component so that effective decisions on Virtual Container load migrations can be made. The SLA (re)negotiation component features two methods of information retrieval, RESTful and publish-subscribe, while for data feeding, pull requests towards the Virtual Container Generator are performed.

2.3. DCIM and Utility Networks Integration

This system offers different components for allowing integration with existing Data center infrastructure management software or with the utilities networks considered for flexibility exploitation: electricity, heat and IT.

The Marketplace Connector acts as a mediator between the DC and the potential Marketplaces that are set up and running and in which the DC may participate. Through the Marketplace Connector, a DC can provide flexibility services and trade electrical or thermal energy and IT workload. Additionally, it provides an interface to the electricity or energy grid operators and is responsible for listening for Demand Response (DR) signals sent by the Distribution System Operator to reduce the DC energy consumption at critical times or to provide heat to the District Heating network. The DR request is forwarded to the Intra DC Energy Optimizer, which evaluates whether the DC can opt in to the request based on the optimization criteria.

The Energy Control Manager interfaces with the DC appliances and local RES via the existing DC infrastructure management system (DCIM) or other control systems (e.g., an OPC server), and implements and executes the optimization action plans.

The Monitoring System Interface interacts with existing monitoring systems already installed in a DC, adapts the monitoring data received periodically, and provides this data to the Data Storage component from which it will be analyzed in the flexibility optimization processes.

3. DC Pilots and Results

In this section, we show the validation results of the proposed technology for the first four scenarios defined in Table 2 in two pilot DCs: a Micro Cloud DC connected to a PV system and located in Poznan, Poland, and a Colocation DC located in Pont Saint Martin, Italy. The selection of the scenarios to be evaluated was driven by the hardware characteristics of the considered pilot DCs, their type and the sources of flexibility available. For the real experiments, relevant measurements were taken from the pilot DCs, flexibility actions were computed by the software stack deployed in the pilot and the actions were executed by leveraging the integration with the existing DCIM (see Table 5). Finally, relevant KPIs were computed to determine the energy savings and the thermal and electrical energy flexibility committed. The KPIs were calculated exclusively from the monitored data reported in the experiment; no financial or energetic parameters were assumed.

3.1. Poznan Micro DC with Photovoltaic System

The configuration of the DC pilot test bed is presented in Figure 4. It features two racks with 50 server nodes, which consume approximately 8.5 kW of power at maximum load and about 3 kW in the idle state. The servers’ utilization varies, with only a few regular services running on the servers; thus, most of the workload (around 90%) consists of tasks that can be easily shifted.

The racks are connected to the photovoltaic system, which consists of 80 PV panels with 20 kW peak power. Servers are directly connected to inverters so that they can be supplied directly by the power grid, by renewable energy when generation is sufficient, or by batteries (75 kWh) when energy production is too low. If the batteries are discharged below 60%, the servers immediately switch to the power grid to extend battery life. Due to electrical constraints, the maximum PV load used by the servers is limited to 7.3 kW. With fully charged batteries and no solar radiation, the batteries could power the fully loaded servers for 4 h.
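The supply rules in this paragraph can be sketched as a simple split of the server load across PV, battery and grid. This is a minimal illustration rather than the pilot’s actual control logic: the function name and return format are hypothetical, and it assumes that only the part of the load not covered by PV (rather than the whole load) moves to the battery or the grid.

```python
def split_supply(load_kw, pv_kw, battery_soc, pv_limit_kw=7.3, min_soc=0.60):
    """Split the server load (kW) across PV, battery and grid.

    pv_limit_kw and min_soc default to the values quoted in the text
    (7.3 kW PV cap, 60% battery threshold). battery_soc is a fraction in [0, 1].
    Assumption: only the shortfall left after PV is served by battery or grid.
    """
    if load_kw < 0 or pv_kw < 0:
        raise ValueError("power values must be non-negative")
    # PV can cover at most the load, the current production and the electrical cap.
    from_pv = min(load_kw, pv_kw, pv_limit_kw)
    shortfall = load_kw - from_pv
    if battery_soc >= min_soc:
        return {"pv": from_pv, "battery": shortfall, "grid": 0.0}
    # Below the threshold the batteries are spared and the grid takes over.
    return {"pv": from_pv, "battery": 0.0, "grid": shortfall}


if __name__ == "__main__":
    print(split_supply(load_kw=8.5, pv_kw=20.0, battery_soc=0.9))
    print(split_supply(load_kw=8.5, pv_kw=0.0, battery_soc=0.5))
```

The 7.3 kW cap means that a fully loaded rack (about 8.5 kW) always needs a second source, even at peak PV production.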

The cooling system consumes up to 3.2 kW with a cooling capacity of 10 kW (maximum 11 kW). The testbed DC system characteristics are summarized in Table 6.

The challenge in this case is to minimize the pilot DC’s energy-related operational costs, considering variable characteristics such as energy prices, renewable energy generation, efficient air conditioning management and computing load. By adjusting the power usage of the micro DC in certain periods, it is possible to emulate adaptation to the variability of energy prices or renewable generation. The micro DC servers are managed by the SLURM queuing system for batch jobs and by OpenStack, while the power management system allows for an accurate analysis of the energy consumption of the racks and cooling system. In the next sub-sections, we show how Scenarios 1 and 3 are addressed in the Poznan Micro DC for providing electrical flexibility and exploiting IT load migration across DCs.

3.1.1. Scenario 1: Single DC Providing Electrical Energy Flexibility

In this test case, we evaluate the electrical energy flexibility of a pilot DC offered by the use of photovoltaic renewable energy and the associated energy storage. The flexibility services that may be provided are: (i) congestion management by decreasing the DC energy demand from the grid and (ii) energy trading in case of a surplus of RES. The test benefits from the power capping mechanism, which allows the power level to be adjusted dynamically, thus shifting the corresponding load execution to run when renewable energy is available.

The DC Flexibility Manager System was deployed and configured in the pilot DC and used to manage the on-site electrical energy flexibility and PV utilization. It was integrated with the existing monitoring system, which exposes PV production and energy data and stores them in the Data Storage. The micro DC energy consumption considers the energy related to Real Workload, Delay Tolerant Workload and the cooling equipment. These data are used to forecast the energy demand for the next day, the potential latent energy flexibility and the PV power production. The predicted data are later used by the Intra DC Energy Optimizer component to determine flexibility optimization plans that shift the electrical energy flexibility to maximize the use of locally generated renewable energy by leveraging mechanisms such as workload management and battery storage usage. The resulting action plan can be seen in the DC Operator Console and is later translated into actions performed on the pilot DC.

The historical data for the pilot DC used by the energy prediction processes are shown in Figure 5, with the DC energy consumption split into the three main flexibility components (real-time workload, delay-tolerant workload and cooling system).

The forecasts for the next operational day computed by the Electrical DR Prediction component are displayed in Figure 6.

The Intra DC Energy Optimizer computes an energy flexibility management plan aiming to increase the DC demand during the day and decrease it during the night, when the PV production is low. As shown in Figure 7a, the adapted DC energy demand (displayed as a dark green line) differs from the DC baseline energy demand (displayed as a dark line) because it follows the pattern of the PV production, plotted as a light-green line. Thus, until the PV panels start producing electricity, the plan aims at lowering the DC energy consumption by discharging the batteries. They can support about 2 kWh of energy discharge each hour, until the 60 kWh capacity of the battery is depleted (light green columns in Figure 7b). Furthermore, the plan suggests shifting the delay-tolerant workload from this period to the interval between hours 7 and 14, when the PV production is high. Each time a load shift action is encountered, the power is capped by the corresponding value and released later, according to the schedule. Moreover, the batteries are recharged during the period when the PV production is high (green columns in Figure 7b).

The optimization plan computed by the Intra DC Energy Optimizer aims to leave the batteries at the end of the day in the same state as they were at the beginning of the day. After the PV production stops, the DC energy consumption follows the normal pattern given by the baseline, since no actions are required.
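The idea of moving delay-tolerant workload into the hours with high PV production can be illustrated with a simple greedy heuristic. This is not the algorithm used by the Intra DC Energy Optimizer, which is not specified here; it is a sketch under strong assumptions: hourly energy values in kWh, no battery, and no job arrival times or deadlines. All names are hypothetical.

```python
from typing import List


def shift_delay_tolerant(fixed_kwh: List[float],
                         tolerant_kwh: List[float],
                         pv_kwh: List[float]) -> List[float]:
    """Greedily re-place delay-tolerant energy into hours with spare PV production.

    Returns the planned delay-tolerant energy per hour. Energy that does not
    fit under the PV curve stays spread over its original hours.
    """
    hours = len(fixed_kwh)
    if not (len(tolerant_kwh) == len(pv_kwh) == hours):
        raise ValueError("all profiles must have the same length")
    # PV energy left in each hour after serving the non-shiftable load.
    headroom = [max(0.0, pv_kwh[h] - fixed_kwh[h]) for h in range(hours)]
    total = float(sum(tolerant_kwh))
    backlog = total
    planned = [0.0] * hours
    # Fill the hours with the largest PV headroom first.
    for h in sorted(range(hours), key=lambda i: headroom[i], reverse=True):
        take = min(backlog, headroom[h])
        planned[h] = take
        backlog -= take
    if total > 0 and backlog > 0:
        # Whatever could not be shifted remains proportionally in place.
        leftover_share = backlog / total
        for h in range(hours):
            planned[h] += tolerant_kwh[h] * leftover_share
    return planned


if __name__ == "__main__":
    plan = shift_delay_tolerant([1.0, 1.0, 1.0, 1.0],
                                [2.0, 0.0, 0.0, 2.0],
                                [0.0, 4.0, 2.0, 0.0])
    print(plan)
```

The heuristic conserves the total delay-tolerant energy, so the adapted profile differs from the baseline only in when the work runs, not in how much work is done.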

The evaluated pilot DC is configured to be powered in equal parts by RES and the electricity grid. Moreover, the PV panels are used to charge the set of batteries. In the test, the batteries were protected by not discharging them below 40%. Deeper (or, worse, full) discharge leads to faster degradation of the battery and lower capacity. When there is a surplus of energy, the solar cells charge the batteries; in the event of a shortage of energy, the batteries maintain the DC operation.

Figure 8 depicts the charge level of the batteries during the test case execution.

Charging and discharging of the batteries takes place according to the schedule returned by the Intra DC Energy Optimizer. When it determines that the batteries are fully charged, it does not use them until the start of the next cycle. However, some batteries power external devices that could not be turned off during the tests, hence the constant drop in the charge level after 6 p.m. in Figure 8. As mentioned, the testbed worked on real resources that were additionally used by external users. This may have introduced additional tasks; they did not alter the course of the test, which confirms that the approach can run on an operational DC.

Table 7 below shows relevant metrics calculated for this test case.

3.1.2. Scenario 3: Workload Federated DCs

The goal of this test case was to exploit the possibility of IT load migration between DCs offered by the DCs Federation Manager system. We considered the pilot DC described in the previous section as the destination DC. For the load migration, we utilized 16 Huawei XH620 blade servers, which reside within Huawei X6800 chassis. Each blade consists of 2x Xeon(R) E5-2640 v3 CPUs (8 cores/CPU) and 64 GB of RAM. The servers are connected to the Internet with a symmetric 1 Gb/s connection. The DC deployed a DC Migration Controller Client around the OpenStack instance (Train release) hosted on a physical XH620 server running Ubuntu 18.04. The OpenStack installation comes with Nova Compute and the VPNaaS extension, responsible for hosting the VM and providing VPN connections, respectively. The source DC provides 32 servers equipped with an AMD Ryzen 7 1700X and 32 GB of RAM, with a similar software stack.

By means of IT load migration, the amount of electrical and/or thermal flexibility of the destination DC is increased and may be provided to the local grid via DR programs or local flexibility markets. The migration test case was conducted between two servers of the source and destination DCs. The obtained results were used to extrapolate to the situation in which half of the workload is migrated to the destination DC (see Table 8).

The destination DC servers’ total idle power usage is estimated by multiplication of the value of energy drawn by a single server by the number of servers. A destination DC server can efficiently host two VMs running Blender. Such an assumption was driven by the fact that the destination DC node has 2x 8 core CPU with similar characteristics as the CPU of the source DC server and twice the amount of operational memory. The power drawn by the destination server hosting two VMs running Blender was computed as the sum of the power drawn by the idle destination server and twice the amount of power consumption that increased after a single VM migrated to the server. The latter component of the sum is enlarged by 5% in order to consider the worst-case scenario. The value of the power consumption was calculated by running a heavy load on one of the destination DC servers and measuring its power consumption. The sum for all servers was calculated as the value multiplied by 16, the number of servers. The assumption was made that after squeezing the PaaS services load running on 16 servers to eight servers, the power consumption of such servers would be much higher. In this experiment, a worst-case scenario was assumed, i.e., the maximum power drawn by such servers. A destination DC server under typical (PaaS services) load power usage was estimated as the average of the last 90 days.
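The estimation rule for a destination server hosting two migrated VMs can be written as a short calculation. The sketch below only restates the arithmetic described above; the function names are hypothetical and the wattages in the usage example are made-up placeholders, not measurements from the pilot.

```python
def loaded_server_power_w(idle_w, delta_per_vm_w, vms=2, margin=0.05):
    """Idle power plus the per-VM increase for each hosted VM, enlarged by a safety margin.

    The margin (5% in the text) is applied only to the VM-related component.
    """
    return idle_w + vms * delta_per_vm_w * (1.0 + margin)


def fleet_power_w(per_server_w, servers=16):
    """Scale a single-server estimate to the whole group of servers."""
    return per_server_w * servers


if __name__ == "__main__":
    # Placeholder values for illustration only.
    per_server = loaded_server_power_w(idle_w=100.0, delta_per_vm_w=50.0)
    print(per_server, fleet_power_w(per_server))
```

Applying the margin only to the VM-induced increase keeps the measured idle power unchanged while still covering the worst case for the migrated load.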

In the first experiment, we considered that no workload was migrated between the source and destination DC (see Figure 9). This experiment was used as a baseline for the other two, since no load was shifted between the DCs. Not shifting the load made the power consumption in both DCs constant, and the RES energy utilization was the lowest among the experiments, i.e., 30.4 kWh.

In the second experiment, half of the load was migrated in case of overproduction of RES at destination DC. The PaaS services running on destination servers were squeezed on half of the servers, inducing higher power consumption of the servers and resulting in lower QoS and reliability of the services. The other half of the servers were then released to receive the migrated workload.

Figure 10 shows that migrating the workload halved the power consumption in the source DC. The power consumption at the destination DC is higher due to the higher load of the PaaS services. This experiment allowed approximately 36.5 kWh of energy to be drawn from RES and is the most realistic due to proper handling of the PaaS services.

Table 9 presents the relevant KPIs calculated for this scenario. The REF and APCren metrics were only calculated for destination DC as source does not have access to renewable energy.

3.2. Pont Saint Martin DC

For these experiments, we leveraged the pilot DC testbed provided by Engineering Informatica in Pont Saint Martin. The DC testbed cooling system is mainly composed of two Trane RTHA 380 refrigerating units (GFs): single-compressor, helical-rotary type water-cooled liquid chillers, responsible for the server rooms’ refrigeration and for the summer cooling of 6000 m² of offices. Their characteristics are provided in Table 10.

Moreover, each server room is equipped with two or three conditioning units (CDZs). The computer room air conditioner (CRAC) units are chilled-water precision air conditioning systems used to refrigerate the server rooms. They are located outside each server room: the air inside the server room flows through vents to the conditioning unit and is later reinjected into the bunker room through a ventilation shaft at the base of the rack lines. Each server room includes a heterogeneous group of servers and devices whose configurations differ: this means that each server room has a different number of hosted elements, a different absorbed power and, therefore, a different set point temperature.

Table 11 shows the characteristics of the server rooms used for evaluation.

Finally, Table 12 presents the hardware characteristics of the other devices of the DC relevant for the experiments carried out.

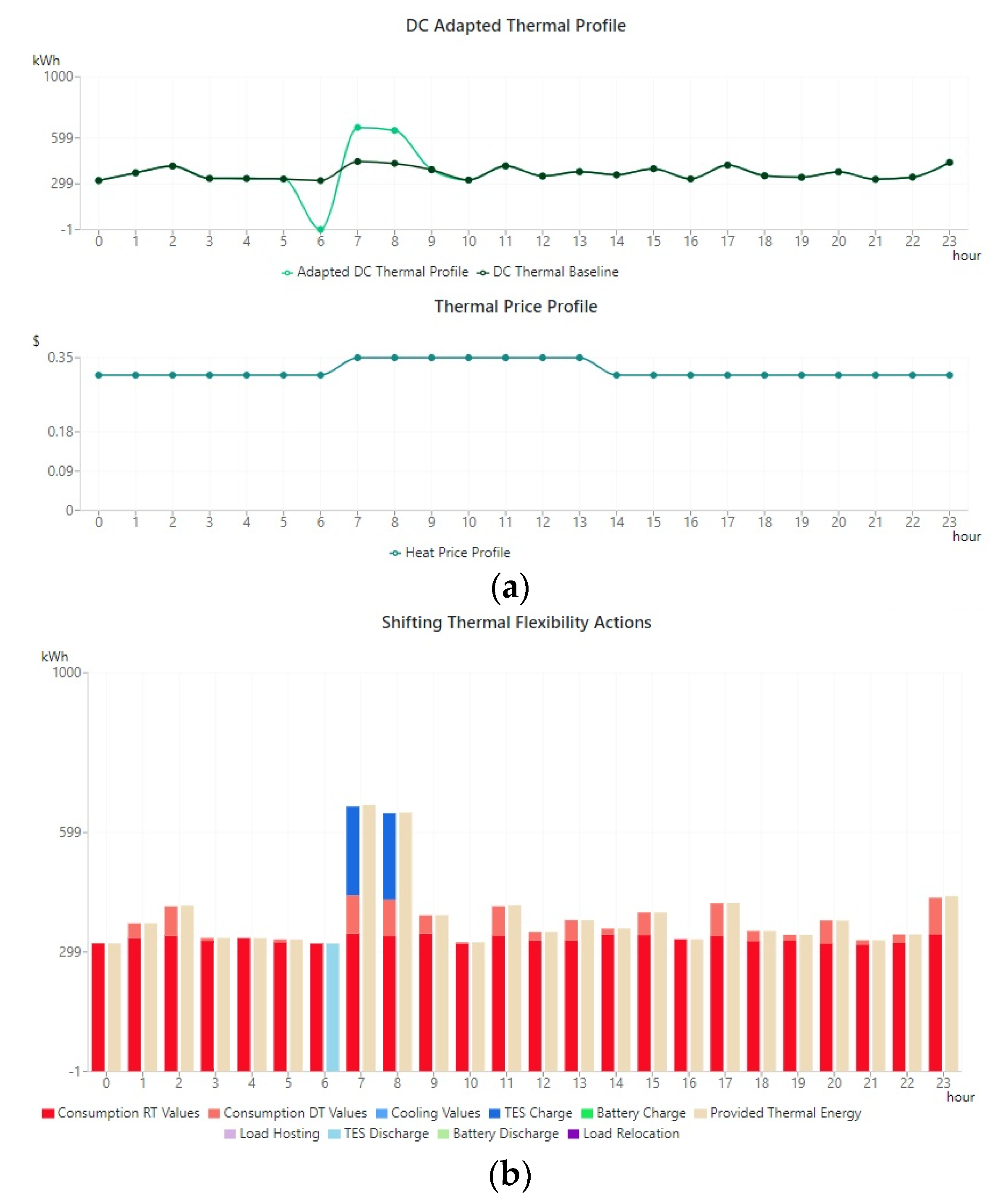

In the next sub-sections, we show how scenarios 2 and 4 were addressed in this DC for re-using the generated heat and achieving more flexibility by efficiently combining power and heat.

3.2.1. Scenario 2: Single DC Providing Heat

The main goal of this experiment was to exploit the post cooling technique as a mechanism of thermal flexibility aiming to re-use the residual heat in nearby heat grids or buildings. We used the post cooling technique as a flexibility mechanism to increase the quality of the heat (i.e., temperature) recovered from the server rooms as much as possible without endangering the safe operation of the computing equipment.

The Flexibility Manager system was deployed in the pilot DC and used to compute the flexibility optimization actions. The DCIM and Utility Networks Integration components were configured to allow, on the one hand, the gathering of real-time measurements from the pilot and, on the other, the integration with the local thermal grid to obtain the heat demand and associated price used as the flexibility optimization driver. Using the historical monitored data stored in the Data Storage, the Heat DR Prediction forecasts the day-ahead energy demand and thermal flexibility of the DC. The Intra DC Energy Optimizer considers the heat prediction results and the heat demand to decide on an optimization action plan that contains post-cooling actions for specific intervals (i.e., changing the configuration of the bunker temperature alarm from 25 to 27 degrees). The plans were calculated and used to shift thermal energy flexibility in response to specific heat reuse requests. Upon plan validation, the actions were executed, including post-cooling of server rooms for more thermal flexibility by switching off refrigerating units GF1 and GF2 at specific time intervals.

The historical energy consumption data for the pilot DC are shown in Figure 11 (i.e., for the last 24 h), split into the three main flexibility components (i.e., real-time workload, delay-tolerant workload and cooling system). The DC energy consumption varies slightly around an average of 780 kWh.

The Heat/Cold DR Prediction Module computes the estimation of the thermal generation for the next day using historical energy consumption data. The prediction over the next 24 h corresponds to the energy production of the last 24 h due to the low variability of the DC energy consumption.

The Intra DC Energy Optimizer uses the Marketplace Connector to get the heat demand and reference prices for the next day. As can be seen in Figure 12, the price for heat is high during hours 7–14; however, this does not match the thermal energy generation profiles estimated by the prediction tool, which are almost constant during the day. Thus, the DC Optimizer computes an optimization plan to increase the DC thermal generation during the time interval when the heat price is high. This is done by leveraging two flexibility mechanisms: shifting delay-tolerant workload to increase the heat generation of the servers and post-cooling the server room to extract more heat when the prices are high. The optimization plan is shown in Figure 13, in which the left chart shows the baseline heat generation (in dark green) and the adapted heat generation (in light green). Due to server room post-cooling, the heat generation is increased during hours 8 and 9, enabling the DC to make extra profit by selling heat when the prices are high. The extra heat generated by the post-cooling mechanism is shown as the dark blue columns in the right chart in Figure 13.
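The price-driven choice of post-cooling intervals can be illustrated by ranking the hours of the day by heat price. This is a deliberately simplified sketch, not the optimizer’s actual logic: it ignores the thermal model of the server room, the function name is hypothetical, and the limit of two post-cooling hours is an assumption made only for the example.

```python
def pick_post_cooling_hours(heat_price, max_hours=2):
    """Return the indices of the hours with the highest heat price, in time order.

    max_hours caps how many hours post-cooling may be applied (assumed limit,
    standing in for the temperature constraints of the server room).
    """
    if max_hours < 0:
        raise ValueError("max_hours must be non-negative")
    # Rank hours by price, highest first; ties keep chronological order.
    ranked = sorted(range(len(heat_price)), key=lambda h: heat_price[h], reverse=True)
    return sorted(ranked[:max_hours])


if __name__ == "__main__":
    # Made-up price profile with a peak in hours 8 and 9.
    prices = [1.0] * 24
    prices[8] = 5.0
    prices[9] = 4.0
    print(pick_post_cooling_hours(prices))
```

A realistic plan would also have to check that the room temperature stays below the alarm threshold while the refrigerating units are switched off.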

Considering the execution of the day-ahead flexibility optimization plan, the metrics in Table 13 were calculated.

3.2.2. Scenario 4: Single DC Providing both Electrical Energy Flexibility and Heat

The goal of this experiment was to show how the pilot DC can operate at the crossroads of two energy networks (power and heat) and provide a combination of electrical and thermal flexibility. In this case, we leveraged the possibility of controlling the cooling system and postponing the execution of delay-tolerant workload as sources of flexibility. Using the monitored data stored in the Data Storage, the Heat DR Prediction and Electrical DR Prediction modules forecast the day-ahead energy demand, electrical energy flexibility and thermal flexibility of the DC (see Figure 14). The Intra DC Energy Optimizer considers the electrical and heat flexibility prediction results, together with the electrical flexibility request and the heat demand, to decide on an optimization action plan. As a result, the optimization action plans are calculated and used to shift both electrical and thermal energy flexibility to match specific flexibility requests.

We leveraged on both shifting delay tolerant workload actions and post-cooling of the server room to allow the DC to adapt both its electrical energy consumption and the thermal energy generation to match the request.

Figure 15 shows the DC optimization plan from the electrical energy flexibility perspective. The chart in the upper-left corner shows the DC baseline (in black), the electrical energy flexibility request with a peak between hours 15 and 20 (blue line) and the DC adapted profile, which closely matches the flexibility request (green line). The upper-right chart shows the DC energy consumption broken down into its components.
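
One simple way to quantify how closely an adapted profile follows a flexibility request is the mean absolute deviation between the two hourly curves. The sketch below is only an illustration under that assumption; it is not necessarily the indicator used in this work, and the profiles are hypothetical values rather than the measured data behind Figure 15.

```python
from typing import List


def mean_abs_deviation(profile: List[float], request: List[float]) -> float:
    """Average absolute hourly gap between a DC profile and the request."""
    if len(profile) != len(request) or not profile:
        raise ValueError("profiles must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(profile, request)) / len(profile)


# Hypothetical hourly values: the request peaks during hours 15-20.
baseline_profile = [100.0] * 24
request_profile = [130.0 if 15 <= h <= 20 else 100.0 for h in range(24)]
adapted_profile = [125.0 if 15 <= h <= 20 else 100.0 for h in range(24)]

gap_before = mean_abs_deviation(baseline_profile, request_profile)
gap_after = mean_abs_deviation(adapted_profile, request_profile)
```

A smaller value for `gap_after` than for `gap_before` indicates that the adapted profile tracks the request more closely than the baseline does.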

Figure 16 shows the same optimization plan from the thermal energy flexibility perspective. It shows the thermal flexibility of the DC and the generated heat that will be re-used in the local heat grid. The chart in the upper-left corner shows the baseline heat generation (dark green) and the adapted heat generation (light green). The effect of the post-cooling action on the DC heat generation is shown by the dark blue columns in the right chart.

For the day-ahead flexibility optimization plans in this experiment, the metrics in

Table 14 were calculated.
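
As a rough illustration of how a heat reuse indicator can be computed, the sketch below assumes the common Energy Reuse Factor definition, that is, the energy reused outside the DC divided by the total energy consumed by the DC. The definition adopted for the metrics in this work may differ; the function name and the sample values are hypothetical and are not taken from Table 14.

```python
def energy_reuse_factor(reused_energy_kwh: float,
                        total_dc_energy_kwh: float) -> float:
    """Share of the total DC energy that is reused (0 = none, 1 = all)."""
    if total_dc_energy_kwh <= 0:
        raise ValueError("total DC energy must be positive")
    if not 0 <= reused_energy_kwh <= total_dc_energy_kwh:
        raise ValueError("reused energy must lie between 0 and the total")
    return reused_energy_kwh / total_dc_energy_kwh


# Hypothetical daily values, for illustration only.
example_erf = energy_reuse_factor(reused_energy_kwh=480.0,
                                  total_dc_energy_kwh=1000.0)
```

Under this definition, a value close to 0.5 means that about half of the energy consumed by the DC is delivered for reuse.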

4. Conclusions

In this paper, we address DCs’ energy efficiency from the perspective of their optimal integration with utility networks such as electrical, heat and data networks. We describe innovative scenarios and ICT technology that allow DCs to shift electrical and thermal energy flexibility and to exploit workload migration inside a federation, obtaining primary energy savings and contributing to grid sustainability.

The technology and several of the proposed scenarios were validated in the context of two pilot DCs: a micro DC in Poznan, which has on-site renewable energy sources (RES), and a DC in Point Saint Martin. The first experiment, conducted on the Poznan micro DC, proved the possibility of using RES for DC energy flexibility. The PV system not only reduces grid power usage and CO2 emissions, but can also be considered a resource for energy flexibility services. By harnessing the energy storage, the DC can accumulate energy and use it when this is more profitable. The profitability can be driven either by Congestion Management services or by participation in DR.

The second experiment combined IT load migration with the availability of RES to increase the amount of energy flexibility and to find a trade-off between the flexibility level, QoS and the RES production level. As expected, migrating the load reduces CO2 emissions by opening possibilities for bolder power-saving actions at the DC originating the load. In this case, it is the effect of shutting down the unused nodes at one DC site and, at the other, taking advantage of RES together with temporarily postponing some of the running services. However, the amount of workload relocated affects the efficiency indicators in the opposite way. When the number of migrated VMs exceeds the computational capabilities of the destination DC, it may overload the system, thus inducing a less favorable exploitation of renewable energy. The key point is to find a balance between the power profile of the DC and the acceptance level for the external load. It is worth noting that IT load migration can serve not only as a source of energy flexibility but can also increase the overall energy efficiency of the federated system. In this way, it can match IT demands with time-varying on-site RES and implement a “follow the energy” approach.

In the Point Saint Martin DC, the first experiment shows how the DC can adapt its thermal energy profile in order to match specific heat re-use requests. The obtained results prove that heat recovery takes place even if the total facility energy is not being recovered for use within the DC boundaries. For efficient heat recovery, we considered the deployment of a heat pump; as a flexibility action, we used post-cooling of the server room by controlling the temperature set points and the cooling system. The second experiment shows that both the electrical and thermal energy profiles of the DC can be adapted to match specific flexibility requests. The small value of primary energy savings obtained indicates that the plan focuses on adapting the DC energy demand rather than reducing it, while the reuse factor indicates that almost half of the energy consumed by the DC will be further reused in nearby district heating systems.

Finally, to provide a guideline on the use of the proposed ICT technology and the applicability of the specific scenarios and flexibility actions,

Table 15 shows how they fit different DC types. We used a well-known classification of DCs according to their specific operation: collocation, cloud and High-Performance Computing (HPC). For each DC type, we highlighted the possibility of applying a specific scenario considering its resources and flexibility sources.

Generally, cloud DCs are suitable for most scenarios due to their flexibility, moderate utilization, and good control. Of course, these are general guidelines, and suitability depends strongly on the specific case, e.g., the software and technologies used, data center policies, customers’ requirements, etc. However, flexibility actions based on managing the workload are not well suited to collocation DCs, which do not have direct control over the servers and running workloads.
