Innovation Performance Indicators for Architecture, Engineering and Construction Organization

Abstract

1. Introduction

- (1) The industry largely favors traditional business procedures, as the actions of the parties in the industry are rigidly constrained by procurement arrangements and data are presented in paper documents [6].

- (2)

- (3) Because designers and contractors work under tight time and cost constraints, and to minimize the risk of building plans being rejected, the client, designer and contractors always prefer designs and construction that comply with prescriptive building codes rather than a performance-based approach [14].

2. Previous Studies

- (1) identifying and formulating evaluation indicators;

- (2) establishing methods to quantify and measure performance against those indicators.

- (1) an indicator is used to measure and indicate performance and success;

- (2) a metric is simply a judgement, whether a number or a qualitative description, within an indicator that helps track performance and progress.
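The distinction between an indicator and its metrics can be pictured as a container relationship. The following Python sketch is a hypothetical data model (the `Metric` and `Indicator` classes and the sample values are illustrative assumptions, not part of the study): an indicator groups one or more metrics, and each metric holds either a number or a qualitative description.

```python
from dataclasses import dataclass, field
from typing import List, Union

# A metric is a single judgement: either a number or a qualitative description.
MetricValue = Union[int, float, str]


@dataclass
class Metric:
    name: str
    value: MetricValue

    @property
    def is_quantitative(self) -> bool:
        # Numbers count as quantitative; text (and booleans) do not.
        return isinstance(self.value, (int, float)) and not isinstance(self.value, bool)


@dataclass
class Indicator:
    # An indicator measures and indicates performance; its metrics track it.
    name: str
    metrics: List[Metric] = field(default_factory=list)

    def quantitative_metrics(self) -> List[Metric]:
        return [m for m in self.metrics if m.is_quantitative]

    def qualitative_metrics(self) -> List[Metric]:
        return [m for m in self.metrics if not m.is_quantitative]


# Hypothetical values for an indicator named after the "Ideas and Knowledge" criterion.
ideas = Indicator(
    name="Ideas and Knowledge",
    metrics=[
        Metric("Idea pool (# of new ideas in the pipeline)", 12),
        Metric("Knowledge sharing sessions", "Good"),
    ],
)

print([m.name for m in ideas.quantitative_metrics()])
print([m.name for m in ideas.qualitative_metrics()])
```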

- (a) innovation performance evaluation is a complex process comprising a set of indicators;

- (b) the number of indicators selected for the assessment should be limited to between five and ten;

- (c) the evaluation should initially consider two components, namely efficiency (how things are carried out, referring to the input and process) and effectiveness (the capability of producing a desired output and outcome, referring to the output and outcome);

- (d) the measurement of an indicator should not be limited to quantitative figures; clear qualitative indicators that experts can easily understand should also be included.
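Principles (b) to (d) are concrete enough to be checked mechanically when an indicator set is proposed. The sketch below is one possible reading, not the authors' procedure: `CandidateIndicator` and `check_indicator_set` are hypothetical names, and the assignment of the sample indicators to the efficiency or effectiveness component, and their quantitative flags, are illustrative assumptions.

```python
from typing import Dict, List, NamedTuple


class CandidateIndicator(NamedTuple):
    name: str
    component: str      # "efficiency" (input/process) or "effectiveness" (output/outcome)
    quantitative: bool  # False for ordinal or descriptive measures


def check_indicator_set(indicators: List[CandidateIndicator]) -> Dict[str, bool]:
    """Check a proposed indicator set against principles (b) to (d)."""
    components = {ind.component for ind in indicators}
    return {
        # (b) between five and ten indicators
        "size_5_to_10": 5 <= len(indicators) <= 10,
        # (c) both components are represented
        "covers_efficiency": "efficiency" in components,
        "covers_effectiveness": "effectiveness" in components,
        # (d) not purely quantitative: at least one qualitative indicator
        "has_qualitative": any(not ind.quantitative for ind in indicators),
    }


# Illustrative proposal; components and quantitative flags are assumptions.
proposal = [
    CandidateIndicator("Innovation Process", "efficiency", False),
    CandidateIndicator("Ideas and Knowledge", "efficiency", False),
    CandidateIndicator("Engagement", "efficiency", True),
    CandidateIndicator("Quality of Ideas", "effectiveness", True),
    CandidateIndicator("Return on Investment", "effectiveness", True),
    CandidateIndicator("Impact", "effectiveness", False),
]

print(check_indicator_set(proposal))
```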

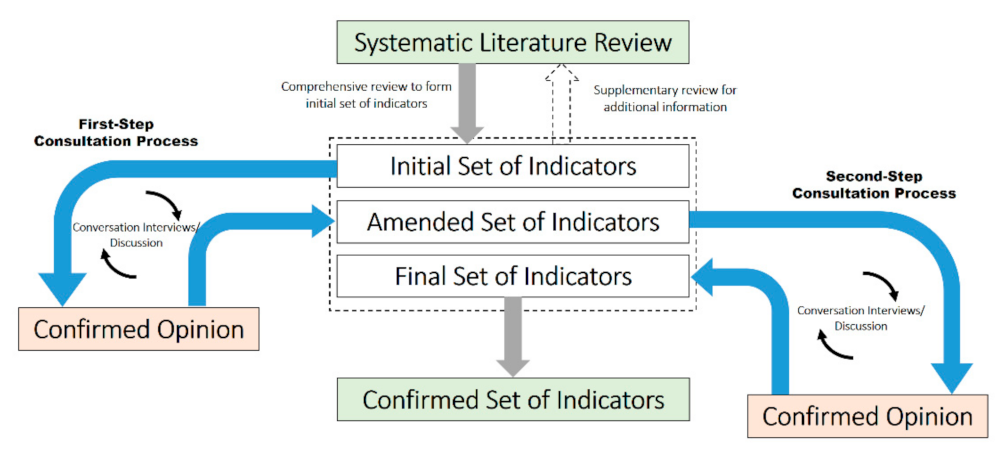

3. Approach and Methodology

4. Information Collection and Triangulation Approach

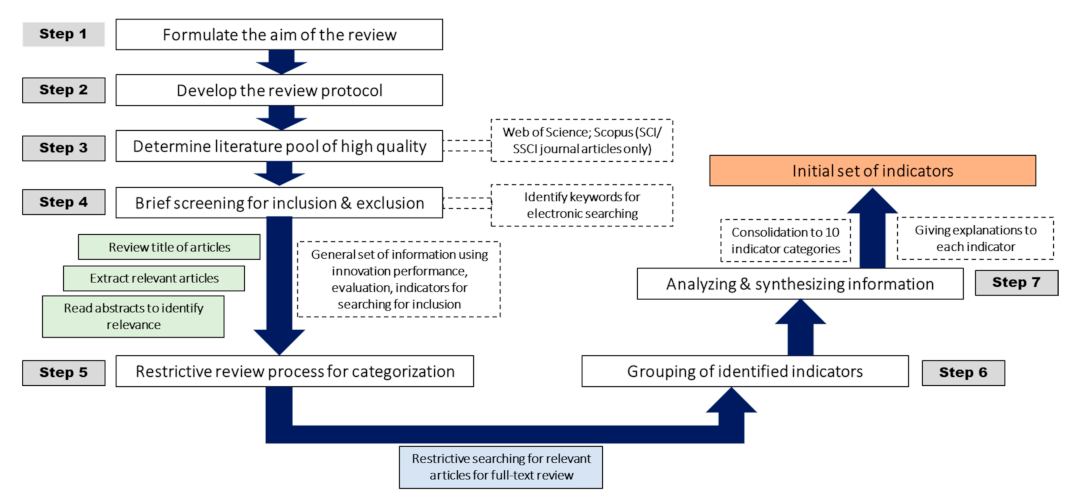

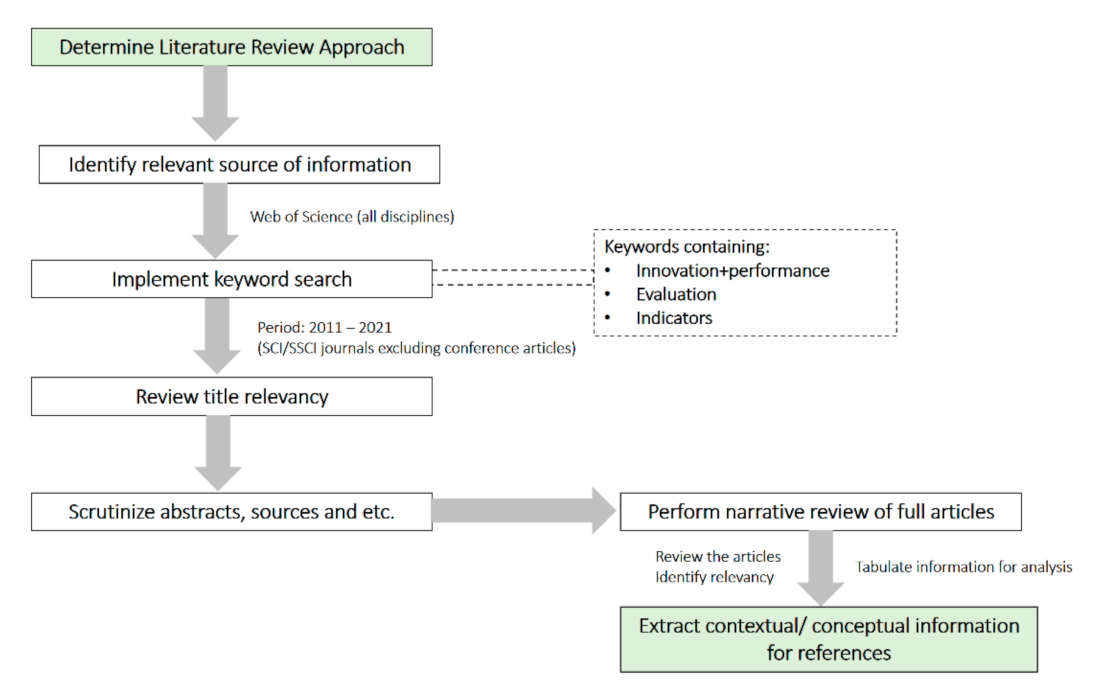

4.1. Systematic Literature Review

- (1) to adopt a restrictive search approach by applying the keyword combinations “innovation + performance + indicators” and “innovation + performance + measure” to the list of articles compiled in Step 4;

- (2) to obtain the selected articles for a full-text review;

- (3) to review the full text of the new set of articles and extract the “indicators” presented or mentioned in them.
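Step (1) can be sketched as a conjunctive keyword filter. This is a minimal illustration under stated assumptions: the study does not specify which fields were searched, so the sketch assumes a case-insensitive substring match on title and abstract, and `restrictive_filter` is a hypothetical helper. The sample records reuse three titles from the reference list.

```python
from typing import Dict, List, Sequence, Tuple

# Keyword combinations from step (1); every term in a combination must appear.
KEYWORD_SETS: Tuple[Sequence[str], ...] = (
    ("innovation", "performance", "indicators"),
    ("innovation", "performance", "measure"),
)


def matches(text: str, terms: Sequence[str]) -> bool:
    """Case-insensitive substring test for all terms ("measure" also hits "measurement")."""
    lowered = text.lower()
    return all(term in lowered for term in terms)


def restrictive_filter(articles: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Keep articles whose title or abstract satisfies at least one keyword combination."""
    selected = []
    for article in articles:
        text = f"{article.get('title', '')} {article.get('abstract', '')}"
        if any(matches(text, terms) for terms in KEYWORD_SETS):
            selected.append(article)
    return selected


# Sample records: titles taken from the reference list, abstracts omitted.
articles = [
    {"title": "Innovation performance measurement: Current practices, issues and management challenges"},
    {"title": "Innovation indicators throughout the innovation process: An extensive literature analysis"},
    {"title": "Knowledge base combinations and firm growth"},
]

for article in restrictive_filter(articles):
    print(article["title"])
```

Only the first title passes: the second lacks "performance" and the third matches no term set. Plain substring matching treats "measurement" as a hit but not "measuring"; whether the original search behaved this way is not stated.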

- (1) to assign a name to each indicator category;

- (2) to classify each indicator into the relevant indicator category and provide a detailed description of each indicator.
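The two classification steps amount to building a catalogue keyed by category name. The sketch assumes each extracted indicator has already been tagged with a category and a description; the sample rows reuse metric names and remarks from Appendix B, and `group_by_category` is a hypothetical helper.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# (indicator, category, description) rows, as produced by the full-text review.
# The sample rows reuse metric names and remarks from Appendix B.
extracted: List[Tuple[str, str, str]] = [
    ("Knowledge sharing sessions", "Ideas and Knowledge",
     "e.g., meetings, seminars, forums, courses, online chatting"),
    ("Incentive schemes", "Innovation Process", "Incentive schemes"),
    ("% of piloted ideas", "Quality of Ideas",
     "# of piloted ideas/# of innovative ideas generated"),
    ("Idea pool", "Ideas and Knowledge",
     "# of new ideas considered valuable but not yet piloted"),
]


def group_by_category(rows: List[Tuple[str, str, str]]) -> Dict[str, List[Dict[str, str]]]:
    """Place each indicator, with its description, under its named category."""
    catalogue: Dict[str, List[Dict[str, str]]] = defaultdict(list)
    for indicator, category, description in rows:
        catalogue[category].append({"indicator": indicator, "description": description})
    return dict(catalogue)


catalogue = group_by_category(extracted)
for category in sorted(catalogue):
    print(category, [item["indicator"] for item in catalogue[category]])
```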

- (1) cover critical issues;

- (2) be simple and clear to all parties;

- (3) not rely on complex or challenging data collection;

- (4) be understandable and reliable;

- (5) be easy to interpret and evaluate;

- (6) be presentable on a scale for use in executive decision-making.
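One way to apply the six criteria is as a pass/fail checklist per candidate indicator. In this hypothetical sketch, a candidate is retained only if it satisfies all six criteria, and a missing judgement counts as a failure; both rules, the criterion keys and the second candidate are assumptions made for illustration.

```python
from typing import Dict, List

# Keys for the six criteria listed above, in the same order (names are hypothetical).
CRITERIA = (
    "covers_critical_issues",   # (1)
    "simple_and_clear",         # (2)
    "simple_data_collection",   # (3)
    "understood_and_reliable",  # (4)
    "easy_to_interpret",        # (5)
    "presentable_on_scale",     # (6)
)


def failed_criteria(judgements: Dict[str, bool]) -> List[str]:
    """Criteria the indicator does not meet; a missing judgement counts as a failure."""
    return [c for c in CRITERIA if not judgements.get(c, False)]


def screen(candidates: Dict[str, Dict[str, bool]]) -> Dict[str, List[str]]:
    """Map each candidate indicator to its failed criteria (empty list = retained)."""
    return {name: failed_criteria(j) for name, j in candidates.items()}


candidates = {
    "% of piloted ideas": dict.fromkeys(CRITERIA, True),
    # Hypothetical candidate whose data would need a complex survey to collect.
    "Hypothetical survey-based index": {
        **dict.fromkeys(CRITERIA, True),
        "simple_data_collection": False,
    },
}

results = screen(candidates)
retained = [name for name, failures in results.items() if not failures]
print(retained)
print(results["Hypothetical survey-based index"])
```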

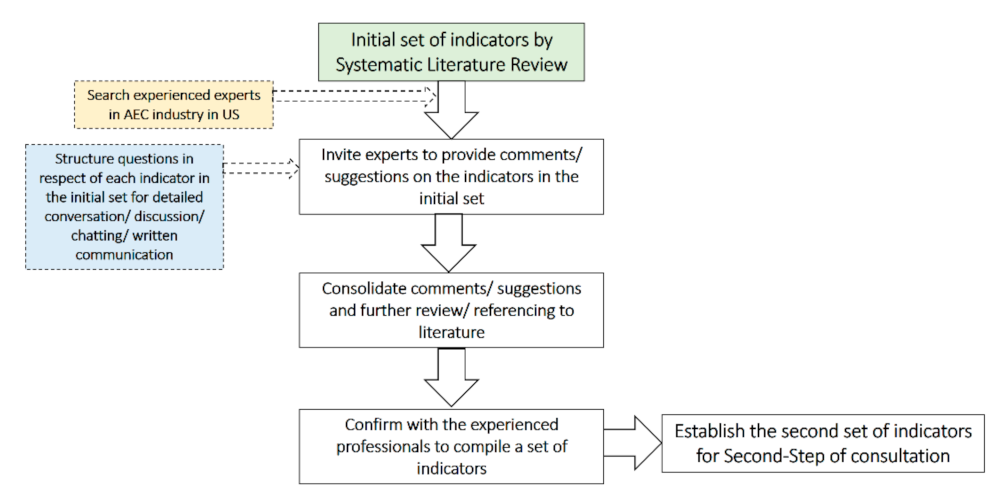

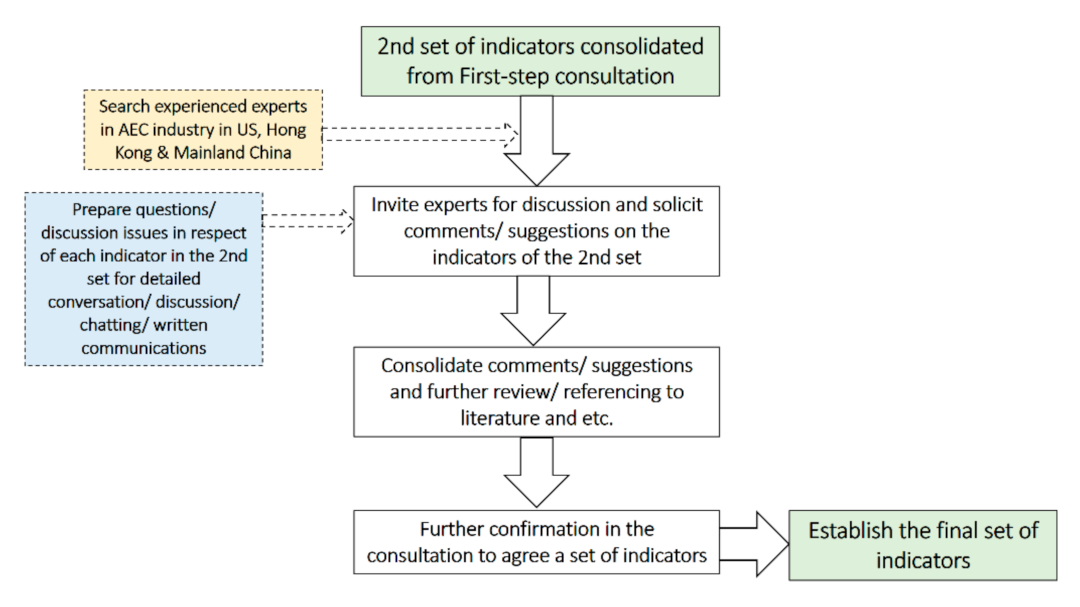

4.2. Second Stage Two-Step Consultation Process

5. Discussions

Limitations and Future Works

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| AEC Firms | Key Innovation Commitment Described in the Annual Reports | Innovation Specially Mentioned in the Annual Reports | Innovation Highlights |
|---|---|---|---|
| AECOM (Reports from 2008–2020) | Being passionate about problem solving: “we embrace our clients’ biggest challenges—combining collaboration with innovation to deliver transformational results”. | Investing in technologies at the forefront of the AEC industry that create value across the entire project life cycle. | |
| Bechtel (Reports from 2009–2020) | Directing tens of millions of dollars toward investments in innovation to realize both incremental and disruptive improvements in project delivery and cost competitiveness. | | |
| Jacobs Engineering (Reports from 2007–2020) | Company strategy: to build a High Performance Culture, i.e., to reinforce a culture of accountability, inspirational leadership and innovation that will drive long-term outperformance. | | |
| Fluor (Reports from 1998–2020) | Investing continuously in the innovation that differentiates the company from its competitors and enables the company to deliver the capital-efficient solutions that clients demand. | The company maintains a controlling interest in NuScale Power LLC, whose operations are primarily research and development. The following are designs by NuScale: | |
| Arcadis (Reports from 2014–2020) | | | |
| DPR Construction (SWOT reports from 2009–2019/online information) | | | |
| Gensler (Annual reports from 2014–2020) | | | |
| Brady Construction (Annual reports from 2001–2016) | | | |
| Stantec (Annual reports from 2011–2020) | | | |
| Tetra Tech, Inc. (Annual reports from 2000–2020) | | | |

Appendix B

| Innovation Criteria | Key Metrics | Remarks & Examples | Unit of Measure |
|---|---|---|---|
| Durability | Durability | Very Poor: very sensitive to changes in the market and may easily be replaced within a very short period of time. Very Good: extremely durable and anticipated to stay unless work is disrupted. | Ordinal scale |
| Employee Creativity | Creativity of teams | | Ordinal scale |
| Engagement | % of employees involved in idea generation | # of employees involved in idea generation/Total # of employees | % |
| | Culture that fosters and supports innovation | Firm’s culture in terms of its support for innovation: How much time do managers spend on innovation compared with normal tasks? Is the firm tolerant of risk and failure? Is the firm oriented toward the future market? | Ordinal scale |
| Ideas and Knowledge | Knowledge sharing sessions | e.g., meetings, seminars, forums, courses, online chatting, etc. | Ordinal scale |
| | Idea pool (# of new ideas in the pipeline) | # of new ideas that are considered valuable but have not yet started piloting | Numbers |
| | Extent of firm support for employees’ continuing development | Subsidizing work-related courses (online, formal, informal, etc.); providing internal training sessions by senior employees, senior site management, external parties, etc. | Ordinal scale |
| Impact | Impact on the firm’s image | Minimal impact on the firm’s image. Greatly enhances the firm’s image and branding power, so that customers think of the firm first when seeking a certain kind of service. | Ordinal scale |
| Innovation Process | Incentive schemes | Incentive schemes | Ordinal scale |
| | # of innovative ideas generated | # of innovative ideas generated | Numbers |
| | Vetting process of innovative ideas (potential for enhancement and implementation) | No standard or protocol on how innovative ideas are selected. A well-designed and organized process with clear rubrics and respected judges for selecting innovative ideas to pilot. | Ordinal scale |
| Quality of Ideas | % of piloted ideas | # of piloted ideas/# of innovative ideas generated | % |
| | Diffusion rate of new ideas in the firm | # of innovative ideas implemented across the firm/# of innovative ideas generated | % |
| Return on Investment | Enhancement in quality performance | % increase in quality | % |
| | Enhancement in communication performance | % increase in communication efficiency | % |
| | Enhancement in productivity | % increase in productivity | % |
| | Enhancement in cost performance | % decrease in cost/budget | % |
| | Enhancement in time performance | % decrease in time spent | % |
| Strategic Alignment | Management-level attention to innovation | No attention from management to innovation and no support for innovative activities. Strong, enthusiastic, accountable, dedicated, supportive and attentive management commitment to innovation. | Ordinal scale |
| | % of investment in R&D/revenue | Investment in R&D/Total revenue | % |
| | % of strategies/policies regarding innovation | # of innovation-related strategies/policies/Total # of strategies and policies | % |
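Most quantitative metrics in Appendix B are either ratios or relative changes. The sketch below computes them; the input figures are invented for illustration, and the intermediate labels of the five-point ordinal scale are an assumption, since the table names only the end points (Very Poor and Very Good) for Durability.

```python
from typing import Dict

# Assumed five-point ordinal scale: Appendix B names only the end points
# ("Very Poor", "Very Good"); the middle labels are hypothetical.
ORDINAL_SCALE: Dict[str, int] = {
    "Very Poor": 1,
    "Poor": 2,
    "Fair": 3,
    "Good": 4,
    "Very Good": 5,
}


def ratio_pct(numerator: float, denominator: float) -> float:
    """Ratio metrics, e.g. # of piloted ideas/# of innovative ideas generated, in %."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return 100.0 * numerator / denominator


def pct_increase(before: float, after: float) -> float:
    """Relative increase in %, e.g. for quality or productivity."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (after - before) / before


def pct_decrease(before: float, after: float) -> float:
    """Relative decrease in %, e.g. for cost/budget or time spent."""
    if before <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (before - after) / before


# Invented figures for one reporting period.
metrics = {
    "% of employees involved in idea generation": ratio_pct(40, 200),
    "% of piloted ideas": ratio_pct(6, 30),
    "Diffusion rate of new ideas in the firm": ratio_pct(3, 30),
    "% of investment in R&D/revenue": ratio_pct(2.5, 250),
    "Enhancement in productivity": pct_increase(50, 55),
    "Enhancement in cost performance": pct_decrease(1_000_000, 920_000),
    "Enhancement in time performance": pct_decrease(120, 108),
    "Durability": ORDINAL_SCALE["Good"],
}

for name, value in metrics.items():
    print(f"{name}: {value}")
```

Cost and time use a separate `pct_decrease` so that, as in the table, a positive value always signals an improvement.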

References

- Collison, C. Knowledge Management; Computer Press: Brno, Czech Republic, 2005.

- Hamel, G.; Green, B. The Future of Management; Harvard Business School Press: Boston, MA, USA, 2007.

- Bartes, F. Competitive Intelligence. In Management, Economics and Business Development in European Conditions; Brno University of Technology: Brno, Czech Republic, 2009; ISBN 978-80-214-3893-4.

- Tushman, M.; Nadler, D. Organizing for Innovation. Calif. Manag. Rev. 1986, 28, 74–92.

- Boston Consulting Group for the World Economic Forum (2017). Shaping the Future of Construction: Inspiring Innovators Redefine the Industry. Available online: http://www3.weforum.org/docs/WEF_Shaping_the_Future_of_Construction_Inspiring_Innovators_redefine_the_industry_2017.pdf (accessed on 10 August 2020).

- Teicholz, P. Labor-Productivity Declines in the Construction Industry: Causes and Remedies (Another Look). AECbytes Viewpoint. 2013. Available online: http://www.aecbytes.com/viewpoint/2013/issue_67.html (accessed on 18 November 2013).

- Kunz, J.; Fischer, M. Virtual Design and Construction: Themes, Case Studies and Implementation Suggestions; CIFE Working Paper #097; Department of Civil & Environmental Engineering, Stanford University: Stanford, CA, USA, 2012.

- Mandujano, M.G.; Mourgues, C.; Alarcon, L.F.; Kunz, J. Modeling virtual design and construction implementation strategies considering lean management impacts. Comput. Aided Civ. Infrastruct. Eng. 2017, 32, 930–951.

- Woudhuysen, J.; Abley, I. Why Is Construction So Backward? Wiley Academy: Chichester, UK, 2004.

- Abadi, A. A Study of Innovation Perception within the Construction Industry. Ph.D. Thesis, University of Manchester, Manchester, UK, 2014.

- National Endowment for the Arts. 2007 Annual Report. Available online: https://www.arts.gov/sites/default/files/AR2007.pdf (accessed on 10 May 2021).

- National Civic Art Society. Americans’ Preferred Architecture for Federal Buildings. 2020. Available online: https://static1.squarespace.com/static/59bfe5dbf14aa1b6bbb12cd0/t/5f845dfda65e566a0e0a8d32/1602510358640/Americans%27-Preferred-Architecture-for-Federal-Buildings-National-Civic-Art-Society-Harris-Poll-Survey.pdf (accessed on 10 May 2021).

- Dom’s Plan B Blog. 2018. Available online: https://domz60.wordpress.com/tag/popular/ (accessed on 10 May 2021).

- Lo, S.M.; Lam, K.C.; Fang, Z. An investigation on the building officials’ perception for the use of performance based fire engineering approach in building design. Fire Technol. 2002, 38, 271–286.

- Kabanoff, B.; Brown, S. Knowledge structures of prospectors, analyzers and defenders: Content, structure, stability and performance. Strateg. Manag. J. 2008, 29, 149–171.

- Popova, V.; Sharpanskykh, A. Modeling organizational performance indicators. Inf. Syst. 2010, 35, 505–527.

- Grigoroudis, E.; Orfanoudaki, E.; Zopounidis, C. Strategic performance measurement in a healthcare organisation: A multiple criteria approach based on balanced scorecard. Omega 2012, 40, 104–119.

- Kompalla, A.; Buchmuller, M.; Heinemann, B.; Kopia, J. Performance measurement of management system standards using the balanced scorecard. Amfiteatru Econ. 2017, 19, 981–1002.

- Adams, R.; Bessant, J.; Phelps, R. Innovation management measurement: A review. Int. J. Manag. Rev. 2006, 8, 21–47.

- Crossan, M.M.; Apaydin, M. A multi-dimensional framework of organizational innovation: A systematic review of the literature. J. Manag. Stud. 2010, 47, 1154–1191.

- Subramanian, A.; Nilakanta, S. Organizational innovativeness: Exploring the relationship between organizational determinants of innovation, types of innovations, and measures of organizational performance. Omega 1996, 24, 631–647.

- de Jong, M.; Marston, N.; Roth, E.; van Biljon, P. The Eight Essentials of Innovation Performance; McKinsey & Company: New York, NY, USA, 2013.

- Roszko-Wójtowicz, E.; Białek, J. A multivariate approach in measuring innovation performance. J. Econ. Bus. 2016, 34, 443–479.

- Serrano-Bedia, A.M.; López-Fernández, M.C.; García-Piqueres, G. Complementarity between innovation knowledge sources: Does the innovation performance measure matter? Bus. Res. Q. 2018, 21, 53–67.

- Grillitsch, M.; Schubert, T.; Srholec, M. Knowledge base combinations and firm growth. Res. Policy 2019, 48, 234–247.

- Brenner, T.; Broekel, T. Methodological issues in measuring innovation performance of spatial units. Ind. Innov. 2011, 18, 7–37.

- Ghazinoory, S.; Riahi, P.; Azar, A.; Miremadi, T. Measuring innovation performance of developing regions: Learning and catch-up in provinces of Iran. Technol. Econ. Dev. Econ. 2014, 20, 507–533.

- McKinsey Report 2016. Imagining Construction’s Digital Future. Available online: https://www.mckinsey.com/business-functions/operations/our-insights/imagining-constructions-digital-future (accessed on 10 May 2021).

- Svandova, E.; Jirásek, M. On measuring countries’ innovation performance: Organisational level perspective. Acta Univ. Agric. Silvic. Mendel. Brun. 2019, 67, 871–881.

- Carrillo, M. Measuring and ranking R&D performance at the country level. Econ. Sociol. 2019, 12, 100–114.

- The Next Normal in Construction; McKinsey Report 2020; McKinsey & Company: New York, NY, USA, 2020; Available online: https://www.mckinsey.com/~/media/McKinsey/Industries/Capital%20Projects%20and%20Infrastructure/Our%20Insights/The%20next%20normal%20in%20construction/The-next-normal-in-construction.pdf (accessed on 10 May 2021).

- Zawawi, N.F.M.; Wahab, S.A.; Al-Mamun, A.; Yaacob, A.S.; NKSamy Fazal, S.A. Defining the concept of innovation and firm innovativeness: A critical analysis from resource-based view perspective. Int. J. Bus. Manag. 2016, 11, 87–94.

- Siksnelyte-Butkiene, I.; Streimikiene, D.; Balezentis, T.; Skulskis, V. A systematic literature review of multi-criteria decision-making methods for sustainable selection of insulation materials in buildings. Sustainability 2021, 13, 737.

- Li, Y.F.; Song, Y.; Wang, J.X.; Li, C.W. Intellectual capital, knowledge sharing, and innovation performance: Evidence from the Chinese construction industry. Sustainability 2019, 11, 2713.

- Zheng, J.W.; Wu, G.D.; Xie, H.T. Impacts of leadership on project-based organizational innovation performance: The mediator of knowledge sharing and moderator of social capital. Sustainability 2017, 9, 1893.

- Wang, Q.; Zhao, L.W.; Chang-Richards, A.; Zhang, Y.Y.; Li, H.J. Understanding the impact of social capital on the innovation performance of construction enterprises: Based on the mediating effect of knowledge transfer. Sustainability 2021, 13, 5099.

- Kim, D.G.; Choi, S.O. Impact of construction IT technology convergence innovation on business performance. Sustainability 2018, 10, 3972.

- Xue, X.L.; Zhang, R.X.; Wang, L.; Fan, H.Q.; Yang, R.J.; Dai, J. Collaborative innovation in construction project: A social network perspective. KSCE J. Civ. Eng. 2018, 22, 417–427.

- Chen, T.; Huang, G.Q.; Olanipekun, A.O. Simulating the evolution mechanism of inner innovation in large-scale construction enterprise with an improved NK model. Sustainability 2018, 10, 4221.

- Ozorhon, B. Analysis of construction innovation process at project level. J. Manag. Eng. 2013, 29, 455–463.

- Lai, X.D.; Liu, J.X.; Georgiev, G. Low carbon technology integration innovation assessment index review based on rough set theory—An evidence from construction industry in China. J. Clean. Prod. 2016, 126, 88–96.

- Le, Y.; Wan, J.Y.; Wang, G.; Bai, J.; Zhang, B. Exploring the missing link between top management team characteristics and megaproject performance. Eng. Constr. Archit. Manag. 2020, 27, 1039–1064.

- Hartmann, T.; Levitt, R.E. Understanding and managing three-dimensional/four-dimensional model implementations at the project team level. ASCE J. Constr. Eng. Manag. 2010, 136, 757–767.

- Cao, D.P.; Li, H.; Wang, G.B.; Luo, X.C.; Yang, X.C.; Tan, D. Dynamics of project-based collaborative networks for BIM implementation: Analysis based on stochastic actor-oriented models. J. Manag. Eng. 2017, 33, 04016055.

- Miterev, M.; Mancini, M.; Turner, R. Towards a design for the project-based organization. Int. J. Proj. Manag. 2017, 35, 479–491.

- Taylor, J.E.; Levitt, R.E. Understanding and Managing Systemic Innovation in Project-Based Industries; Global Projects Center, Stanford University: Stanford, CA, USA, 2004; Available online: https://gpc.stanford.edu/sites/default/files/taylorlevitt2004_0.pdf (accessed on 10 May 2021).

- Nasrallah, W.; Levitt, R.; Glynn, P. Interaction value analysis: When structured communication benefits organizations. Organ. Sci. 2003, 14, 541–557.

- Wei, Y.H.; Miraglia, S. Organizational culture and knowledge transfer in project-based organizations: Theoretical insights from a Chinese construction organization. Int. J. Proj. Manag. 2017, 35, 571–585.

- The KPI Working Group. KPI Report for the Minister for Construction; Department of the Environment, Transport and the Regions: London, UK, 2000.

- Cruz-Cázares, C.; Bayona-Sáez, C.; García-Marco, T. You can’t manage right what you can’t measure well: Technological innovation efficiency. Res. Policy 2013, 42, 1239–1250.

- Yu, A.Y.; Shi, Y.; You, J.X.; Zhu, J. Innovation performance evaluation for high-tech companies using a dynamic network data envelopment analysis approach. Eur. J. Oper. Res. 2021, 292, 199–212.

- European Commission. Science, Research and Innovation Performance of the EU. Directorate-General for Research and Innovation, European Commission. Available online: https://op.europa.eu/s/pBoz (accessed on 11 August 2021).

- Gault, F. Defining and measuring innovation in all sectors of the economy. Res. Policy 2018, 47, 617–622.

- Birchall, D.; Chanaron, J.; Tovstiga, G.; Hillenbrand, C. Innovation performance measurement: Current practices, issues and management challenges. Int. J. Technol. Manag. 2011, 56, 1–20.

- Henttonen, K.; Ojanen, V.; Puumalainen, K. Searching for appropriate performance measures for innovation and development projects. R&D Manag. 2016, 46, 914–927.

- Schwartz, L.; Miller, R.; Plummer, D.; Fusfeld, A.R. Measuring the effectiveness of R&D. Res. Technol. Manag. 2011, 54, 29–36.

- Chobotová, M.; Rylková, Ž. Measurement of innovation performance. Int. J. Econ. Manag. Eng. 2014, 8, 2085–2090.

- Neely, A.; Hii, J. Innovation and Business Performance: A Literature Review; Report for the Judge Institute of Management Studies; University of Cambridge: Cambridge, UK, 1998.

- Werner, B.M.; Souder, W.E. Measuring R&D performance—State of the art. Res. Technol. Manag. 1997, 38, 22–39.

- Rogers, E.M. Diffusion of Innovations, 5th ed.; Free Press: New York, NY, USA, 2003.

- Taques, F.H.; López, M.G.; Basso, L.F.; Areal, N. Indicators used to measure service innovation and manufacturing innovation. J. Innov. Knowl. 2021, 6, 11–26.

- Walker, R.M.; Chen, J.Y.; Aravind, D. Management innovation and firm performance: An integration of research findings. Eur. Manag. J. 2015, 33, 407–422.

- BCG. Innovation 2007: A BCG Senior Management Survey; The Boston Consulting Group: Boston, MA, USA, 2007.

- Calantone, R.J.; Harmancioglu, N.; Droge, C. Inconclusive innovation “Returns”: A meta-analysis of research on innovation in new product development. J. Prod. Innov. Manag. 2010, 27, 1065–1081.

- Chen, J.; Damanpour, F.; Reilly, R.R. Understanding antecedents of new product development speed: A meta-analysis. J. Oper. Manag. 2010, 28, 17–33.

- Silva, J.J.; Cirani, C.B.S. The capability of organizational innovation: Systematic review of literature and research proposals. Gestão Produção 2020, 27, e4819.

- Sears, G.J.; Baba, V.V. Toward a multi-stage, multi-level theory of innovation. Can. J. Adm. Sci. 2011, 28, 357–372.

- Lame, G. Systematic Literature Reviews: An Introduction. In Proceedings of the 22nd International Conference on Engineering Design (ICED19), Delft, The Netherlands, 5–8 August 2019.

- Xiao, Y.; Watson, M. Guidance on conducting a systematic literature review. J. Plan. Educ. Res. 2019, 39, 93–112.

- Carter, N.; Bryant-Lukosius, D.; DiCenso, A.; Blythe, J.; Neville, A.J. The use of triangulation in qualitative research. Oncol. Nurs. Forum 2014, 41, 545–547.

- Meijer, P.C.; Verloop, N.; Beijaard, D. Multi-method triangulation in a qualitative study on teachers’ practical knowledge: An attempt to increase internal validity. Qual. Quant. 2002, 36, 145–167.

- Risjord, M.W.; Dunbar, S.B.; Moloney, M.F. A new foundation for methodological triangulation. J. Nurs. Scholarsh. 2002, 34, 269–275.

- Kitchenham, B.; Brereton, O.P.; Budgen, D.; Turner, M.; Bailey, J.; Linkman, S. Systematic literature reviews in software engineering–A systematic literature review. Inf. Softw. Technol. 2009, 51, 7–15.

- Kitchenham, B.; Brereton, O.P. A systematic review of systematic review process research in software engineering. Inf. Softw. Technol. 2013, 55, 2049–2075.

- Purssell, E.; McCrae, N. How to Perform a Systematic Literature Review; Springer: Berlin/Heidelberg, Germany, 2020; ISBN 978-3-030-49671-5.

- Nankervis, A.R.; Compton, R. Performance management: Theory in practice? Asia Pac. J. Hum. Resour. 2006, 44, 83–101.

- Dijk, D.V.; Schodl, M.M. Performance Appraisal and Evaluation. In International Encyclopedia of the Social & Behavioral Sciences, 2nd ed.; Wright, J., Ed.; Elsevier: Amsterdam, The Netherlands, 2015; pp. 716–721.

- Hong, J.; Liao, Y.; Zhang, Y.; Yu, Z. The effect of supply chain quality management practices and capabilities on operational and innovation performance: Evidence from Chinese manufacturers. Int. J. Prod. Econ. 2019, 212, 227–235.

- Okoli, C.; Schabram, K. A guide to conducting a systematic literature review of information systems research. Sprouts Work. Pap. Inf. Syst. 2010, 10.

- Brereton, P.; Kitchenham, B.A.; Budgen, D.; Turner, M.; Khalil, M. Lessons from applying the systematic literature review process within the software engineering domain. J. Syst. Softw. 2007, 80, 571–583.

- Gates, S. Review of methodology of quantitative reviews using meta-analysis in ecology. J. Anim. Ecol. 2002, 71, 547–557.

- Gomersall, J.S.; Jadotte, Y.T.; Xue, Y.F.; Lockwood, S.; Riddle, D.; Preda, A. Conducting systematic reviews of economic evaluations. Int. J. Evid. Based Healthc. 2015, 13, 170–178.

- Nazim, A.S.; Young, H.C.; Ali, N.M. Determining the quality of publications and research for tenure or promotion decisions. Libr. Rev. 1996, 45, 39–53.

- Dixon-Woods, M.; Agarwal, S.; Jones, D.; Young, B.; Sutton, A. Synthesizing qualitative and quantitative evidence: A review of possible methods. J. Health Serv. Res. Policy 2005, 10, 45–53.

- Chen, F.; Zhao, T.; Liao, Z. The impact of technology-environmental innovation on CO2 emissions in China’s transportation sector. Environ. Sci. Pollut. Res. 2020, 27, 29485–29501.

- Dziallas, M.; Blind, K. Innovation indicators throughout the innovation process: An extensive literature analysis. Technovation 2019, 80, 3–29.

- Piro, F.N. The R&D composition of European countries: Concentrated versus dispersed profiles. Scientometrics 2019, 119, 1095–1119.

- Brogi, S.; Menichini, T. Do the ISO 14001 Environmental management systems influence eco-innovation performance? Evidences from the EU context. Eur. J. Sustain. Dev. 2019, 8, 292–303.

- Popadic, I.; Borocki, J.; Radisic, M.; Stefanic, I.; Duspara, L. The challenges while measuring enterprise innovative activities—the case from a developing country. Teh. Vjesn. Tech. Gaz. 2018, 25, 452–459.

- Garcia-Granero, E.M.; Piedra-Munoz, L.; Galdeano-Gomez, E. Eco-innovation measurement: A review of firm performance indicators. J. Clean. Prod. 2018, 191, 304–317.

- Mehta, S. National innovation system of India: An empirical analysis. Millenn. Asia 2018, 9, 203–224.

- Nwachukwu, C.; Chladkova, H.; Fadeyi, O. Strategy formulation process and innovation performance nexus. Int. J. Qual. Res. 2018, 12, 147–164.

- Mazur, K.; Inkow, M. Methodological aspects of innovation performance measurement in the IT sector. Management 2017, 21, 14–27.

- Ocnarescu, I.; Bouchard, C. Memorable projects and aesthetic experiences in an industrial R&D lab. Soc. Bus. Rev. 2017, 12, 285–301.

- Moagar-Poladian, S.; Folea, V.; Paunica, M. Competitiveness of EU member states in attracting EU funding for research and innovation. Rom. J. Econ. Forecast. 2017, 20, 150–167.

- Plewa, M. Long-run dynamics between product life cycle length and innovation performance in manufacturing. Int. J. Innov. Manag. 2017, 21, 1750006.

- Noktehdan, M.; Shahbazpour, M.; Wilkinson, S. Driving innovative thinking in the New Zealand construction industry. Buildings 2015, 5, 297–309.

- Cheng, C.C.J.; Huizingh, E.K.R.E. When is open innovation beneficial? The role of strategic orientation. J. Prod. Innov. Manag. 2014, 31, 1235–1253.

- Price Waterhouse Coopers. Guide to Key Performance Indicators; Connected Thinking; Price Waterhouse Coopers LLP: London, UK, 2007.

- Manoochehri, G. Measuring innovation: Challenges and best practices. Calif. J. Oper. Manag. 2010, 8, 67–73.

- Patton, M.Q. Enhancing the quality and credibility of qualitative analysis. HSR 1999, 34, 1189–1208.

- Patton, M.Q. Qualitative Research & Evaluation Methods: Integrating Theory and Practice, 4th ed.; SAGE: Thousand Oaks, CA, USA, 2015.

- Ashour, M. Triangulation as a powerful methodological research technique in technology-based services. Bus. Manag. Stud. Int. J. 2018, 6, 193–208.

- Edwards, W. How to use multiattribute utility measurement for social decisionmaking. IEEE Trans. Syst. Man Cybern. 1977, 7, 326–340.

- Olson, D.L. SMART. In Decision Aids for Selection Problems; Springer: New York, NY, USA, 1996; pp. 34–48.

- Risawandi, R.R. Study of the simple multi-attribute rating technique for decision support. Decis. Mak. 2016, 4, C4.

- Conejar, R.J.; Kim, H.K. A medical decision support system (DSS) for ubiquitous healthcare diagnosis system. Int. J. Softw. Eng. Appl. 2014, 8, 237–244.

- Kasie, F.M. Combining simple multiple attribute rating technique and analytical hierarchy process for designing multi-criteria performance measurement framework. Glob. J. Res. Eng. 2013, 13, 15–29.

- Taylor, J.M., Jr.; Love, B.N. Simple multi-attribute rating technique for renewable energy deployment decisions (SMART REDD). J. Def. Model. Simul. 2014, 11, 227–232.

- Amato, F.; Casola, V.; Esposito, M.; Mazzeo, A.; Mazzocca, N. A smart decision support systems based on a fast classifier and a semantic post reasoner. Int. J. Syst. Syst. Eng. 2013, 4, 317–336.

- Fitriani, N.; Suzanti, I.O.; Jauhari, A.; Khozaimi, A. Application monitoring and evaluation using SMART (Simple Multi attribute Rating Technique) Method. J. Phys. Conf. Ser. 2020, 1569, 022090.

| Keyword Search by: | No. of Articles | Remarks |
|---|---|---|
| Innovation + Performance + Measure | 4 | None related to the AEC industry |
| Innovation + Performance + Evaluation | 6 | None related to the AEC industry |
| Innovation + Performance + Assessment | 2 | None related to the AEC industry |
| Innovation + Performance and Construction | 19 | 9 of the 19 articles related to the AEC industry |

| Articles | Key Objectives of the Research (Information Extracted from the Abstract of the Article) |
|---|---|
| | Intellectual capital not only has a direct positive influence on the innovation performance of construction enterprises but also positively affects their innovation performance through knowledge sharing. |
| | Project managers should more strongly stimulate leadership behavior, encourage knowledge management and establish social capital, thus improving the innovation performance of project-based organizations in construction projects. |
| | The research results not only improve understanding of the effects of social capital on the innovation performance of construction enterprises but also validate the importance of knowledge transfer in stimulating innovation performance. |
| | Any improvement in IT convergence innovation competence, such as the business efficiency IT index, collaboration IT index and strategy management IT index, was found to have a positive impact on the production process, financial performance and customer satisfaction with the services the companies provided. |
| | Decomposing collaborative relationships through network analysis contributes to a better understanding of the innovation process in construction projects. In particular, key nodes that influence construction innovation through collaborative relationships are revealed and analyzed. |
| | This study reveals the mutual effects of the factors in the inner innovation system of large-scale construction enterprises and provides an effective model for internal system analyses in the construction industry and other sectors. |
| | Collaborative working among team members and strong commitment proved to be the primary enablers of innovation; reluctance, inexperience and cost are regarded as barriers to innovation. This study helps develop a better understanding of the inter-organizational nature of construction innovations, thereby improving innovation performance. |
| | An integration innovation management evaluation model is needed for sustainability evaluation in construction practice. Developing this model, taking into consideration the entire life cycle assessment and various other factors, is the main scientific objective of this study. |
| | The purpose of this paper is to analyze the relationship between the demographic characteristics of top management teams (i.e., age, gender, administrative level, senior management experience and educational background) and megaproject performance with respect to schedule, cost, quality, safety and technological innovation. The findings revealed that age has a significant influence on schedule performance; gender has a significant influence on safety performance; senior management experience has a significant influence on cost performance; and educational background has a significant influence on both schedule and technological innovation performance. |

| Criteria | Action/Remarks |
|---|---|
| Purpose of the Study | |
| Research Issues | |
| Inclusion Criteria | |
| Search Strategies | |
| Screening Procedures | |
| Data Extraction Approach | |
| Synthesis and Reporting | |

| Article | Aim/Objective of the Study | Indicators/Metrics (Presented or Mentioned in the Article) | Implied Ideas/Meaning of the Indicators | Indicator Categories Designated |
|---|---|---|---|---|
| Chen F, Zhao T, Liao Z (2020) [85] | To study the influence of technological–environmental innovation indicator systems on CO2 emissions in China’s transportation sector. | | | |
| Dziallas M, Blind K (2019) [86] | A literature review of innovation indicators based on the different stages of innovation. The review identifies 82 unique indicators for evaluating innovations along different dimensions. | Note: Only company-specific categories will be listed below: | | |
| Piro FN (2019) [87] | To study the R&D composition of European countries and whether their profiles are associated with research and innovation performance indicators. | | | |
| Brogi S, Menichini T (2019) [88] | To study the extent to which ISO certification of environmental management systems influences eco-innovation performance. | | | |
| Popadic et al. (2018) [89] | This article presents the results of analyses of innovation activities in a given sample of small and medium-sized enterprises. | | | |
| Garcia-Granero EM, Piedra-Munoz L, Galdeano-Gomez E (2018) [90] | To perform a critical review of the literature on eco-innovation performance indicators. | | | |
| Mehta S (2018) [91] | To empirically study the national innovation system of India. | Input: | | |
| Nwachukwu C, Chladkova H, Fadeyi O (2018) [92] | To study the link between the strategy formulation process and innovation performance indicators in microfinance banks. | | | |
| Mazur K, Inkow M (2017) [93] | To examine which innovation performance indicators are appropriate for research on the innovation process in the IT sector. | | | |
| Ocnarescu I, Bouchard C (2017) [94] | To study the mechanism of aesthetic experiences of work in a research and innovation context: an R&D laboratory of a multinational communications and information technology company. | | | |
| Moagar-Poladian S, Folea V, Paunica M (2017) [95] | The article presents a study of the competitiveness of European Union Member States in research and innovation, from the perspective of attracting EU research funding as well as from the viewpoint of key science and innovation performance indicators. | | | |
| Plewa M (2017) [96] | To study the long-run dynamics between product life cycles and key innovation performance indicators. | | | |
| Noktehdan M, Shahbazpour M, Wilkinson S (2015) [97] | To study the relationship between innovation and productivity improvement in the construction industry. | | | |
| Cheng CCJ, Huizingh EKRE (2014) [98] | To study the relationship between open innovation and innovation performance. | | | |

| Consolidated Metric Category (Indicators) | Descriptions/Explanations |
|---|---|
| Financial Resources | Describes the financial resources an organization is willing to invest in innovation-related activities, including, but not limited to, establishing grants to support pilot projects, holding idea competitions with financial incentives, and providing financial support for investing in new technologies. |
| Innovation Process | Describes the systematic process in the firm that an innovative idea goes through, including how the process helps incentivize idea generation and implementation, and the flow of new ideas from generation to implementation (or even commercialization), etc. |
| Creativity of Employees | Describes the creativity of employees when solving problems in their work process and how willing they are to share their new ideas with the organization. |
| People and Organizations | Includes organizational culture, management structure, technological environment, infrastructure, and leadership. Describes employee engagement in the innovation process and how well the current organization supports innovation. |
| Training | Includes training sessions provided by the organization for employees to learn new technologies or approaches. It also includes how the organization supports the self-development of employees, such as subsidies for employees to take related online courses. |
| Knowledge Sharing | Includes the means and methods by which the organization supports knowledge sharing among its employees, and how advanced implementations can be transferred from one team to another. |
| Implementation of New Ideas | Includes the efficiency of implementing new ideas, the diffusion rate of new ideas, etc.; that is, how a new idea/concept/method is implemented in the organization and the strategies used. |
| Quality of New Ideas | Includes measurement of the sustainability, scalability, and effectiveness of the idea, reflecting how well the idea brings value to the organization. |
| Resultant of New Ideas | Includes enhancement of project satisfaction, cost, time, quality, the organization’s image, competitiveness, etc. In other words, the performance change led by the innovation, e.g., return on investment. |
| Customers’ Satisfaction | Clients’ satisfaction, end-users’ satisfaction, and revenue. |

| Major Items | Descriptions/Information | Decision/Actions |
|---|---|---|
| Establish initial set of indicators by SLR | ---- | ---- |
| Invite senior professionals in the US to join the conversation/discussions/chatting/written communications for vetting the initial set of indicators | | |
| Record comments and discuss with other experts | Assess the usefulness of the indicator: “training and knowledge sharing” | Delete “training and knowledge sharing”; group them together and form a new indicator, “ideas and knowledge”, which includes the idea pool (how many more ideas are in the pipeline waiting for investment, piloting, implementation, etc.) |
| | Add durability to explain the resiliency of the innovation | Add a new indicator: durability |
| | Add accessibility to reflect the ease of implementation of the innovation | Add a new indicator: accessibility |
| | Add strategic alignment to describe how the ideas from the innovation team align with the operational and business strategies of the company | Add a new indicator: strategic alignment |
| Establish second set of indicators | | Finalize the second set of indicators to cater for the next stage of the process |
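The first-round decisions amount to set operations on the ten consolidated metric categories. The Python sketch below is a hypothetical illustration, not the authors' procedure: it encodes the initial set and applies the deletions and additions recorded in the table above. The names `INITIAL_SET`, `apply_first_round`, and `SECOND_SET` are assumptions introduced for illustration only.

```python
# Hypothetical sketch (not the authors' method): the first-round consultation
# decisions expressed as set operations on the initial SLR-derived indicators.

INITIAL_SET = {
    "Financial Resources",
    "Innovation Process",
    "Creativity of Employees",
    "People and Organizations",
    "Training",
    "Knowledge Sharing",
    "Implementation of New Ideas",
    "Quality of New Ideas",
    "Resultant of New Ideas",
    "Customers' Satisfaction",
}


def apply_first_round(indicators: set[str]) -> set[str]:
    """Return the second set of indicators after the first consultation round."""
    revised = set(indicators)
    # "Training" and "Knowledge Sharing" are deleted and regrouped as one indicator.
    revised -= {"Training", "Knowledge Sharing"}
    revised.add("Ideas and Knowledge")
    # Three new indicators are added following the experts' comments.
    revised |= {"Durability", "Accessibility", "Strategic Alignment"}
    return revised


SECOND_SET = apply_first_round(INITIAL_SET)
print(len(INITIAL_SET), "->", len(SECOND_SET))  # prints "10 -> 12"
```

Treating the indicators as a set makes the bookkeeping explicit: each row of the consultation table becomes one removal or addition, so the size of the second set (twelve) can be checked against the recorded decisions.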

| Task | Information | Decision |
|---|---|---|
| Second set of indicators established in the first-step consultation process | --- | ---- |
| Invite senior professionals in the US, Hong Kong, and Mainland China to join the conversation/discussion for vetting the second set of indicators | | |
| Record comments and discuss with other experts | Assess the usefulness of the indicator: “resultant of new ideas” | Include customers’ satisfaction in “resultant of new ideas”; change the wording of “resultant of new ideas” to “return on investment (ROI)” |
| | Comment on the indicator: “implementation of new ideas” | Include “implementation of new ideas” in the indicator “innovation process” |
| | Add impact as a new indicator to represent the soft side of “resultant” | Add a new indicator: Impact |
| | Merge financial resources into strategic alignment | Delete the “financial resources” indicator and add explanation to “strategic alignment” to include the concepts of financial and human resources |
| | Amend “people and organization” to “engagement” | Change “People & Organization” to “Engagement”; add further explanation of the term “engagement” |
| | Delete the indicator “accessibility” | Delete “accessibility” and add further explanation to “innovation process” |
| | Retain the indicator “durability” | Keep the term “durability” and add further explanation to clarify this indicator |
| Establish final set of indicators | | Finalize the final set of indicators (for subsequent works) |
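The second-round decisions in the table above combine merges, renames, a deletion, and an addition. The following Python sketch, a hypothetical illustration rather than the authors' procedure, applies them to the second set and checks the result against the five-to-ten guideline from Section 2. The names `MERGES`, `RENAMES`, `ADDITIONS`, and `apply_second_round` are assumptions; treating the deletion of “accessibility” as a merge into “innovation process” is also an interpretation of the recorded action.

```python
# Hypothetical sketch (not the authors' method): second-round consultation
# decisions expressed as merge, rename, and add steps on the second set.

SECOND_SET = {
    "Financial Resources",
    "Innovation Process",
    "Creativity of Employees",
    "People and Organizations",
    "Implementation of New Ideas",
    "Quality of New Ideas",
    "Resultant of New Ideas",
    "Customers' Satisfaction",
    "Ideas and Knowledge",
    "Durability",
    "Accessibility",
    "Strategic Alignment",
}

# Absorbed indicator -> indicator whose explanation takes over its concept.
MERGES = {
    "Customers' Satisfaction": "Resultant of New Ideas",
    "Implementation of New Ideas": "Innovation Process",
    "Financial Resources": "Strategic Alignment",
    "Accessibility": "Innovation Process",  # assumption: deletion read as a merge
}

# Old name -> new name.
RENAMES = {
    "Resultant of New Ideas": "Return on Investment (ROI)",
    "People and Organizations": "Engagement",
}

ADDITIONS = {"Impact"}


def apply_second_round(indicators: set[str]) -> set[str]:
    """Return the final set of indicators after the second consultation round."""
    for absorbed, target in MERGES.items():
        # A merge only makes sense if both indicators are present.
        if absorbed not in indicators or target not in indicators:
            raise ValueError(f"cannot merge {absorbed!r} into {target!r}")
    remaining = indicators - set(MERGES)  # drop the absorbed indicators
    renamed = {RENAMES.get(name, name) for name in remaining}
    return renamed | ADDITIONS  # "Durability" is retained unchanged


FINAL_SET = apply_second_round(SECOND_SET)

# Section 2 recommends limiting an assessment to between five and ten indicators.
assert 5 <= len(FINAL_SET) <= 10
for name in sorted(FINAL_SET):
    print(name)
```

Running the merges before the renames matters here, because “resultant of new ideas” first absorbs customers’ satisfaction and only then becomes “return on investment (ROI)”. The result is nine indicators, matching the final set.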

| Categories | Indicators | Explanations |
|---|---|---|
| Leading (Input/Process) | Creativity of Employees | Creativity of Employees describes the creativity of employees when solving problems faced in their work process and how willing they are to share their new ideas with the organization. |
| | Innovation Process | Innovation Process describes the system within the organization that supports innovation, including how the system helps incentivize idea generation and implementation, and the flow of new ideas from generation to implementation (or even commercialization), etc. |
| | Durability | Durability describes how “durable” the value of the innovation will be in the market and how resilient the innovation will be to change. |
| | Ideas and Knowledge | Ideas and Knowledge includes the organization’s idea pool and how knowledge is transferred between communities and groups. |
| | Engagement | Engagement describes the level of employee engagement in carrying out innovation, including how engaged employees are in generating new ideas and implementing them in projects. Engagement can also be hindered by other factors, such as the organization’s culture; these factors are included in this indicator. |
| | Strategic Alignment | Strategic Alignment includes aligning the organizational policies/strategies that promote and manage innovation, management style, organization structure, etc. |
| Lagging (Output/Outcome) | Return on Investment (ROI) | ROI measures the return on the investment in innovations. In general, it measures the performance enhancement resulting from the idea, such as in project satisfaction, cost, time, and quality; in other words, the performance change brought about by the innovations. Customers’ satisfaction is included. |
| | Impact | Impact measures the “soft” impact of innovations, such as their effect on the organization’s image and competitiveness in the industry. |
| | Quality of New Ideas | Quality of New Ideas includes measurement of the applicability, scalability, etc., of the idea, representing the value of the ideas. |
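The final framework is a two-level structure: two categories, each holding a list of indicators. The Python sketch below encodes it as data so that an assessment tool could look up which category an indicator belongs to. It is a hypothetical illustration; the `Category` class, the `category_of` helper, and the pairing of leading indicators with efficiency and lagging indicators with effectiveness (an interpretation of the efficiency/effectiveness guideline in Section 2) are assumptions, not part of the authors' framework.

```python
# Hypothetical sketch: the final indicator framework encoded as a data structure.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Category:
    name: str
    # Assumed pairing: "efficiency" = input/process, "effectiveness" = output/outcome.
    component: str
    indicators: tuple[str, ...]


FRAMEWORK = (
    Category(
        name="Leading (Input/Process)",
        component="efficiency",
        indicators=(
            "Creativity of Employees",
            "Innovation Process",
            "Durability",
            "Ideas and Knowledge",
            "Engagement",
            "Strategic Alignment",
        ),
    ),
    Category(
        name="Lagging (Output/Outcome)",
        component="effectiveness",
        indicators=(
            "Return on Investment (ROI)",
            "Impact",
            "Quality of New Ideas",
        ),
    ),
)


def category_of(indicator: str) -> Optional[Category]:
    """Return the category containing the indicator, or None if it is not in the framework."""
    for category in FRAMEWORK:
        if indicator in category.indicators:
            return category
    return None


for category in FRAMEWORK:
    print(f"{category.name} [{category.component}]: {len(category.indicators)} indicators")
print(category_of("Impact").name)  # prints "Lagging (Output/Outcome)"
```

Keeping the categories as immutable records means the framework cannot be edited accidentally at runtime; any future revision of the indicator set would be made in one place.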

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lo, J.T.Y.; Kam, C. Innovation Performance Indicators for Architecture, Engineering and Construction Organization. Sustainability 2021, 13, 9038. https://doi.org/10.3390/su13169038

Lo JTY, Kam C. Innovation Performance Indicators for Architecture, Engineering and Construction Organization. Sustainability. 2021; 13(16):9038. https://doi.org/10.3390/su13169038

Chicago/Turabian Style: Lo, Jacqueline Tsz Yin, and Calvin Kam. 2021. "Innovation Performance Indicators for Architecture, Engineering and Construction Organization" Sustainability 13, no. 16: 9038. https://doi.org/10.3390/su13169038

APA Style: Lo, J. T. Y., & Kam, C. (2021). Innovation Performance Indicators for Architecture, Engineering and Construction Organization. Sustainability, 13(16), 9038. https://doi.org/10.3390/su13169038