Research on Runoff Simulations Using Deep-Learning Methods

Abstract

1. Introduction

2. Materials and Methods

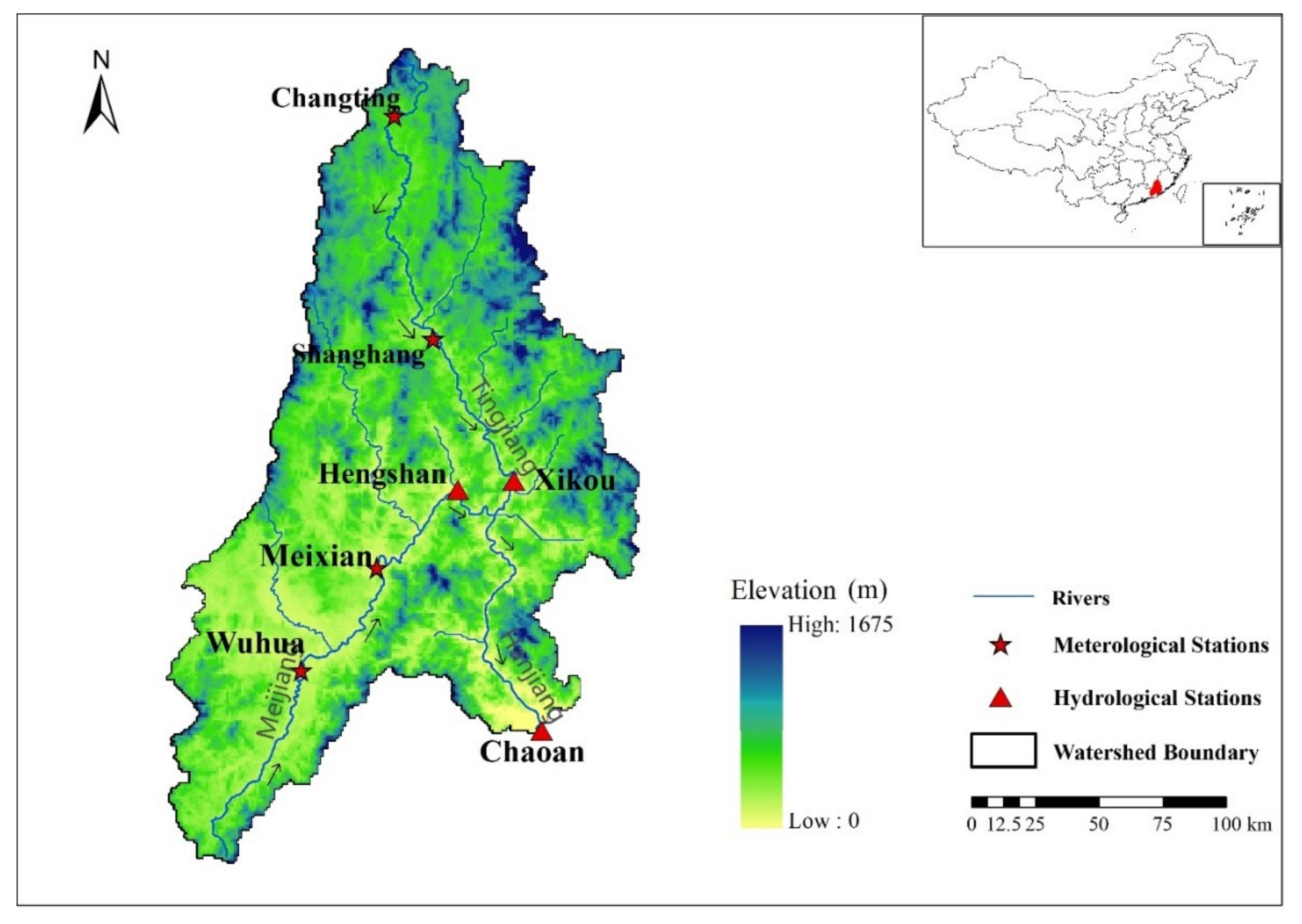

2.1. Study Area

2.2. Data Introduction

2.3. Models

2.3.1. Deep Learning

2.3.2. Modeling Process

- (1) Convolution layer: The preprocessed data were fed into the convolution layer, where n convolution kernels of size 1 × k were used to extract more abstract spatial features of the different input variables. With T time steps, the layer outputs a T × n feature matrix, WCNN.

- (2) LSTM layer: WCNN was used as the input to the LSTM layer, which extracted the salient features along the time dimension. The LSTM had m hidden units. WCNN is the input term in formula (2), and the output of the LSTM layer is WLSTM.

- (3) Attention-mechanism layer: WLSTM was taken as the input of the attention-mechanism layer. The influence of each time step on the model was expressed as a weight. The weights were normalized with the softmax function [30], which restricts each value to the range 0–1 (the weights sum to 1), and the resulting weights form Wattention. A weighted sum of WLSTM using Wattention then gives the final comprehensive temporal information. In formula (10), the two terms of this weighted sum correspond to WLSTM and Wattention, respectively.

- (4) Fully connected layer: A fully connected layer was used as the output layer.
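The four layers described above can be chained into one forward pass. The sketch below is a minimal, untrained, pure-Python illustration under stated assumptions and is not the authors' implementation. It assumes that the 1 × k kernels slide along the time axis with zero ("same") padding and span all input variables, which is one reading that preserves the stated T × n output shape. The ReLU after the convolution, the omitted biases, the hypothetical scoring vector `v` used to score each time step, and the single output unit are illustrative choices. All function names are hypothetical, and the weights are random.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def rand_matrix(rows, cols, rng, scale=0.1):
    """Small random weights; a trained model would learn these."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(w, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def conv1d_same(x, kernels, k):
    """Convolution layer: x is T x F and each of the n kernels is k x F.
    Assumption: kernels slide along time with zero padding, so the output is T x n (W_CNN)."""
    T, F = len(x), len(x[0])
    pad = k // 2
    out = []
    for t in range(T):
        row = []
        for kern in kernels:
            s = 0.0
            for j in range(k):
                tt = t + j - pad
                if 0 <= tt < T:
                    s += sum(kern[j][f] * x[tt][f] for f in range(F))
            row.append(max(0.0, s))  # ReLU activation (assumed)
        out.append(row)
    return out


def lstm(seq, m, rng):
    """LSTM layer with m hidden units; returns every hidden state, T x m (W_LSTM).
    Biases are omitted for brevity."""
    d = len(seq[0])
    # One weight matrix per gate, acting on the concatenation [h_{t-1}, x_t].
    W = {gate: rand_matrix(m, m + d, rng) for gate in ("i", "f", "o", "c")}
    h, c = [0.0] * m, [0.0] * m
    states = []
    for x_t in seq:
        z = h + x_t  # list concatenation
        i = [sigmoid(u) for u in matvec(W["i"], z)]    # input gate
        f = [sigmoid(u) for u in matvec(W["f"], z)]    # forget gate
        o = [sigmoid(u) for u in matvec(W["o"], z)]    # output gate
        g = [math.tanh(u) for u in matvec(W["c"], z)]  # candidate cell state
        c = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
        h = [oj * math.tanh(cj) for oj, cj in zip(o, c)]
        states.append(h)
    return states


def softmax(scores):
    top = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(states, v):
    """Attention layer: score each time step with a hypothetical tanh(v . h_t) score,
    normalise with softmax, and return (W_attention, weighted sum of W_LSTM)."""
    scores = [math.tanh(sum(vj * hj for vj, hj in zip(v, h))) for h in states]
    weights = softmax(scores)  # each weight lies between 0 and 1; they sum to 1
    m = len(states[0])
    context = [sum(w * h[j] for w, h in zip(weights, states)) for j in range(m)]
    return weights, context


def conv_talstm_forward(x, n=8, k=3, m=16, seed=0):
    """Untrained forward pass: convolution -> LSTM -> attention -> fully connected."""
    rng = random.Random(seed)
    kernels = [rand_matrix(k, len(x[0]), rng) for _ in range(n)]
    w_cnn = conv1d_same(x, kernels, k)             # T x n
    w_lstm = lstm(w_cnn, m, rng)                   # T x m
    v = [rng.uniform(-0.1, 0.1) for _ in range(m)]
    w_att, context = temporal_attention(w_lstm, v)  # T weights, m-vector
    w_out = [rng.uniform(-0.1, 0.1) for _ in range(m)]
    y = sum(wj * cj for wj, cj in zip(w_out, context))  # single output unit
    return w_cnn, w_lstm, w_att, y


if __name__ == "__main__":
    data_rng = random.Random(42)
    T, F = 6, 7  # hypothetical: 6 time steps, 7 input variables
    x = [[data_rng.random() for _ in range(F)] for _ in range(T)]
    w_cnn, w_lstm, w_att, y = conv_talstm_forward(x)
    print(len(w_cnn), len(w_cnn[0]))    # 6 8
    print(len(w_lstm), len(w_lstm[0]))  # 6 16
    print(round(sum(w_att), 6))         # 1.0
```

The sketch keeps every intermediate result (WCNN, WLSTM, Wattention) so that the shapes named in steps (1) to (3) can be inspected. In practice these layers would be built with a deep-learning framework and trained on the calibration data.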

2.3.3. Artificial Neural Network

2.3.4. Physical Model

2.3.5. Model Evaluation Criteria

3. Results

3.1. Optimization of Parameters under Different Inputs

3.2. Comparison of Different Model Components

3.3. Comparison of Different Inputs

3.4. Comparison of Simulation Capability with Other Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Luo, P.; Sun, Y.; Wang, S.; Wang, S.; Lyu, J.; Zhou, M.; Nakagami, K.; Takara, K.; Nover, D. Historical Assessment and Future Sustainability Challenges of Egyptian Water Resources Management. J. Clean. Prod. 2020, 263, 121154.

- Zhang, Y.; Luo, P.; Zhao, S.; Kang, S.; Wang, P.; Zhou, M.; Lyu, J. Control and Remediation Methods for Eutrophic Lakes in Recent 30 years. Water Sci. Technol. 2020, 81, 1099–1113.

- Zhu, Y.; Luo, P.; Su, F.; Zhang, S.; Sun, B. Spatiotemporal Analysis of Hydrological Variations and Their Impacts on Vegetation in Semiarid Areas from Multiple Satellite Data. Remote Sens. 2020, 12, 4177.

- Luo, P.; Kang, S.; Apip, A.; Zhou, M.; Lyu, J.; Aisyah, S.; Mishra, B.; Regmi, R.K.; Nover, D. Water quality trend assessment in Jakarta: A rapidly growing Asian megacity. PLoS ONE 2019, 14, e0219009.

- Song, X.M.; Kong, F.Z.; Zhan, C.S.; Han, J.W. Hybrid optimization rainfall-runoff simulation based on xinanjiang model and artificial neural network. J. Hydrol. Eng. 2012, 17, 1033–1041.

- Niemi, T.J.; Warsta, L.; Taka, M.; Hickman, B.; Pulkkinen, S.; Krebs, G.; Moisseev, D.N.; Koivusalo, H.; Kokkonen, T. Applicability of open rainfall data to event-scale urban rainfall-runoff modelling. J. Hydrol. 2017, 547, 143–155.

- Kan, G.; Li, J.; Zhang, X.; Ding, L.; He, X.; Liang, K.; Jiang, X.; Ren, M.; Li, H.; Wang, F.; et al. A new hybrid data-driven model for event-based rainfall–runoff simulation. Neural Comput. Appl. 2017, 28, 2519–2534.

- Rui, X. Discussion of watershed hydrological model. Adv. Sci. Technol. Water Resour. 2017, 37, 1–7.

- Wang, Y.; Shao, J.; Su, C.; Cui, Y.; Zhang, Q. The Application of Improved SWAT Model to Hydrological Cycle Study in Karst Area of South China. Sustainability 2019, 11, 5024.

- Muleta, M.K.; Nicklow, J.W. Sensitivity and uncertainty analysis coupled with automatic calibration for a distributed watershed model. J. Hydrol. 2005, 306, 127–145.

- Meng, X.; Zhang, M.; Wen, J.; Du, S.; Xu, H.; Wang, L.; Yang, Y. A Simple GIS-Based Model for Urban Rainstorm Inundation Simulation. Sustainability 2019, 11, 2830.

- Huo, A.; Peng, J.; Cheng, Y.; Luo, P.; Zhao, Z.; Zheng, C. Hydrological Analysis of Loess Plateau Highland Control Schemes in Dongzhi Plateau. Front. Earth Sci. 2020, 8, 528632.

- Mu, D.; Luo, P.; Lyu, J.; Zhou, M.; Huo, A.; Duan, W.; Nover, D.; He, B.; Zhao, X. Impact of temporal rainfall patterns on flash floods in Hue City, Vietnam. J. Flood Risk Manag. 2020, e12668.

- Luo, P.; Mu, D.; Xue, H.; Ngo-Duc, T.; Dang-Dinh, K.; Takara, K.; Nover, D.; Schladow, G. Flood inundation assessment for the Hanoi Central Area, Vietnam under historical and extreme rainfall conditions. Sci. Rep. 2018, 8, 12623.

- Huo, A.; Yang, L.; Luo, P.; Cheng, Y.; Peng, J.; Daniel, N. Influence of Landfill and land use scenario on runoff, evapotranspiration, and sediment yield over the Chinese Loess Plateau. Ecol. Indic. 2020.

- Wu, X.; Liu, C. Progress in watershed hydrological models. Progr. Geogr. 2002, 21, 341–348.

- Wood, E.F.; Roundy, J.K.; Troy, T.J. Hyperresolution global land surface modeling: Meeting a grand challenge for monitoring Earth’s terrestrial water. Water Resour. Res. 2011, 47.

- Yin, Z.; Liao, W.; Lei, X.; Wang, H.; Wang, R. Comparing the Hydrological Responses of Conceptual and Process-Based Models with Varying Rain Gauge Density and Distribution. Sustainability 2018, 10, 3209.

- Parkin, G.; Birkinshaw, S.J.; Younger, P.L.; Rao, Z.; Kirk, S. A numerical modelling and neural network approach to estimate the impact of groundwater abstractions on river flows. J. Hydrol. 2007, 339, 15–28.

- Guo, Z.; Chi, D.; Wu, J.; Zhang, W. A new wind speed forecasting strategy based on the chaotic time series modelling technique and the Apriori algorithm. Energy Convers. Manag. 2014, 84, 140–151.

- Seo, Y.; Kim, S.; Kisi, O.; Singh, V.P. Daily water level forecasting using wavelet decomposition and artificial intelligence techniques. J. Hydrol. 2015, 520, 224–243.

- Chiamsathit, C.; Adeloye, A.J.; Bankaru-Swamy, S. Inflow forecasting using artificial neural networks for reservoir operation. Proc. Int. Ass. Hydrol. Sci. 2016, 373, 209–214.

- Shoaib, M.; Shamseldin, A.Y.; Khan, S.; Khan, M.M.; Khan, Z.M.; Sultan, T.; Melville, B.W. A comparative study of various hybrid wavelet feedforward neural network models for runoff forecasting. Water Resour. Manag. 2018, 32, 83–103.

- Maniquiz, M.C.; Lee, S.; Kim, L. Multiple linear regression models of urban runoff pollutant load and event mean concentration considering rainfall variables. J. Environ. Sci. 2010, 22, 946–952.

- Ouyang, Q.; Lu, W.; Xin, X.; Zhang, Y.; Cheng, W.; Yu, T. Monthly rainfall forecasting using EEMD-SVR based on phase-space reconstruction. Water Resour. Manag. 2016, 30, 2311–2325.

- Okkan, U.; Serbes, Z.A. The combined use of wavelet transform and black box models in reservoir inflow modeling. J. Hydrol. Hydromech. 2013, 61, 112–119.

- Chua, L.H.C.; Wong, T.S.W. Runoff forecasting for an asphalt plane by artificial neural networks and comparisons with kinematic wave and autoregressive moving average models. J. Hydrol. 2011, 397, 191–201.

- Liu, C. New generation hydrological model based on artificial intelligence and big data and its application in flood forecasting and early warning. China Flood Drought Manag. 2019, 29, 11–22. Available online: https://www.ixueshu.com/docment/035f7dd25a73b911a0a9d37b30b561f6318947a18e7f9386.html (accessed on 27 March 2020).

- Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

- Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324.

- Bengio, Y.; Simard, P.; Frasconi, P. Learning long-term dependencies with gradient descent is difficult. IEEE Trans. Neural Netw. 1994, 5, 157–166.

- Han, M.; Xi, J.; Xu, S.; Yin, F.L. Prediction of chaotic time series based on the recurrent predictor neural network. IEEE Trans. Signal Process. 2004, 52, 3409–3416.

- Yang, L.; Wu, Y.; Wang, J.; Liu, Y. Research on recurrent neural network. J. Comput. Appl. 2018, 38, 1–6.

- Bowes, B.D.; Sadler, J.M.; Morsy, M.M.; Behl, M.; Goodall, J.L. Forecasting groundwater table in a flood prone coastal city with long short-term memory and recurrent neural networks. Water 2019, 11, 1098.

- Zhang, D.; Lindholm, G.; Ratnaweera, H. Use long short-term memory to enhance internet of things for combined sewer overflow monitoring. J. Hydrol. 2018, 556, 409–418.

- Kratzert, F.; Klotz, D.; Brenner, C.; Schulz, K.; Herrnegger, M. Rainfall-Runoff modelling using Long-Short-Term-Memory (LSTM) networks. Hydrol. Earth Syst. Sci. 2018, 22, 6005–6022.

- Yin, Z.; Liao, W.; Wang, R.; Lei, X. Rainfall-runoff modelling and forecasting based on long short-term memory (LSTM). S. N. Water Transf. Water Sci. Technol. 2019, 6, 1–9.

- Yuan, X.; Chen, C.; Lei, X.; Yuan, Y.; Adnan, R.M. Monthly runoff forecasting based on LSTM-ALO model. Stoch. Environ. Res. Risk Assess. 2018, 32, 2199–2212.

- Jiang, S.; Lu, J.; Chen, X.; Liu, Z. The research of stream flow simulation using Long and Short Term Memory (LSTM) network in Fuhe River Basin of Poyang Lake. J. Cent. China Norm. Univ. 2020, 54, 128–139.

- Xiang, Z.; Yan, J.; Demir, I. A rainfall-runoff model with LSTM-based sequence-to-sequence learning. Water Resour. Res. 2020, 56.

- Liu, M.; Huang, Y.; Li, Z.; Tong, B.; Zhang, H. The applicability of LSTM-KNN model for real-time flood forecasting in different climate zones in China. Water 2020, 12, 440.

- Ding, L.; Fang, W.; Luo, H.; Love, P.E.D.; Zhong, B.; Ouyang, X. A deep hybrid learning model to detect unsafe behavior: Integrating convolution neural networks and long short-term memory. Automat. Constr. 2018, 86, 118–124.

- Sun, J.; Di, L.; Sun, Z.; Shen, Y.; Lai, Z. County-level soybean yield prediction using deep CNN-LSTM model. Sensors 2019, 19, 4363.

- Kim, T.Y.; Cho, S.B. Predicting residential energy consumption using CNN-LSTM neural networks. Energy 2019, 182, 72–81.

- Fan, H.; Jiang, M.; Xu, L.; Zhu, H.; Jiang, J. Comparison of long short term memory networks and the hydrological model in runoff simulation. Water 2020, 12, 175.

- Kontogiannis, D.; Bargiotas, D.; Daskalopulu, A. Minutely Active Power Forecasting Models Using Neural Networks. Sustainability 2020, 12, 3177.

- Huang, C.J.; Kuo, P.H. A deep CNN-LSTM model for particulate matter (PM2.5) forecasting in smart cities. Sensors 2018, 18, 2220.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Shoaib, M.; Shamseldin, A.Y.; Melville, B.W.; Khan, M.M. A comparison between wavelet based static and dynamic neural network approaches for runoff prediction. J. Hydrol. 2016, 535, 211–225.

- Son, H.; Kim, C. A Deep Learning Approach to Forecasting Monthly Demand for Residential–Sector Electricity. Sustainability 2020, 12, 3103.

- Abedinia, O.; Amjady, N.; Zareipour, H. A new feature selection technique for load and price forecast of electrical power systems. IEEE Trans. Power Syst. 2016, 32, 62–74.

- Hinton, G.E.; Salakhutdinov, R.R. Replicated softmax: An undirected topic model. In Advances in Neural Information Processing Systems 22 (NIPS 2009); Curran Associates Inc.: Red Hook, NY, USA, 2009; Available online: https://proceedings.neurips.cc/paper/2009/file/31839b036f63806cba3f47b93af8ccb5-Paper.pdf (accessed on 1 May 2020).

- Fuente, A.D.L.; Meruane, V.; Meruane, C. Hydrological Early Warning System Based on a Deep Learning Runoff Model Coupled with a Meteorological Forecast. Water 2019, 11, 1808.

- Wang, Z.M.; Batelaan, O.; De Smedt, F. A distributed model for water and energy transfer between soil, plants and atmosphere (wetspa). Phys. Chem. Earth 1996, 21, 189–193.

- Bahremand, A.; De Smedt, F.; Corluy, J.; Liu, Y.B.; Poorova, J.; Velcicka, L.; Kunikova, E. WetSpa model application for assessing reforestation impacts on floods in margecany-Hornad Watershed, Slovakia. Water Resour. Manag. 2007, 21, 1373–1391.

- Liu, Y.B.; De Smedt, F. WetSpa Extension, A GIS-based Hydrologic Model for Flood Prediction and Watershed Management Documentation and User Manual. Vrije Univ. Bruss. Belgium 2004, 1, e108.

- Ma, H.; Dong, Z.; Zhang, W.; Liang, Z. Application of SCE-UA algorithm to optimization of TOPMODEL parameters. J. Hohai Univ. Nat. Sci. 2006, 4, 361–365.

- Lei, X.; Jiang, Y.; Wang, H.; Tian, Y. Distributed hydrological model EasyDHM Ⅱ. Application. J. Hydrol. Eng. 2010, 41, 893–907.

- Chollet, F.; Allaire, J.J. Deep Learning with R; Manning Publications: New York, NY, USA, 2018; pp. 24–50.

- Tian, Y.; Xu, Y.P.; Yang, Z.; Wang, G.; Zhu, Q. Integration of a parsimonious hydrological model with recurrent neural networks for improved streamflow forecasting. Water 2018, 10, 1655.

- Hu, C.; Wu, Q.; Li, H.; Jian, S.; Li, N.; Lou, Z. Deep learning with a long short-term memory networks approach for rainfall-runoff simulation. Water 2018, 10, 1543.

| Input Data | No. of Inputs | Detailed Inputs: Data Information at Time t | Detailed Inputs: History Information at Time t-i (i ≥ 1) |
|---|---|---|---|
| A1 | 29 | M1t; M2t; M3t; M4t | M1t-i; M2t-i; M3t-i; M4t-i; H4t-i |
| A2 | 4 | H1t; H2t; H3t | H1t-i; H2t-i; H3t-i; H4t-i |
| A3 | 32 | M1t; M2t; M3t; M4t; H1t; H2t; H3t | M1t-i; M2t-i; M3t-i; M4t-i; H1t-i; H2t-i; H3t-i; H4t-i |
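Each input set in the table above combines values at time t with historical values at t-i. A minimal sketch of assembling one sample for a chosen number of lag steps is shown below. The function `build_sample`, the `lag` parameter, and the synthetic data are hypothetical; the sketch only illustrates the current-plus-history layout and does not attempt to reproduce the "No. of Inputs" column.

```python
from typing import Dict, List, Sequence, Tuple

# Hypothetical container: one time series per variable name (M1..M4, H1..H4).
Series = Dict[str, Sequence[float]]

# Current-time and history variables of each input set, transcribed from the table above.
INPUT_SETS: Dict[str, Tuple[List[str], List[str]]] = {
    "A1": (["M1", "M2", "M3", "M4"], ["M1", "M2", "M3", "M4", "H4"]),
    "A2": (["H1", "H2", "H3"], ["H1", "H2", "H3", "H4"]),
    "A3": (["M1", "M2", "M3", "M4", "H1", "H2", "H3"],
           ["M1", "M2", "M3", "M4", "H1", "H2", "H3", "H4"]),
}


def build_sample(data: Series, t: int, current_vars: List[str],
                 history_vars: List[str], lag: int) -> List[float]:
    """Feature vector for time t: current_vars at t, then history_vars at t-1, ..., t-lag."""
    if t - lag < 0:
        raise ValueError("not enough history before time t")
    features = [data[v][t] for v in current_vars]
    for i in range(1, lag + 1):
        features.extend(data[v][t - i] for v in history_vars)
    return features


if __name__ == "__main__":
    names = ["M1", "M2", "M3", "M4", "H1", "H2", "H3", "H4"]
    # Synthetic values: variable number k at time t is 10 * k + t.
    data = {name: [float(10 * k + t) for t in range(10)] for k, name in enumerate(names)}
    current, history = INPUT_SETS["A2"]
    print(build_sample(data, t=5, current_vars=current, history_vars=history, lag=2))
    # [45.0, 55.0, 65.0, 44.0, 54.0, 64.0, 74.0, 43.0, 53.0, 63.0, 73.0]
```

With this layout a sample holds len(current_vars) + lag × len(history_vars) values, so the three input sets differ only in which variables appear in each part.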

| Model | Input Data | Time Length | Calibration RMSE | Calibration R² | Calibration NSE | Validation RMSE | Validation R² | Validation NSE |
|---|---|---|---|---|---|---|---|---|
| WetSpa | A3 | 1 | 542.22 | 0.73 | 0.68 | 341.79 | 0.70 | 0.65 |
| ANN | A3 | 6 | 324.02 | 0.85 | 0.85 | 237.69 | 0.81 | 0.78 |
| LSTM | A1 | 6 | 293.87 | 0.87 | 0.87 | 232.91 | 0.81 | 0.79 |
| | A2 | 4 | 290.03 | 0.88 | 0.87 | 231.33 | 0.81 | 0.79 |
| | A3 | 2 | 289.79 | 0.88 | 0.88 | 217.14 | 0.82 | 0.81 |
| Conv-LSTM | A1 | 6 | 292.41 | 0.88 | 0.87 | 228.56 | 0.81 | 0.80 |
| | A2 | 4 | 288.30 | 0.88 | 0.88 | 219.16 | 0.83 | 0.81 |
| | A3 | 4 | 284.04 | 0.89 | 0.88 | 215.64 | 0.84 | 0.82 |
| TALSTM | A1 | 6 | 286.29 | 0.88 | 0.88 | 220.55 | 0.81 | 0.81 |
| | A2 | 3 | 281.79 | 0.88 | 0.88 | 216.72 | 0.82 | 0.82 |
| | A3 | 3 | 279.06 | 0.89 | 0.88 | 205.70 | 0.84 | 0.83 |
| Conv-TALSTM | A1 | 7 | 283.71 | 0.88 | 0.88 | 210.00 | 0.83 | 0.83 |
| | A2 | 4 | 280.74 | 0.89 | 0.88 | 208.06 | 0.84 | 0.83 |
| | A3 | 2 | 277.70 | 0.90 | 0.89 | 205.11 | 0.85 | 0.84 |
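The exact formulas behind the RMSE, R² and NSE columns are not given in this section. The sketch below uses the standard definitions of the root-mean-square error and the Nash–Sutcliffe efficiency, and assumes that R² is the squared Pearson correlation between observed and simulated flows, a common choice in hydrology; the authors may have used a different variant. The example series are made up and are not data from the study.

```python
import math
from typing import Sequence


def rmse(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Root-mean-square error, in the units of the observations."""
    return math.sqrt(sum((o - s) ** 2 for o, s in zip(obs, sim)) / len(obs))


def nse(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Nash-Sutcliffe efficiency: 1 is a perfect fit; 0 is no better than the observed mean."""
    mean_o = sum(obs) / len(obs)
    err = sum((o - s) ** 2 for o, s in zip(obs, sim))
    var = sum((o - mean_o) ** 2 for o in obs)
    return 1.0 - err / var


def r_squared(obs: Sequence[float], sim: Sequence[float]) -> float:
    """Assumed definition: squared Pearson correlation of observed and simulated values."""
    n = len(obs)
    mean_o, mean_s = sum(obs) / n, sum(sim) / n
    cov = sum((o - mean_o) * (s - mean_s) for o, s in zip(obs, sim))
    var_o = sum((o - mean_o) ** 2 for o in obs)
    var_s = sum((s - mean_s) ** 2 for s in sim)
    return cov * cov / (var_o * var_s)


if __name__ == "__main__":
    observed = [1.0, 2.0, 3.0, 4.0]   # made-up values, not from the study
    simulated = [1.0, 2.0, 3.0, 5.0]
    print(rmse(observed, simulated))                 # 0.5
    print(round(nse(observed, simulated), 4))        # 0.8
    print(round(r_squared(observed, simulated), 4))  # 0.9657
```

Under these definitions NSE penalizes any bias or scaling error, whereas the squared correlation only measures linear association, so R² can stay high even when the simulated flows are systematically offset.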

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Liu, Y.; Zhang, T.; Kang, A.; Li, J.; Lei, X. Research on Runoff Simulations Using Deep-Learning Methods. Sustainability 2021, 13, 1336. https://doi.org/10.3390/su13031336
