E-Learning Services to Achieve Sustainable Learning and Academic Performance: An Empirical Study

Abstract

1. Introduction

2. Theoretical Foundation

2.1. E-Learning Success Based on IS Model

2.2. E-Learning Success Based on TAM Model

2.3. E-Learning Success Based on User Satisfaction Model

2.4. E-Learning Success Based on ELQ Model

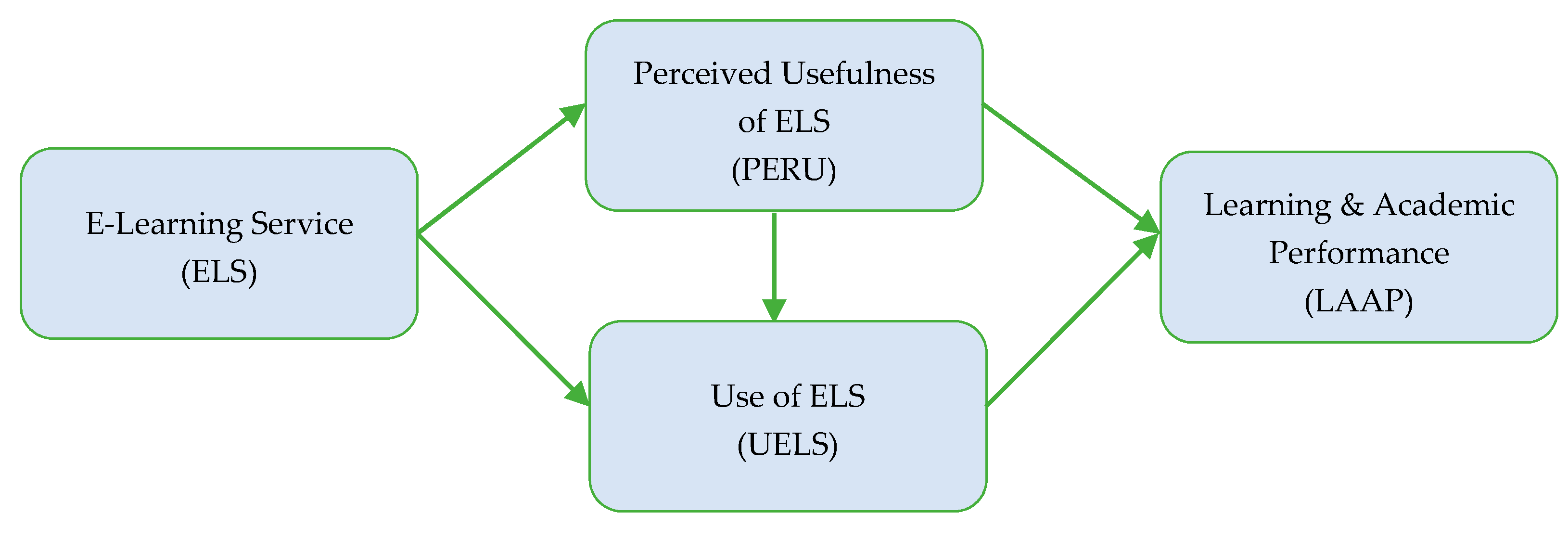

3. Development of the Conceptual Model

3.1. E-Learning Service Quality/E-Learning Success Factors

3.1.1. Learner’s Quality

3.1.2. Instructor’s Quality

3.1.3. Information Quality

3.1.4. System’s Quality

3.1.5. Institution’s Quality

3.2. Perceived Usefulness and Use of ELS

3.3. Learning and Academic Performance

4. Materials and Methods

4.1. Design of Questionnaire and Its Process

4.2. Study Design and Data Collection

4.3. Study Variables and Their Items

4.4. Target Population and Sample

4.5. Data Collection, Preparation and Management

4.6. Statistical Analysis

5. Results

- Indicator reliability was assessed using outer loadings, which should be ≥ 0.70 for each indicator.

- Internal consistency reliability was assessed using Cronbach’s alpha (α) and composite reliability (CR); the minimum threshold is 0.70 for both tests.

- Validity was examined using the following two criteria:

  - Convergent validity was checked using the Average Variance Extracted (AVE), which should be ≥ 0.50.

  - Discriminant validity was examined using the Fornell–Larcker criterion, cross loadings, and the Heterotrait–Monotrait (HTMT) ratio.

- If the outer loading is ≥ 0.70, retain the item.

- If the outer loading is ≥ 0.40 and < 0.70, analyze the impact of deleting the item on AVE and CR. If the impact is minimal, retain the item; otherwise, delete it from the model.
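The thresholds listed above can be applied mechanically once standardized outer loadings are available. The sketch below is illustrative only. It uses the standard formulas, AVE as the mean of squared loadings and CR as (Σλ)² / ((Σλ)² + Σ(1 − λ²)); the function names and example loadings are hypothetical and are not taken from the study. Treating loadings below 0.40 as deletion candidates is an assumption based on common PLS-SEM guidance, since the list above does not cover that case.

```python
from typing import Sequence


def average_variance_extracted(loadings: Sequence[float]) -> float:
    """AVE: mean of the squared standardized outer loadings."""
    return sum(l ** 2 for l in loadings) / len(loadings)


def composite_reliability(loadings: Sequence[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances)."""
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - l ** 2 for l in loadings)
    return squared_sum / (squared_sum + error_variance)


def retention_decision(loading: float) -> str:
    """Classify one indicator by its outer loading."""
    if loading >= 0.70:
        return "retain"
    if loading >= 0.40:
        # Retain only if deleting the item has minimal impact on AVE and CR.
        return "review impact on AVE and CR"
    # Assumption: this case is not stated in the text.
    return "delete"


# Hypothetical loadings for a three-item construct (not data from the study).
example = [0.80, 0.70, 0.90]
print(round(average_variance_extracted(example), 3))
print(round(composite_reliability(example), 3))
print([retention_decision(l) for l in [0.82, 0.55, 0.31]])
```

Cronbach’s alpha is not included because it needs the item-level response data rather than the loadings.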

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ahmad, N. Cloud computing: Technology, security issues and solutions. In Proceedings of the 2nd International Conference on Anti-Cyber Crimes (ICACC), Abha, Saudi Arabia, 26–27 March 2017; pp. 30–35.

- Irfan, M.; Ahmad, N. Internet of medical things: Architectural model, motivational factors and impediments. In Proceedings of the 15th Learning and Technology Conference (L&T), Jeddah, Saudi Arabia, 25–26 February 2018; pp. 6–13.

- Khan, N.; Naim, A.; Hussain, M.R.; Naveed, Q.N.; Ahmad, N.; Qamar, S. The 51 V’s of Big Data: Survey, Technologies, Characteristics, Opportunities, Issues and Challenges. In Proceedings of the International Conference on Omni-Layer Intelligent Systems, Crete, Greece, 5–7 May 2019; pp. 19–24.

- Ahmad, N.; Mehmood, R. Enterprise systems: Are we ready for future sustainable cities. Supply Chain Manag. Int. J. 2015, 20, 264–283.

- Ahmad, N.; Mehmood, R. Enterprise Systems for Networked Smart Cities. In Deep Learning and Edge Computing Solutions for High Performance Computing; Springer International Publishing: Berlin/Heidelberg, Germany, 2019; pp. 1–33.

- Naveed, Q.N.; Qureshi, M.R.N.M.; Shaikh, A.; Alsayed, A.; Sanober, S.; Mohiuddin, K. Evaluating and Ranking Cloud-Based E-Learning Critical Success Factors (CSFs) Using Combinatorial Approach. IEEE Access 2019, 7, 157145–157157.

- Noorulhasan, Q.; Muhammad, A.; Sanober, S.; Rafik, M.; Shah, A.; Naveed, Q.N.; Qureshi, M.R.N. A Mixed Method Study for Investigating Critical Success Factors (CSFs) of E-Learning in Saudi Arabian Universities. Int. J. Adv. Comput. Sci. Appl. 2017, 8, 171–178.

- Ahmad, N.; Hoda, N.; AlAhmari, F. Developing a Cloud-Based Mobile Learning Adoption Model to Promote Sustainable Education. Sustainability 2020, 12, 3126.

- Hassanzadeh, A.; Kanaani, F.; Elahi, S. A model for measuring e-learning systems success in universities. Expert Syst. Appl. 2012, 39, 10959–10966.

- Sawangchai, A.; Prasarnkarn, H.; Kasuma, J.; Polyakova, A.G.; Qasim, S. Effects of COVID-19 on digital learning of entrepreneurs. Pol. J. Manag. Stud. 2020, 22, 502–517.

- Smoląg, K.; Ślusarczyk, B.; Kot, S. The Role of Social Media in Management of Relational Capital in Universities. Prabandhan Indian J. Manag. 2016, 9, 34.

- Zhang, T.; Shaikh, Z.A.; Yumashev, A.V.; Chłąd, M. Applied Model of E-Learning in the Framework of Education for Sustainable Development. Sustainability 2020, 12, 6420.

- Elkington, J. The triple bottom line for twenty-first-century business. In Companies in a World Conflict: NGOs, Sanctions and Corporative Responsibility; Earthscan: London, UK, 1998.

- United Nations. Indicators of Sustainable Development: Guidelines and Methodologies; United Nations Division for Sustainable Development: Geneva, Switzerland, 2001.

- Muhammad, A.; Shaikh, A.; Naveed, Q.N.; Qureshi, M.R.N. Factors Affecting Academic Integrity in E-Learning of Saudi Arabian Universities. An Investigation Using Delphi and AHP. IEEE Access 2020, 8, 16259–16268.

- Ahmad, N.; Quadri, N.N.; Qureshi, M.R.N.; Alam, M.M. Relationship Modeling of Critical Success Factors for Enhancing Sustainability and Performance in E-Learning. Sustainability 2018, 10, 4776.

- Davis, F.D.; Venkatesh, V. A critical assessment of potential measurement biases in the technology acceptance model: Three experiments. Int. J. Human Comput. Stud. 1996, 45, 19–45.

- Sarbaini, S. Managing e-learning in public universities by investigating the role of culture. Pol. J. Manag. Stud. 2019, 20, 394–404.

- Delone, W.H.; McLean, E.R. The DeLone and McLean Model of Information Systems Success: A Ten-Year Update. J. Manag. Inf. Syst. 2003, 19, 9–30.

- Parasuraman, A.; Zeithaml, V.; Berry, L. SERVQUAL: A multiple-item scale for measuring consumer perceptions of service quality. Retail. Crit. Concepts 2002, 64, 140.

- Sadaf, A.; Newby, T.J.; Ertmer, P.A. Exploring Factors that Predict Preservice Teachers’ Intentions to Use Web 2.0 Technologies Using Decomposed Theory of Planned Behavior. J. Res. Technol. Educ. 2012, 45, 171–196.

- Cardona, M.M.; Bravo, J.J. Service quality perceptions in higher education institutions: The case of a colombian university. Estud. Gerenc. 2012, 28, 23–29.

- Alsabawy, A.Y.; Cater-Steel, A.; Soar, J. IT infrastructure services as a requirement for e-learning system success. Comput. Educ. 2013, 69, 431–451.

- Al-Fraihat, D.; Joy, M.; Masa’Deh, R.; Sinclair, J. Evaluating E-learning systems success: An empirical study. Comput. Hum. Behav. 2020, 102, 67–86.

- Waheed, M.; Kaur, K.; Qazi, A. Students’ perspective on knowledge quality in eLearning context: A qualitative assessment. Internet Res. 2016, 26, 120–145.

- Vasconcelos, P.; Furtado, E.S.; Pinheiro, P.; Furtado, L. Multidisciplinary criteria for the quality of e-learning services design. Comput. Hum. Behav. 2020, 107, 105979.

- Asoodar, M.; Vaezi, S.; Izanloo, B. Framework to improve e-learner satisfaction and further strengthen e-learning implementation. Comput. Hum. Behav. 2016, 63, 704–716.

- Yilmaz, R. Exploring the role of e-learning readiness on student satisfaction and motivation in flipped classroom. Comput. Hum. Behav. 2017, 70, 251–260.

- Kanwal, F.; Rehman, M. Measuring Information, System and Service Qualities for the Evaluation of E-Learning Systems in Pakistan. Pak. J. Sci. 2016, 68, 302–307.

- Stuss, M.M.; Szczepańska-Woszczyna, K.; Makieła, Z.J. Competences of Graduates of Higher Education Business Studies in Labor Market I (Results of Pilot Cross-Border Research Project in Poland and Slovakia). Sustainability 2019, 11, 4988.

- Cidral, W.A.; Oliveira, T.; Di Felice, M.; Aparicio, M. E-learning success determinants: Brazilian empirical study. Comput. Educ. 2018, 122, 273–290.

- Klobas, J.E.; McGill, T.J. The role of involvement in learning management system success. J. Comput. High. Educ. 2010, 22, 114–134.

- Eom, S.; Ashill, N.J.; Arbaugh, J.; Stapleton, J.L. The role of information technology in e-learning systems success. Hum. Syst. Manag. 2012, 31, 147–163.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319–340.

- Cyert, R.M.; March, J.G. A Behavioral Theory of the Firm; M.E. Sharpe: Englewood Cliffs, NJ, USA, 1963; Volume 2.

- Surendran, P. Technology acceptance model: A survey of literature. Int. J. Bus. Soc. Res. 2012, 2, 175–178.

- Venkatesh, V.; Davis, F.D. A Theoretical Extension of the Technology Acceptance Model: Four Longitudinal Field Studies. Manag. Sci. 2000, 46, 186–204.

- Abdullah, F.; Ward, R. Developing a General Extended Technology Acceptance Model for E-Learning (GETAMEL) by analysing commonly used external factors. Comput. Hum. Behav. 2016, 56, 238–256.

- Bailey, J.E.; Pearson, S.W. Development of a Tool for Measuring and Analyzing Computer User Satisfaction. Manag. Sci. 1983, 29, 530–545.

- Sun, P.-C.; Tsai, R.J.; Finger, G.; Chen, Y.-Y.; Yeh, D. What drives a successful e-Learning? An empirical investigation of the critical factors influencing learner satisfaction. Comput. Educ. 2008, 50, 1183–1202.

- MacDonald, C.J.; Stodel, E.J.; Farres, L.G.; Breithaupt, K.; Gabriel, M.A. The demand-driven learning model: A framework for web-based learning. Internet High. Educ. 2001, 4, 9–30.

- McDowall, T.; Jackling, B. The Impact of Computer-Assisted Learning on Academic Grades: An Assessment of Students’ Perceptions. Account. Educ. 2006, 15, 377–389.

- Islam, A.N. Investigating e-learning system usage outcomes in the university context. Comput. Educ. 2013, 69, 387–399.

- Yeung, C.; Zhou, L.; Armatas, C. An Overview of Benchmarks Regarding Quality Assurance for eLearning in Higher Education. In Proceedings of the 2019 IEEE Conference on e-Learning, e-Management & e-Services (IC3e), Pulau Pinang, Malaysia, 19–21 November 2019; pp. 1–6.

- Hadullo, K.; Oboko, R.; Omwenga, E. A model for evaluating e-learning systems quality in higher education in developing countries. Int. J. Educ. Dev. Using ICT 2017, 13, 185–204.

- Naveed, Q.N.; Alam, M.M.; Tairan, N. Structural Equation Modeling for Mobile Learning Acceptance by University Students: An Empirical Study. Sustainability 2020, 12, 8618.

- Udo, G.J.; Bagchi, K.K.; Kirs, P.J. Using SERVQUAL to assess the quality of e-learning experience. Comput. Hum. Behav. 2011, 27, 1272–1283.

- Khasawneh, M. Factors Influence e-Learning Utilization in Jordanian Universities—Academic Staff Perspectives. Procedia Soc. Behav. Sci. 2015, 210, 170–180.

- Shafiq, M.; Lasrado, F.; Islam, A. Service Quality scale Development for Higher Education Institutions: The Asian Context. J. Qual. Technol. Manag. 2018, 15, 37–55.

- Uppal, M.A.; Ali, S.; Gulliver, S.R. Factors determining e-learning service quality. Br. J. Educ. Technol. 2018, 49, 412–426.

- Lee, B.-C.; Yoon, J.-O.; Lee, I. Learners’ acceptance of e-learning in South Korea: Theories and results. Comput. Educ. 2009, 53, 1320–1329.

- Nikolić, V.; Kaljevic, J.; Jović, S.; Petković, D.; Milovančević, M.; Dimitrov, L.; Dachkinov, P. Survey of quality models of e-learning systems. Phys. A Stat. Mech. Appl. 2018, 511, 324–330.

- Haryaka, U.; Agus, F.; Kridalaksana, A.H. User satisfaction model for e-learning using smartphone. Procedia Comput. Sci. 2017, 116, 373–380.

- Wu, H.-Y.; Lin, H.-Y. A hybrid approach to develop an analytical model for enhancing the service quality of e-learning. Comput. Educ. 2012, 58, 1318–1338.

- Martínez-Argüelles, M.; Castán, J.; Juan, A. How do students measure service quality in e-learning? A case study regarding an internet-based university. In Proceedings of the 8th European Conference on Learning 2009, Bari, Italy, 29–30 October 2009; University of Bari: Bari, Italy, 2009; Volume 8, pp. 366–373.

- Trenholm, S.; Juan, A.A.; Simosa, J.; Oliveira, A. Long-Term Experiences in Mathematics E-Learning in Europe and the USA. In Teaching Mathematics Online; IGI Global: Hershey, PA, USA, 2012; pp. 238–257.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Henseler, J.; Ringle, C.M.; Sarstedt, M. A new criterion for assessing discriminant validity in variance-based structural equation modeling. J. Acad. Mark. Sci. 2015, 43, 115–135.

- Hair, J.; Black, W.; Babin, B.; Anderson, R. Exploratory Factor Analysis. Multivariate Data Analysis, 7th ed.; UK Pearson Education: Harlow, UK, 2014.

- Chen, W.S.; Yao, A.Y.T. An Empirical Evaluation of Critical Factors Influencing Learner Satisfaction in Blended Learning: A Pilot Study. Univers. J. Educ. Res. 2016, 4, 1667–1671.

- Cheng, Y. Effects of quality antecedents on e-learning acceptance. Internet Res. 2012, 22, 361–390.

- Naveed, Q.N.; Qureshi, M.R.N.; Tairan, N.; Mohammad, A.; Shaikh, A.; Alsayed, A.O.; Shah, A.; Alotaibi, F.M. Evaluating critical success factors in implementing E-learning system using multi-criteria decision-making. PLoS ONE 2020, 15, e0231465.

| Construct | Category | Measure | Code | Reference |
|---|---|---|---|---|
| Learner’s Quality | Learner’s Attitude | Learner believes LMS is good for learning. | LEAR11 | [24] |
| | | Learner has a positive attitude toward LMS. | LEAR12 | |
| | Learner’s Behavior | Learner is not intimidated by using LMS. | LEAR21 | [24] |
| | | Learner’s previous experience with LMS is helpful. | LEAR22 | |
| | Learner’s Self-efficacy | Learner’s ICT skills | LEAR31 | [43] |
| | | Learner’s communication skills | LEAR32 | |
| | Learner’s Peer-Interaction | Learner enjoys interacting with peers. | LEAR41 | [31] |
| | | Learner trusts the knowledge of peers. | LEAR42 | |
| Instructor Quality | Reliability | Instructors are dependable for the course contents. | INSR11 | [9] |
| | | Instructors provide services at the time they promise to do so. | INSR12 | |
| | | Instructors’ intended communications are always clear. | INSR13 | |
| | Assurance | Instructors are knowledgeable in their fields. | INSR21 | [29,47] |
| | | Instructors are experienced to deliver course content. | INSR22 | |
| | | Instructors are fair and impartial in academics. | INSR23 | |
| | Empathy | Instructors are genuinely concerned with their students. | INSR31 | [29,47] |
| | | Instructors understand the individual needs of their students. | INSR32 | |
| | | Instructors encourage and motivate students to do their best. | INSR33 | |
| | Responsiveness | Instructors efficiently respond to individual students. | INSR41 | [24,29,47] |
| | | Instructors welcome students’ questions and comments. | INSR42 | |
| | | Instructors utilize all possible ways to help students. | INSR43 | |
| Information Quality | Content | LMS offers relevant information. | INFR11 | [9,24,31] |
| | | LMS offers up-to-date information. | INFR12 | |
| | | LMS offers complete information. | INFR13 | |
| | Instructions | LMS provides clear instructions. | INFR21 | [29,31] |
| | | LMS provides objective instructions. | INFR22 | |
| | | LMS provides actionable instructions. | INFR23 | |
| | Language | LMS uses easy language. | INFR31 | [50] |
| | | LMS uses professional language. | INFR32 | |
| | | LMS uses multilingual communication. | INFR33 | |
| | Modes | LMS contains multimedia information. | INFR41 | [50] |
| | | LMS gives proper feedback information. | INFR42 | |
| | | LMS facilitates flexible learning. | INFR43 | |
| System Quality | Multimedia Support | LMS uses audio elements properly. | SYST11 | [47] |
| | | LMS uses video elements properly. | SYST12 | |
| | | LMS uses animations properly. | SYST13 | |
| | Interface Design | LMS fonts, style, and color meet the quality standards. | SYST21 | [9,51] |
| | | Structure of LMS is easy to understand. | SYST22 | |
| | | LMS interface is intuitive and familiar. | SYST23 | |
| | Functionality | LMS does not sign out automatically. | SYST31 | [52] |
| | | LMS operates gracefully during peak loads. | SYST32 | |
| | | LMS gives similar experience on disparate devices. | SYST33 | |
| | Ease of Use | Ease of communication with peers. | SYST41 | [24,50] |
| | | Ease of sharing data and information. | SYST42 | |
| | | Ease of sharing learning experience. | SYST43 | |
| Institutional Quality | Administrative Support | Institution has top management support for E-Learning. | INST11 | [45] |
| | | Institution has effective policy to promote E-Learning. | INST12 | |
| | | Provides conducive environment to implement E-Learning. | INST13 | |
| | Financial Support | Institution provides financial support for E-Learning. | INST21 | [45] |
| | | Provides timely funding for hardware and software updates. | INST22 | |
| | | Institution provides monetary benefits to stakeholders. | INST23 | |
| | Stakeholder Training | Institution is providing training to stakeholders. | INST31 | [9] |
| | | Trainers of stakeholders are knowledgeable. | INST32 | |
| | | Modes of stakeholders training are appropriate. | INST33 | |
| | Environmental Support | Ministry provides incentives for E-Learning adoption. | INST41 | [48] |
| | | Executive body such as Deanship to handle E-Learning. | INST42 | |
| | | Best-performing stakeholders gain social recognition. | INST43 | |
| Perceived Usefulness of ELS | | Blackboard helps me to complete my tasks easily | PERU11 | [9,24,51] |
| | | Blackboard increases my knowledge | PERU12 | |
| | | Blackboard is useful | PERU13 | |
| Use of ELS | | I use Blackboard frequently | UELS21 | [24] |
| | | I use Blackboard for my study | UELS22 | |
| | | I use Blackboard for my communication | UELS23 | |
| Learning and Academic Performance | | Academic performance using ELS is satisfactory | LAAP31 | [42,43] |
| | | I am happy with the learning experience using ELS | LAAP32 | |
| | | ELS helped me in achieving learning goals | LAAP33 | |
| | | ELS is efficient for learning | LAAP34 | |

| Items | Description | N | % |
|---|---|---|---|
| Gender | Male/Female | 180/217 | 45.30/54.7 |
| Knowledge About E-Learning | Yes/No | 379/18 | 95.50/04.5 |
| Level of E-Learning Knowledge | Very Poor | 24 | 06.00 |
| | Poor | 22 | 05.50 |
| | Average | 131 | 33.00 |
| | Good | 96 | 24.20 |
| | Very Good | 124 | 31.20 |
| Currently using E-Learning | Yes/No | 397/0 | 100.00/0.00 |
| Satisfaction from E-Learning | Highly Dissatisfied | 18 | 04.50 |
| | Dissatisfied | 43 | 10.80 |
| | Neutral | 105 | 26.40 |
| | Satisfied | 160 | 40.30 |
| | Highly Satisfied | 71 | 17.90 |
| Types of E-Learning Use | Blended–1 | 214 | 53.90 |
| | Blended–2 | 56 | 14.10 |
| | Blended–3 | 59 | 14.90 |
| | Full E-Learning | 68 | 17.10 |
| Years of E-Learning Usage | 01 or less | 121 | 30.50 |
| | 02–05 | 210 | 52.90 |
| | 06–09 | 39 | 09.80 |
| | 09–Above | 27 | 06.80 |
| Area of Study | Medical | 227 | 57.20 |
| | Humanities and Social Science | 64 | 16.10 |
| | Engineering, Computer and Science | 42 | 10.60 |
| | Others | 64 | 16.10 |
| University | King Khalid University | 118 | 29.72 |
| | Albaha University | 76 | 19.14 |
| | Jazan University | 81 | 20.40 |
| | Najran University | 63 | 15.87 |
| | Bisha University | 59 | 14.86 |

| Construct (Code) | Cronbach’s Alpha (α ≥ 0.70) | Composite Reliability (CR ≥ 0.70) | Average Variance Extracted (AVE ≥ 0.50) |
|---|---|---|---|
| Learner’s Quality (LEARQ) | 0.888 | 0.710 | 0.560 |
| Instructor Quality (INSRQ) | 0.753 | 0.859 | 0.661 |
| Information Quality (INFRQ) | 0.824 | 0.861 | 0.674 |
| System Quality (SYSTQ) | 0.737 | 0.849 | 0.611 |
| Institutional Quality (INSTQ) | 0.846 | 0.853 | 0.630 |
| Perceived Usefulness of ELS (PERU) | 0.801 | 0.838 | 0.734 |
| Use of ELS (UELS) | 0.906 | 0.853 | 0.771 |
| Learning and Academic Performance (LAAP) | 0.795 | 0.727 | 0.760 |
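As an illustration, the reliability and convergent validity thresholds in the column headers can be checked programmatically. The sketch below copies the values from the table above; the dictionary layout and the function name `failed_criteria` are hypothetical choices made for this example.

```python
# (Cronbach's alpha, CR, AVE) per construct, copied from the table above.
reliability = {
    "LEARQ": (0.888, 0.710, 0.560),
    "INSRQ": (0.753, 0.859, 0.661),
    "INFRQ": (0.824, 0.861, 0.674),
    "SYSTQ": (0.737, 0.849, 0.611),
    "INSTQ": (0.846, 0.853, 0.630),
    "PERU": (0.801, 0.838, 0.734),
    "UELS": (0.906, 0.853, 0.771),
    "LAAP": (0.795, 0.727, 0.760),
}

# Minimum acceptable values, as given in the column headers.
THRESHOLDS = {"alpha": 0.70, "CR": 0.70, "AVE": 0.50}


def failed_criteria(alpha: float, cr: float, ave: float) -> list[str]:
    """Return the names of the criteria that fall below their threshold."""
    observed = {"alpha": alpha, "CR": cr, "AVE": ave}
    return [name for name, value in observed.items() if value < THRESHOLDS[name]]


failures = {}
for code, values in reliability.items():
    failed = failed_criteria(*values)
    if failed:
        failures[code] = failed

print(failures)  # prints {} because every construct meets all three thresholds
```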

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| INFRQ | 0.821 | | | | | | | |
| INSRQ | 0.683 | 0.813 | | | | | | |
| INSTQ | 0.569 | 0.598 | 0.794 | | | | | |
| LAAP | 0.597 | 0.602 | 0.628 | 0.872 | | | | |
| LEARQ | 0.664 | 0.611 | 0.439 | 0.49 | 0.748 | | | |
| PERU | 0.531 | 0.595 | 0.558 | 0.789 | 0.473 | 0.913 | | |
| SYSTQ | 0.817 | 0.688 | 0.677 | 0.647 | 0.643 | 0.656 | 0.782 | |
| UELS | 0.518 | 0.584 | 0.664 | 0.803 | 0.442 | 0.795 | 0.668 | 0.933 |
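The Fornell–Larcker criterion asks that each diagonal entry exceed that construct's correlations with every other construct. The sketch below applies that comparison to the matrix above. It assumes the diagonal holds the square root of each construct's AVE, which is the usual layout for this criterion but is not stated alongside the table; the function name is hypothetical.

```python
names = ["INFRQ", "INSRQ", "INSTQ", "LAAP", "LEARQ", "PERU", "SYSTQ", "UELS"]

# Lower triangle copied from the table above; the last entry of each row
# is the diagonal value for that construct.
lower = [
    [0.821],
    [0.683, 0.813],
    [0.569, 0.598, 0.794],
    [0.597, 0.602, 0.628, 0.872],
    [0.664, 0.611, 0.439, 0.49, 0.748],
    [0.531, 0.595, 0.558, 0.789, 0.473, 0.913],
    [0.817, 0.688, 0.677, 0.647, 0.643, 0.656, 0.782],
    [0.518, 0.584, 0.664, 0.803, 0.442, 0.795, 0.668, 0.933],
]


def fornell_larcker_violations(names, lower):
    """List pairs whose correlation is not below both constructs' diagonals."""
    violations = []
    for i, row in enumerate(lower):
        for j, corr in enumerate(row[:-1]):
            # The stricter of the two diagonal values bounds the correlation.
            limit = min(lower[i][i], lower[j][j])
            if corr >= limit:
                violations.append((names[i], names[j], corr, limit))
    return violations


for pair in fornell_larcker_violations(names, lower):
    print(pair)
```

Run on the values as transcribed above, the check returns a single pair, SYSTQ with INFRQ (0.817 against a diagonal of 0.782); all other pairs satisfy the comparison.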

| Construct | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|
| INFRQ | | | | | | | | |
| INSRQ | 0.718 | | | | | | | |
| INSTQ | 0.58 | 0.615 | | | | | | |
| LAAP | 0.642 | 0.644 | 0.665 | | | | | |
| LEARQ | 0.715 | 0.657 | 0.463 | 0.526 | | | | |
| PERU | 0.564 | 0.634 | 0.588 | 0.874 | 0.495 | | | |
| SYSTQ | 0.856 | 0.722 | 0.71 | 0.687 | 0.696 | 0.694 | | |
| UELS | 0.543 | 0.617 | 0.698 | 0.88 | 0.471 | 0.867 | 0.701 | |
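HTMT ratios are usually compared against a fixed cut-off. The text here does not state which cut-off was applied, so the sketch below treats the two values commonly cited from Henseler et al., 0.85 (conservative) and 0.90 (liberal), as assumptions. The values are copied from the matrix above; the function name is hypothetical.

```python
names = ["INFRQ", "INSRQ", "INSTQ", "LAAP", "LEARQ", "PERU", "SYSTQ", "UELS"]

# Strict lower triangle of the HTMT matrix above (no diagonal entries).
htmt_lower = [
    [],
    [0.718],
    [0.58, 0.615],
    [0.642, 0.644, 0.665],
    [0.715, 0.657, 0.463, 0.526],
    [0.564, 0.634, 0.588, 0.874, 0.495],
    [0.856, 0.722, 0.71, 0.687, 0.696, 0.694],
    [0.543, 0.617, 0.698, 0.88, 0.471, 0.867, 0.701],
]


def htmt_pairs_above(names, lower, threshold):
    """Return (row construct, column construct, ratio) for ratios above threshold."""
    return [
        (names[i], names[j], ratio)
        for i, row in enumerate(lower)
        for j, ratio in enumerate(row)
        if ratio > threshold
    ]


# Assumed cut-offs; neither is stated in the text above.
print(htmt_pairs_above(names, htmt_lower, 0.90))
print(htmt_pairs_above(names, htmt_lower, 0.85))
```

With the values as transcribed, no pair exceeds 0.90, while four pairs exceed 0.85, so the conclusion depends on which cut-off is adopted.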

| Hypothesis | Path | β Coefficient | t-Statistic | p-Value | Support |
|---|---|---|---|---|---|
| H0 1a | LEARQ > PERU | 0.043 | 0.796 | 0.427 | Supported |
| H0 1b | LEARQ > UELS | −0.017 | 0.148 | 0.882 | Supported |
| H0 2a | INSRQ > PERU | 0.250 | 3.873 | 0.000 | Not Supported |
| H0 2b | INSRQ > UELS | 0.048 | 3.060 | 0.002 | Not Supported |
| H0 3a | INFRQ > PERU | −0.134 | 1.724 | 0.085 | Supported |
| H0 3b | INFRQ > UELS | −0.099 | 2.397 | 0.017 | Not Supported |
| H0 4a | SYSTQ > PERU | 0.460 | 5.750 | 0.001 | Not Supported |
| H0 4b | SYSTQ > UELS | 0.176 | 5.247 | 0.001 | Not Supported |
| H0 5a | INSTQ > PERU | 0.157 | 2.059 | 0.040 | Not Supported |
| H0 5b | INSTQ > UELS | 0.265 | 5.202 | 0.001 | Not Supported |
| H0 6a | PERU > UELS | 0.563 | 11.267 | 0.001 | Not Supported |
| H0 6b | PERU > LAAP | 0.407 | 6.331 | 0.001 | Not Supported |
| H0 6c | UELS > LAAP | 0.479 | 7.980 | 0.001 | Not Supported |

| Hypothesis | Path | β Coefficient | t-Statistic | p-Value | Support |
|---|---|---|---|---|---|
| H0 7a | LEARQ > LAAP | 0.021 | 0.510 | 0.610 | Supported |
| H0 7b | INSRQ > LAAP | 0.192 | 3.902 | 0.000 | Not Supported |
| H0 7c | INFRQ > LAAP | −0.138 | 2.551 | 0.011 | Not Supported |
| H0 7d | SYSTQ > LAAP | 0.396 | 6.114 | 0.000 | Not Supported |
| H0 7e | INSTQ > LAAP | 0.233 | 3.880 | 0.000 | Not Supported |
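The "Support" column in the two hypothesis tables refers to the null hypothesis: it is supported when the path is not statistically significant. The sketch below reproduces that decision from the reported p-values. The 0.05 significance level is an assumption (it is not stated in the tables, though it is consistent with every row), and the two-sided normal approximation used to recover a p-value from a t-statistic is likewise an illustrative choice, not necessarily the procedure the authors used.

```python
import math

ALPHA = 0.05  # assumed significance level; not stated in the tables


def two_sided_p(t_stat: float) -> float:
    """Two-sided p-value under a standard normal approximation."""
    return math.erfc(abs(t_stat) / math.sqrt(2))


def null_supported(p_value: float, alpha: float = ALPHA) -> bool:
    """The null hypothesis is retained when p is not below alpha."""
    return p_value >= alpha


# (hypothesis, t-statistic, p-value, reported support) copied from the tables.
rows = [
    ("H0 1a", 0.796, 0.427, "Supported"),
    ("H0 1b", 0.148, 0.882, "Supported"),
    ("H0 2a", 3.873, 0.000, "Not Supported"),
    ("H0 2b", 3.060, 0.002, "Not Supported"),
    ("H0 3a", 1.724, 0.085, "Supported"),
    ("H0 3b", 2.397, 0.017, "Not Supported"),
    ("H0 4a", 5.750, 0.001, "Not Supported"),
    ("H0 4b", 5.247, 0.001, "Not Supported"),
    ("H0 5a", 2.059, 0.040, "Not Supported"),
    ("H0 5b", 5.202, 0.001, "Not Supported"),
    ("H0 6a", 11.267, 0.001, "Not Supported"),
    ("H0 6b", 6.331, 0.001, "Not Supported"),
    ("H0 6c", 7.980, 0.001, "Not Supported"),
    ("H0 7a", 0.510, 0.610, "Supported"),
    ("H0 7b", 3.902, 0.000, "Not Supported"),
    ("H0 7c", 2.551, 0.011, "Not Supported"),
    ("H0 7d", 6.114, 0.000, "Not Supported"),
    ("H0 7e", 3.880, 0.000, "Not Supported"),
]

# Rows where the recomputed decision differs from the reported one.
mismatches = [
    name for name, _t, p, support in rows
    if null_supported(p) != (support == "Supported")
]
print(mismatches)  # prints [] at the assumed 0.05 level
print(round(two_sided_p(1.724), 3))
```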

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alam, M.M.; Ahmad, N.; Naveed, Q.N.; Patel, A.; Abohashrh, M.; Khaleel, M.A. E-Learning Services to Achieve Sustainable Learning and Academic Performance: An Empirical Study. Sustainability 2021, 13, 2653. https://doi.org/10.3390/su13052653
