1. Introduction

With the predicted growth of the world population (9.8 billion people by 2050), the demand for food production is rapidly increasing [1]. To cope with such demand, it is necessary to improve the efficiency of agricultural processes, assuring safety for people and environmental sustainability [2] while reducing costs [3,4]. In addition, agricultural scientists, farmers, and growers are also facing the challenge of producing more food with less land [5].

In this context, protected cultivation systems (namely greenhouses) are a way to optimize production systems and to control resources and factors with the aim of achieving greater production in a smaller space, increasing yields, and improving quality [6,7]. Indeed, protected cultivation is an intensive production method that protects crops from unfavorable outdoor climate conditions and offers an optimal environment for crop growth and production, in terms of both quality and quantity [8].

Greenhouses generally require a large human workforce. As a matter of fact, manual labor is the largest cost factor: more than 30% of the total production costs are spent on wages for the grower and his employees [9]. In addition, the availability of a skilled workforce that accepts repetitive tasks in uncomfortable greenhouse conditions is decreasing rapidly [10,11]. Furthermore, the issue of labor shortage has become even more relevant during the current COVID-19 pandemic caused by the SARS-CoV-2 virus, which has limited international travel for migrant workers [12].

On this basis, protected agriculture lends itself well to automation. In recent years, agricultural technologies have received great attention in scientific research, and many efforts have been made for their development [13], especially focusing on the application of robots and automation to intensive crops [4,14]. In particular, the use of robotics in protected agriculture would increase overall performance and production efficiency while improving labor quality and safety [15]. In this regard, a number of authors acknowledge automation as a valuable way to face labor shortage [13,16,17], improving the efficiency of human labor or even reducing the amount of human labor [9]. Robots can easily perform repetitive tasks, replacing human labor, and, at the same time, can operate in hazardous environments, thereby strongly reducing the exposure of human operators to risks such as those arising from spraying chemicals and pesticides in protected cultivation. Thus, through the development of automation in greenhouses, it is possible to guarantee better working conditions, protect workers from physical and chemical hazards, and improve their health, comfort, and safety.

Nonetheless, despite the recognized merits and the attention received over the last few years, several limitations hinder the spread of robotics in agriculture. As a matter of fact, due to the complexity and variety of the working settings, relatively few commercial agricultural robots are currently available [7]. This is also true for greenhouse environments, where robotic solutions can be vulnerable to adverse conditions, including dust, humidity, and chemical agents [18], causing difficulties for both crop–robot and human–robot interactions.

Based on these considerations, a scoping review [19] was conducted in order to map the research conducted in recent years relating to robotic automation for greenhouse applications, with the goal of highlighting the most important aspects of such technological tools and offering a benchmark that is useful in discussions of future directions in greenhouse automation and in the design of novel agricultural robots for greenhouse applications.

2. Materials and Methods

The literature search for the scoping review took place between January and February 2021. The currently available literature concerning robots and automated solutions for greenhouse applications was reviewed by consulting international journal databases, namely Scopus, Web of Science, Springer, Taylor & Francis, and IEEE. PRISMA guidelines were followed for conducting the review [19].

A keyword-based search with the following combination of keywords was used to identify relevant articles: (robot* OR robotic tool* OR robotic application*) AND (greenhouse OR protected crop* OR protected cultivation*). Publications from peer-reviewed journals as well as from conference proceedings were included. The review included articles published in English, reporting results of studies conducted in any geographical area during the last six years (in detail, from 1 January 2015 to 31 December 2020). After removing the duplicates, the remaining articles were screened by title, abstract, and full text, in that order. To be shortlisted in the literature review, the publications had to meet the following criteria: (i) the reported study had to describe (in terms of hardware and/or software) the design and/or the development of agricultural robot prototypes and/or automated solutions (or effectors); (ii) the described automated solutions had to be designed to operate in greenhouses; and (iii) the described solution had to perform one or more tasks meant to support/substitute the human workforce. In compliance with these criteria, all studies that related only to monitoring systems for greenhouse environmental parameters, or that focused exclusively on algorithms for task performance or simulation modeling, were excluded. Moreover, redundant articles describing the same automated solution (e.g., conference papers) were also not considered.

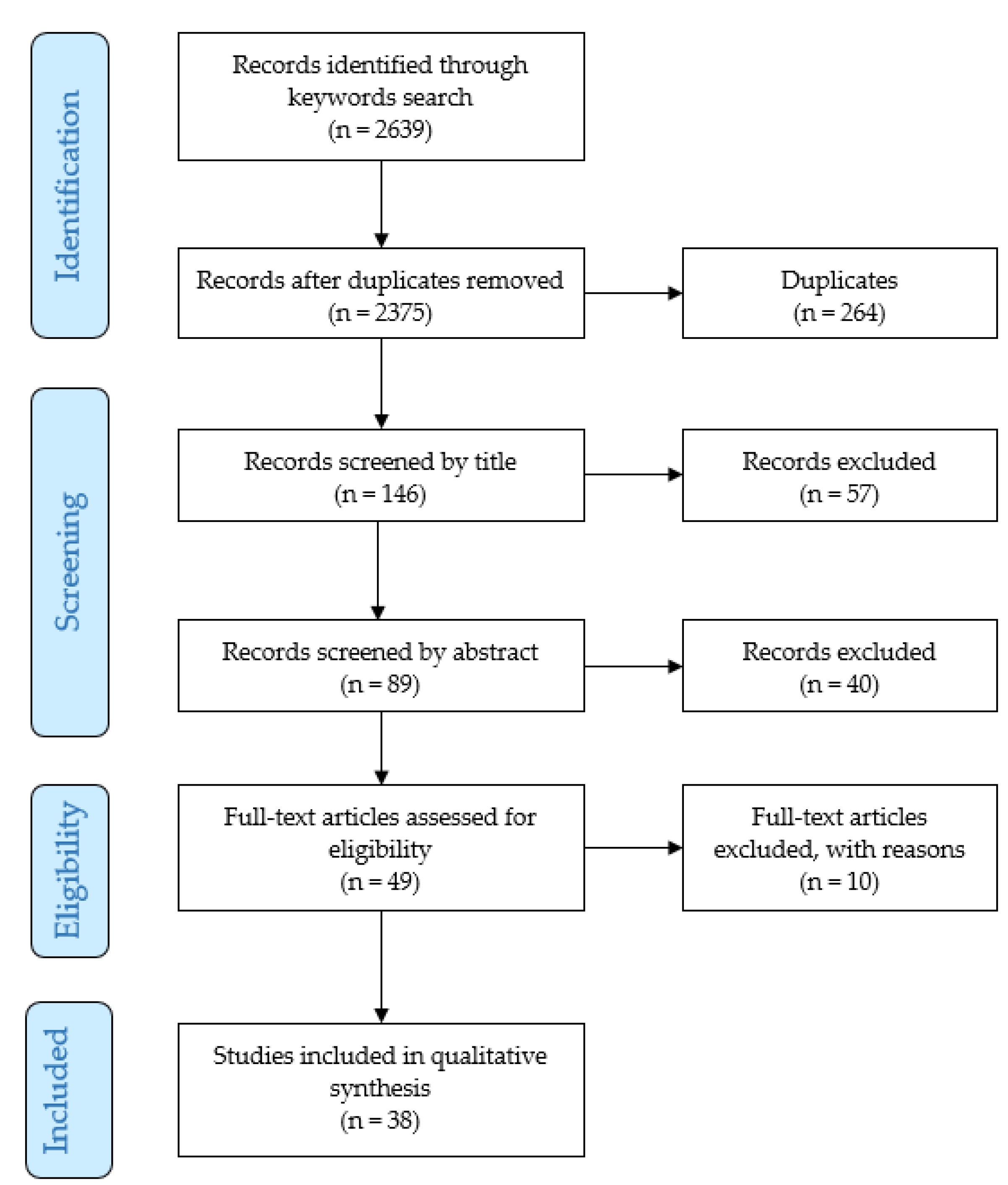

Figure 1 illustrates the selection process for the review. Two authors worked independently on the screening, and any disagreements were resolved through discussion until a consensus was reached. The first author extracted the data and the second author double-checked it.

Once the articles eligible for inclusion were selected, the findings that related to each described automated solution were summarized as follows: (i) target crops; (ii) operational tasks of the agricultural robots; (iii) development stages reached in the experimental setup, namely whether the described solution corresponded to a phase of hardware/software design, an academic study, a prototype, or a commercial product; and (iv) the type of performance tests, where applicable. In detail, based on Bechar and Vigneault [4], the operational tasks were categorized into “main task”, which usually concerns the execution of a specific agronomic practice (planting, weeding, pruning, picking, harvesting, etc.), and “supporting tasks”, which are the functional abilities of the greenhouse robots that are necessary in order to perform the “main task”, e.g., localization and navigation, detection of the object to treat, etc. Finally, based on the detected “main” and “supporting tasks”, the adopted elementary technologies (sub-systems and devices) were compared and discussed. Additional transversal information was retrieved with regard to guidance and navigation systems. Such information was classified based on a scheme proposed by Mousazadeh et al. [20] for agricultural autonomous off-road vehicles; however, not all studies covered the complete information.

Figure 1. Flow diagram of the search and selection processes for articles included in the study (adapted from Page et al. [21]).


3. Results

The database search identified 2639 articles containing the search terms. After eliminating duplicates (264), the articles were screened against the inclusion and exclusion criteria by title, abstract, and full text. A total of 38 publications were retained after screening, all of which were published between 2015 and 2020 (Figure 1).

A synthesis of the retrieved studies and adopted categorizations is presented in Table 1.

The majority of studies focus on the research and development of greenhouse robots or their components; moreover, the automated solutions described are generally ad hoc and crop specific, owing to the high specialization required to accomplish certain tasks for each plant species. It follows that the most studied greenhouse crops for robotic implementation are the sweet pepper (Capsicum annuum L.) with 8 publications (21%) [3,10,22,23,24,25,26,27], followed by 5 publications (13%) on tomato (Solanum lycopersicum L.) [14,15,28,29,30], 4 publications (11%) on strawberries (Fragaria × ananassa Duch.) [31,32,33,34], and 3 publications (8%) on cucumber (Cucumis sativus L.) [35,36,37]. Raspberry (Rubus idaeus L.), bramble (Rubus fruticosus L.), and cherry tomato (Solanum lycopersicum var. cerasiforme) are also mentioned in at least one paper [38,39,40]. The remaining 34% of publications [14,17,41,42,43,44,45,46,47,48,49,50,51,52] do not specify which crop the robot is designed for, mostly because the study presented a generic software/hardware prototype, or the robot is designed for a generic supporting task (e.g., spraying or weeding), and has thus not been adapted for a specific crop. Therefore, the low adaptability of greenhouse robots to other production systems shapes these results.
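The percentage shares quoted above can be reproduced from the publication counts. The following is a minimal sketch using only the counts reported in the text (38 included studies, 2639 records, 264 duplicates); the helper name `share` and the dictionary layout are illustrative assumptions.

```python
# Counts are taken from the text; names and structure are illustrative only.
TOTAL_INCLUDED = 38

crop_counts = {
    "sweet pepper": 8,
    "tomato": 5,
    "strawberry": 4,
    "cucumber": 3,
}


def share(count, total=TOTAL_INCLUDED):
    """Return the share of `count` in `total` as a whole-number percentage."""
    return round(100 * count / total)


# Percentage of the 38 included studies devoted to each crop.
shares = {crop: share(n) for crop, n in crop_counts.items()}

# Records left for screening once duplicates are removed.
records_after_dedup = 2639 - 264
```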

With reference to the development stages reached in the experimental setup, most studies refer to specific software/hardware redesign and development (39% of the studies), followed by prototype crafting, that is, the implementation of a first partially operational robot (26% of the studies), and by “academic robots”, which are the result of academic research and usually not commercially available (21% of the studies). Finally, 13% of the publications report test results in the operating environment and describe robots already available on the market, which are the result of private R&D.

Moreover, only 24 out of 38 studies evaluated the robot’s performance in a greenhouse environment; the remaining studies did not go beyond modeling and laboratory testing.

3.1. Summary of Findings according to Performed Tasks and Operations

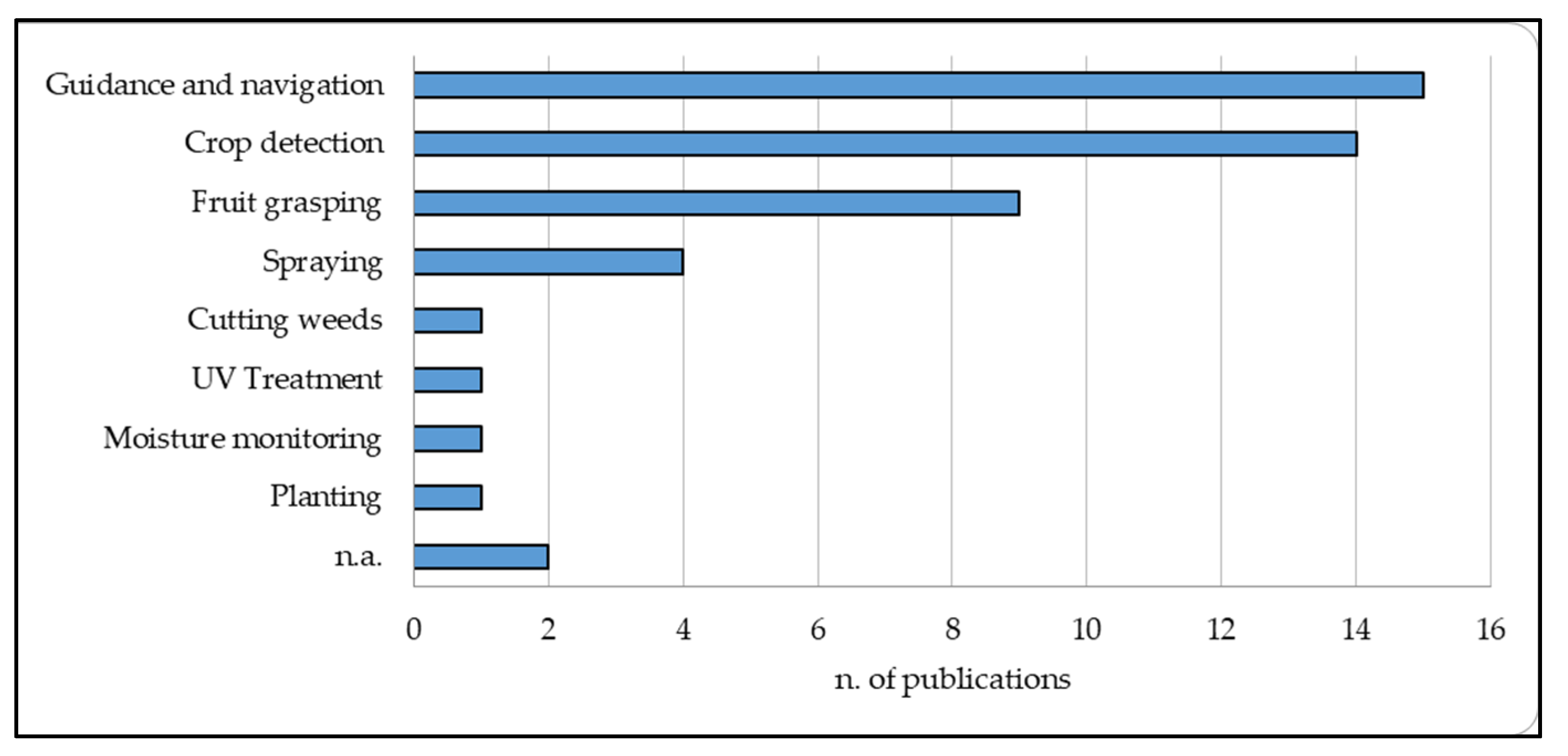

Most of the reviewed works address harvesting support, which is mentioned in almost 47% of the manuscripts, followed by plant protection and weed control, which account for 18%. Another 18% of the papers describe robots designed to carry out multiple tasks. Other tasks are mentioned less frequently: two publications show the design and development of planting robots, while one study each was retrieved for fertilizing, grafting, watering, and pollination (Figure 2).

Nevertheless, with the aim of depicting the current state in the development and technology of this type of robot, “supporting tasks”, the fundamental tasks enabling the system to perform its main task, are the key features of greenhouse robots on which research focuses first. Indeed, each “supporting task” is handled by one or more sub-systems and devices, and a single sub-system or device might perform many “supporting tasks” [4].

Most of the studies focus on various strategies for autonomous guidance through the adoption of tools and sensors. Hence, the most frequently observed supporting task is guidance and navigation, comprising 31% of studies, because this task cuts across all of the main tasks. In the present review, harvesting was the most described among the “main tasks”; it follows that the second most frequent supporting task is crop detection, followed by crop grasping (Figure 2). Specifically, 29% of the studies perform software simulation or laboratory and greenhouse tests on recognition of the crop to be harvested, while 19% address its collection. Of the publications, 8% describe systems for autonomous spraying, which is part of the “plant protection and weed control” main task. The remaining supporting tasks, such as cutting weeds, UV treatment, moisture monitoring, and planting, are represented by one study each (Figure 2).

3.1.1. Guidance and Navigation Systems

Guidance and navigation systems play a pivotal part in autonomous vehicles. Considering greenhouse robots, guidance and navigation can be the system’s main task, but in most cases, they act as supporting tasks for spraying, or for transporting a robot from plant to plant during the harvesting process.

The level of autonomy in the displacement varies: robot orientation within the greenhouse environment is managed by guided movement systems, which guarantee autonomy of movement and where, in some cases, the trajectory is already defined [6], e.g., through predefined waypoints. In other cases, the greenhouse robot is moved by a fixed navigation system, specifically guided by rails, as highlighted in some of the retrieved studies [3,10,15,22,36,37,47,48,49,53]. In particular, in Heravi et al. [37], the robot is mounted on a monorail that supports robot navigation and stopping between two cucumber rows. In other cases, the robot is able to move from the flat floor to the rails [36,48]. Likewise, the robots by Arad et al. and Bac et al. [3,10] are designed to autonomously drive on heating pipe rails to travel along the plants and on the concrete floor.

Other navigation solutions enable the mobile robot to move freely, without involving major changes to the structure of the environment. In this case, the internal and external sensors of the robot are essential to define the position and therefore the orientation of the robot [50].

Most of the reviewed studies provide only minimal detail on the navigation and guidance systems adopted for the developed greenhouse robot, while a subset of studies focuses specifically on this issue [42,46,50]. The robot navigation system differs depending on project and application, but in most cases, a suite of various components (sensors and data collection devices) is integrated to evaluate the position and heading.

GNSS Sensors

One of the basic solutions commonly used on autonomous agricultural machinery for navigation is the global navigation satellite system (GNSS). In particular, although this kind of system is more appropriate for outdoor navigation, some greenhouse robots [14,39,42,44] adopt a GPS or differential GPS (DGPS) receiver. For greater accuracy, high-precision GNSS positioning systems are adopted; the most commonly used are Real Time Kinematic (RTK) GNSS receivers [28,48,50]. The RTK technology provides centimeter accuracy thanks to the real-time position correction from a reference station or network aimed at reducing errors [54].

Machine Vision

Vision-based techniques are key components to obtain essential data about the robot motion and its environment. For these kinds of navigation systems, the input data are images, such as a photograph or a video frame; these are subjected to processing, which returns a set of characteristics or parameters relating to the images and containing significant information [20]. These techniques are usually based on the implementation of algorithms to perform image processing, the computation of the steering angle, the generation of steering parameters and the decision index, the selection of the driving algorithm from the set, and the transmission of the control word to the control board for actuation [17]. In Masuzawa et al. [13], with the aim of developing a mobile robot able to support flower harvesting, a simultaneous localization and mapping (SLAM) algorithm is used to map the environment, while an RGB-D camera is installed not only for measuring the distance to objects, but also to allow for person-following capability. Indeed, this study uses the movement of a person as a guide to safe path planning: the robot tries to trace the person’s trajectory by combining image-based person detection and tracking with trajectory generation for the following movements.

Optical Sensors

In addition to vision-based sensors, image processing can also be performed by optical sensor systems.

One of the most widespread optical remote sensing technologies, used for robot localization, mapping, and obstacle avoidance, is the Light Detection And Ranging (LIDAR) technology, which can measure distance by illuminating the target with light, often using pulses from a laser.

The distance between the target object and the robot is calculated using a laser emitter and a receiver that detects the reflected laser light. This makes it possible to determine the target’s profile and coordinate position, thus providing crucial information for autonomous navigation [29].

The Thorvald II platform [36,48] employs LIDAR for navigation in combination with a map and navigates through pre-recorded waypoints on the map. Similarly, in Harik and Korsaeth [50], predefined waypoint navigation is obtained by employing a single LIDAR sensor attached to the robot’s front side and adopting the open source Hector SLAM algorithm for pose estimation. The autonomous navigation of the robot is ensured by the artificial potential field (APF) controller, a local-path method for obstacle avoidance.
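To illustrate the general idea behind an artificial potential field controller, the following is a minimal textbook-style sketch, not the controller of Harik and Korsaeth [50]. It assumes a 2D point robot whose pose is already known, point obstacles, and hypothetical gains (`k_att`, `k_rep`), influence radius (`d0`), and step size; all function names are illustrative.

```python
import math


def apf_step(pos, goal, obstacles, k_att=1.0, k_rep=0.5, d0=1.0, step=0.05):
    """Advance one fixed-length step along the combined APF force direction."""
    # Attractive force pulls the robot linearly toward the goal (waypoint).
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        if 0.0 < d < d0:
            # Repulsive force acts only within radius d0 and grows near the obstacle.
            mag = k_rep * (1.0 / d - 1.0 / d0) / d**2
            fx += mag * dx / d
            fy += mag * dy / d
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # forces cancel exactly: a local minimum of the potential
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)


def follow(start, goal, obstacles, tol=0.1, max_steps=2000):
    """Iterate apf_step until the goal is within `tol` or `max_steps` is reached."""
    pos = start
    path = [pos]
    for _ in range(max_steps):
        if math.hypot(goal[0] - pos[0], goal[1] - pos[1]) < tol:
            break
        pos = apf_step(pos, goal, obstacles)
        path.append(pos)
    return path


# Hypothetical scenario: one obstacle slightly off the straight line to the waypoint.
path = follow((0.0, 0.0), (3.0, 0.0), [(1.5, 0.4)])
```

A known limitation of this family of methods is that the robot can stall in a local minimum where the attractive and repulsive forces cancel, which is why such controllers are usually treated as local planners.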

Among others, the “BrambleBee” robot described by Ohi et al. [39] is equipped with a suite of sensors, including 3D LIDAR, multiple cameras, wheel encoders, and an inertial measurement unit (IMU). Likewise, in Xiong et al. [34], encoder-based velocity estimates are used together with data from the 2D LIDAR to create a map using the GMapping simultaneous localization and mapping (SLAM) technique. The robot is teleoperated throughout this procedure. The robot stores and uses the resultant map during autonomous operation. For localization in the greenhouse or tunnel, the robot uses the map, LIDAR data, and encoder-based odometry.

Furthermore, the tomato harvesting robot developed by Lili et al. [29] moves along a predetermined path through a navigation system with automatic steering, complemented by a laser navigation control system, in which a laser scanner is used to adjust the parameters that guide the movement of the robot along the navigation path. In addition, Xue et al. [44] equipped their multipurpose robot with a sensing unit that included a laser radar, and in Yamashita et al. [45], a 2D laser range finder measured the distance by the time of flight (ToF) method, in which the distance is calculated by measuring the time the emitted light takes to travel to the target object and back. In addition, a depth camera uses the active infrared stereo technique to measure the projected IR laser pattern from the IR laser projector, using left and right IR cameras, and then calculates the distance using stereo vision.
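The time-of-flight principle reduces to a one-line relation, d = c·t/2, where t is the round-trip time of the light pulse and c is the speed of light. A minimal sketch follows; the function name is hypothetical, and real range finders also correct for electronics delays, which are ignored here.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second (exact SI value)


def tof_distance(round_trip_time_s):
    """Distance in metres from the round-trip time of a light pulse.

    The pulse travels to the target and back, hence the division by two.
    """
    return SPEED_OF_LIGHT * round_trip_time_s / 2.0


# A 20 ns round trip corresponds to a target roughly 3 m away.
d = tof_distance(20e-9)
```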

Inertial and Electro-Mechanical Sensors

The inertial measurement unit (IMU) is a further orientation solution, often supporting the GNSS sensors; it usually comprises accelerometers (measuring linear accelerations) and gyroscopes (measuring angular velocity), and is often combined with other complementary electro-mechanical devices, such as wheel encoders and electronic compasses [33,36,39,44,48].

In Harik and Korsaeth [50], the robot’s location was determined using the reflectance of the light emitted from a total station with a fixed, known position within the greenhouse to a prism installed on the robot, while the orientation was determined using an on-board IMU.

In particular, Chang et al. [46], with the aim of implementing a low-cost planting robot with the capability of high-precision straight-line navigation, propose a two-stage guidance control technique to manage the robot’s heading angle and reduce the lateral error. The suggested technique is implemented using a low-cost micro-electro-mechanical control system. To act as a guide, the control approach requires a single-axis electronic compass with a stabilizing algorithm to maintain the compass level. The pitch angle and roll angle, which are obtained using an accelerometer and a gyroscope, are then used to determine the geomagnetic intensities along the X and Y axes.
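The use of pitch and roll to recover the horizontal geomagnetic components can be illustrated with the generic tilt-compensation formula for an electronic compass. This is a sketch under stated assumptions, not the algorithm of Chang et al. [46]: it assumes a three-axis magnetometer reading (`bx`, `by`, `bz`) in a body frame with X forward, Y right, and Z down, and angles in radians; the function name is hypothetical.

```python
import math


def tilt_compensated_heading(bx, by, bz, roll, pitch):
    """Magnetic heading in radians, in (-pi, pi], with 0 = north and east positive.

    The measured field is de-rotated by roll and pitch so that only its
    horizontal components (xh, yh) are used to compute the heading.
    """
    xh = (bx * math.cos(pitch)
          + by * math.sin(pitch) * math.sin(roll)
          + bz * math.sin(pitch) * math.cos(roll))
    yh = by * math.cos(roll) - bz * math.sin(roll)
    return math.atan2(-yh, xh)


# Hypothetical field: 0.2 horizontal (north) and 0.4 vertical (down), arbitrary units.
level_north = tilt_compensated_heading(0.2, 0.0, 0.4, 0.0, 0.0)
level_east = tilt_compensated_heading(0.0, -0.2, 0.4, 0.0, 0.0)

# Same northward heading, but with the sensor rolled by 0.3 rad.
rolled_north = tilt_compensated_heading(
    0.2, 0.4 * math.sin(0.3), 0.4 * math.cos(0.3), 0.3, 0.0
)
```

Without the de-rotation step, the rolled reading would yield a non-zero heading error even though the robot still points north.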

Electromagnetic and Ultrasonic Sensors

In the strawberry picking robot described by De Preter et al. [33], a state estimator system was generated, combining a gyroscope and wheel encoders with an ultra-wideband (UWB) indoor positioning system. UWB technology allows for relative localization without visual contact and avoids the limitations of GPS when moving near high vegetation. The robot is equipped with a beacon that emits electromagnetic waves, which are received by stationary beacons (or anchors) placed at the boundaries and corners of the greenhouse, establishing the vehicle’s relative position by measuring the time-of-flight (ToF) of the waves themselves. The state estimator generates and tracks the vehicle’s pose with centimeter precision. Ultrasonic sensors are also installed on the robots described by Cantelli et al. and Xue et al. [28,44], and on the spraying robot realized by Mosalanejad et al. [42], where the navigation system is evaluated. Six ultrasonic sensors collect information about locations inside the aisles, while two ultrasonic sensors at the top of the robot and two micro switches at the bottom are utilized to identify any obstructions in the robot’s route.
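Positioning from anchor time-of-flight measurements can be sketched as a 2D trilateration problem. The example below is a generic linearized least-squares solution, not the state estimator of De Preter et al. [33]; it assumes one-way ToF values, noise-free measurements, and a hypothetical 30 m × 20 m greenhouse with anchors at its corners.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def trilaterate(anchors, tofs):
    """Estimate (x, y) from anchor positions and one-way times of flight.

    Subtracting the first range equation from the others gives a linear
    system in x and y, solved here through its 2x2 normal equations.
    """
    ranges = [SPEED_OF_LIGHT * t for t in tofs]
    (x0, y0), r0 = anchors[0], ranges[0]
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        rhs = r0**2 - ri**2 + xi**2 + yi**2 - x0**2 - y0**2
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * rhs
        b2 += ay * rhs
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is not observable")
    return ((a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det)


# Hypothetical anchors at the greenhouse corners and a robot at (12, 7).
anchors = [(0.0, 0.0), (30.0, 0.0), (0.0, 20.0), (30.0, 20.0)]
true_pos = (12.0, 7.0)
tofs = [math.hypot(true_pos[0] - ax, true_pos[1] - ay) / SPEED_OF_LIGHT
        for ax, ay in anchors]
estimate = trilaterate(anchors, tofs)
```

In practice the ranges are noisy, so such a position fix is usually fused with odometry and gyroscope data, as the state estimator described above does.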

The robot studied by Grimstad et al. [36,48] has an array of down-facing ultrasonic range finders in front of and between the driving wheels. These sensors identify whether the robot is traveling on a level floor or on rails, as well as whether it is driving over an edge. Finally, in Huang et al. [51], who aimed at developing and evaluating the accuracy of orientation measurement, the orientation system is based on sound signals, namely the Spread Spectrum Sound (SSSound) technology, which is able to determine the position within a 30 m × 30 m area to an accuracy of 20 mm.

3.1.2. Crop Detection Systems

“Fruit or vegetable detection” is a crucial supporting task, which is necessary for identifying the final target of the main task to accomplish. It is carried out by the robot through the use of various visual sensors and precedes the final task, such as fruit collection or the targeted application of fertilizer. One of the most adopted solutions identified in the literature is the RGB-D camera, which is generally combined with other sensors.

The RGB-D camera [10,26,34,39] combines a depth-sensing device and an RGB camera to return a conventional image with depth information, and it is often associated with an illumination system to create optimal conditions for image acquisition. In the literature, RGB-D cameras are adopted to obtain the three-dimensional location of the fruit and, at the same time, to carry out a shape- and color-based segmentation through a specifically trained algorithm, with the aim of harvesting the fruit. Image processing based on a color threshold is also important to discriminate ripe fruit. The camera is usually placed on the end-effector in order to change the perspective and to reach a short-range position relative to the target fruit.
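Color-threshold discrimination of ripe fruit can be illustrated with a simple hue test in HSV space. The sketch below is not taken from any of the reviewed studies: the thresholds, the assumption that ripe fruit is red, and the function names are all illustrative, and it works on a plain list of 8-bit RGB tuples rather than on a real camera frame.

```python
import colorsys


def is_ripe_pixel(r, g, b, hue_low=0.05, hue_high=0.95, sat_min=0.5, val_min=0.3):
    """Return True if an 8-bit RGB pixel falls in a (hypothetical) ripe-red range."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    # Red hues wrap around 0 on the hue circle, so both ends are accepted.
    red_hue = h <= hue_low or h >= hue_high
    return red_hue and s >= sat_min and v >= val_min


def ripe_fraction(pixels):
    """Fraction of pixels classified as ripe in an iterable of (r, g, b) tuples."""
    pixels = list(pixels)
    if not pixels:
        return 0.0
    return sum(is_ripe_pixel(*p) for p in pixels) / len(pixels)


# Two reddish pixels, one green leaf pixel, one grey background pixel.
sample = [(200, 20, 20), (40, 160, 40), (210, 30, 25), (90, 90, 90)]
fraction = ripe_fraction(sample)
```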

In the study conducted by Arad et al. [10], a technique based on RGB-D images was developed to estimate the angle at which the fruit was positioned around the stem, in order to locate the stem relative to the fruit.

Ohi et al. [39] have employed this technology to develop a robot able to perform the fully autonomous precision pollination of bramble plants, operating in a greenhouse environment. This vision technique is utilized to detect the position of flowers for pollination, and an RGB-D camera is installed on the robotic arm for precise, short-range positioning.

Some studies [3,22] have installed a ToF sensor and an RGB camera separately; however, these systems have identical applications, the only difference being the use of paired sensors instead of an all-in-one product.

In some cases, fruit recognition relies on multiple RGB cameras [23,25,29,33,52,53], usually two or three, mounted at different positions and viewing angles, in order to obtain a stereo vision system to measure the distance to the target. As in the previous case, an image processing algorithm is implemented to recognize ripe and ready-to-harvest fruit based on color and shape.
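For a calibrated and rectified pair of cameras, the distance to a target follows from the standard pinhole stereo relation Z = f·B/d, where f is the focal length in pixels, B the baseline between the cameras, and d the disparity of the target between the two images. The sketch below uses hypothetical camera parameters and a hypothetical function name; it does not reproduce the setup of any reviewed study.

```python
def stereo_depth(focal_px, baseline_m, disparity_px):
    """Depth in metres of a point seen by a rectified stereo pair.

    focal_px:     focal length expressed in pixels
    baseline_m:   distance between the two camera centres, in metres
    disparity_px: horizontal shift of the point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 800 px focal length, 6 cm baseline, 40 px disparity.
depth = stereo_depth(800.0, 0.06, 40.0)
```

Because depth is inversely proportional to disparity, range resolution degrades quickly with distance, which is consistent with mounting cameras close to the fruit.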

3.1.3. Fruit Grasping Systems

In the study proposed by Hemming et al. [22], two end effectors for sweet pepper harvesting were developed and compared. The first is the so-called “Fin Ray” end effector, with four elastic fingers that adapt to the curvature of the fruit, thus assuring the grip, after which the peduncle is cut by a scissor-like cutting mechanism on top of the fingers. The second type is the lip-type end effector, consisting of a suction cup to grasp the fruit, a vacuum sensor to ensure it has been grasped, and two rings, which enclose the peduncle and cut it with a circular blade integrated in the upper lip. The most significant disadvantage of the Fin Ray end effector compared with the lip type was the substantial stem damage of 4–13%, as documented by Bac et al. [3].

Another example of a “finger-type” end effector is reported by De Preter et al. [33], who describe the development of a strawberry-harvesting robot. The gripper consists of soft-touch fingers, which are able to grasp the strawberry without the stem by applying a rotational motion during the grasping, aiming to reduce possible product damage.

Xiong et al. [34] have developed a strawberry harvesting system consisting of arms mounted on a horizontal track, on which finger grippers are installed. The grippers are equipped with IR sensors to detect the distance between the obstacle and the sensor, and are capable of overcoming obstacles to reach the ripe fruit to be harvested.

Lehnert et al. [26] presented a new sweet pepper harvester, which was characterized by a suction cup as a gripper end effector and an oscillating blade to cut the peduncle above. The sweet pepper is gripped by a vacuum gripper, with the vacuum generated by a pump, and the peduncle is then cut by the oscillating blade.

A different fruit picking system is presented by Arad et al. [10], who have designed a new end effector composed of an RGB-D camera with LED lighting fixtures and, on the top of the housing, a plant stem fixation mechanism with a vibrating knife designed to cut the peduncle. The end effector finishes with a catching device composed of various spring-loaded fingers. In comparison to the others, this end-effector does not have grip devices but is instead designed to approach the stem above the fruit, cut the peduncle, and catch the detached fruit.

3.1.4. Spraying Systems

In Cantelli et al. [28], the spraying system is based on a basic spraying module that has been made “smart” by add-on technologies that allow for system automation. A hydraulic subsystem and an electronic control unit constitute the sprayer unit. The hydraulic system is made of a 130 L tank, an electrically actuated pump with a 1 kW DC motor with a gearbox, a manual pressure regulator, an electric flux regulator valve, a pressure sensor, a flow rate meter, and two electric on/off valves. On the left and right sides of the sprayer, two vertical stainless-steel bars may be controlled independently from one another. The electronic control unit enables the automatic control of the system. Gao et al. [47] have developed a prototype of a robot sprayer, which is able to target and vary the spray angle based on the height of the crops, canopy shape, thickness, and plant density. The automatic sprayer system works with a series of magnetic sensors installed on the tracks, which directly face the canopy ridge.

Mosalanejad et al. [42] describe their robot sprayer prototype as a system that requires several inputs; therefore, the robot’s correct operation is largely dependent on the effectiveness of the various sensors used, which include a combination of infrared, ultrasonic, and level sensors.

Rincòn et al. [30] have developed a remote-controlled self-propelled electric sprayer, of which four different configurations were tested in a greenhouse tomato crop to evaluate the efficiency of the treatment applications. In detail, they have demonstrated that robot spray application provided better penetration than a traditional greenhouse hand-held sprayer, and future research is intended to optimize the air assistance system and the other features of the robot in order to improve performance and the amount of plant protection product that reaches the target.

3.1.5. Other Supporting Tasks

Weeding

Heravi et al. [37] designed a prototype of a robotic weed control system for exclusive application in protected cultivation (more specifically tailored to cucumber cultivation), which is characterized by a robotic platform supported by a monorail navigation system. The platform consists of a mechanical arms system equipped with ultrasonic sensors, which sense the presence of weeds between the cucumber plants, causing a blade on the mechanical arm to move, thus cutting the detected weeds between the crop plants.

UV Treatment

Grimstad et al. [48] presented the patented Thorvald II robot, a robotic system consisting of multiple modules that may be connected in a variety of ways to provide a wide range of robot designs able to accomplish various tasks, with different crops and configurations depending on the work environment. In a later publication [36], they proposed an original application of UV treatment in cucumber to prevent powdery mildew from establishing on the plants. Lights are set in arcs on either side of the robot, which adjust to the right height above the plants, depending on feedback from an array of ultrasonic sensors.

Moisture Monitoring

Al-beeshi et al. [41] designed a self-propelled robot, which is able to analyze soil moisture, and to monitor and adjust the water pump’s condition in order to activate the soil watering function.

Planting

A multi-task robotic work cell for transplanting greenhouse seedlings has been developed by Han et al. [49]. The work cell mainly consists of two conveyors, a filling unit, a control system, and a transplanting system, which is made up of multi-grippers designed to automatically pick up and plant whole rows of seedlings.

Chang et al. [46] designed and implemented a low-cost planting robot that can navigate in straight lines with great precision. The robot is equipped with a drilling mechanism that can excavate to a depth of at least 30 cm, which is supported by an ultrasonic sensor used to detect the drilling depth.

4. Discussion

The present literature review portrays some of the key issues related to greenhouse robots, focusing on the technical developments achieved. Moreover, this study highlights some of the problems that still need to be solved and offers the opportunity to discuss future perspectives. The first noteworthy aspect is the high specialization characterizing some of the developed greenhouse robots. Most of the investigated technologies are generally related to crop localization and crop detachment [26]. Working mechanisms and conditions can be very varied, even within the same species and cultivar, and may require adjustments or replacement of components [33]. This may be a limiting factor, because target crops often require a specific design of tools. To overcome this issue, some retrieved studies have developed multi-purpose robots, consisting of modular platforms that can be adapted to perform a wide range of tasks simply by changing the end-effector. As predicted years ago by Belforte et al. [2], the robot’s adaptability in performing a variety of agricultural tasks is a key feature to improve the competitiveness of the greenhouse cultivation sector.

The development stage reached in the experimental setup can be considered as an indicator of the progress of research in the greenhouse robot sector. The majority of collected studies report simulations, experiments, preliminary results, and specifications related to lab-scale prototypes or hardware/software design, thus underlining how challenging the development of autonomous solutions able to support or replace human labor is. Only a minority of studies dealt with commercial solutions. This is a well-known issue pointed out by earlier studies, which remarked that only a few automated solutions have been adopted and commercialized [

4,

55]. Bergerman [

56] already observed how difficult it is for robotic systems in protected cultivation to become commercially available, due to the complexity of the operation. In detail, Van Henten [

8] remarked that most of the solutions already on the market consist of automated systems, which are inflexible to variations in the working conditions, contain a small number of sensors, are tailored for relatively simple phases, and focus on well-defined objects in terms of location, size, shape, and color. On the other hand, as remarked by Stentz [

57] and Bechar and Vigneault [

4], partial autonomy can add value to the machine long before full autonomy is achieved.

With respect to the automated solutions for application in greenhouses, most of the literature retrieved in the present review focuses on harvesting automation and pays particular attention to “supporting tasks” such as guidance problems, vision-based control, advanced image processing techniques, and gripper design, in line with the studies conducted by Ramin Shamshiri et al. [

5] and Belforte et al. [

2].

In detail, automatic guidance and navigation proved to be one of the main issues. In this regard, a substantial distinction may be made between completely autonomous vehicles and vehicles characterized by a fixed navigation system, in which the trajectory is already defined and the robot navigates through a list of assigned waypoints or is guided by a structure such as rails. On the one hand, guided displacement systems have the advantage of avoiding concerns about the orientation of the robot within the greenhouse, allowing designers to focus on the decision-making system rather than on localization in space [

6]. The traditional methods for orientation determination, such as GNSS navigation systems, may encounter difficulties in greenhouse environments. In particular, these methods have limited application due to the errors associated with indoor GPS compasses and to the sensitivity of magnetic direction sensors to the metallic materials in greenhouses [

51]. Post et al. [

58] estimated that in indoor environments, GPS compass measurements can have errors of more than 10°. To address such problems, many manufacturers adopt multi-frequency RTK receivers for greater accuracy, which provide more “immunity” to the temporary interruption of GNSS signals caused by interference or by site-specific effects [

54]. However, the rail-based solution also presents some drawbacks: first, a railed system implies additional costs to modify the typical greenhouse structure [

50]. Moreover, rails cannot handle path discontinuities when moving from one row to another and cannot be used for tight turns. Another navigation system for agricultural autonomous off-road vehicles has been described by Mousazadeh et al. [

20] in their study, where they affirmed that the machine vision-based navigation system performed well at all speeds and on various pathways, with average errors of less than 3 cm. The same authors also found that at speeds of up to 3.1 m/s, the LADAR-based navigation performed better on straight and curved courses. Hence, as observed in a number of the retrieved studies, using both machine vision and laser radar may provide more robust guidance, as well as obstacle detection capability [

59]. Both methods have advantages and disadvantages; laser sensors are “active sensors”, providing reliable and precise information about the surrounding area, and they also work with the varying lighting conditions inside a greenhouse [

50]. However, some basic constraints should be acknowledged, such as a single degree of freedom, larger volume, and higher energy usage. Comparatively, vision systems have the capability to handle larger amounts of data, have lower energy consumption, a smaller size, and higher resolution [

20].
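One simple way to picture how two guidance sensors can complement each other is inverse-variance weighting of their lateral-offset estimates. This is a generic fusion technique offered as an illustration, not the method used in the cited studies; the function name and all numeric values are hypothetical.

```python
def fuse_offsets(vision_m, vision_var, laser_m, laser_var):
    """Fuse two lateral-offset estimates (metres) by inverse-variance weighting.

    Pass None as an estimate when that sensor has no valid reading,
    e.g. a camera blinded by glare. Returns (fused_offset, fused_variance).
    """
    pairs = [(vision_m, vision_var), (laser_m, laser_var)]
    estimates = [(m, v) for m, v in pairs if m is not None]
    if not estimates:
        raise ValueError("no sensor estimate available")
    weights = [1.0 / v for _, v in estimates]  # more certain = heavier weight
    total = sum(weights)
    fused = sum(w * m for w, (m, _) in zip(weights, estimates)) / total
    return fused, 1.0 / total


# Hypothetical readings: the laser is assumed four times less noisy here
offset, variance = fuse_offsets(0.10, 0.04, 0.06, 0.01)
print(round(offset, 3), round(variance, 3))
```

The fused variance is never larger than the smaller of the two input variances, and the function degrades gracefully to a single sensor when the other drops out.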

Crop detection was the second most described supporting task in the literature review. In this context, vision-based systems are pivotal for harvesting robots. Crop detection can be quite challenging: the term “automatic fruit recognition” refers to the process of detecting and locating fruit in a complex natural scene [

60]. When color differences are more pronounced, fruit detection is typically simple. However, when the fruits to be collected have a color very similar to the green background, visual sensors should be able to discriminate the green fruits from the green background based on morphological characteristics [

56,

61].
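The easy case, in which fruit color differs clearly from the foliage, can be sketched with a per-pixel rule on a tiny RGB image. The function names and the margin of 40 are hypothetical, and the rule is deliberately naive: it would fail for green fruit on a green background, which is exactly where shape-based cues become necessary.

```python
def ripe_mask(image, margin=40):
    """Mark pixels where red exceeds green by more than `margin`.

    image: list of rows, each row a list of (r, g, b) tuples in 0..255.
    The margin is an illustrative assumption, not a calibrated value.
    """
    return [[(r - g) > margin for (r, g, b) in row] for row in image]


def mask_centroid(mask):
    """Return the (row, col) centroid of True pixels, or None if empty."""
    hits = [(i, j) for i, row in enumerate(mask) for j, v in enumerate(row) if v]
    if not hits:
        return None
    n = len(hits)
    return (sum(i for i, _ in hits) / n, sum(j for _, j in hits) / n)


# 2 x 2 toy image: two reddish "fruit" pixels, two green "leaf" pixels
image = [[(200, 40, 30), (60, 120, 50)],
         [(70, 110, 40), (180, 60, 40)]]
mask = ripe_mask(image)
print(mask, mask_centroid(mask))
```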

Within the retrieved studies, the most adopted vision-based solution is the RGB-D camera, generally combined with other sensors, which can be used according to a monocular or stereovision scheme. These two schemes differ less in the number of installed cameras than in the principle of crop identification. Indeed, there may be vision systems with several cameras forming a redundancy of monocular systems. In their review, Zhao et al. [

60] analyzed the applications, principles, advantages, and limitations of various vision schemes for harvesting robots. As a matter of fact, in both the monocular and binocular stereo-vision schemes, the target crop is identified by color, shape, and texture features, while the binocular scheme makes it possible to obtain, through triangulation, the three-dimensional map and the position of the target crop. Thus, even though a monocular system is the simplest and comes with the lowest cost, the binocular stereo-vision scheme is the most common approach to obtain the 3D position of the detected crop. Moreover, the major disadvantage of the monocular schemes is that images captured by the visual sensor are sensitive to illumination conditions [

60]. On the other hand, the disadvantage of the stereo-vision scheme is its complexity and long computation time due to stereo matching [

62].
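The triangulation step mentioned above can be illustrated with the standard pinhole relation for a rectified stereo pair, in which depth equals focal length times baseline divided by disparity. The sketch below assumes an ideal rectified rig and an already matched pixel pair; the function name and the camera parameters are hypothetical.

```python
def triangulate(u_left, v_left, u_right, focal_px, baseline_m, cx, cy):
    """Recover the 3D position (metres, left-camera frame) of a matched point.

    Assumes a rectified stereo pair, so the match lies on the same image row.
    focal_px is the focal length in pixels; (cx, cy) is the principal point.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the rig")
    z = focal_px * baseline_m / disparity  # depth from similar triangles
    x = (u_left - cx) * z / focal_px       # lateral position
    y = (v_left - cy) * z / focal_px       # vertical position
    return x, y, z


# Hypothetical rig: 800 px focal length, 10 cm baseline, 1280 x 720 image
print(triangulate(700, 400, 660, focal_px=800, baseline_m=0.1, cx=640, cy=360))
```

Because depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant fruit than for nearby fruit, which is one reason the stereo matching step dominates both accuracy and computation time.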

After crop detection, fruit grasping and manipulation are also quite challenging. Grasping and detaching the fruits requires an effective gripping and cutting system that prevents physical damage, which can reduce their economic value [

56]. Among the reviewed studies, a number of different types of end effectors were found, working with different approaches; in this regard, diverging viewpoints on end-effector designs are available in the literature. For instance, Bachche [

61] advocated for the development of a multi-tasking and multi-sensory end-effector, which is able to perform several operations in a sequential loop and to grasp the peduncle of the fruit or fruit cluster instead of grasping the fruits directly. This type of gripping system, which aims to avoid fruit damage, would replace systems developed to grasp only the fruit or fruit cluster. At the same time, Bac et al., 2017, though recognizing the positive effects of grasping the fruit’s peduncle, pointed out that occlusions by foliage and fruits often complicate detecting the gripping point and reaching the peduncle. Moreover, peduncle length varies among crops and can influence cutting success. Hence, one of the solutions envisaged is to find or breed cultivars that are suitable for robotic harvesting. However, as observed by Liu et al. [

63], to date, the majority of research studies have focused on the development of autonomous multi-sensory end-effector spherical fruit harvesting robots.

4.1. Challenges and Future Research

Based on the review and synthesis of the retrieved literature, some key gaps and opportunities for future research were identified.

Summarizing from the previous discussions of the results, at present, the main challenge is imposed by those technologies that enable automated solutions in the unstructured, varying, and complex settings characterizing greenhouses. In particular, most of the literature accessed in the present review addressed the development of algorithms and technologies related to guidance, vision-based systems, and advanced image processing techniques, specifically aimed at crop detection and gripping.

Furthermore, some additional cross-cutting issues, often mentioned within the retrieved studies, could represent a challenge for future research in greenhouse automation.

As asserted by Bechar and Vigneault [

4], the implementation of robotics technology in agriculture may be sustained by a number of conditions. In detail, automation is desirable when the use of agricultural robots: (i) costs less than any competing alternative; (ii) enables increasing farm production capability, profit, and survivability under competitive market conditions; (iii) improves the quality and uniformity of the product; (iv) minimizes the uncertainty and variance in production processes; (v) enables the farmer to make decisions and act at higher resolution; and (vi) makes it possible to perform specific tasks that are defined as hazardous or that cannot be performed manually.

Likewise, based on these statements and on the concerns that emerged from the reviewed studies, the main issues that could hamper the development of greenhouse robots can be summarized as: (i) economic issues, (ii) performance issues, and (iii) human–robot interaction and safety issues. Future directions of research should address these aspects.

As reported by a number of authors [

53,

56], high costs are the bottlenecks for the commercial viability of greenhouse robots. In particular, one of the main concerns related to greenhouse robots is making a feasible business case also for crops with smaller market size [

8]. As a matter of fact, there is a risk for robots in protected cultivation to be designed as complex and costly systems that are appealing only when used on high-value crops. Furthermore, as already mentioned, most available automated solutions are able to perform only a single specific agricultural task and are employed only a few times per year due to the seasonality of most agricultural productions. This problem raises expenses that cannot be offset by sharing the equipment with other farmers because they normally work on the same schedule and need the machine at the same time [

2].

Together with high costs, another obstacle to the commercial application of greenhouse robots is low work efficiency. This is particularly true for harvesting robots: most of the prototypes carrying out harvest tasks have low operational efficiency because fruit detection, grasping, and collection require long processing and execution times. In general, full autonomy for an agricultural robot is difficult to reach [

4], and robot efficiency can only be implemented and demonstrated via numerous field trials, which are time-consuming and costly [

56]. Some studies already analyzed the work efficiency of robots compared to that of a human workforce, as well as the relative economic analyses of robot usage [

3,

12,

27]. In detail, although Woo et al. [

12] recorded an average hourly production for the robot about five times lower than that of skilled workers, they found the robot to be more productive overall, because it could work around the clock. Nonetheless, in order to increase greenhouse robots’ performance, Zhao et al. 2016 [

53] suggested combining human workers and robots in synergy, e.g., by distributing tasks between human and machine or promoting collaboration between the human operator and the robot. To support this, Bergerman et al. [

56] underlined that human–robot interaction might be an interesting and cost-effective intermediate step, allowing for human guidance and supervision only when the machine needs assistance. Such a human–robot collaboration system could make data collection easier in real working settings, and could lead to the learning and improvement of algorithms, thus paving the path for completely autonomous operations in the future.
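The idea of requesting human help only when the machine needs assistance can be sketched as a confidence-based task split. The rule below is an illustration, not a scheme taken from the cited works: the function name, the detection tuples, and the 0.8 threshold are hypothetical.

```python
def assign_tasks(detections, min_confidence=0.8):
    """Split detected fruits between the robot and a human operator.

    detections: iterable of (fruit_id, confidence) pairs, confidence in 0..1.
    Fruits detected with confidence below the threshold are referred to
    the operator; the threshold is an illustrative assumption.
    """
    robot, human = [], []
    for fruit_id, confidence in detections:
        (robot if confidence >= min_confidence else human).append(fruit_id)
    return robot, human


# Hypothetical detections from one plant
robot_queue, human_queue = assign_tasks([("f1", 0.95), ("f2", 0.55), ("f3", 0.80)])
print(robot_queue, human_queue)
```

The cases referred to the operator are also natural candidates for labeled training data, in line with the point above that collaboration can ease data collection in real working settings.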

4.2. Limitations of the Study

Although this study was rigorously performed, some limitations concerning the literature sampling criteria and analysis should be acknowledged.

First, due to restrictions in the keywords adopted for the search, the database utilized, and the time period selected, the final list of retrieved studies may not be exhaustive.

Finally, this review offers a global overview of different automated solutions for greenhouses, providing a general description of the main technological tools adopted to perform the most important tasks and supporting actions without focusing on a specific operation or technology. Future research should attempt to address these limitations and continue to examine the literature and studies addressing a single specific agricultural task and/or technology in order to provide more in-depth technical details.