Learning Self-Regulation Questionnaire (SRQ-L): Psychometric and Measurement Invariance Evidence in Peruvian Undergraduate Students

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Instrument

2.3. Procedure

3. Results

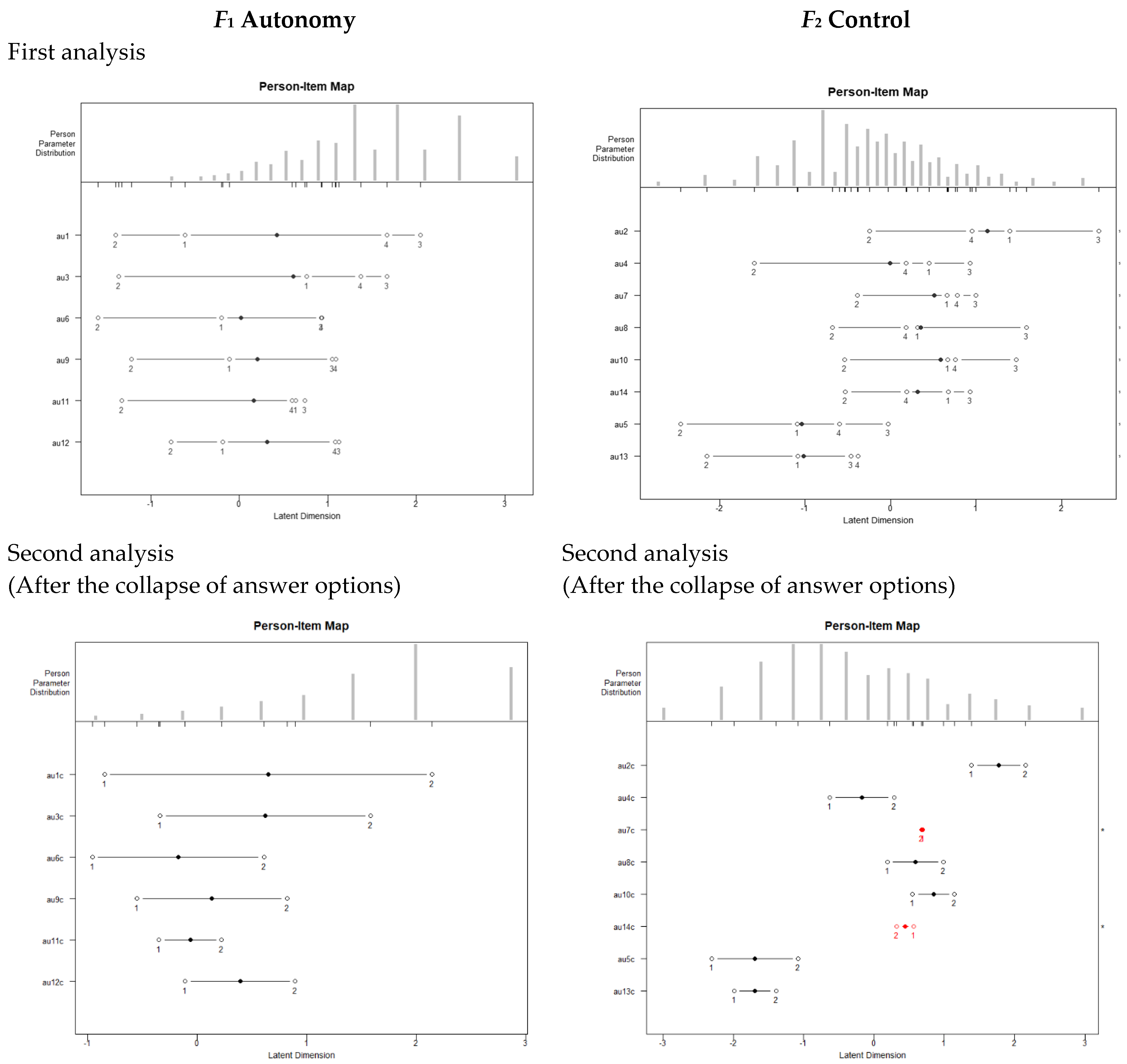

3.1. Item Analysis

3.2. Nonparametric Dimensional Analysis

3.3. Structural Equations Modeling (SEM)-Based Parametric Analysis

3.4. Reliability

3.5. Invariance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| | Descriptive Information | | | | Correlations | | | | | | | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | M | SD | Skew. | Kurt. | au1 | au3 | au6 | au9 | au11 | au12 | au2 | au4 | au7 | au8 | au10 | au14 | au5 | au13 | Gender | Age |
| Autonomy (F1) | | | | | | | | | | | | | | | | | | | | |
| au1 | 3.790 | 0.993 | −0.138 | −0.806 | 1.000 | | | | | | | | | | | | | | −0.130 | 0.029 |
| au3 | 3.948 | 1.062 | −0.664 | −0.199 | 0.514 | 1.000 | | | | | | | | | | | | | −0.024 | −0.043 |
| au6 | 4.310 | 0.898 | −1.14 | 0.774 | 0.228 | 0.257 | 1.000 | | | | | | | | | | | | −0.006 | −0.070 |
| au9 | 4.227 | 0.942 | −0.973 | 0.223 | 0.209 | 0.316 | 0.459 | 1.000 | | | | | | | | | | | 0.070 | −0.139 |
| au11 | 4.413 | 0.900 | −1.57 | 2.186 | 0.238 | 0.260 | 0.330 | 0.315 | 1.000 | | | | | | | | | | 0.050 | 0.090 |
| au12 | 4.153 | 0.981 | −0.898 | 0.078 | 0.176 | 0.161 | 0.198 | 0.239 | 0.280 | 1.000 | | | | | | | | | −0.015 | 0.056 |
| Control (F2) | | | | | | | | | | | | | | | | | | | | |
| au2 | 1.621 | 0.977 | 1.447 | 1.314 | 0.022 | 0.017 | 0.031 | 0.055 | −0.053 | 0.081 | 1.000 | | | | | | | | −0.109 | −0.071 |
| au4 | 2.894 | 1.371 | 0.034 | −1.05 | 0.144 | 0.284 | 0.152 | 0.168 | 0.103 | 0.169 | 0.266 | 1.000 | | | | | | | −0.008 | −0.056 |
| au7 | 2.148 | 1.296 | 0.779 | −0.551 | 0.020 | −0.001 | 0.125 | 0.063 | 0.002 | 0.081 | 0.453 | 0.299 | 1.000 | | | | | | −0.044 | −0.098 |
| au8 | 2.384 | 1.300 | 0.541 | −0.691 | −0.075 | −0.073 | 0.170 | 0.152 | 0.011 | 0.149 | 0.362 | 0.139 | 0.479 | 1.000 | | | | | −0.069 | −0.131 |
| au10 | 2.122 | 1.227 | 0.751 | −0.436 | −0.041 | 0.044 | 0.173 | 0.156 | −0.079 | 0.099 | 0.409 | 0.386 | 0.477 | 0.414 | 1.000 | | | | −0.026 | −0.080 |
| au14 | 2.297 | 1.406 | 0.670 | −0.854 | 0.060 | 0.056 | 0.136 | 0.086 | 0.040 | 0.131 | 0.377 | 0.269 | 0.632 | 0.361 | 0.462 | 1.000 | | | −0.075 | −0.075 |
| au5 | 3.930 | 1.085 | −0.682 | −0.216 | 0.117 | 0.128 | 0.512 | 0.403 | 0.252 | 0.177 | 0.146 | 0.185 | 0.208 | 0.246 | 0.232 | 0.159 | 1.000 | | 0.011 | −0.128 |
| au13 | 3.968 | 1.096 | −0.852 | 0.054 | 0.148 | 0.140 | 0.229 | 0.276 | 0.322 | 0.633 | 0.105 | 0.136 | 0.112 | 0.219 | 0.154 | 0.181 | 0.200 | 1.000 | 0.013 | −0.002 |

| | Scalability (H Coefficient) | | | | | | | | | | Monotonicity (n = 596) | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | Initial Analysis | | | | | | | | Final Analysis | | | | |
| | Total Sample (n = 596) | | Level 1 Semester (n = 136) | | Level 2 Semester (n = 309) | | Level 3 Semester (n = 131) | | Total Sample (n = 596) | | #vi | #zsig | CRIT |
| | H | s.e. | H | s.e. | H | s.e. | H | s.e. | H | s.e. | | | |
| Autonomy (F1) | | | | | | | | | | | | | |
| au1 | 0.339 | 0.036 | 0.320 | 0.068 | 0.365 | 0.055 | 0.320 | 0.066 | 0.413 | 0.039 | 0 | 0 | 0 |
| au3 | 0.314 | 0.034 | 0.339 | 0.072 | 0.325 | 0.049 | 0.273 | 0.065 | 0.399 | 0.037 | 0 | 0 | 0 |
| au6 | 0.345 | 0.035 | 0.281 | 0.078 | 0.370 | 0.051 | 0.332 | 0.055 | 0.395 | 0.041 | 1 | 0 | 19 |
| au9 | 0.315 | 0.033 | 0.332 | 0.064 | 0.345 | 0.044 | 0.239 | 0.069 | 0.363 | 0.040 | 0 | 0 | 0 |
| au11 | 0.288 | 0.043 | 0.235 | 0.084 | 0.300 | 0.067 | 0.336 | 0.061 | – | – | – | – | – |
| au12 | 0.187 | 0.037 | 0.105 | 0.069 | 0.205 | 0.053 | 0.187 | 0.073 | – | – | – | – | – |
| Total | 0.296 | 0.029 | 0.269 | 0.055 | 0.316 | 0.044 | 0.279 | 0.048 | 0.392 | 0.033 | | | |
| Control (F2) | | | | | | | | | | | | | |
| au2 | 0.393 | 0.035 | 0.322 | 0.084 | 0.486 | 0.042 | 0.262 | 0.077 | 0.414 | 0.040 | 2 | 0 | 17 |
| au4 | 0.258 | 0.032 | 0.183 | 0.067 | 0.254 | 0.046 | 0.354 | 0.057 | – | – | | | |
| au7 | 0.451 | 0.026 | 0.413 | 0.056 | 0.478 | 0.037 | 0.421 | 0.053 | 0.522 | 0.027 | 0 | 0 | 0 |
| au8 | 0.374 | 0.027 | 0.359 | 0.054 | 0.407 | 0.038 | 0.315 | 0.059 | 0.445 | 0.032 | 1 | 0 | 9 |
| au10 | 0.439 | 0.025 | 0.389 | 0.057 | 0.474 | 0.033 | 0.407 | 0.058 | 0.465 | 0.030 | 0 | 0 | 0 |
| au14 | 0.424 | 0.026 | 0.340 | 0.063 | 0.474 | 0.034 | 0.400 | 0.053 | 0.490 | 0.028 | 1 | 0 | 5 |
| au5 | 0.290 | 0.036 | 0.323 | 0.059 | 0.327 | 0.048 | 0.154 | 0.089 | – | – | – | – | – |
| au13 | 0.257 | 0.039 | 0.271 | 0.067 | 0.280 | 0.055 | 0.200 | 0.084 | – | – | – | – | – |
| Total | 0.367 | 0.022 | 0.326 | 0.046 | 0.401 | 0.029 | 0.329 | 0.047 | 0.472 | 0.026 | | | |

| | λF1 | λF2 | h² | ritc | ritem |
|---|---|---|---|---|---|
| au1 | 0.674 | | 0.454 | 0.436 | 0.300 |
| au3 | 0.689 | | 0.474 | 0.503 | 0.400 |
| au6 | 0.739 | | 0.546 | 0.412 | 0.268 |
| au9 | 0.704 | | 0.496 | 0.431 | 0.294 |
| au2 | | 0.635 | 0.403 | 0.514 | 0.341 |
| au7 | | 0.872 | 0.760 | 0.696 | 0.626 |
| au8 | | 0.666 | 0.444 | 0.519 | 0.348 |
| au10 | | 0.723 | 0.522 | 0.576 | 0.429 |
| au14 | | 0.819 | 0.671 | 0.609 | 0.479 |
| Descriptive statistics | | | | | |
| M | 16.275 | 10.572 | | | |
| SD | 2.754 | 4.642 | | | |
| Skew. | −0.583 | 0.765 | | | |
| Kurt. | 0.212 | −0.118 | | | |
| Clinicometric indicators | | | | | |
| | SEMF1 | SEMF2 | SEMD | DED | |
| Value | 1.470 | 2.060 | 6.943 | 13 | |
| Z | | | | | |
| 85% | 2.116 | 2.966 | 10 | 19 | |
| 90% | 2.418 | 3.389 | 11 | 22 | |
| 95% | 2.882 | 4.038 | 14 | 26 | |
| 99% | 3.787 | 5.307 | 18 | 34 | |
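
The confidence-level rows for SEMF1 and SEMF2 in the table above look like multiples of the "Value" row, and the h² column looks like the squared loading. The sketch below checks both readings. It assumes that SEMF1 and SEMF2 are standard errors of measurement for the two factor scores, that each band equals z × SEM, and that the z values are the conventional two-sided critical values; none of these assumptions is stated in the table itself. The SEMD and DED columns are not reproduced here.

```python
# Sketch: check that the confidence-band rows equal z * SEM (assumed relationship).
# The z values are conventional two-sided critical values, not taken from the source.
Z = {"85%": 1.440, "90%": 1.645, "95%": 1.960, "99%": 2.576}

# "Value" row of the table, columns SEMF1 and SEMF2.
sem = {"F1": 1.470, "F2": 2.060}

# Band rows as printed in the table (columns SEMF1 and SEMF2 only).
reported_bands = {
    "85%": {"F1": 2.116, "F2": 2.966},
    "90%": {"F1": 2.418, "F2": 3.389},
    "95%": {"F1": 2.882, "F2": 4.038},
    "99%": {"F1": 3.787, "F2": 5.307},
}


def band(sem_value: float, z: float) -> float:
    """Half-width of the band around an observed score (assumed: z * SEM)."""
    return z * sem_value


# Largest absolute gap between the recomputed and the printed bands.
max_band_gap = max(
    abs(band(sem[factor], Z[level]) - reported_bands[level][factor])
    for level in Z
    for factor in sem
)

# (loading, h2) pairs as printed for the nine retained items.
loadings_h2 = {
    "au1": (0.674, 0.454), "au3": (0.689, 0.474), "au6": (0.739, 0.546),
    "au9": (0.704, 0.496), "au2": (0.635, 0.403), "au7": (0.872, 0.760),
    "au8": (0.666, 0.444), "au10": (0.723, 0.522), "au14": (0.819, 0.671),
}

# Largest absolute gap between the squared loading and the printed h2.
max_h2_gap = max(abs(lam ** 2 - h2) for lam, h2 in loadings_h2.values())

print(f"largest band discrepancy: {max_band_gap:.4f}")
print(f"largest h2 discrepancy:   {max_h2_gap:.4f}")
```

Under these assumptions both discrepancies stay below 0.001, which is the size of gap that rounding the printed SEM values and loadings to three decimals would produce.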

| Invariance Models | Fit Estimates | | | | | | Fit Differences | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | WLSMV-χ² (df) | CFI | TLI | G-h | Mc | RMSEA (90% CI) | ΔWLSMV-χ² (df) | ΔCFI | ΔTLI | ΔG-h | ΔMc | ΔRMSEA |
| Configuration | 159.314 (78) | 0.973 | 0.963 | 0.970 | 0.933 | 0.073 (0.056, 0.089) | – | – | – | – | – | – |
| Thresholds | 171.101 (92) | 0.974 | 0.969 | 0.971 | 0.935 | 0.066 (0.050, 0.081) | 17.79 (14) | 0.001 | 0.006 | 0.001 | 0.002 | −0.007 |
| Loads, thresholds | 183.521 (106) | 0.975 | 0.974 | 0.971 | 0.936 | 0.061 (0.046, 0.075) | 13.21 (14) | 0.001 | 0.005 | 0.000 | 0.001 | −0.005 |
| Loads, thresholds, intercepts | 206.634 (110) | 0.968 | 0.969 | 0.965 | 0.922 | 0.067 (0.053, 0.081) | 10.07 * (14) | −0.007 | −0.005 | −0.006 | −0.014 | 0.006 |
| Residuals | 200.111 (124) | 0.975 | 0.978 | 0.971 | 0.938 | 0.056 (0.041, 0.070) | 1.40 (14) | 0.007 | 0.009 | 0.006 | 0.016 | −0.011 |
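
The fit-difference columns for CFI, TLI, G-h, Mc, and RMSEA appear to be plain differences between each model and the less constrained model in the row before it. The sketch below recomputes them on that assumption, which is inferred from the printed values rather than stated in the table. The ΔWLSMV-χ² column is left out because a scaled chi-square difference is generally not a simple subtraction of the two statistics.

```python
# Sketch: recompute the fit-difference columns as (current model - previous model).
# Assumption: each row is compared with the row directly above it.
fit = [
    ("Configuration",
     {"CFI": 0.973, "TLI": 0.963, "G-h": 0.970, "Mc": 0.933, "RMSEA": 0.073}),
    ("Thresholds",
     {"CFI": 0.974, "TLI": 0.969, "G-h": 0.971, "Mc": 0.935, "RMSEA": 0.066}),
    ("Loads, thresholds",
     {"CFI": 0.975, "TLI": 0.974, "G-h": 0.971, "Mc": 0.936, "RMSEA": 0.061}),
    ("Loads, thresholds, intercepts",
     {"CFI": 0.968, "TLI": 0.969, "G-h": 0.965, "Mc": 0.922, "RMSEA": 0.067}),
    ("Residuals",
     {"CFI": 0.975, "TLI": 0.978, "G-h": 0.971, "Mc": 0.938, "RMSEA": 0.056}),
]

# Differences as printed in the table, in the same row order.
reported = {
    "Thresholds":
        {"CFI": 0.001, "TLI": 0.006, "G-h": 0.001, "Mc": 0.002, "RMSEA": -0.007},
    "Loads, thresholds":
        {"CFI": 0.001, "TLI": 0.005, "G-h": 0.000, "Mc": 0.001, "RMSEA": -0.005},
    "Loads, thresholds, intercepts":
        {"CFI": -0.007, "TLI": -0.005, "G-h": -0.006, "Mc": -0.014, "RMSEA": 0.006},
    "Residuals":
        {"CFI": 0.007, "TLI": 0.009, "G-h": 0.006, "Mc": 0.016, "RMSEA": -0.011},
}


def sequential_deltas(models):
    """Return {model name: {index: current - previous}} for adjacent rows."""
    out = {}
    for (_, previous), (name, current) in zip(models, models[1:]):
        out[name] = {k: round(current[k] - previous[k], 3) for k in current}
    return out


computed = sequential_deltas(fit)

for name, row in computed.items():
    print(name, row)
```

Read this way, the positive ΔRMSEA and negative ΔCFI in the "Loads, thresholds, intercepts" row are the only step at which fit worsens relative to the preceding model.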

| Comparison of Latent Means | F1 (Autonomy) | | | F2 (Control) | | |
|---|---|---|---|---|---|---|
| | g1 | g2 | g3 | g1 | g2 | g3 |
| g1 (1st to 2nd semester) | – | | | – | | |
| g2 (3rd semester), Δno-Z | −0.075 | – | | −0.323 * | – | – |
| g2 (3rd semester), ΔZ | −0.085 | – | | −0.317 * | – | – |
| g3 (4th to 10th semester), Δno-Z | 0.029 | 0.116 | – | −0.011 | 0.322 * | – |
| g3 (4th to 10th semester), ΔZ | 0.028 | 0.322 | – | −0.017 | 0.459 * | – |

| Variances and Latent Correlations | g1 | g2 | g3 | SVH |
|---|---|---|---|---|
| Variances | | | | |
| F1 | 0.746 | 0.779 | 1.062 | 0.116 |
| F2 | 0.401 | 1.041 | 0.462 | 0.321 |
| SVH | 0.300 | 0.143 | 0.393 | |
| Correlations | | | | |
| r(F1,F2) | −0.075 | 0.097 | 0.244 | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Merino-Soto, C.; Chávez-Ventura, G.; López-Fernández, V.; Chans, G.M.; Toledano-Toledano, F. Learning Self-Regulation Questionnaire (SRQ-L): Psychometric and Measurement Invariance Evidence in Peruvian Undergraduate Students. Sustainability 2022, 14, 11239. https://doi.org/10.3390/su141811239