Crop Identification and Analysis in Typical Cultivated Areas of Inner Mongolia with Single-Phase Sentinel-2 Images

Abstract

1. Introduction

2. Materials and Methods

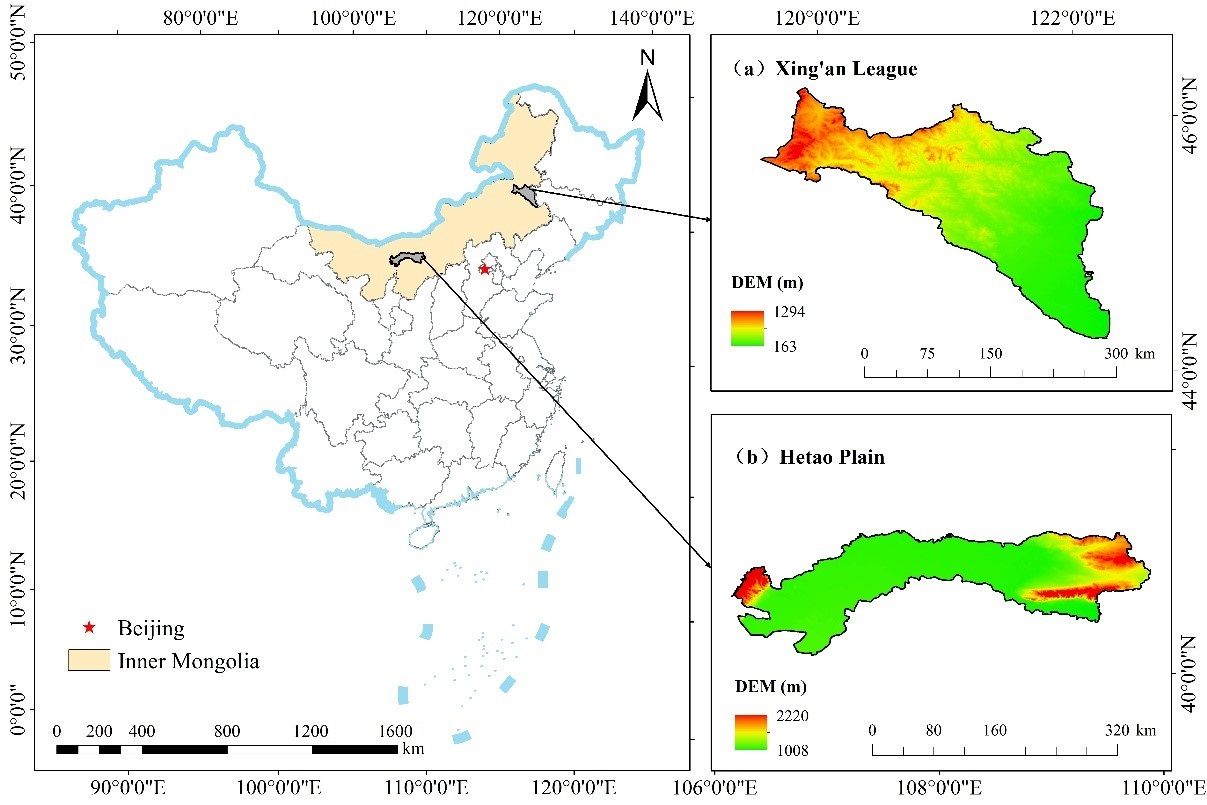

2.1. Study Area

2.2. Data and Samples

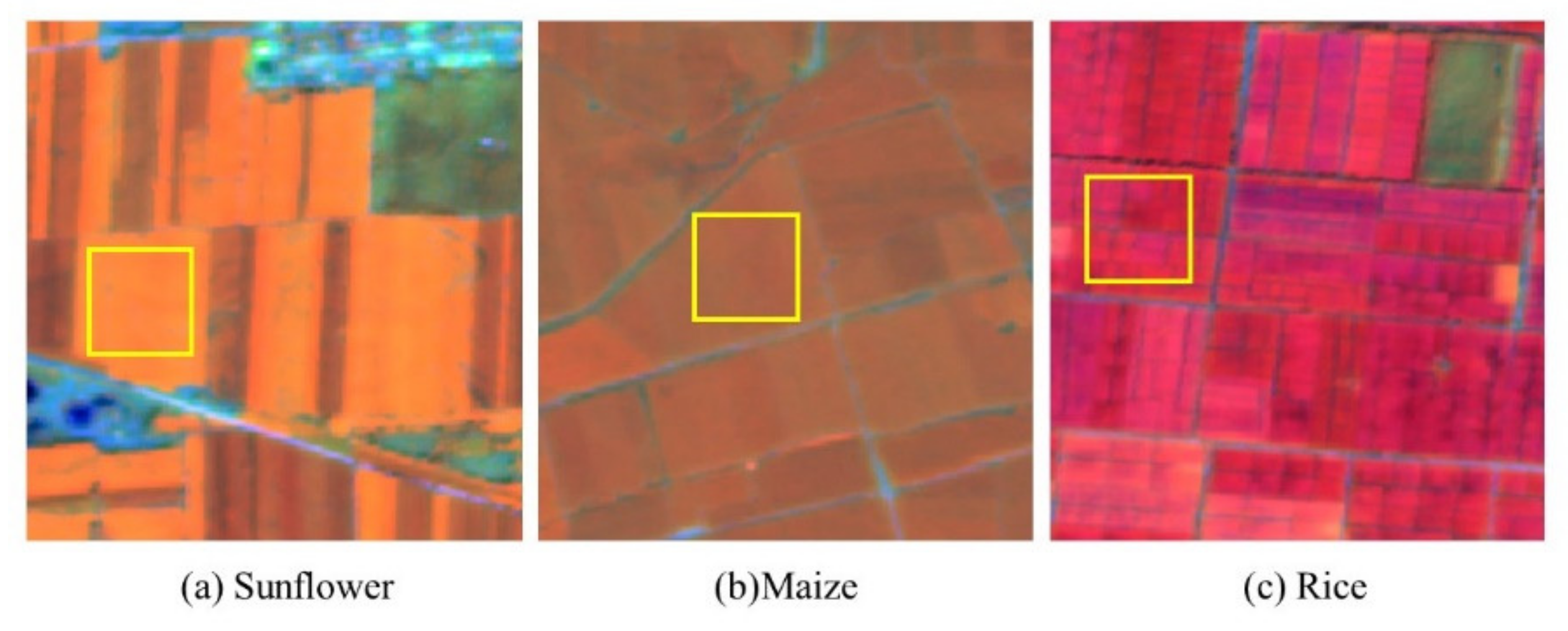

2.2.1. Remote Sensing Data and Processing

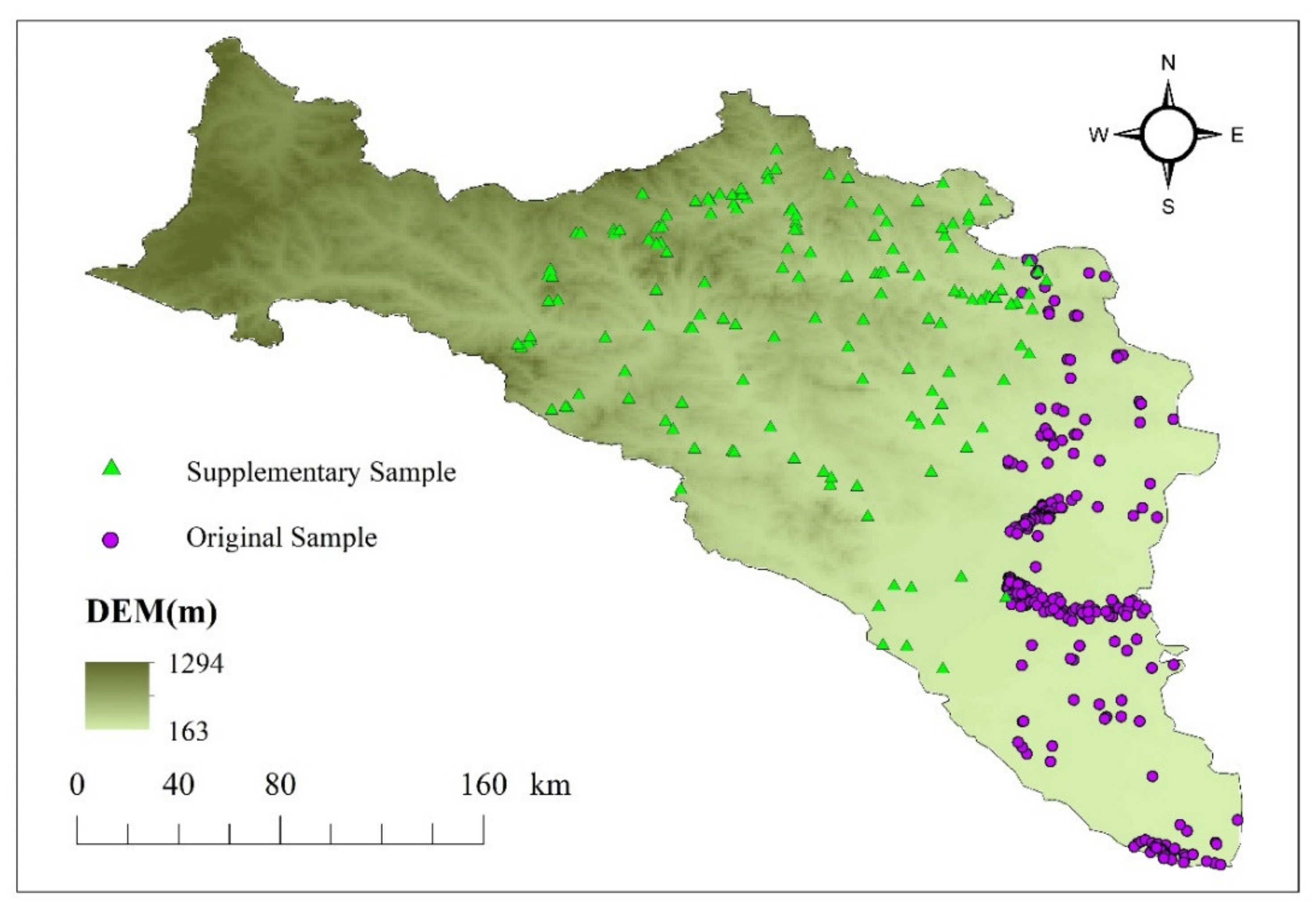

2.2.2. Samples

2.2.3. Auxiliary Data

2.3. Methods and Models

2.3.1. Sample Data Cleaning and Division

2.3.2. Sample Neighborhood Information Acquisition

2.3.3. Crop Classification Model Construction

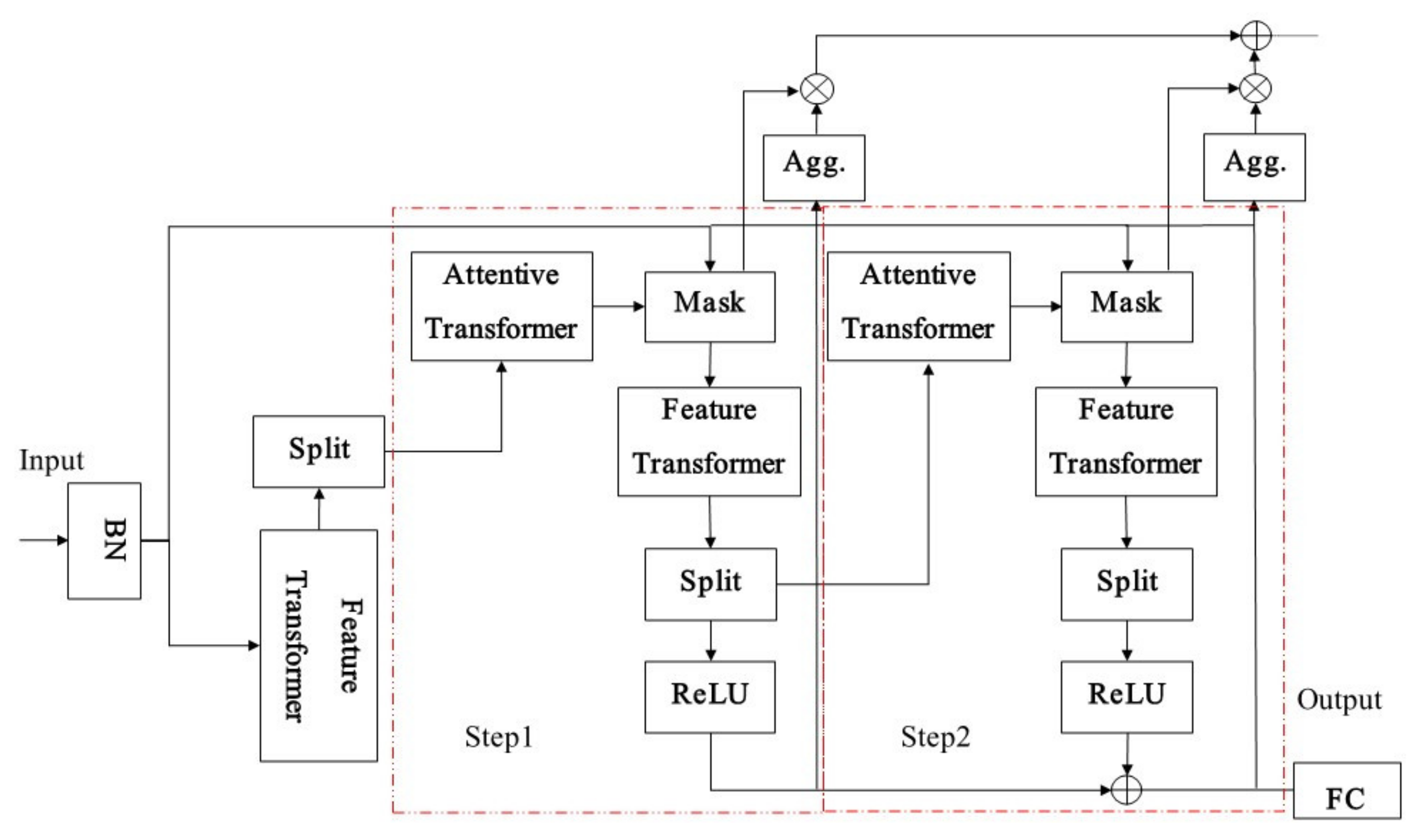

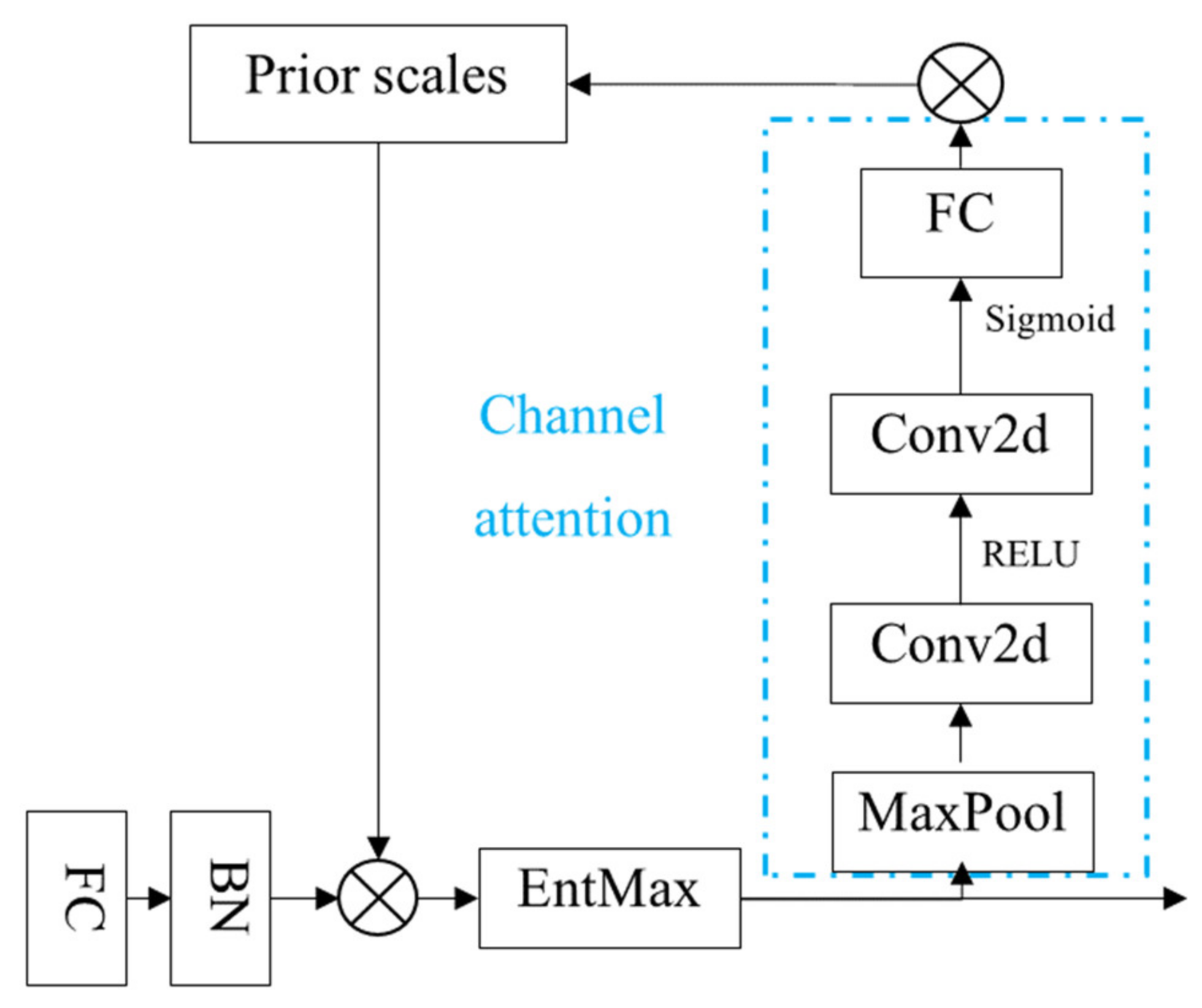

2.3.4. Accuracy Evaluation

3. Experiments and Results

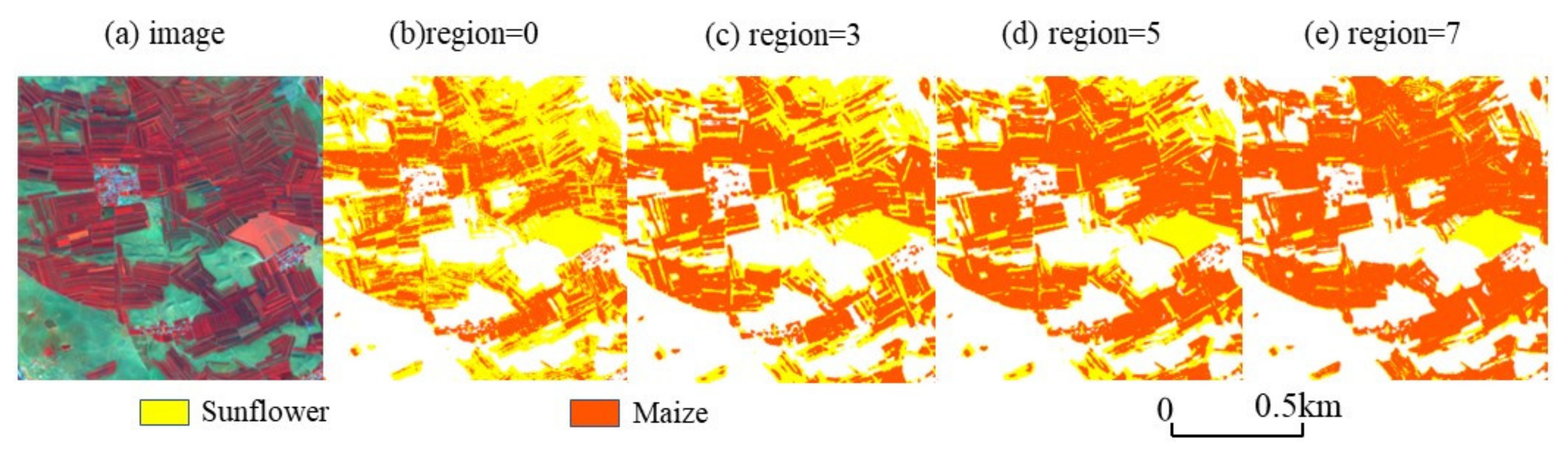

3.1. Neighborhood Size Determination

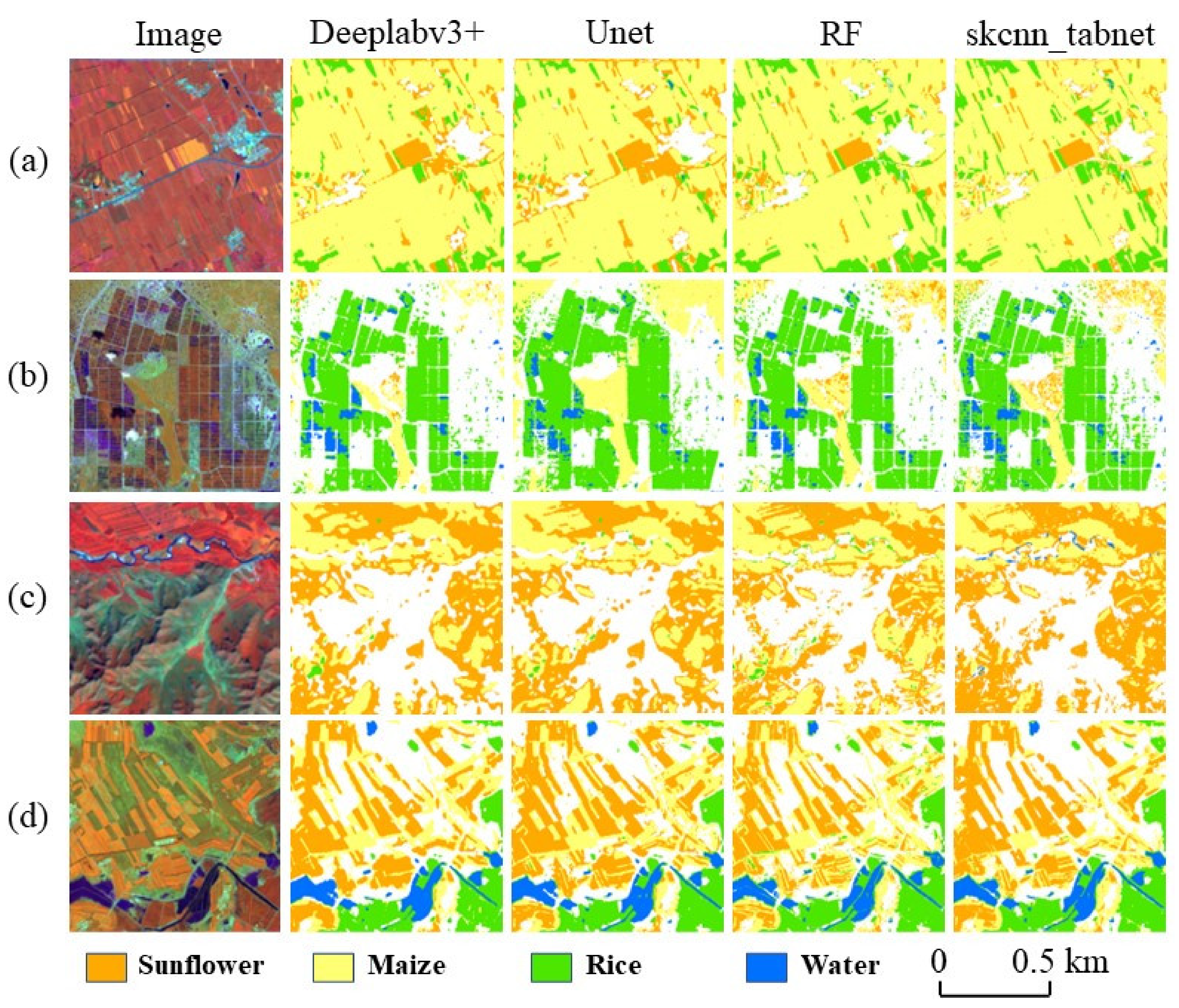

3.2. Experiments

3.3. Accuracy Verification

4. Discussion

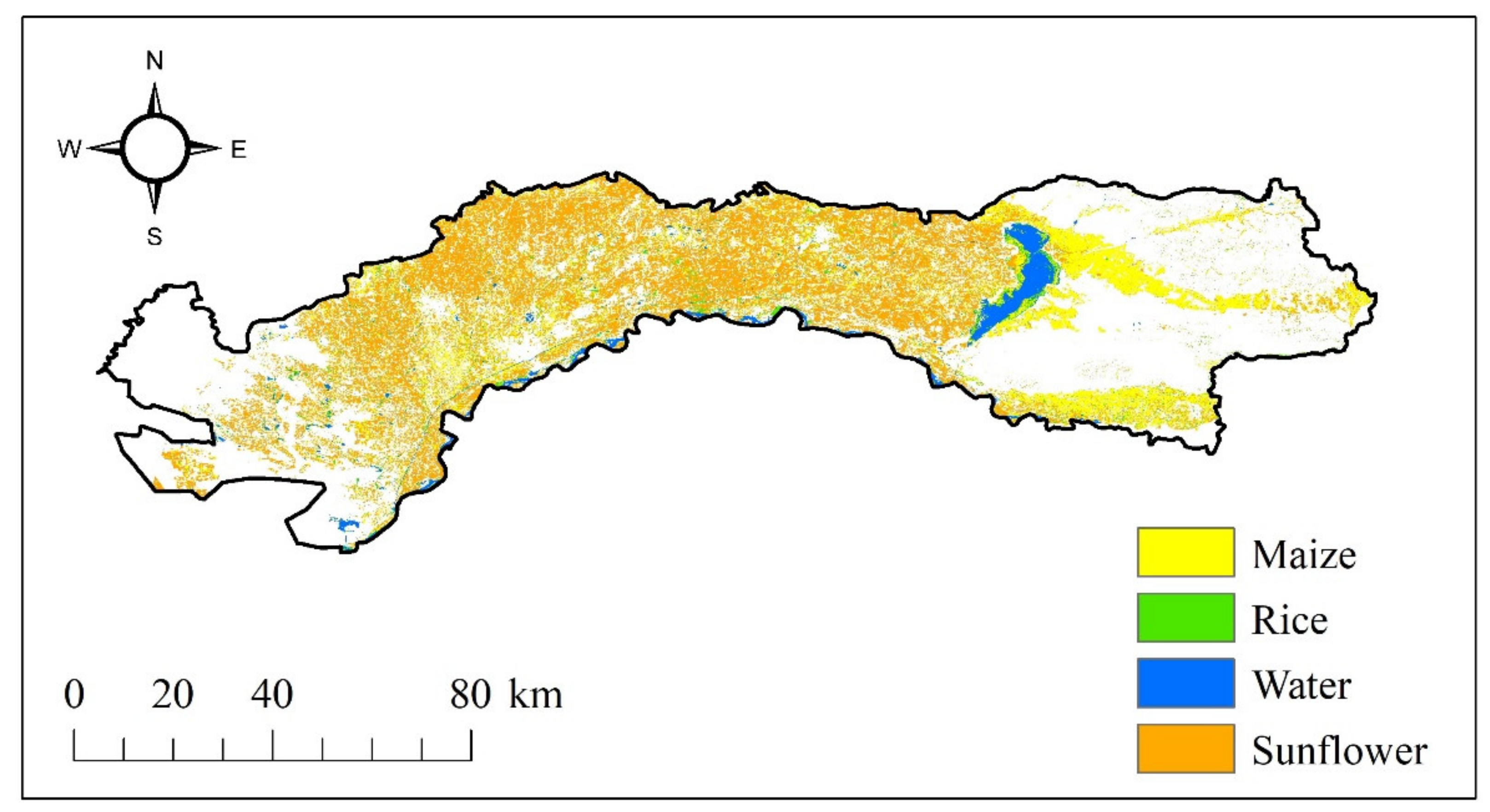

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band | Name | Central Wavelength (nm) | Bandwidth (nm) | Spatial Resolution (m) |
|---|---|---|---|---|
| 1 | Coastal aerosol | 442.7 | 21 | 60 |
| 2 | Blue | 492.4 | 66 | 10 |
| 3 | Green | 559.8 | 36 | 10 |
| 4 | Red | 664.6 | 31 | 10 |
| 5 | Vegetation red edge | 704.1 | 15 | 20 |
| 6 | Vegetation red edge | 740.5 | 15 | 20 |
| 7 | Vegetation red edge | 782.8 | 20 | 20 |
| 8 | NIR | 832.8 | 106 | 10 |
| 8A | Narrow NIR | 864.7 | 21 | 20 |
| 9 | Water vapor | 945.1 | 20 | 60 |
| 10 | SWIR cirrus | 1373.5 | 31 | 60 |
| 11 | SWIR 1 | 1613.7 | 91 | 20 |
| 12 | SWIR 2 | 2202.4 | 175 | 20 |
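The bands in the table above come at three native resolutions (10, 20 and 60 m), so a per-pixel classifier needs them brought onto one grid before they can be stacked into a feature cube. The following is a minimal sketch assuming nearest-neighbour upsampling of the coarser bands onto the 10 m grid with NumPy; this is one common choice and not necessarily the resampling used in this study, and the helpers `upsample_nearest` and `stack_to_10m` are hypothetical.

```python
import numpy as np


def upsample_nearest(band, factor):
    """Nearest-neighbour upsampling: repeat every pixel `factor` times along rows and columns."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)


def stack_to_10m(bands, resolution_m):
    """Resample each band to the 10 m grid and stack them as (rows, cols, n_bands).

    bands:        mapping of band name -> 2-D array at its native resolution
    resolution_m: mapping of band name -> native pixel size in metres (10, 20 or 60)
    """
    layers = []
    for name, arr in bands.items():
        factor = resolution_m[name] // 10  # 10 m -> 1, 20 m -> 2, 60 m -> 6
        layers.append(upsample_nearest(arr, factor))
    shapes = {layer.shape for layer in layers}
    if len(shapes) != 1:
        raise ValueError(f"bands do not align after resampling: {shapes}")
    return np.stack(layers, axis=-1)


# Toy 60 m x 60 m tile: 6 x 6 pixels at 10 m, 3 x 3 pixels at 20 m (made-up values).
b4 = np.zeros((6, 6))                # Red, 10 m
b8 = np.ones((6, 6))                 # NIR, 10 m
b11 = np.arange(9.0).reshape(3, 3)   # SWIR 1, 20 m
cube = stack_to_10m({"B4": b4, "B8": b8, "B11": b11},
                    {"B4": 10, "B8": 10, "B11": 20})
print(cube.shape)  # (6, 6, 3)
```

Nearest-neighbour repetition leaves the original reflectance values unchanged, which is one reason to pick it for classification inputs; bilinear or cubic resampling would be equally valid alternatives at the cost of blending neighbouring pixels.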

| Type | Number of ROIs | Number of Pixels |
|---|---|---|
| Maize | 471 | 209,720 |
| Sunflower | 326 | 153,489 |
| Rice | 207 | 130,193 |
| Waters | 29 | 56,079 |
| Other Crops | 20 | 47,701 |
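To make the class imbalance in the sample table concrete, the short calculation below derives each class's share of the labelled pixels and the mean number of pixels per ROI, using only the counts in the table. Maize accounts for roughly 35% of the 597,182 labelled pixels and Other Crops for roughly 8%, a ratio of about 4.4 to 1. The variable names are illustrative only.

```python
# Pixel and ROI counts per class, copied from the sample table above.
pixels = {"Maize": 209_720, "Sunflower": 153_489, "Rice": 130_193,
          "Waters": 56_079, "Other Crops": 47_701}
rois = {"Maize": 471, "Sunflower": 326, "Rice": 207,
        "Waters": 29, "Other Crops": 20}

total = sum(pixels.values())
shares = {name: count / total for name, count in pixels.items()}
pixels_per_roi = {name: pixels[name] / rois[name] for name in pixels}

for name in pixels:
    print(f"{name:12s} share={shares[name]:6.1%}  pixels/ROI={pixels_per_roi[name]:7.1f}")
print("total pixels:", total)
print("largest/smallest class ratio:",
      round(max(pixels.values()) / min(pixels.values()), 2))
```

The per-ROI figures also show that the Waters and Other Crops samples come from fewer but much larger ROIs than the three main crops.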

| Method | Metric | Maize | Sunflower | Rice | Waters | Others | Average |
|---|---|---|---|---|---|---|---|
| DeepLabv3+ | IoU | 0.7258 (±0.028) | 0.6092 (±0.037) | 0.7254 (±0.030) | 0.9141 (±0.021) | 0.4172 (±0.044) | 0.6783 |
| | F1 score | 0.8462 (±0.039) | 0.7541 (±0.026) | 0.8368 (±0.029) | 0.9543 (±0.035) | 0.5834 (±0.026) | 0.7949 |
| | Overall accuracy | 0.8149 (±0.031) | | | | | |
| UNet | IoU | 0.7650 (±0.043) | 0.6476 (±0.046) | 0.7461 (±0.027) | 0.9226 (±0.033) | 0.4477 (±0.038) | 0.7058 |
| | F1 score | 0.8663 (±0.029) | 0.7827 (±0.032) | 0.8534 (±0.050) | 0.9586 (±0.027) | 0.6176 (±0.033) | 0.8157 |
| | Overall accuracy | 0.8266 (±0.038) | | | | | |
| RF | F1 score | 0.7684 (±0.051) | 0.6798 (±0.060) | 0.7503 (±0.026) | 0.9244 (±0.031) | 0.6706 (±0.042) | 0.7587 |
| | Overall accuracy | 0.8396 (±0.043) | | | | | |
| Skcnn_Tabnet | IoU | 0.9063 (±0.026) | 0.8432 (±0.027) | 0.8738 (±0.037) | 0.9822 (±0.036) | 0.6951 (±0.029) | 0.8601 |
| | F1 score | 0.9428 (±0.034) | 0.9103 (±0.029) | 0.9289 (±0.026) | 0.9878 (±0.031) | 0.7562 (±0.028) | 0.9052 |
| | Overall accuracy | 0.9270 (±0.026) | | | | | |
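The accuracy table reports per-class IoU and F1 score plus overall accuracy. As a sketch using the standard confusion-matrix definitions (assumed here; the paper's exact computation, including how the ± spread was obtained, is not reproduced), with TP, FP and FN counted per class: IoU = TP / (TP + FP + FN), F1 = 2TP / (2TP + FP + FN), and overall accuracy is the number of correctly classified pixels divided by all pixels. The confusion matrix below is a made-up toy example, and `per_class_metrics` is a hypothetical helper.

```python
import numpy as np


def per_class_metrics(cm):
    """cm[i, j] = number of pixels whose true class is i and predicted class is j."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp  # predicted as class c but truly another class
    fn = cm.sum(axis=1) - tp  # truly class c but predicted as another class
    iou = tp / (tp + fp + fn)
    f1 = 2 * tp / (2 * tp + fp + fn)
    oa = tp.sum() / cm.sum()
    return iou, f1, oa


# Toy 3-class confusion matrix (made-up numbers, not from the paper).
cm = np.array([[50, 5, 0],
               [10, 30, 0],
               [0, 0, 5]])
iou, f1, oa = per_class_metrics(cm)
print("IoU:", iou.round(4), "mean:", iou.mean().round(4))
print("F1 :", f1.round(4), "mean:", f1.mean().round(4))
print("OA :", round(oa, 4))

# The table's Average column is the unweighted (macro) mean of the five classes,
# e.g. for the Skcnn_Tabnet IoU row:
skcnn_iou = [0.9063, 0.8432, 0.8738, 0.9822, 0.6951]
print(round(sum(skcnn_iou) / len(skcnn_iou), 4))  # 0.8601
```

For a single confusion matrix the two per-class scores are tied by F1 = 2·IoU / (1 + IoU); scores averaged over several runs, as the ± values in the table suggest, need not satisfy this relation exactly.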

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tang, J.; Zhang, X.; Chen, Z.; Bai, Y. Crop Identification and Analysis in Typical Cultivated Areas of Inner Mongolia with Single-Phase Sentinel-2 Images. Sustainability 2022, 14, 12789. https://doi.org/10.3390/su141912789


Tang, Jing, Xiaoyong Zhang, Zhengchao Chen, and Yongqing Bai. 2022. "Crop Identification and Analysis in Typical Cultivated Areas of Inner Mongolia with Single-Phase Sentinel-2 Images." Sustainability 14, no. 19: 12789. https://doi.org/10.3390/su141912789