Best Practices in Knowledge Transfer: Insights from Top Universities

Abstract

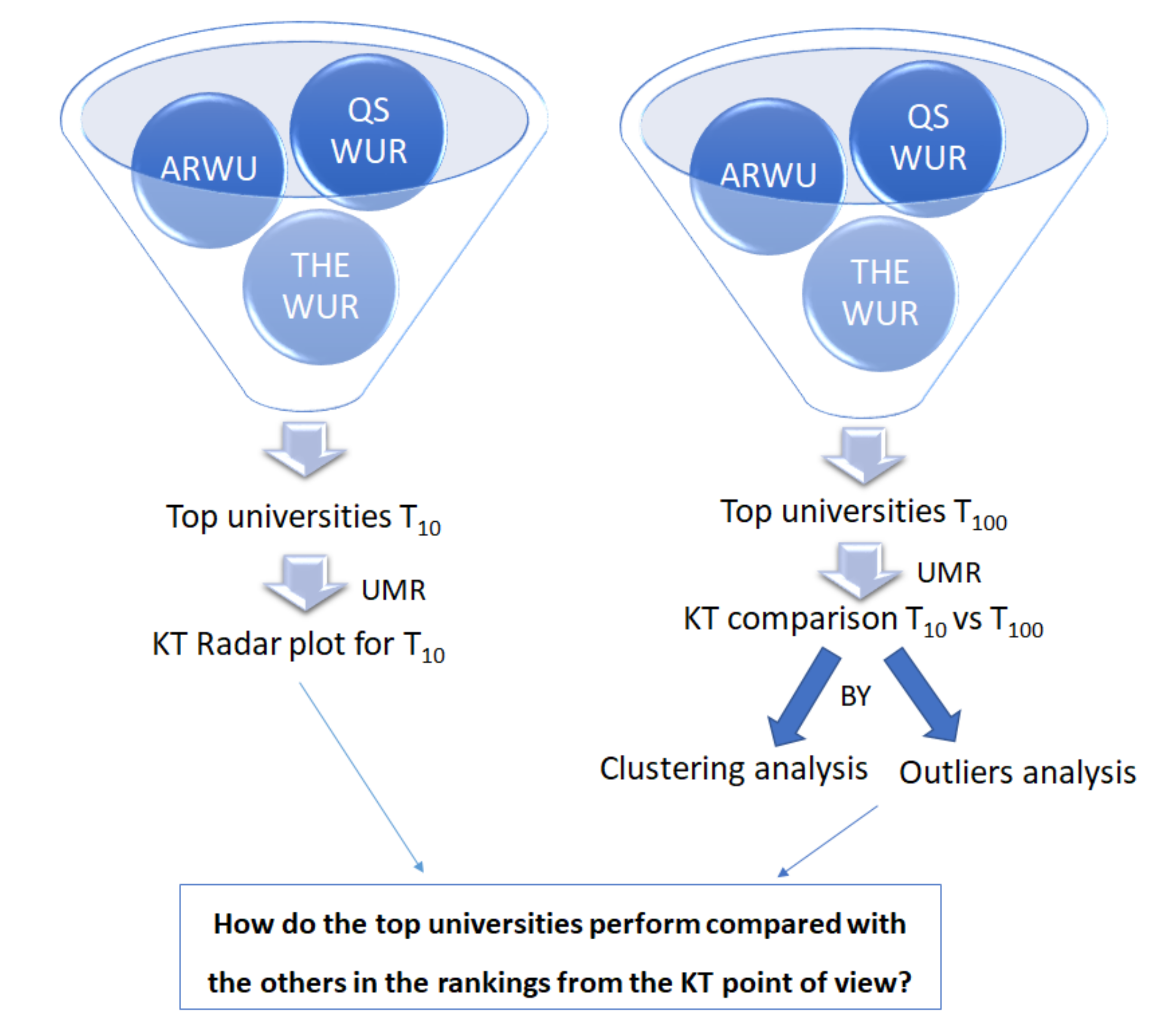

1. Introduction

- Identify and analyze three of the best-known global university rankings in order to identify the world’s top universities and, at the same time, evaluate the coherence between the rankings;

- Search for and select a set of specialized KT indicators for evaluating the world’s top universities from the KT point of view;

- Verify whether the world’s top universities, according to the global rankings, also perform best from the KT point of view.
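The Discussion refers to T10 as a set of 14 universities. The three top-10 lists in the ranking table overlap heavily, and their union has exactly 14 members, the same 14 that appear in the table of T10 positions. A minimal sketch of that set construction follows. The short university labels are abbreviations introduced here for readability, and reading T10 as the union is an inference from the tables rather than a rule stated in this excerpt.

```python
# Top-10 lists transcribed from the ARWU / QSWUR / THEWUR ranking table
# (short labels are abbreviations introduced for this sketch).
ARWU = ["Harvard", "Stanford", "Cambridge", "MIT", "UC Berkeley",
        "Princeton", "Columbia", "Caltech", "Oxford", "Chicago"]
QSWUR = ["MIT", "Stanford", "Harvard", "Oxford", "Caltech",
         "ETH Zurich", "Cambridge", "UCL", "Imperial", "Chicago"]
THEWUR = ["Oxford", "Caltech", "Cambridge", "Stanford", "MIT",
          "Princeton", "Harvard", "Yale", "Chicago", "Imperial"]


def union_of(*rankings: list[str]) -> set[str]:
    """Universities listed by at least one ranking."""
    result: set[str] = set()
    for ranking in rankings:
        result.update(ranking)
    return result


def listed_by_all(*rankings: list[str]) -> set[str]:
    """Universities listed by every ranking."""
    return set.intersection(*(set(r) for r in rankings))


t10 = union_of(ARWU, QSWUR, THEWUR)
print(len(t10))                                    # 14
print(sorted(listed_by_all(ARWU, QSWUR, THEWUR)))
# ['Caltech', 'Cambridge', 'Chicago', 'Harvard', 'MIT', 'Oxford', 'Stanford']
```

Seven universities appear in all three top-10 lists; ETH Zurich, UCL, Imperial, and Yale enter T10 through a single ranking, or two in Imperial's case.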

1.1. Global University Rankings

- The Academic Ranking of World Universities (ARWU) was first published in June 2003 by the Center for World-Class Universities (CWCU), Graduate School of Education (formerly the Institute of Higher Education) of Shanghai Jiao Tong University, China, and has been updated annually. Since 2009, ARWU has been published and copyrighted by Shanghai Ranking Consultancy, a fully independent organization specializing in higher education intelligence that is not legally subordinate to any university or government agency. ARWU uses six objective indicators to rank world universities, including the number of alumni and staff winning Nobel Prizes and Fields Medals, the number of highly cited researchers selected by Clarivate Analytics, the number of articles published in the journals Nature and Science, the number of articles indexed in the Science Citation Index Expanded and the Social Sciences Citation Index, and the per capita performance of a university. More than 1800 universities are ranked by ARWU every year, and the best 1000 are published [51].

- The QS World University Rankings® (QSWUR) list and rank over 1000 universities from around the world, covering 80 different locations. Their methodological framework has remained largely consistent over time and is built on six metrics: Academic Reputation, Employer Reputation, Faculty/Student Ratio, Citations per Faculty, International Faculty Ratio, and International Student Ratio [52].

- The Times Higher Education World University Rankings (THEWUR) include almost 1400 universities across 92 countries, making them the largest and most diverse edition of these rankings to date. According to their publisher, they are based on 13 carefully balanced performance indicators and are trusted by students, academics, university leaders, industry, and governments. The performance indicators are grouped into five main areas: teaching (the learning environment); research (volume, income, and reputation); citations (research influence); international outlook (staff, students, and research); and industry income (knowledge transfer) [53].
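The first objective also asks how coherent the rankings are with each other. This excerpt does not say how coherence is measured; one standard option, used here purely as an assumption (the reference list does cite work on Spearman's ρ), is Spearman's rank correlation, ρ = 1 - 6 Σd² / (n(n² - 1)), computed on the universities two rankings have in common. Because the ranking table only gives top-10 positions, the sketch re-ranks the shared universities from 1 to k within each list, which is a simplification.

```python
def spearman_rho(x: list[float], y: list[float]) -> float:
    """Spearman's rank correlation for paired ranks without ties:
    rho = 1 - 6 * sum(d_i^2) / (n * (n^2 - 1))."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(x, y))
    return 1 - 6 * d2 / (n * (n * n - 1))


def rerank(positions: dict[str, int], keep: list[str]) -> dict[str, int]:
    """Re-rank a subset as 1..k, preserving the original order."""
    ordered = sorted(keep, key=lambda u: positions[u])
    return {u: i + 1 for i, u in enumerate(ordered)}


# Top-10 positions transcribed from the ranking table (short labels introduced here).
arwu = {"Harvard": 1, "Stanford": 2, "Cambridge": 3, "MIT": 4, "UC Berkeley": 5,
        "Princeton": 6, "Columbia": 7, "Caltech": 8, "Oxford": 9, "Chicago": 10}
qs = {"MIT": 1, "Stanford": 2, "Harvard": 3, "Oxford": 4, "Caltech": 5,
      "ETH Zurich": 6, "Cambridge": 7, "UCL": 8, "Imperial": 9, "Chicago": 10}

common = sorted(set(arwu) & set(qs))
ra, rq = rerank(arwu, common), rerank(qs, common)
rho = spearman_rho([ra[u] for u in common], [rq[u] for u in common])
print(len(common), round(rho, 3))   # 7 0.536
```

A value near 1 would mean the two rankings order their shared universities almost identically; the moderate value here reflects, for example, MIT moving from fourth to first among the shared seven. The closed-form formula is only valid without ties; with tied ranks, a Pearson correlation on average ranks is the usual replacement.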

1.2. U-Multirank 2020 (UMR)

- Co-publications with industrial partners: the percentage of a department’s research publications that list an author affiliated with an address that refers to a for-profit business enterprise or private sector R&D unit (excluding for-profit hospitals and education organizations).

- Income from private sources: the percentage of external research revenues (including those from not-for-profit organizations) coming from private sources, excluding tuition fees. Measured in thousands of euros (€1000s) using Purchasing Power Parities and computed per FTE (full-time equivalent) academic staff.

- Patents awarded (absolute numbers): the number of patents assigned to inventors working at the university in the respective reference period.

- Patents awarded (size-normalized): the number of patents assigned to inventors working at the university over the respective reference period, computed per 1000 students to take the size of the institution into account.

- Industry co-patents: the percentage of patents assigned to inventors working at the university during the respective reference period that were obtained in cooperation with at least one industrial applicant.

- Spinoffs: the number of spinoffs (i.e., firms established on the basis of a formal KT arrangement with the university) recently created by the university (computed per 1000 FTE academic staff).

- Publications cited in patents: the percentage of the university’s research publications that were cited in at least one international patent (as included in the PATSTAT database).

- Income from continuous professional development: the percentage of the university’s total revenues that is generated from activities delivering Continuous Professional Development courses and training.

- Graduate companies: the number of companies newly founded by graduates, computed per 1000 graduates.
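The U-Multirank indicators above are of two kinds: shares (a percentage of publications, patents, or revenues) and size-normalized counts (per 1000 students, FTE academic staff, or graduates). A minimal sketch of both computations follows; the function names and every input figure are invented for illustration and describe a hypothetical university, not U-Multirank data.

```python
def share_percent(part: float, total: float) -> float:
    """Share indicator in percent, e.g. industry co-publications / all publications."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * part / total


def per_thousand(count: float, size: float) -> float:
    """Size-normalized indicator: count per 1000 units of size
    (students, FTE academic staff, or graduates)."""
    if size <= 0:
        raise ValueError("size must be positive")
    return 1000.0 * count / size


# Hypothetical university: every figure below is invented for illustration.
publications, industry_copubs = 4000, 320
patents, industry_copatents, students = 150, 30, 30000
spinoffs, fte_academic_staff = 6, 2400
graduate_companies, graduates = 27, 9000

print(share_percent(industry_copubs, publications))   # 8.0  (% co-publications with industry)
print(share_percent(industry_copatents, patents))     # 20.0 (% industry co-patents)
print(per_thousand(patents, students))                # 5.0  patents per 1000 students
print(per_thousand(spinoffs, fte_academic_staff))     # 2.5  spinoffs per 1000 FTE staff
print(per_thousand(graduate_companies, graduates))    # 3.0  companies per 1000 graduates
```

Size normalization is what lets a small institution with few but concentrated patents compare with a large one; the absolute and size-normalized patent indicators can therefore rank the same universities very differently.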

2. Methodology

- (1) Co-publications with industrial partners;

- (2) Patents awarded (absolute numbers);

- (3) Patents awarded (size-normalized);

- (4) Industry co-patents;

- (5) Publications cited in patents.
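The KT tables at the end of the section report a composite score, GPI KT, alongside these five indicators. The reported values are consistent with an unweighted arithmetic mean of the five indicator values: for the University of California, Berkeley, (7.10 + 98.94 + 2.00 + 10.02 + 2.30) / 5 = 24.072, which the table reports as 24.07%. The sketch below reproduces that check for a few rows; treating GPI KT as exactly this mean is an inference from the tables, not a definition stated in this excerpt.

```python
from statistics import fmean

# Indicator order follows the methodology list (1)-(5):
# co-publications with industrial partners, patents awarded (absolute),
# patents awarded (size-normalized), industry co-patents, publications cited in patents.
T10_INDICATORS = {
    "UC Berkeley": [7.10, 98.94, 2.00, 10.02, 2.30],
    "Harvard": [8.00, 66.12, 1.45, 8.26, 3.30],
    "MIT": [10.50, 57.72, 4.04, 5.86, 4.90],
    "Princeton": [7.30, 5.88, 0.61, 11.11, 1.20],
}


def gpi_kt(values: list[float]) -> float:
    """Composite KT score as the unweighted mean of the five indicators (assumed rule)."""
    if len(values) != 5:
        raise ValueError("expected exactly five indicator values")
    return fmean(values)


for name, values in T10_INDICATORS.items():
    print(f"{name}: {gpi_kt(values):.2f}%")
# UC Berkeley: 24.07%
# Harvard: 17.43%
# MIT: 16.60%
# Princeton: 5.22%
```

An unweighted mean lets the widest-ranging column dominate: Berkeley's lead over MIT comes almost entirely from the absolute-patent indicator, even though MIT scores higher on co-publications and publications cited in patents.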

3. Methodology Application and Results

3.1. First Step

3.2. Second Step

- (1) Co-publications with industrial partners;

- (2) Patents awarded (absolute numbers);

- (3) Patents awarded (size-normalized);

- (4) Industry co-patents;

- (5) Publications cited in patents.

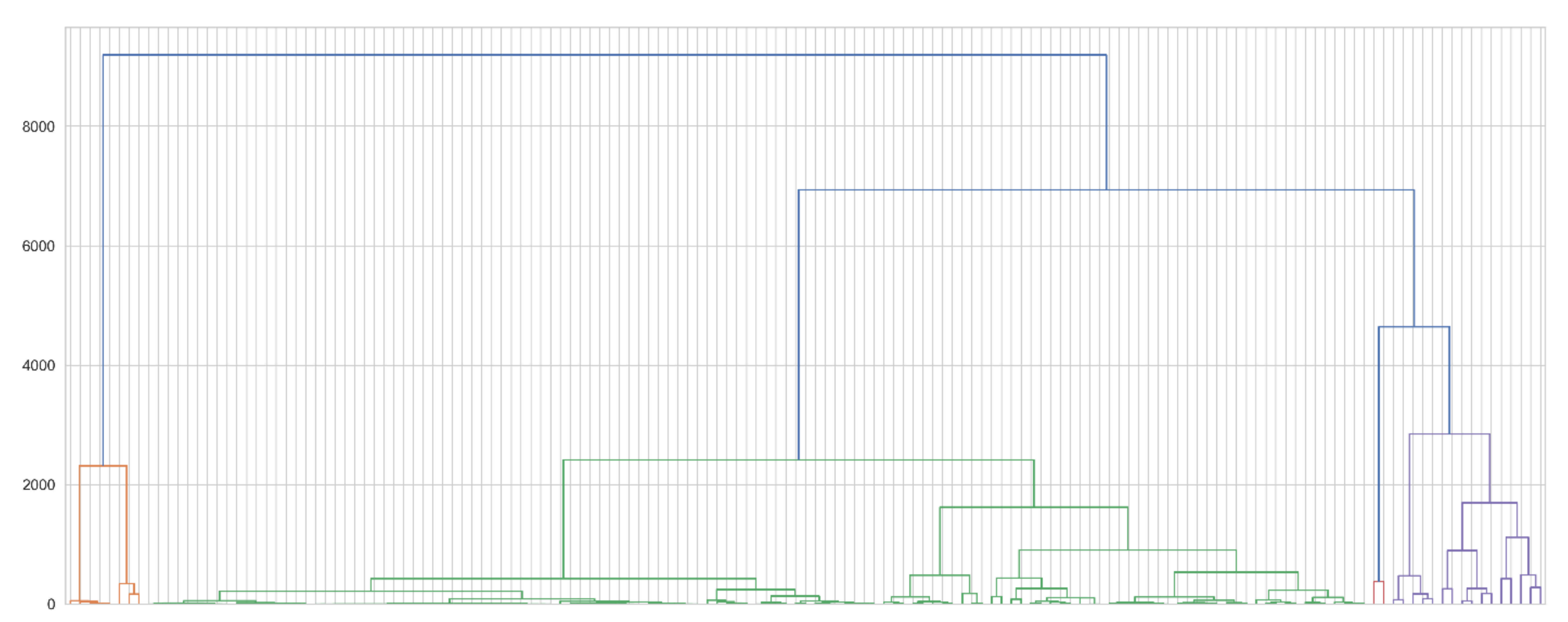
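The Second Step groups universities by the five indicators above, and the cluster table at the end of the section lists the resulting clusters. The clustering algorithm is not named in this excerpt, although the reference list cites both divisive hierarchical clustering and k-means. As an illustration only, here is a plain k-means sketch (Lloyd's algorithm) over indicator vectors. It seeds the centroids with the first k points so that the result is reproducible; library implementations typically use random or k-means++ seeding with several restarts instead.

```python
import math


def kmeans(points: list[list[float]], k: int,
           max_iter: int = 100) -> tuple[list[int], list[list[float]]]:
    """Lloyd's k-means on indicator vectors; returns (labels, centroids).

    Centroids are seeded with the first k points (deterministic, for illustration).
    """
    if not 1 <= k <= len(points):
        raise ValueError("k must be between 1 and the number of points")
    centroids = [list(map(float, p)) for p in points[:k]]
    labels = [-1] * len(points)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean distance).
        new_labels = [min(range(k), key=lambda c: math.dist(p, centroids[c]))
                      for p in points]
        if new_labels == labels:
            break  # no assignment changed: converged
        labels = new_labels
        # Update step: each centroid moves to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Toy data with two obvious groups; real input would be one five-indicator row per university.
demo_labels, demo_centroids = kmeans([[0, 0], [0, 1], [10, 10], [10, 11]], k=2)
print(demo_labels)     # [0, 0, 1, 1]
print(demo_centroids)  # [[0.0, 0.5], [10.0, 10.5]]
```

The indicators sit on very different scales (the absolute-patent column spans roughly 4% to 100%, the co-publication column about 5% to 11%), so Euclidean distances are dominated by the widest column unless the columns are standardized first; whether and how the Second Step standardizes them is not stated here.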

3.3. Third Step

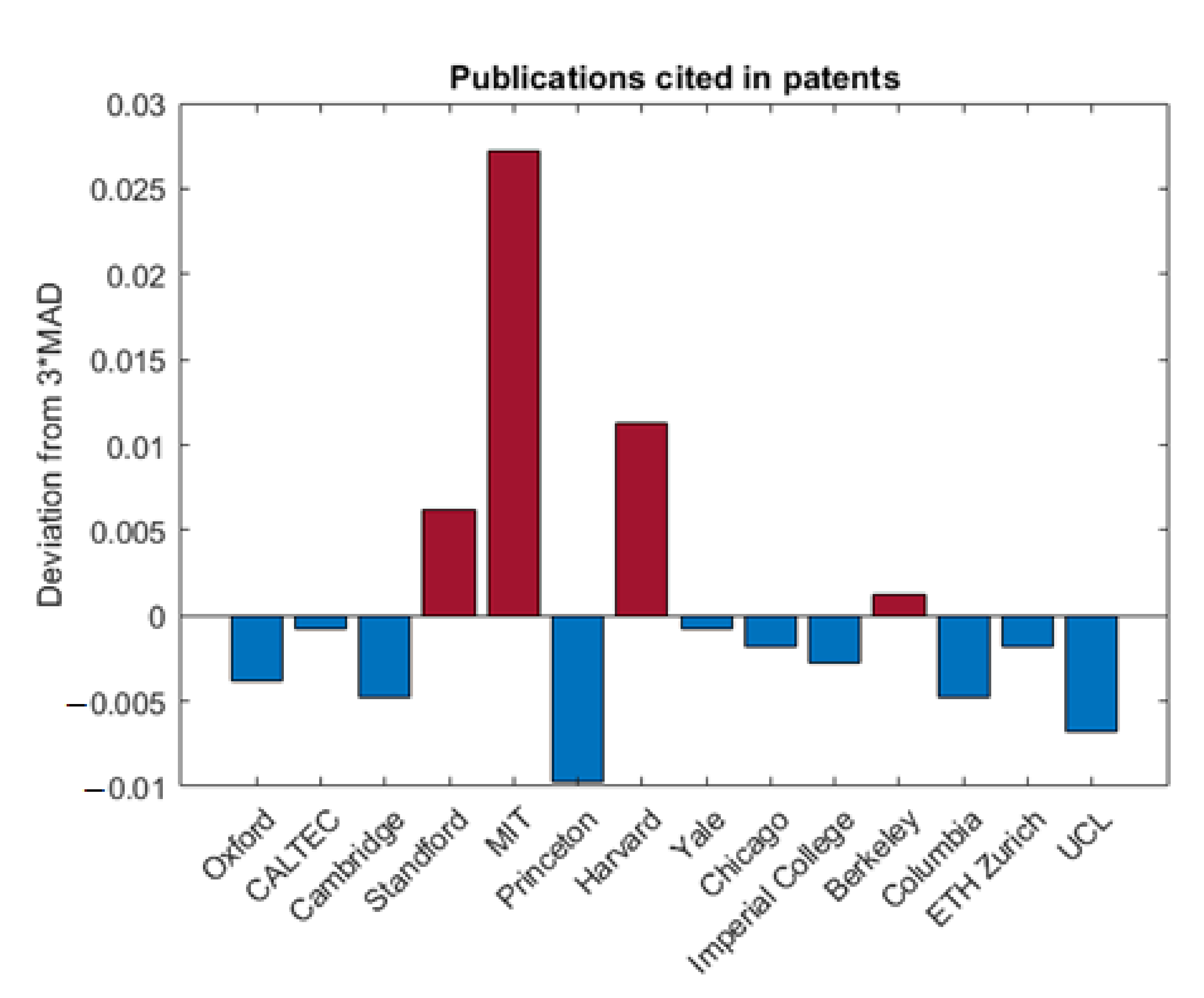

3.4. Fourth Step
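The Discussion reports that, in this step, only MIT and Harvard stand out from the other T10 universities when “co-publications with industrial partners”, “patents awarded (absolute number)”, and “publications cited in patents” are used jointly. How “stand out” is operationalized is not stated in this excerpt. The reference list cites Leys et al. on detecting outliers with the median absolute deviation (MAD), so the sketch below shows that rule as one plausible option, not as the paper's confirmed procedure: a value is flagged when |x - median| / (1.4826 · MAD) exceeds a threshold. The default threshold of 2.5 and the “flag only if outlying on every indicator” rule in the hypothetical `joint_outliers` helper are assumptions made for illustration.

```python
from statistics import median

# 1.4826 makes the scaled MAD a consistent estimator of the standard
# deviation for normally distributed data.
MAD_SCALE = 1.4826


def mad_outliers(values: list[float], threshold: float = 2.5) -> list[int]:
    """Indices whose robust z-score |x - median| / (1.4826 * MAD) exceeds threshold."""
    med = median(values)
    mad = MAD_SCALE * median([abs(v - med) for v in values])
    if mad == 0:
        return []  # no spread around the median: the robust z-score is undefined
    return [i for i, v in enumerate(values) if abs(v - med) / mad > threshold]


def joint_outliers(columns: list[list[float]], threshold: float = 2.5) -> list[int]:
    """Hypothetical joint rule: flag a row only if it is an outlier in every column."""
    if not columns:
        return []
    flagged = [set(mad_outliers(col, threshold)) for col in columns]
    return sorted(set.intersection(*flagged))


# Toy columns (not data from the paper): row 4 is extreme in both.
print(mad_outliers([1, 2, 3, 4, 100]))                        # [4]
print(joint_outliers([[1, 2, 3, 4, 100], [5, 5, 6, 7, 90]]))  # [4]
```

Unlike a mean-and-standard-deviation rule, the median and MAD are barely moved by the extreme values themselves, which matters when a few universities dominate a column as they do in the patent indicators.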

4. Discussion

- According to the results in Section 3.2, only four universities in T10 are clearly present in the identified clusters: Stanford University, CALTECH, the University of California, Berkeley (UCB), and Harvard University;

- As emerges from Section 3.4, only 2 of the 14 universities (MIT and Harvard) stand out from the others when all three indicators, “co-publications with industrial partners”, “patents awarded (absolute number)”, and “publications cited in patents”, are used jointly;

- Combining the two previous results, only 5 of the 14 universities in T10 (Berkeley, Stanford, MIT, Harvard, CALTECH) show a high level of KT performance compared with the remaining universities in T100: they rank within the first 30 positions out of 123;

- A joint reading of the results obtained in Section 3 does not yield a coherent and clear interpretation. The third mission, and the process of knowledge transfer toward the territory, can manifest itself in a variety of forms that are difficult to quantify with simple indicators. KT is so complex and multifaceted that it cannot be reduced to a few significant elements, and the quantitative data collected probably need to be complemented with qualitative and contextual data;

- The most popular global university rankings probably fail to capture third mission activities effectively; they certainly perform better for the other university missions, teaching and research.
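The third point of the list can be checked directly against the tables: the union of the four universities found in the clusters and the two that stand out contains five universities, and these are exactly the T10 members ranked within the first 30 positions of T100 by GPI KT in the position table. The short labels below are abbreviations introduced for this check.

```python
# Short labels are abbreviations introduced for this check.
in_clusters = {"Stanford", "Caltech", "UC Berkeley", "Harvard"}  # first point
stand_out = {"MIT", "Harvard"}                                   # second point

# T10 positions in T100 by GPI KT, transcribed from the position table.
t100_position = {
    "UC Berkeley": 6, "Harvard": 14, "MIT": 15, "Stanford": 26, "Caltech": 30,
    "ETH Zurich": 31, "Cambridge": 45, "Chicago": 62, "Columbia": 65, "UCL": 71,
    "Yale": 79, "Oxford": 84, "Imperial": 88, "Princeton": 93,
}

combined = in_clusters | stand_out
top30 = {name for name, position in t100_position.items() if position <= 30}

print(sorted(combined))   # ['Caltech', 'Harvard', 'MIT', 'Stanford', 'UC Berkeley']
print(combined == top30)  # True
```

Harvard appears in both of the first two results, which is why four plus two universities yield five rather than six.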

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Secundo, G.; de Beer, C.; Schutte, C.S.L.; Passiante, G. Mobilising Intellectual Capital to Improve European Universities’ Competitiveness: The Technology Transfer Offices’ Role. J. Intellect. Cap. 2017, 18, 607–624.

2. Agasisti, T.; Barra, C.; Zotti, R. Research, Knowledge Transfer, and Innovation: The Effect of Italian Universities’ Efficiency on Local Economic Development 2006–2012. J. Reg. Sci. 2019, 59, 819–849.

3. Research & Innovation Valorisation Channels and Tools. Publications Office of the EU. Available online: https://op.europa.eu/en/web/eu-law-and-publications/publication-detail/-/publication/f35fded6-bc0b-11ea-811c-01aa75ed71a1 (accessed on 29 October 2022).

4. World Economic Forum. How Universities Can Become a Platform for Social Change. Available online: https://www.weforum.org/agenda/2019/06/universities-platform-social-change-tokyo/ (accessed on 29 October 2022).

5. Trippl, M.; Sinozic, T.; Lawton Smith, H. The Role of Universities in Regional Development: Conceptual Models and Policy Institutions in the UK, Sweden and Austria. Eur. Plan. Stud. 2015, 23, 1722–1740.

6. Cesaroni, F.; Piccaluga, A. The Activities of University Knowledge Transfer Offices: Towards the Third Mission in Italy. J. Technol. Transf. 2016, 41, 753–777.

7. di Berardino, D.; Corsi, C. A Quality Evaluation Approach to Disclosing Third Mission Activities and Intellectual Capital in Italian Universities. J. Intellect. Cap. 2018, 19, 178–201.

8. Compagnucci, L.; Spigarelli, F. The Third Mission of the University: A Systematic Literature Review on Potentials and Constraints. Technol. Forecast. Soc. Change 2020, 161, 120284.

9. Abreu, M.; Demirel, P.; Grinevich, V.; Karataş-Özkan, M. Entrepreneurial Practices in Research-Intensive and Teaching-Led Universities. Small Bus. Econ. 2016, 47, 695–717.

10. Urdari, C.; Farcas, T.V.; Tiron-Tudor, A. Assessing the Legitimacy of HEIs’ Contributions to Society: The Perspective of International Rankings. Sustain. Account. Manag. Policy J. 2017, 8, 191–215.

11. Backs, S.; Günther, M.; Stummer, C. Stimulating Academic Patenting in a University Ecosystem: An Agent-Based Simulation Approach. J. Technol. Transf. 2019, 44, 434–461.

12. Zawdie, G. Knowledge Exchange and the Third Mission of Universities: Introduction: The Triple Helix and the Third Mission—Schumpeter Revisited. Ind. High. Educ. 2010, 24, 151–155.

13. Lee, J.J.; Vance, H.; Stensaker, B.; Ghosh, S. Global Rankings at a Local Cost? The Strategic Pursuit of Status and the Third Mission. Comp. Educ. 2020, 56, 236–256.

14. Montesinos, P.; Carot, J.M.; Martinez, J.M.; Mora, F. Third Mission Ranking for World Class Universities: Beyond Teaching and Research. High. Educ. Eur. 2008, 33, 259–271.

15. Kapetaniou, C.; Lee, S.H. A Framework for Assessing the Performance of Universities: The Case of Cyprus. Technol. Forecast. Soc. Change 2017, 123, 169–180.

16. Laredo, P. Revisiting the Third Mission of Universities: Toward a Renewed Categorization of University Activities? High. Educ. Policy 2007, 20, 441–456.

17. Mariani, G.; Carlesi, A.; Scarfò, A.A. Academic Spinoff as a Value Driver of Intellectual Capital: The Case of University of Pisa. J. Intellect. Cap. 2018, 19, 202–226.

18. Markuerkiaga, L.; Caiazza, R.; Igartua, J.I.; Errasti, N. Factors Fostering Students’ Spin-off Firm Formation: An Empirical Comparative Study of Universities from North and South Europe. J. Manag. Dev. 2021, 35, 814–846.

19. Giuri, P.; Munari, F.; Scandura, A.; Toschi, L. The Strategic Orientation of Universities in Knowledge Transfer Activities. Technol. Forecast. Soc. Change 2019, 138, 261–278.

20. Pausits, A. The Knowledge Society and Diversification of Higher Education: From the Social Contract to the Mission of Universities. In European Higher Education Area; Springer: Cham, Switzerland, 2015; pp. 267–284.

21. The Future of Higher Education Is Social Impact. Available online: https://ssir.org/articles/entry/the_future_of_higher_education_is_social_impact (accessed on 29 October 2022).

22. Campbell, A.; Cavalade, C.; Haunold, C.; Karanikic, P.; Piccaluga, A. Knowledge Transfer Metrics—Towards a European-Wide Set of Harmonised Indicators; Publications Office of the European Union: Luxembourg, 2020.

23. Scanlan, J. A Capability Maturity Framework for Knowledge Transfer. Ind. High. Educ. 2018, 32, 235–244.

24. Azagra-Caro, J.M.; Barberá-Tomás, D.; Edwards-Schachter, M.; Tur, E.M. Dynamic Interactions between University-Industry Knowledge Transfer Channels: A Case Study of the Most Highly Cited Academic Patent. Res. Policy 2017, 46, 463–474.

25. Wynn, M.G. University-Industry Technology Transfer in the UK: Emerging Research and Opportunities; IGI Global: Hershey, PA, USA, 2018.

26. Griliches, Z. The Search for R&D Spillovers. Scand. J. Econ. 1992, 94, S29.

27. Scarrà, D.; Piccaluga, A. The Impact of Technology Transfer and Knowledge Spillover from Big Science: A Literature Review. Technovation 2022, 116, 102165.

28. O’Reilly, N.M.; Robbins, P.; Scanlan, J. Dynamic Capabilities and the Entrepreneurial University: A Perspective on the Knowledge Transfer Capabilities of Universities. J. Small Bus. Entrep. 2019, 31, 243–263.

29. Olcay, G.A.; Bulu, M. Is Measuring the Knowledge Creation of Universities Possible?: A Review of University Rankings. Technol. Forecast. Soc. Change 2017, 123, 153–160.

30. Landinez, L.; Kliewe, T.; Diriba, H. Entrepreneurial University Indicators in Global University Rankings. In Developing Engaged and Entrepreneurial Universities: Theories, Concepts and Empirical Findings; Springer: Singapore, 2019; pp. 57–85.

31. European Commission Press Release. New International University Ranking: Commission Welcomes Launch of U-Multirank. Available online: https://ec.europa.eu/commission/presscorner/detail/en/IP_14_548 (accessed on 29 October 2022).

32. Dip, J.A. What Does U-Multirank Tell Us about Knowledge Transfer and Research? Scientometrics 2021, 126, 3011–3039.

33. Bellantuono, L.; Monaco, A.; Tangaro, S.; Amoroso, N.; Aquaro, V.; Bellotti, R. An Equity-Oriented Rethink of Global Rankings with Complex Networks Mapping Development. Sci. Rep. 2020, 10, 18046.

34. Marhl, M.; Pausits, A. Third Mission Indicators for New Ranking Methodologies. Lifelong Educ. XXI Century 2013, 1, 89–101.

35. Ringel, L.; Espeland, W.; Sauder, M.; Werron, T. Worlds of Rankings. Res. Sociol. Organ. 2021, 74, 1–23.

36. Dill, D.D.; Soo, M. Academic Quality, League Tables, and Public Policy: A Cross-National Analysis of University Ranking Systems. High. Educ. 2005, 49, 495–533.

37. Bougnol, M.L.; Dulá, J.H. Technical Pitfalls in University Rankings. High. Educ. 2015, 69, 859–866.

38. Sauder, M.; Espeland, W.N. The Discipline of Rankings: Tight Coupling and Organizational Change. Am. Sociol. Rev. 2009, 74, 63–82.

39. Oțoiu, A.; Țițan, E. To What Extent ICT Resources Influence the Learning Experience? An Inquiry Using U-Multirank Data. INTED2021 Proc. 2021, 1, 40–45.

40. Johnes, J. University Rankings: What Do They Really Show? Scientometrics 2018, 115, 585–606.

41. Frondizi, R.; Fantauzzi, C.; Colasanti, N.; Fiorani, G. The Evaluation of Universities’ Third Mission and Intellectual Capital: Theoretical Analysis and Application to Italy. Sustainability 2019, 11, 3455.

42. Hazelkorn, E.; Loukkola, T.; Zhang, T. Rankings in Institutional Strategies and Processes: Impact or Illusion?; European University Association: Brussels, Belgium, 2014.

43. Bellantuono, L.; Monaco, A.; Amoroso, N.; Aquaro, V.; Bardoscia, M.; Loiotile, A.D.; Lombardi, A.; Tangaro, S.; Bellotti, R. Territorial Bias in University Rankings: A Complex Network Approach. Sci. Rep. 2022, 12, 4995.

44. Degli Esposti, M.; Vidotto, G. Il Gruppo di Lavoro CRUI sui Ranking Internazionali: Attività, Risultati e Prospettive 2017–2020; Fondazione CRUI: Roma, Italy, 2020; ISBN 978-88-96524-32-9.

45. Salomaa, M.; Cinar, R.; Charles, D. Rankings and Regional Development: The Cause or the Symptom of Universities’ Insufficient Regional Contributions? High. Educ. Gov. Policy 2021, 2, 31–44.

46. Rauhvargers, A. Global University Rankings and Their Impact—Report II; European University Association: Brussels, Belgium, 2013; pp. 21–23.

47. Loukkola, T. Europe: Impact and Influence of Rankings in Higher Education. In Global Rankings and the Geopolitics of Higher Education; Routledge: London, UK, 2016; pp. 127–139.

48. Pusser, B.; Marginson, S. University Rankings in Critical Perspective. J. High. Educ. 2013, 84, 544–568.

49. Aguillo, I.F.; Bar-Ilan, J.; Levene, M.; Ortega, J.L. Comparing University Rankings. Scientometrics 2010, 85, 243–256.

50. Moed, H.F. A Critical Comparative Analysis of Five World University Rankings. Scientometrics 2017, 110, 967–990.

51. Academic Ranking of World Universities (ARWU). Available online: https://www.shanghairanking.com/rankings/arwu/2020 (accessed on 20 September 2021).

52. QS World University Rankings® (QSWUR). Available online: https://www.topuniversities.com/university-rankings/world-university-rankings/2020 (accessed on 20 September 2021).

53. Times Higher Education World University Rankings (THEWUR). Available online: https://www.timeshighereducation.com/world-university-rankings/2020/worldranking#!/page/0/length/25/sort_by/rank/sort_order/asc/cols/stats (accessed on 20 September 2021).

54. Van Vught, F.; Ziegele, F. Design and Testing the Feasibility of a Multidimensional Global University Ranking: Final Report; Consortium for Higher Education and Research Performance Assessment: Twente, The Netherlands, 2011.

55. Prado, A. Performances of the Brazilian Universities in the “U-Multirank” in the Period 2017–2020; SciELO Preprints: São Paulo, Brazil, 2021.

56. Decuypere, M.; Landri, P. Governing by Visual Shapes: University Rankings, Digital Education Platforms and Cosmologies of Higher Education. Crit. Stud. Educ. 2020, 62, 17–33.

57. Kaiser, F.; Zeeman, N. U-Multirank: Data Analytics and Scientometrics. In Research Analytics; Auerbach Publications: Boca Raton, FL, USA, 2017; pp. 185–220.

58. Westerheijden, D.F.; Federkeil, G. U-Multirank: A European Multidimensional Transparency Tool in Higher Education. Int. High. Educ. 2018, 4, 77–96.

59. U-Multirank. Available online: https://www.umultirank.org/about/u-multirank/the-project/ (accessed on 20 September 2022).

60. Roux, M. A Comparative Study of Divisive Hierarchical Clustering Algorithms. arXiv 2015, arXiv:1506.08977.

61. Ahmed, M.; Seraj, R.; Islam, S.M.S. The K-Means Algorithm: A Comprehensive Survey and Performance Evaluation. Electronics 2020, 9, 1295.

62. Wiley. Robust Statistics, 2nd Edition. Available online: https://www.wiley.com/en-us/Robust+Statistics%2C+2nd+Edition-p-9780470129906 (accessed on 29 October 2022).

63. Leys, C.; Ley, C.; Klein, O.; Bernard, P.; Licata, L. Detecting Outliers: Do Not Use Standard Deviation around the Mean, Use Absolute Deviation around the Median. J. Exp. Soc. Psychol. 2013, 49, 764–766.

64. Simmons, J.P.; Nelson, L.D.; Simonsohn, U. False-Positive Psychology: Undisclosed Flexibility in Data Collection and Analysis Allows Presenting Anything as Significant. Psychol. Sci. 2011, 22, 1359–1366.

65. Van de Wiel, M.A.; Bucchianico, A.D. Fast Computation of the Exact Null Distribution of Spearman’s ρ and Page’s L Statistic for Samples with and without Ties. J. Stat. Plan. Inference 2001, 92, 133–145.

66. Rossi, F.; Rosli, A. Indicators of University–Industry Knowledge Transfer Performance and Their Implications for Universities: Evidence from the United Kingdom. Stud. High. Educ. 2014, 40, 1970–1991.

67. Piirainen, K.A.; Andersen, A.D.; Andersen, P.D. Foresight and the Third Mission of Universities: The Case for Innovation System Foresight. Foresight 2016, 18, 24–40.

68. Djoundourian, S.; Shahin, W. Academia–Business Cooperation: A Strategic Plan for an Innovative Executive Education Program. Ind. High. Educ. 2022, 42, 09504222221083852.

69. Kohus, Z.; Baracskai, Z.; Czako, K. The Relationship between University-Industry Co-Publication Outputs. In Proceedings of the 20th International Scientific Conference on Economic and Social Development; Varazdin Development and Entrepreneurship Agency: Budapest, Hungary, 4–5 September 2020; pp. 109–122.

70. Perkmann, M.; Neely, A.; Walsh, K. How Should Firms Evaluate Success in University–Industry Alliances? A Performance Measurement System. R&D Manag. 2011, 41, 202–216.

71. Tijssen, R. Joint Research Publications: A Performance Indicator of University-Industry Collaboration. Assess. Eval. High. Educ. 2011, 5, 19–40.

72. Tijssen, R.J.W.; van Leeuwen, T.N.; van Wijk, E. Benchmarking University-Industry Research Cooperation Worldwide: Performance Measurements and Indicators Based on Co-Authorship Data for the World’s Largest Universities. Res. Eval. 2009, 18, 13–24.

73. Giunta, A.; Pericoli, F.M.; Pierucci, E. University–Industry Collaboration in the Biopharmaceuticals: The Italian Case. J. Technol. Transf. 2016, 41, 818–840.

74. Levy, R.; Roux, P.; Wolff, S. An Analysis of Science-Industry Collaborative Patterns in a Large European University. J. Technol. Transf. 2009, 34, 1–23.

75. Tijssen, R.J.W. Universities and Industrially Relevant Science: Towards Measurement Models and Indicators of Entrepreneurial Orientation. Res. Policy 2006, 35, 1569–1585.

76. Yegros-Yegros, A.; Azagra-Caro, J.M.; López-Ferrer, M.; Tijssen, R.J.W. Do University–Industry Co-Publication Outputs Correspond with University Funding from Firms? Res. Eval. 2016, 25, 136–150.

77. Wong, P.K.; Singh, A. Do Co-Publications with Industry Lead to Higher Levels of University Technology Commercialization Activity? Scientometrics 2013, 97, 245–265.

78. University-Industry Collaboration: A Closer Look for Research Leaders. Available online: https://www.elsevier.com/research-intelligence/university-industry-collaboration (accessed on 29 October 2022).

79. Seppo, M.; Lilles, A. Indicators Measuring University-Industry Cooperation. Est. Discuss. Econ. Policy 2012, 20, 204.

80. Katz, J.S.; Martin, B.R. What Is Research Collaboration? Res. Policy 1997, 26, 1–18.

81. Metrics for Knowledge Transfer from Public Research Organisations in Europe: Report from the European Commission’s Expert Group on Knowledge Transfer Metrics. Available online: https://ec.europa.eu/info/research-and-innovation_en (accessed on 20 September 2022).

82. Huang, Z.; Chen, H.; Yip, A.; Ng, G.; Guo, F.; Chen, Z.-K.; Roco, M.C. Longitudinal Patent Analysis for Nanoscale Science and Engineering: Country, Institution and Technology Field. J. Nanopart. Res. 2003, 5, 333–363.

83. Finne, H.; Day, A.; Piccaluga, A.; Spithoven, A.; Walter, P.; Wellen, D. A Composite Indicator for Knowledge Transfer: Report from the European Commission’s Expert Group on Knowledge Transfer Indicators. Available online: https://op.europa.eu/en/publication-detail/-/publication/d3260d80-5e59-11ed-92ed-01aa75ed71a1 (accessed on 5 October 2022).

84. Archive: Patent Statistics—Statistics Explained. Available online: https://ec.europa.eu/eurostat/statistics-explained/index.php?title=Archive:Patent_statistics&oldid=112826 (accessed on 29 October 2022).

85. Choi, J.; Jang, D.; Jun, S.; Park, S. A Predictive Model of Technology Transfer Using Patent Analysis. Sustainability 2015, 7, 16175–16195.

86. Hammarfelt, B. Linking Science to Technology: The “Patent Paper Citation” and the Rise of Patentometrics in the 1980s. J. Doc. 2021, 77, 1413–1429.

87. Yamashita, Y. Exploring Characteristics of Patent-Paper Citations and Development of New Indicators; IntechOpen: London, UK, 2018.

88. OECD iLibrary. OECD Science, Technology and Industry Scoreboard 2015: Innovation for Growth and Society. Available online: https://www.oecd-ilibrary.org/science-and-technology/oecd-science-technology-and-industry-scoreboard-2015_sti_scoreboard-2015-en (accessed on 29 October 2022).

89. Hamano, Y. University–Industry Collaboration; WIPO: Geneva, Switzerland, 2018.

90. Li, L.; Chen, Q.; Jia, X.; Herrera-Viedma, E. Co-Patents’ Commercialization: Evidence from China. Econ. Res.-Ekon. Istraživanja 2020, 34, 1709–1726. Available online: http://www.tandfonline.com/action/authorSubmission?journalCode=rero20&page=instructions (accessed on 20 September 2022).

91. Cerulli, G.; Marin, G.; Pierucci, E.; Potì, B. Do Company-Owned Academic Patents Influence Firm Performance? Evidence from the Italian Industry. J. Technol. Transf. 2022, 47, 242–269.

92. Peeters, H.; Callaert, J.; van Looy, B. Do Firms Profit from Involving Academics When Developing Technology? J. Technol. Transf. 2020, 45, 494–521.

93. Hudson, J.; Khazragui, H.F. Into the Valley of Death: Research to Innovation. Drug Discov. Today 2013, 18, 610–613.

94. Hockaday, T. University Technology Transfer: What It Is and How to Do It; Johns Hopkins University Press: Baltimore, MD, USA, 2020; p. 340.

| Rank | ARWU University | ARWU Country | QSWUR University | QSWUR Country | THEWUR University | THEWUR Country |
|---|---|---|---|---|---|---|
| 1 | Harvard University | US | Massachusetts Institute of Technology (MIT) | US | University of Oxford | UK |
| 2 | Stanford University | US | Stanford University | US | California Institute of Technology (CALTECH) | US |
| 3 | University of Cambridge | UK | Harvard University | US | University of Cambridge | UK |
| 4 | Massachusetts Institute of Technology (MIT) | US | University of Oxford | UK | Stanford University | US |
| 5 | University of California, Berkeley | US | California Institute of Technology (CALTECH) | US | Massachusetts Institute of Technology (MIT) | US |
| 6 | Princeton University | US | ETH Zurich—Swiss Federal Institute of Technology | CH | Princeton University | US |
| 7 | Columbia University | US | University of Cambridge | UK | Harvard University | US |
| 8 | California Institute of Technology (CALTECH) | US | University College London (UCL) | UK | Yale University | US |
| 9 | University of Oxford | UK | Imperial College London | UK | University of Chicago | US |
| 10 | University of Chicago | US | University of Chicago | US | Imperial College London | UK |

| University | Co-Publications with Industrial Partners | Patents Awarded (Absolute Number) | Patents Awarded (Size-Normalized) | Industry Co-Patents | Publications Cited in Patents | GPI KT |
|---|---|---|---|---|---|---|
| University of California, Berkeley (UCB) | 7.10% | 98.94% | 2.00% | 10.02% | 2.30% | 24.07% |
| Harvard University | 8.00% | 66.12% | 1.45% | 8.26% | 3.30% | 17.43% |
| Massachusetts Institute of Technology (MIT) | 10.50% | 57.72% | 4.04% | 5.86% | 4.90% | 16.60% |
| Stanford University | 9.40% | 40.63% | 1.68% | 11.13% | 2.80% | 13.13% |
| California Institute of Technology (CALTECH) | 7.60% | 29.42% | 10.88% | 7.33% | 2.10% | 11.47% |
| ETH Zurich—Swiss Federal Institute of Technology | 8.70% | 6.94% | 0.29% | 38.91% | 2.00% | 11.37% |
| University of Cambridge | 8.20% | 4.86% | 0.22% | 25.59% | 1.70% | 8.11% |
| University of Chicago | 6.80% | 4.89% | 0.24% | 20.76% | 2.00% | 6.94% |
| Columbia University | 7.80% | 19.97% | 0.54% | 4.23% | 1.70% | 6.85% |
| University College London (UCL) | 7.40% | 8.30% | 0.18% | 14.88% | 1.50% | 6.45% |
| Yale University | 6.70% | 8.20% | 0.51% | 11.97% | 2.10% | 5.90% |
| University of Oxford | 7.10% | 6.38% | 0.22% | 12.75% | 1.80% | 5.65% |
| Imperial College London | 10.20% | 4.17% | 0.20% | 10.95% | 1.90% | 5.48% |
| Princeton University | 7.30% | 5.88% | 0.61% | 11.11% | 1.20% | 5.22% |

| University | Position in T10 | Position in T100 according to GPI KT |
|---|---|---|
| University of California, Berkeley (UCB) | 1 | 6 |
| Harvard University | 2 | 14 |
| Massachusetts Institute of Technology (MIT) | 3 | 15 |
| Stanford University | 4 | 26 |
| California Institute of Technology (CALTECH) | 5 | 30 |
| ETH Zurich—Swiss Federal Institute of Technology | 6 | 31 |
| University of Cambridge | 7 | 45 |
| University of Chicago | 8 | 62 |
| Columbia University | 9 | 65 |
| University College London (UCL) | 10 | 71 |
| Yale University | 11 | 79 |
| University of Oxford | 12 | 84 |
| Imperial College London | 13 | 88 |
| Princeton University | 14 | 93 |
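The T100 positions above are consistent with sorting universities by GPI KT in descending order: in the T100 table that follows, the University of California, Berkeley (24.07%) is the sixth row and Harvard University (17.43%) the fourteenth. A minimal sketch, assuming that sort rule and using only the 14 rows shown in that table, so only these positions are meaningful:

```python
# GPI KT (%) for the 14 rows of the T100 table (short labels introduced here).
GPI_T100_TOP = {
    "Tsinghua": 32.07, "UC San Diego": 24.93, "UC Santa Barbara": 24.55,
    "UCLA": 24.50, "Boston University": 24.20, "UC Berkeley": 24.07,
    "UC Davis": 23.91, "University of Tokyo": 23.86, "Seoul National": 22.56,
    "Weizmann Institute": 21.99, "Kyoto": 19.65, "Tokyo Tech": 19.17,
    "Tohoku": 19.07, "Harvard": 17.43,
}


def positions(scores: dict[str, float]) -> dict[str, int]:
    """1-based positions by descending score; ties keep their input order."""
    ordered = sorted(scores, key=lambda name: scores[name], reverse=True)
    return {name: rank for rank, name in enumerate(ordered, start=1)}


pos = positions(GPI_T100_TOP)
print(pos["UC Berkeley"], pos["Harvard"])  # 6 14
```

Ties are left in input order here (Python's sort stays stable with `reverse=True`); a competition ranking such as 1, 2, 2, 4 would be a reasonable alternative, and this excerpt does not say which rule applies.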

| University | Co-Publications with Industrial Partners | Patents Awarded (Absolute Number) | Patents Awarded (Size-Normalized) | Industry Co-Patents | Publications Cited in Patents | GPI KT |
|---|---|---|---|---|---|---|
| Tsinghua University | 5.60% | 42.18% | 29.09% | 82.28% | 1.20% | 32.07% |
| University of California, San Diego (UCSD) | 10.60% | 98.98% | 2.34% | 10.02% | 2.70% | 24.93% |
| University of California, Santa Barbara | 8.20% | 98.94% | 3.30% | 10.02% | 2.30% | 24.55% |
| University of California, Los Angeles (UCLA) | 8.00% | 100.00% | 1.85% | 10.15% | 2.50% | 24.50% |
| Boston University | 7.90% | 4.46% | 100.00% | 6.85% | 1.80% | 24.20% |
| University of California, Berkeley (UCB) | 7.10% | 98.94% | 2.00% | 10.02% | 2.30% | 24.07% |
| University of California, Davis | 6.70% | 98.94% | 2.19% | 10.02% | 1.70% | 23.91% |
| The University of Tokyo | 8.30% | 19.11% | 19.36% | 70.63% | 1.90% | 23.86% |
| Seoul National University | 8.10% | 28.13% | 28.27% | 46.52% | 1.80% | 22.56% |
| Weizmann Institute of Science | 4.90% | 6.74% | 89.68% | 5.12% | 3.50% | 21.99% |
| Kyoto University | 8.80% | 12.93% | 17.01% | 57.71% | 1.80% | 19.65% |
| Tokyo Institute of Technology (Tokyo Tech) | 11.10% | 7.24% | 3.62% | 72.17% | 1.70% | 19.17% |
| Tohoku University | 10.20% | 13.98% | 0.69% | 68.89% | 1.60% | 19.07% |
| Harvard University | 8.00% | 66.12% | 1.45% | 8.26% | 3.30% | 17.43% |
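In the T100 table above, both patent columns are expressed as percentages, and each peaks at exactly 100.00% (UCLA for absolute patents, Boston University for size-normalized patents). That pattern is consistent with rescaling each raw count so that the largest value in T100 becomes 100%; the exact rule (division by the maximum, or min-max scaling) is not stated in this excerpt. A sketch of division by the maximum, with hypothetical raw counts:

```python
def scale_to_max(raw: dict[str, float]) -> dict[str, float]:
    """Express each value as a percentage of the largest value (largest -> 100%)."""
    top = max(raw.values())
    if top <= 0:
        raise ValueError("need at least one positive value")
    return {name: 100.0 * value / top for name, value in raw.items()}


# Hypothetical raw patent counts; not taken from the paper.
raw_patents = {"University A": 400, "University B": 100, "University C": 30}
print(scale_to_max(raw_patents))
# {'University A': 100.0, 'University B': 25.0, 'University C': 7.5}
```

Division by the maximum keeps zero at 0% and preserves ratios between universities, whereas min-max scaling would also send the smallest value in T100 to 0%.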

| Cluster | Universities Contained in the Cluster |
|---|---|
| 1 | University of California, Santa Barbara; University of California, Los Angeles (UCLA); University of California, Berkeley (UCB); University of California, Davis; University of California, San Diego (UCSD); Harvard University; KAIST—Korea Advanced Institute of Science & Technology |
| 2 | KU Leuven; University of Toronto; Boston University; Weizmann Institute of Science; The University of Queensland; Université Grenoble Alpes; The University of New South Wales (UNSW Sydney); The University of Melbourne; University of Copenhagen; Aarhus University; The Hong Kong University of Science and Technology; Sungkyunkwan University (SKKU); The University of Tokyo; Korea University; Kyoto University; Seoul National University; Tsinghua University |
| 3 | Stanford University; California Institute of Technology (CALTECH) |
| 4 | Universities in the rest of the world |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Demarinis Loiotile, A.; De Nicolò, F.; Agrimi, A.; Bellantuono, L.; La Rocca, M.; Monaco, A.; Pantaleo, E.; Tangaro, S.; Amoroso, N.; Bellotti, R. Best Practices in Knowledge Transfer: Insights from Top Universities. Sustainability 2022, 14, 15427. https://doi.org/10.3390/su142215427
