Developing an AI-Based Learning System for L2 Learners’ Authentic and Ubiquitous Learning in English Language

Abstract

1. Introduction

2. Literature Review

2.1. Technology-Supported Language Learning

2.2. Language Learning in Authentic and Ubiquitous Contexts

2.3. AI-Enabled Language Learning

3. Research Purpose and Research Questions

- (1) What is the AIELL system’s developmental process for facilitating students’ acquisition of English vocabulary and grammar?

- (2) What are the AIELL system’s key features for supporting students’ authentic and ubiquitous learning in English?

- (3) What is the correlation between design features and student engagement in the mobile learning environment?

4. Design and Development of the AIELL System

4.1. Design Process

4.2. Design Principles and Key Technologies

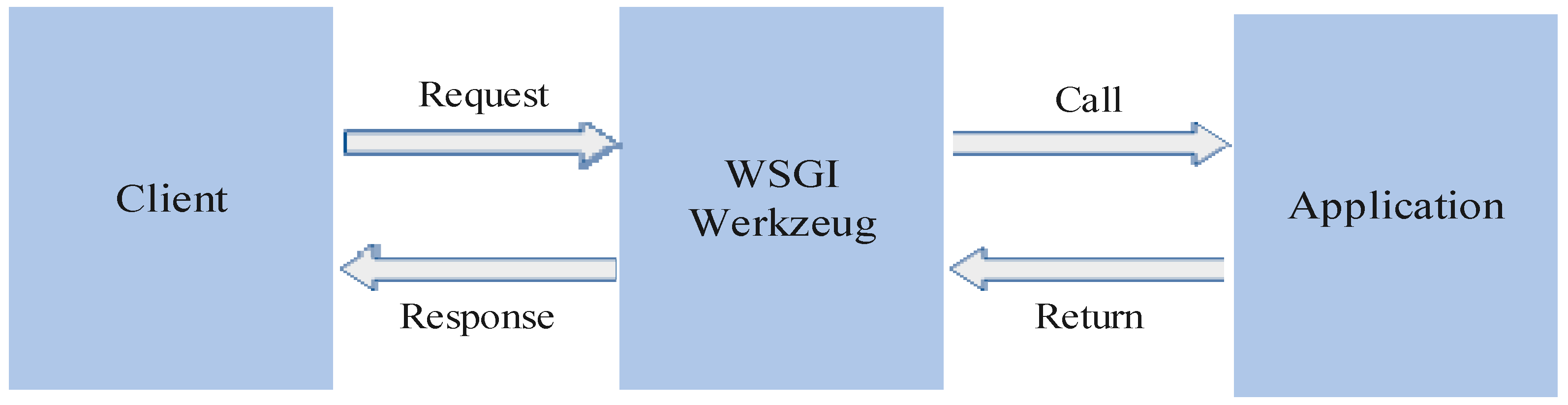

4.2.1. PyCharm and Flask for System Construction

- The user operates the browser and sends the request.

- The request is forwarded to the corresponding web server.

- The web server forwards the request to the web application, and the application processes the request.

- The application returns the result to the web server.

- The web server sends the response back to the browser, which presents it to the user.
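The five steps above are the request/response cycle defined by WSGI, the interface that a Flask application object implements. As a hedged sketch that runs without Flask installed, the code below uses only Python's standard-library `wsgiref` module to show where each step happens; the `/vocab` route, its word list, and the `simulate_request` helper are hypothetical illustrations, not part of AIELL. In a Flask app the routing branch would instead be a view function registered with `@app.route`.

```python
import json
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """Step 3: the web application processes the request the server hands it."""
    path = environ.get("PATH_INFO", "/")
    if path == "/vocab":
        # Hypothetical endpoint returning a small vocabulary list as JSON
        body = json.dumps({"words": ["apple", "chair"]}).encode("utf-8")
        status = "200 OK"
        headers = [("Content-Type", "application/json")]
    else:
        body = b"Not found"
        status = "404 Not Found"
        headers = [("Content-Type", "text/plain")]
    headers.append(("Content-Length", str(len(body))))
    # Step 4: hand status, headers and body back to the web server
    start_response(status, headers)
    return [body]


def simulate_request(path):
    """Hypothetical stand-in for steps 1, 2 and 5: build the environ a WSGI
    server would pass to the app, call the app, and collect what the
    browser would receive."""
    environ = {}
    setup_testing_defaults(environ)  # fills REQUEST_METHOD, SERVER_NAME, wsgi.* keys, etc.
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured["status"] = status
        captured["headers"] = dict(headers)

    body = b"".join(app(environ, start_response))
    return captured["status"], captured["headers"], body


# To serve the app for real (blocks until interrupted):
#   from wsgiref.simple_server import make_server
#   make_server("127.0.0.1", 8000, app).serve_forever()
```

For example, `simulate_request("/vocab")` returns the status `"200 OK"`, a JSON content type, and the encoded word list, while an unknown path yields `"404 Not Found"`. Keeping the application a plain callable is what lets any WSGI server (or a test harness like this one) sit between it and the browser.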

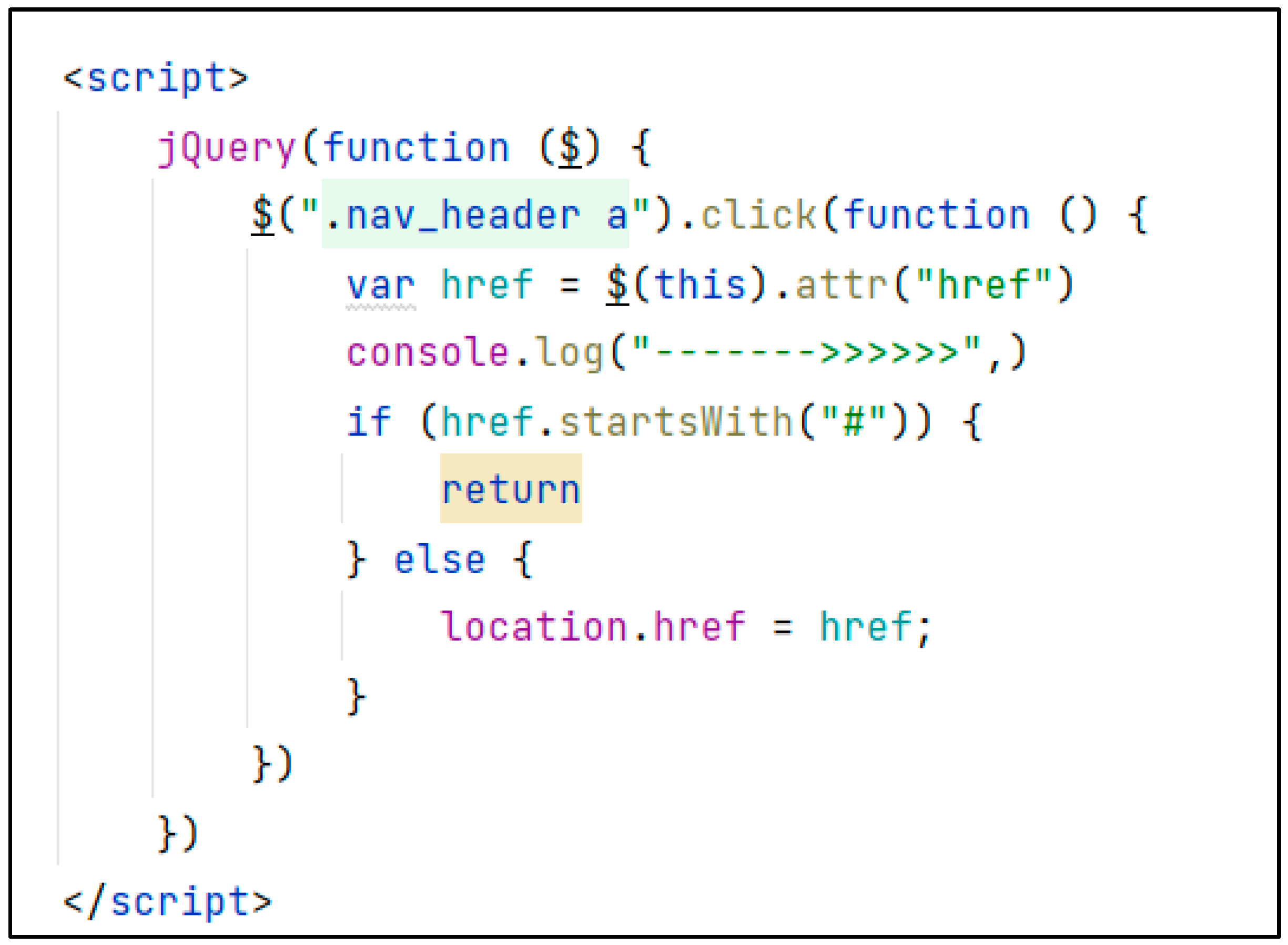

4.2.2. jQuery JavaScript Library

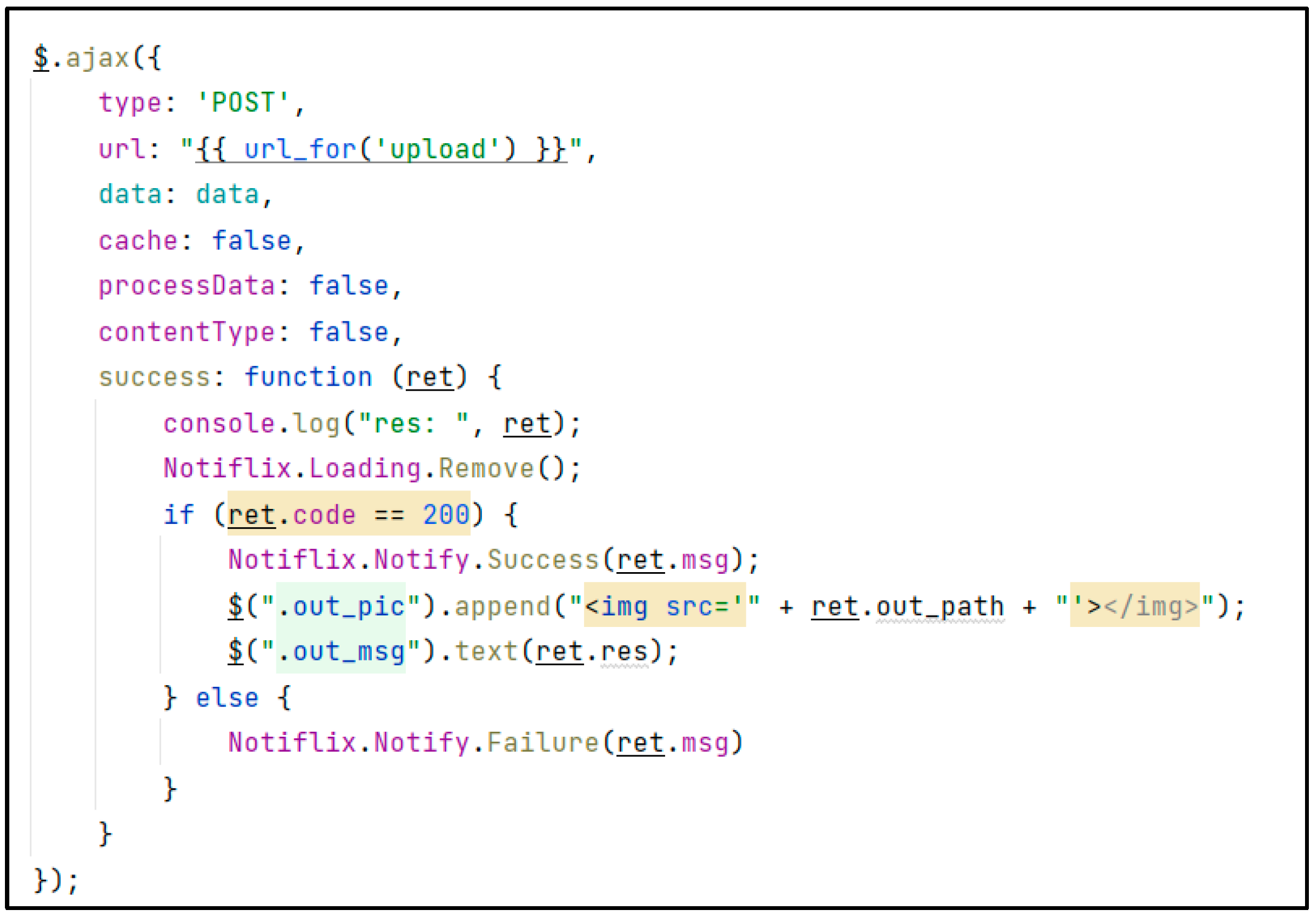

4.2.3. Ajax Asynchronous Request

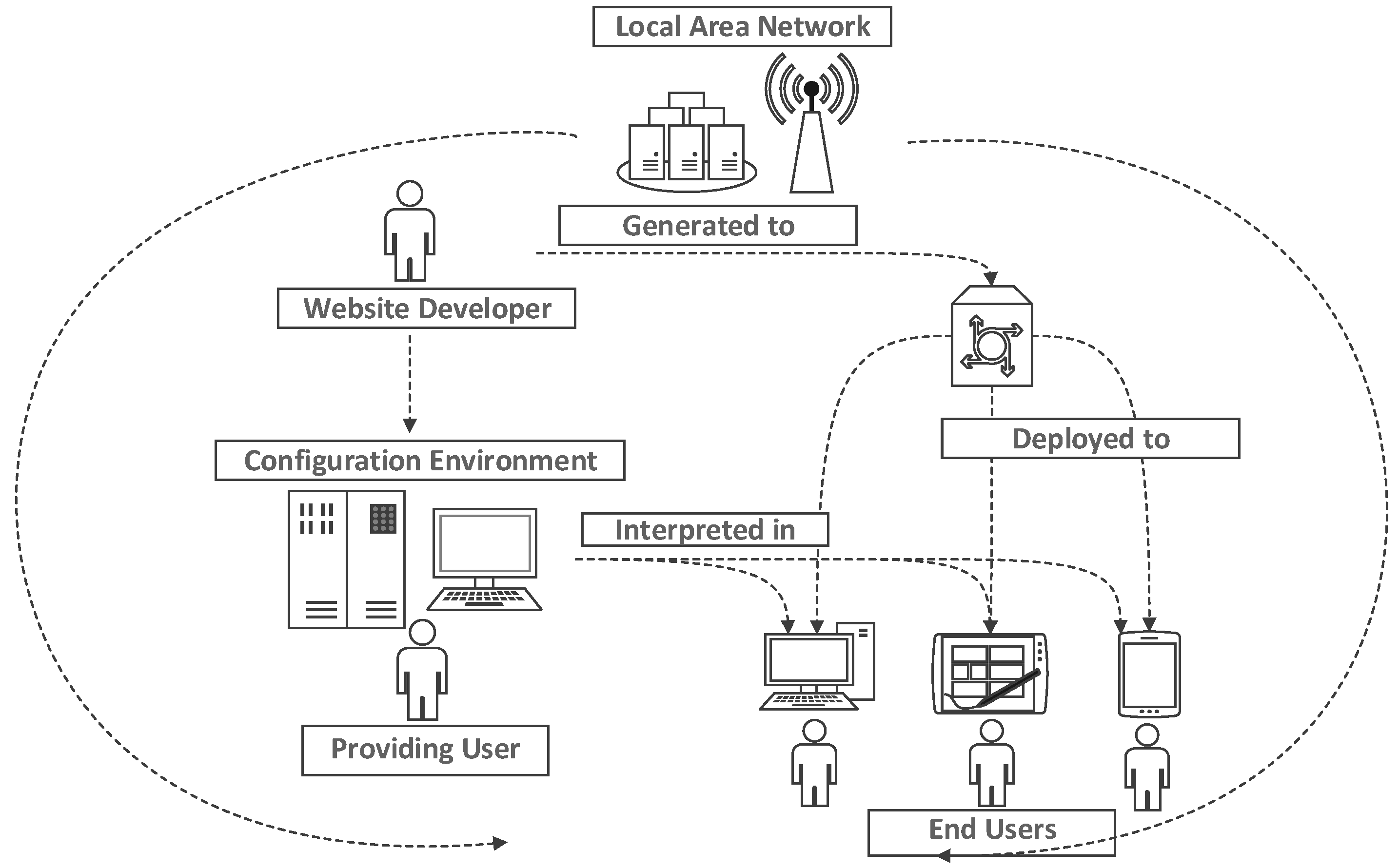

4.3. Configuration of the Designed Environment

4.4. Key Features and Functions of AIELL

4.4.1. The Local Environment with Flexible and Stable Conditions

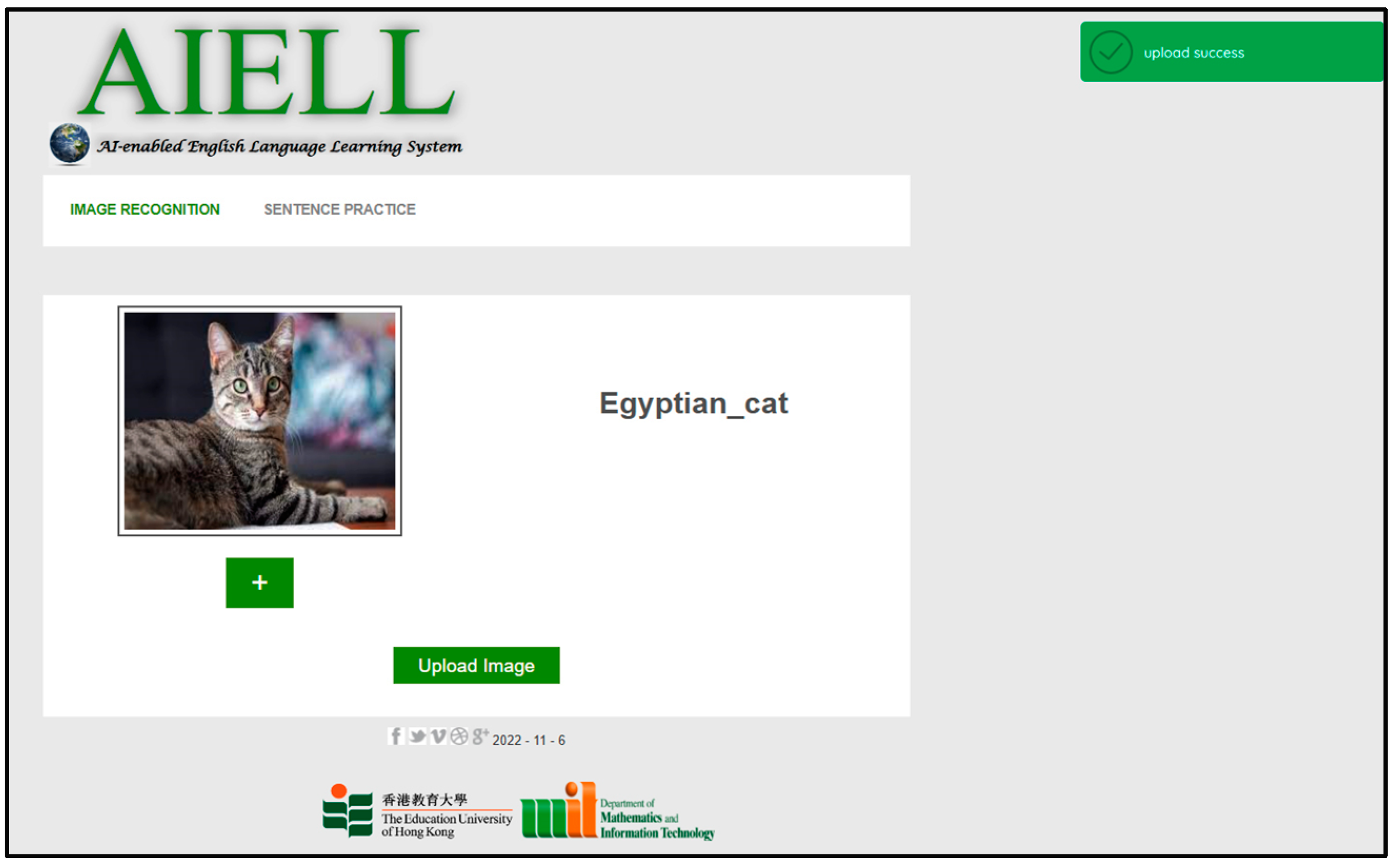

4.4.2. AI Image Recognition for Capturing Real-Life Objects in Authentic Learning Contexts Supported by Mobile Devices

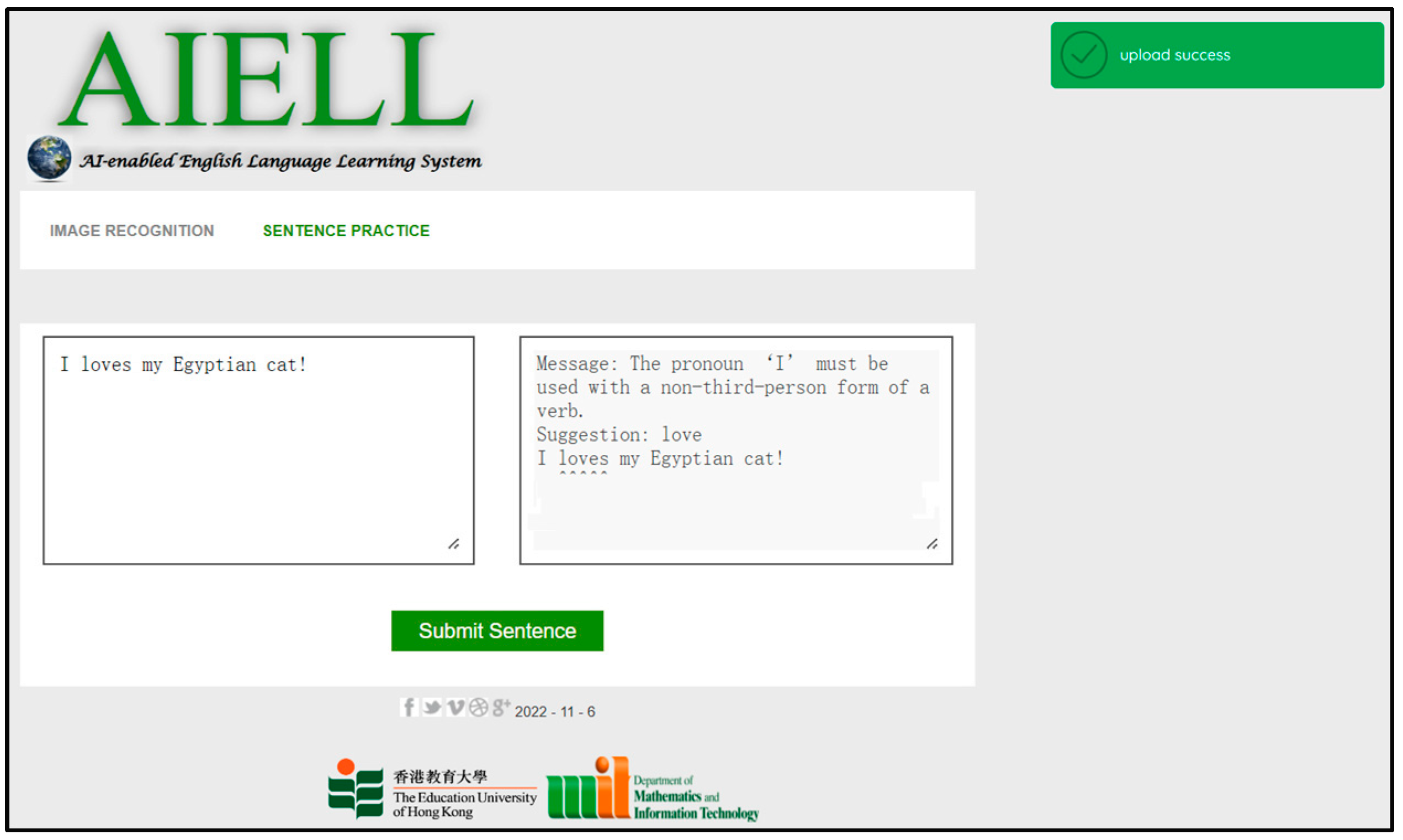

4.4.3. Automatic Grammar Correction for Sentence Practices with Object-Related Vocabulary

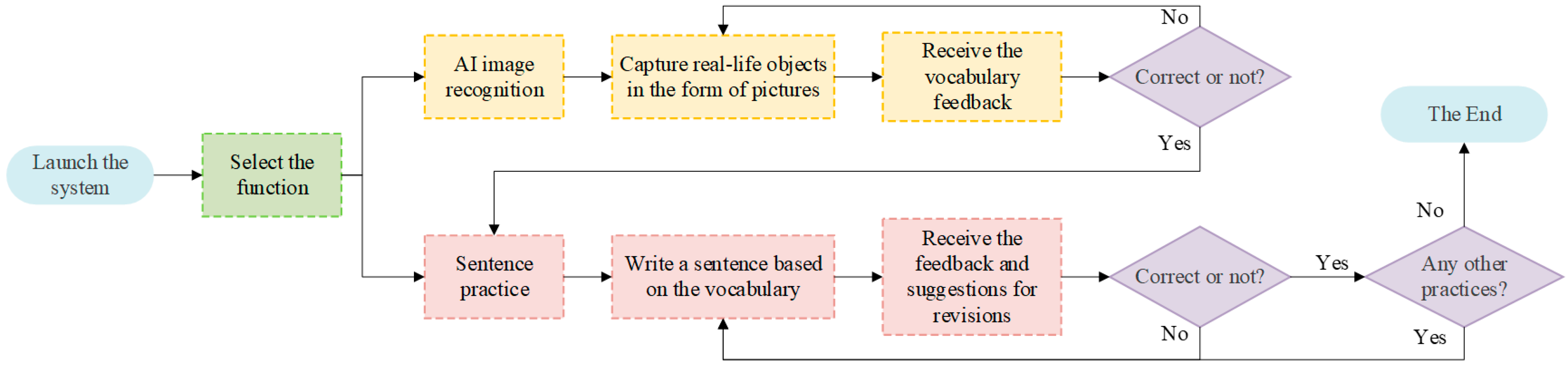

4.5. Workflow of the AIELL System

5. Evaluation Study

5.1. Participants

5.2. Data Collection and Analysis

5.2.1. Student Demonstration Test

5.2.2. Usability Test

5.2.3. Interview

- (1) What features or functions did you try during the demonstration test?

- (2) Do you believe that this experience will help lower-grade students learn English? Why?

- (3) Do you believe that this experience will make you a better teacher? Why?

- (4) Do you believe that the AIELL system is useful in the mobile learning environment?

6. Results

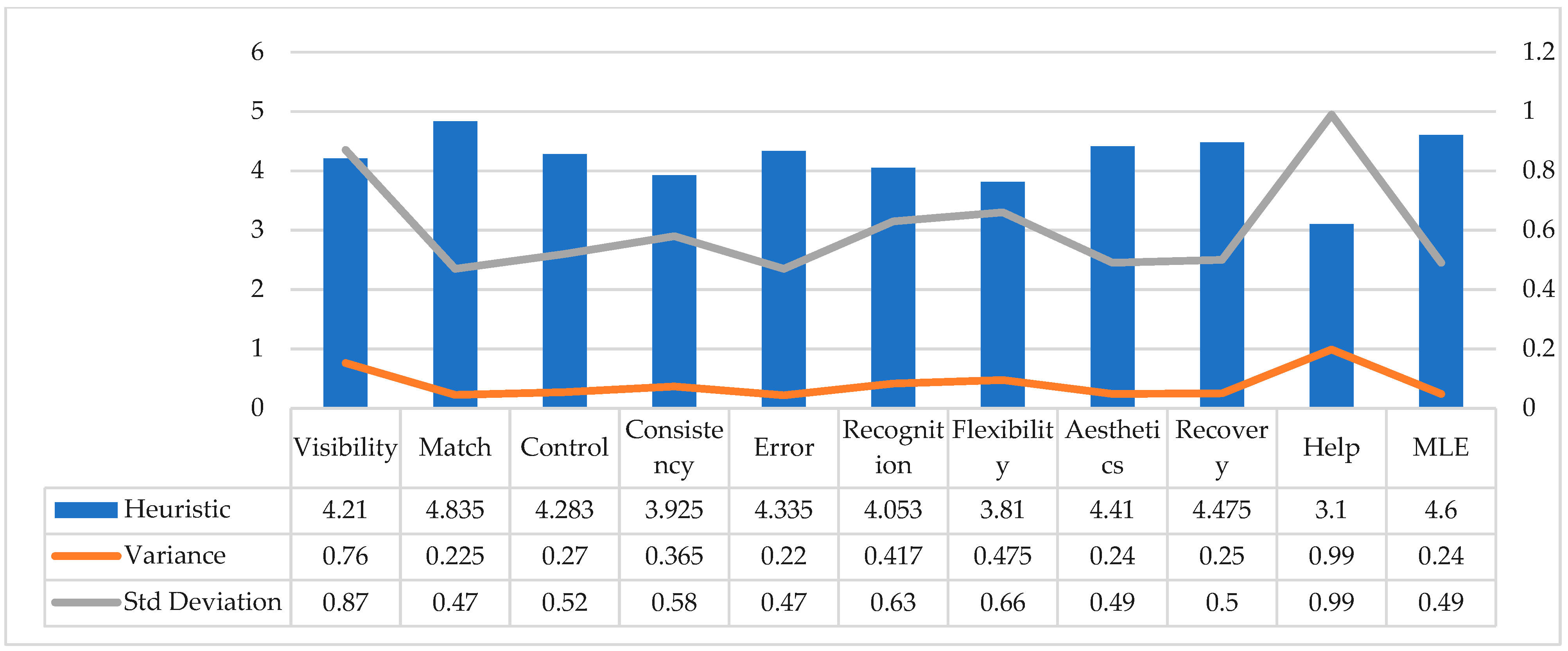

6.1. Overall Performance on Usability of AIELL System

6.1.1. Consistency

6.1.2. Recognition

6.1.3. Flexibility

6.1.4. Help

6.2. Correlations between Heuristic Dimensions and MLE

6.3. Interview Responses

7. Study Limitations and Implications

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| # | Dimensions | Abbreviation | Test Items |
|---|---|---|---|
| 1 | Visibility of system status | Visibility | |
| 2 | Match between the system and the real world | Match | |
| 3 | User control and freedom | Control | |
| 4 | Consistency and adherence to standards | Consistency | |
| 5 | Error prevention (usability-related errors in particular) | Error | |
| 6 | Recognition (not recall) | Recognition | |
| 7 | Flexibility and efficiency of use | Flexibility | |
| 8 | Aesthetics and minimalism of design | Aesthetics | |
| 9 | Recognition, diagnosis, and correction of errors | Recovery | |
| 10 | Help and documentation | Help | |
| 11 | The mobile learning environment | MLE | |

| | | Visibility | Match | Control | Consistency | Error | Recognition | Flexibility | Aesthetics | Recovery | Help | MLE |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Visibility | Pearson Correlation | 1 | 0.473 * | 0.525 * | 0.563 ** | 0.560 * | 0.587 ** | 0.607 ** | −0.675 ** | −0.586 ** | 0.061 | 0.325 |
| | Sig. (2-tailed) | | 0.035 | 0.018 | 0.010 | 0.010 | 0.006 | 0.005 | 0.001 | 0.007 | 0.799 | 0.162 |
| Match | Pearson Correlation | 0.473 * | 1 | 0.706 ** | 0.667 ** | 0.827 ** | 0.720 ** | 0.669 ** | −0.648 ** | −0.716 ** | 0.192 | 0.599 ** |
| | Sig. (2-tailed) | 0.035 | | 0.001 | 0.001 | 0.000 | 0.000 | 0.001 | 0.002 | 0.000 | 0.416 | 0.005 |
| Control | Pearson Correlation | 0.525 * | 0.706 ** | 1 | 0.863 ** | 0.934 ** | 0.952 ** | 0.874 ** | −0.642 ** | −0.487 * | 0.254 | 0.465 * |
| | Sig. (2-tailed) | 0.018 | 0.001 | | 0.000 | 0.000 | 0.000 | 0.000 | 0.002 | 0.029 | 0.281 | 0.039 |
| Consistency | Pearson Correlation | 0.563 ** | 0.667 ** | 0.863 ** | 1 | 0.908 ** | 0.919 ** | 0.893 ** | −0.677 ** | −0.471 * | 0.083 | 0.534 * |
| | Sig. (2-tailed) | 0.010 | 0.001 | 0.000 | | 0.000 | 0.000 | 0.000 | 0.001 | 0.036 | 0.728 | 0.015 |
| Error | Pearson Correlation | 0.560 * | 0.827 ** | 0.934 ** | 0.908 ** | 1 | 0.923 ** | 0.884 ** | −0.686 ** | −0.531 * | 0.076 | 0.635 ** |
| | Sig. (2-tailed) | 0.010 | 0.000 | 0.000 | 0.000 | | 0.000 | 0.000 | 0.001 | 0.016 | 0.749 | 0.003 |
| Recognition | Pearson Correlation | 0.587 ** | 0.720 ** | 0.952 ** | 0.919 ** | 0.923 ** | 1 | 0.949 ** | −0.762 ** | −0.586 ** | 0.178 | 0.481 * |
| | Sig. (2-tailed) | 0.006 | 0.000 | 0.000 | 0.000 | 0.000 | | 0.000 | 0.000 | 0.007 | 0.453 | 0.032 |
| Flexibility | Pearson Correlation | 0.607 ** | 0.669 ** | 0.874 ** | 0.893 ** | 0.884 ** | 0.949 ** | 1 | −0.814 ** | −0.619 ** | 0.099 | 0.370 |
| | Sig. (2-tailed) | 0.005 | 0.001 | 0.000 | 0.000 | 0.000 | 0.000 | | 0.000 | 0.004 | 0.677 | 0.108 |
| Aesthetics | Pearson Correlation | −0.675 ** | −0.648 ** | −0.642 ** | −0.677 ** | −0.686 ** | −0.762 ** | −0.814 ** | 1 | 0.739 ** | −0.083 | −0.233 |
| | Sig. (2-tailed) | 0.001 | 0.002 | 0.002 | 0.001 | 0.001 | 0.000 | 0.000 | | 0.000 | 0.727 | 0.323 |
| Recovery | Pearson Correlation | −0.586 ** | −0.716 ** | −0.487 * | −0.471 * | −0.531 * | −0.586 ** | −0.619 ** | 0.739 ** | 1 | −0.106 | −0.147 |
| | Sig. (2-tailed) | 0.007 | 0.000 | 0.029 | 0.036 | 0.016 | 0.007 | 0.004 | 0.000 | | 0.656 | 0.537 |
| Help | Pearson Correlation | 0.061 | 0.192 | 0.254 | 0.083 | 0.076 | 0.178 | 0.099 | −0.083 | −0.106 | 1 | −0.164 |
| | Sig. (2-tailed) | 0.799 | 0.416 | 0.281 | 0.728 | 0.749 | 0.453 | 0.677 | 0.727 | 0.656 | | 0.490 |
| MLE | Pearson Correlation | 0.325 | 0.599 ** | 0.465 * | 0.534 * | 0.635 ** | 0.481 * | 0.370 | −0.233 | −0.147 | −0.164 | 1 |
| | Sig. (2-tailed) | 0.162 | 0.005 | 0.039 | 0.015 | 0.003 | 0.032 | 0.108 | 0.323 | 0.537 | 0.490 | |
| | N | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 | 20 |
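The asterisks in the correlation matrix can be checked from the reported coefficients alone. For a Pearson coefficient r computed from n = 20 paired scores, the test of ρ = 0 uses t = r√(n − 2)/√(1 − r²) with n − 2 = 18 degrees of freedom. The sketch below assumes the common SPSS convention that one star marks p < .05 and two stars mark p < .01 (two-tailed); the critical values 2.101 and 2.878 are the standard tabulated values for df = 18, and the function names are illustrative only.

```python
import math

# Two-tailed critical values of Student's t for df = n - 2 = 18 (standard t tables).
T_CRIT_05_DF18 = 2.101  # alpha = 0.05
T_CRIT_01_DF18 = 2.878  # alpha = 0.01


def r_to_t(r, n=20):
    """t statistic for H0: rho = 0 given Pearson r from n paired observations (df = n - 2)."""
    return r * math.sqrt(n - 2) / math.sqrt(1.0 - r * r)


def significance_stars(r, n=20):
    """Return '**' if p < .01, '*' if p < .05, else '' (two-tailed).

    Assumes the SPSS star convention; the hard-coded critical values
    are valid for n = 20 only.
    """
    if n != 20:
        raise ValueError("critical values are tabulated for n = 20 only")
    t = abs(r_to_t(r, n))  # sign of r does not affect a two-tailed test
    if t > T_CRIT_01_DF18:
        return "**"
    if t > T_CRIT_05_DF18:
        return "*"
    return ""


for r in (0.473, 0.560, 0.563, -0.675, 0.325):
    print(r, round(r_to_t(r), 3), significance_stars(r))
```

This reproduces the table's flags, including a subtle case: Visibility with Error (r = 0.560) and Visibility with Consistency (r = 0.563) both show Sig. = 0.010 after rounding, yet the first falls just short of the 0.01 cutoff (t ≈ 2.868) and the second just clears it (t ≈ 2.890).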

| Pair | Variables | Mean | Std. Deviation | Std. Error Mean | 95% CI of the Difference (Lower) | 95% CI of the Difference (Upper) | t | df | Sig. (2-tailed) |
|---|---|---|---|---|---|---|---|---|---|
| Pair 1 | Visibility - MLE | −0.35000 | 0.93330 | 0.20869 | −0.78680 | 0.08680 | −1.677 | 19 | 0.110 |
| Pair 2 | Match - MLE | 4.10000 | 0.78807 | 0.17622 | 3.73117 | 4.46883 | 23.267 | 19 | 0.000 |
| Pair 3 | Control - MLE | 8.25000 | 1.37171 | 0.30672 | 7.60802 | 8.89198 | 26.897 | 19 | 0.000 |
| Pair 4 | Consistency - MLE | 3.25000 | 0.96655 | 0.21613 | 2.79764 | 3.70236 | 15.038 | 19 | 0.000 |
| Pair 5 | Error - MLE | 4.10000 | 0.71818 | 0.16059 | 3.76388 | 4.43612 | 25.531 | 19 | 0.000 |
| Pair 6 | Recognition - MLE | 7.55000 | 1.60509 | 0.35891 | 6.79879 | 8.30121 | 21.036 | 19 | 0.000 |
| Pair 7 | Flexibility - MLE | 3.10000 | 1.20961 | 0.27048 | 2.53388 | 3.66612 | 11.461 | 19 | 0.000 |
| Pair 8 | Aesthetics - MLE | 4.25000 | 1.20852 | 0.27023 | 3.68439 | 4.81561 | 15.727 | 19 | 0.000 |
| Pair 9 | Recovery - MLE | 4.35000 | 1.18210 | 0.26433 | 3.79676 | 4.90324 | 16.457 | 19 | 0.000 |
| Pair 10 | Help - MLE | 1.60000 | 1.09545 | 0.24495 | 1.08732 | 2.11268 | 6.532 | 19 | 0.000 |
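In each row of the paired-samples t-test, the last five numeric columns follow from the first two. With n = 20 participants, the standard error of the mean difference is SD/√20, the statistic is t = Mean/SE with df = 19, and the 95% interval is Mean ± t₀.₉₇₅,₁₉ × SE. The sketch below recomputes these from the reported summary values; the critical value 2.093 is the standard tabulated two-tailed 5% value for 19 degrees of freedom, and the helper name is illustrative only.

```python
import math

# Two-tailed 5% critical value of Student's t for df = 19 (n = 20), from standard t tables.
T_CRIT_DF19 = 2.093


def paired_t_from_summary(mean_diff, sd_diff, n=20, t_crit=T_CRIT_DF19):
    """Recompute the derived columns of one paired-samples t-test row.

    mean_diff and sd_diff are the mean and standard deviation of the
    within-participant differences; t_crit must correspond to df = n - 1.
    """
    se = sd_diff / math.sqrt(n)  # Std. Error Mean
    t = mean_diff / se           # t statistic, df = n - 1
    margin = t_crit * se         # half-width of the 95% confidence interval
    return {"se": se, "t": t, "df": n - 1,
            "ci": (mean_diff - margin, mean_diff + margin)}


# Pair 1 (Visibility vs. MLE), using the reported mean and SD of the differences
row = paired_t_from_summary(-0.35, 0.93330)
print(round(row["se"], 5), round(row["t"], 3), row["df"],
      tuple(round(x, 4) for x in row["ci"]))
```

For Pair 1 this gives SE ≈ 0.20869, t ≈ −1.677 and a 95% interval of about (−0.7868, 0.0868), matching the reported row; because that interval contains zero, the Visibility vs. MLE difference is the only one that is not significant (p = 0.110).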

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Jia, F.; Sun, D.; Ma, Q.; Looi, C.-K. Developing an AI-Based Learning System for L2 Learners’ Authentic and Ubiquitous Learning in English Language. Sustainability 2022, 14, 15527. https://doi.org/10.3390/su142315527
