A Modified Rainbow-Based Deep Reinforcement Learning Method for Optimal Scheduling of Charging Station

Abstract

1. Introduction

- To the best of our knowledge, this is the first work to propose a charging station (CS) scheduling strategy that combines the random charging behavior of electric vehicles (EVs) with deep reinforcement learning (DRL). Comprehensive perception of the “EV-CS-DN” environment information improves the agent’s perception and learning ability. To account for the uncertainty of EV arrival and departure times, the time at which each EV is electrically connected is controlled by the relay action of the charging module, so that energy resources are matched within the EV parking time slot. The proposed method can reasonably address the overstay issue and improve the operating efficiency of CSs.

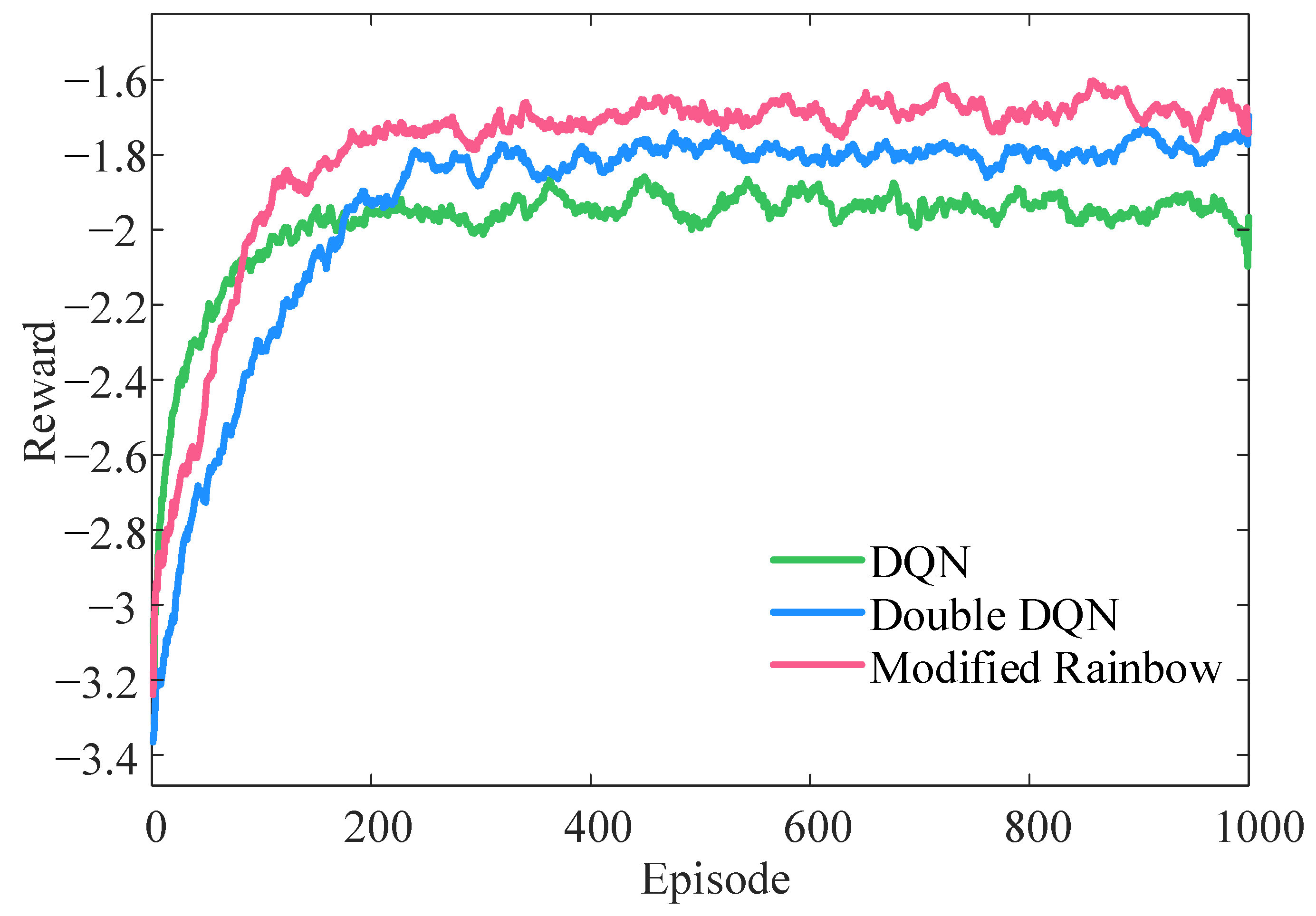

- Because the basic DQN-based rainbow algorithm suffers from overlearning and poor stability in the late training stage, we improve it by introducing a learning rate attenuation strategy. The agent keeps a large learning rate in the early training stage to preserve its exploration ability. As the number of episodes increases, the learning rate gradually decays and is then held at a low level, so that the agent makes full use of its previous experience in the later training stage.

- We further verify the practicability of the proposed model and algorithm under realistic CS operating scenarios. The experimental results show that the modified rainbow method overcomes the low training efficiency and poor application stability of conventional DRL algorithms, and that both the CS operating cost and new energy consumption are effectively optimized. In particular, the proposed method shows promising performance in adapting to extreme weather and equipment failure scenarios.
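The learning rate attenuation described in the second contribution can be illustrated with a minimal sketch. It assumes an exponential per-episode decay clipped at a floor; the function name, the decay form, and the values of `lr_init`, `lr_min`, and `decay` are hypothetical and are not taken from the paper.

```python
def attenuated_learning_rate(episode, lr_init=1e-3, lr_min=1e-5, decay=0.995):
    """Return the learning rate for a given training episode.

    Assumed schedule (illustrative only): exponential decay per episode,
    held at lr_min once the decayed value drops below that floor.
    """
    if episode < 0:
        raise ValueError("episode must be non-negative")
    return max(lr_min, lr_init * decay ** episode)


if __name__ == "__main__":
    # Early episodes keep a large rate; late episodes settle at the floor.
    for ep in (0, 100, 500, 2000):
        print(ep, attenuated_learning_rate(ep))
```

With a schedule of this shape, early updates are large and later updates are small, which matches the stated intent of relying more on accumulated experience late in training.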

2. Problem Formulation

2.1. State

2.2. Action

2.3. Reward

- EV charging satisfaction cost

- CS operation cost

- PV curtailment penalty
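As a rough illustration of how the three cost terms above could be combined into a single per-step reward, the sketch below negates their sum so that lower cost means higher reward. The unweighted sum, the class `StepCosts`, and the function `step_reward` are assumptions made for illustration; the paper's actual reward expression is not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class StepCosts:
    """Per-step cost terms in USD (hypothetical container)."""
    satisfaction_cost: float        # EV charging satisfaction cost
    operation_cost: float           # CS operation cost
    pv_curtailment_penalty: float   # PV curtailment penalty


def step_reward(costs: StepCosts) -> float:
    """Assumed reward: the negative of the summed cost terms."""
    total = (costs.satisfaction_cost
             + costs.operation_cost
             + costs.pv_curtailment_penalty)
    return -total
```

For example, `step_reward(StepCosts(1.0, 2.0, 0.5))` evaluates to `-3.5` under this assumed form.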

2.4. Action-Value Function

3. Proposed Modified Rainbow-Based Solution

- Double DQN

- Dueling DQN

- Prioritized replay buffer

- Learning rate attenuation
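The first three components listed above can be sketched in plain Python in their commonly cited textbook forms. These are not necessarily the exact variants used in this work; the function names and the default values of `gamma`, `alpha`, and `eps` are hypothetical.

```python
def double_dqn_target(reward, next_q_online, next_q_target, done, gamma=0.99):
    """Double DQN target: the online network selects the next action,
    the target network evaluates it."""
    best_action = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    bootstrap = 0.0 if done else gamma * next_q_target[best_action]
    return reward + bootstrap


def dueling_q_values(state_value, advantages):
    """Dueling aggregation: Q(s, a) = V(s) + A(s, a) - mean over a of A(s, a)."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + adv - mean_adv for adv in advantages]


def prioritized_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: P(i) is proportional to (|delta_i| + eps) ** alpha."""
    priorities = [(abs(delta) + eps) ** alpha for delta in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]
```

As a usage example, `double_dqn_target(1.0, [0.2, 0.9], [5.0, 3.0], False, gamma=0.5)` picks action 1 from the online estimates and evaluates it with the target estimates, giving `1.0 + 0.5 * 3.0`.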

Algorithm 1: Modified Rainbow-based Solution Method

4. Case Studies

4.1. Case Study Setup

4.2. Training Process Analysis

4.3. Application Results Analysis

4.4. Generalization Performance Assessment

4.5. Algorithm Performance Comparison

5. Conclusions

- The well-trained agent intelligently formulates an EV charging plan according to the current environmental state to achieve the optimal benefit for multiple stakeholders. In particular, under extreme scenarios the proposed method exhibits superior generalization capability and meets the needs of engineering applications. The proposed method improves PV utilization to 90.31% and reduces the CS operating cost by 9.72% on average.

- The modified rainbow method overcomes the low training efficiency and poor stability of classical DRL algorithms. It effectively balances convergence and stability and significantly improves performance, by 12.81%.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Parameter | Value | Unit |
|---|---|---|
| Charging pile output power | 60 | kW |
| PV maximum power | 225 | kW |
| ESS capacity | 295.68 | kWh |
| ESS maximum power | 90 | kW |
| ESS maximum SOC | 0.95 | / |
| ESS minimum SOC | 0.1 | / |
| EV battery capacity | 40 | kWh |
| EV expected SOC | 0.9 | / |
| Number of EVs | 100 | / |
| Equipment efficiency | 0.95 | / |
| Penalty coefficient | 15.82 | USD |
| Penalty coefficient | 0.01 | USD/kWh |
| Penalty coefficient | 0.0158 | USD/kWh |
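The parameters in the table above can be gathered into a small configuration object, from which a few derived quantities follow. This is a sketch only: the class and function names are hypothetical, and the charging-time helper assumes constant full pile power with the equipment efficiency applied to the delivered energy, which the source does not state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StationParams:
    """Case-study parameters copied from the table (names are hypothetical)."""
    pile_power_kw: float = 60.0
    pv_max_power_kw: float = 225.0
    ess_capacity_kwh: float = 295.68
    ess_max_power_kw: float = 90.0
    ess_soc_max: float = 0.95
    ess_soc_min: float = 0.10
    ev_capacity_kwh: float = 40.0
    ev_expected_soc: float = 0.90
    efficiency: float = 0.95


def ess_usable_energy_kwh(p: StationParams) -> float:
    """Energy available between the ESS minimum and maximum SOC."""
    return p.ess_capacity_kwh * (p.ess_soc_max - p.ess_soc_min)


def ev_charging_hours(p: StationParams, initial_soc: float) -> float:
    """Hours to reach the expected SOC from initial_soc.

    Assumes constant pile power and efficiency applied to delivered energy.
    """
    needed_kwh = max(0.0, p.ev_expected_soc - initial_soc) * p.ev_capacity_kwh
    return needed_kwh / (p.pile_power_kw * p.efficiency)
```

Under these assumptions the usable ESS window is 295.68 × 0.85 ≈ 251.33 kWh, and an EV arriving at an SOC of 0.3 needs 24 kWh, or roughly 0.42 h at full pile power.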

| Method | CS Power Purchase Cost (USD) | PV Utilization Rate | ESS Charging-Discharging Capacity (kWh) |
|---|---|---|---|
| Uncoordinated | 172.95 | 86.04% | 763.58 |
| DQN | 164.25 | 86.33% | 784.45 |
| DDQN | 151.47 | 87.38% | 745.32 |
| Modified rainbow | 147.02 | 90.53% | 792.14 |
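The purchase-cost column of the table above can be turned into relative reductions with a short calculation. The sketch below only restates arithmetic on the tabulated values for this single scenario; it is not the source of the averaged figures quoted in the Conclusions, and the variable and function names are hypothetical.

```python
# CS power purchase cost in USD, copied from the comparison table.
purchase_cost_usd = {
    "Uncoordinated": 172.95,
    "DQN": 164.25,
    "DDQN": 151.47,
    "Modified rainbow": 147.02,
}


def relative_reduction(baseline: float, value: float) -> float:
    """Fractional reduction of value relative to baseline."""
    return (baseline - value) / baseline


# Reduction achieved by the modified rainbow method against each alternative.
reductions = {
    name: relative_reduction(cost, purchase_cost_usd["Modified rainbow"])
    for name, cost in purchase_cost_usd.items()
    if name != "Modified rainbow"
}

if __name__ == "__main__":
    for name, frac in reductions.items():
        print(f"vs {name}: {frac:.2%}")
```

On these values the modified rainbow method lowers the purchase cost by about 15.0% relative to uncoordinated charging, about 10.5% relative to DQN, and about 2.9% relative to DDQN.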

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, R.; Chen, Z.; Xing, Q.; Zhang, Z.; Zhang, T. A Modified Rainbow-Based Deep Reinforcement Learning Method for Optimal Scheduling of Charging Station. Sustainability 2022, 14, 1884. https://doi.org/10.3390/su14031884
