A Hybridization of Spatial Modeling and Deep Learning for People’s Visual Perception of Urban Landscapes

Abstract

1. Introduction

1.1. Visual Perception

1.2. Importance of People’s Perception of the Urban Visual Environment

1.3. Literature Survey

1.4. Spatial Modeling

1.5. Research Objectives and Research Questions

- How strongly are the criteria affecting the sense of sight correlated with one another?

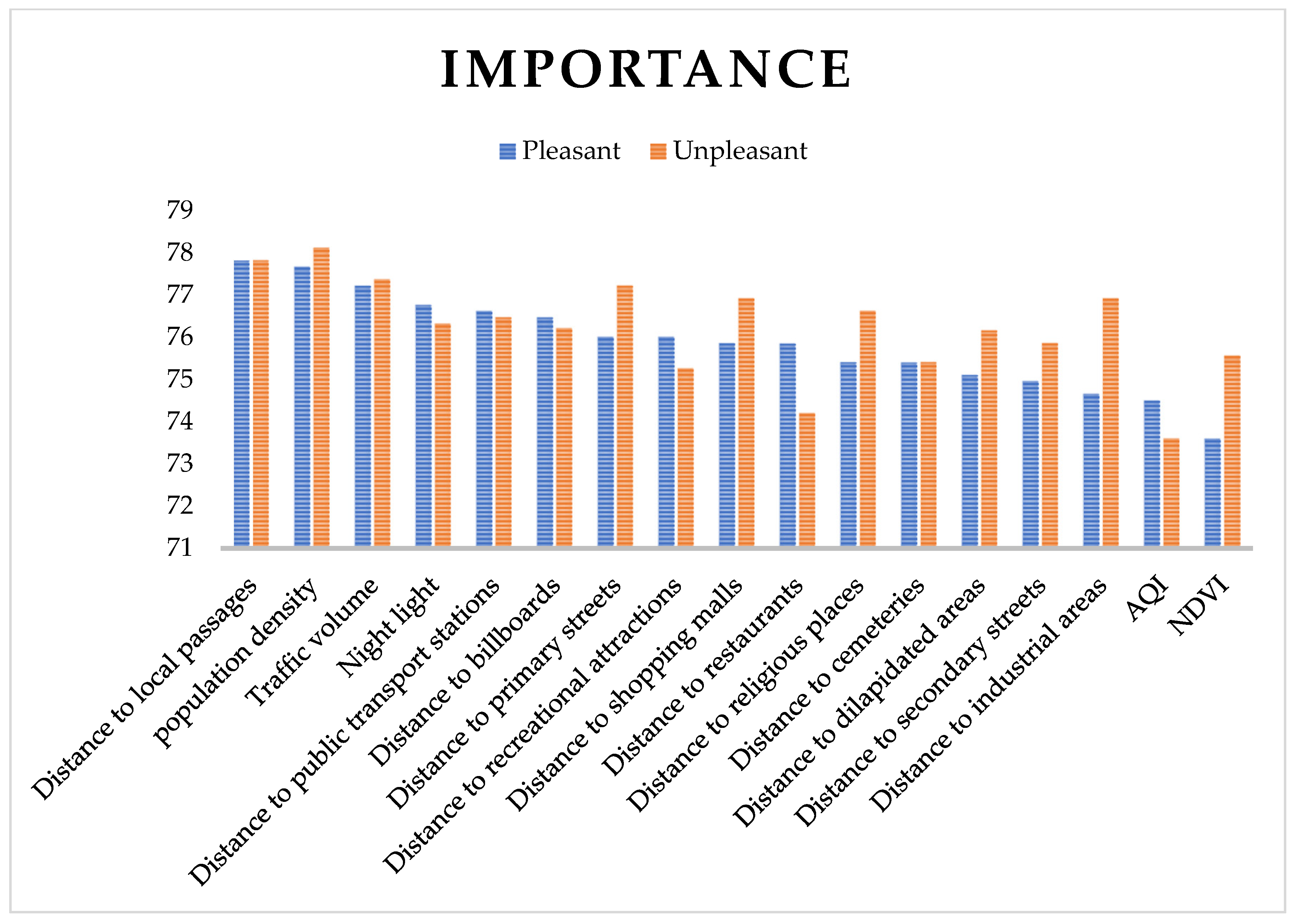

- What is the relative importance of the criteria affecting pleasant and unpleasant sight?

- How accurate is the CNN algorithm in spatial modeling of the two states of people’s sense of sight?

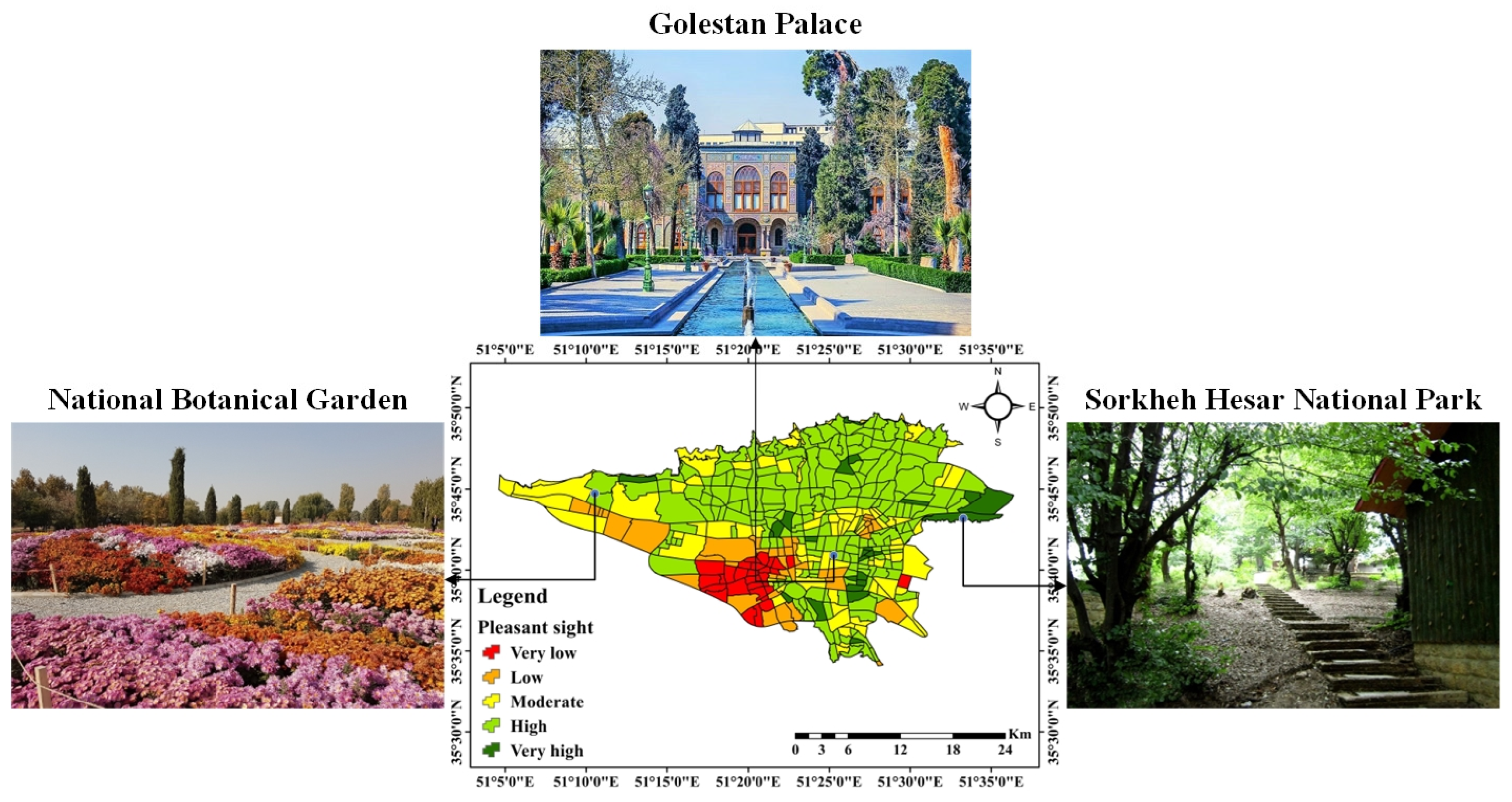

- What is the status of each region of Tehran with respect to the perception of pleasant and unpleasant sight?

1.6. Research Innovation

1.7. Research Structure

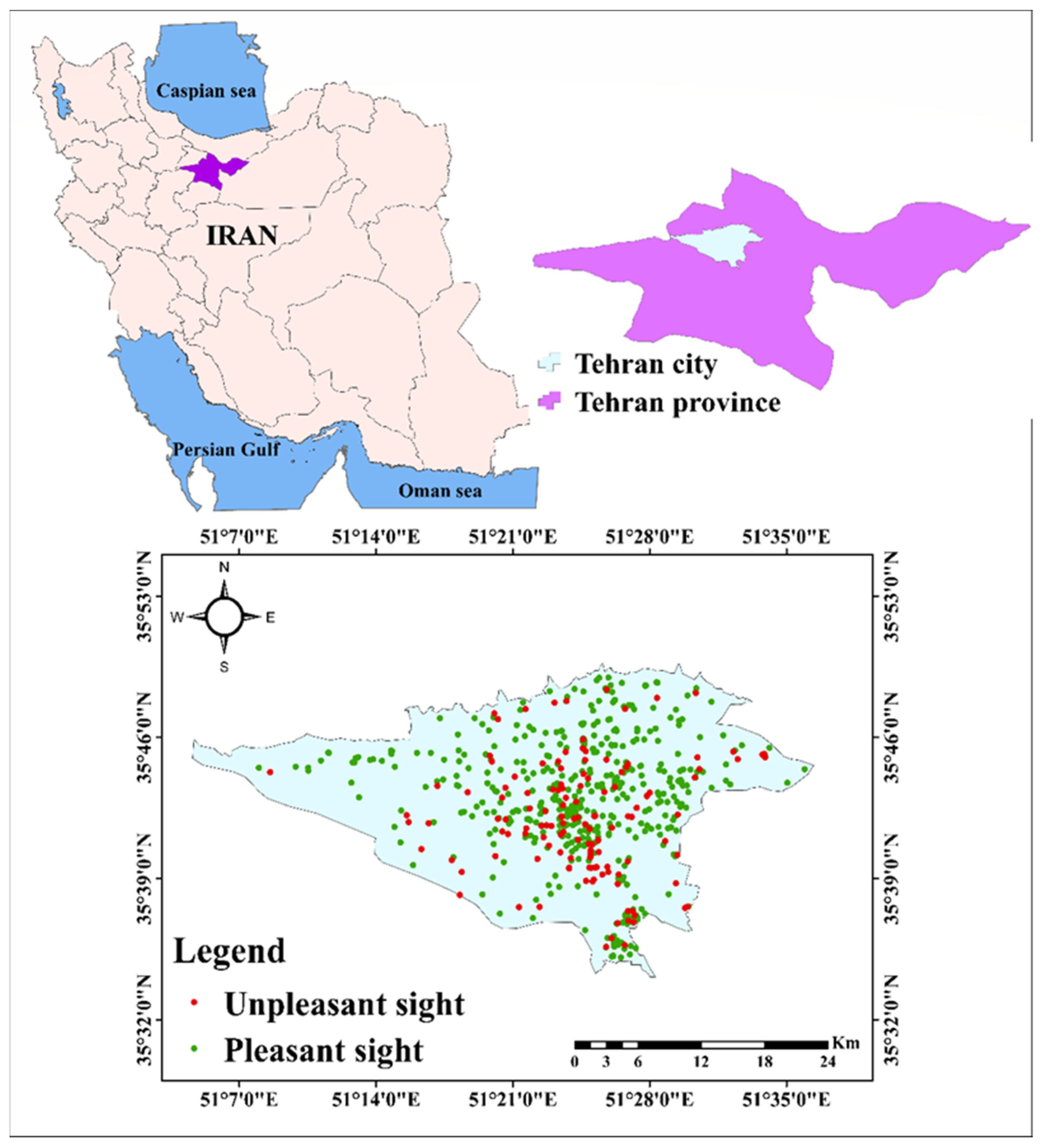

2. Study Area

3. Methodology

- In the first stage, a spatial database of dependent and independent data was constructed. The dependent data were gathered through a questionnaire (Appendix A) and consisted of the visual perceptions of 663 people. The independent data consisted of distances to industrial areas, public transport stations, recreational attractions, primary streets, secondary streets, local passages, billboards, restaurants, shopping malls, dilapidated areas, cemeteries, and religious places, as well as traffic volume, population density, night light, air quality index (AQI), and normalized difference vegetation index (NDVI). For each visual state (pleasant sight and unpleasant sight), the places selected by users in the questionnaire were labeled 1, and a matching number of random points was generated separately and labeled 0. For each state, these points and their labels (dependent data) were combined with the values of the relevant spatial criteria at each point (independent data) to form a spatial database.

- In the second stage, the spatial correlation between the criteria was determined using the Pearson correlation coefficient, the relative importance of the criteria was determined using the OneR approach, and spatial modeling was performed using the deep learning (CNN) model. All three methods were applied to the spatial database prepared in the first stage. For modeling, 70% of the data in the database was used for training and 30% for validation.

- In the third stage, the CNN trained on the training data was applied to all parts of Tehran at a pixel size of 30 m. The values predicted by the CNN for each point in the city were then interpolated with the kriging method to produce a raster potential map of people's visual perception in Tehran for the two states of pleasant sight and unpleasant sight.

- Finally, the mean square error (MSE), accuracy, receiver operating characteristic (ROC) curve, and area under the curve (AUC) were used to evaluate the model and the potential maps of people's visual perception. The ROC curve and AUC were computed from the 30% of the spatial database reserved for validation.
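The third stage interpolates the CNN's point predictions with kriging. The text does not state which kriging variant or variogram model was used, so the sketch below assumes ordinary kriging with an exponential semivariogram. The function names and the `nugget`, `sill`, and `range_` values are hypothetical; in practice they would be fitted to the empirical variogram of the predicted values.

```python
import numpy as np


def exponential_semivariogram(h, nugget=0.0, sill=1.0, range_=1000.0):
    """Exponential semivariogram gamma(h); gamma(0) is forced to 0.

    The parameter values are illustrative assumptions, not fitted values.
    """
    h = np.asarray(h, dtype=float)
    gamma = nugget + (sill - nugget) * (1.0 - np.exp(-3.0 * h / range_))
    return np.where(h > 0.0, gamma, 0.0)


def ordinary_kriging(xy, z, targets, **variogram_params):
    """Ordinary-kriging estimates of z at each target location.

    xy: (n, 2) observation coordinates in metres; z: (n,) values (e.g. CNN
    outputs); targets: (m, 2) locations to predict (e.g. 30 m pixel centres).
    """
    n = len(z)
    # Left-hand side: semivariances between observations, bordered by the
    # unbiasedness constraint (weights sum to 1) and its Lagrange multiplier.
    d_obs = np.linalg.norm(xy[:, None, :] - xy[None, :, :], axis=-1)
    lhs = np.ones((n + 1, n + 1))
    lhs[:n, :n] = exponential_semivariogram(d_obs, **variogram_params)
    lhs[n, n] = 0.0
    # Right-hand side: semivariances between the observations and each target.
    d_tgt = np.linalg.norm(xy[:, None, :] - targets[None, :, :], axis=-1)  # (n, m)
    rhs = np.ones((n + 1, len(targets)))
    rhs[:n, :] = exponential_semivariogram(d_tgt, **variogram_params)
    weights = np.linalg.solve(lhs, rhs)[:n, :]  # (n, m); each column sums to 1
    return z @ weights


# Usage with hypothetical data: 20 scored points interpolated onto a 30 m grid.
rng = np.random.default_rng(0)
obs_xy = rng.uniform(0.0, 3000.0, size=(20, 2))
obs_z = rng.uniform(0.0, 1.0, size=20)
gx, gy = np.meshgrid(np.arange(15.0, 3000.0, 30.0), np.arange(15.0, 3000.0, 30.0))
grid = np.column_stack([gx.ravel(), gy.ravel()])
surface = ordinary_kriging(obs_xy, obs_z, grid).reshape(gx.shape)
```

Ordinary kriging reproduces the observed value exactly at each observation point (with zero nugget), and because some weights can be negative, interpolated probabilities may fall slightly outside [0, 1] and may need clipping before classification.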

3.1. Database Construction

People’s Visual Perception Data

- Identifying the selected place in Tehran on a map. (Because locations could not be marked on a map in the written questionnaires or on Instagram, these participants were asked to give an exact address, and the geographic coordinates were extracted from Google Maps.)

- Identifying the type of the selected place (home address, work address, or the address of another chosen location).

- Choosing the type of visual perception experienced at the selected location from two options (pleasant and unpleasant).
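The methodology turns these responses into a labeled point set for each state: selected places are labeled 1, an equal number of random points are labeled 0, and the result is split 70/30 for training and validation. A minimal sketch of that step, assuming the addresses have already been geocoded to projected coordinates in metres and that random points are drawn uniformly inside a rectangular extent (a stand-in for the city boundary). The helper names, coordinates, and extent are hypothetical.

```python
import numpy as np


def build_presence_absence(presence_xy, bounds, rng):
    """Label selected places 1 and draw an equal number of random points labelled 0.

    bounds = (xmin, ymin, xmax, ymax); a rectangle stands in for the city boundary.
    """
    n = len(presence_xy)
    xmin, ymin, xmax, ymax = bounds
    absence_xy = np.column_stack([rng.uniform(xmin, xmax, n), rng.uniform(ymin, ymax, n)])
    xy = np.vstack([presence_xy, absence_xy])
    labels = np.concatenate([np.ones(n, dtype=int), np.zeros(n, dtype=int)])
    return xy, labels


def train_validation_split(n_samples, train_frac, rng):
    """Shuffle indices and split them into training and validation subsets."""
    idx = rng.permutation(n_samples)
    n_train = int(round(train_frac * n_samples))
    return idx[:n_train], idx[n_train:]


rng = np.random.default_rng(42)
# Hypothetical geocoded responses (metres) and the state each respondent chose.
sites = np.array([[1200.0, 3400.0], [5600.0, 2100.0], [800.0, 900.0],
                  [4300.0, 4700.0], [2500.0, 1800.0]])
states = np.array(["pleasant", "unpleasant", "pleasant", "unpleasant", "pleasant"])
extent = (0.0, 0.0, 6000.0, 5000.0)

datasets = {}
for state in ("pleasant", "unpleasant"):
    xy, labels = build_presence_absence(sites[states == state], extent, rng)
    train_idx, val_idx = train_validation_split(len(labels), 0.7, rng)
    datasets[state] = {"xy": xy, "labels": labels, "train": train_idx, "val": val_idx}
```

Building each state separately keeps the pleasant and unpleasant models independent, as the methodology describes; a fuller version would also reject random points that fall outside the study area or on top of selected places.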

3.2. Spatial Criteria Affecting People’s Visual Perception

- Night light

- Distance to recreational attractions

- Distance to industrial areas

- Distance to public transport stations

- Distance to streets

- Distance to local passages

- Traffic volume

- Distance to billboards

- Distance to restaurants

- Distance to shopping malls

- Distance to dilapidated areas

- Population density

- Distance to cemeteries

- Distance to religious places

- NDVI

- AQI
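Most of these criteria are distances from each point to the nearest feature of a given type. A minimal sketch of that step, assuming points and features share one projected coordinate system in metres; the layer names and coordinates are hypothetical. Continuous criteria such as night light, NDVI, AQI, traffic volume, and population density would instead be sampled from their rasters at each point, which is not shown.

```python
import numpy as np


def nearest_distance(points, features):
    """Euclidean distance from each point to its closest feature (brute force)."""
    diff = points[:, None, :] - features[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1)).min(axis=1)


def distance_criteria(points, layers):
    """One distance column per feature layer, in sorted layer-name order."""
    names = sorted(layers)
    table = np.column_stack([nearest_distance(points, layers[name]) for name in names])
    return names, table


# Hypothetical sample points and feature layers (metres).
points = np.array([[0.0, 0.0], [100.0, 100.0]])
layers = {
    "billboards": np.array([[3.0, 4.0], [10.0, 0.0]]),
    "restaurants": np.array([[100.0, 130.0]]),
}
names, table = distance_criteria(points, layers)
```

The brute-force version needs memory proportional to points times features; at city scale, `scipy.spatial.cKDTree(features).query(points)` returns the same nearest distances far more efficiently.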

4. Methods

4.1. Pearson Correlation Coefficient
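The Pearson coefficient between two criteria $x$ and $y$ is $r = \sum_i (x_i - \bar{x})(y_i - \bar{y}) / \sqrt{\sum_i (x_i - \bar{x})^2 \sum_i (y_i - \bar{y})^2}$. A minimal sketch that computes it for every pair of columns in a criteria table and ranks the pairs by absolute correlation; the function names and the synthetic data are hypothetical.

```python
import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient of two 1-D arrays."""
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))


def correlation_matrix(table):
    """Symmetric matrix of pairwise Pearson coefficients between columns."""
    k = table.shape[1]
    r = np.eye(k)
    for i in range(k):
        for j in range(i + 1, k):
            r[i, j] = r[j, i] = pearson_r(table[:, i], table[:, j])
    return r


def strongest_pairs(r, names, top=3):
    """Criterion pairs ranked by absolute correlation, strongest first."""
    pairs = [(names[i], names[j], r[i, j])
             for i in range(len(names)) for j in range(i + 1, len(names))]
    return sorted(pairs, key=lambda p: abs(p[2]), reverse=True)[:top]


# Synthetic criteria: two deliberately correlated distances and one independent index.
rng = np.random.default_rng(1)
billboards = rng.uniform(0, 2000, 200)
restaurants = billboards + rng.normal(0, 200, 200)
ndvi = rng.uniform(-0.1, 0.8, 200)
names = ["d_billboards", "d_restaurants", "ndvi"]
table = np.column_stack([billboards, restaurants, ndvi])
r = correlation_matrix(table)
top = strongest_pairs(r, names, top=1)
```

The explicit loop mirrors the formula; `np.corrcoef(table, rowvar=False)` gives the same matrix in one call.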

4.2. Convolutional Neural Network (CNN)

- Convolutional layer: The convolutional layer is the core of a CNN and acts as a local feature extractor. It relies on three fundamental strategies to improve network performance and reduce the number of free parameters: receptive fields, sparse connectivity, and parameter sharing. The output of the convolutional layer is computed with Equation (4):

  $$x_q^{l} = f\left(\sum_{p \in M_q} x_p^{l-1} * k_{pq}^{l} + b_q^{l}\right) \qquad (4)$$

  where $x_q^{l}$ is the $q$th feature map in the $l$th layer, $k_{pq}^{l}$ is the trainable convolution kernel in the $l$th layer, $b_q^{l}$ is the bias matrix, $M_q$ is the selection of input maps, and $f$ is the activation function.

- Pooling layer: This layer produces down-sampled versions of the input maps through the pooling operation. Each input map is first divided into non-overlapping rectangular regions of size $u \times u$, and each region is then summarized by its maximum or average value to form the new map, as in Equation (5):

  $$x_q^{l} = \mathrm{down}\left(x_q^{l-1}\right) \qquad (5)$$

  where $\mathrm{down}(\cdot)$ is a sub-sampling function.

- Fully-connected layer: This is a standard neural network layer that models the non-linear relationship between its input and output using an activation function and a bias. Fully-connected layers are placed after the convolutional and sub-sampling layers, where the two-dimensional maps are transformed into one-dimensional vectors. Data are processed in these layers according to Equation (6):

  $$x^{l} = f\left(w^{l} x^{l-1} + b^{l}\right) \qquad (6)$$

  where $w^{l}$ and $b^{l}$ are the weight matrix and bias matrix of the fully-connected layer, respectively (both are trainable parameters) [68].
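Equations (4) to (6) can be traced with a small NumPy forward pass. This is a sketch under assumptions, not the study's network: the architecture is not given here, so the 6 × 6 single-channel input, two 3 × 3 kernels, ReLU, 2 × 2 max pooling, and sigmoid output are hypothetical choices, and $M_q$ is taken to be all input maps. As in most deep learning libraries, the "convolution" is implemented as cross-correlation.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def conv2d_valid(x, k):
    """'Valid' 2-D cross-correlation of one map with one kernel."""
    kh, kw = k.shape
    out = np.empty((x.shape[0] - kh + 1, x.shape[1] - kw + 1))
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            out[r, c] = np.sum(x[r:r + kh, c:c + kw] * k)
    return out


def conv_layer(maps, kernels, biases, f=relu):
    """Eq. (4): x_q^l = f(sum_p x_p^{l-1} * k_pq^l + b_q^l), with M_q = all input maps.

    kernels[p][q] links input map p to output map q.
    """
    return [f(sum(conv2d_valid(maps[p], kernels[p][q]) for p in range(len(maps))) + b_q)
            for q, b_q in enumerate(biases)]


def max_pool(x, u):
    """Eq. (5) with down() = maximum over non-overlapping u x u blocks."""
    h, w = (x.shape[0] // u) * u, (x.shape[1] // u) * u
    return x[:h, :w].reshape(h // u, u, w // u, u).max(axis=(1, 3))


def dense(v, w, b, f=relu):
    """Eq. (6): x^l = f(w^l x^{l-1} + b^l)."""
    return f(w @ v + b)


rng = np.random.default_rng(0)
x = rng.normal(size=(6, 6))                               # hypothetical input map
kernels = [[rng.normal(size=(3, 3)) for _ in range(2)]]   # 1 input map -> 2 output maps
fmaps = conv_layer([x], kernels, biases=[0.1, -0.1])       # two 4 x 4 maps
pooled = [max_pool(m, 2) for m in fmaps]                  # two 2 x 2 maps
v = np.concatenate([p.ravel() for p in pooled])           # flatten to length 8
prob = dense(v, rng.normal(size=(1, 8)), np.zeros(1), f=sigmoid)  # hypothetical class-1 score
```

A real model would be built and trained with a deep learning framework; this forward pass only makes the symbols in the three equations concrete.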

4.3. Feature Importance
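The methodology ranks criterion importance with the OneR approach. OneR builds a one-level rule for each attribute (discretize the attribute, then predict the majority class in each interval) and scores the attribute by that rule's accuracy. The sketch below is a simplified version: it assumes equal-frequency bins instead of Holte's original discretization with a minimum bucket size, and it scores each rule on the data it was built from (tools may use cross-validation instead). The names and data are hypothetical.

```python
import numpy as np


def one_r_accuracy(x, y, n_bins=5):
    """Accuracy of a one-feature rule: equal-frequency bins -> majority class per bin.

    y must hold non-negative integer labels (e.g. 1 = selected place, 0 = random point).
    """
    inner_edges = np.quantile(x, np.linspace(0.0, 1.0, n_bins + 1)[1:-1])
    bins = np.digitize(x, inner_edges)
    correct = sum(np.bincount(y[bins == b]).max() for b in np.unique(bins))
    return correct / len(y)


def one_r_ranking(table, y, names, n_bins=5):
    """Criteria sorted by OneR rule accuracy, most important first."""
    scores = {name: one_r_accuracy(table[:, j], y, n_bins) for j, name in enumerate(names)}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


y = np.repeat([0, 1], 50)
informative = np.repeat([0.0, 1.0], 50)   # perfectly separates the classes
uninformative = np.tile([0.0, 1.0], 50)   # unrelated to the classes
ranking = one_r_ranking(np.column_stack([informative, uninformative]), y,
                        ["informative", "uninformative"])
```

A score near 0.5 on balanced presence/absence data means the criterion alone is no better than guessing, which is why the ranking is read relative to that baseline.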

4.4. Evaluation of Model Accuracy
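The evaluation uses MSE, accuracy, and the ROC curve with its AUC, computed on the 30% validation split. A minimal NumPy sketch of these metrics, assuming 0/1 labels, CNN outputs in [0, 1], and a 0.5 threshold for accuracy (the threshold is an assumption); the toy labels and scores are hypothetical.

```python
import numpy as np


def mse(y_true, p):
    """Mean square error between 0/1 labels and predicted probabilities."""
    return float(np.mean((np.asarray(y_true, dtype=float) - np.asarray(p, dtype=float)) ** 2))


def accuracy(y_true, p, threshold=0.5):
    """Share of points whose thresholded prediction matches the label."""
    return float(np.mean((np.asarray(p) >= threshold).astype(int) == np.asarray(y_true)))


def roc_curve(y_true, p):
    """False- and true-positive rates at every distinct score, highest threshold first."""
    y_true, p = np.asarray(y_true), np.asarray(p)
    n_pos = np.sum(y_true == 1)
    n_neg = np.sum(y_true == 0)
    fpr, tpr = [0.0], [0.0]
    for t in np.unique(p)[::-1]:
        pred = p >= t
        tpr.append(np.sum(pred & (y_true == 1)) / n_pos)
        fpr.append(np.sum(pred & (y_true == 0)) / n_neg)
    return np.array(fpr), np.array(tpr)


def auc(fpr, tpr):
    """Area under the ROC curve by the trapezoidal rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))


y_val = [0, 0, 1, 1]            # hypothetical validation labels
p_val = [0.1, 0.4, 0.35, 0.8]   # hypothetical CNN outputs
fpr, tpr = roc_curve(y_val, p_val)
scores = {"mse": mse(y_val, p_val), "accuracy": accuracy(y_val, p_val), "auc": auc(fpr, tpr)}
```

AUC is threshold-free, whereas accuracy depends on the chosen cut-off, which is one reason both are usually reported.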

5. Results

5.1. Pearson Correlation Coefficient Results

5.2. Feature Importance Results

5.3. Modeling Results

5.4. Validation

6. Discussion

6.1. Assessment of Effective Factors

6.2. Assessment of Modeling

6.3. Landscape Policies

6.4. Limitations and Future Directions

7. Conclusions

- The results showed that the strongest correlations among the criteria affecting people's visual perception were between distance to restaurants and distance to billboards, between distance to shopping centers and distance to billboards, and between distance to recreational attractions and distance to billboards.

- Distance to local passages, population density, and traffic volume were the most important criteria for both sight modes (pleasant and unpleasant).

- The ROC curve showed that the CNN algorithm modeled both sight modes with good accuracy, and that its accuracy was higher for pleasant sight than for unpleasant sight.

- The results demonstrated that the northern, southwestern, central, southern, and eastern areas of the city had high potential for pleasant sight, whereas the southwestern, central, and southern areas had high potential for unpleasant sight.

- In general, areas with very high potential for pleasant sight occupy only a small percentage of Tehran, and most favorable areas have high rather than very high potential. For unpleasant sight, relatively large areas have high and very high potential, which is a cause for concern. One advantage of identifying these areas through potential maps is that they show that visually attractive spaces are needed throughout Tehran, and most urgently on the city's southwestern edge. According to the maps of the effective criteria, the presence of industrial areas, the lack of recreational attractions, relatively high AQI, low night light, and the lack of restaurants and shopping centers in the southwest may explain the unpleasant visual perception in those areas. The achievements of this research include measures, proposed on the basis of the potential maps, to eliminate negative factors affecting visual perception and to create visually attractive areas that raise citizens' quality of life. The potential maps obtained from this study can help urban planners and managers provide a more favorable visual environment for urban users (removing unpleasant sight elements and adding pleasant sight elements where needed), because residents spend much of their time in the city and the quality of its visual environment affects them in many ways.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

References

1. Majdzadeh, S.A.; Mirzaei, R.; Madahi, S.M.; Mabhoot, M.R.; Heidari, A. Identifying and Assessing the Semantic and Visual Perception Signs in the Identification of Fahadan Neighborhood of Yazd. Creat. City Des. 2021, 4, 55–68.

2. Cole, S.; Balcetis, E. Motivated perception for self-regulation: How visual experience serves and is served by goals. In Advances in Experimental Social Psychology; Elsevier: Amsterdam, The Netherlands, 2021; Volume 64, pp. 129–186.

3. Orloff, S. Learning Re-Enabled: A Practical Guide to Helping Children with Learning Disabilities; Mosby: Maryland Heights, MO, USA, 2004.

4. Jana, M.K.; De, T. Visual pollution can have a deep degrading effect on urban and suburban community: A study in few places of Bengal, India, with special reference to unorganized billboards. Eur. Sci. J. 2015, 8, 94–101.

5. Farahani, M.; Razavi-Termeh, S.V.; Sadeghi-Niaraki, A. A spatially based machine learning algorithm for potential mapping of the hearing senses in an urban environment. Sustain. Cities Soc. 2022, 80, 103675.

6. Dai, L.; Zheng, C.; Dong, Z.; Yao, Y.; Wang, R.; Zhang, X.; Ren, S.; Zhang, J.; Song, X.; Guan, Q. Analyzing the correlation between visual space and residents’ psychology in Wuhan, China using street-view images and deep-learning technique. City Environ. Interact. 2021, 11, 100069.

7. Perovic, S.; Folic, N.K. Visual perception of public open spaces in Niksic. Procedia-Soc. Behav. Sci. 2012, 68, 921–933.

8. Abkar, M.; Kamal, M.; Maulan, S.; Davoodi, S.R. Determining the visual preference of urban landscapes. Sci. Res. Essays 2011, 6, 1991–1997.

9. Golkar, K. Conceptual evolution of urban visual environment; from cosmetic approach through to sustainable approach. Environ. Sci. 2008, 5, 90–114.

10. Sottini, V.A.; Barbierato, E.; Capecchi, I.; Borghini, T.; Saragosa, C. Assessing the perception of urban visual quality: An approach integrating big data and geostatistical techniques. Aestimum 2021, 79, 75–102.

11. Wartmann, F.M.; Frick, J.; Kienast, F.; Hunziker, M. Factors influencing visual landscape quality perceived by the public. Results from a national survey. Landsc. Urban Plan. 2021, 208, 104024.

12. Nasar, J.L. The evaluative image of the city. J. Am. Plan. Assoc. 1990, 56, 41–53.

13. Elena, E.; Cristian, M.; Suzana, P. Visual pollution: A new axiological dimension of marketing. Eur. Integr.–New Chall. 2011, 1, 1836.

14. Wakil, K.; Naeem, M.A.; Anjum, G.A.; Waheed, A.; Thaheem, M.J.; Hussnain, M.Q.u.; Nawaz, R. A hybrid tool for visual pollution Assessment in urban environments. Sustainability 2019, 11, 2211.

15. Polat, A.T.; Akay, A. Relationships between the visual preferences of urban recreation area users and various landscape design elements. Urban For. Urban Green. 2015, 14, 573–582.

16. Van Zanten, B.T.; Van Berkel, D.B.; Meentemeyer, R.K.; Smith, J.W.; Tieskens, K.F.; Verburg, P.H. Continental-scale quantification of landscape values using social media data. Proc. Natl. Acad. Sci. USA 2016, 113, 12974–12979.

17. Tenerelli, P.; Püffel, C.; Luque, S. Spatial assessment of aesthetic services in a complex mountain region: Combining visual landscape properties with crowdsourced geographic information. Landsc. Ecol. 2017, 32, 1097–1115.

18. Wigness, M.; Eum, S.; Rogers, J.G.; Han, D.; Kwon, H. A RUGD dataset for autonomous navigation and visual perception in unstructured outdoor environments. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macao, China, 4–8 November 2019; pp. 5000–5007.

19. Abd-Alhamid, F.; Kent, M.; Bennett, C.; Calautit, J.; Wu, Y. Developing an innovative method for visual perception evaluation in a physical-based virtual environment. Build. Environ. 2019, 162, 106278.

20. Jeon, J.Y.; Jo, H.I. Effects of audio-visual interactions on soundscape and landscape perception and their influence on satisfaction with the urban environment. Build. Environ. 2020, 169, 106544.

21. Jo, H.I.; Jeon, J.Y. Effect of the appropriateness of sound environment on urban soundscape assessment. Build. Environ. 2020, 179, 106975.

22. Wakil, K.; Tahir, A.; Hussnain, M.Q.u.; Waheed, A.; Nawaz, R. Mitigating urban visual pollution through a multistakeholder spatial decision support system to optimize locational potential of billboards. ISPRS Int. J. Geo-Inf. 2021, 10, 60.

23. Ahmed, N.; Islam, M.N.; Tuba, A.S.; Mahdy, M.; Sujauddin, M. Solving visual pollution with deep learning: A new nexus in environmental management. J. Environ. Manag. 2019, 248, 109253.

24. Gosal, A.; Ziv, G. Landscape aesthetics: Spatial modelling and mapping using social media images and machine learning. Ecol. Indic. 2020, 117, 106638.

25. Jamil, A.; ali Hameed, A.; Bazai, S.U. Land Cover Classification using Machine Learning Approaches from High Resolution Images. J. Appl. Emerg. Sci. 2021, 11, 108–112.

26. Wei, J.; Yue, W.; Li, M.; Gao, J. Mapping human perception of urban landscape from street-view images: A deep-learning approach. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102886.

27. Hameed, M.; Yang, F.; Bazai, S.U.; Ghafoor, M.I.; Alshehri, A.; Khan, I.; Baryalai, M.; Andualem, M.; Jaskani, F.H. Urbanization detection using LiDAR-based remote sensing images of Azad Kashmir using novel 3D CNNs. J. Sens. 2022, 2022, 6430120.

28. Li, Y.; Yabuki, N.; Fukuda, T. Measuring visual walkability perception using panoramic street view images, virtual reality, and deep learning. Sustain. Cities Soc. 2022, 86, 104140.

29. Tasnim, N.H.; Afrin, S.; Biswas, B.; Anye, A.A.; Khan, R. Automatic classification of textile visual pollutants using deep learning networks. Alex. Eng. J. 2023, 62, 391–402.

30. Sun, P.; Lu, W.; Jin, L. How the natural environment in downtown neighborhood affects physical activity and sentiment: Using social media data and machine learning. Health Place 2023, 79, 102968.

31. Yasmin, F.; Hassan, M.M.; Hasan, M.; Zaman, S.; Kaushal, C.; El-Shafai, W.; Soliman, N.F. PoxNet22: A fine-tuned model for the classification of monkeypox disease using transfer learning. IEEE Access 2023, 11, 24053–24076.

32. Hassan, M.M.; Zaman, S.; Mollick, S.; Hassan, M.M.; Raihan, M.; Kaushal, C.; Bhardwaj, R. An efficient Apriori algorithm for frequent pattern in human intoxication data. Innov. Syst. Softw. Eng. 2023, 19, 61–69.

33. Zhang, F.; Zhou, B.; Liu, L.; Liu, Y.; Fung, H.H.; Lin, H.; Ratti, C. Measuring human perceptions of a large-scale urban region using machine learning. Landsc. Urban Plan. 2018, 180, 148–160.

34. Rodaway, P. Sensuous Geographies: Body, Sense and Place; Routledge: Oxford, UK, 2002.

35. Kiwelekar, A.W.; Mahamunkar, G.S.; Netak, L.D.; Nikam, V.B. Deep learning techniques for geospatial data analysis. In Machine Learning Paradigms; Springer: Berlin/Heidelberg, Germany, 2020; pp. 63–81.

36. Das, H.; Pradhan, C.; Dey, N. Deep Learning for Data Analytics: Foundations, Biomedical Applications, and Challenges; Academic Press: Cambridge, MA, USA, 2020.

37. Miglani, A.; Kumar, N. Deep learning models for traffic flow prediction in autonomous vehicles: A review, solutions, and challenges. Veh. Commun. 2019, 20, 100184.

38. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

39. Zhang, P.; Ke, Y.; Zhang, Z.; Wang, M.; Li, P.; Zhang, S. Urban land use and land cover classification using novel deep learning models based on high spatial resolution satellite imagery. Sensors 2018, 18, 3717.

40. Shickel, B.; Tighe, P.J.; Bihorac, A.; Rashidi, P. Deep EHR: A survey of recent advances in deep learning techniques for electronic health record (EHR) analysis. IEEE J. Biomed. Health Inform. 2017, 22, 1589–1604.

41. Zhang, W.; Yu, Y.; Qi, Y.; Shu, F.; Wang, Y. Short-term traffic flow prediction based on spatio-temporal analysis and CNN deep learning. Transp. A: Transp. Sci. 2019, 15, 1688–1711.

42. Porzi, L.; Rota Bulò, S.; Lepri, B.; Ricci, E. Predicting and understanding urban perception with convolutional neural networks. In Proceedings of the 23rd ACM International Conference on Multimedia, Brisbane, Australia, 26–30 October 2015; pp. 139–148.

43. Timilsina, S.; Sharma, S.; Aryal, J. Mapping urban trees within cadastral parcels using an object-based convolutional neural network. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, 4, 111–117.

44. Chauhan, R.; Kaur, H.; Alankar, B. Air quality forecast using convolutional neural network for sustainable development in urban environments. Sustain. Cities Soc. 2021, 75, 103239.

45. Tyrväinen, L.; Ojala, A.; Korpela, K.; Lanki, T.; Tsunetsugu, Y.; Kagawa, T. The influence of urban green environments on stress relief measures: A field experiment. J. Environ. Psychol. 2014, 38, 1–9.

46. Radomska, M.; Yurkiv, M.; Nazarkov, T. The Assessment of the Visual Pollution from Industrial Facilities in Natural Landscapes. Available online: http://www.kdu.edu.ua/EKB_jurnal/2019_1(27)/PDF/45_49.pdf (accessed on 30 January 2021).

47. Galindo, M.P.; Hidalgo, M.C. Aesthetic preferences and the attribution of meaning: Environmental categorization processes in the evaluation of urban scenes. Int. J. Psychol. 2005, 40, 19–27.

48. Ghorbanzadeh, O.; Meena, S.R.; Blaschke, T.; Aryal, J. UAV-based slope failure detection using deep-learning convolutional neural networks. Remote Sens. 2019, 11, 2046.

49. Nami, P.; Jahanbakhsh, P.; Fathalipour, A. The role and heterogeneity of visual pollution on the quality of urban landscape using GIS; case study: Historical Garden in City of Maraqeh. Open J. Geol. 2016, 6, 20–29.

50. Zaeimdar, M.; Khalilnezhad Sarab, F.; Rafati, M. Investigation of the relation between visual pollution and citizenry health in the city of Tehran (case study: Municipality districts No. 1 & 12 of Tehran). Anthropog. Pollut. 2019, 3, 1–10.

51. Rombauts, P. Aspects of visual Task Comfort in an Urban Environment. In Proceedings of the Lighting and City Beautification Congress, Istanbul, Turkey, 12–14 September 2001; pp. 99–104.

52. Mokras-Grabowska, J. New urban recreational spaces. Attractiveness, infrastructure arrangements, identity. The example of the city of Łódź. Misc. Geogr. Reg. Stud. Dev. 2018, 22, 219–224.

53. Aljoufie, M. The impact assessment of increasing population density on Jeddah road transportation using spatial-temporal analysis. Sustainability 2021, 13, 1455.

54. Bakhshi, M. The position of green space in improving beauty and quality of sustainable space of city. Environ. Conserv. J. 2015, 16, 269–276.

55. Hiremath, S. Population Growth and Solid Waste Disposal: A burning Problem in the Indian Cities. Indian Streams Res. J. 2016, 6, 141–147.

56. Tudor, C.A.; Iojă, I.C.; Hersperger, A.; Pǎtru-Stupariu, I. Is the residential land use incompatible with cemeteries location? Assessing the attitudes of urban residents. Carpathian J. Earth Environ. Sci. 2013, 8, 153–162.

57. Nejad, J.M.; Azemati, H.; Abad, A.S.H. Investigating Sacred Architectural Values of Traditional Mosques Based on the Improvement of Spiritual Design Quality in the Architecture of Modern Mosques. Int. J. Architect. Eng. Urban Plan 2019, 29, 47–59.

58. Nowghabi, A.S.; Talebzadeh, A. Psychological influence of advertising billboards on city sight. Civ. Eng. J. 2019, 5, 390–397.

59. Kshetri, T. NDVI, NDBI & NDWI calculation using Landsat 7, 8. GeoWorld 2018, 2, 32–34.

60. Malm, W.C.; Leiker, K.K.; Molenar, J.V. Human perception of visual air quality. J. Air Pollut. Control Assoc. 1980, 30, 122–131.

61. Oltra, C.; Sala, R. A Review of the Social Research on Public Perception and Engagement Practices in Urban Air Pollution; IAEA: Vienna, Austria, 2014.

62. Xu, H.; Deng, Y. Dependent evidence combination based on Shearman coefficient and Pearson coefficient. IEEE Access 2017, 6, 11634–11640.

63. Zhu, H.; You, X.; Liu, S. Multiple ant colony optimization based on Pearson correlation coefficient. IEEE Access 2019, 7, 61628–61638.

64. Ding, A.; Zhang, Q.; Zhou, X.; Dai, B. Automatic recognition of landslide based on CNN and texture change detection. In Proceedings of the 31st Youth Academic Annual Conference of Chinese Association of Automation (YAC), Wuhan, China, 11–13 November 2016; pp. 444–448.

65. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

66. Saha, S.; Sarkar, R.; Roy, J.; Hembram, T.K.; Acharya, S.; Thapa, G.; Drukpa, D. Measuring landslide vulnerability status of Chukha, Bhutan using deep learning algorithms. Sci. Rep. 2021, 11, 16374.

67. Panahi, M.; Sadhasivam, N.; Pourghasemi, H.R.; Rezaie, F.; Lee, S. Spatial prediction of groundwater potential mapping based on convolutional neural network (CNN) and support vector regression (SVR). J. Hydrol. 2020, 588, 125033.

68. Zhu, Q.; Chen, J.; Zhu, L.; Duan, X.; Liu, Y. Wind speed prediction with spatio–temporal correlation: A deep learning approach. Energies 2018, 11, 705.

69. Sun, T.; Li, H.; Wu, K.; Chen, F.; Zhu, Z.; Hu, Z. Data-driven predictive modelling of mineral prospectivity using machine learning and deep learning methods: A case study from southern Jiangxi Province, China. Minerals 2020, 10, 102.

70. Lu, Y.; Huo, Y.; Yang, Z.; Niu, Y.; Zhao, M.; Bosiakov, S.; Li, L. Influence of the Parameters of the Convolutional Neural Network Model in Predicting the Effective Compressive Modulus of Porous Structure; Frontiers Media S.A: Lausanne, Switzerland, 2022.

71. Razavi-Termeh, S.V.; Sadeghi-Niaraki, A.; Farhangi, F.; Choi, S.-M. COVID-19 risk mapping with considering socio-economic criteria using machine learning algorithms. Int. J. Environ. Res. Public Health 2021, 18, 9657.

72. Al Sayaydeha, O.N.; Mohammad, M.F. Diagnosis of the Parkinson disease using enhanced fuzzy min-max neural network and OneR attribute evaluation method. In Proceedings of the 2019 International Conference on Advanced Science and Engineering (ICOASE), Duhok, Iraq, 2–4 April 2019; pp. 64–69.

73. Wu, S.; Flach, P. A scored AUC metric for classifier evaluation and selection. In Proceedings of the Second Workshop on ROC Analysis in ML, Bonn, Germany, 11 August 2005.

74. Davis, J.; Goadrich, M. The relationship between Precision-Recall and ROC curves. In Proceedings of the 23rd International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2006; pp. 233–240.

75. Lei, X.; Chen, W.; Panahi, M.; Falah, F.; Rahmati, O.; Uuemaa, E.; Kalantari, Z.; Ferreira, C.S.S.; Rezaie, F.; Tiefenbacher, J.P. Urban flood modeling using deep-learning approaches in Seoul, South Korea. J. Hydrol. 2021, 601, 126684.

76. Youssef, A.M.; Al-Kathery, M.; Pradhan, B. Landslide susceptibility mapping at Al-Hasher area, Jizan (Saudi Arabia) using GIS-based frequency ratio and index of entropy models. Geosci. J. 2015, 19, 113–134.

77. Siddiqui, K.A.; Tarani, S.S.A.; Fatani, S.A.; Raza, A.; Butt, R.M.; Azeema, N. Effect of size, location and content of billboards on brand awareness. J. Bus. Stud. Q. 2016, 8, 40.

78. Edquist, J.; Horberry, T.; Hosking, S.; Johnston, I. Effects of advertising billboards during simulated driving. Appl. Ergon. 2011, 42, 619–626.

79. Zamiri, M. The role of urban advertising in quality of urban land scape. Curr. World Environ. 2016, 11, 14.

80. Carmona, M. Principles for public space design, planning to do better. Urban Des. Int. 2019, 24, 47–59.

81. Foster, S.; Giles-Corti, B. The built environment, neighborhood crime and constrained physical activity: An exploration of inconsistent findings. Prev. Med. 2008, 47, 241–251.

82. Hillnhütter, H. Stimulating urban walking environments—Can we measure the effect? Environ. Plan. B Urban Anal. City Sci. 2022, 49, 275–289.

83. Wright, C.; Curtis, B. Aesthetics and the urban road environment. Proc. Inst. Civ. Eng.-Munic. Eng. 2002, 151, 145–150.

84. Ahmed, S.A.G.; Mushref, Z.J. Three-Dimensional Modeling of Visual Pollution of Generator Wires in Ramadi City. PalArch’s J. Archaeol. Egypt/Egyptol. 2021, 18, 1659–1668.

85. Taylor, N. The aesthetic experience of traffic in the modern city. Urban Stud. 2003, 40, 1609–1625.

86. Bankole, O.E. Urban environmental graphics: Impact, problems and visual pollution of signs and billboards in Nigerian cities. Int. J. Educ. Res. 2013, 1, 1–12.

87. Rozman Cafuta, M. Visual perception and evaluation of artificial night light in urban open areas. Informatologia 2014, 47, 257–263.

88. Boyce, P.R. The benefits of light at night. Build. Environ. 2019, 151, 356–367.

89. Dabbagh, E. The Effects of Color and Light on the Beautification of Urban Space and the Subjective Perception of Citizens. Int. J. Eng. Sci. Invent. 2019, 8, 20–25.

90. Allahyari, H.; Nasehi, S.; Salehi, E.; Zebardast, L. Evaluation of visual pollution in urban squares, using SWOT, AHP, and QSPM techniques (Case study: Tehran squares of Enghelab and Vanak). Pollution 2017, 3, 655–667.

91. Alam, P.; Ahmade, K. Impact of solid waste on health and the environment. Int. J. Sustain. Dev. Green Econ. 2013, 2, 165–168.

92. Azeema, N.; Nazuk, A. Is billboard a visual pollution in Pakistan. Int. J. Sci. Eng. Res 2016, 7, 862–874.

93. Achsani, R.A.; Wonorahardjo, S. Studies on Visual Environment Phenomena of Urban Areas: A Systematic Review. In IOP Conference Series: Earth and Environmental Science; IOP Publishing: Bristol, UK, 2020; p. 012016.

94. Romanova, E. Increase in population density and aggravation of social and psychological problems in areas with high-rise construction. E3S Web Conf. 2018, 33, 03061.

95. Karimimoshaver, M.; Hajivaliei, H.; Shokri, M.; Khalesro, S.; Aram, F.; Shamshirband, S. A model for locating tall buildings through a visual analysis approach. Appl. Sci. 2020, 10, 6072.

96. Voronych, Y. Visual pollution of urban space in Lviv. Przestrz. I Forma 2013, 20, 309–314.

97. Song, Y.; Wang, R.; Fernandez, J.; Li, D. Investigating sense of place of the Las Vegas Strip using online reviews and machine learning approaches. Landsc. Urban Plan. 2021, 205, 103956.

98. Saghir, B. Tackling Urban Visual Pollution to Enhance the Saudi Cityscape; CLG: Riyadh, Saudi Arabia, 2019.

99. Ye, Y.; Zeng, W.; Shen, Q.; Zhang, X.; Lu, Y. The visual quality of streets: A human-centred continuous measurement based on machine learning algorithms and street view images. Environ. Plan. B Urban Anal. City Sci. 2019, 46, 1439–1457.

100. Zhang, G.; Wang, M.; Liu, K. Forest fire susceptibility modeling using a convolutional neural network for Yunnan province of China. Int. J. Disaster Risk Sci. 2019, 10, 386–403.

101. Rere, L.; Fanany, M.I.; Arymurthy, A.M. Metaheuristic algorithms for convolution neural network. Comput. Intell. Neurosci. 2016, 2016, 1537325.

102. Wong, J.; Tam, K. Spatial identity of fashion brands: The visibility network in complex shopping malls. In Proceedings of the IFFTI 2019 Conference, Manchester, UK, 8–12 April 2019; pp. 168–185. Available online: https://fashioninstitute.mmu.ac.uk/ifftipapers/paper-85 (accessed on 8 April 2019).

103. Du, H.; Jiang, H.; Song, X.; Zhan, D.; Bao, Z. Assessing the visual aesthetic quality of vegetation landscape in urban green space from a visitor’s perspective. J. Urban Plan. Dev. 2016, 142, 04016007.

104. Uzun, O.; Müderrisoğlu, H. Visual landscape quality in landscape planning: Examples of Kars and Ardahan cities in Turkey. Afr. J. Agric. Res. 2011, 6, 1627–1638.

105. Pascal, M.; Pascal, L.; Bidondo, M.-L.; Cochet, A.; Sarter, H.; Stempfelet, M.; Wagner, V. A review of the epidemiological methods used to investigate the health impacts of air pollution around major industrial areas. J. Environ. Public Health 2013, 2013, 737926.

106. Khanal, K.K. Visual pollution and eco-dystopia: A study of billboards and signs in Bharatpur metropolitan city. Res. J. Engl. Lang. Lit 2018, 6, 202–208.

107. Mohamed, M.A.S.; Ibrahim, A.O.; Dodo, Y.A.; Bashir, F.M. Visual pollution manifestations negative impacts on the people of Saudi Arabia. Int. J. Adv. Appl. Sci 2021, 8, 94–101.

| Researcher | Purpose | Methodology | Results |
|---|---|---|---|
| [15] | Studied the relationship between users’ visual preferences for recreational activities in urban areas and various landscape design factors | Phased photography of the area, a photo-questionnaire design, and statistical analysis | Identified factors with constructive and destructive effects on visual quality |
| [16] | Quantified landscape values on a continental scale | Social media data | Panoramio, Flickr, and Instagram data can be used to determine the properties of a landscape, and social media data can reveal how people value a landscape in social, political, and ecological terms |
| [17] | Studied the aesthetic services of a mountain region | Combination of visual landscape traits and crowdsourced geographic information | Identified visual factors that attract domestic and foreign tourists |
| [14] | Presented a new Visual Pollution Assessment (VPA) tool to quantify visual pollution in urban environments | Explicit, systematic combination of expert and public opinion to classify and categorize Visual Pollution Objects (VPOs) | Demonstrated the significant role of VPA in evaluating visual pollution |
| [18] | Autonomous navigation and visual perception in unstructured outdoor environments | The Robot Unstructured Ground Driving (RUGD) dataset | Introduced the unique challenges this dataset poses for navigation tasks |
| [19] | Evaluated visual perception in a physically based virtual environment | Virtual reality comparison of a three-dimensional virtual office simulator with a real office | No significant difference between the two environments in the studied parameters; the proposed method can provide realistic, immersive environments |
| [20] | Studied the effects of audio-visual interactions on soundscape and landscape perception and their influence on satisfaction with the urban environment | Virtual reality technology | The availability of visual information affected the auditory perception of several human-made and natural sounds, and the availability of audio information affected the visual perception of various visual elements; audio information affected the perceived naturalness of a landscape; audio and visual information accounted for 24% and 76%, respectively, of the effect on overall satisfaction |
| [21] | Studied the effect of the appropriateness of the sound environment on urban soundscape assessment | Virtual reality technology | The appropriateness of sound sources interacted with individuals’ perception of visual elements; traffic sounds and birdsong affected participants’ initial perception of urban soundscape quality; “human” sounds originating from human activity were related to the “comfort” aspects of soundscape quality |
| [22] | Studied the reduction of urban visual pollution | Multilateral decision-making and geographic information system | Identified the best locations for billboard installation through focused management |

| Researcher | Purpose | Methodology | Results |
|---|---|---|---|
| [23] | Addressed visual pollution | Deep learning | The deep learning model achieved 95% training accuracy and 85% validation accuracy; the upper limit of accuracy depends on the size of the dataset |
| [24] | Studied the aesthetics of landscapes | Spatial modeling and mapping, social media images, and machine learning | The predictive model identified important variables for aesthetic value, such as the pleasant nature of rural areas, mountainous landforms, and vegetation |
| [6] | Analyzed the correlation between visual space and residents’ psychology in Wuhan, China | Street-view images and deep learning | Urban visual space indicators are strongly related to residents’ psychological perceptions |
| [25] | Land cover classification | Machine learning approaches applied to high-resolution images | ANN was more accurate than SVM; postprocessing with majority analysis improved the average accuracy |
| [26] | Studied people’s perception of Shanghai landscapes | Deep learning and street-view images | Highly urbanized areas were perceived as having more assurance and liveliness, but also more depression |
| [27] | Urbanization detection using LiDAR-based remote sensing images of Azad Kashmir | Novel 3D CNNs | The proposed 3D CNN approach achieved very good overall accuracy and kappa values and detected urbanization better than the commonly used pixel-based support vector machine classifier |
| [28] | Measured visual walkability perception | Panoramic street-view images, virtual reality, and deep learning | Validated the accuracy of the VWP classification deep multitask learning (VWPCL) model for predicting visual walkability perception (VWP) |
| [29] | Automatic classification of textile visual pollutants | Deep learning networks (Faster R-CNN, YOLOv5, and EfficientDet) | EfficientDet performed best |
| [30] | Studied the effect of the natural environment of a downtown neighborhood on physical activity and sentiment | Social media data and machine learning | Blue-space visibility, activity facilities, street furniture, and safety had a favorable influence on physical activity, with a social gradient; amenities, perceived street safety, and beauty correlated positively with public sentiment; social media findings on the environment and physical activity were consistent with traditional surveys from the same period |
| [31] | Classification of monkeypox disease | Transfer learning | PoxNet22 outperformed other methods in classifying monkeypox |
| [32] | Investigated frequent patterns in human intoxication data | Apriori algorithm | Eight significant rules were discovered at a confidence level of 95% and a support level of 45% |

| Independent Variables | Source of Data |
|---|---|
| Distance to local passages | OpenStreetMap (https://www.openstreetmap.org) (accessed on 1 January 2021) (1:100,000) |
| Population density | Statistical Centre of Iran (2017) |
| Traffic volume | Tehran Traffic Control Company (2015–2020) |
| Night light | VIIRS (Visible Infrared Imaging Radiometer Suite) image in Google Earth Engine (https://earthengine.google.com/) (accessed on 1 January 2021) |
| Distance to public transport stations | Land use layers (1:10,000) |
| Distance to billboards | Tehran enhancement organization |
| Distance to primary streets | OpenStreetMap (2021) (1:100,000) |
| Distance to recreational attractions | Land use layers (1:10,000) |
| Distance to shopping malls | Land use layers (1:10,000) |
| Distance to restaurants | Land use layers (1:10,000) |
| Distance to religious places | Land use layers (1:10,000) |
| Distance to cemeteries | Land use layers (1:10,000) |
| Distance to dilapidated areas | Land use layers (1:10,000) |
| Distance to secondary streets | OpenStreetMap (2021) (1:100,000) |
| Distance to industrial areas | Land use layers (1:10,000) |
| AQI | Tehran Air Quality Control Company (23 stations) (2010–2020) |
| NDVI | Landsat 8 images in the Google Earth Engine platform (2010–2020) |
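Most criteria above are distances to mapped features, and NDVI is a band ratio, (NIR − Red) / (NIR + Red). The sketch below illustrates both calculations for a single sample point. It is an illustration under stated assumptions, not the authors' workflow: it assumes Landsat 8 OLI band 5 (near infrared) and band 4 (red) reflectance as NDVI inputs, treats features such as billboards or stations as points in a projected coordinate system measured in metres, and uses hypothetical helper names (`ndvi`, `distance_to_nearest`) and made-up values. In a GIS, distance criteria are usually produced as Euclidean distance rasters rather than computed point by point.

```python
import math
from typing import Iterable, Tuple

# (x, y) in a projected CRS measured in metres (assumption for illustration).
Point = Tuple[float, float]


def ndvi(nir: float, red: float) -> float:
    """Normalized difference vegetation index: (NIR - Red) / (NIR + Red).

    For Landsat 8 OLI, NIR is band 5 and Red is band 4 (reflectance values).
    Returns NaN when both bands are zero to avoid division by zero.
    """
    denom = nir + red
    if denom == 0:
        return float("nan")
    return (nir - red) / denom


def distance_to_nearest(point: Point, features: Iterable[Point]) -> float:
    """Euclidean distance from a sample point to the closest feature point.

    Brute-force O(n) search; features are treated as points
    (e.g., hypothetical billboard or station locations).
    """
    px, py = point
    distances = [math.hypot(fx - px, fy - py) for fx, fy in features]
    if not distances:
        raise ValueError("at least one feature is required")
    return min(distances)


if __name__ == "__main__":
    # Hypothetical reflectance values for a vegetated pixel.
    print(round(ndvi(0.45, 0.05), 2))  # prints 0.8
    # Hypothetical billboard locations (metres).
    billboards = [(100.0, 0.0), (0.0, 300.0), (400.0, 400.0)]
    print(distance_to_nearest((0.0, 0.0), billboards))  # prints 100.0
```

For many sample points, a spatial index or a raster distance tool would replace the brute-force search while producing the same per-point values.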

| Sight Type | Train MSE | Train Accuracy | Test MSE | Test Accuracy |
|---|---|---|---|---|
| Pleasant | 0.02 | 0.97 | 0.22 | 0.75 |
| Unpleasant | 0.05 | 0.93 | 0.20 | 0.74 |
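The table reports mean squared error (MSE) and accuracy on the training and test sets. As a hedged sketch of how these two metrics are commonly computed for binary labels of the kind described in the methodology (1 for a place selected by a respondent, 0 for a random point), the function below assumes the model outputs a probability per point and that a prediction counts as positive at a threshold of 0.5. The source does not state its threshold, and `evaluate` is a hypothetical helper name.

```python
from typing import Sequence, Tuple


def evaluate(y_true: Sequence[int], y_prob: Sequence[float],
             threshold: float = 0.5) -> Tuple[float, float]:
    """Return (MSE, accuracy) for binary labels and predicted probabilities.

    MSE is the mean of (y - p)^2 over all points; accuracy is the share of
    points whose thresholded prediction (p >= threshold -> 1) equals the label.
    The 0.5 default threshold is an assumption, not taken from the source.
    """
    if len(y_true) != len(y_prob) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    mse = sum((y - p) ** 2 for y, p in zip(y_true, y_prob)) / n
    correct = sum(int((p >= threshold) == bool(y)) for y, p in zip(y_true, y_prob))
    return mse, correct / n


if __name__ == "__main__":
    # Hypothetical labels: 1 = user-selected place, 0 = random point.
    labels = [1, 1, 0, 0]
    probs = [0.9, 0.6, 0.2, 0.7]
    mse, acc = evaluate(labels, probs)
    print(round(mse, 4), acc)  # prints 0.175 0.75
```

Running the same function separately on the training and test splits yields the pair of columns reported for each sight type.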

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Farahani, M.; Razavi-Termeh, S.V.; Sadeghi-Niaraki, A.; Choi, S.-M. A Hybridization of Spatial Modeling and Deep Learning for People’s Visual Perception of Urban Landscapes. Sustainability 2023, 15, 10403. https://doi.org/10.3390/su151310403
