Transformer Architecture-Based Transfer Learning for Politeness Prediction in Conversation

Abstract

1. Introduction

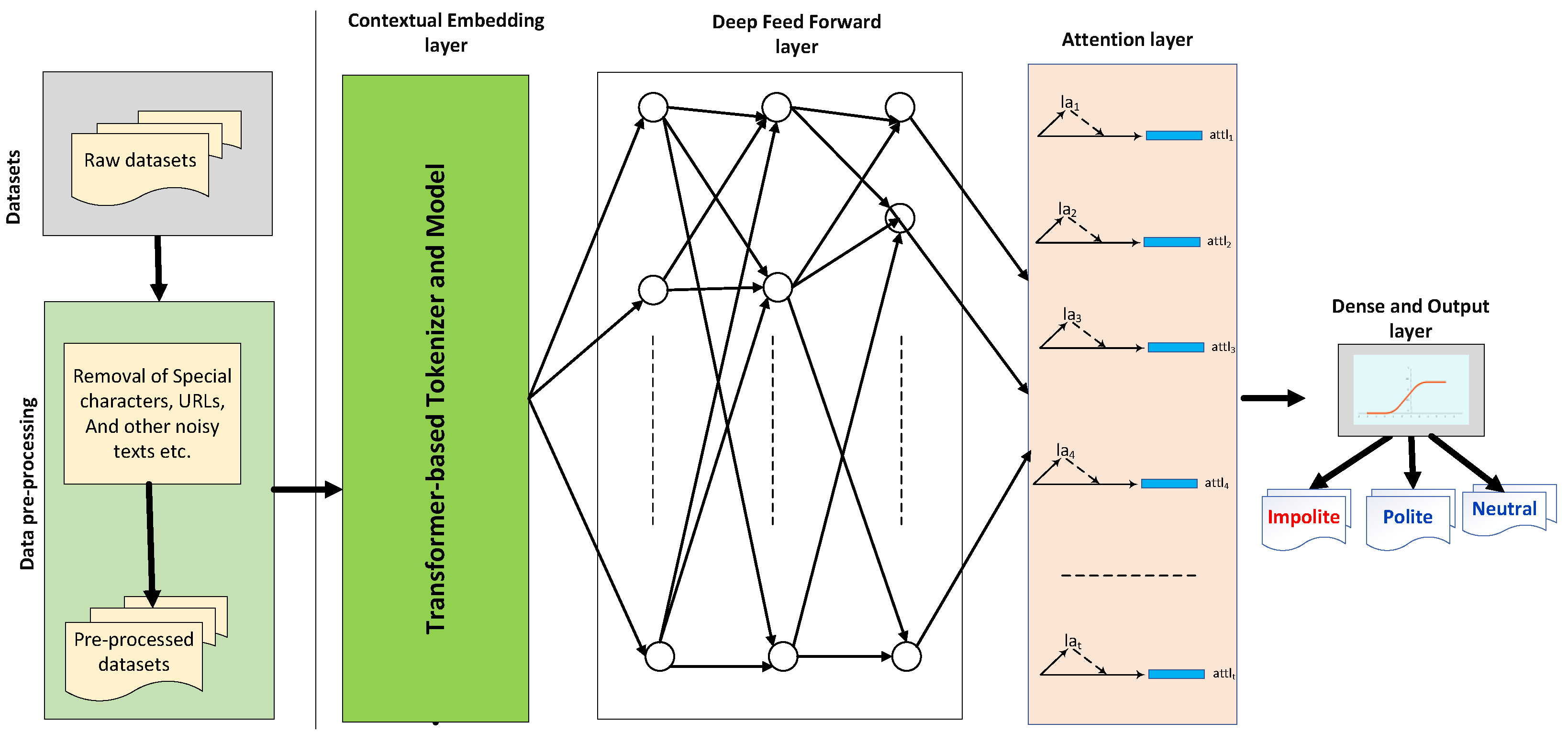

Our Contributions

- Introduce a deep model for the understudied problem of politeness prediction that integrates the strengths of transformer architecture-based large language models, a feed-forward dense layer, and an attention mechanism. The presented model learns an efficient text representation, which is passed to a sigmoid layer to classify the text into polite and impolite categories.

- Perform an in-depth investigation of the presented model, initialized with weights from pretrained transformer-based language models, for politeness prediction on two benchmark datasets, including a blog dataset.

- Conduct an ablation study to investigate the impact of neural network components, such as the attention mechanism, on politeness prediction.

- Investigate the impact of hyperparameters such as batch size and optimization algorithm on the efficacy of the presented model to identify their optimal settings.
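
The first contribution describes a pipeline of contextual embeddings from a transformer language model, an attention mechanism, a feed-forward dense layer, and a sigmoid output. The dependency-free sketch below illustrates only the classification head on top of the encoder and is not the authors' implementation: random vectors stand in for BERT or RoBERTa token embeddings, and the additive attention form (in the style of hierarchical attention networks), the attention-then-FFN ordering, all sizes, and the helper names (`attention_pool`, `politeness_head`) are assumptions.

```python
import math
import random

def dense(x, weights, bias):
    """Affine map W x + b, with W stored as a list of rows (out_dim x in_dim)."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def attention_pool(tokens, w_att, b_att, u_context):
    """Additive attention (assumed form): score_t = u . tanh(W h_t + b), alpha = softmax(scores)."""
    scores = []
    for h in tokens:
        projected = [math.tanh(v) for v in dense(h, w_att, b_att)]
        scores.append(sum(u * p for u, p in zip(u_context, projected)))
    top = max(scores)  # subtract the max before exp() for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Attention-weighted sum of the token vectors gives one sentence vector.
    pooled = [sum(a * h[i] for a, h in zip(alphas, tokens)) for i in range(len(tokens[0]))]
    return pooled, alphas

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def politeness_head(tokens, p):
    """Hypothetical head: attention pooling -> ReLU feed-forward layer -> one sigmoid unit."""
    pooled, alphas = attention_pool(tokens, p["w_att"], p["b_att"], p["u"])
    hidden = [max(0.0, v) for v in dense(pooled, p["w_ffn"], p["b_ffn"])]
    logit = dense(hidden, p["w_out"], p["b_out"])[0]
    return sigmoid(logit), alphas

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Toy sizes (assumption): 8-d token vectors stand in for 768-d BERT/RoBERTa outputs.
rng = random.Random(0)
d_model, d_att, d_ffn, seq_len = 8, 4, 6, 5
params = {
    "w_att": random_matrix(d_att, d_model, rng), "b_att": [0.0] * d_att,
    "u": [rng.uniform(-0.5, 0.5) for _ in range(d_att)],
    "w_ffn": random_matrix(d_ffn, d_model, rng), "b_ffn": [0.0] * d_ffn,
    "w_out": random_matrix(1, d_ffn, rng), "b_out": [0.0],
}
tokens = random_matrix(seq_len, d_model, rng)  # stand-in contextual embeddings
prob, alphas = politeness_head(tokens, params)
print(f"P(polite) = {prob:.3f}")
```

In a real system the token vectors would come from the pretrained encoder and every weight would be learned during fine-tuning; because the weights here are random, the printed probability only demonstrates the data flow.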

2. Literature Survey

3. Models and Methods

3.1. Data Pre-Processing

3.1.1. Contraction Expansion

3.1.2. URL Filtration

3.1.3. Special Character Removal
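
The three pre-processing steps named above (contraction expansion, URL filtration, and special character removal) can be sketched with Python's standard `re` module. This is a minimal illustration under stated assumptions, not the authors' code: the contraction table is a small hypothetical subset, the URL and special-character patterns are illustrative choices, and the pattern `[^A-Za-z0-9\s]` assumes English text (it would also strip accented letters).

```python
import re

# Hypothetical, deliberately small contraction table. Full-word forms come
# before generic suffixes so that "can't" becomes "cannot", not "ca not".
CONTRACTIONS = {
    "can't": "cannot",
    "won't": "will not",
    "n't": " not",
    "'re": " are",
    "'m": " am",
    "'ll": " will",
    "'ve": " have",
}

URL_PATTERN = re.compile(r"(?:https?://|www\.)\S+", flags=re.IGNORECASE)
SPECIAL_CHAR_PATTERN = re.compile(r"[^A-Za-z0-9\s]")

def expand_contractions(text: str) -> str:
    text = text.replace("\u2019", "'")  # normalise curly apostrophes first
    for short, full in CONTRACTIONS.items():
        text = re.sub(re.escape(short), full, text, flags=re.IGNORECASE)
    return text

def filter_urls(text: str) -> str:
    return URL_PATTERN.sub(" ", text)

def remove_special_characters(text: str) -> str:
    return SPECIAL_CHAR_PATTERN.sub(" ", text)

def preprocess(text: str) -> str:
    text = expand_contractions(text)
    text = filter_urls(text)
    text = remove_special_characters(text)
    return " ".join(text.split())  # collapse the whitespace left by removals

print(preprocess("I can't open https://example.com/page, sorry!!"))  # → I cannot open sorry
```

Contractions are expanded before special characters are removed; reversing the order would strip the apostrophes first and leave fragments such as `can t`.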

3.2. Contextual Embedding Layer

3.3. Attention-Aware Deep Feed Forward Network Layer

3.4. Output Layer

4. Experiment

4.1. Evaluation Datasets

4.2. Experimental Setting

4.3. Experimental Results

4.3.1. Comparative Evaluation

- Aubakirova and Bansal [22]: The authors introduced a simple convolutional neural network (CNN) to predict politeness in request sentences. They further visualized the network using activation clusters, first-derivative saliency, and embedding-space transformations to analyze the linguistic signals of politeness.

- Mizil et al. [8]: In this early study, the authors presented a computational framework that uses domain-independent lexicon-based and syntactic features to analyze the linguistic aspects of politeness. They trained an SVM classifier to predict politeness and also investigated the relationship between politeness and social power.

- BiLSTM: The first baseline, BiLSTM, is a simple recurrent neural network (RNN) that incorporates both left-to-right and right-to-left contexts during representation learning. It has an input layer that receives the embedding-based text representation, followed by a BiLSTM layer with 128 neurons and a final softmax layer that classifies the text into polite and non-polite categories.

- LSTM: The second baseline is an LSTM network. It has an input layer that takes 200-dimensional GloVe embeddings, an LSTM layer with 128 neurons, and a softmax layer for classification.

- BiGRU: The third baseline uses a BiGRU layer with 128 neurons for text representation learning, preceded by an embedding layer with 200-dimensional GloVe embeddings and followed by a softmax layer for the final classification.

- ANN: The presented model is also compared with a simple artificial neural network that has three hidden layers of 128, 64, and 32 neurons, an embedding layer that takes 200-dimensional GloVe embeddings as input, and a final softmax layer for classification.

- fBERT [29]: A BERT model pre-trained on SOLID, a large English offensive-language corpus containing more than 1.4 million offensive instances.

- HateBERT [30]: Another BERT model, re-trained for abusive language detection on a Reddit dataset drawn from communities banned for being offensive, abusive, or hateful.
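
Aubakirova and Bansal's baseline is a CNN over word embeddings. The core operation of a text CNN of this kind, convolution over windows of consecutive word vectors followed by max-over-time pooling, can be sketched as follows. The ReLU activation, the filter shapes, the toy values, and the function name `conv1d_max_pool` are assumptions for illustration rather than details taken from [22].

```python
def conv1d_max_pool(embeddings, filters, biases, width):
    """Text-CNN feature extractor: for each filter, slide over every window of
    `width` consecutive word vectors, apply ReLU, and keep the maximum
    activation over all windows (max-over-time pooling)."""
    if len(embeddings) < width:
        raise ValueError("sentence is shorter than the filter width")
    features = []
    for filt, bias in zip(filters, biases):
        # `filt` holds one weight vector per position inside the window.
        best = float("-inf")
        for start in range(len(embeddings) - width + 1):
            window = embeddings[start:start + width]
            activation = bias + sum(
                sum(w * x for w, x in zip(w_vec, x_vec))
                for w_vec, x_vec in zip(filt, window)
            )
            best = max(best, max(0.0, activation))  # ReLU, then running max
        features.append(best)
    return features

# Toy input: three 2-d word vectors and two width-2 filters (hypothetical values).
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
filters = [
    [[1.0, 0.0], [0.0, 1.0]],    # responds to "dim0 then dim1" across two words
    [[-1.0, 0.0], [0.0, -1.0]],  # negative weights: zeroed by ReLU for this input
]
print(conv1d_max_pool(sentence, filters, [0.0, 0.0], width=2))  # → [2.0, 0.0]
```

In a complete classifier the pooled feature vector would be passed to a softmax layer; a real model would use many filters of several widths over pretrained embeddings.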
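
The framework of Mizil et al. represents a request through politeness-related lexical and syntactic cues and feeds them to an SVM. The toy sketch below shows only the shape of such a pipeline: binary marker features followed by a linear decision function of the form an SVM learns (w·x + b). The marker list is a small hypothetical subset (gratitude, "please", apologizing, counterfactual modals, direct questions), and the weights and bias are made-up stand-ins for trained SVM coefficients, not values from [8].

```python
import re

# Hypothetical marker subset; each entry is (pattern, illustrative weight).
MARKERS = {
    "gratitude": (re.compile(r"\b(thanks|thank you)\b", re.I), 1.0),
    "please": (re.compile(r"\bplease\b", re.I), 0.8),
    "apologizing": (re.compile(r"\b(sorry|apologi[sz]e)\b", re.I), 0.7),
    "counterfactual_modal": (re.compile(r"\b(could|would) you\b", re.I), 0.9),
    "direct_question": (re.compile(r"^\s*(what|why|who|how)\b", re.I), -0.9),
}
BIAS = -0.5  # hypothetical intercept

def extract_features(text):
    """Binary feature vector: 1.0 if the marker occurs in the text, else 0.0."""
    return {name: 1.0 if pattern.search(text) else 0.0
            for name, (pattern, _) in MARKERS.items()}

def decision_function(text):
    """Linear score w . x + b, the form of a linear SVM's decision function."""
    feats = extract_features(text)
    return BIAS + sum(MARKERS[name][1] * value for name, value in feats.items())

def predict(text):
    return "polite" if decision_function(text) > 0 else "impolite"

print(predict("Could you please review this? Thanks!"))  # → polite
print(predict("Why did you delete my edit?"))            # → impolite
```

In practice the weights would be fitted by an SVM solver on labeled requests rather than set by hand.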
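
To put the recurrent and feed-forward baselines on a common footing, their trainable parameter counts can be worked out from the layer sizes given above. The sketch rests on several assumptions: 200-dimensional inputs for every model (the BiLSTM description does not state its embedding size), a frozen embedding table excluded from the count, concatenated final states for the bidirectional models, a mean-pooled 200-dimensional input for the ANN, and a two-class softmax output.

```python
def lstm_params(input_dim: int, units: int) -> int:
    # Four gates (input, forget, cell candidate, output), each with input
    # weights, recurrent weights, and one bias vector.
    return 4 * (units * (input_dim + units) + units)

def gru_params(input_dim: int, units: int) -> int:
    # Three gates (update, reset, candidate), textbook form with one bias per gate.
    return 3 * (units * (input_dim + units) + units)

def dense_params(input_dim: int, units: int) -> int:
    return input_dim * units + units

def mlp_params(input_dim: int, hidden: list[int]) -> int:
    total, prev = 0, input_dim
    for units in hidden:
        total += dense_params(prev, units)
        prev = units
    return total

EMBED_DIM, UNITS, N_CLASSES = 200, 128, 2  # assumptions stated in the lead-in
totals = {
    "LSTM": lstm_params(EMBED_DIM, UNITS) + dense_params(UNITS, N_CLASSES),
    "BiLSTM": 2 * lstm_params(EMBED_DIM, UNITS) + dense_params(2 * UNITS, N_CLASSES),
    "BiGRU": 2 * gru_params(EMBED_DIM, UNITS) + dense_params(2 * UNITS, N_CLASSES),
    "ANN": mlp_params(EMBED_DIM, [128, 64, 32]) + dense_params(32, N_CLASSES),
}
for name, count in totals.items():
    print(f"{name}: {count:,}")
```

Under these assumptions the script prints 168,706 (LSTM), 337,410 (BiLSTM), 253,186 (BiGRU), and 36,130 (ANN). The GRU formula is the textbook one with a single bias per gate; Keras' default `GRU` layer (`reset_after=True`) keeps a second bias vector, so its reported count would be slightly higher.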

4.3.2. Ablation Analysis

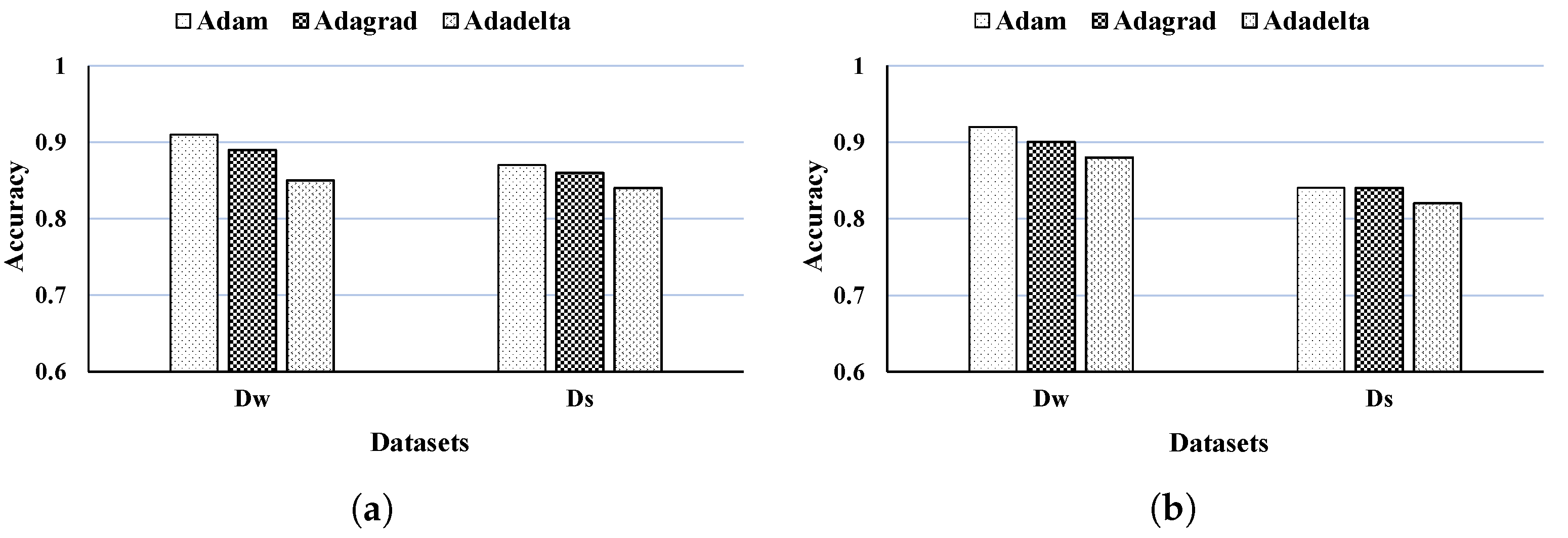

5. Discussion: Evaluating the Impact of Hyperparameters

5.1. Batch Size

5.2. Optimization Algorithms

6. Conclusions and Future Directions of Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Khan, S. Business Intelligence Aspect for Emotions and Sentiments Analysis. In Proceedings of the First International Conference on Electrical, Electronics, Information and Communication Technologies (ICEEICT), Trichy, India, 16–18 February 2022; pp. 1–5.

2. Haq, A.U.; Li, J.P.; Ahmad, S.; Khan, S.; Alshara, M.A.; Alotaibi, R.M. Diagnostic Approach for Accurate Diagnosis of COVID-19 Employing Deep Learning and Transfer Learning Techniques through Chest X-ray Images Clinical Data in E-Healthcare. Sensors 2021, 21, 8219.

3. Qaisar, A.; Ibrahim, M.E.; Khan, S.; Baig, A.R. Hypo-Driver: A Multiview Driver Fatigue and Distraction Level Detection System. Cmc-Comput. Mater. Contin. 2021, 71, 1999–2017.

4. Abulaish, M.; Kumari, N.; Fazil, M.; Singh, B. A Graph-Theoretic Embedding-Based Approach for Rumor Detection in Twitter. In Proceedings of the IEEE/WIC/ACM International Conference on Web Intelligence, Thessaloniki, Greece, 14–17 October 2019; pp. 466–470.

5. Mahajan, S.; Pandit, A.K. Hybrid method to supervise feature selection using signal processing and complex algebra techniques. Multimed. Tools Appl. 2023, 82, 8213–8234.

6. Khan, S.; Fazil, M.; Sejwal, V.K.; Alshara, M.A.; Alotaibi, R.M.; Kamal, A.; Baig, A. BiCHAT: BiLSTM with deep CNN and hierarchical attention for hate speech detection. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 4335–4344.

7. Khan, S.; Kamal, A.; Fazil, M.; Alshara, M.A.; Sejwal, V.K.; Alotaibi, R.M.; Baig, A.; Alqahtani, S. HCovBi-Caps: Hate Speech Detection using Convolutional and Bi-Directional Gated Recurrent Unit with Capsule Network. IEEE Access 2022, 10, 7881–7894.

8. Danescu-Niculescu-Mizil, C.; Sudhof, M.; Jurafsky, D.; Leskovec, J.; Potts, C. A computational approach to politeness with application to social factors. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Sofia, Bulgaria, 4–9 August 2013; pp. 250–259.

9. Madaan, A.; Setlur, A.; Parekh, T.; Poczos, B.; Neubig, G.; Yang, Y.; Salakhutdinov, R.; Black, A.W.; Prabhumoye, S. Politeness Transfer: A Tag and Generate Approach. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Virtual, 5–10 July 2020; pp. 1869–1881.

10. Niu, T.; Bansal, M. Polite Dialogue Generation Without Parallel Data. Trans. Assoc. Comput. Linguist. 2018, 6, 373–389.

11. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

12. Liu, Y.; Ott, M.; Goyal, N.; Du, J.; Joshi, M.; Chen, D.; Levy, O.; Lewis, M.; Zettlemoyer, L.; Stoyanov, V. RoBERTa: A Robustly Optimized BERT Pretraining Approach. In Proceedings of the ICLR, Addis Ababa, Ethiopia, 26–30 April 2020; pp. 1–13.

13. Wen, T.H.; Vandyke, D.; Mrksic, N.; Gasic, M.; Rojas-Barahona, L.M.; Su, P.H.; Ultes, S.; Young, S. A Network-based End-to-End Trainable Task-oriented Dialogue System. In Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017; pp. 438–449.

14. Shi, W.; Yu, Z. Sentiment Adaptive End-to-End Dialog Systems. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1509–1519.

15. Mishra, K.; Firdaus, M.; Ekbal, A. Please be polite: Towards building a politeness adaptive dialogue system for goal-oriented conversations. Neurocomputing 2022, 494, 242–254.

16. Brown, P.; Levinson, S.C. Politeness: Some Universals in Language Usage; Cambridge University Press: Cambridge, UK, 1987; Volume 4.

17. Niu, T.; Bansal, M. Polite Dialogue Generation Without Parallel Data. In Proceedings of the European Conference on Information Retrieval, Padua, Italy, 20–23 March 2018; Springer: Cham, Switzerland, 2018; pp. 810–817.

18. Peng, D.; Zhou, M.; Liu, C.; Ai, J. Human–machine dialogue modelling with the fusion of word- and sentence-level emotion. Knowl.-Based Syst. 2019, 192, 105319.

19. Iordache, C.P.; Trausan-Matu, S. Analysis and prediction of politeness in conversations. In Proceedings of the International Conference on Human Computer Interaction, Bucharest, Romania, 16–17 September 2021; pp. 15–20.

20. Zhang, J.; Chang, J.P.; Danescu-Niculescu-Mizil, C. Conversations Gone Awry: Detecting Early Signs of Conversational Failure. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Melbourne, Australia, 15–20 July 2018; pp. 1350–1361.

21. Chang, J.P.; Danescu-Niculescu-Mizil, C. Trouble on the Horizon: Forecasting the Derailment of Online Conversations as they Develop. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 1–12.

22. Aubakirova, M.; Bansal, M. Interpreting Neural Networks to Improve Politeness Comprehension. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Austin, TX, USA, 1–5 November 2016; pp. 2035–2041.

23. Li, M.; Hickman, L.; Tay, L.; Ungar, L.; Guntuku, S.C. Studying Politeness across Cultures using English Twitter and Mandarin Weibo. In Proceedings of the CSCW, Virtual, 17–21 October 2020; pp. 1–15.

24. Lee, J.G.; Lee, K.M. Polite speech strategies and their impact on drivers’ trust in autonomous vehicles. Comput. Hum. Behav. 2022, 127, 107015.

25. Mishra, K.; Firdaus, M.; Ekbal, A. Predicting Politeness Variations in Goal-Oriented Conversations. IEEE Trans. Comput. Soc. Syst. 2022, 10, 1–10.

26. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; pp. 1–11.

27. Ji, S.; Zhang, T.; Ansari, L.; Fu, J.; Tiwari, P.; Cambria, E. MentalBERT: Publicly Available Pretrained Language Models for Mental Healthcare. In Proceedings of the Thirteenth Language Resources and Evaluation Conference, Marseille, France, 20–25 June 2022; European Language Resources Association: Paris, France, 2022; pp. 7184–7190.

28. Yang, Z.; Yang, D.; Dyer, C.; He, X.; Smola, A.; Hovy, E. Hierarchical Attention Networks for Document Classification. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1480–1489.

29. Sarkar, D.; Zampieri, M.; Ranasinghe, T.; Ororbia, A. fBERT: A Neural Transformer for Identifying Offensive Content. In Findings of the Association for Computational Linguistics: EMNLP 2021, Punta Cana, Dominican Republic, 7–11 November 2021; pp. 1792–1798.

30. Caselli, T.; Basile, V.; Mitrović, J.; Granitzer, M. HateBERT: Retraining BERT for Abusive Language Detection in English. In Proceedings of the 5th Workshop on Online Abuse and Harms, Online, August 2021; pp. 17–25.

| Dataset | Dataset Size | #Polite | #Impolite | #Neutral |
|---|---|---|---|---|
| D₁ | 4353 | 1089 | 1089 | 2175 |
| D₂ | 8254 | 3302 | 1651 | 3301 |

| Hyperparameter | Value |
|---|---|
| Model learning rate | |
| Batch size | 16 |
| Loss function | Categorical cross-entropy |
| Optimization algorithm | Adam |
| Dropout | |
| Epochs | 20 |
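
The table lists categorical cross-entropy as the loss and Adam as the optimizer. Both can be written out in a few lines of plain Python, shown below as a sketch rather than the authors' training code. The loss is averaged over the examples passed in, which with a batch size of 16 means averaging over 16 examples per update. The Adam defaults (β1 = 0.9, β2 = 0.999, ε = 1e-8) follow Kingma and Ba's original paper, and because the table's learning-rate cell is empty, the learning rate of 0.1 in the toy run is a hypothetical value chosen only so the quadratic example converges quickly.

```python
import math

def categorical_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean over examples of -sum_k y_k * log(p_k); eps guards against log(0)."""
    total = 0.0
    for t_row, p_row in zip(y_true, y_pred):
        total -= sum(t * math.log(max(p, eps)) for t, p in zip(t_row, p_row))
    return total / len(y_true)

class Adam:
    """Textbook Adam update for a flat list of scalar parameters."""

    def __init__(self, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m, self.v, self.t = None, None, 0

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g      # 1st moment
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g  # 2nd moment
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)  # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated

# One-hot target for the second class; the loss equals -ln(0.7).
print(categorical_cross_entropy([[0, 1, 0]], [[0.2, 0.7, 0.1]]))

# Toy run: minimise f(x) = (x - 3)^2, whose gradient is 2 (x - 3).
x = [0.0]
opt = Adam(lr=0.1)
for _ in range(1000):
    x = opt.step(x, [2.0 * (x[0] - 3.0)])
print(round(x[0], 3))
```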

| Method | D₁ Accuracy | D₂ Accuracy |
|---|---|---|
| Proposed Model [BERT] | 0.91 | 0.87 |
| Proposed Model [RoBERTa] | 0.92 | 0.84 |
| Aubakirova and Bansal [22] | 0.85 | 0.66 |
| Mizil et al. [8] | 0.83 | 0.78 |
| fBERT [29] | 0.90 | 0.87 |
| HateBERT [30] | 0.89 | 0.83 |
| ANN | 0.87 | 0.82 |
| BiLSTM | 0.88 | 0.76 |
| LSTM | 0.87 | 0.76 |
| BiGRU | 0.88 | 0.78 |
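
The comparative results can be read more directly by computing, for each dataset, the margin of each proposed variant over the strongest competing method. The short script below performs only that arithmetic on the accuracies transcribed from the table; it adds no new results, and the function name `margin_over_best_baseline` is hypothetical.

```python
# Accuracies transcribed from the comparative-evaluation table
# (first dataset column, second dataset column).
RESULTS = {
    "Proposed Model [BERT]": (0.91, 0.87),
    "Proposed Model [RoBERTa]": (0.92, 0.84),
    "Aubakirova and Bansal [22]": (0.85, 0.66),
    "Mizil et al. [8]": (0.83, 0.78),
    "fBERT [29]": (0.90, 0.87),
    "HateBERT [30]": (0.89, 0.83),
    "ANN": (0.87, 0.82),
    "BiLSTM": (0.88, 0.76),
    "LSTM": (0.87, 0.76),
    "BiGRU": (0.88, 0.78),
}

def margin_over_best_baseline(results, proposed_prefix="Proposed"):
    """Per dataset column: the strongest non-proposed method, its accuracy,
    and each proposed variant's accuracy difference from it."""
    n_cols = len(next(iter(results.values())))
    report = []
    for col in range(n_cols):
        baselines = {k: v[col] for k, v in results.items()
                     if not k.startswith(proposed_prefix)}
        best_name = max(baselines, key=baselines.get)
        best_acc = baselines[best_name]
        margins = {k: round(v[col] - best_acc, 2) for k, v in results.items()
                   if k.startswith(proposed_prefix)}
        report.append((best_name, best_acc, margins))
    return report

report = margin_over_best_baseline(RESULTS)
for col, (name, acc, margins) in enumerate(report, start=1):
    print(f"Dataset {col}: best baseline {name} ({acc}); margins {margins}")
```

On these values fBERT is the strongest baseline on both datasets: the RoBERTa variant leads it by 0.02 on the first dataset but trails it by 0.03 on the second, while the BERT variant leads by 0.01 on the first and ties on the second.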

| Method | D₁ Accuracy | D₂ Accuracy |
|---|---|---|
| Proposed Model [BERT] | 0.91 | 0.87 |
| Proposed Model [RoBERTa] | 0.92 | 0.84 |
| Proposed Model [BERT] (without FNN) | 0.89 | 0.86 |
| Proposed Model [RoBERTa] (without FNN) | 0.89 | 0.87 |
| Proposed Model [BERT] (without Attention) | 0.91 | 0.86 |
| Proposed Model [RoBERTa] (without Attention) | 0.91 | 0.83 |
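
The ablation table is easier to interpret as accuracy changes relative to the full model. The script below computes those differences from the transcribed values (ablated minus full, per dataset column); it is plain arithmetic on the table, and the helper name `ablation_deltas` is hypothetical.

```python
# Full-model accuracies and ablated variants, transcribed from the ablation table
# (first dataset column, second dataset column).
FULL = {"BERT": (0.91, 0.87), "RoBERTa": (0.92, 0.84)}
ABLATED = {
    ("BERT", "without FNN"): (0.89, 0.86),
    ("RoBERTa", "without FNN"): (0.89, 0.87),
    ("BERT", "without Attention"): (0.91, 0.86),
    ("RoBERTa", "without Attention"): (0.91, 0.83),
}

def ablation_deltas(full, ablated):
    """Accuracy change (ablated minus full) per dataset column; a negative
    value means removing the component lowered accuracy."""
    return {
        key: tuple(round(a - f, 2) for a, f in zip(accs, full[key[0]]))
        for key, accs in ablated.items()
    }

deltas = ablation_deltas(FULL, ABLATED)
for (encoder, variant), change in deltas.items():
    print(f"{encoder} {variant}: {change}")
```

Read this way, removing the FNN lowers both BERT columns and the first RoBERTa column but raises RoBERTa's accuracy on the second dataset by 0.03, whereas removing attention changes accuracy by at most 0.01 in any column.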

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Khan, S.; Fazil, M.; Imoize, A.L.; Alabduallah, B.I.; Albahlal, B.M.; Alajlan, S.A.; Almjally, A.; Siddiqui, T. Transformer Architecture-Based Transfer Learning for Politeness Prediction in Conversation. Sustainability 2023, 15, 10828. https://doi.org/10.3390/su151410828

Khan S, Fazil M, Imoize AL, Alabduallah BI, Albahlal BM, Alajlan SA, Almjally A, Siddiqui T. Transformer Architecture-Based Transfer Learning for Politeness Prediction in Conversation. Sustainability. 2023; 15(14):10828. https://doi.org/10.3390/su151410828

Chicago/Turabian Style: Khan, Shakir, Mohd Fazil, Agbotiname Lucky Imoize, Bayan Ibrahimm Alabduallah, Bader M. Albahlal, Saad Abdullah Alajlan, Abrar Almjally, and Tamanna Siddiqui. 2023. "Transformer Architecture-Based Transfer Learning for Politeness Prediction in Conversation" Sustainability 15, no. 14: 10828. https://doi.org/10.3390/su151410828

APA Style: Khan, S., Fazil, M., Imoize, A. L., Alabduallah, B. I., Albahlal, B. M., Alajlan, S. A., Almjally, A., & Siddiqui, T. (2023). Transformer Architecture-Based Transfer Learning for Politeness Prediction in Conversation. Sustainability, 15(14), 10828. https://doi.org/10.3390/su151410828