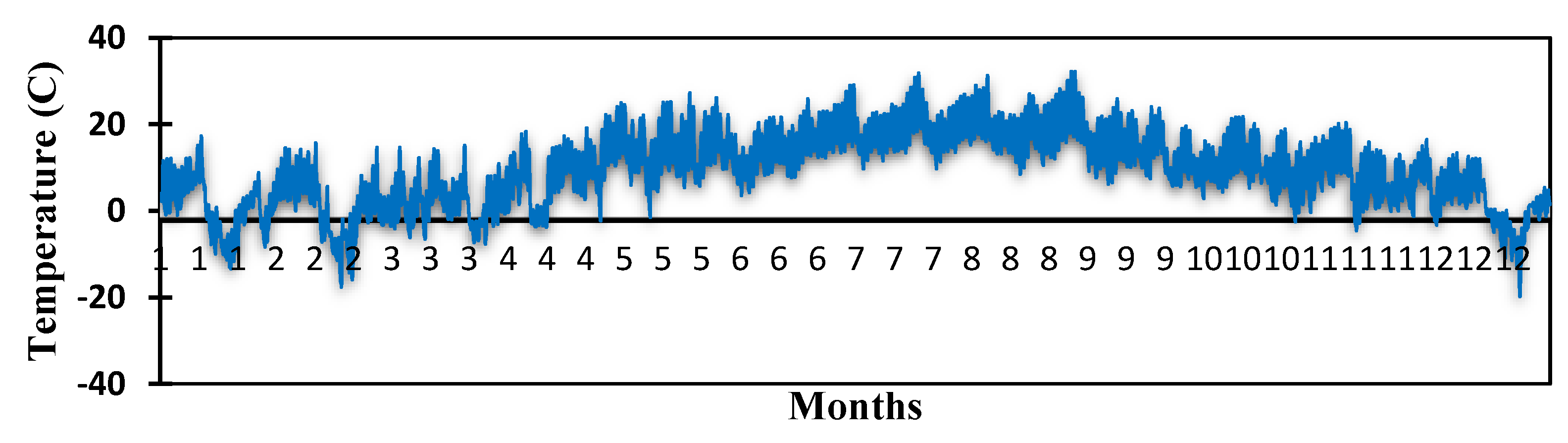

This section examines and discusses the performance of the proposed approach in detail. Because climatic challenges are diverse, the experiments are designed to simulate the effect of surrounding climatic conditions on solar panels, based on Equation (12).

3.1. Classification Performance Results

For the first classification case, we used the original dataset without any preprocessing or added noise. For the second classification case, we trained on the motion-blurred dataset, with upsampling applied to the partial class. The aim of the second case is to determine whether motion-blurred data are valid for training, and whether such data can be relied upon to obtain better test results under extreme climatic conditions.

The dataset used in this study is divided into 60% for training, 20% for validation, and 20% for testing.
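As an illustration of the 60/20/20 division, the sketch below splits labeled samples into the three subsets. It assumes a stratified split (each class divided separately so all subsets keep the class proportions), which the text does not state; the function name `split_60_20_20`, the seed, and the placeholder file names are hypothetical.

```python
import random
from collections import defaultdict


def split_60_20_20(samples, labels, seed=0):
    """Split samples into 60% train, 20% validation, 20% test.

    Assumption: the split is stratified, i.e. applied separately to each
    class so every subset keeps the class proportions of the full dataset.
    """
    by_class = defaultdict(list)
    for sample, label in zip(samples, labels):
        by_class[label].append(sample)

    rng = random.Random(seed)  # fixed seed for a reproducible split
    train, val, test = [], [], []
    for label, items in by_class.items():
        rng.shuffle(items)
        n_train = round(0.6 * len(items))
        n_val = round(0.2 * len(items))
        train += [(s, label) for s in items[:n_train]]
        val += [(s, label) for s in items[n_train:n_train + n_val]]
        test += [(s, label) for s in items[n_train + n_val:]]
    return train, val, test


# Hypothetical example: 50 placeholder file names per class.
classes = ["all_snow", "no_snow", "partial"]
samples = [f"img_{i:03d}.png" for i in range(150)]
labels = [classes[i % 3] for i in range(150)]
train, val, test = split_60_20_20(samples, labels)
print(len(train), len(val), len(test))  # 90 30 30
```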

Figure 10 shows the accuracies and losses of all compared architectures for the first proposed approach. For training, Figure 10a,c illustrate the accuracy and loss results, respectively, of VGG-16, VGG-19, RESNET-18, RESNET-50, and RESNET-101. The learning behavior of VGG-16 is more stable, with less fluctuation than VGG-19: it reaches a steady state before 100 iterations, whereas VGG-19 reaches stability only after 183 iterations. RESNET-101 outperforms RESNET-18 and RESNET-50 by reaching a steady state in fewer iterations (approximately 60). The training performance also shows that the BIDA-CNN model, built as a series network, outperforms the other models, which supports its effectiveness for fast learning. The learning behavior of the BIDA-CNN model is steadier, with less fluctuation than RESNET. The performance of the BIDA-CNN model is close to that of VGG, but VGG cannot be relied upon in the later cases because its large number of layers may cause image features to be lost.
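The text reports the iteration at which each model "reaches a steady state" without defining the criterion. The sketch below shows one hypothetical way to make that measurable: the first iteration after which the training-accuracy curve never leaves a band of width `tol`. The function name, the band criterion, and the tolerance are assumptions for illustration, not the authors' definition.

```python
def steady_state_iteration(curve, tol=0.02):
    """Return the first 1-based iteration after which `curve` stays in a band.

    Hypothetical criterion: from that iteration to the end of training, the
    values never differ by more than `tol` (e.g. 0.02 = 2 accuracy points).
    """
    n = len(curve)
    lo = hi = curve[-1]
    first = n  # the last iteration alone always satisfies the criterion
    # Walk backwards, tracking the min/max of the remaining suffix.
    for i in range(n - 1, -1, -1):
        lo = min(lo, curve[i])
        hi = max(hi, curve[i])
        if hi - lo > tol:
            break
        first = i + 1
    return first


# Toy accuracy curve (fractions), not taken from Figure 10.
curve = [0.30, 0.60, 0.90, 0.97, 1.00, 0.99, 1.00, 1.00]
print(steady_state_iteration(curve))  # 5
```

Because the band can only widen as earlier iterations are included, the backward scan can stop at the first violation.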

Validation is performed after every iteration for all models used in the analysis, and no underfitting or overfitting was observed during validation.

Figure 10b shows the validation accuracies. The minimum and maximum accuracies are 60% and 100% for VGG-16 and 80% and 100% for VGG-19, while the median and mode are 99.18% and 100% for VGG-16 and 100% and 100% for VGG-19. The minimum and maximum accuracies are 43.75% and 100% for RESNET-18, 68.75% and 100% for RESNET-50, and 26.25% and 100% for RESNET-101; the median and mode are 100% and 100% for all three RESNET models. The minimum, maximum, median, and mode for the BIDA-CNN model are 20%, 100%, 100%, and 100%, respectively.
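The four summary statistics above can be computed from a per-iteration validation-accuracy history with Python's standard `statistics` module, as in this minimal sketch. The function name and the toy history are hypothetical; rounding before taking the mode is a design choice so that accuracies differing only by floating-point noise are counted as equal.

```python
import statistics


def summarize_validation(acc_percent):
    """Min, max, median and mode of a validation-accuracy history (in %)."""
    # Round first: statistics.mode counts exact matches, so tiny float noise
    # would otherwise split identical accuracies into separate values.
    values = [round(a, 2) for a in acc_percent]
    return {
        "min": min(values),
        "max": max(values),
        "median": statistics.median(values),
        "mode": statistics.mode(values),
    }


# Toy history, not taken from the experiments.
history = [20.0, 60.0, 100.0, 100.0, 100.0]
print(summarize_validation(history))
```

A minimum far below the median, as for several models in Figure 10b, typically reflects the first few validation checks before the model has learned much.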

Figure 10d shows that the loss results confirm the accuracy results; the loss of VGG-16 fluctuates strongly at the beginning of learning.

Table 3 and Table 4 show the numerical results and the evaluation metrics of all compared architectures for the first proposed approach (original data). The experiment runs for 30 epochs and 690 iterations (27 iterations (I) for each epoch (E)), and the mini-batch accuracy results are reported for the last iterations of the last 5 epochs. The best validation accuracy is 100% for all models except RESNET-101. The lowest mini-batch loss, 0, is obtained by VGG-19, which also yields the lowest validation loss, 1.4901 × 10⁻⁸. The learning rate (LR) for all architectures is 0.0003. The lowest mean validation loss over all iterations, 0.01865, is achieved by the BIDA-CNN model.
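To make the "mean validation loss over all iterations" criterion concrete, the sketch below ranks models by the arithmetic mean of their recorded validation-loss histories. That reading of the metric is an assumption (the original symbol was lost in extraction), and the model names and loss values are hypothetical.

```python
import statistics


def rank_by_mean_validation_loss(histories):
    """Rank models by the mean of their validation-loss history.

    Assumption: the "mean" figure is the arithmetic mean of the validation
    loss recorded at every validation check (here, every iteration).
    """
    means = {name: statistics.mean(losses) for name, losses in histories.items()}
    return sorted(means.items(), key=lambda item: item[1])


# Hypothetical loss curves for two placeholder models.
histories = {
    "model_a": [0.90, 0.20, 0.05, 0.01],
    "model_b": [0.60, 0.10, 0.02, 0.00],
}
ranking = rank_by_mean_validation_loss(histories)
print(ranking[0][0])  # model_b
```

Unlike the single lowest loss, this average also penalizes high losses early in training, so it rewards models that converge quickly and stay stable.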

The evaluation metrics and testing accuracies used to determine model quality are presented in Table 4, which lists the sensitivity, precision, F1-score, and final testing accuracy. The five CNN-based models (VGG-16, VGG-19, RESNET-18, RESNET-50, and RESNET-101), as well as the BIDA-CNN model, achieved a testing accuracy of 100% in the first case. Comparing Table 3 with Figure 10 reveals three findings: (i) with an excessive number of convolutional layers, the input loses some important features, which affects training and testing; (ii) the skip connections of RESNET do not always provide the network with informative features that would raise training accuracy and thus yield high results; and (iii) the high performance of all models may result from the clean dataset. Therefore, motion blur is applied to simulate extreme climate and make the analysis more challenging. To compensate, the minority class is upsampled, so that the added difficulty introduced by motion blur does not leave the dataset with too little information about that class.
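The minority-class upsampling mentioned above could, for example, add rotated and flipped copies of the existing minority images until every class is as large as the biggest one. The sketch below does this on images stored as lists of rows. The specific augmentation set, and reading "inverting" as mirror flipping, are assumptions; the helper names are hypothetical.

```python
from collections import Counter
from itertools import cycle, product


def rot90(img):
    """Rotate a 2-D image (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def hflip(img):
    """Mirror a 2-D image left to right."""
    return [row[::-1] for row in img]


# Assumption: "inverting" is read as mirror flipping; other choices are possible.
AUGMENTATIONS = [rot90, hflip, lambda im: rot90(rot90(im)), lambda im: hflip(rot90(im))]


def upsample_minority(dataset):
    """Add augmented copies until every class matches the largest class.

    dataset: list of (image, label) pairs. Originals are kept unchanged.
    """
    counts = Counter(label for _, label in dataset)
    target = max(counts.values())
    balanced = list(dataset)
    for label, n in counts.items():
        originals = [img for img, y in dataset if y == label]
        # Cycle through every (augmentation, image) pair before repeating one.
        pairs = cycle(product(AUGMENTATIONS, originals))
        for _ in range(target - n):
            augment, img = next(pairs)
            balanced.append((augment(img), label))
    return balanced


# Tiny 2x2 toy "images": three no_snow samples, one partial sample.
data = [([[1, 2], [3, 4]], "no_snow")] * 3 + [([[5, 6], [7, 8]], "partial")]
print(Counter(y for _, y in upsample_minority(data)))
```

Using geometric transforms rather than plain duplication gives the network new pixel arrangements of the same class, which is the usual motivation for augmentation-based upsampling.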

When evaluating models, it is essential to use several evaluation metrics, because a model may perform satisfactorily on one metric but unsatisfactorily on another. Using multiple metrics helps ensure that a model is functioning correctly and to its full potential.

A confusion matrix is a table that summarizes the performance of a classification model by cross-tabulating actual classes against predicted classes; it can also be displayed graphically.
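A confusion matrix can be built by counting, for each test sample, the pair (actual class, predicted class). This minimal sketch uses rows for actual classes and columns for predicted classes, reuses the three class names from the text, and feeds it hypothetical labels; the function name is illustrative.

```python
def confusion_matrix(y_true, y_pred, classes):
    """Count predictions: rows are actual classes, columns predicted classes."""
    index = {name: i for i, name in enumerate(classes)}
    cm = [[0] * len(classes) for _ in classes]
    for actual, predicted in zip(y_true, y_pred):
        cm[index[actual]][index[predicted]] += 1
    return cm


classes = ["all_snow", "no_snow", "partial"]
# Hypothetical labels for four test images.
y_true = ["all_snow", "no_snow", "partial", "partial"]
y_pred = ["all_snow", "no_snow", "no_snow", "partial"]
for name, row in zip(classes, confusion_matrix(y_true, y_pred, classes)):
    print(f"{name:>9}", row)
```

Correct predictions accumulate on the diagonal; the single off-diagonal count here is a partial image predicted as no_snow.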

Figure 11a shows the evaluation of the proposed solar panel classification models using confusion-matrix-based performance metrics. The confusion matrix compares actual and predicted classes by displaying the numbers of true positives, true negatives, false positives, and false negatives, from which the sensitivity, specificity, precision, and overall accuracy can be calculated. As Figure 11a shows, the five CNN-based models and the BIDA-CNN model achieved high accuracies, providing accurate diagnostic performance on the solar panel dataset.
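For a multi-class problem such as all_snow/no_snow/partial, the true/false positive and negative counts are usually taken one-vs-rest for each class. The sketch below derives sensitivity, specificity, precision, and F1-score per class, plus overall accuracy, from a square confusion matrix (rows actual, columns predicted). The matrix values are hypothetical and not experimental results.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics per class from a square confusion matrix.

    cm[i][j] counts samples of actual class i predicted as class j.
    """
    total = sum(map(sum, cm))
    metrics = []
    for k in range(len(cm)):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                 # class k predicted as something else
        fp = sum(row[k] for row in cm) - tp  # other classes predicted as k
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn) if tp + fn else 0.0
        specificity = tn / (tn + fp) if tn + fp else 0.0
        precision = tp / (tp + fp) if tp + fp else 0.0
        f1 = (2 * precision * sensitivity / (precision + sensitivity)
              if precision + sensitivity else 0.0)
        metrics.append({"sensitivity": sensitivity, "specificity": specificity,
                        "precision": precision, "f1": f1})
    accuracy = sum(cm[k][k] for k in range(len(cm))) / total
    return metrics, accuracy


# Hypothetical 3-class matrix (all_snow, no_snow, partial), not experimental data.
cm = [[10, 0, 0],
      [0, 9, 1],
      [0, 2, 8]]
per_class, accuracy = per_class_metrics(cm)
print(round(accuracy, 3))  # 0.9
```

When every off-diagonal entry is zero, all four per-class metrics and the overall accuracy equal 1, which is the situation reported for the testing results here.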

Figure 11b shows the results obtained by the VGG-16, VGG-19, RESNET-18, RESNET-50, and RESNET-101 models, as well as the proposed BIDA-CNN model: no sample from the all_snow, no_snow, or partial classes was misclassified. Although RESNET-101 struggled during training, it missed no labels during testing, because the classification probabilities in the softmax layer were high (approximately above 95%), as presented in Figure 12. Note that the confusion matrix is computed on the 20% of the dataset reserved for testing.

In the second proposed approach, upsampling is applied to the imbalanced classes that have few samples. The partial class in the dataset contains too few samples to support good prediction performance; this can be remedied with techniques such as rotating and inverting the images. Upsampling aims to increase the variability and uniformity of the data used by the CNN models, which helps the models learn more about the input space. In addition, this approach simulates the challenges faced by surveillance cameras when detecting the condition of solar panels: motion blur is applied to the original dataset, with linear motion across 21 pixels at an angle of 11 degrees.
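Linear motion blur is commonly modeled by convolving the image with a line-shaped kernel (point-spread function). The pure-Python sketch below builds such a kernel for the stated 21 pixels and 11 degrees, using nearest-pixel rasterization, and applies it with replicated borders. The rasterization rule, the counter-clockwise angle convention, and the border handling are assumptions; library routines such as MATLAB's `fspecial('motion', len, theta)` use the same parameterization but may weight the pixels differently.

```python
import math


def motion_blur_kernel(length=21, angle_deg=11.0):
    """Linear-motion point-spread function: a centred line of `length` pixels.

    Pixels are set by nearest-pixel rasterization of the line, then the
    kernel is normalized to sum to 1 so overall brightness is preserved.
    Assumption: the angle is counter-clockwise from the horizontal axis.
    """
    size = length if length % 2 else length + 1  # odd size gives a centre pixel
    c = size // 2
    half = (length - 1) / 2
    theta = math.radians(angle_deg)
    dx, dy = math.cos(theta), -math.sin(theta)  # minus: image rows grow downward
    kernel = [[0.0] * size for _ in range(size)]
    steps = 4 * length  # dense sampling so no pixel along the line is skipped
    for s in range(steps + 1):
        t = -half + 2 * half * s / steps
        kernel[c + round(t * dy)][c + round(t * dx)] = 1.0
    total = sum(map(sum, kernel))
    return [[v / total for v in row] for row in kernel]


def apply_kernel(img, kernel):
    """2-D convolution with replicated borders; output has the input's size."""
    h, w, c = len(img), len(img[0]), len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for u, krow in enumerate(kernel):
                for v, weight in enumerate(krow):
                    if weight:
                        y = min(max(i - (u - c), 0), h - 1)
                        x = min(max(j - (v - c), 0), w - 1)
                        acc += weight * img[y][x]
            out[i][j] = acc
    return out


kernel = motion_blur_kernel(21, 11)
# Toy grayscale image with vertical stripes, smeared along the motion line.
image = [[255.0 * ((x // 4) % 2) for x in range(32)] for _ in range(32)]
blurred = apply_kernel(image, kernel)
```

Normalizing the kernel to sum to 1 means flat regions keep their brightness and only edges are smeared, which is what makes this a blur rather than a brightness change.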

The balanced dataset is used to train the five implemented models and the BIDA-CNN model. The accuracy and loss results of VGG-16, VGG-19, RESNET-18, RESNET-50, and RESNET-101 are shown in Figure 13a and Figure 13c, respectively. From a learning-behavior perspective, VGG-16 is more stable, with less fluctuation than VGG-19, and converges faster, reaching a lasting steady state at around 250 iterations; VGG-19, although it first stabilizes after 167 iterations, fluctuates again between iterations 390 and 470. RESNET-101 outperforms RESNET-18 and RESNET-50 by reaching a steady state within 100 iterations. The proposed BIDA-CNN model achieved faster learning and higher operation speed than the other models, reaching a steady state at 260 iterations; furthermore, it showed fewer fluctuations than the other models, as observed in Figure 13c.

20% of the dataset is used for validation. As in the first experiment, validation is performed after every iteration for all models, and no underfitting or overfitting was observed. The validation accuracies of all models, shown in Figure 13b, improve over the iterations. The minimum and maximum accuracies are 36.67% and 100% for VGG-16 and 17.78% and 100% for VGG-19, while the median and mode are 98.89% and 98.89% for VGG-16 and 100% and 100% for VGG-19. The minimum and maximum accuracies are 17.78% and 100% for RESNET-18, 18.89% and 98.89% for RESNET-50, and 35.56% and 98.89% for RESNET-101; the median and mode are 96.67% and 98.89% for RESNET-18, 91.11% and 92.22% for RESNET-50, and 91.11% and 93.33% for RESNET-101.

Figure 13d shows that the loss results confirm the validation accuracy results; the lowest mini-batch loss and the lowest validation loss were both obtained by VGG-19. The minimum, maximum, median, and mode of the validation accuracy for the BIDA-CNN model are 21.11%, 100%, 100%, and 100%, respectively, which indicates that it outperforms the other models used in this experiment. Furthermore, the BIDA-CNN model outperformed the other five models with the lowest mean validation loss over all iterations, 0.03797.

Comparing Figure 10 and Figure 13 reveals three findings: (i) motion blur increased the fluctuation in the training process; (ii) motion blur made the validation accuracy unstable in networks with many layers (RESNET-18, RESNET-50, and RESNET-101), with fluctuations persisting until the end of validation; however, these fluctuations remained between 90% and 100%, corresponding to high softmax probabilities for the correct class, and all classification categories were correct, with a variable probability that always favored the correct class; and (iii) a deblurring model is the ideal solution under extreme climates, since it yields clean, clear data that can be fed to classification and detection models to achieve high performance.

To determine model quality, the evaluation metrics and testing accuracies for all models are reported in Table 5 and Table 6, which present the numerical results and evaluation metrics of all compared architectures for the second proposed approach. The experiment runs for 30 epochs and 810 iterations (27 iterations (I) for each epoch (E)), and the mini-batch accuracy for the last 5 epochs is 100%. The best validation accuracy, 100%, is obtained by the VGG-16, VGG-19, and BIDA-CNN models. The lowest mini-batch loss, 3.5763 × 10⁻⁸, is obtained by VGG-19, which also yields the lowest validation loss, 2.0662 × 10⁻⁶. The learning rate (LR) for all architectures is 0.0003. Based on the mean validation loss over all iterations, the best model in this experiment is the BIDA-CNN model, with 0.03797. In addition, all models recorded 100% overall precision, sensitivity, F1-score, and accuracy in testing. A high accuracy does not mean that the predicted probability is 100%; the probabilities range from 50% to 99%. Our aim is therefore to propose models that increase the probability of correct predictions, through a mathematical model that improves feature extraction so that more informative features are passed to the decision layer.
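The distinction between accuracy and predicted probability can be shown in a few lines: accuracy only checks whether the highest-probability class is correct, while the winning probability itself can be far from 100%. The softmax outputs below are hypothetical, and the function name is illustrative.

```python
def accuracy_and_confidence(probs, targets):
    """Accuracy of argmax predictions plus mean and minimum winning probability."""
    confidences, correct = [], 0
    for p, target in zip(probs, targets):
        predicted = max(range(len(p)), key=lambda k: p[k])  # argmax
        correct += predicted == target
        confidences.append(p[predicted])
    n = len(targets)
    return correct / n, sum(confidences) / n, min(confidences)


# Hypothetical softmax outputs for three test images (classes 0, 1, 2).
probs = [[0.55, 0.30, 0.15],
         [0.05, 0.94, 0.01],
         [0.20, 0.10, 0.70]]
acc, mean_conf, min_conf = accuracy_and_confidence(probs, [0, 1, 2])
print(acc, min_conf)  # 1.0 0.55
```

All three predictions are correct, so accuracy is 100%, yet one image is classified with only 55% probability; raising such margins is what better feature extraction aims to achieve.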

In Figure 14, the diagonal entries of each confusion matrix are the true positives, that is, the samples whose class the model predicted correctly; entries off the diagonal are incorrect predictions. Figure 14a shows that no samples were missed in any class, noting that the confusion matrix is computed on the 20% of the dataset reserved for testing.

Figure 14b shows the distribution of the data over the classes, indicating which classes are predicted well and which struggle with noise and outliers; each tested model yields a different distribution of predictions. The classes are presented as a histogram in which each bar corresponds to one class. As Figure 14b shows, all classes are predicted correctly by all models, with no misclassification.

In this experiment, the softmax activation function is used in the output layer of the tested architectures. The softmax function converts the weighted-sum outputs of the previous layer into probabilities that add up to one, and the predicted class is the one with the highest probability. The predicted class probabilities from the output layer are then compared with the desired target. Cross-entropy is commonly used to measure the difference between the expected and predicted multinomial probability distributions, and this difference is then used to update the model (see Equations (9)–(11)).
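The sketch below shows the standard softmax and categorical cross-entropy, plus the well-known gradient of their combination with respect to the logits (predicted probabilities minus the one-hot target), which is the "difference" that drives the update. Equations (9)–(11) are not reproduced in this section, so their exact notation may differ; the logits and function names are hypothetical.

```python
import math


def softmax(logits):
    """Map raw scores to probabilities that sum to one."""
    m = max(logits)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, probs, eps=1e-12):
    """Categorical cross-entropy between a one-hot target and predicted probs."""
    return -sum(t * math.log(max(p, eps)) for t, p in zip(target, probs))


def logit_gradient(target, probs):
    """Gradient of softmax + cross-entropy w.r.t. the logits: probs - target."""
    return [p - t for p, t in zip(probs, target)]


logits = [2.0, 1.0, 0.1]  # hypothetical scores for all_snow, no_snow, partial
target = [1, 0, 0]        # true class: all_snow
probs = softmax(logits)
loss = cross_entropy(target, probs)
grad = logit_gradient(target, probs)
```

With a one-hot target the loss reduces to the negative log of the probability assigned to the true class, so it approaches zero only as that probability approaches one.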

Figure 15 shows the practical investigation of this activation function.