Critical Analysis of the GreenMetric World University Ranking System: The Issue of Comparability

Abstract

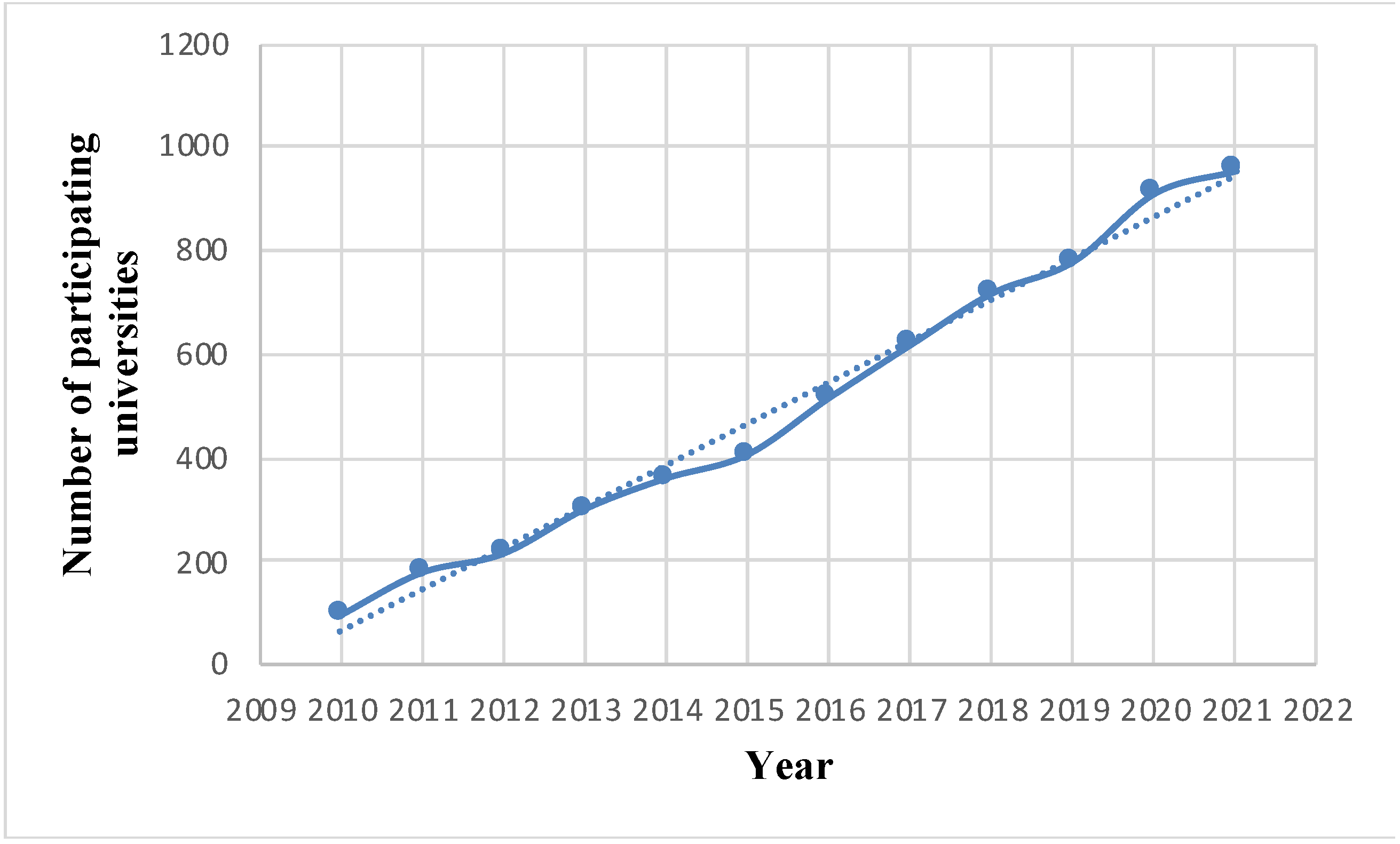

1. Introduction

- Arthur Mol, Rector Magnificus of Wageningen University & Research (ranked first in 2021), said in an interview, “Of course, we are thrilled with the first place. And it would be great if we were surpassed because this would mean that other universities work on sustainability even harder than we do. But it won’t be easy, because sustainability is embedded in the genes of our students and employees”.

- John Atherton, Pro Vice Chancellor and Chair of the University of Nottingham Sustainability Committee said in an interview, “The University of Nottingham is delighted to once again be recognised for the hard work it is doing to embed environmental sustainability across the institution and make it an integral part of our education and research. Our global research programme includes a focus on sustainable food production and future food security, green chemicals to tackle greenhouse gases, and sustainable propulsion”.

- Yanike Sophie, coordinator of the Green Office at the University of Groningen, embraces the high participation of students and employees of the RUG saying, “Our projects only work because of this high involvement”.

- Camille Kirk, director of the Office of Sustainability at UC Davis, said, “Being internationally recognized again for our leadership gives every Aggie a chance to pause and feel pride in the commitment and investment that UC Davis has made in sustainability”.

2. Materials and Methods

- “Setting and Infrastructure” (SI);

- “Energy and Climate Change” (EC);

- “Waste” (WS);

- “Water” (WR);

- “Transportation” (TR);

- “Education and Research” (ED).

3. Results and Discussions

3.1. Critical Detailed Review of the Items Considered

- Number of renewable energy sources on campus (EC3): According to the UI GreenMetric ranking system, an HEI obtains a higher score simply by increasing the variety of renewable energy sources employed. Although this may encourage some universities to employ a larger number of the renewable energy sources available, the inclusion of this item may unreasonably penalize those HEIs employing only one or a few sources of renewable energy. As a matter of fact, an HEI may record a higher score simply by adding a source of renewable energy to those already employed, regardless of the overall amount of power these sources provide. If an HEI employs an additional source of renewable energy but the overall amount of renewable power provided stays the same or even decreases, a higher score will still be obtained. Furthermore, the inclusion of this item penalizes HEIs based on their locations in the world, which strongly affect the availability of renewable energy. An HEI may be located in an area where access to renewable energy sources is much more limited than in the area where another participating HEI is located. This may lead to a lower score for the former compared with the latter, thus penalizing an HEI’s sustainability ranking simply because of its location. This item should not be attributed any sustainability score.

- Total electricity usage divided by total campus’ population (kWh per person) (EC4): The inclusion of this item seems controversial. According to the UI, the “total electricity” is to be considered, that is, not only the fraction of electrical energy produced from CO2-emitting sources but also the fraction produced from renewable sources. In practice, the aim of this item is to measure electrical energy efficiency, but when evaluating sustainability, electricity usage is more relevant for the fraction produced from non-renewable sources. This is because energy produced from renewable sources does not emit CO2 and should therefore not substantially reduce the sustainability of an HEI. Moreover, where a university hosts high-energy-demand laboratories, the electricity used for research activities should be excluded so that only community-related consumption is taken into account. This avoids penalizing a university simply because more CO2-emitting research activity is performed there. Aside from that, the methodology for computing the total campus population is not presented clearly in the questionnaire and does not seem to take into account the actual amount of time that people spend on campus activities. This time can vary widely from one university to another depending on the culture of the campus population, which strongly affects the amount of electricity used and should therefore be considered carefully in a ranking system that involves universities from all over the world. The number of daylight hours is also a relevant influencing factor. Finally, this item should be rethought by adding strategic information on its assessment and by splitting it in two to account for whether the sources are renewable or not.
As a suggestion for an improved indicator, the total electricity usage should be normalized with respect to the actual amount of time people spend on campus, and the number of daylight hours should be incorporated as well. Furthermore, the percentage of electrical energy provided by CO2-emitting sources should be given a higher weight in the calculation.

- Greenhouse gas emission reduction program (EC7): Although, in principle, setting up programs for GHG emission reduction shows good intentions for improving an HEI’s sustainability, including this item for ranking purposes can unfairly penalize universities that do not need such programs. Some universities, owing to typical weather conditions and the culture of the people attending campus activities, have very low GHG emissions and therefore need few or no programs for GHG emission mitigation, while others elsewhere may need to implement more GHG reduction programs because of their higher actual need. Therefore, the number of programs for GHG reduction should be considered as a function of the actual need of the institution along with the effectiveness of these programs, namely the effective reduction in GHG emissions upon program implementation. As a suggestion for improvement, the indicator can be amended by dividing the total number of GHG emission reduction programs by the current amount of GHG emissions. However, guidelines on how to account for the implementation of these programs in an HEI need to be clearly established, as what should count is the number of executive actions entailed by each program rather than the mere number of programs.

- Total carbon footprint divided by total campus’ population (EC8): According to the UI GreenMetric ranking system, HEIs emitting lower amounts of carbon dioxide or other GHGs obtain higher scores than those emitting more. This quantity, measured as carbon dioxide equivalent (CO2-eq), is considered in relation to the total number of people living in the HEI. Although this item is meant to reliably quantify the sustainability of an HEI, the way the total carbon footprint is computed under the official guidelines may be misleading. First, the guidelines provide a mere recommendation and not a structured, consistent procedure that each HEI must follow to arrive at a reliable estimate of its CO2-eq emissions. Thus, an HEI may follow the recommendation provided or calculate its carbon dioxide emissions with another methodology. The discrepancy among the methods followed by different HEIs can penalize an HEI with respect to others simply because of the method that was arbitrarily adopted. That aside, the recommended way of calculating the carbon dioxide emissions linked to electrical energy consumption is flawed. According to the UI GreenMetric ranking system, the total amount of electrical energy consumed in a year is multiplied by a fixed CO2 emission factor, regardless of whether the energy is produced from renewable sources or not. However, this is not always representative of the actual CO2 emissions. First, average CO2 emission factors, which take into account all the electrical energy sources employed, vary from one country to another, as reported, for instance, by the European Environment Agency [29]. Secondly, the emission factor for the electrical energy used at a campus or university should reflect the average fraction of energy not produced from renewable sources. This fraction changes according to the country where the HEI is located.
A clear example of how changing the CO2 emission factor for electrical energy strongly affects the overall carbon footprint can be found in the work of Boiocchi et al. [30]. Furthermore, the recommended list of carbon dioxide emission sources should be extended to include the emissions due to the treatment and handling of the waste, wastewater and drinking water fluxes entering and leaving the institution. For instance, an HEI may employ all kinds of sophisticated technologies for optimizing the treatment of waste and wastewater, with or without the purpose of recycling and reuse, while increasing the amount of CO2 emitted because of the amount and source of the energy those technologies consume. There is ample evidence of CO2 emissions due to power consumption by these technologies [30,31,32,33,34,35,36,37,38]. Additionally, nitrous oxide, a strong greenhouse gas with a global warming potential about 300 times that of CO2, can be emitted during the typical biological nitrogen removal processes for domestic wastewaters, depending on the treatment system’s design and operational patterns [38,39,40,41]. This in turn can affect the overall carbon footprints of campuses and should therefore be considered. Finally, it is important to keep in mind that some universities will be favored over others simply because they are in a region where more renewable energy sources are available. As a suggestion for improvement, first, the list of the HEI’s CO2-emitting sources should be extended by considering the electrical energy consumed for waste and wastewater handling. Secondly, to compute the CO2 emissions, each electrical energy consumption contribution should be multiplied by a CO2 emission factor reflecting the specific energy mix actually employed.
Finally, similar to the case of item EC7, the obtained quantity should be normalized with respect not only to the number of people living on campus but also with respect to the actual time spent therein and the availability of renewable energy sources.

- Program to reduce the use of paper and plastic on campus (WS2): When this item is considered for sustainability assessment, a mere increase in the number of programs aimed at reducing the use of paper and plastic in HEIs can yield a higher score. Although the inclusion of this item may encourage HEI administrations to create new programs, it can lead to biased evaluations of sustainability. First and foremost, the mere number of programs does not necessarily imply that they are effective. An HEI may prepare and implement several programs that then turn out to be ineffective with respect to their original scope. For example, an HEI can implement a program aimed at reducing the use of paper and plastic and then find, when comparing usage with the period before the program was in force, that it has not actually been reduced. Furthermore, when this item is used to rank HEIs’ sustainability, the comparison can be flawed. For example, an HEI may already have a very low consumption of paper and plastic and not need any programs to further reduce it, while another HEI may have a very high consumption of paper and plastic and therefore need to implement several programs to reduce it. When this item is included, the former university is penalized compared with the latter, while in reality far more paper and plastic are consumed by the latter. The number of programs to reduce the use of paper and plastic on campuses should be checked with respect to their effectiveness and considered jointly with the actual need for them within the context of the campus itself. It is also important to point out that one HEI may include a few executive actions within a single program, while another may split the same number of actions into several programs.
In this case, while the actual number of actions aimed at reducing plastic and paper usage is the same, the HEI that splits these actions into multiple programs will receive a higher score, thus deceiving (intentionally or unintentionally) the ranking system. From the guidelines, it is unclear whether the UI GreenMetric ranking system takes into account the actual number of actions included within a program rather than the number of programs. All of that should be clarified or modified in a new version of the guidelines. As a suggestion for an improved indicator, the total number of executive actions reducing the usage of paper and plastic on campus should be normalized by the current amount of paper and plastic being used by the same campus per capita. Furthermore, criteria should be established in order to evaluate whether an action is likely to be effective or not in reducing the usage of paper and plastics, rather than considering all of the actions in a program effective to the same degree.

- Sewage disposal (WS6): Different sustainability points are assigned to an HEI according to the way its wastewater is disposed of. Specifically, if wastewater is disposed of untreated into waterways, the HEI gets no points. If it is disposed of at the same destination but treated, a score is assigned. Furthermore, an HEI gets increasingly higher scores if, instead of being disposed of into waterways, its wastewater is reused, downcycled or upcycled. It can be deduced that the UI GreenMetric ranking system acknowledges the added value of the nutrients in wastewater as well as the value of the water itself by assigning higher scores to those HEIs valorizing these resources. However, the guidelines do not make clear what is meant by “water reuse” and how it differs, if at all, from “water recycling”. The two terms are often used with the same meaning. Strictly speaking, there is a subtle difference between “reuse” and “recycle”: “reuse” literally means using an already-used object as it is, without treatment, whereas “recycle” means turning an object back into its raw form before using it again [42]. Nevertheless, in the context of water, the terms “water reuse” and “water recycling” are often confused. According to some opinions, the term “water reuse” refers more specifically to the recycling of water for potable usage [43]. However, there is no univocal statement clarifying the difference between water reuse and water recycling. Anderson [44] stated that water reuse can be for agricultural, urban and industrial purposes and has been confused with recycling. Aside from the difference between reuse and recycling, downcycling and upcycling mean that the object is recycled for a lower or higher purpose, respectively, compared with its previous use.
In this context, especially if recycled for potable usage, wastewaters need to undergo heavy treatment to guarantee safe human consumption [45,46,47]. For this reason, the treatment requirements for water recycling could be higher than those for agricultural water reuse. For combined sewer systems, where black and gray waters are collected jointly [48], water recycling for potable use likely becomes inconvenient because of the high treatment requirements. Therefore, water recycling may not always be feasible to the same extent for all the HEIs taking part in the UI GreenMetric ranking system, depending on a variety of context-specific conditions that are difficult or impossible to change, such as the original construction of the sewage collection system. Furthermore, as presented in the official guidelines, higher scores are assigned if an HEI prefers water upcycling or downcycling to reuse. It is questionable whether wastewater upcycling or downcycling should always be considered more sustainable than reuse. For instance, treatments for recycling water for potable usage may not valorize wastewater nutrients such as phosphorus and nitrogen, which would otherwise be valued if the same wastewater were reused for agricultural purposes. If gray and black waters are collected separately, then the former can be more conveniently upcycled, while the latter can be more conveniently reused according to the treatment required, without the need to penalize one disposal mode over another. Based on these considerations, the sustainability assessment of different campus wastewater disposal modes should be carried out more carefully, taking into account (1) the context of the campus considered, such as the campus sewage collection system, (2) the chances and the needs for water recycling and reuse, (3) the treatment feasibility to make wastewater suitable for reuse and down- and upcycling and (4) the country-specific regulations for water reuse and recycling [49].
Specific indicators taking into account the effective valorization of both water and the nutrient contents should be elaborated.

- Water recycling program implementation (WR2): According to this item, an HEI obtains increasingly higher scores by increasing the amount of water recycled for human usage. The questionnaire allows an HEI to declare whether a program for water recycling has not been implemented yet or, if implemented, how much water has been recycled. With regard to the latter, the guidelines do not make explicit whether the amount of recycled water should be expressed as a percentage of the total recyclable water or as a percentage of the total wastewater. The questionnaire also does not seem to make room for those cases where water recycling is not feasible. Possible options for water recycling involve the collection of rainwater, gray water and black water and their treatment before delivery for some selected usage. It can be deduced that water recycling is not always feasible for all universities. First, it requires a separate wastewater collection system, where gray and black waters are collected separately from their respective sources. Unlike gray water recycling, recycling of black water or domestic wastewater (i.e., combined gray and black waters) for potable or other domestic usage requires very sophisticated treatments and may become inconvenient or hard to carry out. Unless an HEI is under construction, it is very challenging to completely overhaul a wastewater collection system. An HEI can therefore be penalized compared with another simply because of original construction choices, which are not easily reversible. Secondly, the recycling of rainwater can be carried out only in those places where rainfall is significant. Based on these considerations, recycling water is not possible to the same extent for all HEIs because of their locations and irreversible construction characteristics.
The ranking system does not offer any option for those universities where recycling is simply not feasible or can be carried out only marginally. Although this parameter is very important as it encourages HEIs to recycle more and more water, this should be evaluated while taking into account the actual feasibility of water recycling technologies within the context of the HEIs in order to eliminate biases linked to HEI construction or location. An improved indicator should consider the number of executive actions implemented for water recycling divided by the actual amount of water that is recyclable, which does not correspond to 100% of the total water consumption.

- The total number of vehicles (cars and motorcycles) divided by the total campus’ population (TR1): Judging from the information required in the questionnaire, as detailed in the guidelines, this item appears controversial. Counting the total number of vehicles without distinguishing among the various types (i.e., cars or motorcycles) can give a distorted view of an institution’s sustainability because different kinds of vehicles have different environmental impacts. As a clear example, the amount of CO2 emitted per kilometer and per user and the amount of air pollutants differ substantially depending on whether a car or a motorcycle is chosen [50,51]. An improved indicator should employ proper estimates of the CO2 emitted per kilometer by each type of vehicle and multiply them by the actual kilometers travelled. These can be obtained by carrying out surveys among the campus population.

- Program to limit or decrease the parking area on campus for the last three years (TR6): In some universities, the induced traffic remains outside the campus area as a result of the availability of external parking areas. Therefore, this item should be modified to include initiatives aimed at limiting or decreasing all the parking areas occupied by the university’s community, regardless of whether they are within or outside the campus. More generally, this item should incorporate initiatives aimed at planning more sustainable long-distance travel. For instance, universities promoting the use of trains instead of aircraft should be advantaged in the rankings. Finally, it must be pointed out that generating reliable data on access to the university becomes increasingly challenging when moving from a single-area campus to universities with buildings spread across an urban territory.

- The ratio of sustainability courses to total courses/subjects (ED1): According to the UI GreenMetric ranking system, an HEI obtains a higher score simply by increasing the number of sustainability courses relative to the total number of courses taught at the same institution. The inclusion of this item could mislead the sustainability ranking in a variety of ways. First, the number of hours of sustainability courses should be preferred over the mere number of sustainability courses. As a matter of fact, HEIs arbitrarily split the sustainability content they teach into variable numbers of courses, and this can penalize those HEIs splitting their sustainability content into fewer courses. Secondly, by considering the number of sustainability courses with respect to the total number of courses, an HEI that teaches a larger variety of subjects and already devotes a large amount of time to sustainability teaching will achieve a lower sustainability score simply because more time is spent teaching subjects other than sustainability. The number of people attending these courses is also worthy of consideration, as a small audience can hinder the spread of knowledge about sustainability even if the number of sustainability courses is high. The heterogeneity of the sustainability content taught is also important, as the teaching should not focus on a few specific sustainability subjects while neglecting many others. Based on this, as a start for improvement, the indicator should be computed by dividing the number of hours spent teaching sustainability by the total number of hours spent teaching all subjects. In addition, a threshold should be established above which further increasing the number of hours for sustainability does not further increase the score assigned. More points should be assigned if the percentage of the total students attending the courses is higher.

- Percentage of university budget for sustainability efforts (SI6) and the ratio of sustainability research funding to total research funding (ED2): According to both of these items, an HEI can obtain a higher sustainability ranking than others by increasing the amount of funding for the implementation of sustainability programs and for research related to sustainability. According to the ranking system, this has to be considered with respect to the total university budget and the total research funding. In this regard, these items tend not to take into account the fact that the need for more sustainability in each HEI is context-specific and varies for the same HEI over time. One HEI may need more sustainability efforts than another, which means that more funding needs to be invested there than in an HEI that has already achieved a good level of sustainability. In other words, the amount of funding for sustainability implementation and research should be compared against the actual need of the HEI. Additionally, similar to the discussion about item ED1, an HEI already spending a lot of funding on sustainability will receive a lower score simply by increasing funding for projects and research dealing with topics other than sustainability. Furthermore, funding for research and sustainability efforts, even if related to the total funding, can also change as a function of the income of the country where the HEI is located, as the means and tools for sustainability improvement present different economic burdens from one country to another. Therefore, it becomes very difficult to make an unbiased comparison among universities in different countries in relation to the funding for sustainability program implementation and research. As a replacement for the original one, an amended indicator for SI6 should be the ratio between the budget for sustainability efforts and the total budget available for all university activities.
This quantity should then be divided by the amount of sustainability actions that actually need to take place. Similar amendments are suggested for item ED2.

- Number of scholarly publications on sustainability (ED3): According to the guidelines for the year 2022, this item must be filled out with the average number of scholarly indexed publications about sustainability in the last 3 years. Although the number of scientific publications dealing with sustainability could be a good indicator of sustainability-related research activity in a university, it is important to also consider the heterogeneity and novelty of the research in these publications, the relevance of the publishing journal to the sustainability topic and, most importantly, the actual impact of this research (for instance, the number of research outputs that have ended up being actively applied to improve sustainability). Although these features are not always easily quantifiable, considering only the quantity of indexed publications may encourage some universities to favor quantity over quality, as the peer review process among different journals is not always comparable [52,53]. Furthermore, similar to the case of program implementation, research outputs for sustainability always need to be considered with respect to the actual need for them. This is because research on sustainability should always reflect the need for novel knowledge and tools in the field. This need may saturate over time, as there is not always the same need for an HEI to invest in new research programs. Sometimes, the research carried out by one university provides sufficiently useful outputs for another, thus reducing the latter’s need to carry out research and the number of scholarly publications it produces. Aside from that, sustainability has several branches (e.g., the six criteria of the UI GreenMetric system), and one university may focus its research on one branch while another focuses on a different one.
Considering that the amount of research content publishable likely differs from one branch to another due to several circumstantial factors (e.g., the state of development of the subject and the acceptance rate of the journals dealing with the subject) [54], it turns out to be unfair to compare the overall mere amount of publications on sustainability by worldwide universities. To take into account more fairly the research activity on sustainability, the published articles should first be grouped according to the branch of sustainability they belong to. Secondly, each article belonging to the same sustainability branch should be counted, using the impact factor of the publishing journal as a weight. A distinct score should be assigned for each sustainability branch. In this way, a biased assignment of scores, by virtue of choosing to carry out more research on a sustainability branch having by itself a higher citation rate, can be expected to be avoided.

- Number of events related to sustainability (ED4): With respect to this item, an HEI obtains a higher score simply by increasing the number of events related to sustainability. However, the overall number of hours these events last is far more important than the mere number of events. Counting hours prevents an HEI from achieving a higher score simply by splitting the same number of hours into a larger number of events. That aside, the number of people involved in and, more generally, attending those events is very relevant too. Similar to the case of item ED3, the heterogeneity of the topics dealt with during these events should also be considered when assigning scores. As an improved indicator, the cumulative number of hours spent on events related to sustainability should be considered instead of the mere number of events.

- Number of student organizations related to sustainability (ED5): By including this item, an HEI would achieve a higher score by simply having more student organizations related to sustainability, regardless of the actual total number of students enrolled at the university itself. Thus, a university where fewer students are enrolled will achieve a lower sustainability score only because it has fewer student organizations related to sustainability compared with another university where more students are enrolled. This unfairly penalizes small-sized universities. Furthermore, the performance of these organizations in terms of efforts for sustainability improvements deserves consideration when assigning scores. The number of student organizations related to sustainability should be divided by the number of overall students enrolled in the same institution.
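
As a hedged illustration of the amended indicators suggested above for items EC8, ED1 and ED5, the following Python sketch shows how they might be computed. It is not part of the UI GreenMetric methodology: the function names, the emission factors, the 10% teaching-hours threshold and the per-1000-students scaling are all hypothetical choices made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ElectricityUse:
    """Annual electricity drawn from one source (all values hypothetical)."""
    kwh: float
    kg_co2_per_kwh: float  # assumed emission factor of this specific source


def electricity_footprint_kg(uses):
    """EC8 sketch: weight each consumption by its own emission factor
    instead of applying one fixed factor to the total consumption."""
    return sum(u.kwh * u.kg_co2_per_kwh for u in uses)


def footprint_per_person_hour(total_kg, population, avg_hours_on_campus):
    """EC8 sketch: normalize by people and by the time they spend on campus."""
    if population <= 0 or avg_hours_on_campus <= 0:
        raise ValueError("population and hours must be positive")
    return total_kg / (population * avg_hours_on_campus)


def teaching_hours_score(sustainability_hours, total_hours, cap=0.10):
    """ED1 sketch: share of teaching hours, saturating at an assumed cap.
    Returns a value between 0 and 1; shares above the cap earn no more."""
    if total_hours <= 0 or cap <= 0:
        raise ValueError("total_hours and cap must be positive")
    ratio = sustainability_hours / total_hours
    return min(ratio, cap) / cap


def organizations_per_1000_students(n_organizations, n_students):
    """ED5 sketch: student organizations relative to enrolment.
    The factor of 1000 is only a readability choice."""
    if n_students <= 0:
        raise ValueError("n_students must be positive")
    return 1000 * n_organizations / n_students


if __name__ == "__main__":
    # Hypothetical campus: 60% grid electricity, 40% on-site renewables.
    mix = [ElectricityUse(600_000, 0.5), ElectricityUse(400_000, 0.0)]
    total = electricity_footprint_kg(mix)
    print("mix-specific footprint (kg CO2):", total)
    print("per person-hour:", footprint_per_person_hour(total, 5_000, 1_000))
    print("ED1 score:", teaching_hours_score(300, 6_000))
    print("ED5, large HEI:", organizations_per_1000_students(10, 20_000))
    print("ED5, small HEI:", organizations_per_1000_students(4, 5_000))
```

In this hypothetical example, applying a single fixed factor of 0.5 kg CO2/kWh to the whole 1,000,000 kWh would assign the campus 500,000 kg of CO2, whereas the mix-specific calculation assigns 300,000 kg. Likewise, the smaller university with four student organizations scores higher per enrolled student than the larger one with ten.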

3.2. Other Considerations

4. Conclusions and Future Perspectives

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Boiocchi, R.; Ragazzi, M.; Torretta, V.; Rada, E.C. Critical Analysis of the GreenMetric World University Ranking System: The Issue of Comparability. Sustainability 2023, 15, 1343. https://doi.org/10.3390/su15021343
