Predictive Analytics for Sustainable E-Learning: Tracking Student Behaviors

Abstract

1. Introduction

2. Related Work

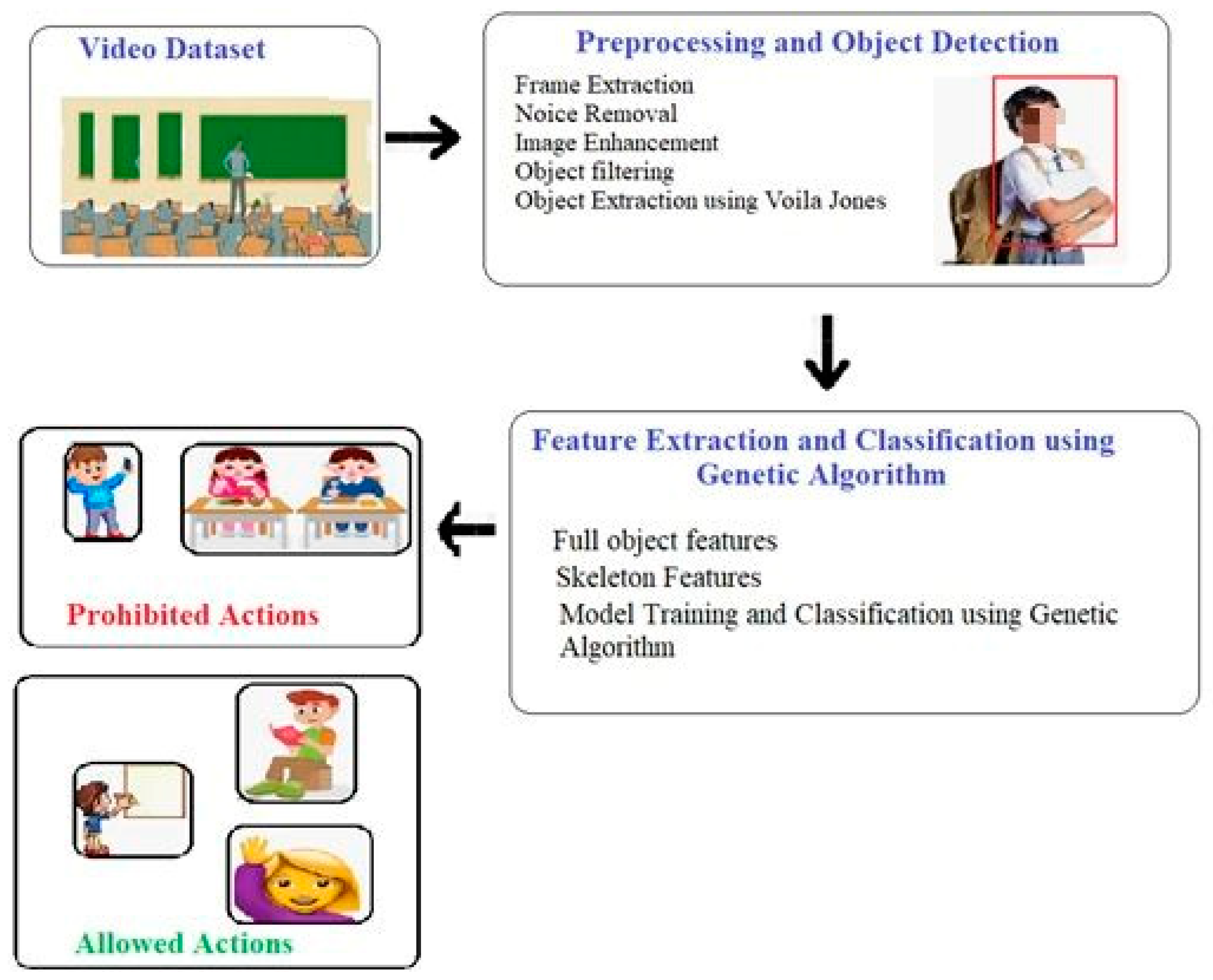

3. Proposed System Methodology

| Algorithm 1. Multistage processing to detect students’ behavior in e-learning. |
|---|
| Input: Image (I) |
| Step 1: Preprocessing Phase |
| 1.1 Apply Noise Removal Techniques: I_{denoised} = Denoise(I) |
| 1.2 Perform Image Enhancement: I_{enhanced} = Enhance(I_{denoised}) |
| 1.3 Apply Object Filtering: I_{filtered} = Filter(I_{enhanced}) |
| Step 2: Object Extraction |
| 2.1 Use Viola Jones Object Detection: Detected_Objects = ViolaJones(I_{filtered}) |
| Step 3: Feature Extraction |
| 3.1 Extract Full Object Features: Full_Object_Features = CalculateStatistics(Detected_Objects) |
| 3.2 Generate Stick Model Skeleton: Skeletons = ExtractSkeletons(Detected_Objects) |
| 3.3 Extract Skeleton Features: Skeleton_Features = MeasureSkeletonAttributes(Skeletons) |
| Step 4: Training and Classification using Genetic Algorithm |
| 4.1 Define Genetic Algorithm Parameters: Parameters = DefineParameters() |
| 4.2 Initialize Population: Population = InitializePopulation(Parameters) |
| 4.3 Evaluate Fitness: Fitness_Values = EvaluateFitness(Population) |
| 4.4 Genetic Operations: New_Population = ApplyGeneticOperators(Population, Fitness_Values) |
| 4.5 Replace Population: Population = New_Population |
| 4.6 Termination Criterion: Termination = CheckTerminationCriterion() |
| Step 5: Classification and Result Analysis |
| 5.1 Select Best Features: Best_Features = SelectBestFeatures(Population) |
| 5.2 Train Classifier: Classifier = TrainClassifier(Best_Features) |
| 5.3 Perform Classification: Classification_Results = ClassifyImage(I, Classifier) |
| 5.4 Analyze Results: Analysis_Metrics = AnalyzeClassificationResults(Classification_Results) |
| Output: Behavior Type[Classification_Results] |
| End Algorithm |

3.1. Image Preprocessing

3.2. Object Extraction
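
Step 2 of Algorithm 1 relies on Viola Jones object detection, which evaluates Haar-like rectangle features in constant time through an integral image (summed-area table). The following is a minimal sketch of that building block only, not of the full cascade detector and not of the authors' implementation. The function names `integral_image`, `rect_sum`, and `two_rect_feature`, as well as the toy 4×4 image, are illustrative assumptions.

```python
from typing import List

Image = List[List[int]]


def integral_image(img: Image) -> Image:
    """Return a summed-area table padded with one zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            # Sum of everything above plus the running sum of this row.
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, top: int, left: int, height: int, width: int) -> int:
    """Sum of the pixels in a rectangle using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii: Image, top: int, left: int, height: int, width: int) -> int:
    """Horizontal two-rectangle Haar-like feature: left half minus right half."""
    half = width // 2
    left_sum = rect_sum(ii, top, left, height, half)
    right_sum = rect_sum(ii, top, left + half, height, half)
    return left_sum - right_sum


# Toy image (assumption): bright left half, dark right half.
toy = [[9, 9, 1, 1] for _ in range(4)]
toy_ii = integral_image(toy)
whole = rect_sum(toy_ii, 0, 0, 4, 4)         # 80
edge = two_rect_feature(toy_ii, 0, 0, 4, 4)  # 72 - 8 = 64
```

A large feature value indicates a strong vertical edge inside the window. A real detector combines many such features in a boosted cascade, which this sketch omits.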

3.3. Feature Extraction

3.3.1. Full Silhouette Features

3.3.2. Stick Model Features

3.4. Feature Optimization and Classification
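
Step 4 of Algorithm 1 (define parameters, initialize population, evaluate fitness, apply genetic operators, replace population, check termination) can be sketched as a binary-mask feature selector. This is a hedged illustration under stated assumptions: the fitness function, operators, and termination rule used by the authors are not given here, so the sketch substitutes a toy fitness (`toy_fitness`), tournament selection, single-point crossover, bit-flip mutation, elitism, and a fixed generation budget. All names and parameter values (`run_ga`, `INFORMATIVE`, `pop_size`, `mutation_rate`) are hypothetical.

```python
import random
from typing import Callable, List

Chromosome = List[int]  # 1 = feature kept, 0 = feature dropped


def run_ga(
    n_features: int,  # must be at least 2 for single-point crossover
    fitness: Callable[[Chromosome], float],
    pop_size: int = 30,
    generations: int = 40,
    mutation_rate: float = 0.05,
    seed: int = 0,
) -> Chromosome:
    rng = random.Random(seed)
    # Step 4.2: random initial population of feature masks.
    population = [
        [rng.randint(0, 1) for _ in range(n_features)] for _ in range(pop_size)
    ]
    best = max(population, key=fitness)
    # Step 4.6 (assumed rule): stop after a fixed number of generations.
    for _ in range(generations):
        # Step 4.3: evaluate every chromosome.
        scores = [fitness(c) for c in population]

        def tournament() -> Chromosome:
            a, b = rng.randrange(pop_size), rng.randrange(pop_size)
            return population[a] if scores[a] >= scores[b] else population[b]

        # Step 4.4: elitism, crossover, and mutation build the next generation.
        new_population = [best[:]]
        while len(new_population) < pop_size:
            p1, p2 = tournament(), tournament()
            cut = rng.randrange(1, n_features)
            child = p1[:cut] + p2[cut:]
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            new_population.append(child)
        # Step 4.5: replace the population.
        population = new_population
        best = max(population, key=fitness)
    return best


# Toy fitness (assumption): reward three "informative" features and
# penalize mask size. A real system would score classifier accuracy instead.
INFORMATIVE = {0, 3, 7}


def toy_fitness(mask: Chromosome) -> float:
    return sum(mask[i] for i in INFORMATIVE) - 0.1 * sum(mask)


best_mask = run_ga(10, toy_fitness)
selected = [i for i, bit in enumerate(best_mask) if bit]
```

The indices in `selected` play the role of `Best_Features` in Step 5.1. Training the classifier on those features (Step 5.2) is outside the scope of this sketch.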

4. Experiments and Results

4.1. Dataset

4.1.1. MED (Motion Emotion Dataset)

4.1.2. Edu Net Dataset

4.2. Performance Metric and Experimental Outcome

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- James, R.J.E.; Tunney, R.J. The need for a behavioral analysis of behavioral addiction. Clin. Psychol. Rev. 2017, 52, 69–76.

- Miah, S.J.; Vu, H.Q.; Gammack, J.; McGrath, M. A big data analytics method for tourist behaviour analysis. Inf. Manag. 2017, 54, 771–785.

- Zhang, X.; Wen, S.; Yan, L.; Feng, J.; Xia, Y. A Hybrid-Convolution Spatial–Temporal Recurrent Network for Traffic Flow Prediction. Comput. J. 2022, bxac171.

- Li, B.; Tan, Y.; Wu, A.; Duan, G. A distributionally robust optimization based method for stochastic model predictive control. IEEE Trans. Autom. Control 2021, 67, 5762–5776.

- Matthew, T.; Banhazi, T.M. A brief review of the application of machine vision in livestock behavior analysis. Agrárinform./J. Agric. Inform. 2016, 7, 23–42.

- Jaganeshwari, K.; Djodilatchoumy, S. An Automated Testing Tool Based on Graphical User Interface with Exploratory Behavioural Analysis. J. Theor. Appl. Inf. Technol. 2022, 22, 6657–6666.

- Michalis, V.; Nikou, C.; Kakadiaris, I.A. A review of human activity recognition methods. Front. Robot. AI 2015, 2, 28.

- Qian, L.; Zheng, Y.; Li, L.; Ma, Y.; Zhou, C.; Zhang, D. A New Method of Inland Water Ship Trajectory Prediction Based on Long Short-Term Memory Network Optimized by Genetic Algorithm. Appl. Sci. 2022, 12, 4073.

- Guo, F.; Zhou, W.; Lu, Q.; Zhang, C. Path extension similarity link prediction method based on matrix algebra in directed networks. Comput. Commun. 2022, 187, 83–92.

- Ferhat, A.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F.; Oukhellou, L.; Amirat, Y. Physical human activity recognition using wearable sensors. Sensors 2015, 15, 31314–31338.

- Xie, X.; Xie, B.; Cheng, J.; Chu, Q.; Dooling, T. A simple Monte Carlo method for estimating the chance of a cyclone impact. Nat. Hazards 2021, 107, 2573–2582.

- Gupta, N.; Gupta, S.K.; Pathak, R.K.; Jain, V.; Rashidi, P.; Suri, J.S. Human activity recognition in artificial intelligence framework: A narrative review. Artif. Intell. Rev. 2022, 55, 4755–4808.

- Jiang, H.; Wang, M.; Zhao, P.; Xiao, Z.; Dustdar, S. A Utility-Aware General Framework With Quantifiable Privacy Preservation for Destination Prediction in LBSs. IEEE/ACM Trans. Netw. 2021, 29, 2228–2241.

- Long, W.; Xiao, Z.; Wang, D.; Jiang, H.; Chen, J.; Li, Y.; Alazab, M. Unified Spatial-Temporal Neighbor Attention Network for Dynamic Traffic Prediction. IEEE Trans. Veh. Technol. 2023, 72, 1515–1529.

- Xiao, Z.; Li, H.; Jiang, H.; Li, Y.; Alazab, M.; Zhu, Y.; Dustdar, S. Predicting Urban Region Heat via Learning Arrive-Stay-Leave Behaviors of Private Cars. IEEE Trans. Intell. Transp. Syst. 2023, 24, 10843–10856.

- Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and modeling of wifi signal based human activity recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking, Paris, France, 7–11 September 2015; pp. 65–76.

- Abdulmajid, M.; Pyun, J.-Y. Deep recurrent neural networks for human activity recognition. Sensors 2017, 17, 2556.

- Ortiz, R.; Jorge, L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-aware human activity recognition using smartphones. Neurocomputing 2016, 171, 754–767.

- Chen, G.; Chen, P.; Huang, W.; Zhai, J. Continuance Intention Mechanism of Middle School Student Users on Online Learning Platform Based on Qualitative Comparative Analysis Method. Math. Probl. Eng. 2022, 2022, 3215337.

- Xiong, Z.; Liu, Q.; Huang, X. The influence of digital educational games on preschool Children’s creative thinking. Comput. Educ. 2022, 189, 104578.

- Lu, S.; Liu, M.; Yin, L.; Yin, Z.; Liu, X.; Zheng, W.; Kong, X. The multi-modal fusion in visual question answering: A review of attention mechanisms. PeerJ Comput. Sci. 2023, 9, e1400.

- Ann, R.C.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.

- Chen, K.; Zhang, D.; Yao, L.; Guo, B.; Yu, Z.; Liu, Y. Deep learning for sensor-based human activity recognition: Overview, challenges, and opportunities. ACM Comput. Surv. CSUR 2021, 54, 1–40.

- Qiu, S.; Zhao, H.; Jiang, N.; Wang, Z.; Liu, L.; An, Y.; Fortino, G. Multi-sensor information fusion based on machine learning for real applications in human activity recognition: State-of-the-art and research challenges. Inf. Fusion 2022, 80, 241–265.

- Li, Y.; Yang, G.; Su, Z.; Li, S.; Wang, Y. Human activity recognition based on multi-environment sensor data. Inf. Fusion 2023, 91, 47–63.

- Kun, X.; Huang, J.; Wang, H. LSTM-CNN architecture for human activity recognition. IEEE Access 2020, 8, 56855–56866.

- Liu, X.; Shi, T.; Zhou, G.; Liu, M.; Yin, Z.; Yin, L.; Zheng, W. Emotion classification for short texts: An improved multi-label method. Humanit. Soc. Sci. Commun. 2023, 10, 306.

- Feng, W.; Hannafin, J. Design-based research and technology-enhanced learning environments. Educ. Technol. Res. Dev. 2005, 53, 5–23.

- Liu, X.; Zhou, G.; Kong, M.; Yin, Z.; Li, X.; Yin, L.; Zheng, W. Developing Multi-Labelled Corpus of Twitter Short Texts: A Semi-Automatic Method. Systems 2023, 11, 390.

- Lu, C.; Shi, J.; Jia, J. Abnormal event detection at 150 fps in Matlab. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, NSW, Australia, 1–8 December 2013.

- Degardin, B.; Proença, H. Human Activity Analysis: Iterative Weak/Self-Supervised Learning Frameworks for Detecting Abnormal Events. In Proceedings of the IEEE International Joint Conference on Biometrics (IJCB), Houston, TX, USA, 28 September–1 October 2020.

- Merad, D.; Drap, P. Tracking multiple persons under partial and global occlusions: Application to customers’ behavior analysis. Pattern Recognit. Lett. 2016, 81, 11–20.

- Chen, T.; Chen, H. Anomaly detection in crowded scenes using motion energy model. Multimed. Tools Appl. 2018, 77, 14137–14152.

- Klingner, J. The pupillometric precision of a remote video eye tracker. In Proceedings of the ETRA 2010 (Eye Tracking Research and Applications Symposium), Austin, TX, USA, 22–24 March 2010; pp. 259–262.

- Srichanyachon, N. EFL Learners’ Perceptions of Using LMS. TOJET Turk. Online J. Educ. Technol. 2014, 13, 30–35.

- Liang, M.; Hu, X. Recurrent Convolutional Neural Network for Object Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3367–3375.

- Jalal, A.; Mahmood, M.; Siddiqi, M.A. Robust spatiotemporal features for human interaction recognition via an artificial neural network. In Proceedings of the IEEE Conference on International Conference on Frontiers of Information Technology, Islamabad, Pakistan, 17–19 December 2018.

- Jalal, A.; Quaid, M.A.K.; Sidduqi, M.A. A Triaxial acceleration-based human motion detection for an ambient smart home system. In Proceedings of the IEEE International Conference on Applied Sciences and Technology, Islamabad, Pakistan, 8–12 January 2019.

- Dahlstrom, E.; Brooks, D.C.; Bichsel, J. The Current Ecosystem of Learning Management Systems in Higher Education: Student, Faculty, and IT Perspectives; Educause: Boulder, CO, USA, 2014.

- Nawaratne, R.; Yu, X. Spatiotemporal anomaly detection using deep learning for real-time video surveillance. IEEE Trans. Ind. Inform. 2019, 16, 393–402.

- Oliveira, P.C.D.; Cunha, C.; Nakayama, M.K. Learning Management Systems (LMS) and e-learning management: An integrative review and research agenda. JISTEM-J. Inf. Syst. Technol. Manag. 2016, 13, 157–180.

- Ahmad, F. Deep image retrieval using artificial neural network interpolation and indexing based on similarity measurement. CAAI Trans. Intell. Technol. 2022, 7, 200–218.

- Hassan, F.S.; Gutub, A. Improving data hiding within color images using hue component of HSV colour space. CAAI Trans. Intell. Technol. 2022, 7, 56–68.

- Quaid, M.A.K.; Jalal, A. Wearable sensors based human behavioral pattern recognition using statistical features and reweighted genetic algorithm. Multimed. Tools Appl. 2019, 79, 6061–6083.

- Nadeem, A.; Jalal, A.; Kim, K. Human actions tracking and recognition based on body parts detection via an artificial neural network. In Proceedings of the IEEE International Conference on Advancements in Computational Sciences, Lahore, Pakistan, 17–19 February 2020.

- Golestani, N.; Moghaddam, M. Human activity recognition using magnetic induction-based motion signals and deep recurrent neural networks. Nat. Commun. 2020, 11, 1551.

- Liu, X.; Song, M.; Tao, D.; Bu, J.; Chen, C. Random Geometric Prior Forest for Multiclass Object Segmentation. IEEE Trans. Image Process. 2015, 24, 3060–3070.

- Jalal, A.; Khalid, N.; Kim, K. Automatic recognition of human interaction via hybrid descriptors and maximum entropy Markov model using depth sensors. Entropy 2020, 22, 817.

- Rafique, A.; Ahmad, J.; Kim, K. Automated sustainable multi-object segmentation and recognition via modified sampling consensus and kernel sliding perceptron. Symmetry 2020, 13, 1928.

- Zhang, J.; Ye, G.; Tu, Z.; Qin, Y.; Qin, Q.; Zhang, J.; Liu, J. A spatial attentive and temporal dilated (SATD) GCN for skeleton-based action recognition. CAAI Trans. Intell. Technol. 2022, 7, 46–55.

- Pervaiz, M.; Jalal, A.; Kim, K. Hybrid algorithm for multi-people counting and tracking for smart surveillance. In Proceedings of the IEEE 2021 International Bhurban Conference on Applied Sciences and Technologies (IBCAST), Islamabad, Pakistan, 12–16 January 2021.

- Khalid, N.; Gochoo, M.; Jalal, A.; Kim, K. Modeling two-person segmentation and locomotion for stereoscopic action identification: A sustainable video surveillance system. Sustainability 2021, 12, 970.

- Cong, R.; Lei, J.; Fu, H.; Cheng, M.-M.; Lin, W.; Huang, Q. Review of Visual Saliency Detection with Comprehensive Information. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2941–2959.

- Nadeem, A.; Jalal, A.; Kim, K. Automatic human posture estimation for sports activity recognition with robust body parts detection and entropy Markov model. Multimed. Tools Appl. 2021, 80, 21465–21498.

- Meng, J.; Li, Y.; Liang, H.; Ma, Y. Single-image Dehazing based on two-stream convolutional neural network. J. Artif. Intell. Technol. 2022, 2, 100–110.

- Liu, Y.; Wang, K.; Liu, L.; Lan, H.; Lin, L. Tcgl: Temporal contrastive graph for self-supervised video representation learning. IEEE Trans. Image Process. 2022, 31, 1978–1993.

- Zheng, M.; Zhi, K.; Zeng, J.; Tian, C.; You, L. A hybrid CNN for image denoising. J. Artif. Intell. Technol. 2022, 2, 93–99.

- Hu, X.; Kuang, Q.; Cai, Q.; Xue, Y.; Zhou, W.; Li, Y. A Coherent Pattern Mining Algorithm Based on All Contiguous Column Bicluster. J. Artif. Intell. Technol. 2022, 2, 80–92.

- Alberto, R.; Briones, A.; Hernandez, G.; Prieto, J.; Chamoso, P. Artificial neural network analysis of the academic performance of students in virtual learning environments. Neurocomputing 2021, 423, 713–720.

- Rawashdeh, A.; Zuhir, A.; Mohammed, E.Y.; Al Arab, A.R.; Alara, M.; Al-Rawashdeh, B. Advantages and disadvantages of using e-learning in university education: Analyzing students’ perspectives. Electron. J. E-Learn. 2021, 19, 107–117.

- Fuady, I.; Sutarjo, M.A.S.; Ernawati, E. Analysis of students’ perceptions of online learning media during the COVID-19 pandemic Study of e-learning media: Zoom, Google Meet, Google Classroom, and LMS. Randwick Int. Soc. Sci. J. 2021, 2, 51–56.

- Li, T.; Fan, Y.; Li, Y.; Tarkoma, S.; Hui, P. Understanding the Long-Term Evolution of Mobile App Usage. IEEE Trans. Mob. Comput. 2023, 22, 1213–1230.

| Reference | Objectives | Advantages | Disadvantages |
|---|---|---|---|
| [28] | Early exploration of e-learning behavior tracking. | Established the importance of engagement metrics. Paved the way for future research. | Lack of real-time monitoring; limited emphasis on individualized feedback. |
| [29] | Focus on learner-centered tracking using a mixed methods approach, including surveys and behavioral analysis. | Enhanced understanding of self-regulation. | Time-consuming data analysis and difficulties in measuring subjective behaviors. |
| [30] | Predictive modeling for retention, using longitudinal data collection and machine learning techniques. | Informed personalized interventions. Highlighted the role of social interaction. | Challenges in predicting non-academic behaviors and privacy concerns. |
| [31] | Application of data mining algorithms to the analysis of large-scale learning data. | Insights into collaborative learning behaviors and identification of factors affecting performance. | Ethical considerations, resource-intensive data collection, and limited explanation of causality. |
| [32] | Integration of multimodal data sources through data fusion, including clickstream and biometric data. | Comprehensive learner profile generation and personalized learning pathway recommendations. | Technical challenges in data fusion and limited generalizability. Privacy and security concerns. |
| [33] | Explored tracking of student engagement through surveys, interviews, and behavioral data analysis; identified factors influencing online behaviors. | Established the importance of engagement metrics. Informative for instructional design. | Limited sample size, lack of long-term data, and dependency on self-reported data. |
| [34] | Investigated social network influence using social network analysis and content analysis. | Provides insights into collaborative learning dynamics. Integrates social network analysis. | Limited focus on non-cognitive behaviors, ethical considerations, and incomplete data from private groups. |
| [35] | Examined online procrastination using surveys and analysis of online procrastination behaviors; proposed strategies for reducing procrastination. | Implications for time management in e-learning. | Limited generalizability, self-reporting bias, and limited consideration of other behaviors. |
| [36] | Developed a system for giving students immediate feedback throughout an online course using a behavior analytic approach. | Identified the effect of individual differences on students’ behavior in e-learning settings. | Results can be biased by false feedback. |
| [37] | Discovered that students who had high levels of motivation and self-efficacy were more likely to participate in active learning strategies. | Suggests parameters that will increase student performance and engagement in e-learning. | Motivation level is a derived parameter that cannot be measured accurately, which can affect system performance. |

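| Dataset | Activity | Action | Accuracy |
|---|---|---|---|
| MED | Allowed Behaviors | Happy | 89% |
| MED | Allowed Behaviors | Sad | 86% |
| MED | Allowed Behaviors | Excited | 89% |
| MED | Allowed Behaviors | Neutral | 82% |
| MED | Allowed Behaviors | Average Accuracy | 85.75% |
| MED | Prohibited Behaviors | Panic | 92% |
| MED | Prohibited Behaviors | Fight | 94% |
| MED | Prohibited Behaviors | Scared | 89% |
| MED | Prohibited Behaviors | Angry | 87% |
| MED | Prohibited Behaviors | Average Accuracy | 90.5% |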

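| Dataset | Activity | Action | Accuracy |
|---|---|---|---|
| Edu-Net | Allowed Behaviors | Writing on Board | 83% |
| Edu-Net | Allowed Behaviors | Writing on Book | 85% |
| Edu-Net | Allowed Behaviors | Reading Book | 89% |
| Edu-Net | Allowed Behaviors | Hand Raise | 82% |
| Edu-Net | Allowed Behaviors | Average Accuracy | 85% |
| Edu-Net | Prohibited Behaviors | Sleeping on Chair | 83% |
| Edu-Net | Prohibited Behaviors | Eating Food | 84% |
| Edu-Net | Prohibited Behaviors | Holding Mobile Phone | 89% |
| Edu-Net | Prohibited Behaviors | Fighting | 87% |
| Edu-Net | Prohibited Behaviors | Average Accuracy | 86% |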
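
The Edu-Net group averages above are consistent with the unweighted mean of the four per-action accuracies in each group, rounded to the nearest percent. The short check below illustrates that arithmetic. It assumes equal weighting of actions, which the table does not state explicitly, and the variable names (`edu_net`, `group_means`, `rounded`) are illustrative.

```python
from statistics import mean

# Per-action accuracies (percent) copied from the Edu-Net table.
edu_net = {
    "Allowed Behaviors": {
        "Writing on Board": 83,
        "Writing on Book": 85,
        "Reading Book": 89,
        "Hand Raise": 82,
    },
    "Prohibited Behaviors": {
        "Sleeping on Chair": 83,
        "Eating Food": 84,
        "Holding Mobile Phone": 89,
        "Fighting": 87,
    },
}

# Unweighted mean per behavior group (assumption: every action counts equally).
group_means = {group: mean(acc.values()) for group, acc in edu_net.items()}
rounded = {group: round(value) for group, value in group_means.items()}

for group in edu_net:
    print(f"{group}: mean = {group_means[group]:.2f}%, rounded = {rounded[group]}%")
```

Under this assumption the means are 84.75% and 85.75%, which round to the 85% and 86% reported in the table.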

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Mudawi, N.A.; Pervaiz, M.; Alabduallah, B.I.; Alazeb, A.; Alshahrani, A.; Alotaibi, S.S.; Jalal, A. Predictive Analytics for Sustainable E-Learning: Tracking Student Behaviors. Sustainability 2023, 15, 14780. https://doi.org/10.3390/su152014780
