Drone Lidar Deep Learning for Fine-Scale Bare Earth Surface and 3D Marsh Mapping in Intertidal Estuaries

Abstract

1. Introduction

2. Study Area and Methods

2.1. Study Area and Field Experiment

2.2. Approaches

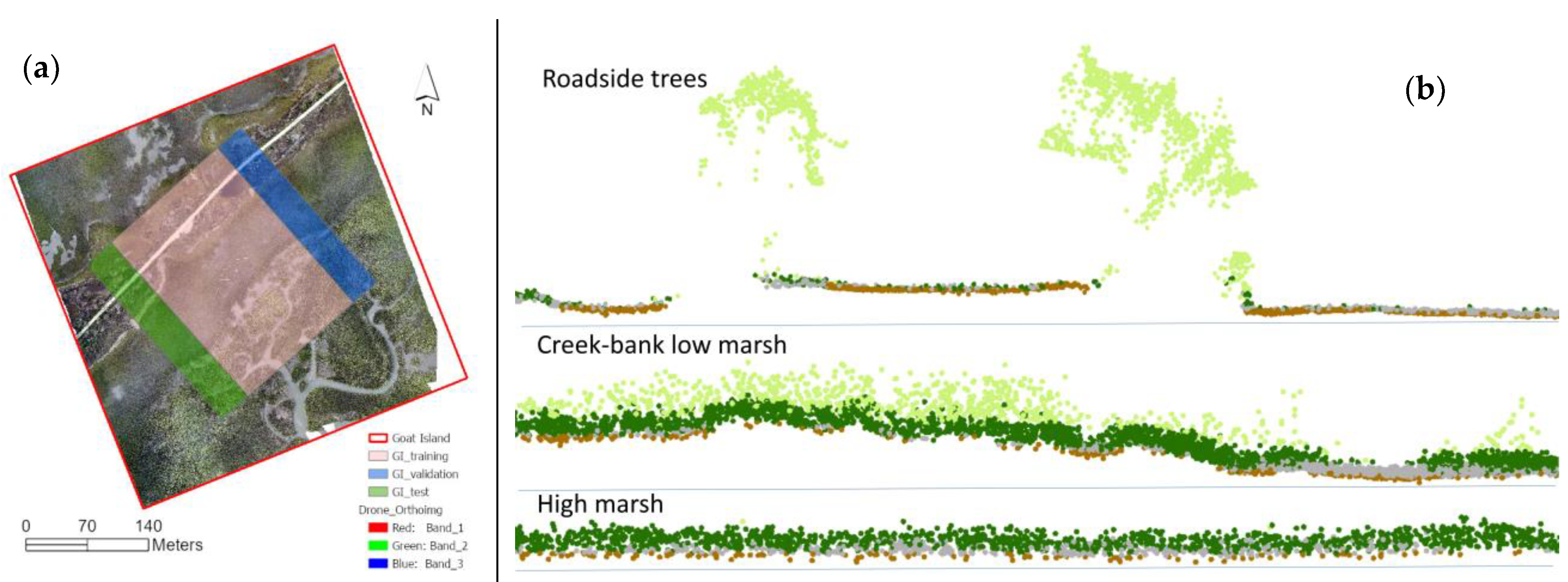

2.2.1. Deep Learning for Lidar Point Cloud Classification

2.2.2. Deep Learning for Orthoimage Classification

2.2.3. Extracting Bare Earth Surface and Marsh Height

3. Results and Discussion

3.1. Characteristics of Drone Lidar Point Cloud

3.2. Deep Learning Classification of Lidar Point Cloud

3.2.1. RandLA-Net Accuracy Assessment

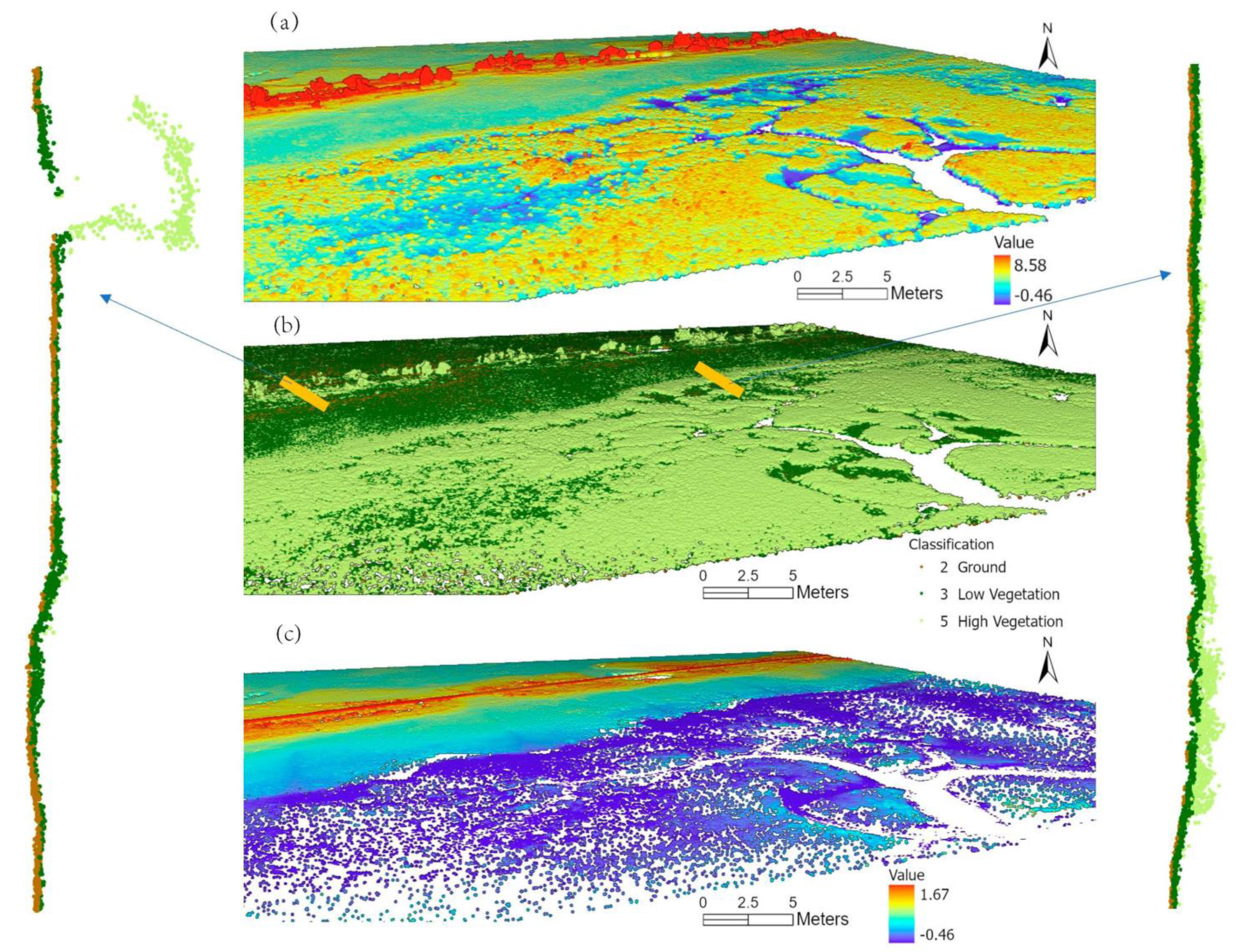

3.2.2. RandLA-Net Extracted Point Classes

3.3. Bare Earth Surface and Marsh Mapping

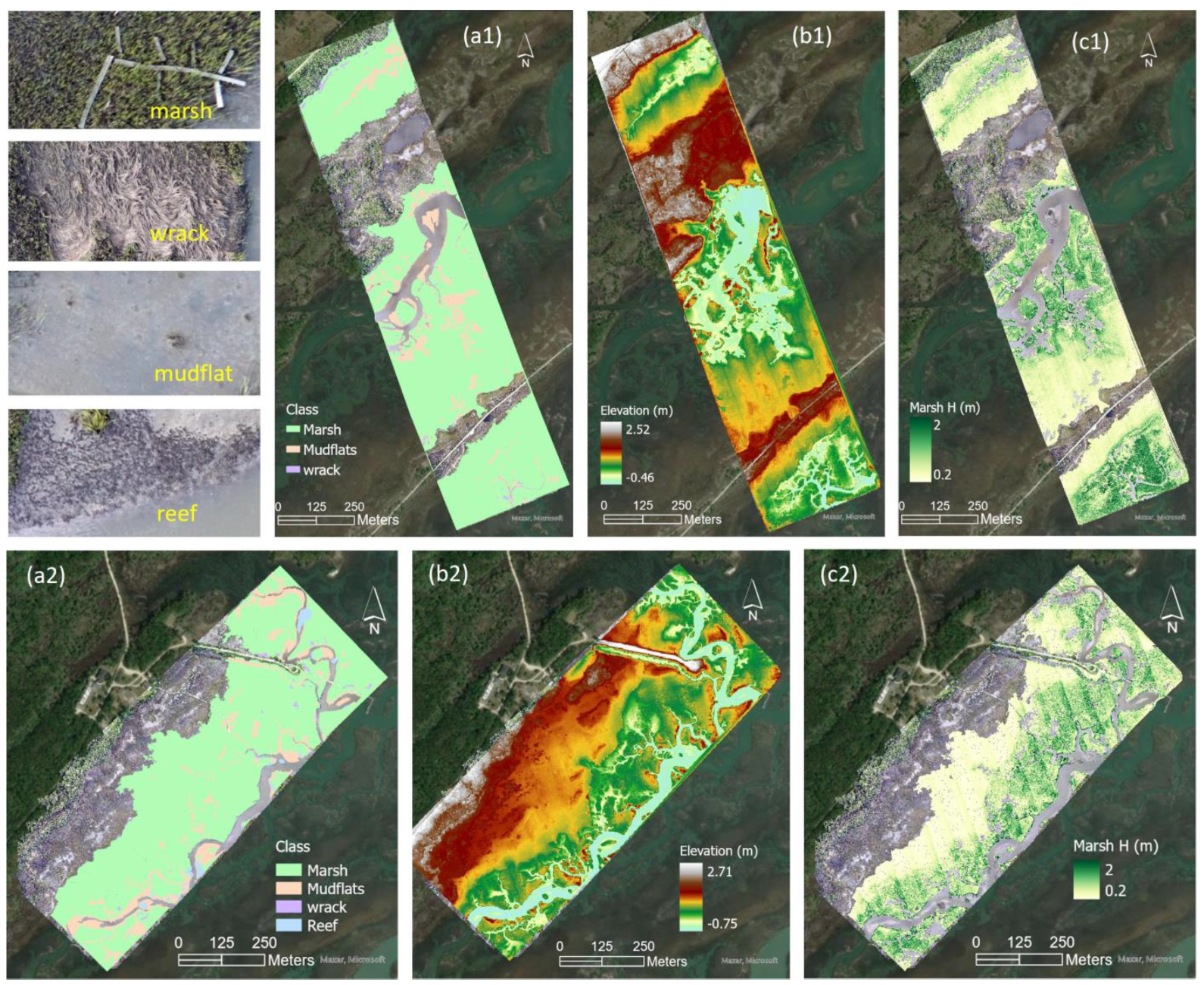

3.3.1. Marsh/Non-Marsh Distribution

3.3.2. Bare Earth Surface and Marsh Height

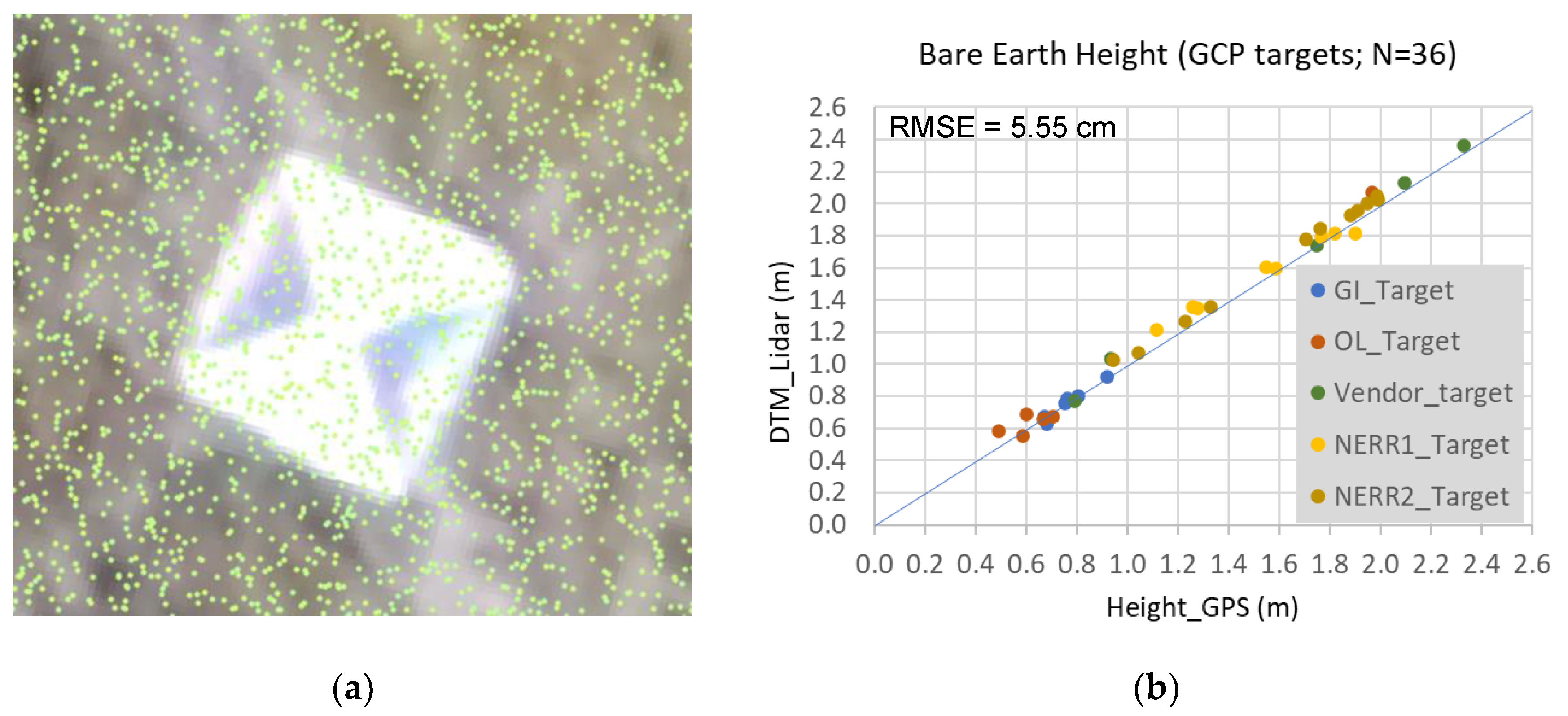

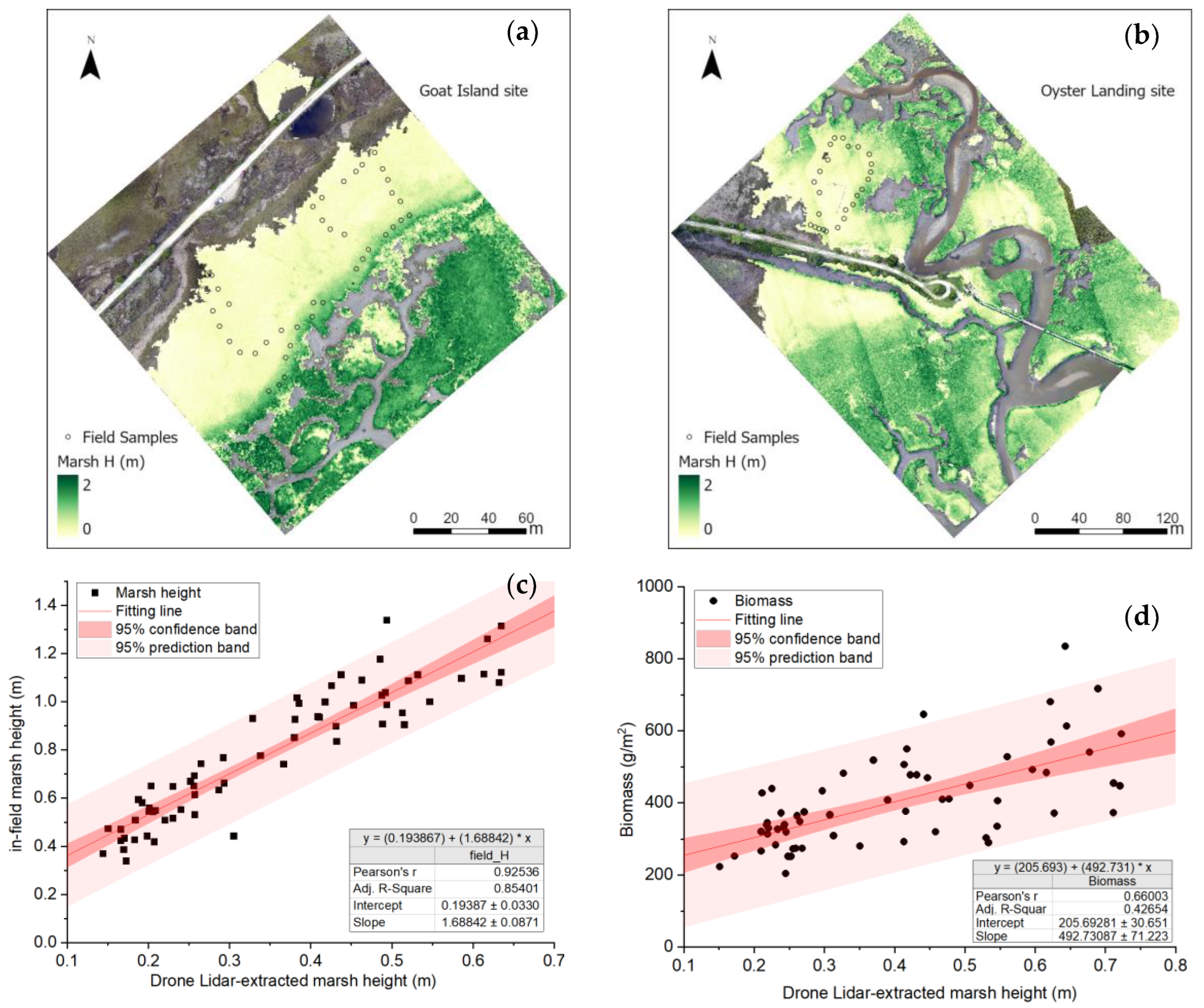

3.4. Comparison between Lidar-Extracted and Field Measurements

3.5. Further Thoughts: Drone Lidar for Intertidal Ecosystem Monitoring

4. Conclusions

- (1) Deep learning classification effectively delineates ground, low vegetation, and high vegetation points in the drone Lidar point cloud at an overall accuracy of around 0.84.

- (2) Drone Lidar systems could be utilized to extract centimeter-level bare earth surfaces at a vertical accuracy of 5.55 cm (RMSE) in intertidal zones.

- (3) The drone Lidar-extracted marsh height was lower than the in-field measurements, but they possessed a strong linear relationship (Pearson’s r = 0.93). With the collected 65 samples, the adjusted Lidar-extracted marsh height reached an RMSE of 0.12 cm.

- (4) It is worth mentioning that the classified drone Lidar point cloud fairly delineates the high marsh and low marsh habitats along a gently decreasing topographic gradient in the estuary.
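The accuracy figures quoted in conclusions (2) and (3), RMSE and Pearson's r, can be illustrated with a minimal sketch. It assumes the height adjustment is a simple least-squares linear fit of field height on Lidar height, which the text does not confirm. The function names and the sample values are hypothetical and are not data from the study.

```python
import math

def rmse(predicted, observed):
    """Root-mean-square error between paired values."""
    n = len(predicted)
    return math.sqrt(sum((p - o) ** 2 for p, o in zip(predicted, observed)) / n)

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def fit_line(x, y):
    """Ordinary least-squares fit y ~ slope * x + intercept."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx

# Hypothetical paired samples in metres (illustrative only, NOT study data):
# the Lidar-extracted height underestimates the field-measured height.
lidar_height = [0.20, 0.35, 0.50, 0.65, 0.80]
field_height = [0.45, 0.62, 0.85, 1.01, 1.22]

# Assumed adjustment: regress field height on Lidar height, then apply the fit.
slope, intercept = fit_line(lidar_height, field_height)
adjusted_height = [slope * h + intercept for h in lidar_height]

print("Pearson r:", pearson_r(lidar_height, field_height))
print("RMSE before adjustment:", rmse(lidar_height, field_height))
print("RMSE after adjustment:", rmse(adjusted_height, field_height))
```

Because a least-squares line minimizes the squared error over all linear adjustments, the RMSE after adjustment cannot exceed the RMSE of the raw Lidar heights on the same samples. Evaluating on independent samples would give a less optimistic estimate.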

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Class | Training Set (2.66 ha): # of Points | Training Set (2.66 ha): Percent | Validation Set (0.59 ha): Precision | Validation Set (0.59 ha): Recall | Validation Set (0.59 ha): Score | Test Set (0.65 ha): Precision | Test Set (0.65 ha): Recall | Test Set (0.65 ha): Score |
|---|---|---|---|---|---|---|---|---|
| Ground | 2,656,130 | 8.99% | 0.873 | 0.885 | 0.879 | 0.917 | 0.858 | 0.886 |
| Low vegetation | 10,537,971 | 35.65% | 0.957 | 0.782 | 0.860 | 0.928 | 0.759 | 0.835 |
| High vegetation | 5,209,755 | 17.62% | 0.678 | 0.971 | 0.798 | 0.685 | 0.974 | 0.804 |
| Unassigned | 11,157,757 | 37.74% | / | / | / | / | / | / |

Overall accuracy: 0.844 (validation set), 0.834 (test set).
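The "Score" column in the table is not defined in this excerpt. As a hedged check, the sketch below assumes it is the F1 score (the harmonic mean of precision and recall) and compares the recomputed values with the reported ones. The helper names are hypothetical; the numbers are copied from the table.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# (precision, recall, reported score) per class, copied from the table.
validation = {
    "Ground": (0.873, 0.885, 0.879),
    "Low vegetation": (0.957, 0.782, 0.860),
    "High vegetation": (0.678, 0.971, 0.798),
}
test = {
    "Ground": (0.917, 0.858, 0.886),
    "Low vegetation": (0.928, 0.759, 0.835),
    "High vegetation": (0.685, 0.974, 0.804),
}

def max_deviation(rows):
    """Largest absolute gap between recomputed F1 and the reported score."""
    return max(abs(f1_score(p, r) - s) for p, r, s in rows.values())

for name, rows in (("validation", validation), ("test", test)):
    for cls, (p, r, s) in rows.items():
        print(f"{name:10s} {cls:16s} F1={f1_score(p, r):.4f} reported={s:.3f}")
    print(f"{name:10s} max deviation: {max_deviation(rows):.4f}")
```

The recomputed values agree with the reported scores to within about 0.001, which is what one would expect when precision and recall have themselves been rounded to three decimals. This supports, but does not prove, the F1 interpretation.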

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, C.; Morgan, G.R.; Morris, J.T. Drone Lidar Deep Learning for Fine-Scale Bare Earth Surface and 3D Marsh Mapping in Intertidal Estuaries. Sustainability 2023, 15, 15823. https://doi.org/10.3390/su152215823
