Smart and Automated Infrastructure Management: A Deep Learning Approach for Crack Detection in Bridge Images

Abstract

:1. Introduction

1.1. Motivation and Research Gap

1.2. Reasons for Selecting Pakistan as a Case Study

1.3. Research Questions and Objectives

- What types of cracks are present in bridges and similar infrastructure in developing countries?

- How can bridge cracks be differentiated from images based on their severity and segmented to calculate their width, height, and area?

- How to develop and test a deep learning system to determine cracks in bridge images for developing countries?

- To detect and differentiate between bridge cracks from images with higher accuracy.

- To locate, classify, and differentiate cracks based on their severity and segment them to calculate the width, height, and area.

- To develop a deep learning-based approach for determining cracks in the images of bridges and infrastructure projects of developing countries.

1.4. Novelty and Potential Contribution

1.5. Organization of the Paper

2. Related Literature

2.1. Types of Cracks in Bridges

- Load-induced cracks [32]: These are produced in concrete bridges when subjected to standard static, dynamic, and secondary pressures. There are two main types of load cracks: secondary stress cracks and direct stress cracks.

- Temperature change-induced cracks [33]: Concrete exhibits thermal expansion and contraction in response to temperature changes. Temperature changes are generally a result of annual temperature variations, sunshine, sudden cool-off, heat hydration, etc. The concrete will deform when the structure’s internal or external temperature changes. The structures experience stress if the deformation is constrained. A temperature crack in concrete will appear when the applied stress exceeds its tensile strength. Temperature stress in some long-span bridges may approach or surpass the live load stress. Such temperature cracks are distinguished from other cracks primarily by their ability to grow or contract in response to changes in temperature.

- Shrinkage-induced cracks [34]: These cracks are caused by concrete shrinkage. The two most common types of concrete shrinkage are plastic and dry. Other types include spontaneous and carbonization shrinkage.

- Ground deformation-induced cracks [35]: A structure can experience additional stress due to the foundation’s unequal vertical settlement or horizontal displacement, which exceeds the tensile strength of the concrete and causes structural cracking. Some common causes of such uneven settlement include greater variations in soil/ground quality, enormous structural load variation or unequal distribution, varying types of foundations, melting or freezing of foundation/footings, varying foundation factors after bridge construction, etc. These reasons cause ground deformation that leads to cracks in bridges.

- Steel corrosion-induced cracks [36]: Steel bars can be corroded due to oxidation. Carbon dioxide can carbonize the concrete’s protective layer on the steel bar’s surface because of the concrete’s poor quality or the inadequate thickness of the protective layer, reducing the concrete’s alkalinity around the steel bar. Another reason can be that the steel bars are surrounded by a high concentration of chloride ions due to chloride’s intervention. The rust and corrosion reactions are amplified by membrane damage, the iron ions in the steel bar, oxygen, and water entering the concrete. The volume of the rusted iron hydroxide is then about two to four times greater than the original, causing expansion stress on the surrounding concrete. This leads to the cracking and peeling of the concrete’s protective layer. As a result, the steel bars develop cracks along their longitudinal axis, and the concrete surface is gradually corroded.

- Material quality-induced cracks [37]: Such cracks are caused due to the lower quality of construction materials such as cement, sand, aggregate, mixing of water, and admixtures.

- Construction process-induced cracks [38]: A lower-quality construction, transportation, pouring, or hoisting process, inexperienced workforce, and poor on-site quality management can lead to vertical, horizontal, oblique, and other geometric distortions leading to the development of surface, deep, or penetrating cracks of variable width.

2.2. Image Processing Models for Detecting Cracks

2.2.1. Image Filtering Techniques

2.2.2. Beamlet Transform

2.2.3. Shi-Tomasi Algorithm

2.3. Classical Machine Learning Methods

2.3.1. K-Means Clustering

2.3.2. Support Vector Machines (SVM)

2.3.3. Random Structured Forests

2.4. Deep Learning Methods

2.4.1. Convolutional Neural Network

2.4.2. Region Proposal Networks

2.4.3. Fully Convolutional Network (FCN)

2.4.4. U-Net

2.4.5. Skip-Squeeze-and-Excitation Networks (SSENets)

2.4.6. CrackNet

2.4.7. GoogleNet

2.4.8. You Only Look Once (YOLO)

3. Materials and Methods

3.1. Data Preparation

3.1.1. Dataset

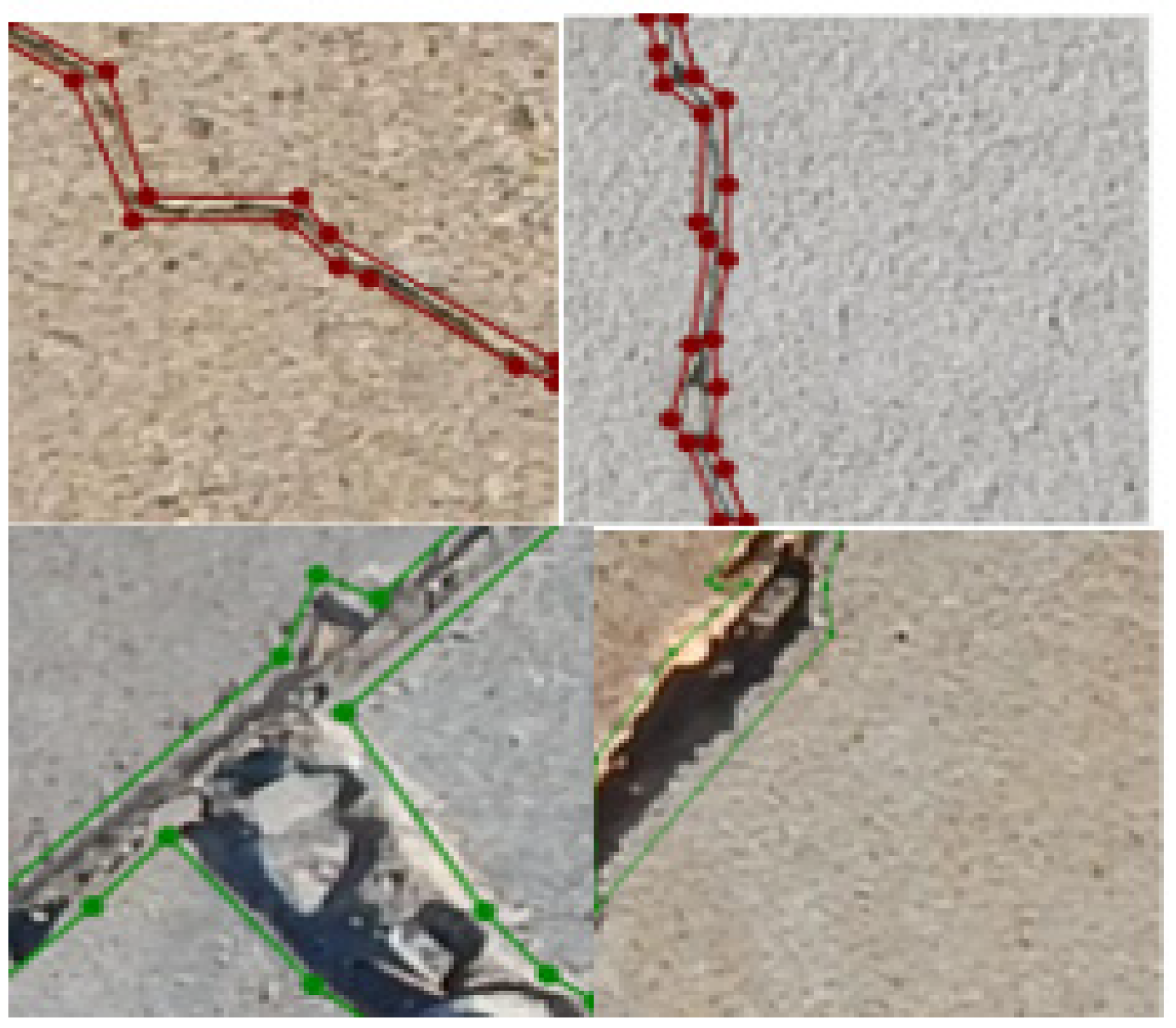

3.1.2. Data Annotation

3.1.3. Data Resizing

3.1.4. Data Augmentation

3.1.5. Data Splitting

3.2. Model Description and Functions

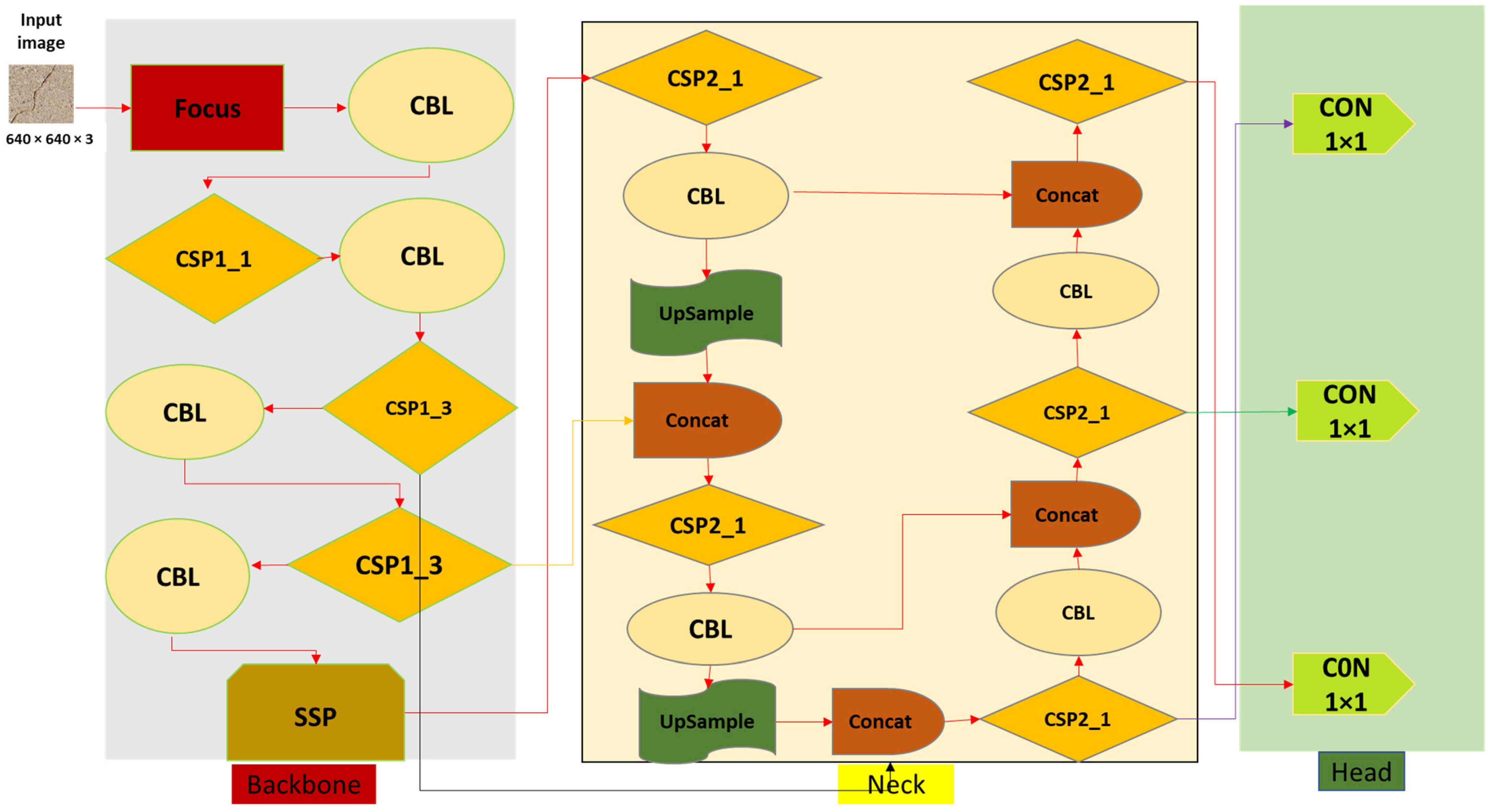

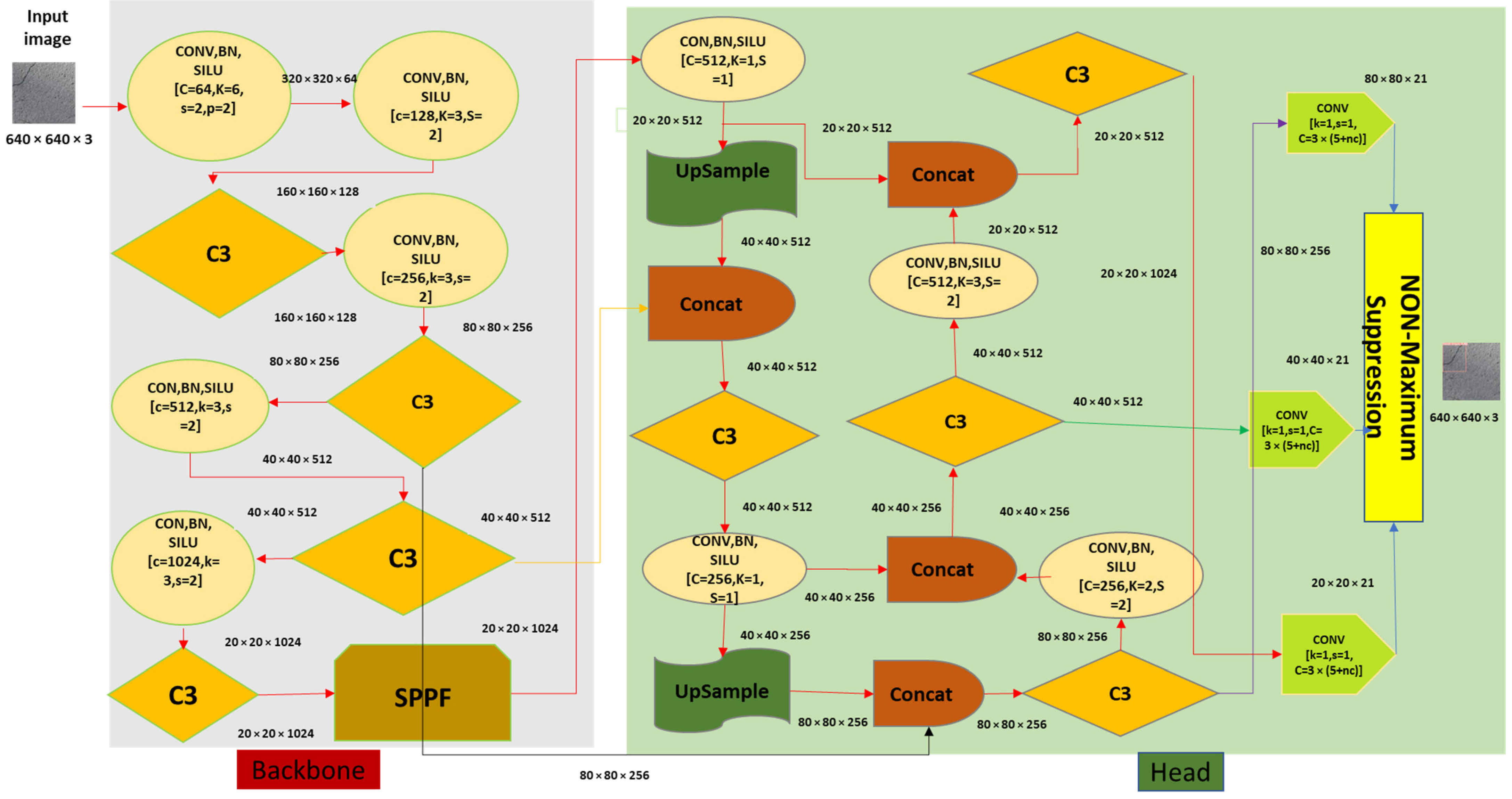

3.2.1. YOLOv5 Architecture

- (1)

- Backbone

- (2)

- Neck

- (3)

- Head

3.2.2. YOLOv5 Variants

3.2.3. Activation Function

3.2.4. Optimization Function

3.2.5. Cost/Loss Function

3.2.6. YOLOv5 Model Training

3.2.7. YOLOv5 Model Testing

3.3. Segmentation

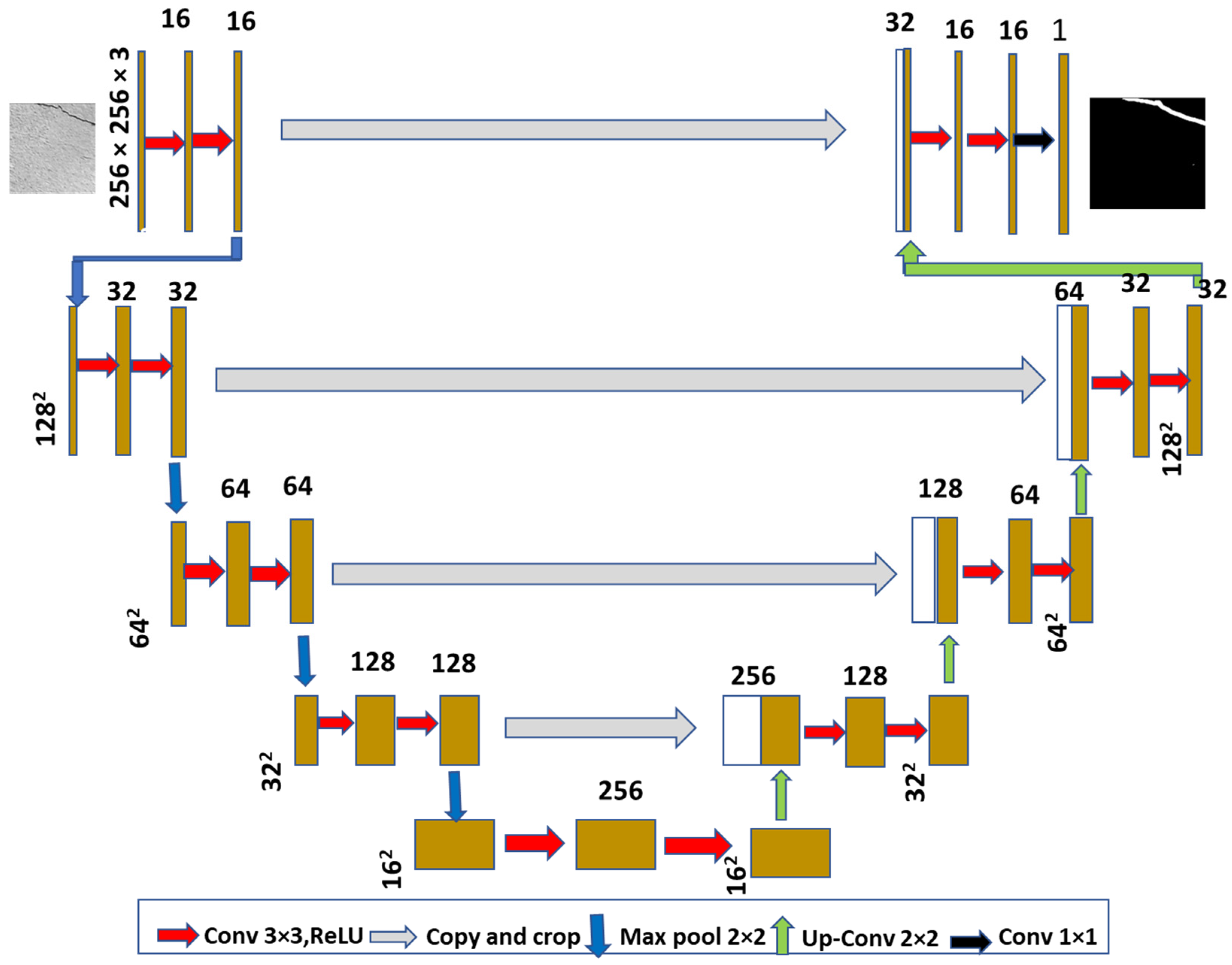

3.3.1. U-Net Model Architecture

3.3.2. U-Net Training

3.3.3. U-Net Testing

3.4. Crack Size Measurement

4. Experiment Design, Measures, and Results

4.1. Hardware Configuration

4.2. Performance Measures

- Box Loss: It measures how accurately the algorithm can pinpoint an object’s center and the precision of the bounding box enclosing the crack in the tested models.

- Object Loss: It provides the likelihood of an object appearing in a particular location of interest as determined by the objectness score. The image likely contains the object if the score is high.

- Classification loss: A type of cross-entropy that shows how accurately a given class has been predicted.

- Intersection over Union (IoU): It determines the variation between the predicted and ground truth labels in our tested models. When detecting objects, the model predicts several bounding boxes for every object and eliminates the one which is not needed based on the threshold value and each bounding box’s confidence scores. The threshold value is defined according to requirements. The box is eliminated if the IoU value does not exceed the threshold value (set for cracks). IoU is computed using Equation (1).

- Precision: It is used to measure correct predictions and is determined using Equation (2).

- Recall: It corresponds to the true positive rate and is determined using Equation (3). Recall measures the percentage of the true bounding box that was correctly predicted in the current study.

- Average Precision (AP): The area under the precision-recall curve is used to determine AP.

- Mean Average Precision (mAP): Mean AP (mAP) considers both precision and recall by averaging the recall values between 0 and 1, and sums up the precision-recall curve in a single numeral metric.

- mAP_0.5: This is the mean mAP at the IoU threshold of 0.5.

- mAP_0.5:0.95: This is the average mAP across a range of different IoU thresholds, from 0.5 to 0.95.

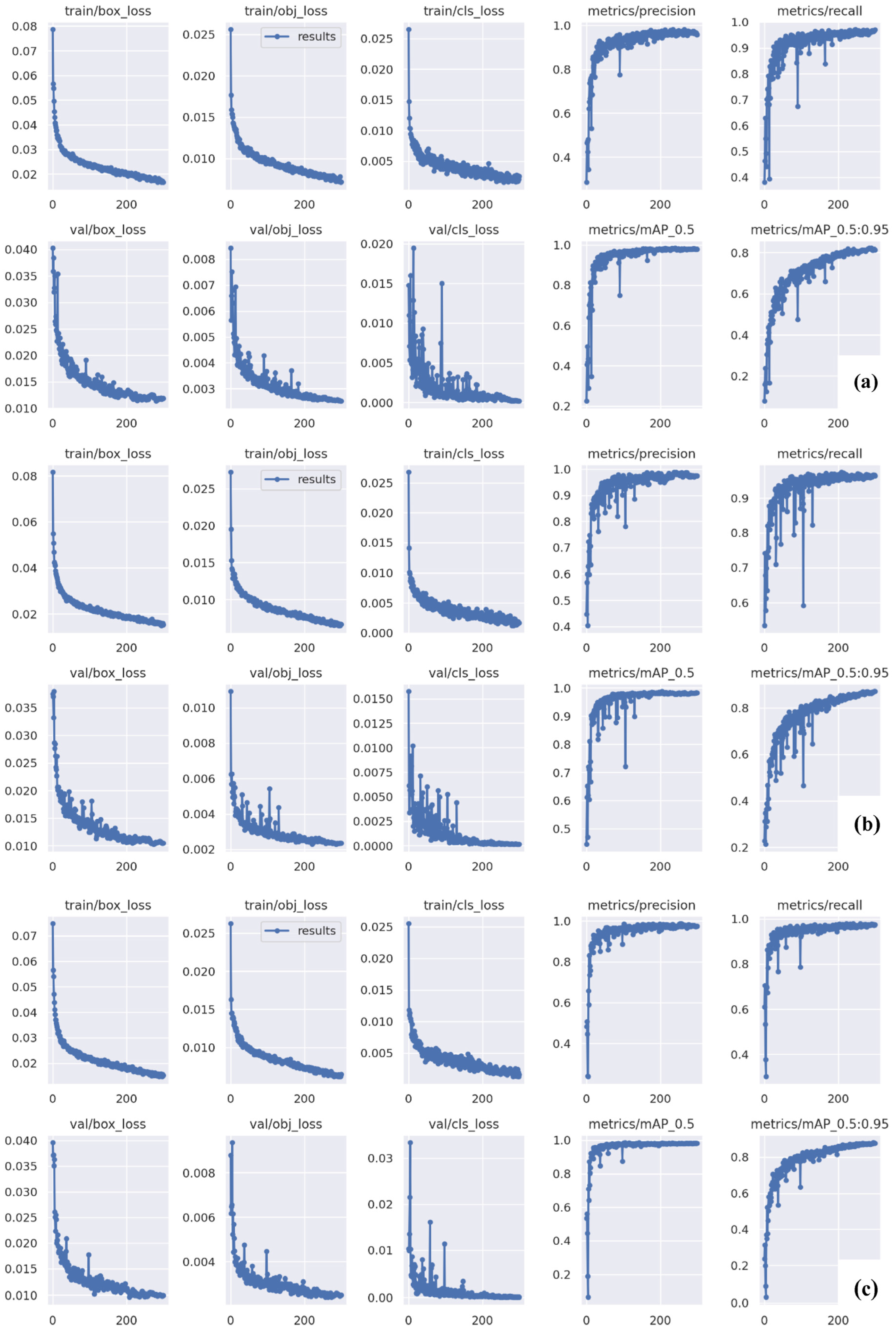

4.3. Model Training, Validation, and Testing Results

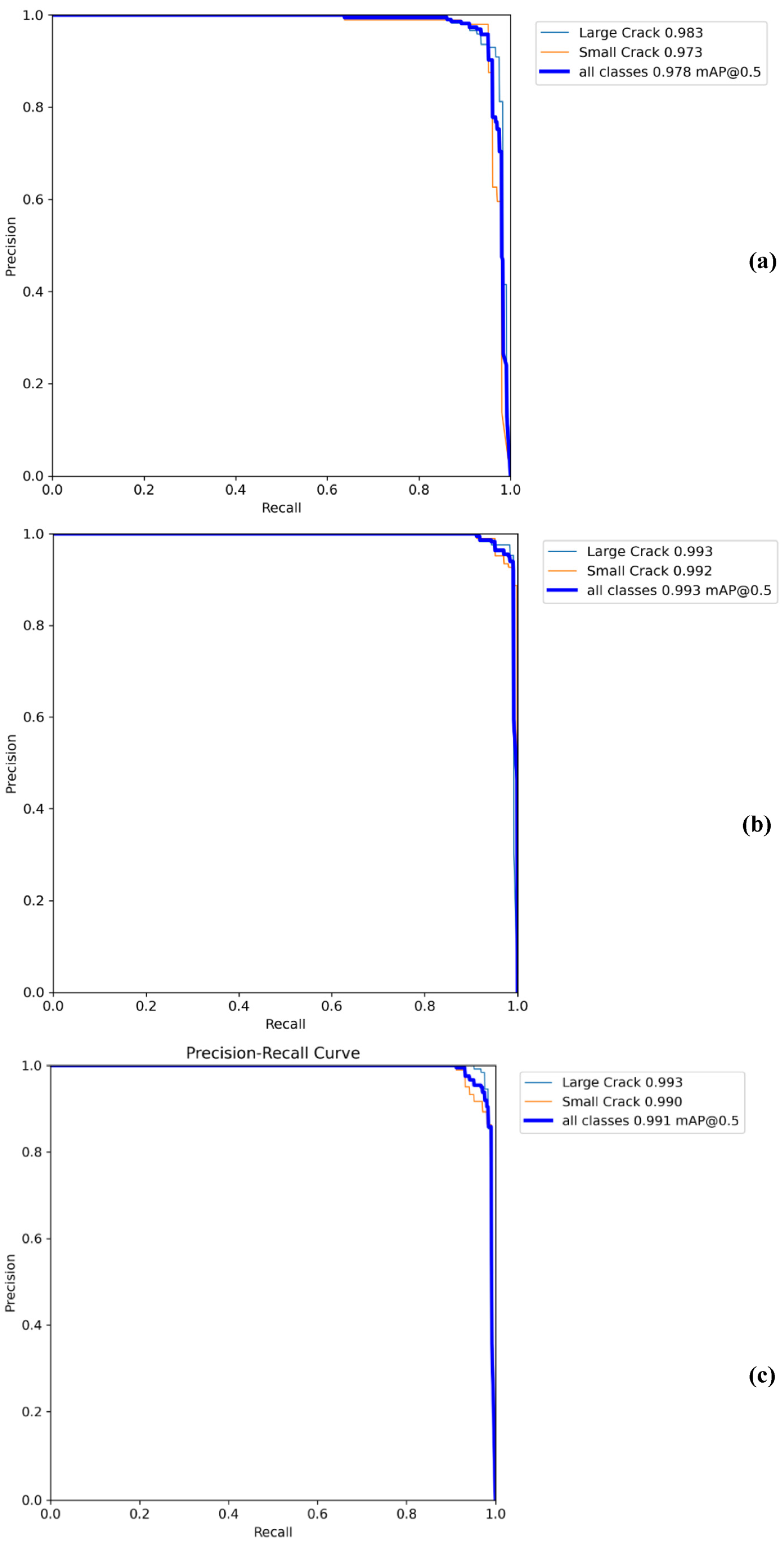

4.3.1. Testing Results for SDNET2018 Dataset

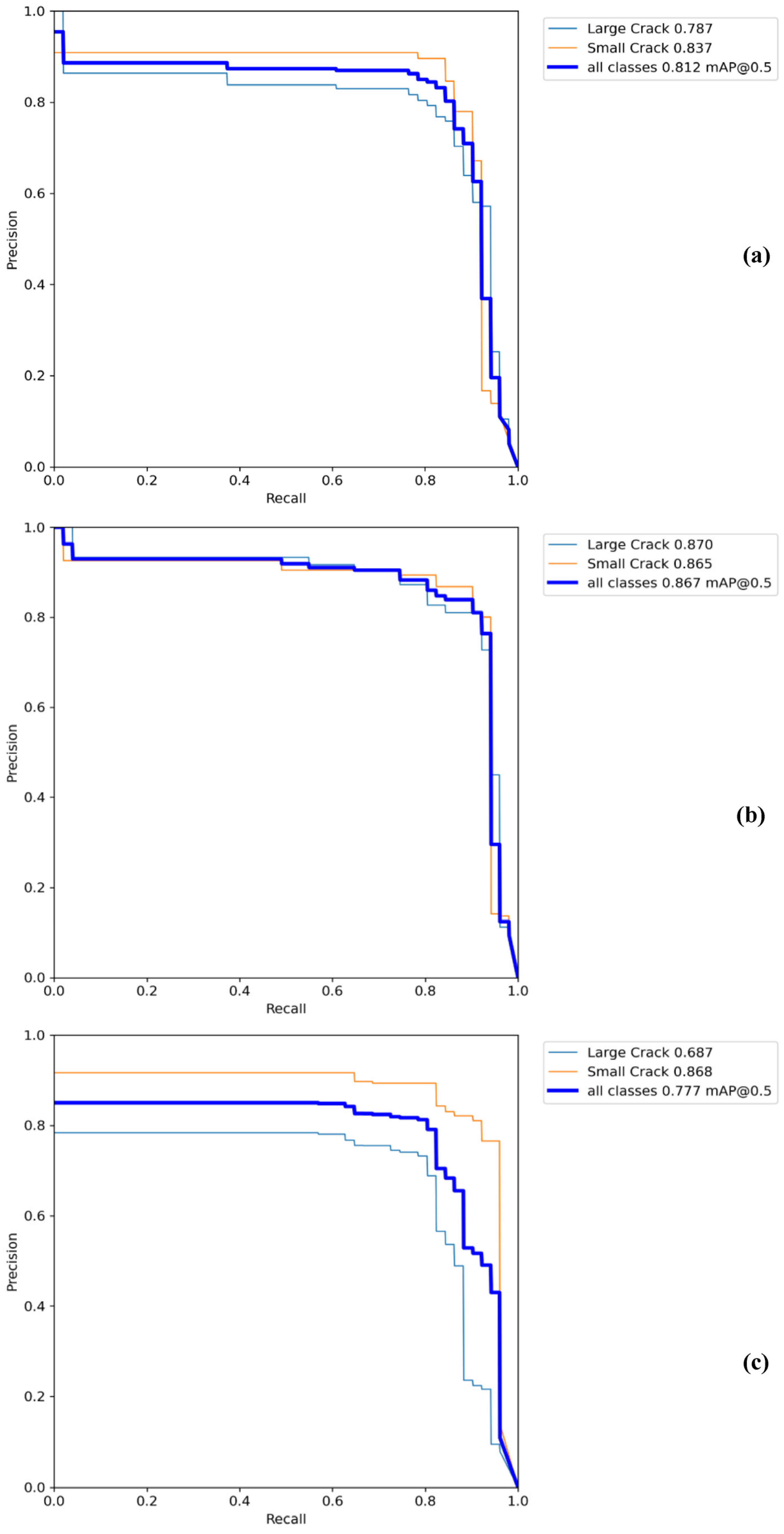

4.3.2. Testing Results for the Pakistani Dataset

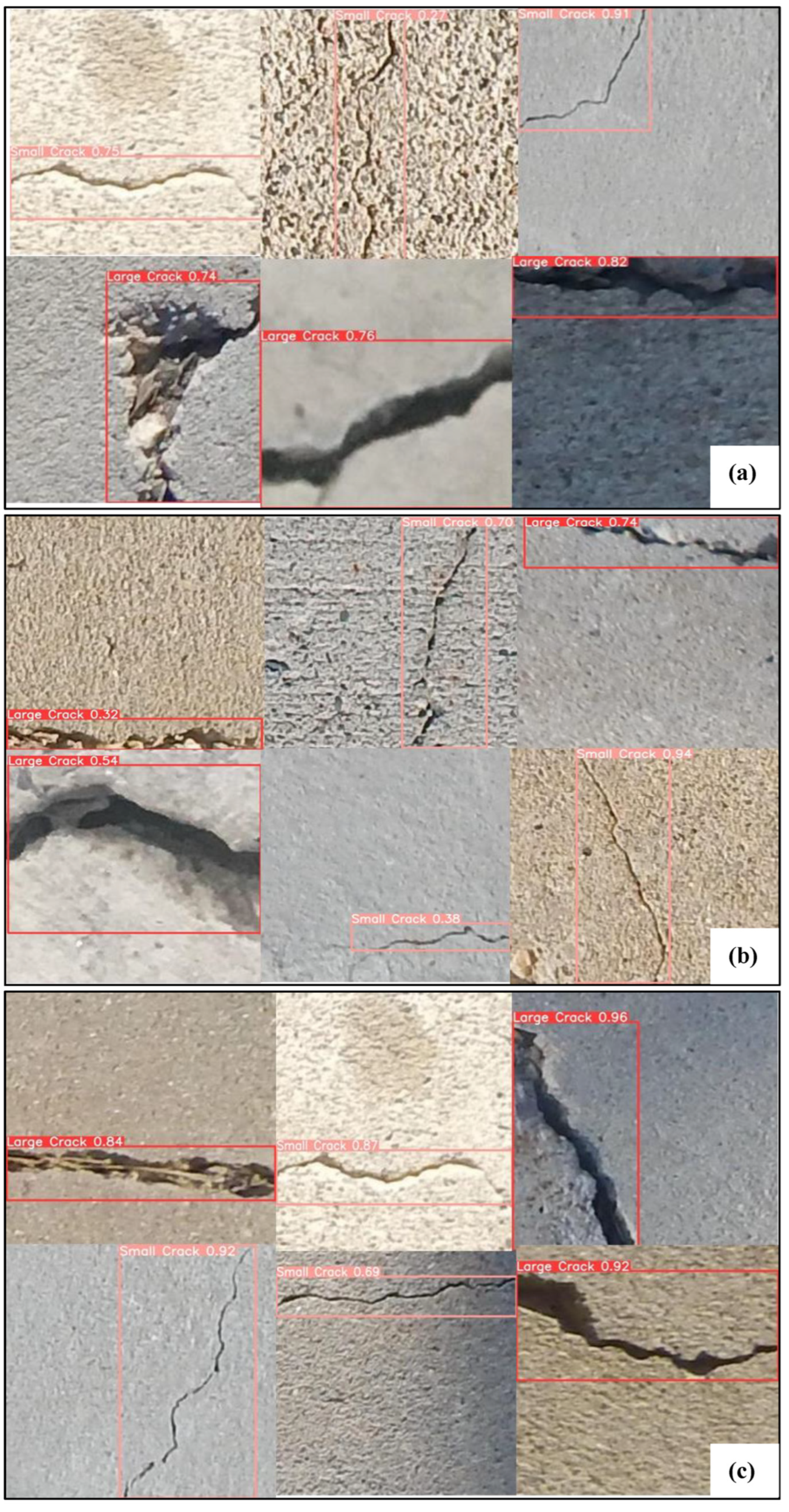

4.4. Crack Detection Results

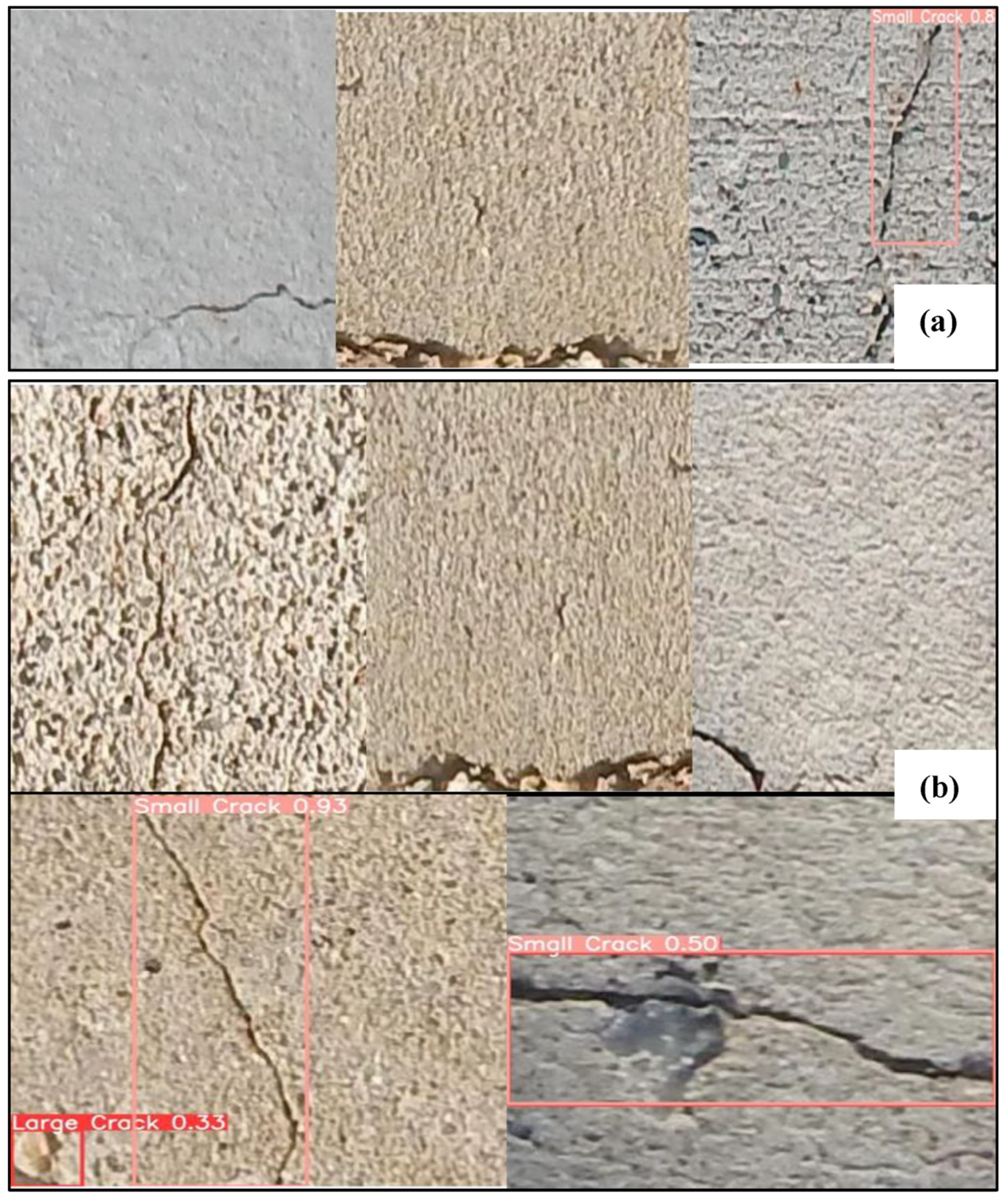

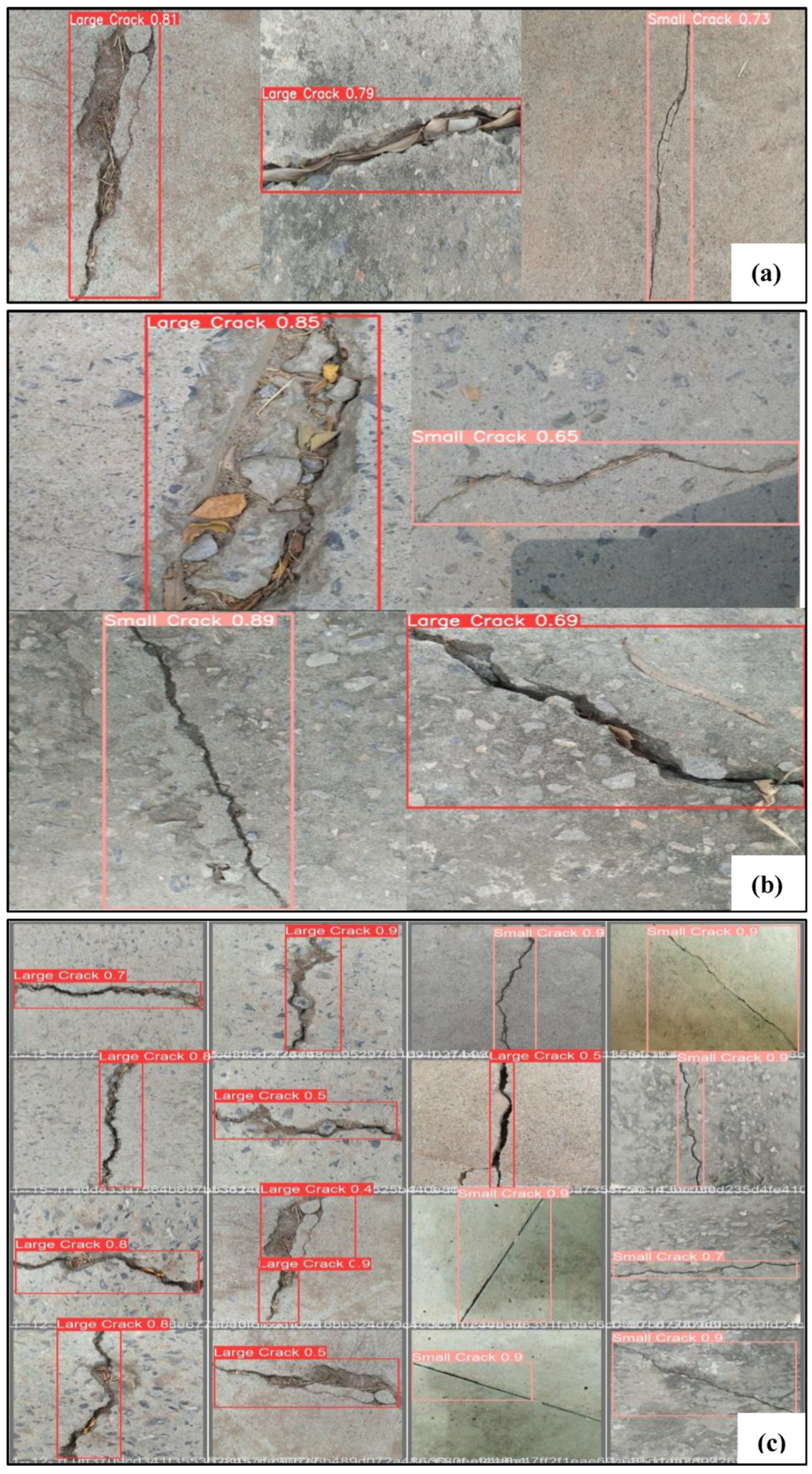

4.4.1. Crack Detection Results of YOLOv5 Models Using SDNET2018 Dataset

4.4.2. Crack Detection Results of YOLOv5 Models Using the Pakistani Dataset

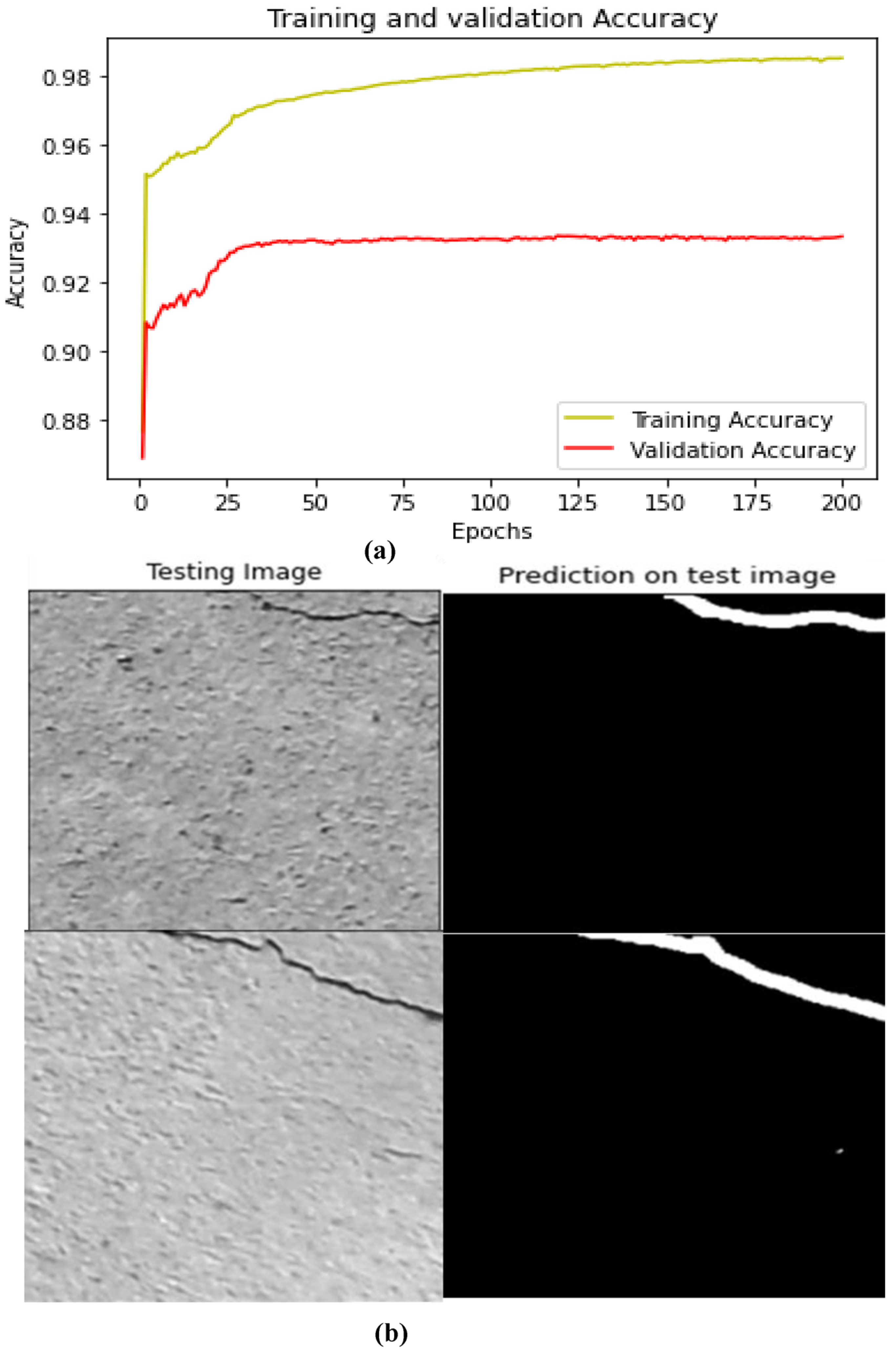

4.5. Segmentation Results

4.5.1. Crack Size Measurement

4.5.2. Crack Size Variations

4.6. Comparison with Previous YOLO Models

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Hu, W.; Wang, W.; Ai, C.; Wang, J.; Wang, W.; Meng, X.; Liu, J.; Tao, H.; Qiu, S. Machine vision-based surface crack analysis for transportation infrastructure. Autom. Constr. 2021, 132, 103973. [Google Scholar] [CrossRef]

- Munawar, H.S.; Ullah, F.; Shahzad, D.; Heravi, A.; Qayyum, S.; Akram, J. Civil infrastructure damage and corrosion detection: An application of machine learning. Buildings 2022, 12, 156. [Google Scholar] [CrossRef]

- Islam, N.U.; Lee, S. Cross domain image transformation using effective latent space association. In Proceedings of the International Conference on Intelligent Autonomous Systems, Singapore, 1–3 March 2018; pp. 706–716. [Google Scholar]

- Munawar, H.S.; Ullah, F.; Heravi, A.; Thaheem, M.J.; Maqsoom, A. Inspecting Buildings Using Drones and Computer Vision: A Machine Learning Approach to Detect Cracks and Damages. Drones 2021, 6, 5. [Google Scholar] [CrossRef]

- Maqsoom, A.; Aslam, B.; Yousafzai, A.; Ullah, F.; Ullah, S.; Imran, M. Extracting built-up areas from spectro-textural information using machine learning. Soft Comput. 2022, 26, 7789–7808. [Google Scholar] [CrossRef]

- Qiao, W.; Ma, B.; Liu, Q.; Wu, X.; Li, G. Computer vision-based bridge damage detection using deep convolutional networks with expectation maximum attention module. Sensors 2021, 21, 824. [Google Scholar] [CrossRef]

- Ullah, F. Smart Tech 4.0 in the Built Environment: Applications of Disruptive Digital Technologies in Smart Cities, Construction, and Real Estate. Buildings 2022, 12, 1516. [Google Scholar] [CrossRef]

- Sirshar, M.; Paracha, M.F.K.; Akram, M.U.; Alghamdi, N.S.; Zaidi, S.Z.Y.; Fatima, T. Attention based automated radiology report generation using CNN and LSTM. PLoS ONE 2022, 17, e0262209. [Google Scholar] [CrossRef]

- Islam, N.U.; Lee, S. Interpretation of deep CNN based on learning feature reconstruction with feedback weights. IEEE Access 2019, 7, 25195–25208. [Google Scholar] [CrossRef]

- Lee, S.; Islam, N.U. Robust image translation and completion based on dual auto-encoder with bidirectional latent space regression. IEEE Access 2019, 7, 58695–58703. [Google Scholar] [CrossRef]

- Chen, L.; Chen, W.; Wang, L.; Zhai, C.; Hu, X.; Sun, L.; Tian, Y.; Huang, X.; Jiang, L. Convolutional neural networks (CNNs)-based multi-category damage detection and recognition of high-speed rail (HSR) reinforced concrete (RC) bridges using test images. Eng. Struct. 2023, 276, 115306. [Google Scholar] [CrossRef]

- Li, R.; Yu, J.; Li, F.; Yang, R.; Wang, Y.; Peng, Z. Automatic bridge crack detection using Unmanned aerial vehicle and Faster R-CNN. Constr. Build. Mater. 2023, 362, 129659. [Google Scholar] [CrossRef]

- Islam, N.U.; Lee, S.; Park, J. Accurate and consistent image-to-image conditional adversarial network. Electronics 2020, 9, 395. [Google Scholar] [CrossRef] [Green Version]

- Mushtaq, M.; Akram, M.U.; Alghamdi, N.S.; Fatima, J.; Masood, R.F. Localization and Edge-Based Segmentation of Lumbar Spine Vertebrae to Identify the Deformities Using Deep Learning Models. Sensors 2022, 22, 1547. [Google Scholar] [CrossRef] [PubMed]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 8–6 October 2016; pp. 21–37. [Google Scholar]

- Liu, C.; Tao, Y.; Liang, J.; Li, K.; Chen, Y. Object detection based on YOLO network. In Proceedings of the 2018 IEEE 4th Information Technology and Mechatronics Engineering Conference (ITOEC), Chongqing, China, 14–16 December 2018; pp. 799–803. [Google Scholar]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1–9. [Google Scholar] [CrossRef] [Green Version]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916. [Google Scholar] [CrossRef] [Green Version]

- Fatima, J.; Mohsan, M.; Jameel, A.; Akram, M.U.; Muzaffar Syed, A. Vertebrae localization and spine segmentation on radiographic images for feature-based curvature classification for scoliosis. Concurr. Comput. Pract. Exp. 2022, 34, e7300. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Wu, W.; Liu, H.; Li, L.; Long, Y.; Wang, X.; Wang, Z.; Li, J.; Chang, Y. Application of local fully Convolutional Neural Network combined with YOLO v5 algorithm in small target detection of remote sensing image. PLoS ONE 2021, 16, e0259283. [Google Scholar] [CrossRef]

- Wynne, Z.; Stratford, T.; Reynolds, T.P. Perceptions of long-term monitoring for civil and structural engineering. In Proceedings of the Structures, Atlanta, GA, USA, 20–23 April 2022; pp. 1616–1623. [Google Scholar]

- Bao, Y.; Tang, Z.; Li, H.; Zhang, Y. Computer vision and deep learning–based data anomaly detection method for structural health monitoring. Struct. Health Monit. 2019, 18, 401–421. [Google Scholar] [CrossRef]

- Choudhry, R.M.; Aslam, M.A.; Hinze, J.W.; Arain, F.M. Cost and schedule risk analysis of bridge construction in Pakistan: Establishing risk guidelines. J. Constr. Eng. Manag. 2014, 140, 04014020. [Google Scholar] [CrossRef]

- Dawn. Pakistan: 70 Killed in NWFP Rain, Floods—Mardan Bridge Collapses. Dawn. 6 August 2006. Available online: https://www.dawn.com/news/204850/70-killed-in-nwfp-rain-floods-mardan-bridge-collapses (accessed on 15 December 2022).

- Najam, A. Many Killed and Injured as Karachi’s Shershah Bridge Collapses; More Still Trapped. Available online: https://pakistaniat.com/2007/09/01/pakistan-karachi-shersha-bridge-collapse-dead-infrastructure-killed-bridge/ (accessed on 14 October 2022).

- Pakistan Bridge Collapse Death Toll at 10. Available online: https://www.upi.com/Top_News/2007/09/02/Pakistan-bridge-collapse-death-toll-at-10/33361188736809/?st_rec=59951186106548&u3L=1 (accessed on 14 October 2022).

- Desk, W. Neelum Valley’s Kundal Shahi bridge Takes 40 People down with It. Daily Times. 14 May 2018. Available online: https://dailytimes.com.pk/239950/rescue-operation-to-recover-missing-students-in-neelam-valley-continues (accessed on 15 December 2022).

- Jamal, S. Pakistan: 25 Tourists Feared Dead as Bridge Collapses in Neelum Valley. Gulf News, 13 May 2018. [Google Scholar]

- Davies, R. Pakistan—Massive Floods Destroy Bridge in Gilgit-Baltistan. FloodList, 8 May 2022. [Google Scholar]

- Dawn. Under-Construction Bridge on Swabi-Mardan Road Collapses. Dawn. 27 January 2022. Available online: https://www.dawn.com/news/1671676 (accessed on 15 December 2022).

- Gong, M.; Chen, J. Numerical investigation of load-induced fatigue cracking in curved ramp bridge deck pavement considering tire-bridge interaction. Constr. Build. Mater. 2022, 353, 129119. [Google Scholar] [CrossRef]

- Talukdar, S.; Banthia, N.; Grace, J.; Cohen, S. Climate change-induced carbonation of concrete infrastructure. Proc. Inst. Civ. Eng.-Constr. Mater. 2014, 167, 140–150. [Google Scholar] [CrossRef]

- Ababneh, A.N.; Al-Rousan, R.Z.; Alhassan, M.A.; Sheban, M.A. Assessment of shrinkage-induced cracks in restrained and unrestrained cement-based slabs. Constr. Build. Mater. 2017, 131, 371–380. [Google Scholar] [CrossRef]

- Wang, T.-T. Characterizing crack patterns on tunnel linings associated with shear deformation induced by instability of neighboring slopes. Eng. Geol. 2010, 115, 80–95. [Google Scholar] [CrossRef]

- Sun, B.; Xiao, R.-c.; Ruan, W.-d.; Wang, P.-b. Corrosion-induced cracking fragility of RC bridge with improved concrete carbonation and steel reinforcement corrosion models. Eng. Struct. 2020, 208, 110313. [Google Scholar] [CrossRef]

- Zhang, C.; Cai, J.; Cheng, X.; Zhang, X.; Guo, X.; Li, Y. Interface and crack propagation of cement-based composites with sulfonated asphalt and plasma-treated rock asphalt. Constr. Build. Mater. 2020, 242, 118161. [Google Scholar] [CrossRef]

- Wan, M. Discussion on Crack Control in Road Bridge Design and Construction. J. World Archit. 2020, 4, 14–16. [Google Scholar] [CrossRef]

- Alshboul, O.; Shehadeh, A.; Tatari, O.; Almasabha, G.; Saleh, E. Multiobjective and multivariable optimization for earthmoving equipment. J. Facil. Manag. 2022. [Google Scholar] [CrossRef]

- Alshboul, O.; Shehadeh, A.; Almasabha, G.; Almuflih, A.S. Extreme Gradient Boosting-Based Machine Learning Approach for Green Building Cost Prediction. Sustainability 2022, 14, 6651. [Google Scholar] [CrossRef]

- Salman, M.; Mathavan, S.; Kamal, K.; Rahman, M. Pavement crack detection using the Gabor filter. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013; pp. 2039–2044. [Google Scholar]

- Lins, R.G.; Givigi, S.N. Automatic crack detection and measurement based on image analysis. IEEE Trans. Instrum. Meas. 2016, 65, 583–590. [Google Scholar] [CrossRef]

- Nishikawa, T.; Yoshida, J.; Sugiyama, T.; Fujino, Y. Concrete crack detection by multiple sequential image filtering. Comput.-Aided Civ. Infrastruct. Eng. 2012, 27, 29–47. [Google Scholar] [CrossRef]

- Ying, L.; Salari, E. Beamlet transform-based technique for pavement crack detection and classification. Comput.-Aided Civ. Infrastruct. Eng. 2010, 25, 572–580. [Google Scholar] [CrossRef]

- Mstafa, R.J.; Younis, Y.M.; Hussein, H.I.; Atto, M. A new video steganography scheme based on Shi-Tomasi corner detector. IEEE Access 2020, 8, 161825–161837. [Google Scholar] [CrossRef]

- Fujita, Y.; Hamamoto, Y. A robust automatic crack detection method from noisy concrete surfaces. Mach. Vis. Appl. 2011, 22, 245–254. [Google Scholar] [CrossRef]

- Yeum, C.M.; Dyke, S.J. Vision-based automated crack detection for bridge inspection. Comput.-Aided Civ. Infrastruct. Eng. 2015, 30, 759–770. [Google Scholar] [CrossRef]

- Kong, X.; Li, J. Vision-based fatigue crack detection of steel structures using video feature tracking. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 783–799. [Google Scholar] [CrossRef]

- Shan, B.; Zheng, S.; Ou, J. A stereovision-based crack width detection approach for concrete surface assessment. KSCE J. Civ. Eng. 2016, 20, 803–812. [Google Scholar] [CrossRef]

- Shi, Y.; Cui, L.; Qi, Z.; Meng, F.; Chen, Z. Automatic road crack detection using random structured forests. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3434–3445. [Google Scholar] [CrossRef]

- Gavilán, M.; Balcones, D.; Marcos, O.; Llorca, D.F.; Sotelo, M.A.; Parra, I.; Ocaña, M.; Aliseda, P.; Yarza, P.; Amírola, A. Adaptive road crack detection system by pavement classification. Sensors 2011, 11, 9628–9657. [Google Scholar] [CrossRef] [PubMed]

- Oliveira, H.; Correia, P.L. Automatic road crack detection and characterization. IEEE Trans. Intell. Transp. Syst. 2012, 14, 155–168. [Google Scholar] [CrossRef]

- Zaidi, S.Z.Y.; Akram, M.U.; Jameel, A.; Alghamdi, N.S. A deep learning approach for the classification of TB from NIH CXR dataset. IET Image Process. 2022, 16, 787–796. [Google Scholar] [CrossRef]

- Alshboul, O.; Shehadeh, A.; Almasabha, G.; Mamlook, R.E.A.; Almuflih, A.S. Evaluating the impact of external support on green building construction cost: A hybrid mathematical and machine learning prediction approach. Buildings 2022, 12, 1256. [Google Scholar] [CrossRef]

- Alshboul, O.; Almasabha, G.; Shehadeh, A.; Mamlook, R.E.A.; Almuflih, A.S.; Almakayeel, N. Machine learning-based model for predicting the shear strength of slender reinforced concrete beams without stirrups. Buildings 2022, 12, 1166. [Google Scholar] [CrossRef]

- Aslam, B.; Maqsoom, A.; Cheema, A.H.; Ullah, F.; Alharbi, A.; Imran, M. Water quality management using hybrid machine learning and data mining algorithms: An indexing approach. IEEE Access 2022, 10, 119692–119705. [Google Scholar] [CrossRef]

- Bae, H.; Jang, K.; An, Y.-K. Deep super resolution crack network (SrcNet) for improving computer vision–based automated crack detectability in in situ bridges. Struct. Health Monit. 2021, 20, 1428–1442. [Google Scholar] [CrossRef]

- Islam, M.M.; Kim, J.-M. Vision-based autonomous crack detection of concrete structures using a fully convolutional encoder–decoder network. Sensors 2019, 19, 4251. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Dung, C.V. Autonomous concrete crack detection using deep fully convolutional neural network. Autom. Constr. 2019, 99, 52–58. [Google Scholar] [CrossRef]

- Yang, X.; Li, H.; Yu, Y.; Luo, X.; Huang, T.; Yang, X. Automatic pixel-level crack detection and measurement using fully convolutional network. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 1090–1109. [Google Scholar] [CrossRef]

- Li, H.; Xu, H.; Tian, X.; Wang, Y.; Cai, H.; Cui, K.; Chen, X. Bridge crack detection based on SSENets. Appl. Sci. 2020, 10, 4230. [Google Scholar] [CrossRef]

- Xu, H.; Su, X.; Wang, Y.; Cai, H.; Cui, K.; Chen, X. Automatic bridge crack detection using a convolutional neural network. Appl. Sci. 2019, 9, 2867. [Google Scholar] [CrossRef] [Green Version]

- Cha, Y.J.; Choi, W.; Büyüköztürk, O. Deep learning-based crack damage detection using convolutional neural networks. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 361–378. [Google Scholar] [CrossRef]

- Pauly, L.; Hogg, D.; Fuentes, R.; Peel, H. Deeper networks for pavement crack detection. In Proceedings of the 34th ISARC, Taipei, Taiwan, 28 June–1 July 2017; pp. 479–485. [Google Scholar]

- Su, C.; Wang, W. Concrete cracks detection using convolutional neuralnetwork based on transfer learning. Math. Probl. Eng. 2020, 2020, 7240129. [Google Scholar] [CrossRef]

- Li, S.; Zhao, X. Image-based concrete crack detection using convolutional neural network and exhaustive search technique. Adv. Civ. Eng. 2019, 2019, 6520620. [Google Scholar] [CrossRef] [Green Version]

- Yang, Q.; Shi, W.; Chen, J.; Lin, W. Deep convolution neural network-based transfer learning method for civil infrastructure crack detection. Autom. Constr. 2020, 116, 103199. [Google Scholar] [CrossRef]

- Deng, W.; Mou, Y.; Kashiwa, T.; Escalera, S.; Nagai, K.; Nakayama, K.; Matsuo, Y.; Prendinger, H. Vision based pixel-level bridge structural damage detection using a link ASPP network. Autom. Constr. 2020, 110, 102973. [Google Scholar] [CrossRef]

- Yu, Z.; Shen, Y.; Shen, C. A real-time detection approach for bridge cracks based on YOLOv4-FPM. Autom. Constr. 2021, 122, 103514. [Google Scholar] [CrossRef]

- Chen, T.; Cai, Z.; Zhao, X.; Chen, C.; Liang, X.; Zou, T.; Wang, P. Pavement crack detection and recognition using the architecture of segNet. J. Ind. Inf. Integr. 2020, 18, 100144. [Google Scholar] [CrossRef]

- Bang, S.; Park, S.; Kim, H.; Kim, H. Encoder–decoder network for pixel-level road crack detection in black-box images. Comput.-Aided Civ. Infrastruct. Eng. 2019, 34, 713–727. [Google Scholar] [CrossRef]

- Ni, F.; Zhang, J.; Chen, Z. Pixel-level crack delineation in images with convolutional feature fusion. Struct. Control Health Monit. 2019, 26, e2286. [Google Scholar] [CrossRef] [Green Version]

- Maeda, H.; Sekimoto, Y.; Seto, T.; Kashiyama, T.; Omata, H. Road damage detection and classification using deep neural networks with smartphone images. Comput.-Aided Civ. Infrastruct. Eng. 2018, 33, 1127–1141. [Google Scholar] [CrossRef] [Green Version]

- Liu, Z.; Cao, Y.; Wang, Y.; Wang, W. Computer vision-based concrete crack detection using U-net fully convolutional networks. Autom. Constr. 2019, 104, 129–139. [Google Scholar] [CrossRef]

- Escalona, U.; Arce, F.; Zamora, E.; Sossa, H. Fully convolutional networks for automatic pavement crack segmentation. Comput. Sist. 2019, 23, 451–460. [Google Scholar] [CrossRef]

- Fan, Z.; Li, C.; Chen, Y.; Wei, J.; Loprencipe, G.; Chen, X.; Di Mascio, P. Automatic crack detection on road pavements using encoder-decoder architecture. Materials 2020, 13, 2960. [Google Scholar] [CrossRef]

- Huyan, J.; Li, W.; Tighe, S.; Zhai, J.; Xu, Z.; Chen, Y. Detection of sealed and unsealed cracks with complex backgrounds using deep convolutional neural network. Autom. Constr. 2019, 107, 102946. [Google Scholar] [CrossRef]

- Zhang, A.; Wang, K.C.; Li, B.; Yang, E.; Dai, X.; Peng, Y.; Fei, Y.; Liu, Y.; Li, J.Q.; Chen, C. Automated pixel-level pavement crack detection on 3D asphalt surfaces using a deep-learning network. Comput.-Aided Civ. Infrastruct. Eng. 2017, 32, 805–819. [Google Scholar] [CrossRef]

- Zhang, A.; Wang, K.C.; Fei, Y.; Liu, Y.; Tao, S.; Chen, C.; Li, J.Q.; Li, B. Deep learning–based fully automated pavement crack detection on 3D asphalt surfaces with an improved CrackNet. J. Comput. Civ. Eng. 2018, 32, 04018041. [Google Scholar] [CrossRef] [Green Version]

- Fei, Y.; Wang, K.C.; Zhang, A.; Chen, C.; Li, J.Q.; Liu, Y.; Yang, G.; Li, B. Pixel-level cracking detection on 3D asphalt pavement images through deep-learning-based CrackNet-V. IEEE Trans. Intell. Transp. Syst. 2019, 21, 273–284. [Google Scholar] [CrossRef]

- Islam, N.; Park, J. bCNN-Methylpred: Feature-Based Prediction of RNA Sequence Modification Using Branch Convolutional Neural Network. Genes 2021, 12, 1155. [Google Scholar] [CrossRef] [PubMed]

- Ali, R.; Zeng, J.; Cha, Y.-J. Deep learning-based crack detection in a concrete tunnel structure using multispectral dynamic imaging. In Proceedings of the Smart Structures and NDE for Industry 4.0, Smart Cities, and Energy Systems, Online, 27 April–8 May 2020; pp. 12–19. [Google Scholar]

- Islam, N.U.; Park, J. Depth estimation from a single RGB image using fine-tuned generative adversarial network. IEEE Access 2021, 9, 32781–32794. [Google Scholar] [CrossRef]

- Hawkins, D.M. The problem of overfitting. J. Chem. Inf. Comput. Sci. 2004, 44, 1–12. [Google Scholar] [CrossRef] [PubMed]

- Van Herk, M. A fast algorithm for local minimum and maximum filters on rectangular and octagonal kernels. Pattern Recognit. Lett. 1992, 13, 517–521. [Google Scholar] [CrossRef]

- Jung, H.-K.; Choi, G.-S. Improved YOLOv5: Efficient Object Detection Using Drone Images under Various Conditions. Appl. Sci. 2022, 12, 7255. [Google Scholar] [CrossRef]

- Cepni, S.; Atik, M.E.; Duran, Z. Vehicle detection using different deep learning algorithms from image sequence. Balt. J. Mod. Comput. 2020, 8, 347–358. [Google Scholar] [CrossRef]

- Andhy Panca Saputra, K. Waste Object Detection and Classification using Deep Learning Algorithm: YOLOv4 and YOLOv4-tiny. Turk. J. Comput. Math. Educ. (TURCOMAT) 2021, 12, 5583–5595. [Google Scholar]

| Year | Name/Location | Casualties | Ref |

|---|---|---|---|

| 2006 | Kalpani Bridge, Marden KPK, Pakistan | 70 | [25] |

| 2007 | SherShah Bridge, Karachi Sindh, Pakistan | 4 | [26] |

| 2007 | Northern Bypass Bridge, Karachi, Sindh, Pakistan | 10 | [27] |

| 2018 | Kundal Shahi bridge, Neelam valley Kashmir, Pakistan | 40 | [28] |

| 2018 | Jagran Nullah, Neelum Valley Kashmir, Pakistan | 25 | [29] |

| 2022 | Hassanabad Bridge, Hunza Gilgit-Baltistan, Pakistan | - | [30] |

| 2022 | Gohati Bridge, Swabi KPK, Pakistan | - | [31] |

| Model | Source/Device | Dataset | Domain | Advantages | Limitations | Results |

|---|---|---|---|---|---|---|

| Image filtering- (Gabor Filter) [41] | Canon IXUS 80 IS | 5 images 336 × 339 pixels | Pavement | Detect multidirectional cracks | The results are presented using only 5 images | Precision = 95% |

| Image filtering (Particle Filter) [42] | IP camera | 14 images 12 MP camera | Civil structure | The dimensions of the crack are determined using a single camera | - | Range of error = 7.51% to 8.59% |

| Image filtering and GP [43] | Digital camera | 17 images (Variable resolutions) | Concrete | Accurately detects cracks in the surface images captured in diverse conditions | - | Accuracy = 80% |

| Beamlet Transform [44] | Digital camera | Images of 256 × 256 pixels | Pavement | A robust method for crack extraction | Unable to determine the crack width | Fast and noise-resistant approach |

| Shi-Tomasi Algorithm [45] | Consumer-grade digital camera | Real-time detection | Steel bridge | Robust to various lighting situations and complicated textures | Accuracy is affected by the camera resolution | - |

| Model | Source/Device | Dataset | Domain | Advantages | Limitations | Results |

|---|---|---|---|---|---|---|

| Gaussian Models, K-means clustering [49] | Digital camera | 84 images 1536 × 2048 pixels | Road | Crack width is accurately determined | Narrow cracks are not accurately detected | F-Measure = 97% |

| SVM, MDNMS [50] | Line scan camera, laser beams | 7250 images, 4000 × 1000 pixels | Road | Easily distinguish between the 10 types of pavements | - | Precision = 98.29% Recall = 93.86% |

| SVM, Random Forests [51] | CDN and AigleRN datasets | 38 CDN, 118 AigleRN images 480 × 320 pixels | Road | Reliable for noisy images | There is no measurement of crack width | Precision = 96.73% |

| Model | Source/Device | Dataset | Domain | Advantages | Limitations | Results |

|---|---|---|---|---|---|---|

| Fully convolutional network (FCN) [58] | Open-source data | 4000 images 224 × 224 pixels | Concrete | Accurately identify the crack path | - | F1 average and Recall = 92% |

| FCN [59] | Public dataset | 500 annotated images 227 × 227 pixels | Concrete | Accurately detect cracks and their density | Challenging when the image is too noisy | F1 and average Precision = 90% |

| FCN [60] | Online dataset | 800 plus building images | Concrete wall | No preprocessing required | Cannot accurately detect thin and image border cracks | Accuracy = 97.96%, Precision = 81.73% F1 score = 79.95% |

| EMA-Dense Net [6] | Public concrete crack dataset and bridge images, 5D Mark IV digital single-lens reflex camera | 400 bridge & 800+ concrete crack images 4464 × 2976 pixels | Bridge and concrete | Robust and accurate detection | High-resolution images needed | MPA = 87.42%, MIoU 92.59% for the public concrete crack dataset. MioU = 79.87%, MPA = 86.35% for the bridge damage dataset. |

| SSENet [61] | CMOS surface array camera | 6069 images 224 × 224 pixels | Bridge | - | Detection accuracy reduces with reduced negative samples | Accuracy = 97% |

| CNN [62] | CMOS surface array camera | 6069 images 224 × 224 pixels | Bridge | Superior performance than traditional models | - | Accuracy = 96.37% |

| CNN [63] | DSLR Camera | 332 raw images | Concrete | Accurately detect thin cracks | Extensive training is needed | Accuracy = 98% |

| CNN [64] | Smartphone | 500 images 3264 × 2448 pixels | Pavement | High detection accuracy | Location variance problems | Accuracy = 91.3%, Precision = 90.7% |

| CNN [65] | ImageNet dataset, bridge images | 6000 crack, 600 non-crack images | Bridge | Good detection accuracy | The severity of the crack is not assessed | Accuracy = 99.1% |

| CNN [66] | ImageNet dataset, bridge images | 60,000 images | Bridge | Accurately detect cracks in real time | - | Validation accuracy = 99.06%, Testing accuracy = 98.32% |

| DCCN [67] | Mixed online and in-person data | CCIC (14,000), SDNET (4916), BCD (5000) images | Concrete, bridge | High accuracy, less training time | Quantitative representation of transfer knowledge is not studied | CCIC accuracy = 99.83%, BCD = 99.72%, and SDNET = 97.07%. |

| LinkASSP Net [68] | Digital camera | 732 images | Bridge | Superior detection of minority class | Cannot assess damage in practice | High F1 score |

| YOLOv4-FPM [69] | Digital SLR camera/UAV -Dajiang M210RTK | 376 images | Bridge | Accurately detect cracks in images of various size | Pruning rate optimization is required to reduce storage space | - |

| SegNet [70] | Online dataset | - | Pavement, bridge | Superior detection and generalization | A huge dataset is required | Mean average precision (mAP) = 83% |

| Deep encoder decoder [71] | Black box camera | 527 images | Road pavement | Robust and high detection accuracy | Requires a superior and efficient approach to detect pixel-level cracks | Recall = 77.68%, precision = 71.98% |

| GoogleNet, FPN [72] | Canon digital camera | 128,000 images, 224 × 224 pixel | Civil structures | Accurately delineate cracks | Time-consuming | Precision = 80.13% Recall = 86.09% F-Measure = 81.55% |

| SSD Inception V2, SSD MobileNet [73] | Smartphone | 9053 images | Road | Cost-efficient | Extensive training needed | Recall > 71%, Precision > 77% |

| U-Net [74] | Ordinary camera | 84 images, 512 × 512 pixel | Concrete | Higher accuracy than FCN | Hyper parameters should be artificially adjusted | Precision = 90% |

| U-Net [75] | CFD and Aigle-RN datasets | 118 images (CFD), 38 images (AigleRN) | Pavement | Accurately detect thin cracks, less processing time | - | CFD Precision = 97.31% and Recall = 94.28%, Aigle-RN Recall = 82.9% and precision = 93.51% |

| U-HDN [76] | Public dataset (CFD and AigleRN) | 118 images (CFD), 38 images (AigleRN) | Pavement | Accurately detect pavements cracks | Less efficient and high computational cost | CFD precision = 0.945, and Recall = 0.936, Aigle-RN Recall = 0.931 and precision = 0.921 |

| Crack DN [77] | Smartphones, ordinary cameras | 12,000 images, 500 × 356 pixel | Road | Easily detect sealed and unsealed cracks | Lower detection speed | Mean average precision(mAP) > 0.90, Accuracy > 0.85 |

| CrackNet [78] | 5,000 3D pavement images | 2000 3D images | Pavement | Detect cracks at pixel level | Hairline cracks not accurately detected | Precision = 90.13%, Recall = 87.63%, F-measure = 88.86% |

| CrackNet II [79] | PaveVison3D system | 3000 Images | Asphalt pavement | Robust network for detecting hairline cracks | A complex problem like suppressing cracklike patterns is not solved | Recall = 89.06%, Precision = 90.20%, F-measure = 89.62% |

| CrackNet-V [80] | PaveVision3D system | 3083 Images | Pavement | Detect fine cracks quickly | Less accurate in terms of wide crack detection | Precision = 84.31%, F1 score = 87.12%, Recall = 90.12% |

| CrackNet-R [78] | PaveVision 3D system | 4000 Images | Pavement | Higher speed and accuracy | - | Recall = 95.00%, Precision = 88.89%, F-measure = 91.84% |

| Step | Small Crack | Large Crack |

|---|---|---|

| Training | 650 | 773 |

| Validation | 182 | 245 |

| Testing | 96 | 123 |

| Testing on Pakistani bridge images | 91 | 110 |

| Total | 1019 | 1251 |

| Parameter | Value |

|---|---|

| GPU | P100, T4, V100 |

| CPU | 2 × vCPU |

| RAM | 52 GB |

| Background execution | yes |

| Model | Batch Size | Epoch | Class | Precision % | Recall % | [email protected] % |

|---|---|---|---|---|---|---|

| YOLOv5s | 16 | 300 | All | 0.973 | 0.963 | 0.98 |

| Large crack | 0.972 | 0.969 | 0.99 | |||

| Small crack | 0.973 | 0.958 | 0.97 | |||

| YOLOv5m | 16 | 300 | All | 0.974 | 0.966 | 0.983 |

| Large crack | 0.976 | 0.98 | 0.988 | |||

| Small crack | 0.972 | 0.953 | 0.978 | |||

| YOLOv5l | 16 | 300 | All | 0.98 | 0.968 | 0.981 |

| Large crack | 0.983 | 0.972 | 0.987 | |||

| Small crack | 0.978 | 0.963 | 0.976 |

| Model | No of Test Images | Precision | Recall | [email protected] | Inference Time |

|---|---|---|---|---|---|

| YOLOv5s | 219 | 0.973 | 0.931 | 0.978 | 0.8 ms |

| YOLOv5m | 219 | 0.977 | 0.967 | 0.993 | 1.1 ms |

| YOLOv5l | 219 | 0.993 | 0.94 | 0.991 | 1.2 ms |

| Model | Class | Precision | Recall | [email protected] |

|---|---|---|---|---|

| YOLOv5s | All | 0.83 | 0.84 | 0.812 |

| Large Crack | 0.765 | 0.843 | 0.787 | |

| Small Crack | 0.895 | 0.836 | 0.837 | |

| YOLOv5m | All | 0.821 | 0.898 | 0.867 |

| Large Crack | 0.806 | 0.895 | 0.87 | |

| Small Crack | 0.837 | 0.902 | 0.865 | |

| YOLOv5l | All | 0.808 | 0.814 | 0.777 |

| Large Crack | 0.73 | 0.804 | 0.687 | |

| Small Crack | 0.885 | 0.824 | 0.868 |

| Model | Total Images | Correct Detection | Wrong/Missed Detection |

|---|---|---|---|

| YOLOv5s | 219 | 217 | 2 |

| YOLOv5m | 219 | 219 | 0 |

| YOLOv5l | 219 | 216 | 3 |

| Model | Total Images | Correct Detection | Wrong/Missed Detection |

|---|---|---|---|

| YOLOv5s | 201 | 190 | 11 |

| YOLOv5m | 201 | 195 | 6 |

| YOLOv5l | 201 | 182 | 19 |

| Characteristics | Sub-Characteristics | Values |

|---|---|---|

| Dataset | Training images | 1423 |

| Validation images | 427 | |

| Accuracy | Training | 98.3 |

| Validation | 93.4 | |

| Epoch | 200 | |

| Batch Size | 12 | |

| Model | Precision | Recall | [email protected] |

|---|---|---|---|

| YOLOv3-SPP | 0.903 | 0.875 | 0.941 |

| YOLOv4 | 0.96 | 0.90 | 0.951 |

| YOLOv5m | 0.974 | 0.966 | 0.983 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Inam, H.; Islam, N.U.; Akram, M.U.; Ullah, F. Smart and Automated Infrastructure Management: A Deep Learning Approach for Crack Detection in Bridge Images. Sustainability 2023, 15, 1866. https://doi.org/10.3390/su15031866

Inam H, Islam NU, Akram MU, Ullah F. Smart and Automated Infrastructure Management: A Deep Learning Approach for Crack Detection in Bridge Images. Sustainability. 2023; 15(3):1866. https://doi.org/10.3390/su15031866

Chicago/Turabian StyleInam, Hina, Naeem Ul Islam, Muhammad Usman Akram, and Fahim Ullah. 2023. "Smart and Automated Infrastructure Management: A Deep Learning Approach for Crack Detection in Bridge Images" Sustainability 15, no. 3: 1866. https://doi.org/10.3390/su15031866